├── soft_intro_vae_3d
│   ├── datasets
│   │   ├── __init__.py
│   │   ├── transforms.py
│   │   ├── shapenet.py
│   │   └── modelnet40.py
│   ├── metrics
│   │   ├── __init__.py
│   │   └── jsd.py
│   ├── utils
│   │   ├── __init__.py
│   │   ├── util.py
│   │   ├── data.py
│   │   └── pcutil.py
│   ├── requirements.txt
│   ├── render
│   │   ├── LICENSE
│   │   ├── README.md
│   │   └── render_mitsuba2_pc.py
│   ├── losses
│   │   └── chamfer_loss.py
│   ├── config
│   │   └── soft_intro_vae_hp.json
│   ├── test_model.py
│   ├── evaluation
│   │   ├── generate_data_for_metrics.py
│   │   └── find_best_epoch_on_validation_soft.py
│   ├── generate_for_rendering.py
│   └── README.md
├── style_soft_intro_vae
│   ├── training_artifacts
│   │   ├── ffhq
│   │   │   └── last_checkpoint
│   │   └── celeba-hq256
│   │       └── last_checkpoint
│   ├── requirements.txt
│   ├── registry.py
│   ├── split_train_test_dirs.py
│   ├── configs
│   │   ├── celeba-hq256.yaml
│   │   └── ffhq256.yaml
│   ├── defaults.py
│   ├── environment.yml
│   ├── utils.py
│   ├── scheduler.py
│   ├── custom_adam.py
│   ├── make_figures
│   │   ├── generate_samples.py
│   │   ├── make_generation_figure.py
│   │   ├── make_recon_figure_ffhq.py
│   │   ├── make_recon_figure_interpolation_2_images.py
│   │   ├── make_recon_figure_paged.py
│   │   └── make_recon_figure_interpolation.py
│   ├── launcher.py
│   ├── lod_driver.py
│   ├── tracker.py
│   ├── checkpointer.py
│   ├── dataset_preparation
│   │   └── split_tfrecords_ffhq.py
│   └── README.md
├── soft_intro_vae_tutorial
│   └── README.md
├── soft_intro_vae_2d
│   ├── main.py
│   └── README.md
├── soft_intro_vae
│   ├── main.py
│   ├── README.md
│   └── dataset.py
├── soft_intro_vae_bootstrap
│   ├── main.py
│   ├── README.md
│   └── dataset.py
├── environment.yml
└── README.md

/soft_intro_vae_3d/datasets/__init__.py:

--------------------------------------------------------------------------------

1 |

--------------------------------------------------------------------------------

/soft_intro_vae_3d/metrics/__init__.py:

--------------------------------------------------------------------------------

1 |

--------------------------------------------------------------------------------

/soft_intro_vae_3d/utils/__init__.py:

--------------------------------------------------------------------------------

1 |

--------------------------------------------------------------------------------

/style_soft_intro_vae/training_artifacts/ffhq/last_checkpoint:

--------------------------------------------------------------------------------

1 | training_artifacts/ffhq/ffhq_fid_17.55_epoch_270.pth

--------------------------------------------------------------------------------

/style_soft_intro_vae/training_artifacts/celeba-hq256/last_checkpoint:

--------------------------------------------------------------------------------

1 | training_artifacts/celeba-hq256/celebahq_fid_18.63_epoch_230.pth

--------------------------------------------------------------------------------

/soft_intro_vae_3d/requirements.txt:

--------------------------------------------------------------------------------

1 | h5py

2 | matplotlib

3 | numpy

4 | pandas

5 | git+https://github.com/szagoruyko/pyinn.git@master

6 | torch==0.4.1

--------------------------------------------------------------------------------

/style_soft_intro_vae/requirements.txt:

--------------------------------------------------------------------------------

1 | packaging

2 | imageio

3 | numpy

4 | scipy

5 | tqdm

6 | dlutils

7 | bimpy

8 | torch >= 1.3

9 | torchvision

10 | sklearn

11 | yacs

12 | matplotlib

13 |

--------------------------------------------------------------------------------

/style_soft_intro_vae/registry.py:

--------------------------------------------------------------------------------

1 | from utils import Registry

2 |

3 | MODELS = Registry()

4 | ENCODERS = Registry()

5 | GENERATORS = Registry()

6 | MAPPINGS = Registry()

7 | DISCRIMINATORS = Registry()

8 |

--------------------------------------------------------------------------------

/style_soft_intro_vae/split_train_test_dirs.py:

--------------------------------------------------------------------------------

1 | from pathlib import Path
2 | import os
3 | import shutil
4 |
5 | if __name__ == '__main__':
6 |     all_data_dir = str(Path.home()) + '/../../mnt/data/tal/celebhq_256'
7 |     train_dir = str(Path.home()) + '/../../mnt/data/tal/celebhq_256_train'
8 |     test_dir = str(Path.home()) + '/../../mnt/data/tal/celebhq_256_test'
9 |     os.makedirs(train_dir, exist_ok=True)
10 |     os.makedirs(test_dir, exist_ok=True)
11 |     num_train = 29000
12 |     num_test = 1000
13 |     images = []
14 |     for i, filename in enumerate(os.listdir(all_data_dir)):
15 |         if i < num_train:
16 |             shutil.copyfile(os.path.join(all_data_dir, filename), os.path.join(train_dir, filename))
17 |         else:
18 |             shutil.copyfile(os.path.join(all_data_dir, filename), os.path.join(test_dir, filename))
19 |

--------------------------------------------------------------------------------
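The split script above walks `os.listdir`, which returns entries in arbitrary order, so which files land in the train or test folder can differ between machines. The following is a minimal sketch of a reproducible variant that sorts the listing first. The function name `split_directory` and the throwaway demo files are assumptions for illustration and are not part of the repository.

```python
import os
import shutil
import tempfile


def split_directory(src_dir, train_dir, test_dir, num_train):
    """Copy the first `num_train` files (in sorted order) to train_dir, the rest to test_dir."""
    os.makedirs(train_dir, exist_ok=True)
    os.makedirs(test_dir, exist_ok=True)
    # Sorting makes the split independent of the file system's listing order.
    filenames = sorted(os.listdir(src_dir))
    for i, filename in enumerate(filenames):
        dst_dir = train_dir if i < num_train else test_dir
        shutil.copyfile(os.path.join(src_dir, filename), os.path.join(dst_dir, filename))
    return filenames[:num_train], filenames[num_train:]


# Tiny demonstration on a temporary directory holding three empty files.
root = tempfile.mkdtemp()
src = os.path.join(root, "all")
os.makedirs(src)
for name in ["c.png", "a.png", "b.png"]:
    open(os.path.join(src, name), "w").close()

train_files, test_files = split_directory(
    src, os.path.join(root, "train"), os.path.join(root, "test"), num_train=2)
print(train_files, test_files)
shutil.rmtree(root)
```

With the original sizes, the same call would use `num_train=29000` on the CelebA-HQ folder.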

/soft_intro_vae_3d/render/LICENSE:

--------------------------------------------------------------------------------

1 | MIT License

2 |

3 | Copyright (c) 2020 Tolga Birdal

4 |

5 | Permission is hereby granted, free of charge, to any person obtaining a copy

6 | of this software and associated documentation files (the "Software"), to deal

7 | in the Software without restriction, including without limitation the rights

8 | to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

9 | copies of the Software, and to permit persons to whom the Software is

10 | furnished to do so, subject to the following conditions:

11 |

12 | The above copyright notice and this permission notice shall be included in all

13 | copies or substantial portions of the Software.

14 |

15 | THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

16 | IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

17 | FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

18 | AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

19 | LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

20 | OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

21 | SOFTWARE.

22 |

--------------------------------------------------------------------------------

/soft_intro_vae_3d/losses/chamfer_loss.py:

--------------------------------------------------------------------------------

1 | import torch
2 | import torch.nn as nn
3 |
4 |
5 | class ChamferLoss(nn.Module):
6 |
7 |     def __init__(self):
8 |         super(ChamferLoss, self).__init__()
9 |         self.use_cuda = torch.cuda.is_available()
10 |
11 |     def forward(self, preds, gts):
12 |         P = self.batch_pairwise_dist(gts, preds)
13 |         mins, _ = torch.min(P, 1)
14 |         loss_1 = torch.sum(mins, 1)
15 |         mins, _ = torch.min(P, 2)
16 |         loss_2 = torch.sum(mins, 1)
17 |         return loss_1 + loss_2
18 |
19 |     def batch_pairwise_dist(self, x, y):
20 |         bs, num_points_x, points_dim = x.size()
21 |         _, num_points_y, _ = y.size()
22 |         xx = torch.bmm(x, x.transpose(2, 1))
23 |         yy = torch.bmm(y, y.transpose(2, 1))
24 |         zz = torch.bmm(x, y.transpose(2, 1))
25 |         if self.use_cuda:
26 |             dtype = torch.cuda.LongTensor
27 |         else:
28 |             dtype = torch.LongTensor
29 |         diag_ind_x = torch.arange(0, num_points_x).type(dtype)
30 |         diag_ind_y = torch.arange(0, num_points_y).type(dtype)
31 |         rx = xx[:, diag_ind_x, diag_ind_x].unsqueeze(1).expand_as(
32 |             zz.transpose(2, 1))
33 |         ry = yy[:, diag_ind_y, diag_ind_y].unsqueeze(1).expand_as(zz)
34 |         P = rx.transpose(2, 1) + ry - 2 * zz
35 |         return P
36 |

--------------------------------------------------------------------------------
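To make the quantity computed by `ChamferLoss.forward` easier to check by hand, here is a small pure-Python sketch for a single pair of point clouds. It follows the same definition as the batched code: squared Euclidean distances, summed (not averaged) in both directions. The helper names `squared_distance` and `chamfer_distance` are hypothetical and chosen only for this illustration. It is not a replacement for the batched PyTorch module.

```python
def squared_distance(p, q):
    """Squared Euclidean distance between two points of equal dimension."""
    return sum((a - b) ** 2 for a, b in zip(p, q))


def chamfer_distance(preds, gts):
    """Sum of nearest-neighbour squared distances, taken in both directions."""
    # For every predicted point, distance to its closest ground-truth point.
    pred_to_gt = sum(min(squared_distance(p, g) for g in gts) for p in preds)
    # For every ground-truth point, distance to its closest predicted point.
    gt_to_pred = sum(min(squared_distance(g, p) for p in preds) for g in gts)
    return pred_to_gt + gt_to_pred


preds = [(0, 0, 0), (1, 0, 0)]
gts = [(0, 0, 0), (0, 1, 0)]
print(chamfer_distance(preds, gts))  # 2
```

The batched version obtains the same pairwise squared distances through the identity ||x - y||^2 = ||x||^2 + ||y||^2 - 2 x·y, which is what `rx`, `ry` and `zz` hold in `batch_pairwise_dist`.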

/soft_intro_vae_3d/config/soft_intro_vae_hp.json:

--------------------------------------------------------------------------------

1 | {

2 | "experiment_name": "soft_intro_vae",

3 | "results_root": "./results",

4 | "clean_results_dir": false,

5 |

6 | "cuda": true,

7 | "gpu": 0,

8 |

9 | "reconstruction_loss": "chamfer",

10 |

11 | "metrics": [

12 | ],

13 |

14 | "dataset": "shapenet",

15 | "data_dir": "./datasets/shapenet_data",

16 | "classes": ["car", "airplane"],

17 | "shuffle": true,

18 | "transforms": [],

19 | "num_workers": 8,

20 | "n_points": 2048,

21 |

22 | "max_epochs": 2000,

23 | "batch_size": 32,

24 | "beta_rec": 20.0,

25 | "beta_kl": 1.0,

26 | "beta_neg": 256,

27 | "z_size": 128,

28 | "gamma_r": 1e-8,

29 | "num_vae": 0,

30 | "prior_std": 0.2,

31 |

32 |

33 | "seed": -1,

34 | "save_frequency": 50,

35 | "valid_frequency": 2,

36 |

37 | "arch": "vae",

38 | "model": {

39 | "D": {

40 | "use_bias": true,

41 | "relu_slope": 0.2

42 | },

43 | "E": {

44 | "use_bias": true,

45 | "relu_slope": 0.2

46 | }

47 | },

48 | "optimizer": {

49 | "D": {

50 | "type": "Adam",

51 | "hyperparams": {

52 | "lr": 0.0005,

53 | "weight_decay": 0,

54 | "betas": [0.9, 0.999],

55 | "amsgrad": false

56 | }

57 | },

58 | "E": {

59 | "type": "Adam",

60 | "hyperparams": {

61 | "lr": 0.0005,

62 | "weight_decay": 0,

63 | "betas": [0.9, 0.999],

64 | "amsgrad": false

65 | }

66 | }

67 | }

68 | }

--------------------------------------------------------------------------------

/style_soft_intro_vae/configs/celeba-hq256.yaml:

--------------------------------------------------------------------------------

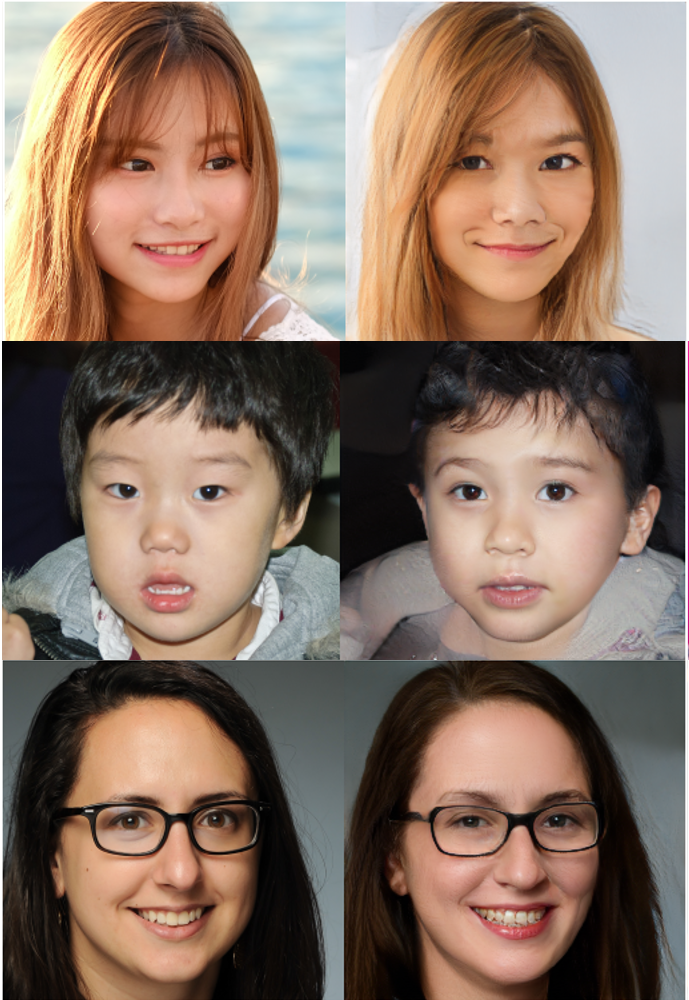

1 | # Config for training SoftIntroVAE on CelebA-HQ at resolution 256x256

2 |

3 | NAME: celeba-hq256

4 | DATASET:

5 | PART_COUNT: 16

6 | SIZE: 29000

7 | SIZE_TEST: 1000

8 | PATH: /mnt/data/tal/celebhq_256_tfrecords/celeba-r%02d.tfrecords.%03d

9 | PATH_TEST: /mnt/data/tal/celebhq_256_test_tfrecords/celeba-r%02d.tfrecords.%03d

10 | MAX_RESOLUTION_LEVEL: 8

11 | SAMPLES_PATH: /mnt/data/tal/celebhq_256_test/

12 | STYLE_MIX_PATH: /home/tal/tmp/SoftIntroVAE/style_mixing/test_images/set_ffhq

13 | MODEL:

14 | LATENT_SPACE_SIZE: 512

15 | LAYER_COUNT: 7

16 | MAX_CHANNEL_COUNT: 512

17 | START_CHANNEL_COUNT: 64

18 | DLATENT_AVG_BETA: 0.995

19 | MAPPING_LAYERS: 8

20 | BETA_KL: 0.2

21 | BETA_REC: 0.05

22 | BETA_NEG: [2048, 2048, 2048, 1024, 512, 512, 512, 512, 512]

23 | SCALE: 0.000005

24 | OUTPUT_DIR: /home/tal/tmp/SoftIntroVAE/training_artifacts/celeba-hq256

25 | TRAIN:

26 | BASE_LEARNING_RATE: 0.002

27 | EPOCHS_PER_LOD: 30

28 | NUM_VAE: 1

29 | LEARNING_DECAY_RATE: 0.1

30 | LEARNING_DECAY_STEPS: []

31 | TRAIN_EPOCHS: 300

32 | # 4 8 16 32 64 128 256 512 1024

33 | LOD_2_BATCH_8GPU: [512, 256, 128, 64, 32, 32, 32, 32, 24]

34 | LOD_2_BATCH_4GPU: [512, 256, 128, 64, 32, 32, 16, 8, 4]

35 | LOD_2_BATCH_2GPU: [128, 128, 128, 64, 32, 16, 8]

36 | LOD_2_BATCH_1GPU: [128, 128, 128, 32, 16, 8, 4]

37 |

38 | LEARNING_RATES: [0.0015, 0.0015, 0.0015, 0.0015, 0.0015, 0.0015, 0.0015, 0.003, 0.003]

39 |

--------------------------------------------------------------------------------

/style_soft_intro_vae/configs/ffhq256.yaml:

--------------------------------------------------------------------------------

1 | # Config for training SoftIntroVAE on FFHQ at resolution 256x256

2 |

3 | NAME: ffhq

4 | DATASET:

5 | PART_COUNT: 16

6 | SIZE: 60000

7 | FFHQ_SOURCE: /mnt/data/tal/ffhq_ds/ffhq-dataset/tfrecords_custom/ffhq-r%02d.tfrecords

8 | PATH: /mnt/data/tal/ffhq_ds/ffhq-dataset/tfrecords_custom/splitted/ffhq-r%02d.tfrecords.%03d

9 |

10 | PART_COUNT_TEST: 2

11 | PATH_TEST: /mnt/data/tal/ffhq_ds/ffhq-dataset/tfrecords_custom/splitted/ffhq-r%02d.tfrecords.%03d

12 |

13 | SAMPLES_PATH: /mnt/data/tal/ffhq_ds/ffhq-dataset/images1024x1024/69000

14 | STYLE_MIX_PATH: style_mixing/test_images/set_ffhq

15 |

16 | MAX_RESOLUTION_LEVEL: 8

17 | MODEL:

18 | LATENT_SPACE_SIZE: 512

19 | LAYER_COUNT: 7

20 | MAX_CHANNEL_COUNT: 512

21 | START_CHANNEL_COUNT: 64

22 | DLATENT_AVG_BETA: 0.995

23 | MAPPING_LAYERS: 8

24 | BETA_KL: 0.2

25 | BETA_REC: 0.1

26 | BETA_NEG: [2048, 2048, 2048, 1024, 512, 512, 512, 512, 512]

27 | SCALE: 0.000005

28 | OUTPUT_DIR: /home/tal/tmp/SoftIntroVAE/training_artifacts/ffhq

29 | TRAIN:

30 | BASE_LEARNING_RATE: 0.002

31 | EPOCHS_PER_LOD: 16

32 | NUM_VAE: 1

33 | LEARNING_DECAY_RATE: 0.1

34 | LEARNING_DECAY_STEPS: []

35 | TRAIN_EPOCHS: 300

36 | # 4 8 16 32 64 128 256

37 | LOD_2_BATCH_8GPU: [512, 256, 128, 64, 32, 32, 32, 32, 32] # If GPU memory ~16GB reduce last number from 32 to 24

38 | LOD_2_BATCH_4GPU: [ 512, 256, 128, 64, 32, 32, 16, 8, 4 ]

39 | LOD_2_BATCH_2GPU: [ 128, 128, 128, 64, 32, 16, 8 ]

40 | LOD_2_BATCH_1GPU: [ 128, 128, 128, 32, 16, 8, 4 ]

41 |

42 | LEARNING_RATES: [0.0015, 0.0015, 0.0015, 0.0015, 0.0015, 0.0015, 0.002, 0.003, 0.003]

43 |

--------------------------------------------------------------------------------
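The `# 4 8 16 32 64 128 256` comment above the `LOD_2_BATCH_*` lists suggests that each list position is a level of detail (LOD) whose resolution doubles from 4x4 upward. The sketch below spells that mapping out for the single-GPU list. The function names are hypothetical, and clamping an out-of-range LOD to the last list entry is an assumption made for this illustration, not behaviour confirmed by the files shown here.

```python
# Single-GPU batch sizes as listed in the YAML configs above.
LOD_2_BATCH_1GPU = [128, 128, 128, 32, 16, 8, 4]


def resolution_for_lod(lod):
    """LOD 0 is 4x4 and every further level doubles the side length."""
    return 4 * 2 ** lod


def batch_size_for_lod(lod, lod_2_batch):
    """Look up the batch size for a LOD (assumption: clamp to the last entry)."""
    return lod_2_batch[min(lod, len(lod_2_batch) - 1)]


for lod in range(len(LOD_2_BATCH_1GPU)):
    side = resolution_for_lod(lod)
    print(f"LOD {lod}: {side}x{side}, batch size {batch_size_for_lod(lod, LOD_2_BATCH_1GPU)}")
```

Under this reading, `LAYER_COUNT: 7` covers LODs 0 to 6, and the last one corresponds to the 256x256 target resolution (2 to the power of `MAX_RESOLUTION_LEVEL: 8`).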

/soft_intro_vae_3d/render/README.md:

--------------------------------------------------------------------------------

1 | # Rendering Beautiful Point Clouds with Mitsuba Renderer

2 | This code was adapted from the code by [tolgabirdal](https://github.com/tolgabirdal/Mitsuba2PointCloudRenderer)

3 | and modified to work with Soft-IntroVAE output.

4 |

5 | # Installing Mitsuba 2 (on a Linux machine)

6 | Follow these steps:

7 | 1. Follow https://mitsuba2.readthedocs.io/en/latest/src/getting_started/compiling.html#linux

8 | 2. Clone mitsuba2: git clone --recursive https://github.com/mitsuba-renderer/mitsuba2

9 | 3. Install `openEXR`: `sudo apt-get install libopenexr-dev`

10 | 4. `pip install openexr`

11 | 5. Replace 'PATH_TO_MITSUBA2' in the 'render_mitsuba2_pc.py' with the path to your local 'mitsuba' file.

12 |

13 |

14 | # Multiple Point Cloud Renderer using Mitsuba 2

15 |

16 | Calling the script **render_mitsuba2_pc.py** automatically performs the following in order:

17 |

18 | 1. generates an XML file, which describes a 3D scene in the format used by Mitsuba.

19 | 2. calls Mitsuba2 to render the point cloud into an EXR

20 | 3. processes the EXR into a jpg file.

21 | 4. iterates for multiple point clouds present in the tensor (.npy)

22 |

23 | The script can process both `.ply` and `.npy` files. It is heavily inspired by the [PointFlow renderer](https://github.com/zekunhao1995/PointFlowRenderer).

24 |

25 |

26 | ## Dependencies

27 | * Python >= 3.6

28 | * [Mitsuba 2](http://www.mitsuba-renderer.org/)

29 | * Python packages used by 'render_mitsuba2_pc': OpenEXR, Imath, PIL

30 |

31 | Ensure that Mitsuba 2 can be called as 'mitsuba' by following the [instructions here](https://mitsuba2.readthedocs.io/en/latest/src/getting_started/compiling.html#linux).

32 | Also make sure that the 'PATH_TO_MITSUBA2' in the code is replaced by the path to your local 'mitsuba' file.

33 |

34 | ## Instructions

35 |

36 | Replace 'PATH_TO_MITSUBA2' in the 'render_mitsuba2_pc.py' with the path to your local 'mitsuba' file. Then call:

37 | ```bash

38 | # Render a single or multiple JPG file(s) as:

39 | python render_mitsuba2_pc.py interpolations.npy

40 |

41 | # It could also render a ply file

42 | python render_mitsuba2_pc.py chair.ply

43 | ```

44 |

45 | All outputs, including the resulting JPG files, are saved in the directory of the input point cloud. The intermediate EXR/XML files remain in that folder and have to be removed by the user.

46 |

47 | * To animate a sequence of images in a `gif` format, it is recommended to use `imageio`.

48 |

--------------------------------------------------------------------------------

/soft_intro_vae_tutorial/README.md:

--------------------------------------------------------------------------------

1 | # soft-intro-vae-pytorch-tutorials

2 |

3 | Step-by-step Jupyter Notebook tutorials for Soft-IntroVAE

4 |

5 |

6 |

7 |

8 |

9 |

10 |

11 |

12 |

13 |

14 |

15 |

16 |

17 | - [soft-intro-vae-pytorch-tutorials](#soft-intro-vae-pytorch-tutorials)

18 | * [Running Instructions](#running-instructions)

19 | * [Files and directories in the repository](#files-and-directories-in-the-repository)

20 | * [But Wait, There is More...](#but-wait--there-is-more)

21 |

22 | ## Running Instructions

23 | * This Jupyter Notebook can be opened locally with Anaconda, or online via Google Colab.

24 | * To run online, go to https://colab.research.google.com/ and drag-and-drop the `soft_intro_vae_code_tutorial.ipynb` file.

25 | * On Colab, note the "directory" icon on the left; figures and checkpoints are saved in this directory.

26 | * To run the training on the image dataset, it is better to have a GPU. In Google Colab select `Runtime->Change runtime type->GPU`.

27 | * You can also use NBViewer to render the notebooks: [Open with Jupyter NBviewer](https://nbviewer.jupyter.org/github/taldatech/soft-intro-vae-pytorch/tree/main/)

28 |

29 | ## Files and directories in the repository

30 |

31 | |File name | Purpose |

32 | |----------------------|------|

33 | |`soft_intro_vae_2d_code_tutorial.ipynb`| Soft-IntroVAE tutorial for 2D datasets|

34 | |`soft_intro_vae_image_code_tutorial.ipynb`| Soft-IntroVAE tutorial for image datasets|

35 | |`soft_intro_vae_bootstrap_code_tutorial.ipynb`| Bootstrap Soft-IntroVAE tutorial for image datasets (not used in the paper)|

36 |

37 |

38 | ## But Wait, There is More...

39 | * General Tutorials (Jupyter Notebooks with code)

40 | * [CS236756 - Intro to Machine Learning](https://github.com/taldatech/cs236756-intro-to-ml)

41 | * [EE046202 - Unsupervised Learning and Data Analysis](https://github.com/taldatech/ee046202-unsupervised-learning-data-analysis)

42 | * [EE046746 - Computer Vision](https://github.com/taldatech/ee046746-computer-vision)

--------------------------------------------------------------------------------

/soft_intro_vae_3d/utils/util.py:

--------------------------------------------------------------------------------

1 | import logging

2 | import re

3 | from os import listdir, makedirs

4 | from os.path import join, exists

5 | from shutil import rmtree

6 | from time import sleep

7 |

8 | import torch

9 |

10 |

11 | def setup_logging(log_dir):

12 | makedirs(log_dir, exist_ok=True)

13 |

14 | logpath = join(log_dir, 'log.txt')

15 | filemode = 'a' if exists(logpath) else 'w'

16 |

17 | # set up logging to file - see previous section for more details

18 | logging.basicConfig(level=logging.DEBUG,

19 | format='%(asctime)s %(message)s',

20 | datefmt='%m-%d %H:%M:%S',

21 | filename=logpath,

22 | filemode=filemode)

23 | # define a Handler which writes DEBUG messages or higher to sys.stderr

24 | console = logging.StreamHandler()

25 | console.setLevel(logging.DEBUG)

26 | # set a format which is simpler for console use

27 | formatter = logging.Formatter('%(asctime)s: %(levelname)-8s %(message)s')

28 | # tell the handler to use this format

29 | console.setFormatter(formatter)

30 | # add the handler to the root logger

31 | logging.getLogger('').addHandler(console)

32 |

33 |

34 | def prepare_results_dir(config):

35 | output_dir = join(config['results_root'], config['arch'],

36 | config['experiment_name'])

37 | if config['clean_results_dir']:

38 | if exists(output_dir):

39 | print('Attention! Cleaning results directory in 10 seconds!')

40 | sleep(10)

41 | rmtree(output_dir, ignore_errors=True)

42 | makedirs(output_dir, exist_ok=True)

43 | makedirs(join(output_dir, 'weights'), exist_ok=True)

44 | makedirs(join(output_dir, 'samples'), exist_ok=True)

45 | makedirs(join(output_dir, 'results'), exist_ok=True)

46 | return output_dir

47 |

48 |

49 | def find_latest_epoch(dirpath):

50 | # Files with weights are in format ddddd_{D,E,G}.pth

51 | epoch_regex = re.compile(r'^(?P<n_epoch>\d+)_[DEG]\.pth$')

52 | epochs_completed = []

53 | if exists(join(dirpath, 'weights')):

54 | dirpath = join(dirpath, 'weights')

55 | for f in listdir(dirpath):

56 | m = epoch_regex.match(f)

57 | if m:

58 | epochs_completed.append(int(m.group('n_epoch')))

59 | return max(epochs_completed) if epochs_completed else 0

60 |

61 |

62 | def cuda_setup(cuda=False, gpu_idx=0):

63 | if cuda and torch.cuda.is_available():

64 | device = torch.device('cuda')

65 | torch.cuda.set_device(gpu_idx)

66 | else:

67 | device = torch.device('cpu')

68 | return device

69 |

70 |

--------------------------------------------------------------------------------
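The weight-file convention that `find_latest_epoch` relies on (`ddddd_{D,E,G}.pth`, read through the named group `n_epoch`) can be tried on an in-memory list of file names without touching the file system. This is a sketch: the function name `latest_epoch` and the sample file names are made up for illustration.

```python
import re

# Digits, an underscore, one of D/E/G, then ".pth"; the digits are captured as "n_epoch".
EPOCH_REGEX = re.compile(r'^(?P<n_epoch>\d+)_[DEG]\.pth$')


def latest_epoch(filenames):
    """Return the highest epoch number among matching file names, or 0 if none match."""
    epochs = []
    for name in filenames:
        match = EPOCH_REGEX.match(name)
        if match:
            epochs.append(int(match.group('n_epoch')))
    return max(epochs) if epochs else 0


files = ["00050_E.pth", "00100_D.pth", "00100_E.pth", "log.txt"]
print(latest_epoch(files))  # 100
```

File names that do not follow the pattern, such as `log.txt`, are ignored, and an empty or non-matching listing yields epoch 0.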

/style_soft_intro_vae/defaults.py:

--------------------------------------------------------------------------------

1 | # Copyright 2019-2020 Stanislav Pidhorskyi

2 | #

3 | # Licensed under the Apache License, Version 2.0 (the "License");

4 | # you may not use this file except in compliance with the License.

5 | # You may obtain a copy of the License at

6 | #

7 | # http://www.apache.org/licenses/LICENSE-2.0

8 | #

9 | # Unless required by applicable law or agreed to in writing, software

10 | # distributed under the License is distributed on an "AS IS" BASIS,

11 | # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

12 | # See the License for the specific language governing permissions and

13 | # limitations under the License.

14 | # ==============================================================================

15 |

16 | from yacs.config import CfgNode as CN

17 |

18 |

19 | _C = CN()

20 |

21 | _C.NAME = ""

22 | _C.PPL_CELEBA_ADJUSTMENT = False

23 | _C.OUTPUT_DIR = "results"

24 |

25 | _C.DATASET = CN()

26 | _C.DATASET.PATH = 'celeba/data_fold_%d_lod_%d.pkl'

27 | _C.DATASET.PATH_TEST = ''

28 | _C.DATASET.FFHQ_SOURCE = '/data/datasets/ffhq-dataset/tfrecords/ffhq/ffhq-r%02d.tfrecords'

29 | _C.DATASET.PART_COUNT = 1

30 | _C.DATASET.PART_COUNT_TEST = 1

31 | _C.DATASET.SIZE = 70000

32 | _C.DATASET.SIZE_TEST = 10000

33 | _C.DATASET.FLIP_IMAGES = True

34 | _C.DATASET.SAMPLES_PATH = 'dataset_samples/faces/realign128x128'

35 |

36 | _C.DATASET.STYLE_MIX_PATH = 'style_mixing/test_images/set_celeba/'

37 |

38 | _C.DATASET.MAX_RESOLUTION_LEVEL = 10

39 |

40 | _C.MODEL = CN()

41 |

42 | _C.MODEL.LAYER_COUNT = 6

43 | _C.MODEL.START_CHANNEL_COUNT = 64

44 | _C.MODEL.MAX_CHANNEL_COUNT = 512

45 | _C.MODEL.LATENT_SPACE_SIZE = 256

46 | _C.MODEL.DLATENT_AVG_BETA = 0.995

47 | _C.MODEL.TRUNCATIOM_PSI = 0.7

48 | _C.MODEL.TRUNCATIOM_CUTOFF = 8

49 | _C.MODEL.STYLE_MIXING_PROB = 0.9

50 | _C.MODEL.MAPPING_LAYERS = 5

51 | _C.MODEL.CHANNELS = 3

52 | _C.MODEL.GENERATOR = "GeneratorDefault"

53 | _C.MODEL.ENCODER = "EncoderDefault"

54 | _C.MODEL.MAPPING_TO_LATENT = "MappingToLatent"

55 | _C.MODEL.MAPPING_FROM_LATENT = "MappingFromLatent"

56 | _C.MODEL.Z_REGRESSION = False

57 | _C.MODEL.BETA_KL = 1.0

58 | _C.MODEL.BETA_REC = 1.0

59 | _C.MODEL.BETA_NEG = [2048, 2048, 1024, 512, 512, 128, 128, 64, 64]

60 | _C.MODEL.SCALE = 1 / (3 * 256 ** 2)

61 |

62 | _C.TRAIN = CN()

63 |

64 | _C.TRAIN.EPOCHS_PER_LOD = 15

65 |

66 | _C.TRAIN.BASE_LEARNING_RATE = 0.0015

67 | _C.TRAIN.ADAM_BETA_0 = 0.0

68 | _C.TRAIN.ADAM_BETA_1 = 0.99

69 | _C.TRAIN.LEARNING_DECAY_RATE = 0.1

70 | _C.TRAIN.LEARNING_DECAY_STEPS = []

71 | _C.TRAIN.TRAIN_EPOCHS = 110

72 | _C.TRAIN.NUM_VAE = 1

73 |

74 | _C.TRAIN.LOD_2_BATCH_8GPU = [512, 256, 128, 64, 32, 32]

75 | _C.TRAIN.LOD_2_BATCH_4GPU = [512, 256, 128, 64, 32, 16]

76 | _C.TRAIN.LOD_2_BATCH_2GPU = [256, 256, 128, 64, 32, 16]

77 | _C.TRAIN.LOD_2_BATCH_1GPU = [128, 128, 128, 64, 32, 16]

78 |

79 |

80 | _C.TRAIN.SNAPSHOT_FREQ = [300, 300, 300, 100, 50, 30, 20, 20, 10]

81 |

82 | _C.TRAIN.REPORT_FREQ = [100, 80, 60, 30, 20, 10, 10, 5, 5]

83 |

84 | _C.TRAIN.LEARNING_RATES = [0.002]

85 |

86 |

87 | def get_cfg_defaults():

88 | return _C.clone()

89 |

--------------------------------------------------------------------------------

/soft_intro_vae_2d/main.py:

--------------------------------------------------------------------------------

1 | """

2 | Main function for arguments parsing

3 | Author: Tal Daniel

4 | """

5 | # imports

6 | import torch

7 | import argparse

8 | from train_soft_intro_vae_2d import train_soft_intro_vae_toy

9 |

10 | if __name__ == "__main__":

11 | """

12 | Recommended hyper-parameters:

13 | - 8Gaussians: beta_kl: 0.3, beta_rec: 0.2, beta_neg: 0.9, z_dim: 2, batch_size: 512

14 | - 2spirals: beta_kl: 0.5, beta_rec: 0.2, beta_neg: 1.0, z_dim: 2, batch_size: 512

15 | - checkerboard: beta_kl: 0.1, beta_rec: 0.2, beta_neg: 0.2, z_dim: 2, batch_size: 512

16 | - rings: beta_kl: 0.2, beta_rec: 0.2, beta_neg: 1.0, z_dim: 2, batch_size: 512

17 | """

18 | parser = argparse.ArgumentParser(description="train Soft-IntroVAE 2D")

19 | parser.add_argument("-d", "--dataset", type=str,

20 | help="dataset to train on: ['8Gaussians', '2spirals', 'checkerboard', 'rings']")

21 | parser.add_argument("-n", "--num_iter", type=int, help="total number of iterations to run", default=30_000)

22 | parser.add_argument("-z", "--z_dim", type=int, help="latent dimensions", default=2)

23 | parser.add_argument("-l", "--lr", type=float, help="learning rate", default=2e-4)

24 | parser.add_argument("-b", "--batch_size", type=int, help="batch size", default=512)

25 | parser.add_argument("-v", "--num_vae", type=int, help="number of iterations for vanilla vae training", default=2000)

26 | parser.add_argument("-r", "--beta_rec", type=float, help="beta coefficient for the reconstruction loss",

27 | default=0.2)

28 | parser.add_argument("-k", "--beta_kl", type=float, help="beta coefficient for the kl divergence",

29 | default=0.3)

30 | parser.add_argument("-e", "--beta_neg", type=float,

31 | help="beta coefficient for the kl divergence in the expELBO function", default=0.9)

32 | parser.add_argument("-g", "--gamma_r", type=float,

33 | help="coefficient for the reconstruction loss for fake data in the decoder", default=1e-8)

34 | parser.add_argument("-s", "--seed", type=int, help="seed", default=-1)

35 | parser.add_argument("-p", "--pretrained", type=str, help="path to pretrained model, to continue training",

36 | default="None")

37 | parser.add_argument("-c", "--device", type=int, help="device: -1 for cpu, 0 and up for specific cuda device",

38 | default=-1)

39 | args = parser.parse_args()

40 |

41 | device = torch.device("cpu") if args.device <= -1 else torch.device("cuda:" + str(args.device))

42 | pretrained = None if args.pretrained == "None" else args.pretrained

43 | if args.dataset == '8Gaussians':

44 | scale = 1

45 | else:

46 | scale = 2

47 | # train

48 | model = train_soft_intro_vae_toy(z_dim=args.z_dim, lr_e=args.lr, lr_d=args.lr, batch_size=args.batch_size,

49 | n_iter=args.num_iter, num_vae=args.num_vae, save_interval=5000,

50 | recon_loss_type="mse", beta_kl=args.beta_kl, beta_rec=args.beta_rec,

51 | beta_neg=args.beta_neg, test_iter=5000, seed=args.seed, scale=scale,

52 | device=device, dataset=args.dataset)

53 |

--------------------------------------------------------------------------------
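The entry script above uses two small conventions: a device index of `-1` selects the CPU, and the literal string `"None"` stands for "no pretrained checkpoint". The sketch below reproduces only those two arguments with `argparse` and returns a device name string instead of a `torch.device`, so it runs without PyTorch. The names `build_parser` and `resolve_runtime_options` are hypothetical.

```python
import argparse


def build_parser():
    """Parser with just the two arguments discussed here (illustrative subset)."""
    parser = argparse.ArgumentParser(description="illustrative subset of the training CLI")
    parser.add_argument("-p", "--pretrained", type=str, default="None",
                        help="path to pretrained model, to continue training")
    parser.add_argument("-c", "--device", type=int, default=-1,
                        help="device: -1 for cpu, 0 and up for specific cuda device")
    return parser


def resolve_runtime_options(args):
    """Translate the raw CLI values into a device name and an optional checkpoint path."""
    device_name = "cpu" if args.device <= -1 else "cuda:" + str(args.device)
    pretrained = None if args.pretrained == "None" else args.pretrained
    return device_name, pretrained


defaults = resolve_runtime_options(build_parser().parse_args([]))
custom = resolve_runtime_options(build_parser().parse_args(["-c", "0", "-p", "ckpt.pth"]))
print(defaults, custom)
```

The string sentinel keeps the argument type a plain `str`; the conversion to a real `None` happens once, right after parsing.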

/style_soft_intro_vae/environment.yml:

--------------------------------------------------------------------------------

1 | name: tf_torch

2 | channels:

3 | - pytorch

4 | - conda-forge

5 | - defaults

6 | dependencies:

7 | - _libgcc_mutex=0.1=main

8 | - blas=1.0=mkl

9 | - ca-certificates=2020.6.24=0

10 | - certifi=2020.6.20=py36_0

11 | - cffi=1.14.0=py36he30daa8_1

12 | - cudatoolkit=10.1.243=h6bb024c_0

13 | - cycler=0.10.0=py_2

14 | - dbus=1.13.6=he372182_0

15 | - expat=2.2.9=he1b5a44_2

16 | - fontconfig=2.13.1=he4413a7_1000

17 | - freetype=2.10.2=he06d7ca_0

18 | - glib=2.65.0=h3eb4bd4_0

19 | - gst-plugins-base=1.14.0=hbbd80ab_1

20 | - gstreamer=1.14.0=hb31296c_0

21 | - icu=58.2=hf484d3e_1000

22 | - imageio=2.8.0=py_0

23 | - intel-openmp=2020.1=217

24 | - joblib=0.16.0=py_0

25 | - jpeg=9d=h516909a_0

26 | - kiwisolver=1.2.0=py36hdb11119_0

27 | - ld_impl_linux-64=2.33.1=h53a641e_7

28 | - libedit=3.1.20191231=h7b6447c_0

29 | - libffi=3.3=he6710b0_1

30 | - libgcc-ng=9.1.0=hdf63c60_0

31 | - libgfortran-ng=7.5.0=hdf63c60_6

32 | - libopenblas=0.3.7=h5ec1e0e_6

33 | - libpng=1.6.37=hed695b0_1

34 | - libstdcxx-ng=9.1.0=hdf63c60_0

35 | - libtiff=4.1.0=hc7e4089_6

36 | - libuuid=2.32.1=h14c3975_1000

37 | - libwebp-base=1.1.0=h516909a_3

38 | - libxcb=1.13=h14c3975_1002

39 | - libxml2=2.9.10=he19cac6_1

40 | - lz4-c=1.9.2=he1b5a44_1

41 | - matplotlib=3.2.2=0

42 | - matplotlib-base=3.2.2=py36hef1b27d_0

43 | - mkl=2020.1=217

44 | - mkl-service=2.3.0=py36he904b0f_0

45 | - mkl_fft=1.1.0=py36h23d657b_0

46 | - mkl_random=1.1.1=py36h0573a6f_0

47 | - ncurses=6.2=he6710b0_1

48 | - ninja=1.9.0=py36hfd86e86_0

49 | - olefile=0.46=py_0

50 | - openssl=1.1.1g=h516909a_0

51 | - packaging=20.4=pyh9f0ad1d_0

52 | - pcre=8.44=he1b5a44_0

53 | - pillow=7.2.0=py36h8328e55_0

54 | - pip=20.1.1=py36_1

55 | - pthread-stubs=0.4=h14c3975_1001

56 | - pycparser=2.20=py_0

57 | - pyparsing=2.4.7=pyh9f0ad1d_0

58 | - pyqt=5.9.2=py36hcca6a23_4

59 | - python=3.6.10=h7579374_2

60 | - python-dateutil=2.8.1=py_0

61 | - python_abi=3.6=1_cp36m

62 | - pytorch=1.5.1=py3.6_cuda10.1.243_cudnn7.6.3_0

63 | - pyyaml=5.3.1=py36h8c4c3a4_0

64 | - qt=5.9.7=h5867ecd_1

65 | - readline=8.0=h7b6447c_0

66 | - scikit-learn=0.23.1=py36h423224d_0

67 | - scipy=1.5.0=py36h0b6359f_0

68 | - sip=4.19.8=py36hf484d3e_0

69 | - six=1.15.0=pyh9f0ad1d_0

70 | - sqlite=3.32.3=h62c20be_0

71 | - threadpoolctl=2.1.0=pyh5ca1d4c_0

72 | - tk=8.6.10=hbc83047_0

73 | - torchvision=0.6.1=py36_cu101

74 | - tornado=6.0.4=py36h8c4c3a4_1

75 | - tqdm=4.47.0=pyh9f0ad1d_0

76 | - wheel=0.34.2=py36_0

77 | - xorg-libxau=1.0.9=h14c3975_0

78 | - xorg-libxdmcp=1.1.3=h516909a_0

79 | - xz=5.2.5=h7b6447c_0

80 | - yacs=0.1.6=py_0

81 | - yaml=0.2.5=h516909a_0

82 | - zlib=1.2.11=h7b6447c_3

83 | - zstd=1.4.4=h6597ccf_3

84 | - pip:

85 | - absl-py==0.9.0

86 | - astor==0.8.1

87 | - bimpy==0.0.13

88 | - dareblopy==0.0.3

89 | - gast==0.3.3

90 | - grpcio==1.30.0

91 | - h5py==2.10.0

92 | - importlib-metadata==1.7.0

93 | - keras-applications==1.0.8

94 | - keras-preprocessing==1.1.2

95 | - markdown==3.2.2

96 | - mock==4.0.2

97 | - numpy==1.14.5

98 | - protobuf==3.12.2

99 | - setuptools==39.1.0

100 | - tensorboard==1.13.1

101 | - tensorflow-estimator==1.13.0

102 | - tensorflow-gpu==1.13.1

103 | - termcolor==1.1.0

104 | - werkzeug==1.0.1

105 | - zipp==3.1.0

106 | prefix: /home/tal/anaconda3/envs/tf_torch

107 |

108 |

--------------------------------------------------------------------------------

/soft_intro_vae/main.py:

--------------------------------------------------------------------------------

1 | """

2 | Main function for arguments parsing

3 | Author: Tal Daniel

4 | """

5 | # imports

6 | import torch

7 | import argparse

8 | from train_soft_intro_vae import train_soft_intro_vae

9 |

10 | if __name__ == "__main__":

11 | """

12 | Recommended hyper-parameters:

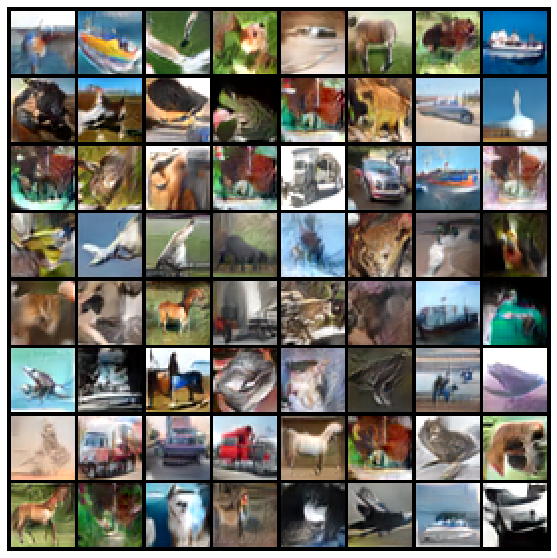

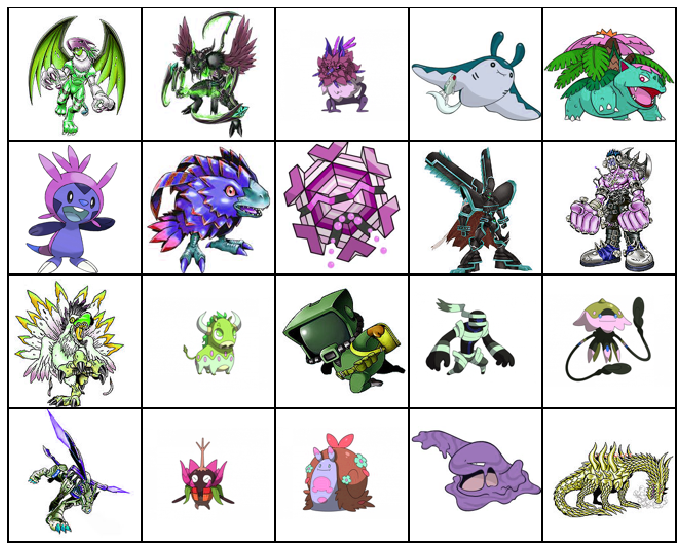

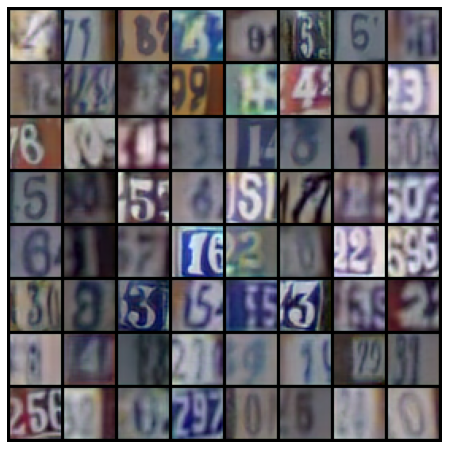

13 | - CIFAR10: beta_kl: 1.0, beta_rec: 1.0, beta_neg: 256, z_dim: 128, batch_size: 32

14 | - SVHN: beta_kl: 1.0, beta_rec: 1.0, beta_neg: 256, z_dim: 128, batch_size: 32

15 | - MNIST: beta_kl: 1.0, beta_rec: 1.0, beta_neg: 256, z_dim: 32, batch_size: 128

16 | - FashionMNIST: beta_kl: 1.0, beta_rec: 1.0, beta_neg: 256, z_dim: 32, batch_size: 128

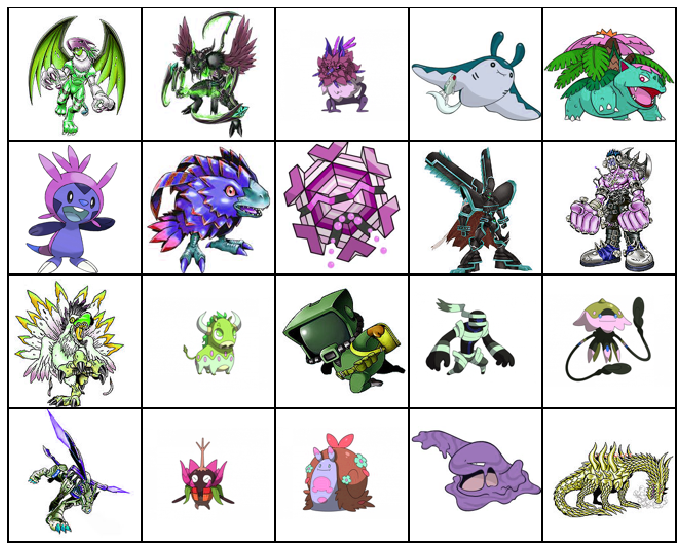

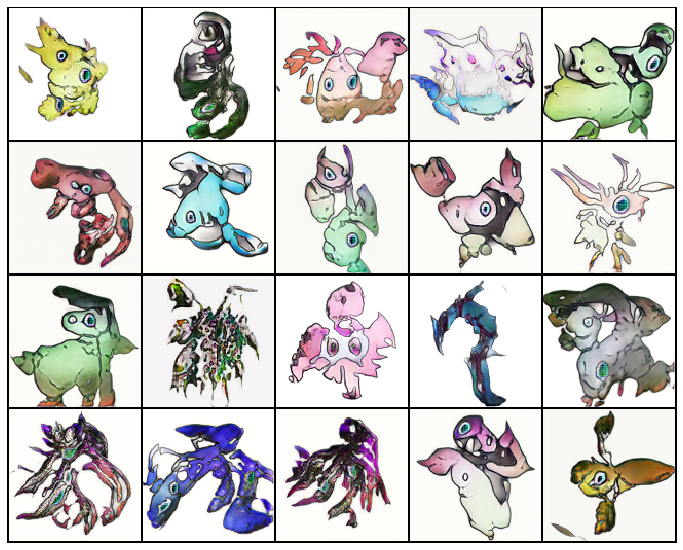

17 | - Monsters: beta_kl: 0.2, beta_rec: 0.2, beta_neg: 256, z_dim: 128, batch_size: 16

18 | - CelebA-HQ: beta_kl: 1.0, beta_rec: 0.5, beta_neg: 1024, z_dim: 256, batch_size: 8

19 | """

20 | parser = argparse.ArgumentParser(description="train Soft-IntroVAE")

21 | parser.add_argument("-d", "--dataset", type=str,

22 | help="dataset to train on: ['cifar10', 'mnist', 'fmnist', 'svhn', 'monsters128', 'celeb128', "

23 | "'celeb256', 'celeb1024']")

24 | parser.add_argument("-n", "--num_epochs", type=int, help="total number of epochs to run", default=250)

25 | parser.add_argument("-z", "--z_dim", type=int, help="latent dimensions", default=128)

26 | parser.add_argument("-l", "--lr", type=float, help="learning rate", default=2e-4)

27 | parser.add_argument("-b", "--batch_size", type=int, help="batch size", default=32)

28 | parser.add_argument("-v", "--num_vae", type=int, help="number of epochs for vanilla vae training", default=0)

29 | parser.add_argument("-r", "--beta_rec", type=float, help="beta coefficient for the reconstruction loss",

30 | default=1.0)

31 | parser.add_argument("-k", "--beta_kl", type=float, help="beta coefficient for the kl divergence",

32 | default=1.0)

33 | parser.add_argument("-e", "--beta_neg", type=float,

34 | help="beta coefficient for the kl divergence in the expELBO function", default=1.0)

35 | parser.add_argument("-g", "--gamma_r", type=float,

36 | help="coefficient for the reconstruction loss for fake data in the decoder", default=1e-8)

37 | parser.add_argument("-s", "--seed", type=int, help="seed", default=-1)

38 | parser.add_argument("-p", "--pretrained", type=str, help="path to pretrained model, to continue training",

39 | default="None")

40 | parser.add_argument("-c", "--device", type=int, help="device: -1 for cpu, 0 and up for specific cuda device",

41 | default=-1)

42 | parser.add_argument('-f', "--fid", action='store_true', help="if specified, FID will be calculated during training")

43 | args = parser.parse_args()

44 |

45 | device = torch.device("cpu") if args.device <= -1 else torch.device("cuda:" + str(args.device))

46 | pretrained = None if args.pretrained == "None" else args.pretrained

47 | train_soft_intro_vae(dataset=args.dataset, z_dim=args.z_dim, batch_size=args.batch_size, num_workers=0,

48 | num_epochs=args.num_epochs,

49 | num_vae=args.num_vae, beta_kl=args.beta_kl, beta_neg=args.beta_neg, beta_rec=args.beta_rec,

50 | device=device, save_interval=50, start_epoch=0, lr_e=args.lr, lr_d=args.lr,

51 | pretrained=pretrained, seed=args.seed,

52 | test_iter=1000, with_fid=args.fid)

53 |

--------------------------------------------------------------------------------

/soft_intro_vae_bootstrap/main.py:

--------------------------------------------------------------------------------

1 | """

2 | Main function for arguments parsing

3 | Author: Tal Daniel

4 | """

5 | # imports

6 | import torch

7 | import argparse

8 | from train_soft_intro_vae_bootstrap import train_soft_intro_vae

9 |

10 | if __name__ == "__main__":

11 | """

12 | Recommended hyper-parameters:

13 | - CIFAR10: beta_kl: 1.0, beta_rec: 1.0, beta_neg: 256, z_dim: 128, batch_size: 32

14 | - SVHN: beta_kl: 1.0, beta_rec: 1.0, beta_neg: 256, z_dim: 128, batch_size: 32

15 | - MNIST: beta_kl: 1.0, beta_rec: 1.0, beta_neg: 256, z_dim: 32, batch_size: 128

16 | - FashionMNIST: beta_kl: 1.0, beta_rec: 1.0, beta_neg: 256, z_dim: 32, batch_size: 128

17 | - Monsters: beta_kl: 0.2, beta_rec: 0.2, beta_neg: 256, z_dim: 128, batch_size: 16

18 | """

19 | parser = argparse.ArgumentParser(description="train Soft-IntroVAE")

20 | parser.add_argument("-d", "--dataset", type=str,

21 | help="dataset to train on: ['cifar10', 'mnist', 'fmnist', 'svhn', 'monsters128', 'celeb128', "

22 | "'celeb256', 'celeb1024']")

23 | parser.add_argument("-n", "--num_epochs", type=int, help="total number of epochs to run", default=250)

24 | parser.add_argument("-z", "--z_dim", type=int, help="latent dimensions", default=128)

25 | parser.add_argument("-l", "--lr", type=float, help="learning rate", default=2e-4)

26 | parser.add_argument("-b", "--batch_size", type=int, help="batch size", default=32)

27 | parser.add_argument("-v", "--num_vae", type=int, help="number of epochs for vanilla vae training", default=0)

28 | parser.add_argument("-r", "--beta_rec", type=float, help="beta coefficient for the reconstruction loss",

29 | default=1.0)

30 | parser.add_argument("-k", "--beta_kl", type=float, help="beta coefficient for the kl divergence",

31 | default=1.0)

32 | parser.add_argument("-e", "--beta_neg", type=float,

33 | help="beta coefficient for the kl divergence in the expELBO function", default=1.0)

34 | parser.add_argument("-g", "--gamma_r", type=float,

35 | help="coefficient for the reconstruction loss for fake data in the decoder", default=1.0)

36 | parser.add_argument("-s", "--seed", type=int, help="seed", default=-1)

37 | parser.add_argument("-p", "--pretrained", type=str, help="path to pretrained model, to continue training",

38 | default="None")

39 | parser.add_argument("-c", "--device", type=int, help="device: -1 for cpu, 0 and up for specific cuda device",

40 | default=-1)

41 | parser.add_argument('-f', "--fid", action='store_true', help="if specified, FID will be calculated during training")

42 | parser.add_argument("-o", "--freq", type=int, help="epochs between copying weights from decoder to target decoder",

43 | default=1)

44 | args = parser.parse_args()

45 |

46 | device = torch.device("cpu") if args.device <= -1 else torch.device("cuda:" + str(args.device))

47 | pretrained = None if args.pretrained == "None" else args.pretrained

48 | train_soft_intro_vae(dataset=args.dataset, z_dim=args.z_dim, batch_size=args.batch_size, num_workers=0,

49 | num_epochs=args.num_epochs, copy_to_target_freq=args.freq,

50 | num_vae=args.num_vae, beta_kl=args.beta_kl, beta_neg=args.beta_neg, beta_rec=args.beta_rec,

51 | device=device, save_interval=50, start_epoch=0, lr_e=args.lr, lr_d=args.lr,

52 | pretrained=pretrained, seed=args.seed,

53 | test_iter=1000, with_fid=args.fid)

54 |

--------------------------------------------------------------------------------

/style_soft_intro_vae/utils.py:

--------------------------------------------------------------------------------

1 | # Copyright 2019-2020 Stanislav Pidhorskyi

2 | #

3 | # Licensed under the Apache License, Version 2.0 (the "License");

4 | # you may not use this file except in compliance with the License.

5 | # You may obtain a copy of the License at

6 | #

7 | # http://www.apache.org/licenses/LICENSE-2.0

8 | #

9 | # Unless required by applicable law or agreed to in writing, software

10 | # distributed under the License is distributed on an "AS IS" BASIS,

11 | # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

12 | # See the License for the specific language governing permissions and

13 | # limitations under the License.

14 | # ==============================================================================

15 |

16 | from torch import nn

17 | import torch

18 | import threading

19 | import hashlib

20 | import pickle

21 | import os

22 |

23 |

24 | class cache:

25 | def __init__(self, function):

26 | self.function = function

27 | self.pickle_name = self.function.__name__

28 |

29 | def __call__(self, *args, **kwargs):

30 | m = hashlib.sha256()

31 | m.update(pickle.dumps((self.function.__name__, args, frozenset(kwargs.items()))))

32 | output_path = os.path.join('.cache', "%s_%s" % (m.hexdigest(), self.pickle_name))

33 | try:

34 | with open(output_path, 'rb') as f:

35 | data = pickle.load(f)

36 | except (FileNotFoundError, pickle.PickleError):

37 | data = self.function(*args, **kwargs)

38 | os.makedirs(os.path.dirname(output_path), exist_ok=True)

39 | with open(output_path, 'wb') as f:

40 | pickle.dump(data, f)

41 | return data

42 |

43 |

44 | def save_model(x, name):

45 | if isinstance(x, nn.DataParallel):

46 | torch.save(x.module.state_dict(), name)

47 | else:

48 | torch.save(x.state_dict(), name)

49 |

50 |

51 | class AsyncCall(object):

52 | def __init__(self, fnc, callback=None):

53 | self.Callable = fnc

54 | self.Callback = callback

55 | self.result = None

56 |

57 | def __call__(self, *args, **kwargs):

58 | self.Thread = threading.Thread(target=self.run, name=self.Callable.__name__, args=args, kwargs=kwargs)

59 | self.Thread.start()

60 | return self

61 |

62 | def wait(self, timeout=None):

63 | self.Thread.join(timeout)

64 | if self.Thread.is_alive():  # Thread.isAlive() was removed in Python 3.9

65 | raise TimeoutError

66 | else:

67 | return self.result

68 |

69 | def run(self, *args, **kwargs):

70 | self.result = self.Callable(*args, **kwargs)

71 | if self.Callback:

72 | self.Callback(self.result)

73 |

74 |

75 | class AsyncMethod(object):

76 | def __init__(self, fnc, callback=None):

77 | self.Callable = fnc

78 | self.Callback = callback

79 |

80 | def __call__(self, *args, **kwargs):

81 | return AsyncCall(self.Callable, self.Callback)(*args, **kwargs)

82 |

83 |

84 | def async_func(fnc=None, callback=None):

85 | if fnc is None:

86 | def add_async_callback(f):

87 | return AsyncMethod(f, callback)

88 | return add_async_callback

89 | else:

90 | return AsyncMethod(fnc, callback)

91 |

92 |

93 | class Registry(dict):

94 | def __init__(self, *args, **kwargs):

95 | super(Registry, self).__init__(*args, **kwargs)

96 |

97 | def register(self, module_name):

98 | def register_fn(module):

99 | assert module_name not in self

100 | self[module_name] = module

101 | return module

102 | return register_fn

103 |

--------------------------------------------------------------------------------

/soft_intro_vae_3d/test_model.py:

--------------------------------------------------------------------------------

1 | """

2 | Test a trained model (on the test split of the data)

3 | """

4 |

5 | import json

6 | import numpy as np

7 |

8 | import torch

9 | import torch.nn.parallel

10 | import torch.utils.data

11 | from torch.utils.data import DataLoader

12 |

13 | from utils.util import cuda_setup

14 | from metrics.jsd import jsd_between_point_cloud_sets

15 |

16 | from models.vae import SoftIntroVAE

17 |

18 |

19 | def prepare_model(config, path_to_weights, device=torch.device("cpu")):

20 | model = SoftIntroVAE(config).to(device)

21 | model.load_state_dict(torch.load(path_to_weights, map_location=device))

22 | model.eval()

23 | return model

24 |

25 |

26 | def prepare_data(config, split='train', batch_size=32):

27 | dataset_name = config['dataset'].lower()

28 | if dataset_name == 'shapenet':

29 | from datasets.shapenet import ShapeNetDataset

30 | dataset = ShapeNetDataset(root_dir=config['data_dir'],

31 | classes=config['classes'], split=split)

32 | else:

33 | raise ValueError(f'Invalid dataset name. Expected `shapenet`. '

34 | f'Got: `{dataset_name}`')

35 | data_loader = DataLoader(dataset, batch_size=batch_size,

36 | shuffle=False, num_workers=4,

37 | drop_last=False, pin_memory=True)

38 | return data_loader

39 |

40 |

41 | def calc_jsd_valid(model, config, prior_std=1.0, split='valid'):

42 | model.eval()

43 | device = cuda_setup(config['cuda'], config['gpu'])

44 | dataset_name = config['dataset'].lower()

45 | if dataset_name == 'shapenet':

46 | from datasets.shapenet import ShapeNetDataset

47 | dataset = ShapeNetDataset(root_dir=config['data_dir'],

48 | classes=config['classes'], split=split)

49 | else:

50 | raise ValueError(f'Invalid dataset name. Expected `shapenet`. '

51 | f'Got: `{dataset_name}`')

52 | classes_selected = ('all' if not config['classes']

53 | else ','.join(config['classes']))

54 | num_samples = len(dataset.point_clouds_names_valid)

55 | data_loader = DataLoader(dataset, batch_size=num_samples,

56 | shuffle=False, num_workers=4,

57 | drop_last=False, pin_memory=True)

58 | # We take 3 times as many samples as there are in test data in order to

59 | # perform JSD calculation in the same manner as in the reference publication

60 |

61 | x, _ = next(iter(data_loader))

62 | x = x.to(device)

63 |

64 | # We average JSD computation from 3 independent trials.

65 | js_results = []

66 | for _ in range(3):

67 | noise = prior_std * torch.randn(3 * num_samples, model.zdim)

68 | noise = noise.to(device)

69 |

70 | with torch.no_grad():

71 | x_g = model.decode(noise)

72 | if x_g.shape[-2:] == (3, 2048):

73 | x_g.transpose_(1, 2)

74 |

75 | jsd = jsd_between_point_cloud_sets(x, x_g, voxels=28)

76 | js_results.append(jsd)

77 | js_result = np.mean(js_results)

78 | return js_result

79 |

80 |

81 | if __name__ == "__main__":

82 | path_to_weights = './results/vae/soft_intro_vae_chair/weights/00350_jsd_0.106.pth'

83 | config_path = 'config/soft_intro_vae_hp.json'

84 | config = None

85 | if config_path is not None and config_path.endswith('.json'):

86 | with open(config_path) as f:

87 | config = json.load(f)

88 | assert config is not None

89 | device = cuda_setup(config['cuda'], config['gpu'])

90 | print("using device: ", device)

91 | model = prepare_model(config, path_to_weights, device=device)

92 | test_jsd = calc_jsd_valid(model, config, prior_std=config["prior_std"], split='test')

93 | print(f'test jsd: {test_jsd}')

94 |

--------------------------------------------------------------------------------

/soft_intro_vae_3d/evaluation/generate_data_for_metrics.py:

--------------------------------------------------------------------------------

1 | import argparse

2 | import json

3 | import logging

4 | import random

5 | from importlib import import_module

6 | from os.path import join

7 |

8 | import numpy as np

9 | import torch

10 | from torch.distributions import Beta

11 | from torch.utils.data import DataLoader

12 |

13 | from datasets.shapenet import ShapeNetDataset

14 | from models.vae import SoftIntroVAE, reparameterize

15 | from utils.util import cuda_setup

16 |

17 |

18 | def prepare_model(config, path_to_weights, device=torch.device("cpu")):

19 | model = SoftIntroVAE(config).to(device)

20 | model.load_state_dict(torch.load(path_to_weights, map_location=device))

21 | model.eval()

22 | return model

23 |

24 |

25 | def main(eval_config):

26 | # Load hyperparameters as they were during training

27 | train_results_path = join(eval_config['results_root'], eval_config['arch'],

28 | eval_config['experiment_name'])

29 | with open(join(train_results_path, 'config.json')) as f:

30 | train_config = json.load(f)

31 |

32 | random.seed(train_config['seed'])

33 | torch.manual_seed(train_config['seed'])

34 | torch.cuda.manual_seed_all(train_config['seed'])

35 |

36 | device = cuda_setup(eval_config['cuda'], eval_config['gpu'])

37 | print("using device: ", device)

38 |

39 | #

40 | # Dataset

41 | #

42 | dataset_name = train_config['dataset'].lower()

43 | if dataset_name == 'shapenet':

44 | dataset = ShapeNetDataset(root_dir=train_config['data_dir'],

45 | classes=train_config['classes'], split='test')

46 | else:

47 | raise ValueError(f'Invalid dataset name. Expected `shapenet`. '

48 | f'Got: `{dataset_name}`')

49 | classes_selected = ('all' if not train_config['classes']

50 | else ','.join(train_config['classes']))

51 |

52 | #

53 | # Models

54 | #

55 |

56 | model = prepare_model(config, path_to_weights, device=device)

57 | model.eval()

58 |

59 | num_samples = len(dataset.point_clouds_names_test)

60 | data_loader = DataLoader(dataset, batch_size=num_samples,

61 | shuffle=False, num_workers=4,

62 | drop_last=False, pin_memory=True)

63 |

64 | # We take 3 times as many samples as there are in test data in order to

65 | # perform JSD calculation in the same manner as in the reference publication

66 |

67 | x, _ = next(iter(data_loader))

68 | x = x.to(device)

69 |

70 | np.save(join(train_results_path, 'results', '_X'), x.cpu().numpy())

71 |

72 | prior_std = eval_config["prior_std"]

73 |

74 | for i in range(3):

75 | noise = prior_std * torch.randn(3 * num_samples, model.zdim)

76 | noise = noise.to(device)

77 |

78 | with torch.no_grad():

79 | x_g = model.decode(noise)

80 | if x_g.shape[-2:] == (3, 2048):

81 | x_g.transpose_(1, 2)

82 | np.save(join(train_results_path, 'results', f'_Xg_{i}'), x_g.cpu().numpy())

83 |

84 | with torch.no_grad():

85 | mu_z, logvar_z = model.encode(x)

86 | data_z = reparameterize(mu_z, logvar_z)

87 | # x_rec = model.decode(data_z) # stochastic

88 | x_rec = model.decode(mu_z) # deterministic

89 | if x_rec.shape[-2:] == (3, 2048):

90 | x_rec.transpose_(1, 2)

91 |

92 | np.save(join(train_results_path, 'results', '_Xrec'), x_rec.cpu().numpy())

93 |

94 |

95 | if __name__ == '__main__':

96 | path_to_weights = './results/vae/soft_intro_vae_chair/weights/00350_jsd_0.106.pth'

97 | config_path = 'config/soft_intro_vae_hp.json'

98 | config = None

99 | if config_path is not None and config_path.endswith('.json'):

100 | with open(config_path) as f:

101 | config = json.load(f)

102 | assert config is not None

103 |

104 | main(config)

105 |

--------------------------------------------------------------------------------

/environment.yml:

--------------------------------------------------------------------------------

1 | name: torch

2 | channels:

3 | - pytorch

4 | - conda-forge

5 | - defaults

6 | dependencies:

7 | - _libgcc_mutex=0.1=main

8 | - blas=1.0=mkl

9 | - bzip2=1.0.8=h516909a_3

10 | - ca-certificates=2020.12.5=ha878542_0

11 | - cairo=1.16.0=h18b612c_1001

12 | - certifi=2020.12.5=py36h5fab9bb_0

13 | - cudatoolkit=10.1.243=h6bb024c_0

14 | - cycler=0.10.0=py_2

15 | - dataclasses=0.7=py36_0

16 | - dbus=1.13.6=he372182_0

17 | - expat=2.2.9=he1b5a44_2

18 | - ffmpeg=4.0=hcdf2ecd_0

19 | - fontconfig=2.13.1=he4413a7_1000

20 | - freeglut=3.2.1=h58526e2_0

21 | - freetype=2.10.4=h5ab3b9f_0

22 | - glib=2.66.1=h92f7085_0

23 | - graphite2=1.3.13=h58526e2_1001

24 | - gst-plugins-base=1.14.0=hbbd80ab_1

25 | - gstreamer=1.14.0=hb31296c_0

26 | - harfbuzz=1.8.8=hffaf4a1_0

27 | - hdf5=1.10.2=hc401514_3

28 | - icu=58.2=hf484d3e_1000

29 | - intel-openmp=2020.2=254

30 | - jasper=2.0.14=h07fcdf6_1

31 | - joblib=0.17.0=py_0

32 | - jpeg=9b=h024ee3a_2

33 | - kiwisolver=1.3.1=py36h51d7077_0

34 | - lcms2=2.11=h396b838_0

35 | - ld_impl_linux-64=2.33.1=h53a641e_7

36 | - libblas=3.9.0=1_h6e990d7_netlib

37 | - libcblas=3.9.0=3_h893e4fe_netlib

38 | - libedit=3.1.20191231=h14c3975_1

39 | - libffi=3.3=he6710b0_2

40 | - libgcc-ng=9.1.0=hdf63c60_0

41 | - libgfortran=3.0.0=1

42 | - libgfortran-ng=7.5.0=hae1eefd_17

43 | - libgfortran4=7.5.0=hae1eefd_17

44 | - libglu=9.0.0=he1b5a44_1001

45 | - liblapack=3.9.0=3_h893e4fe_netlib

46 | - libopencv=3.4.2=hb342d67_1

47 | - libopus=1.3.1=h7b6447c_0

48 | - libpng=1.6.37=hbc83047_0

49 | - libstdcxx-ng=9.1.0=hdf63c60_0

50 | - libtiff=4.1.0=h2733197_1

51 | - libuuid=2.32.1=h14c3975_1000

52 | - libuv=1.40.0=h7b6447c_0

53 | - libvpx=1.7.0=h439df22_0

54 | - libxcb=1.13=h14c3975_1002

55 | - libxml2=2.9.10=hb55368b_3

56 | - lz4-c=1.9.2=heb0550a_3

57 | - matplotlib=3.3.3=py36h5fab9bb_0

58 | - matplotlib-base=3.3.3=py36he12231b_0

59 | - mkl=2020.2=256

60 | - mkl-service=2.3.0=py36he8ac12f_0

61 | - mkl_fft=1.2.0=py36h23d657b_0

62 | - mkl_random=1.1.1=py36h0573a6f_0

63 | - ncurses=6.2=he6710b0_1

64 | - ninja=1.10.2=py36hff7bd54_0

65 | - numpy=1.19.2=py36h54aff64_0

66 | - numpy-base=1.19.2=py36hfa32c7d_0

67 | - olefile=0.46=py36_0

68 | - opencv=3.4.2=py36h6fd60c2_1

69 | - openssl=1.1.1h=h516909a_0

70 | - pcre=8.44=he1b5a44_0

71 | - pillow=8.0.1=py36he98fc37_0

72 | - pip=20.3=py36h06a4308_0

73 | - pixman=0.38.0=h516909a_1003

74 | - pthread-stubs=0.4=h36c2ea0_1001

75 | - py-opencv=3.4.2=py36hb342d67_1

76 | - pyparsing=2.4.7=pyh9f0ad1d_0

77 | - pyqt=5.9.2=py36hcca6a23_4

78 | - python=3.6.12=hcff3b4d_2

79 | - python-dateutil=2.8.1=py_0

80 | - python_abi=3.6=1_cp36m

81 | - pytorch=1.7.0=py3.6_cuda10.1.243_cudnn7.6.3_0

82 | - qt=5.9.7=h5867ecd_1

83 | - readline=8.0=h7b6447c_0

84 | - scikit-learn=0.23.2=py36hb6e6923_3

85 | - scipy=1.5.3=py36h976291a_0

86 | - setuptools=50.3.2=py36h06a4308_2

87 | - sip=4.19.8=py36hf484d3e_0

88 | - six=1.15.0=py36h06a4308_0

89 | - sqlite=3.33.0=h62c20be_0

90 | - threadpoolctl=2.1.0=pyh5ca1d4c_0

91 | - tk=8.6.10=hbc83047_0

92 | - torchaudio=0.7.0=py36

93 | - torchvision=0.8.1=py36_cu101

94 | - tornado=6.1=py36h1d69622_0

95 | - tqdm=4.54.1=pyhd8ed1ab_0

96 | - typing_extensions=3.7.4.3=py_0

97 | - wheel=0.36.0=pyhd3eb1b0_0

98 | - xorg-fixesproto=5.0=h14c3975_1002

99 | - xorg-inputproto=2.3.2=h14c3975_1002

100 | - xorg-kbproto=1.0.7=h14c3975_1002

101 | - xorg-libice=1.0.10=h516909a_0

102 | - xorg-libsm=1.2.3=h84519dc_1000

103 | - xorg-libx11=1.6.12=h516909a_0

104 | - xorg-libxau=1.0.9=h14c3975_0

105 | - xorg-libxdmcp=1.1.3=h516909a_0

106 | - xorg-libxext=1.3.4=h516909a_0

107 | - xorg-libxfixes=5.0.3=h516909a_1004

108 | - xorg-libxi=1.7.10=h516909a_0

109 | - xorg-libxrender=0.9.10=h516909a_1002

110 | - xorg-renderproto=0.11.1=h14c3975_1002

111 | - xorg-xextproto=7.3.0=h14c3975_1002

112 | - xorg-xproto=7.0.31=h14c3975_1007

113 | - xz=5.2.5=h7b6447c_0

114 | - zlib=1.2.11=h7b6447c_3

115 | - zstd=1.4.5=h9ceee32_0

116 | - pip:

117 | - future==0.18.2

118 | - kornia==0.4.1

119 | - protobuf==3.14.0

120 | - tensorboardx==2.1

121 |

122 |

--------------------------------------------------------------------------------

/style_soft_intro_vae/scheduler.py:

--------------------------------------------------------------------------------

1 | from bisect import bisect_right

2 | import torch

3 | import numpy as np

4 |

5 |

6 | class WarmupMultiStepLR(torch.optim.lr_scheduler._LRScheduler):

7 | def __init__(

8 | self,

9 | optimizer,

10 | milestones,

11 | gamma=0.1,

12 | warmup_factor=1.0 / 1.0,

13 | warmup_iters=1,

14 | last_epoch=-1,

15 | reference_batch_size=128,

16 | lr=[]

17 | ):

18 | if not list(milestones) == sorted(milestones):

19 | raise ValueError(

20 | "Milestones should be a list of increasing integers. Got {}".format(

21 | milestones)

22 | )

23 | self.milestones = milestones

24 | self.gamma = gamma

25 | self.warmup_factor = warmup_factor

26 | self.warmup_iters = warmup_iters

27 | self.batch_size = 1

28 | self.lod = 0

29 | self.reference_batch_size = reference_batch_size

30 |

31 | self.optimizer = optimizer

32 | self.base_lrs = []

33 | for _ in self.optimizer.param_groups:

34 | self.base_lrs.append(lr)

35 |

36 | self.last_epoch = last_epoch

37 |

38 | if not isinstance(optimizer, torch.optim.Optimizer):

39 | raise TypeError('{} is not an Optimizer'.format(

40 | type(optimizer).__name__))

41 | self.optimizer = optimizer

42 |

43 | if last_epoch == -1:

44 | for group in optimizer.param_groups:

45 | group.setdefault('initial_lr', group['lr'])

46 | last_epoch = 0

47 |

48 | self.last_epoch = last_epoch

49 |

50 | self.optimizer._step_count = 0

51 | self._step_count = 0

52 | # self.step(last_epoch)

53 | self.step()

54 |

55 | def set_batch_size(self, batch_size, lod):

56 | self.batch_size = batch_size

57 | self.lod = lod

58 | for param_group, lr in zip(self.optimizer.param_groups, self.get_lr()):

59 | param_group['lr'] = lr

60 |

61 | def get_lr(self):

62 | warmup_factor = 1

63 | if self.last_epoch < self.warmup_iters:

64 | alpha = float(self.last_epoch) / self.warmup_iters

65 | warmup_factor = self.warmup_factor * (1 - alpha) + alpha

66 | return [

67 | base_lr[self.lod]

68 | * warmup_factor

69 | * self.gamma ** bisect_right(self.milestones, self.last_epoch)

70 | # * float(self.batch_size)

71 | # / float(self.reference_batch_size)

72 | for base_lr in self.base_lrs

73 | ]

74 |

75 | def state_dict(self):

76 | return {

77 | "last_epoch": self.last_epoch

78 | }

79 |

80 | def load_state_dict(self, state_dict):

81 | self.__dict__.update(dict(last_epoch=state_dict["last_epoch"]))

82 |

83 |

84 | class ComboMultiStepLR:

85 | def __init__(

86 | self,

87 | optimizers, base_lr,

88 | **kwargs

89 | ):

90 | self.schedulers = dict()

91 | for name, opt in optimizers.items():

92 | self.schedulers[name] = WarmupMultiStepLR(opt, lr=base_lr, **kwargs)

93 | self.last_epoch = 0

94 |

95 | def set_batch_size(self, batch_size, lod):

96 | for x in self.schedulers.values():

97 | x.set_batch_size(batch_size, lod)

98 |

99 | def step(self, epoch=None):

100 | for x in self.schedulers.values():

101 | # x.step(epoch)

102 | x.step()

103 | if epoch is None:

104 | epoch = self.last_epoch + 1

105 | self.last_epoch = epoch

106 |

107 | def state_dict(self):

108 | return {key: value.state_dict() for key, value in self.schedulers.items()}

109 |

110 | def load_state_dict(self, state_dict):

111 | for k, x in self.schedulers.items():

112 | x.load_state_dict(state_dict[k])

113 |

114 | last_epochs = [x.last_epoch for k, x in self.schedulers.items()]

115 | assert np.all(np.asarray(last_epochs) == last_epochs[0])

116 | self.last_epoch = last_epochs[0]

117 |

118 | def start_epoch(self):

119 | return self.last_epoch

120 |

--------------------------------------------------------------------------------

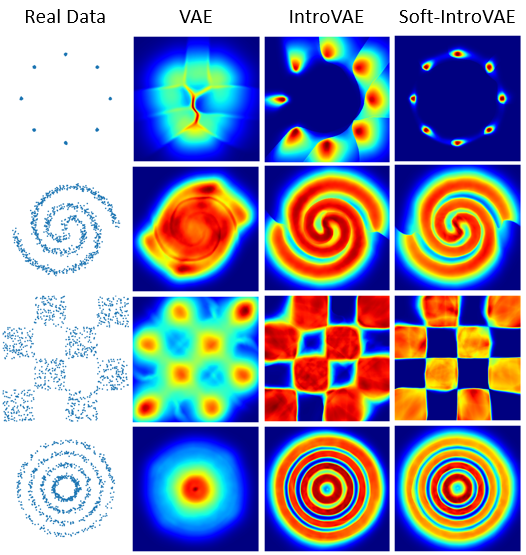

/soft_intro_vae_2d/README.md:

--------------------------------------------------------------------------------

1 | # soft-intro-vae-pytorch-2d

2 |

3 | Implementation of Soft-IntroVAE for tabular (2D) data.

4 |

5 | A step-by-step tutorial can be found in [Soft-IntroVAE Jupyter Notebook Tutorials](https://github.com/taldatech/soft-intro-vae-pytorch/tree/main/soft_intro_vae_tutorial).

6 |

7 |

8 |

9 |

10 |

11 |

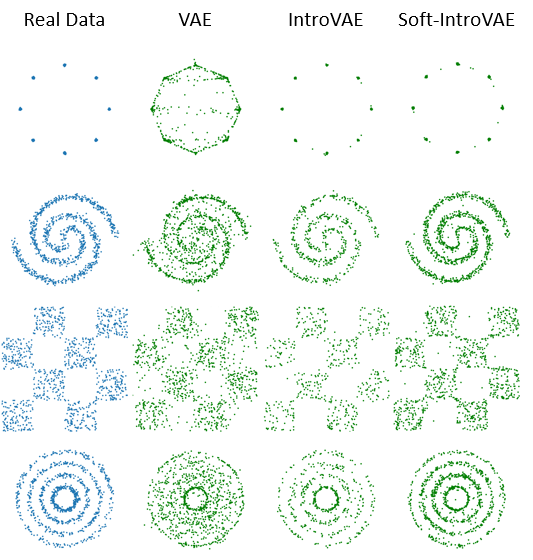

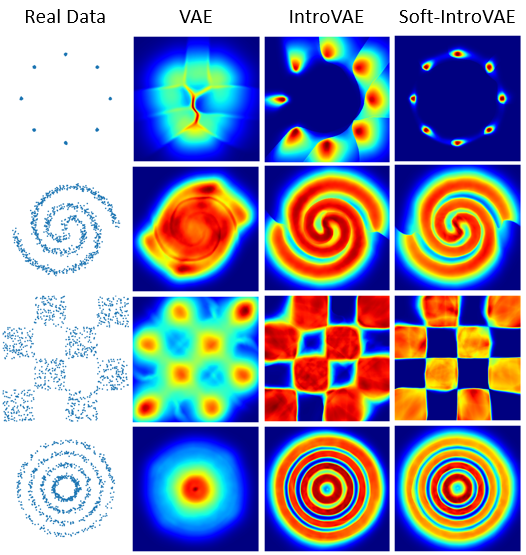

12 | - [soft-intro-vae-pytorch-2d](#soft-intro-vae-pytorch-2d)

13 | * [Training](#training)

14 | * [Recommended hyperparameters](#recommended-hyperparameters)

15 | * [What to expect](#what-to-expect)

16 | * [Files and directories in the repository](#files-and-directories-in-the-repository)

17 | * [Tutorial](#tutorial)

18 |

19 | ## Training

20 |

21 | `main.py --help`

22 |

23 |

24 | You should use the `main.py` file with the following arguments:

25 |

26 | |Argument | Description |Legal Values |

27 | |-------------------------|---------------------------------------------|-------------|

28 | |-h, --help | shows arguments description | |

29 | |-d, --dataset | dataset to train on |str: '8Gaussians', '2spirals', 'checkerboard', 'rings' |

30 | |-n, --num_iter | total number of iterations to run | int: default=30000|

31 | |-z, --z_dim| latent dimensions | int: default=2|

32 | |-s, --seed| random seed to use; -1 for a random seed | int: -1, 0, 1, 2, ...|

33 | |-v, --num_vae| number of iterations for vanilla vae training | int: default=2000|

34 | |-l, --lr| learning rate | float: default=2e-4 |

35 | |-r, --beta_rec | beta coefficient for the reconstruction loss |float: default=0.2|

36 | |-k, --beta_kl| beta coefficient for the kl divergence | float: default=0.3|

37 | |-e, --beta_neg| beta coefficient for the kl divergence in the expELBO function | float: default=0.9|

38 | |-g, --gamma_r| coefficient for the reconstruction loss for fake data in the decoder | float: default=1e-8|

39 | |-b, --batch_size| batch size | int: default=512 |

40 | |-p, --pretrained | path to pretrained model, to continue training |str: default="None" |

41 | |-c, --device| device: -1 for cpu, 0 and up for specific cuda device |int: default=-1|

42 |
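
The argument table above can be mirrored by a small `argparse` parser. This is a minimal sketch rather than the repository's actual `main.py`: the flag names and defaults are copied from the table, while the `choices` restriction, the `build_parser` helper name, and the `-1` default for `--seed` are assumptions made for illustration.

```python
import argparse


def build_parser():
    """Hypothetical parser mirroring the argument table (not the repo's main.py)."""
    p = argparse.ArgumentParser(description="train Soft-IntroVAE on 2D data (sketch)")
    p.add_argument("-d", "--dataset", type=str,
                   choices=["8Gaussians", "2spirals", "checkerboard", "rings"],
                   help="dataset to train on")
    p.add_argument("-n", "--num_iter", type=int, default=30000)
    p.add_argument("-z", "--z_dim", type=int, default=2)
    # Assumption: the table lists legal seed values but no default; -1 means "random".
    p.add_argument("-s", "--seed", type=int, default=-1)
    p.add_argument("-v", "--num_vae", type=int, default=2000)
    p.add_argument("-l", "--lr", type=float, default=2e-4)
    p.add_argument("-r", "--beta_rec", type=float, default=0.2)
    p.add_argument("-k", "--beta_kl", type=float, default=0.3)
    p.add_argument("-e", "--beta_neg", type=float, default=0.9)
    p.add_argument("-g", "--gamma_r", type=float, default=1e-8)
    p.add_argument("-b", "--batch_size", type=int, default=512)
    p.add_argument("-p", "--pretrained", type=str, default="None")
    p.add_argument("-c", "--device", type=int, default=-1)
    return p


# Parse an explicit argument list so the sketch does not depend on sys.argv.
args = build_parser().parse_args(["--dataset", "rings", "--beta_neg", "1.0"])
print(vars(args))
```

Any flag that is not passed falls back to the default listed in the table, so only the dataset and the coefficients that differ from the defaults need to be given.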

43 |

44 | Examples:

45 |

46 | `python main.py --dataset 8Gaussians --device 0 --seed 92 --lr 2e-4 --num_vae 2000 --num_iter 30000 --beta_kl 0.3 --beta_rec 0.2 --beta_neg 0.9`

47 |

48 | `python main.py --dataset rings --device -1 --seed -1 --lr 2e-4 --num_vae 2000 --num_iter 30000 --beta_kl 0.2 --beta_rec 0.2 --beta_neg 1.0`

49 |

50 | ## Recommended hyperparameters

51 |

52 | |Dataset | `beta_kl` | `beta_rec`| `beta_neg`|

53 | |------------|------|----|---|

54 | |`8Gaussians`|0.3|0.2| 0.9|

55 | |`2spirals`|0.5|0.2|1.0|

56 | |`checkerboard`|0.1|0.2|0.2|

57 | |`rings`|0.2|0.2|1.0|

58 |
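
The recommended values can also be kept in a lookup table and turned into a command line. The sketch below is illustrative only: `RECOMMENDED` and `build_command` are hypothetical names that do not exist in the repository, and the numbers are copied from the table above.

```python
# Values copied from the "Recommended hyperparameters" table.
RECOMMENDED = {
    "8Gaussians": {"beta_kl": 0.3, "beta_rec": 0.2, "beta_neg": 0.9},
    "2spirals": {"beta_kl": 0.5, "beta_rec": 0.2, "beta_neg": 1.0},
    "checkerboard": {"beta_kl": 0.1, "beta_rec": 0.2, "beta_neg": 0.2},
    "rings": {"beta_kl": 0.2, "beta_rec": 0.2, "beta_neg": 1.0},
}


def build_command(dataset, device=-1, seed=-1):
    """Hypothetical helper: build the `main.py` argument list for one dataset."""
    hp = RECOMMENDED[dataset]  # raises KeyError for an unknown dataset name
    cmd = ["python", "main.py", "--dataset", dataset,
           "--device", str(device), "--seed", str(seed)]
    for name, value in hp.items():
        cmd += [f"--{name}", str(value)]
    return cmd


# Print one ready-to-run command per dataset.
for name in RECOMMENDED:
    print(" ".join(build_command(name)))
```

The returned list can be passed directly to `subprocess.run` if the runs should be launched from a script.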

59 |

60 | ## What to expect

61 |

62 | * During the training, figures of samples and density plots are saved locally.

63 | * During training, statistics are printed (reconstruction error, KLD, expELBO).

64 | * At the end of the training, the following quantities are calculated, printed and saved to a `.txt` file: grid-normalized ELBO (gnELBO), KL, JSD

65 | * Tips:

66 | * KL of fake/rec samples should be >= KL of real data

67 | * The deterministic reconstruction error is printed in parentheses; it should be lower than the stochastic reconstruction error.

68 | * We found that for the 2D datasets, it is better to initialize the networks with vanilla VAE training (about 2000 iterations works well).

69 |
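
To make the printed quantities concrete, here is a minimal standard-library sketch of the KL term and of the difference between the deterministic and stochastic latent codes. It uses the standard closed form for the KL divergence between a diagonal Gaussian and a standard normal. The repository's own code in `train_soft_intro_vae_2d.py` may reduce over the batch or scale the terms differently, so treat the function names and example values as assumptions.

```python
import math
import random


def kl_to_standard_normal(mu, logvar):
    """KL( N(mu, diag(exp(logvar))) || N(0, I) ) for a single latent vector."""
    return -0.5 * sum(1.0 + lv - m * m - math.exp(lv) for m, lv in zip(mu, logvar))


def reparameterize(mu, logvar, rng=random):
    """Stochastic latent code: z = mu + sigma * eps, with eps ~ N(0, 1)."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mu, logvar)]


# Example encoder outputs for one sample with z_dim = 2 (made-up values).
mu, logvar = [0.5, -1.0], [0.0, -2.0]

print("KL:", kl_to_standard_normal(mu, logvar))
print("deterministic z (decode the mean):", mu)
print("stochastic z (decode a sample):   ", reparameterize(mu, logvar))
```

Decoding the mean removes the sampling noise, which is why the deterministic reconstruction error is expected to be the lower of the two.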

70 |

71 | ## Files and directories in the repository

72 |

73 | |File name | Purpose |

74 | |----------------------|------|

75 | |`main.py`| general purpose main application for training Soft-IntroVAE for 2D data|

76 | |`train_soft_intro_vae_2d.py`| main training function, datasets and architectures|

77 |

78 |

79 | ## Tutorial

80 | * [Jupyter Notebook tutorial for 2D datasets](https://github.com/taldatech/soft-intro-vae-pytorch/blob/main/soft_intro_vae_tutorial/soft_intro_vae_2d_code_tutorial.ipynb)

81 | * [Open in Colab](https://colab.research.google.com/github/taldatech/soft-intro-vae-pytorch/blob/main/soft_intro_vae_tutorial/soft_intro_vae_2d_code_tutorial.ipynb)

--------------------------------------------------------------------------------

/style_soft_intro_vae/custom_adam.py:

--------------------------------------------------------------------------------

1 | # Copyright 2019-2020 Stanislav Pidhorskyi

2 | # lr_equalization_coef was added for LREQ

3 |

4 | # Copyright (c) 2016- Facebook, Inc (Adam Paszke)

5 | # Copyright (c) 2014- Facebook, Inc (Soumith Chintala)

6 | # Copyright (c) 2011-2014 Idiap Research Institute (Ronan Collobert)

7 | # Copyright (c) 2012-2014 Deepmind Technologies (Koray Kavukcuoglu)

8 | # Copyright (c) 2011-2012 NEC Laboratories America (Koray Kavukcuoglu)

9 | # Copyright (c) 2011-2013 NYU (Clement Farabet)

10 | # Copyright (c) 2006-2010 NEC Laboratories America (Ronan Collobert, Leon Bottou, Iain Melvin, Jason Weston)

11 | # Copyright (c) 2006 Idiap Research Institute (Samy Bengio)

12 | # Copyright (c) 2001-2004 Idiap Research Institute (Ronan Collobert, Samy Bengio, Johnny Mariethoz)

13 |

14 | # https://github.com/pytorch/pytorch/blob/master/LICENSE

15 |

16 |

17 | import math

18 | import torch

19 | from torch.optim.optimizer import Optimizer

20 |

21 |

22 | class LREQAdam(Optimizer):

23 | def __init__(self, params, lr=1e-3, betas=(0.0, 0.99), eps=1e-8,

24 | weight_decay=0):

25 | beta_2 = betas[1]

26 | if not 0.0 <= lr:

27 | raise ValueError("Invalid learning rate: {}".format(lr))

28 | if not 0.0 <= eps:

29 | raise ValueError("Invalid epsilon value: {}".format(eps))

30 | if not 0.0 == betas[0]:

31 | raise ValueError("Invalid beta parameter at index 0: {}".format(betas[0]))

32 | if not 0.0 <= beta_2 < 1.0:

33 | raise ValueError("Invalid beta parameter at index 1: {}".format(beta_2))

34 | defaults = dict(lr=lr, beta_2=beta_2, eps=eps,

35 | weight_decay=weight_decay)

36 | super(LREQAdam, self).__init__(params, defaults)

37 |

38 | def __setstate__(self, state):

39 | super(LREQAdam, self).__setstate__(state)

40 |

41 | def step(self, closure=None):

42 | """Performs a single optimization step.

43 |

44 | Arguments:

45 | closure (callable, optional): A closure that reevaluates the model

46 | and returns the loss.

47 | """

48 | loss = None

49 | if closure is not None:

50 | loss = closure()

51 |

52 | for group in self.param_groups:

53 | for p in group['params']:

54 | if p.grad is None:

55 | continue

56 | grad = p.grad.data

57 | if grad.is_sparse:

58 | raise RuntimeError('Adam does not support sparse gradients, please consider SparseAdam instead')

59 |

60 | state = self.state[p]

61 |

62 | # State initialization

63 | if len(state) == 0:

64 | state['step'] = 0

65 | # Exponential moving average of gradient values

66 | # state['exp_avg'] = torch.zeros_like(p.data)

67 | # Exponential moving average of squared gradient values

68 | state['exp_avg_sq'] = torch.zeros_like(p.data)

69 |

70 | # exp_avg, exp_avg_sq = state['exp_avg'], state['exp_avg_sq']

71 | exp_avg_sq = state['exp_avg_sq']

72 | beta_2 = group['beta_2']

73 |

74 | state['step'] += 1

75 |

76 | if group['weight_decay'] != 0:

77 | grad.add_(p.data / p.coef, alpha=group['weight_decay'])

78 |

79 | # Decay the first and second moment running average coefficient

80 | # exp_avg.mul_(beta1).add_(1 - beta1, grad)

81 | # exp_avg_sq.mul_(beta_2).addcmul_(1 - beta_2, grad, grad)

82 | exp_avg_sq.mul_(beta_2).addcmul_(grad, grad, value=1 - beta_2)

83 | denom = exp_avg_sq.sqrt().add_(group['eps'])

84 |

85 | # bias_correction1 = 1 - beta1 ** state['step'] # 1

86 | bias_correction2 = 1 - beta_2 ** state['step']

87 | # step_size = group['lr'] * math.sqrt(bias_correction2) / bias_correction1

88 | step_size = group['lr'] * math.sqrt(bias_correction2)

89 |

90 | # p.data.addcdiv_(-step_size, exp_avg, denom)

91 | if hasattr(p, 'lr_equalization_coef'):

92 | step_size *= p.lr_equalization_coef

93 |

94 | # p.data.addcdiv_(-step_size, grad, denom)

95 | p.data.addcdiv_(grad, denom, value=-step_size)

96 |

97 | return loss

98 |
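The `step` method above amounts to Adam with the first-moment average switched off (beta_1 = 0): the raw gradient is divided by the root of the running second-moment average, and only the second-moment bias correction remains. Below is a minimal scalar sketch of one such update, assuming no weight decay. The function name `lreq_adam_step` and the `coef` argument (standing in for `p.lr_equalization_coef`) are illustrative and not part of the repository.

```python
import math


def lreq_adam_step(param, grad, exp_avg_sq, step, lr=1e-3, beta_2=0.999,
                   eps=1e-8, coef=1.0):
    """One beta_1 = 0 Adam update for a scalar; returns (new_param, new_exp_avg_sq)."""
    # Running average of squared gradients (second moment).
    exp_avg_sq = beta_2 * exp_avg_sq + (1.0 - beta_2) * grad * grad
    denom = math.sqrt(exp_avg_sq) + eps
    # With beta_1 = 0 the first-moment bias correction equals 1 and drops out.
    step_size = lr * math.sqrt(1.0 - beta_2 ** step) * coef
    return param - step_size * grad / denom, exp_avg_sq


# First step from a zero-initialised state; `step` is already 1 here,
# matching the listing, which increments the counter before using it.
p_new, v_new = lreq_adam_step(param=1.0, grad=0.5, exp_avg_sq=0.0, step=1)
```

On the first step the bias correction cancels the small second-moment estimate, so the parameter moves by roughly `lr` in the direction opposite to the gradient's sign.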

--------------------------------------------------------------------------------

/soft_intro_vae_3d/utils/data.py:

--------------------------------------------------------------------------------

1 | import math

2 | import numpy as np

3 | import os

4 | import pandas as pd

5 | import pickle

6 |

7 | from decimal import Decimal

8 | from itertools import accumulate, tee, chain

9 | from typing import List, Tuple, Dict, Optional, Any, Set

10 |

11 | from utils.plyfile import load_ply

12 |

13 | READERS = {

14 | '.ply': load_ply,

15 | '.np': lambda file_path: pickle.load(open(file_path, 'rb')),

16 | }

17 |

18 |

19 | def load_file(file_path):

20 | _, ext = os.path.splitext(file_path)

21 | return READERS[ext](file_path)

22 |

23 |

24 | def add_float(a, b):

25 | return float(Decimal(str(a)) + Decimal(str(b)))

26 |

27 |

28 | def ranges(values: List[float]) -> List[Tuple[float]]:

29 | lower, upper = tee(accumulate(values, add_float))

30 | lower = chain([0], lower)

31 |

32 | return zip(lower, upper)

33 |

34 |

35 | def make_slices(values: List[float], N: int):

36 | slices = [slice(int(N * s), int(N * e)) for s, e in ranges(values)]

37 | return slices

38 |

39 |

40 | def make_splits(

41 | data: pd.DataFrame,

42 | splits: Dict[str, float],

43 | seed: Optional[int] = None):

44 |

45 | # assert correctness

46 | if not math.isclose(sum(splits.values()), 1.0):

47 | values = " ".join([f"{k} : {v}" for k, v in splits.items()])

48 | raise ValueError(f"{values} should sum up to 1")

49 |

50 | # shuffle with random seed

51 | data = data.iloc[(np.random if seed is None else np.random.RandomState(seed)).permutation(len(data))]

52 | slices = make_slices(list(splits.values()), len(data))

53 |

54 | return {

55 | name: data[idxs].reset_index(drop=True) for name, idxs in zip(splits.keys(), slices)

56 | }

57 |

58 |

59 | def sample_other_than(black_list: Set[int], x: np.ndarray) -> int:

60 | res = np.random.randint(0, len(x))

61 | while res in black_list:

62 | res = np.random.randint(0, len(x))

63 |

64 | return res

65 |

66 |

67 | def clip_cloud(p: np.ndarray) -> np.ndarray:

68 | # create list of extreme points

69 | black_list = set(np.hstack([

70 | np.argmax(p, axis=0), np.argmin(p, axis=0)

71 | ]))

72 |

73 | # overwrite each extreme point with a randomly chosen non-extreme point

74 | for idx in black_list:

75 | p[idx] = p[sample_other_than(black_list, p)]

76 |

77 | return p

78 |

79 |

80 | def find_extrema(xs, n_cols: int=3, clip: bool=True) -> Dict[Any, List[float]]:

81 | from collections import defaultdict

82 |

83 | mins = defaultdict(lambda: [np.inf for _ in range(n_cols)])

84 | maxs = defaultdict(lambda: [-np.inf for _ in range(n_cols)])

85 |

86 | for x, c in xs:

87 | x = clip_cloud(x) if clip else x

88 | mins[c] = [min(old, new) for old, new in zip(mins[c], np.min(x, axis=0))]

89 | maxs[c] = [max(old, new) for old, new in zip(maxs[c], np.max(x, axis=0))]

90 |

91 | return mins, maxs

92 |

93 |

94 | def merge_dicts(

95 | dict_old: Dict[Any, List[float]],

96 | dict_new: Dict[Any, List[float]], op=min) -> Dict[Any, List[float]]:

97 | '''

98 | Merge two dicts, combining the lists of floats for shared keys element-wise with `op`

99 | '''

100 | d_out = {** dict_old}

101 | for k, v in dict_new.items():

102 | if k in dict_old:

103 | d_out[k] = [op(new, old) for old, new in zip(dict_new[k], dict_old[k])]

104 | else:

105 | d_out[k] = dict_new[k]

106 |

107 | return d_out

108 |

109 |

110 | def save_extrema(clazz, root_dir, splits=('train', 'test', 'valid')):

111 | '''

112 | Maybe this should be class dependent normalization?

113 | '''

114 | min_dict, max_dict = {}, {}

115 | for split in splits:

116 | data = clazz(root_dir=root_dir, split=split, remap=False)

117 | mins, maxs = find_extrema(data)

118 | min_dict = merge_dicts(min_dict, mins, min)

119 | max_dict = merge_dicts(max_dict, maxs, max)

120 |

121 | # vectorize values

122 | for d in (min_dict, max_dict):

123 | for k in d:

124 | d[k] = np.array(d[k])

125 |

126 | with open(os.path.join(root_dir, 'extrema.np'), 'wb') as f:

127 | pickle.dump((min_dict, max_dict), f)

128 |

129 |

130 | def remap(old_value: np.ndarray,

131 | old_min: np.ndarray, old_max: np.ndarray,

132 | new_min: float = -0.5, new_max: float = 0.5) -> np.ndarray:

133 | '''

134 | Linearly remap values from [old_min, old_max] to [new_min, new_max]

135 | '''

136 | old_range = (old_max - old_min)

137 | new_range = (new_max - new_min)

138 | new_value = (((old_value - old_min) * new_range) / old_range) + new_min

139 |

140 | return new_value

141 |
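To see how `ranges` and `make_slices` in the listing above turn split fractions into index slices, here is a self-contained sketch. It repeats the three helpers (type hints omitted) and applies them to a hypothetical 80/10/10 split of ten items. The `items` list and the split fractions are made up for illustration.

```python
from decimal import Decimal
from itertools import accumulate, chain, tee


def add_float(a, b):
    # Add via Decimal so cumulative fractions do not pick up binary float drift.
    return float(Decimal(str(a)) + Decimal(str(b)))


def ranges(values):
    # Pair each cumulative sum with the previous one: (0, c1), (c1, c2), ...
    lower, upper = tee(accumulate(values, add_float))
    lower = chain([0], lower)
    return zip(lower, upper)


def make_slices(values, n):
    # Scale the fractional boundaries to integer indices over n items.
    return [slice(int(n * s), int(n * e)) for s, e in ranges(values)]


items = list(range(10))
slices = make_slices([0.8, 0.1, 0.1], len(items))
train, valid, test = (items[s] for s in slices)
```

Because the boundaries are truncated with `int`, a split can come out empty when a fraction is small relative to the number of items.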

--------------------------------------------------------------------------------

/style_soft_intro_vae/make_figures/generate_samples.py:

--------------------------------------------------------------------------------

1 | # Copyright 2020-2021 Tal Daniel

2 | # Copyright 2019-2020 Stanislav Pidhorskyi

3 | #

4 | # Copyright (c) 2019, NVIDIA CORPORATION. All rights reserved.

5 | #

6 | # This work is licensed under the Creative Commons Attribution-NonCommercial

7 | # 4.0 International License. To view a copy of this license, visit

8 | # http://creativecommons.org/licenses/by-nc/4.0/ or send a letter to

9 | # Creative Commons, PO Box 1866, Mountain View, CA 94042, USA.

10 |

11 | from net import *

12 | from model import SoftIntroVAEModelTL

13 | from launcher import run

14 | from dataloader import *

15 | from checkpointer import Checkpointer

16 | # from dlutils.pytorch import count_parameters

17 | from defaults import get_cfg_defaults

18 | from PIL import Image

19 | import PIL

20 | from pathlib import Path

21 | from tqdm import tqdm

22 |

23 |

24 | def millify(n):

25 | millnames = ['', 'k', 'M', 'G', 'T', 'P']

26 | n = float(n)

27 | millidx = max(0, min(len(millnames) - 1, int(math.floor(0 if n == 0 else math.log10(abs(n)) / 3))))

28 |