├── README.md

├── AI_Agent_Workshop.ipynb

└── AI_Agent_Workshop_Completed.ipynb

/README.md:

--------------------------------------------------------------------------------

1 | # AI Agent Workshop

2 |

3 | Learn how to build an AI Agent with Llama 3.1 to match the reasoning capabilities of OpenAI's new o1 model!
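The agent built in the workshop splits a query into subtasks and runs each one through a plan, reason, act, evaluate cycle before extracting a final answer (see the function list in the notebook). Below is a minimal sketch of that control flow only. It assumes each step can be passed in as a plain function, simplifies the evaluator to a pass/fail boolean, and replaces every LLM call with a hypothetical `toy_*` stub so it runs without API keys. It is not the notebook's implementation.

```python
from typing import Callable, Dict, List

# Shared record of finished subtasks, passed to later steps.
Memory = List[Dict[str, str]]


def autonomous_agent(
    query: str,
    planner: Callable[[str], List[str]],
    reasoner: Callable[[str, str, Memory], str],
    actioner: Callable[[str], str],
    executor: Callable[[str, str], str],
    evaluator: Callable[[str, str], bool],
    final_answer_extractor: Callable[[str, Memory], str],
    max_retries: int = 2,
) -> str:
    memory: Memory = []
    for subtask in planner(query):
        # Retry a subtask until the evaluator accepts it or retries run out.
        for _ in range(max_retries + 1):
            reasoning = reasoner(query, subtask, memory)
            action = actioner(reasoning)
            result = executor(action, reasoning)
            if evaluator(subtask, result):
                break
        memory.append({"subtask": subtask, "action": action, "result": result})
    return final_answer_extractor(query, memory)


# Hypothetical stubs standing in for LLM calls, tuned to the strawberry prompt.
def toy_planner(query: str) -> List[str]:
    return ["Count the letter 'r' in 'strawberry'"]


def toy_reasoner(query: str, subtask: str, memory: Memory) -> str:
    return "Letter counting is exact in code, so generate code."


def toy_actioner(reasoning: str) -> str:
    return "generate_code" if "code" in reasoning else "reason"


def toy_executor(action: str, reasoning: str) -> str:
    if action == "generate_code":
        # Stands in for running model-generated code.
        return str("strawberry".count("r"))
    return reasoning


def toy_evaluator(subtask: str, result: str) -> bool:
    return result.isdigit()


def toy_final_answer_extractor(query: str, memory: Memory) -> str:
    return memory[-1]["result"]


answer = autonomous_agent(
    "How many r's are in the word 'strawberry'?",
    toy_planner, toy_reasoner, toy_actioner, toy_executor,
    toy_evaluator, toy_final_answer_extractor,
)
print(answer)  # 3
```

Passing the steps in as arguments keeps the loop itself testable. In the notebook these steps would call an LLM (for example through `get_llm_response`) instead of returning canned strings.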

4 |

5 | View the completed notebook on [Google Colab](https://colab.research.google.com/github/team-headstart/Agent-Workshop/blob/main/AI_Agent_Workshop_Completed.ipynb).
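Two prompts in the completed notebook, counting the r's in "strawberry" and comparing 9.9 with 9.11, trip up several of the models tested there. The agent's `generate_and_execute_code` step is meant to hand questions like these to Python instead. Below is a minimal sketch of the execution half only, assuming the generated code reports its answer with `print` and that running it with `exec()` in a fresh namespace is acceptable. `run_generated_code` is a hypothetical name, not a function from the notebook.

```python
import contextlib
import io


def run_generated_code(code: str) -> str:
    """Run a string of Python code and return what it printed.

    Hypothetical helper sketching the "execute" half of the notebook's
    generate_and_execute_code step. exec() runs arbitrary code, so only use
    it on code you are willing to run, e.g. in a disposable Colab runtime.
    """
    buffer = io.StringIO()
    namespace: dict = {}  # fresh globals so separate runs do not leak state
    try:
        with contextlib.redirect_stdout(buffer):
            exec(code, namespace)
    except Exception as exc:
        # Return the error as text so an evaluator step can decide to retry.
        return f"Error: {exc}"
    return buffer.getvalue().strip()


# Code a model might plausibly generate for the notebook's two trick prompts.
print(run_generated_code('print("strawberry".count("r"))'))  # 3
print(run_generated_code("print(max(9.9, 9.11))"))           # 9.9
```

Capturing printed output rather than a return value keeps the contract simple for a model to follow, and returning errors as text lets the agent's evaluator treat a crash as a failed attempt instead of stopping the whole run.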

6 |

7 |

8 |

9 |

--------------------------------------------------------------------------------

/AI_Agent_Workshop.ipynb:

--------------------------------------------------------------------------------

1 | {

2 | "nbformat": 4,

3 | "nbformat_minor": 0,

4 | "metadata": {

5 | "colab": {

6 | "provenance": []

7 | },

8 | "kernelspec": {

9 | "name": "python3",

10 | "display_name": "Python 3"

11 | },

12 | "language_info": {

13 | "name": "python"

14 | }

15 | },

16 | "cells": [

17 | {

18 | "cell_type": "markdown",

19 | "source": [

20 | "\n",

21 | "\n",

22 | "# Headstarter AI Agent Workshop\n",

23 | "\n",

24 | "#### **Skills: OpenAI, Groq, Llama, OpenRouter**\n",

25 | "\n",

26 | "## **To Get Started:**\n",

27 | "1. [Get your Groq API Key](https://console.groq.com/keys)\n",

28 | "2. [Get your OpenRouter API Key](https://openrouter.ai/settings/keys)\n",

29 | "3. [Get your OpenAI API Key](https://platform.openai.com/api-keys)\n",

30 | "\n",

31 | "\n",

32 | "### **Interesting Reads**\n",

33 | "- [Sam Altman's Blog Post: The Intelligence Age](https://ia.samaltman.com/)\n",

34 | "- [What LLMs cannot do](https://ehudreiter.com/2023/12/11/what-llms-cannot-do/)\n",

35 | "- [Chain of Thought Prompting](https://www.promptingguide.ai/techniques/cot)\n",

36 | "- [Why ChatGPT can't count the number of r's in the word strawberry](https://prompt.16x.engineer/blog/why-chatgpt-cant-count-rs-in-strawberry)\n",

37 | "\n",

38 | "\n",

39 | "## During the Workshop\n",

40 | "- [Any code shared during the workshop will be posted here](https://docs.google.com/document/d/1hPBJt_4Ihkj6v667fWxVjzwCMS4uBPdYlBLd2IqkxJ0/edit?usp=sharing)"

41 | ],

42 | "metadata": {

43 | "id": "nO8gHDbSAa4H"

44 | }

45 | },

46 | {

47 | "cell_type": "markdown",

48 | "source": [

49 | "# Install necessary libraries"

50 | ],

51 | "metadata": {

52 | "id": "Pt_SMGlvocqA"

53 | }

54 | },

55 | {

56 | "cell_type": "code",

57 | "source": [

58 | "! pip install openai groq"

59 | ],

60 | "metadata": {

61 | "id": "eKvKwO8XAnPt"

62 | },

63 | "execution_count": null,

64 | "outputs": []

65 | },

66 | {

67 | "cell_type": "markdown",

68 | "source": [

69 | "# Set up Groq, OpenRouter, & OpenAI clients"

70 | ],

71 | "metadata": {

72 | "id": "GjcgEeFaof1i"

73 | }

74 | },

75 | {

76 | "cell_type": "code",

77 | "execution_count": 4,

78 | "metadata": {

79 | "id": "pHbjDU_L__Vd"

80 | },

81 | "outputs": [],

82 | "source": [

83 | "import ast\n",

84 | "import io\n",

85 | "import json\n",

86 | "import os\n",

87 | "import sys\n",

88 | "from typing import List, Dict, Any, Callable\n",

89 | "\n",

90 | "from google.colab import userdata\n",

91 | "from groq import Groq\n",

92 | "from openai import OpenAI\n",

93 | "\n",

94 | "groq_api_key = userdata.get(\"GROQ_API_KEY\")\n",

95 | "os.environ['GROQ_API_KEY'] = groq_api_key\n",

96 | "\n",

97 | "openrouter_api_key = userdata.get(\"OPENROUTER_API_KEY\")\n",

98 | "os.environ['OPENROUTER_API_KEY'] = openrouter_api_key\n",

99 | "\n",

100 | "openai_api_key = userdata.get(\"OPENAI_API_KEY\")\n",

101 | "os.environ['OPENAI_API_KEY'] = openai_api_key\n",

102 | "\n",

103 | "groq_client = Groq(api_key=os.getenv('GROQ_API_KEY'))\n",

104 | "\n",

105 | "openrouter_client = OpenAI(\n",

106 | " base_url=\"https://openrouter.ai/api/v1\",\n",

107 | " api_key=os.getenv(\"OPENROUTER_API_KEY\")\n",

108 | ")\n",

109 | "\n",

110 | "openai_client = OpenAI(\n",

111 | " base_url=\"https://api.openai.com/v1\",\n",

112 | " api_key=os.getenv(\"OPENAI_API_KEY\")\n",

113 | ")"

114 | ]

115 | },

116 | {

117 | "cell_type": "markdown",

118 | "source": [

119 | "### Define functions to easily query and compare responses from OpenAI, Groq, and OpenRouter"

120 | ],

121 | "metadata": {

122 | "id": "AZF6uLpooj-F"

123 | }

124 | },

125 | {

126 | "cell_type": "code",

127 | "source": [

128 | "def get_llm_response(client, prompt, openai_model=\"o1-preview\", json_mode=False):\n",

129 | "\n",

130 | "    if client == \"openai\":\n",

131 | "\n",

132 | "        kwargs = {\n",

133 | "            \"model\": openai_model,\n",

134 | "            \"messages\": [{\"role\": \"user\", \"content\": prompt}]\n",

135 | "        }\n",

136 | "\n",

137 | "        if json_mode:\n",

138 | "            kwargs[\"response_format\"] = {\"type\": \"json_object\"}\n",

139 | "\n",

140 | "        response = openai_client.chat.completions.create(**kwargs)\n",

141 | "\n",

142 | "    elif client == \"groq\":\n",

143 | "\n",

144 | "        response = None  # set by the first Groq model that succeeds\n",

145 | "        models = [\"llama-3.1-8b-instant\", \"llama-3.1-70b-versatile\", \"llama3-70b-8192\", \"llama3-8b-8192\", \"gemma2-9b-it\"]\n",

146 | "\n",

147 | "        for model in models:\n",

148 | "\n",

149 | "            try:\n",

150 | "                kwargs = {\n",

151 | "                    \"model\": model,\n",

152 | "                    \"messages\": [{\"role\": \"user\", \"content\": prompt}]\n",

153 | "                }\n",

154 | "                if json_mode:\n",

155 | "                    kwargs[\"response_format\"] = {\"type\": \"json_object\"}\n",

156 | "\n",

157 | "                response = groq_client.chat.completions.create(**kwargs)\n",

158 | "\n",

159 | "                break\n",

160 | "\n",

161 | "            except Exception as e:\n",

162 | "                print(f\"Error: {e}\")\n",

163 | "                continue\n",

164 | "\n",

165 | "        if response is None:\n",

166 | "            # Every Groq model failed: fall back to Llama 3.1 8B on OpenRouter\n",

167 | "\n",

168 | "            kwargs = {\n",

169 | "                \"model\": \"meta-llama/llama-3.1-8b-instruct:free\",\n",

170 | "                \"messages\": [{\"role\": \"user\", \"content\": prompt}]\n",

171 | "            }\n",

172 | "\n",

173 | "            if json_mode:\n",

174 | "                kwargs[\"response_format\"] = {\"type\": \"json_object\"}\n",

175 | "\n",

176 | "            response = openrouter_client.chat.completions.create(**kwargs)\n",

177 | "\n",

178 | "    else:\n",

179 | "        raise ValueError(f\"Invalid client: {client}\")\n",

180 | "\n",

181 | "    return response.choices[0].message.content\n",

182 | "\n",

183 | "\n",

184 | "def evaluate_responses(prompt, reasoning_prompt=None, openai_model=\"o1-preview\"):\n",

185 | "\n",

186 | "    if reasoning_prompt:\n",

187 | "        prompt = f\"{prompt}\\n\\n{reasoning_prompt}\"\n",

188 | "\n",

189 | "    openai_response = get_llm_response(\"openai\", prompt, openai_model)\n",

190 | "    groq_response = get_llm_response(\"groq\", prompt)\n",

191 | "\n",

192 | "    print(f\"OpenAI Response: {openai_response}\")\n",

193 | "    print(f\"\\n\\nGroq Response: {groq_response}\")"

194 | ],

195 | "metadata": {

196 | "id": "BNYyDCNuAhbj"

197 | },

198 | "execution_count": 5,

199 | "outputs": []

200 | },

201 | {

202 | "cell_type": "code",

203 | "source": [],

204 | "metadata": {

205 | "id": "j9V03RXNBd6j"

206 | },

207 | "execution_count": null,

208 | "outputs": []

209 | },

210 | {

211 | "cell_type": "code",

212 | "source": [],

213 | "metadata": {

214 | "id": "wYaiRrHwbwMT"

215 | },

216 | "execution_count": null,

217 | "outputs": []

218 | },

219 | {

220 | "cell_type": "code",

221 | "source": [],

222 | "metadata": {

223 | "id": "Mq1YkfFeCRl9"

224 | },

225 | "execution_count": null,

226 | "outputs": []

227 | },

228 | {

229 | "cell_type": "code",

230 | "source": [],

231 | "metadata": {

232 | "id": "zgyXx8M9C0RT"

233 | },

234 | "execution_count": null,

235 | "outputs": []

236 | },

237 | {

238 | "cell_type": "markdown",

239 | "source": [

240 | "# Agent Architecture"

241 | ],

242 | "metadata": {

243 | "id": "0w8IlZq49PAz"

244 | }

245 | },

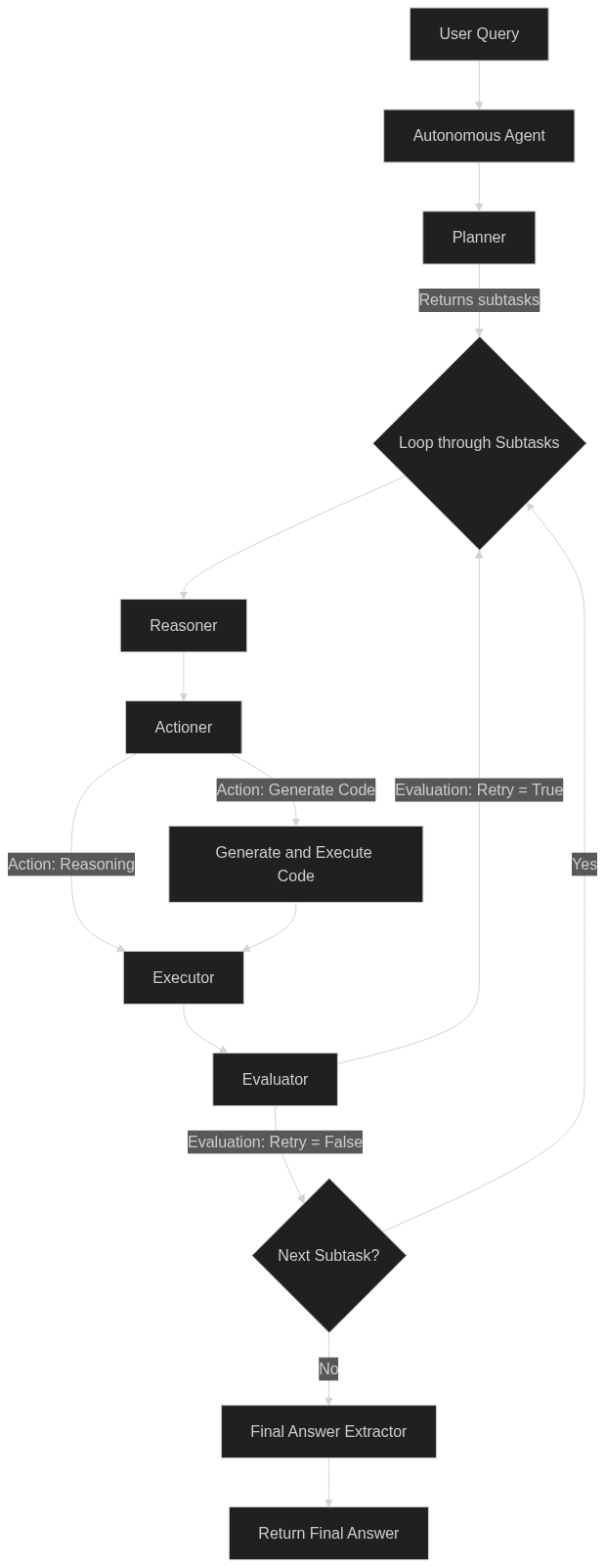

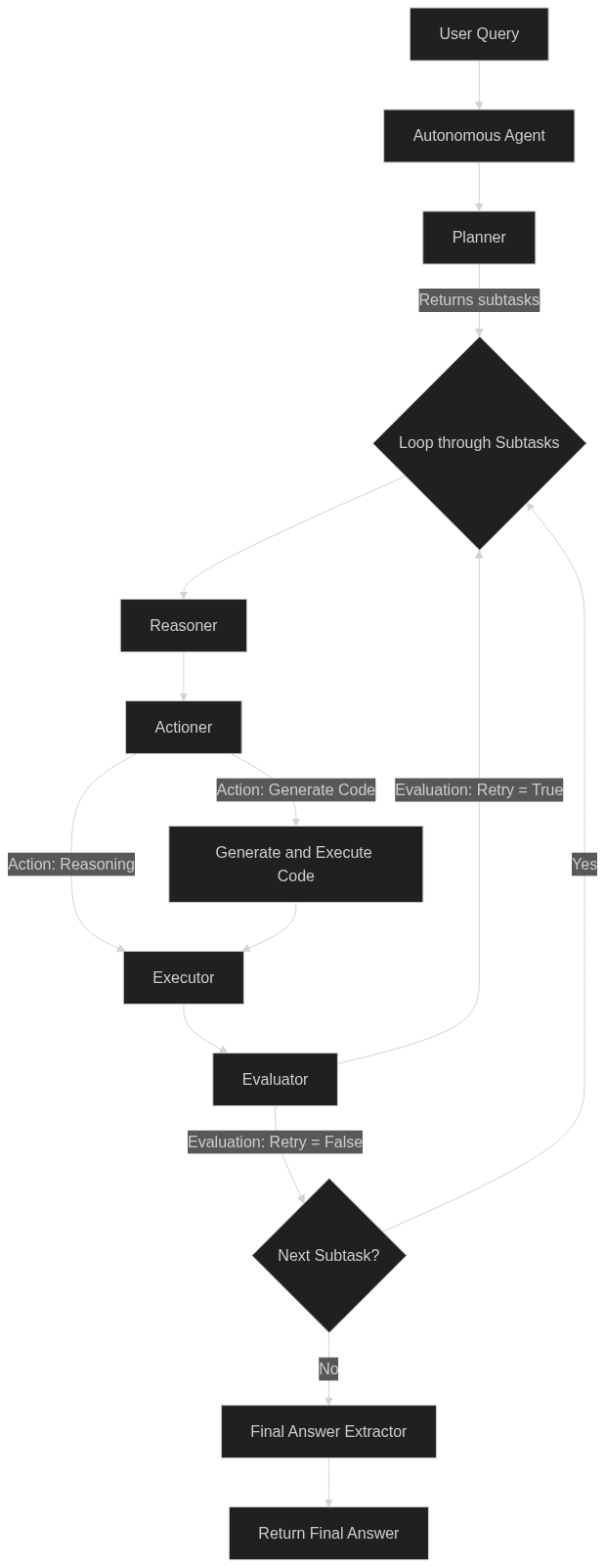

246 | {

247 | "cell_type": "markdown",

248 | "source": [

249 | "[View the agent architecture diagram in Mermaid Live](https://mermaid.live/edit#pako:eNqFUslugzAQ_ZWRz8kPILUVCZClVdQmqdTK5ODCFFDAjry0iSD_XmOTJodK5WS_Zd7M4JZkIkcSkEKyQwnbKOVgvzf6qlDCi0F52sF4fA-hJ0IaGi24aIRREBbItacn9LlmnKPced2kR7s1aiO5AmU-NFN71cG0fRLiALqUwhQlbAbi7F1TVyuia2RKXItFDo5pmOnqBo5dhgcDmKFlmEaY2oE6SOgvwHgO8REzM5B_2n1kxYsOZtSrxSUocfkzf5m5y5zGX6w27Cqau3IDOpTU8gR3kLBa2Y4W7QqP-jLywzDywtnesd_NLbISHSxpUnFWQ8jVt_0b8VFLll0Tl66TR-q3DLfaf3vaSoMukYxIg7JhVW4fQdvbUqJLbDAlgT3mTO5TkvKz1TG7ki2JZyTQ1j0i5pDb9UYVs2-nIcFnP-YFjfPKNjqA5x9CM8YW)\n",

250 | "\n",

251 | "\n",

252 | ""

253 | ],

254 | "metadata": {

255 | "id": "RxpUp2KED9hh"

256 | }

257 | },

258 | {

259 | "cell_type": "markdown",

260 | "source": [

261 | "### To create our AI Agent, we will define the following functions:\n",

262 | "\n",

263 | "1. **Planner:** This function takes a user's query and breaks it down into smaller, manageable subtasks. It returns these subtasks as a list, where each one is either a reasoning task or a code generation task.\n",

264 | "\n",

265 | "2. **Reasoner:** This function provides reasoning on how to complete a specific subtask, considering both the overall query and the results of any previous subtasks. It returns a short explanation of how to proceed with the current subtask.\n",

266 | "\n",

267 | "3. **Actioner:** Based on the reasoning provided for a subtask, this function decides whether the next step requires generating code or more reasoning. It then returns the chosen action and any necessary details to perform it.\n",

268 | "\n",

269 | "4. **Evaluator:** This function checks whether the result of the current subtask is reasonable and aligns with the overall goal. It returns an evaluation of the result and indicates whether the subtask needs to be retried.\n",

270 | "\n",

271 | "5. **generate_and_execute_code:** This function generates and executes Python code based on a given prompt and memory of previous steps. It returns both the generated code and its execution result.\n",

272 | "\n",

273 | "6. **executor:** Depending on the action decided by the \"actioner,\" this function either generates and executes code or returns reasoning. It handles the execution of tasks based on the action type.\n",

274 | "\n",

275 | "7. **final_answer_extractor:** After all subtasks are completed, this function gathers the results from previous steps to extract and provide the final answer to the user's query.\n",

276 | "\n",

277 | "8. **autonomous_agent:** This is the main function that coordinates the process of answering the user's query. It manages the entire sequence of planning, reasoning, action, evaluation, and final answer extraction to produce a complete response."

278 | ],

279 | "metadata": {

280 | "id": "goGb1KUVmVu1"

281 | }

282 | },

283 | {

284 | "cell_type": "code",

285 | "source": [],

286 | "metadata": {

287 | "id": "BpZ1hRaiD-hS"

288 | },

289 | "execution_count": 5,

290 | "outputs": []

291 | },

292 | {

293 | "cell_type": "code",

294 | "source": [],

295 | "metadata": {

296 | "id": "MUMjfWwcK7ig"

297 | },

298 | "execution_count": 5,

299 | "outputs": []

300 | },

301 | {

302 | "cell_type": "code",

303 | "source": [],

304 | "metadata": {

305 | "id": "BWjY2S2mxh3w"

306 | },

307 | "execution_count": 5,

308 | "outputs": []

309 | },

310 | {

311 | "cell_type": "code",

312 | "source": [],

313 | "metadata": {

314 | "id": "1BFyiCpRxh6I"

315 | },

316 | "execution_count": 5,

317 | "outputs": []

318 | },

319 | {

320 | "cell_type": "markdown",

321 | "source": [

322 | "# OpenAI o1-preview model getting trivial questions wrong\n",

323 | "\n",

324 | "- [Link to Reddit post](https://www.reddit.com/r/ChatGPT/comments/1ff9w7y/new_o1_still_fails_miserably_at_trivial_questions/)\n",

325 | "- Links to ChatGPT threads: [(1)](https://chatgpt.com/share/66f21757-db2c-8012-8b0a-11224aed0c29), [(2)](https://chatgpt.com/share/66e3c1e5-ae00-8007-8820-fee9eb61eae5)\n",

326 | "- [Improving reasoning in LLMs through thoughtful prompting](https://www.reddit.com/r/singularity/comments/1fdhs2m/did_i_just_fix_the_data_overfitting_problem_in/?share_id=6DsDLJUu1qEx_bsqFDC8a&utm_content=2&utm_medium=ios_app&utm_name=ioscss&utm_source=share&utm_term=1)\n"

327 | ],

328 | "metadata": {

329 | "id": "MrSeaoPqq1qY"

330 | }

331 | },

332 | {

333 | "cell_type": "code",

334 | "source": [],

335 | "metadata": {

336 | "id": "rM4bcny1JliP"

337 | },

338 | "execution_count": null,

339 | "outputs": []

340 | },

341 | {

342 | "cell_type": "code",

343 | "source": [],

344 | "metadata": {

345 | "id": "vXAPpgkpK7lG"

346 | },

347 | "execution_count": null,

348 | "outputs": []

349 | },

350 | {

351 | "cell_type": "code",

352 | "source": [],

353 | "metadata": {

354 | "id": "RjKgUDihI4lA"

355 | },

356 | "execution_count": null,

357 | "outputs": []

358 | },

359 | {

360 | "cell_type": "code",

361 | "source": [],

362 | "metadata": {

363 | "id": "5JNPSaGWKF5s"

364 | },

365 | "execution_count": null,

366 | "outputs": []

367 | },

368 | {

369 | "cell_type": "code",

370 | "source": [],

371 | "metadata": {

372 | "id": "2ciZ_VUNNJRh"

373 | },

374 | "execution_count": null,

375 | "outputs": []

376 | },

377 | {

378 | "cell_type": "code",

379 | "source": [],

380 | "metadata": {

381 | "id": "5Vf9avjo8-fK"

382 | },

383 | "execution_count": null,

384 | "outputs": []

385 | }

386 | ]

387 | }

--------------------------------------------------------------------------------

/AI_Agent_Workshop_Completed.ipynb:

--------------------------------------------------------------------------------

1 | {

2 | "nbformat": 4,

3 | "nbformat_minor": 0,

4 | "metadata": {

5 | "colab": {

6 | "provenance": []

7 | },

8 | "kernelspec": {

9 | "name": "python3",

10 | "display_name": "Python 3"

11 | },

12 | "language_info": {

13 | "name": "python"

14 | }

15 | },

16 | "cells": [

17 | {

18 | "cell_type": "markdown",

19 | "source": [

20 | "\n",

21 | "\n",

22 | "# Headstarter AI Agent Workshop\n",

23 | "\n",

24 | "#### **Skills: OpenAI, Groq, Llama, OpenRouter**\n",

25 | "\n",

26 | "## **To Get Started:**\n",

27 | "1. [Get your Groq API Key](https://console.groq.com/keys)\n",

28 | "2. [Get your OpenRouter API Key](https://openrouter.ai/settings/keys)\n",

29 | "3. [Get your OpenAI API Key](https://platform.openai.com/api-keys)\n",

30 | "\n",

31 | "\n",

32 | "### **Interesting Reads**\n",

33 | "- [Sam Altman's Blog Post: The Intelligence Age](https://ia.samaltman.com/)\n",

34 | "- [What LLMs cannot do](https://ehudreiter.com/2023/12/11/what-llms-cannot-do/)\n",

35 | "- [Chain of Thought Prompting](https://www.promptingguide.ai/techniques/cot)\n",

36 | "- [Why ChatGPT can't count the number of r's in the word strawberry](https://prompt.16x.engineer/blog/why-chatgpt-cant-count-rs-in-strawberry)\n",

37 | "\n",

38 | "\n",

39 | "## During the Workshop\n",

40 | "- [Any code shared during the workshop will be posted here](https://docs.google.com/document/d/1hPBJt_4Ihkj6v667fWxVjzwCMS4uBPdYlBLd2IqkxJ0/edit?usp=sharing)"

41 | ],

42 | "metadata": {

43 | "id": "nO8gHDbSAa4H"

44 | }

45 | },

46 | {

47 | "cell_type": "markdown",

48 | "source": [

49 | "# Install necessary libraries"

50 | ],

51 | "metadata": {

52 | "id": "Pt_SMGlvocqA"

53 | }

54 | },

55 | {

56 | "cell_type": "code",

57 | "source": [

58 | "! pip install openai groq"

59 | ],

60 | "metadata": {

61 | "colab": {

62 | "base_uri": "https://localhost:8080/"

63 | },

64 | "id": "eKvKwO8XAnPt",

65 | "outputId": "a32ad6b4-aa70-4fc3-bdcb-3f71437a7792"

66 | },

67 | "execution_count": 2,

68 | "outputs": [

69 | {

70 | "output_type": "stream",

71 | "name": "stdout",

72 | "text": [

73 | "Collecting openai\n",

74 | " Downloading openai-1.49.0-py3-none-any.whl.metadata (24 kB)\n",

75 | "Collecting groq\n",

76 | " Downloading groq-0.11.0-py3-none-any.whl.metadata (13 kB)\n",

77 | "Requirement already satisfied: anyio<5,>=3.5.0 in /usr/local/lib/python3.10/dist-packages (from openai) (3.7.1)\n",

78 | "Requirement already satisfied: distro<2,>=1.7.0 in /usr/lib/python3/dist-packages (from openai) (1.7.0)\n",

79 | "Collecting httpx<1,>=0.23.0 (from openai)\n",

80 | " Downloading httpx-0.27.2-py3-none-any.whl.metadata (7.1 kB)\n",

81 | "Collecting jiter<1,>=0.4.0 (from openai)\n",

82 | " Downloading jiter-0.5.0-cp310-cp310-manylinux_2_17_x86_64.manylinux2014_x86_64.whl.metadata (3.6 kB)\n",

83 | "Requirement already satisfied: pydantic<3,>=1.9.0 in /usr/local/lib/python3.10/dist-packages (from openai) (2.9.2)\n",

84 | "Requirement already satisfied: sniffio in /usr/local/lib/python3.10/dist-packages (from openai) (1.3.1)\n",

85 | "Requirement already satisfied: tqdm>4 in /usr/local/lib/python3.10/dist-packages (from openai) (4.66.5)\n",

86 | "Requirement already satisfied: typing-extensions<5,>=4.11 in /usr/local/lib/python3.10/dist-packages (from openai) (4.12.2)\n",

87 | "Requirement already satisfied: idna>=2.8 in /usr/local/lib/python3.10/dist-packages (from anyio<5,>=3.5.0->openai) (3.10)\n",

88 | "Requirement already satisfied: exceptiongroup in /usr/local/lib/python3.10/dist-packages (from anyio<5,>=3.5.0->openai) (1.2.2)\n",

89 | "Requirement already satisfied: certifi in /usr/local/lib/python3.10/dist-packages (from httpx<1,>=0.23.0->openai) (2024.8.30)\n",

90 | "Collecting httpcore==1.* (from httpx<1,>=0.23.0->openai)\n",

91 | " Downloading httpcore-1.0.5-py3-none-any.whl.metadata (20 kB)\n",

92 | "Collecting h11<0.15,>=0.13 (from httpcore==1.*->httpx<1,>=0.23.0->openai)\n",

93 | " Downloading h11-0.14.0-py3-none-any.whl.metadata (8.2 kB)\n",

94 | "Requirement already satisfied: annotated-types>=0.6.0 in /usr/local/lib/python3.10/dist-packages (from pydantic<3,>=1.9.0->openai) (0.7.0)\n",

95 | "Requirement already satisfied: pydantic-core==2.23.4 in /usr/local/lib/python3.10/dist-packages (from pydantic<3,>=1.9.0->openai) (2.23.4)\n",

96 | "Downloading openai-1.49.0-py3-none-any.whl (378 kB)\n",

97 | "\u001b[2K \u001b[90m━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━\u001b[0m \u001b[32m378.1/378.1 kB\u001b[0m \u001b[31m20.0 MB/s\u001b[0m eta \u001b[36m0:00:00\u001b[0m\n",

98 | "\u001b[?25hDownloading groq-0.11.0-py3-none-any.whl (106 kB)\n",

99 | "\u001b[2K \u001b[90m━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━\u001b[0m \u001b[32m106.5/106.5 kB\u001b[0m \u001b[31m8.4 MB/s\u001b[0m eta \u001b[36m0:00:00\u001b[0m\n",

100 | "\u001b[?25hDownloading httpx-0.27.2-py3-none-any.whl (76 kB)\n",

101 | "\u001b[2K \u001b[90m━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━\u001b[0m \u001b[32m76.4/76.4 kB\u001b[0m \u001b[31m6.4 MB/s\u001b[0m eta \u001b[36m0:00:00\u001b[0m\n",

102 | "\u001b[?25hDownloading httpcore-1.0.5-py3-none-any.whl (77 kB)\n",

103 | "\u001b[2K \u001b[90m━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━\u001b[0m \u001b[32m77.9/77.9 kB\u001b[0m \u001b[31m6.3 MB/s\u001b[0m eta \u001b[36m0:00:00\u001b[0m\n",

104 | "\u001b[?25hDownloading jiter-0.5.0-cp310-cp310-manylinux_2_17_x86_64.manylinux2014_x86_64.whl (318 kB)\n",

105 | "\u001b[2K \u001b[90m━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━\u001b[0m \u001b[32m318.9/318.9 kB\u001b[0m \u001b[31m20.7 MB/s\u001b[0m eta \u001b[36m0:00:00\u001b[0m\n",

106 | "\u001b[?25hDownloading h11-0.14.0-py3-none-any.whl (58 kB)\n",

107 | "\u001b[2K \u001b[90m━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━\u001b[0m \u001b[32m58.3/58.3 kB\u001b[0m \u001b[31m3.7 MB/s\u001b[0m eta \u001b[36m0:00:00\u001b[0m\n",

108 | "\u001b[?25hInstalling collected packages: jiter, h11, httpcore, httpx, openai, groq\n",

109 | "Successfully installed groq-0.11.0 h11-0.14.0 httpcore-1.0.5 httpx-0.27.2 jiter-0.5.0 openai-1.49.0\n"

110 | ]

111 | }

112 | ]

113 | },

114 | {

115 | "cell_type": "markdown",

116 | "source": [

117 | "# Set up Groq, OpenRouter, & OpenAI clients"

118 | ],

119 | "metadata": {

120 | "id": "GjcgEeFaof1i"

121 | }

122 | },

123 | {

124 | "cell_type": "code",

125 | "execution_count": 1,

126 | "metadata": {

127 | "id": "pHbjDU_L__Vd"

128 | },

129 | "outputs": [],

130 | "source": [

131 | "import ast\n",

132 | "import io\n",

133 | "import json\n",

134 | "import os\n",

135 | "import sys\n",

136 | "from typing import List, Dict, Any, Callable\n",

137 | "\n",

138 | "from google.colab import userdata\n",

139 | "from groq import Groq\n",

140 | "from openai import OpenAI\n",

141 | "\n",

142 | "groq_api_key = userdata.get(\"GROQ_API_KEY\")\n",

143 | "os.environ['GROQ_API_KEY'] = groq_api_key\n",

144 | "\n",

145 | "openrouter_api_key = userdata.get(\"OPENROUTER_API_KEY\")\n",

146 | "os.environ['OPENROUTER_API_KEY'] = openrouter_api_key\n",

147 | "\n",

148 | "openai_api_key = userdata.get(\"OPENAI_API_KEY\")\n",

149 | "os.environ['OPENAI_API_KEY'] = openai_api_key\n",

150 | "\n",

151 | "groq_client = Groq(api_key=os.getenv('GROQ_API_KEY'))\n",

152 | "\n",

153 | "openrouter_client = OpenAI(\n",

154 | " base_url=\"https://openrouter.ai/api/v1\",\n",

155 | " api_key=os.getenv(\"OPENROUTER_API_KEY\")\n",

156 | ")\n",

157 | "\n",

158 | "openai_client = OpenAI(\n",

159 | " base_url=\"https://api.openai.com/v1\",\n",

160 | " api_key=os.getenv(\"OPENAI_API_KEY\")\n",

161 | ")"

162 | ]

163 | },

164 | {

165 | "cell_type": "markdown",

166 | "source": [

167 | "### Define functions to easily query and compare responses from OpenAI, Groq, and OpenRouter"

168 | ],

169 | "metadata": {

170 | "id": "AZF6uLpooj-F"

171 | }

172 | },

173 | {

174 | "cell_type": "code",

175 | "source": [

176 | "def get_llm_response(client, prompt, openai_model=\"o1-preview\", json_mode=False):\n",

177 | "\n",

178 | "    if client == \"openai\":\n",

179 | "\n",

180 | "        kwargs = {\n",

181 | "            \"model\": openai_model,\n",

182 | "            \"messages\": [{\"role\": \"user\", \"content\": prompt}]\n",

183 | "        }\n",

184 | "\n",

185 | "        if json_mode:\n",

186 | "            kwargs[\"response_format\"] = {\"type\": \"json_object\"}\n",

187 | "\n",

188 | "        response = openai_client.chat.completions.create(**kwargs)\n",

189 | "\n",

190 | "    elif client == \"groq\":\n",

191 | "\n",

192 | "        response = None  # set by the first Groq model that succeeds\n",

193 | "        models = [\"llama-3.1-8b-instant\", \"llama-3.1-70b-versatile\", \"llama3-70b-8192\", \"llama3-8b-8192\", \"gemma2-9b-it\"]\n",

194 | "\n",

195 | "        for model in models:\n",

196 | "\n",

197 | "            try:\n",

198 | "                kwargs = {\n",

199 | "                    \"model\": model,\n",

200 | "                    \"messages\": [{\"role\": \"user\", \"content\": prompt}]\n",

201 | "                }\n",

202 | "                if json_mode:\n",

203 | "                    kwargs[\"response_format\"] = {\"type\": \"json_object\"}\n",

204 | "\n",

205 | "                response = groq_client.chat.completions.create(**kwargs)\n",

206 | "\n",

207 | "                break\n",

208 | "\n",

209 | "            except Exception as e:\n",

210 | "                print(f\"Error: {e}\")\n",

211 | "                continue\n",

212 | "\n",

213 | "        if response is None:\n",

214 | "            # Every Groq model failed: fall back to Llama 3.1 8B on OpenRouter\n",

215 | "\n",

216 | "            kwargs = {\n",

217 | "                \"model\": \"meta-llama/llama-3.1-8b-instruct:free\",\n",

218 | "                \"messages\": [{\"role\": \"user\", \"content\": prompt}]\n",

219 | "            }\n",

220 | "\n",

221 | "            if json_mode:\n",

222 | "                kwargs[\"response_format\"] = {\"type\": \"json_object\"}\n",

223 | "\n",

224 | "            response = openrouter_client.chat.completions.create(**kwargs)\n",

225 | "\n",

226 | "    else:\n",

227 | "        raise ValueError(f\"Invalid client: {client}\")\n",

228 | "\n",

229 | "    return response.choices[0].message.content\n",

230 | "\n",

231 | "\n",

232 | "def evaluate_responses(prompt, reasoning_prompt=None, openai_model=\"o1-preview\"):\n",

233 | "\n",

234 | "    if reasoning_prompt:\n",

235 | "        prompt = f\"{prompt}\\n\\n{reasoning_prompt}\"\n",

236 | "\n",

237 | "    openai_response = get_llm_response(\"openai\", prompt, openai_model)\n",

238 | "    groq_response = get_llm_response(\"groq\", prompt)\n",

239 | "\n",

240 | "    print(f\"OpenAI Response: {openai_response}\")\n",

241 | "    print(f\"\\n\\nGroq Response: {groq_response}\")"

242 | ],

243 | "metadata": {

244 | "id": "BNYyDCNuAhbj"

245 | },

246 | "execution_count": 2,

247 | "outputs": []

248 | },

249 | {

250 | "cell_type": "code",

251 | "source": [

252 | "prompt1 = \"How many r's are in the word 'strawberry'?\"\n",

253 | "evaluate_responses(prompt1)"

254 | ],

255 | "metadata": {

256 | "colab": {

257 | "base_uri": "https://localhost:8080/"

258 | },

259 | "id": "j9V03RXNBd6j",

260 | "outputId": "0aa64363-0e4d-4b22-94be-aecdf0e384e6"

261 | },

262 | "execution_count": 3,

263 | "outputs": [

264 | {

265 | "output_type": "stream",

266 | "name": "stdout",

267 | "text": [

268 | "OpenAI Response: There are three \"r\"s in the word \"strawberry\".\n",

269 | "\n",

270 | "\n",

271 | "Groq Response: In the word 'strawberry', there are 2 'R's.\n"

272 | ]

273 | }

274 | ]

275 | },

276 | {

277 | "cell_type": "code",

278 | "source": [

279 | "evaluate_responses(prompt1, openai_model=\"gpt-3.5-turbo\")"

280 | ],

281 | "metadata": {

282 | "colab": {

283 | "base_uri": "https://localhost:8080/"

284 | },

285 | "id": "qM8-nKzGpaKj",

286 | "outputId": "b0050740-501d-45b1-b422-c6c5b73c94b9"

287 | },

288 | "execution_count": 4,

289 | "outputs": [

290 | {

291 | "output_type": "stream",

292 | "name": "stdout",

293 | "text": [

294 | "OpenAI Response: There are two r's in the word 'strawberry'.\n",

295 | "\n",

296 | "\n",

297 | "Groq Response: There are 2 'r's in the word 'strawberry'.\n"

298 | ]

299 | }

300 | ]

301 | },

302 | {

303 | "cell_type": "code",

304 | "source": [

305 | "evaluate_responses(prompt1, openai_model=\"gpt-4o-mini\")"

306 | ],

307 | "metadata": {

308 | "colab": {

309 | "base_uri": "https://localhost:8080/"

310 | },

311 | "id": "-9-Rtjyhpmn3",

312 | "outputId": "380be035-3a65-4812-e191-b26a4d19b8eb"

313 | },

314 | "execution_count": 5,

315 | "outputs": [

316 | {

317 | "output_type": "stream",

318 | "name": "stdout",

319 | "text": [

320 | "OpenAI Response: The word \"strawberry\" contains 2 letters 'r'.\n",

321 | "\n",

322 | "\n",

323 | "Groq Response: There are 2 r's in the word 'strawberry'.\n"

324 | ]

325 | }

326 | ]

327 | },

328 | {

329 | "cell_type": "code",

330 | "source": [

331 | "prompt2 = \"9.9 or 9.11, which number is bigger?\"\n",

332 | "evaluate_responses(prompt2)"

333 | ],

334 | "metadata": {

335 | "colab": {

336 | "base_uri": "https://localhost:8080/"

337 | },

338 | "id": "wYaiRrHwbwMT",

339 | "outputId": "fc9376f3-9255-43d7-8bca-1ee25a46e09b"

340 | },

341 | "execution_count": 11,

342 | "outputs": [

343 | {

344 | "output_type": "stream",

345 | "name": "stdout",

346 | "text": [

347 | "OpenAI Response: To determine which number is larger between 9.9 and 9.11, we'll compare them digit by digit.\n",

348 | "\n",

349 | "First, both numbers have the same whole number part: **9**.\n",

350 | "\n",

351 | "Next, we'll compare the decimal parts:\n",

352 | "\n",

353 | "- **9.9** can be thought of as **9.90** for easier comparison.\n",

354 | "- **9.11** remains as **9.11**.\n",

355 | "\n",

356 | "Now, compare the first digit after the decimal:\n",

357 | "\n",

358 | "- **9** in **9.90**\n",

359 | "- **1** in **9.11**\n",

360 | "\n",

361 | "Since **9 > 1**, it's clear that **9.9** is larger than **9.11**.\n",

362 | "\n",

363 | "**Answer:** 9.9 is larger than 9.11; thus, 9.9 is the bigger number.\n",

364 | "\n",

365 | "\n",

366 | "Groq Response: 9.11 is bigger than 9.9. It's quite straightforward in a simple decimal comparison.\n"

367 | ]

368 | }

369 | ]

370 | },

371 | {

372 | "cell_type": "code",

373 | "source": [

374 | "reasoning_prompt = \"Let's first understand the problem and devise a plan to solve it. Then, let's carry out the plan and solve the problem step by step.\"\n",

375 | "evaluate_responses(prompt1, reasoning_prompt)"

376 | ],

377 | "metadata": {

378 | "colab": {

379 | "base_uri": "https://localhost:8080/"

380 | },

381 | "id": "Mq1YkfFeCRl9",

382 | "outputId": "a00b0a3a-2d7f-4f6b-aaaf-cd3f19b666af"

383 | },

384 | "execution_count": null,

385 | "outputs": [

386 | {

387 | "output_type": "stream",

388 | "name": "stdout",

389 | "text": [

390 | "OpenAI Response: Certainly! Let's solve the problem step by step.\n",

391 | "\n",

392 | "---\n",

393 | "\n",

394 | "**1. Understand the Problem**\n",

395 | "\n",

396 | "We are asked to find out how many times the letter **'r'** appears in the word **'strawberry'**.\n",

397 | "\n",

398 | "**2. Devise a Plan**\n",

399 | "\n",

400 | "- Write down the word clearly.\n",

401 | "- Examine each letter in the word one by one.\n",

402 | "- Count and keep track of every time the letter 'r' appears.\n",

403 | "\n",

404 | "**3. Carry Out the Plan**\n",

405 | "\n",

406 | "Let's write down the word and analyze each letter:\n",

407 | "\n",

408 | "**Word:** **S T R A W B E R R Y**\n",

409 | "\n",

410 | "Now, let's go through each letter:\n",

411 | "\n",

412 | "1. **S** - Not 'r' (Count = 0)\n",

413 | "2. **T** - Not 'r' (Count = 0)\n",

414 | "3. **R** - This is 'r' (Count = 1)\n",

415 | "4. **A** - Not 'r' (Count = 1)\n",

416 | "5. **W** - Not 'r' (Count = 1)\n",

417 | "6. **B** - Not 'r' (Count = 1)\n",

418 | "7. **E** - Not 'r' (Count = 1)\n",

419 | "8. **R** - This is 'r' (Count = 2)\n",

420 | "9. **R** - This is 'r' (Count = 3)\n",

421 | "10. **Y** - Not 'r' (Count = 3)\n",

422 | "\n",

423 | "**4. Answer**\n",

424 | "\n",

425 | "After examining each letter, we have found that the letter **'r'** appears **3 times** in the word **'strawberry'**.\n",

426 | "\n",

427 | "---\n",

428 | "\n",

429 | "**Conclusion:** There are **3** 'r's in the word **'strawberry'**.\n",

430 | "\n",

431 | "\n",

432 | "Groq Response: To solve this problem, we need to understand that we are looking for the count of the letter 'r' in the word 'strawberry'.\n",

433 | "\n",

434 | "Step 1: Identify the word and the target letter.\n",

435 | "- The word is 'strawberry'.\n",

436 | "- The target letter is 'r'.\n",

437 | "\n",

438 | "Step 2: Write down the word and visually scan for 'r's.\n",

439 | "strawberr(y)\n",

440 | "\n",

441 | "Step 3: Start visually scanning from the beginning and count the 'r's.\n",

442 | "In the word 'strawberry', the first 'r' appears in the word after the \"b\" which is after the letters 'berr'. The second 'r' also appears after that 'berr' so we notice two.\n"

443 | ]

444 | }

445 | ]

446 | },

447 | {

448 | "cell_type": "code",

449 | "source": [

450 | "reasoning_prompt = \"\"\"Let's first understand the problem and devise a plan to solve it. Verify the plan step by step. Then, let's carry out the plan and solve the problem step by step. Lastly, let's verify the answer step by step by working backwards. Determine if the answer is correct or not, and if not, reflect on why it is incorrect. Then, think step by step about how to arrive at the correct answer.\"\"\"\n",

451 | "evaluate_responses(prompt1, reasoning_prompt)"

452 | ],

453 | "metadata": {

454 | "colab": {

455 | "base_uri": "https://localhost:8080/"

456 | },

457 | "id": "zgyXx8M9C0RT",

458 | "outputId": "56053925-c8fb-4fe9-ea4d-c74deab56cbb"

459 | },

460 | "execution_count": null,

461 | "outputs": [

462 | {

463 | "output_type": "stream",

464 | "name": "stdout",

465 | "text": [

466 | "OpenAI Response: **Understanding the Problem:**\n",

467 | "\n",

468 | "We need to determine **how many times the letter 'r' appears** in the word **'strawberry'**.\n",

469 | "\n",

470 | "---\n",

471 | "\n",

472 | "**Devise a Plan to Solve It:**\n",

473 | "\n",

474 | "1. **Write down** the word 'strawberry'.\n",

475 | "2. **List each letter** in the word sequentially.\n",

476 | "3. **Identify** every occurrence of the letter 'r'.\n",

477 | "4. **Count** the total number of times 'r' appears.\n",

478 | "\n",

479 | "---\n",

480 | "\n",

481 | "**Verify the Plan Step by Step:**\n",

482 | "\n",

483 | "- **Step 1 and 2** will help us see all letters clearly.\n",

484 | "- **Step 3** ensures we find all 'r's without missing any.\n",

485 | "- **Step 4** gives us the total count required.\n",

486 | "\n",

487 | "---\n",

488 | "\n",

489 | "**Carry Out the Plan and Solve the Problem Step by Step:**\n",

490 | "\n",

491 | "1. **Write down the word:**\n",

492 | "\n",

493 | " ```\n",

494 | " S T R A W B E R R Y\n",

495 | " ```\n",

496 | "\n",

497 | "2. **List each letter with its position:**\n",

498 | "\n",

499 | " - Position 1: **S**\n",

500 | " - Position 2: **T**\n",

501 | " - Position 3: **R**\n",

502 | " - Position 4: **A**\n",

503 | " - Position 5: **W**\n",

504 | " - Position 6: **B**\n",

505 | " - Position 7: **E**\n",

506 | " - Position 8: **R**\n",

507 | " - Position 9: **R**\n",

508 | " - Position 10: **Y**\n",

509 | "\n",

510 | "3. **Identify every 'r':**\n",

511 | "\n",

512 | " - **R** at Position 3\n",

513 | " - **R** at Position 8\n",

514 | " - **R** at Position 9\n",

515 | "\n",

516 | "4. **Count the total 'r's:**\n",

517 | "\n",

518 | " - There are **3** occurrences of the letter 'r'.\n",

519 | "\n",

520 | "---\n",

521 | "\n",

522 | "**Verify the Answer Step by Step by Working Backwards:**\n",

523 | "\n",

524 | "- Starting with the total count **3**, we confirm each occurrence:\n",

525 | " - First **'r'** at Position 3\n",

526 | " - Second **'r'** at Position 8\n",

527 | " - Third **'r'** at Position 9\n",

528 | "- We ensure no other 'r's are present in the word.\n",

529 | "\n",

530 | "---\n",

531 | "\n",

532 | "**Determine if the Answer is Correct or Not:**\n",

533 | "\n",

534 | "- **The answer is correct.**\n",

535 | "- There are **3** 'r's in 'strawberry'.\n",

536 | "\n",

537 | "---\n",

538 | "\n",

539 | "**Reflection:**\n",

540 | "\n",

541 | "If we initially thought there were fewer 'r's, we might have:\n",

542 | "\n",

543 | "- **Overlooked** the consecutive 'r's at Positions 8 and 9.\n",

544 | "- **Miscounted** by not listing all letters systematically.\n",

545 | "\n",

546 | "By carefully listing and counting, we've arrived at the correct answer.\n",

547 | "\n",

548 | "---\n",

549 | "\n",

550 | "**Final Answer:**\n",

551 | "\n",

552 | "There are **3** 'r's in the word **'strawberry'**.\n",

553 | "\n",

554 | "\n",

555 | "Groq Response: **Step 1: Understand the problem**\n",

556 | "\n",

557 | "The problem asks us to count the number of 'r's in the word \"strawberry\".\n",

558 | "\n",

559 | "**Step 2: Devise a plan to solve it**\n",

560 | "\n",

561 | "Our plan is to:\n",

562 | "1. Write down the word \"strawberry\" to clearly see its letters.\n",

563 | "2. Locate each occurrence of the letter 'r' in the word.\n",

564 | "3. Count the number of 'r's found.\n",

565 | "\n",

566 | "**Step 3: Carry out the plan**\n",

567 | "\n",

568 | "The word \"strawberry\" is written as \"S-T-R-A-W-B-E-R-R-Y\".\n",

569 | "\n",

570 | "Now, we locate each occurrence of the letter 'r': we see 'r' in positions 5, 6, and 7.\n",

571 | "\n",

572 | "**Step 4: Count the 'r's**\n",

573 | "\n",

574 | "There are 3 occurrences of the letter 'r', so we can conclude that there are 3 'r's in the word \"strawberry\".\n",

575 | "\n",

576 | "**Step 5: Verify the answer step by step**\n",

577 | "\n",

578 | "To verify our answer, let's work backwards:\n",

579 | "\n",

580 | "- The last step was counting the 'r's and finding that there are 3.\n",

581 | "- Before that, we were observing the positions of 'r's in the word, which showed 3 'r's.\n",

582 | "- Before observing the positions, we had written down the word, listing all its letters.\n",

583 | "- Writing down the word \"strawberry\" correctly would lead us to correctly observe and count its 'r's.\n",

584 | "\n",

585 | "Our answer is verified to be correct.\n",

586 | "\n",

587 | "**Conclusion**\n",

588 | "\n",

589 | "There are 3 'r's in the word \"strawberry\", and our answer is accurate following our plan and verification steps.\n"

590 | ]

591 | }

592 | ]

593 | },

594 | {

595 | "cell_type": "markdown",

596 | "source": [

597 | "# Agent Architecture\n",

598 | "\n",

599 | "[View the agent architecture diagram on Mermaid Live](https://mermaid.live/edit#pako:eNqFUslugzAQ_ZWRz8kPILUVCZClVdQmqdTK5ODCFFDAjry0iSD_XmOTJodK5WS_Zd7M4JZkIkcSkEKyQwnbKOVgvzf6qlDCi0F52sF4fA-hJ0IaGi24aIRREBbItacn9LlmnKPced2kR7s1aiO5AmU-NFN71cG0fRLiALqUwhQlbAbi7F1TVyuia2RKXItFDo5pmOnqBo5dhgcDmKFlmEaY2oE6SOgvwHgO8REzM5B_2n1kxYsOZtSrxSUocfkzf5m5y5zGX6w27Cqau3IDOpTU8gR3kLBa2Y4W7QqP-jLywzDywtnesd_NLbISHSxpUnFWQ8jVt_0b8VFLll0Tl66TR-q3DLfaf3vaSoMukYxIg7JhVW4fQdvbUqJLbDAlgT3mTO5TkvKz1TG7ks2JZyTQ1j0i5pDb9UYVs2-nIcFnP-YFjfPKNjqA5x9CM8YW)\n",

600 | "\n",

601 | "\n",

602 | ""

603 | ],

604 | "metadata": {

605 | "id": "RxpUp2KED9hh"

606 | }

607 | },

608 | {

609 | "cell_type": "markdown",

610 | "source": [

611 | "### To create our AI Agent, we will define the following functions:\n",

612 | "\n",

613 | "1. **Planner:** This function takes a user's query and breaks it down into smaller, manageable subtasks. It returns these subtasks as a list, where each one is either a reasoning task or a code generation task.\n",

614 | "\n",

615 | "2. **Reasoner:** This function provides reasoning on how to complete a specific subtask, considering both the overall query and the results of any previous subtasks. It returns a short explanation of how to proceed with the current subtask.\n",

616 | "\n",

617 | "3. **Actioner:** Based on the reasoning provided for a subtask, this function decides whether the subtask requires generating code or reasoning. It returns the chosen action along with its parameters (a prompt describing what to do).\n",

618 | "\n",

619 | "4. **Evaluator:** This function checks whether the result of the current subtask is reasonable and aligns with the overall goal. It returns an evaluation of the result and a flag indicating whether the subtask should be retried.\n",

620 | "\n",

621 | "5. **generate_and_execute_code:** This function generates Python code from a given prompt, the user's query, and the memory of previous steps, then executes it. It returns both the generated code and the output that the code printed.\n",

622 | "\n",

623 | "6. **executor:** Depending on the action chosen by the \"actioner\", this function either generates and executes code or, for a reasoning action, returns the reasoning prompt unchanged.\n",

624 | "\n",

625 | "7. **final_answer_extractor:** After all subtasks are completed, this function uses the results stored in memory to extract the final answer to the user's query.\n",

626 | "\n",

627 | "8. **autonomous_agent:** This is the main function that coordinates the process of answering the user's query. It manages the entire sequence of planning, reasoning, action, evaluation, and final answer extraction to produce a complete response."

628 | ],

629 | "metadata": {

630 | "id": "goGb1KUVmVu1"

631 | }

632 | },

633 | {

634 | "cell_type": "code",

635 | "source": [

636 | "def planner(user_query: str) -> List[str]:\n",

637 | " prompt = f\"\"\"Given the user's query: '{user_query}', break down the query into as few subtasks as possible in order to answer the question.\n",

638 | " Each subtask is either a calculation or reasoning step. Never duplicate a task.\n",

639 | "\n",

640 | " Here are the only 2 actions that can be taken for each subtask:\n",

641 | " - generate_code: This action involves generating Python code and executing it in order to make a calculation or verification.\n",

642 | " - reasoning: This action involves providing reasoning for what to do to complete the subtask.\n",

643 | "\n",

644 | " Each subtask should begin with either \"reasoning\" or \"generate_code\".\n",

645 | "\n",

646 | " Keep in mind the overall goal of answering the user's query throughout the planning process.\n",

647 | "\n",

648 | " Return the result as a JSON object with a 'subtasks' key whose value is a list of strings, where each string is a subtask.\n",

649 | "\n",

650 | " Here is an example JSON response:\n",

651 | "\n",

652 | " {{\n",

653 | " \"subtasks\": [\"Subtask 1\", \"Subtask 2\", \"Subtask 3\"]\n",

654 | " }}\n",

655 | " \"\"\"\n",

656 | " response = json.loads(get_llm_response(\"groq\", prompt, json_mode=True))\n",

657 | " print(response)\n",

658 | " return response[\"subtasks\"]\n",

659 | "\n",

660 | "def reasoner(user_query: str, subtasks: List[str], current_subtask: str, memory: List[Dict[str, Any]]) -> str:\n",

661 | " prompt = f\"\"\"Given the user's query (long-term goal): '{user_query}'\n",

662 | "\n",

663 | " Here are all the subtasks to complete in order to answer the user's query:\n",

664 | " \n",

665 | " {json.dumps(subtasks)}\n",

666 | " \n",

667 | "\n",

668 | " Here is the short-term memory (result of previous subtasks):\n",

669 | " \n",

670 | " {json.dumps(memory)}\n",

671 | " \n",

672 | "\n",

673 | " The current subtask to complete is:\n",

674 | " \n",

675 | " {current_subtask}\n",

676 | " \n",

677 | "\n",

678 | " - Provide concise reasoning on how to execute the current subtask, considering previous results.\n",

679 | " - Prioritize explicit details over assumed patterns\n",

680 | " - Avoid unnecessary complications in problem-solving\n",

681 | "\n",

682 | " Return the result as a JSON object with 'reasoning' as a key.\n",

683 | "\n",

684 | " Example JSON response:\n",

685 | " {{\n",

686 | " \"reasoning\": \"2 sentences max on how to complete the current subtask.\"\n",

687 | " }}\n",

688 | " \"\"\"\n",

689 | "\n",

690 | " response = json.loads(get_llm_response(\"groq\", prompt, json_mode=True))\n",

691 | " return response[\"reasoning\"]\n",

692 | "\n",

693 | "def actioner(user_query: str, subtasks: List[str], current_subtask: str, reasoning: str, memory: List[Dict[str, Any]]) -> Dict[str, Any]:\n",

694 | " prompt = f\"\"\"Given the user's query (long-term goal): '{user_query}'\n",

695 | "\n",

696 | " The subtasks are:\n",

697 | " \n",

698 | " {json.dumps(subtasks)}\n",

699 | " \n",

700 | "\n",

701 | " The current subtask is:\n",

702 | " \n",

703 | " {current_subtask}\n",

704 | " \n",

705 | "\n",

706 | " The reasoning for this subtask is:\n",

707 | " \n",

708 | " {reasoning}\n",

709 | " \n",

710 | "\n",

711 | " Here is the short-term memory (result of previous subtasks):\n",

712 | " \n",

713 | " {json.dumps(memory)}\n",

714 | " \n",

715 | "\n",

716 | " Determine the most appropriate action to take:\n",

717 | " - If the task requires a calculation or verification through code, use the 'generate_code' action.\n",

718 | " - If the task requires reasoning without code or calculations, use the 'reasoning' action.\n",

719 | "\n",

720 | " Consider the overall goal and previous results when determining the action.\n",

721 | "\n",

722 | " Return the result as a JSON object with 'action' and 'parameters' keys. The 'parameters' key should always be a dictionary with 'prompt' as a key.\n",

723 | "\n",

724 | " Example JSON responses:\n",

725 | "\n",

726 | " {{\n",

727 | " \"action\": \"generate_code\",\n",

728 | " \"parameters\": {{\"prompt\": \"Write a function to calculate the area of a circle.\"}}\n",

729 | " }}\n",

730 | "\n",

731 | " {{\n",

732 | " \"action\": \"reasoning\",\n",

733 | " \"parameters\": {{\"prompt\": \"Explain how to complete the subtask.\"}}\n",

734 | " }}\n",

735 | " \"\"\"\n",

736 | "\n",

737 | " response = json.loads(get_llm_response(\"groq\", prompt, json_mode=True))\n",

738 | " return response\n",

739 | "\n",

740 | "def evaluator(user_query: str, subtasks: List[str], current_subtask: str, action_info: Dict[str, Any], execution_result: Dict[str, Any], memory: List[Dict[str, Any]]) -> Dict[str, Any]:\n",

741 | " prompt = f\"\"\"Given the user's query (long-term goal): '{user_query}'\n",

742 | "\n",

743 | " The subtasks to complete to answer the user's query are:\n",

744 | " \n",

745 | " {json.dumps(subtasks)}\n",

746 | " \n",

747 | "\n",

748 | " The current subtask to complete is:\n",

749 | " \n",

750 | " {current_subtask}\n",

751 | " \n",

752 | "\n",

753 | " The action chosen for the current subtask is:\n",

754 | " \n",

755 | " {action_info}\n",

756 | " \n",

757 | "\n",

758 | " The execution result of the current subtask is:\n",

759 | " \n",

760 | " {execution_result}\n",

761 | " \n",

762 | "\n",

763 | " Here is the short-term memory (result of previous subtasks):\n",

764 | " \n",

765 | " {json.dumps(memory)}\n",

766 | " \n",

767 | "\n",

768 | " Evaluate whether the result is a reasonable answer for the current subtask and makes sense in the context of the overall query.\n",

769 | "\n",

770 | " Return a JSON object with 'evaluation' (string) and 'retry' (boolean) keys.\n",

771 | "\n",

772 | " Example JSON response:\n",

773 | " {{\n",

774 | " \"evaluation\": \"The result is a reasonable answer for the current subtask.\",\n",

775 | " \"retry\": false\n",

776 | " }}\n",

777 | " \"\"\"\n",

778 | "\n",

779 | " response = json.loads(get_llm_response(\"groq\", prompt, json_mode=True))\n",

780 | " return response\n",

781 | "\n",

782 | "def final_answer_extractor(user_query: str, subtasks: List[str], memory: List[Dict[str, Any]]) -> str:\n",

783 | " prompt = f\"\"\"Given the user's query (long-term goal): '{user_query}'\n",

784 | "\n",

785 | " The subtasks completed to answer the user's query are:\n",

786 | " \n",

787 | " {json.dumps(subtasks)}\n",

788 | " \n",

789 | "\n",

790 | " The memory of the thought process (short-term memory) is:\n",

791 | " \n",

792 | " {json.dumps(memory)}\n",

793 | " \n",

794 | "\n",

795 | " From the memory, extract the final answer that directly addresses the user's query.\n",

796 | " Provide only the essential information without unnecessary explanations.\n",

797 | "\n",

798 | " Return a JSON object with 'finalAnswer' as a key.\n",

799 | "\n",

800 | " Here is an example JSON response:\n",

801 | " {{\n",

802 | " \"finalAnswer\": \"The final answer to the user's query, addressing all aspects of the question, based on the memory provided\"\n",

803 | " }}\n",

804 | " \"\"\"\n",

805 | "\n",

806 | " response = json.loads(get_llm_response(\"groq\", prompt, json_mode=True))\n",

807 | " return response[\"finalAnswer\"]\n",

808 | "\n",

809 | "\n",

810 | "def generate_and_execute_code(prompt: str, user_query: str, memory: List[Dict[str, Any]]) -> Dict[str, Any]:\n",

811 | " code_generation_prompt = f\"\"\"\n",

812 | "\n",

813 | " Generate Python code to implement the following task: '{prompt}'\n",

814 | "\n",

815 | " Here is the overall goal of answering the user's query: '{user_query}'\n",

816 | "\n",

817 | " Keep in mind the results of the previous subtasks, and use them to complete the current subtask.\n",

818 | " \n",

819 | " {json.dumps(memory)}\n",

820 | " \n",

821 | "\n",

822 | "\n",

823 | " Here are the guidelines for generating the code:\n",

824 | " - Return only the Python code, without any explanations or markdown formatting.\n",

825 | " - The code must always print its result, since only printed output is captured.\n",

826 | " - Don't include any backticks or code blocks in your response. Do not include ```python or ``` in your response, just give me the code.\n",

827 | " - Do not ever use the input() function in your code, use defined values instead.\n",

828 | " - Do not ever use NLP techniques in your code, such as importing nltk, spacy, or any other NLP library.\n",

829 | " - Don't ever define a function in your code, just generate the code to execute the subtask.\n",

830 | " - Don't ever provide the execution result in your response, just give me the code.\n",

831 | " - If your code needs to import any libraries, do it within the code itself.\n",

832 | " - The code should be self-contained and ready to execute on its own.\n",

833 | " - Prioritize explicit details over assumed patterns\n",

834 | " - Avoid unnecessary complications in problem-solving\n",

835 | " \"\"\"\n",

836 | "\n",

837 | " generated_code = get_llm_response(\"groq\", code_generation_prompt)\n",

838 | "\n",

839 | "\n",

840 | " print(f\"\\n\\nGenerated Code: start|{generated_code}|END\\n\\n\")\n",

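841 | "    old_stdout = sys.stdout\n",

842 | "    sys.stdout = buffer = io.StringIO()\n",

843 | "    try:\n",

844 | "        exec(generated_code, {})  # fresh namespace, so top-level names are visible everywhere in the generated code\n",

845 | "    except Exception as e:\n",

846 | "        print(f\"Error: {type(e).__name__}: {e}\")  # report the failure in the captured output\n",

847 | "    finally:\n",

848 | "        sys.stdout = old_stdout  # always restore stdout, even if the generated code raised\n",

849 | "    output = buffer.getvalue()\n",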

850 | " print(f\"\\n\\n***** Execution Result: |start|{output.strip()}|end| *****\\n\\n\")\n",

851 | "\n",

852 | " return {\n",

853 | " \"generated_code\": generated_code,\n",

854 | " \"execution_result\": output.strip()\n",

855 | " }\n",

856 | "\n",

857 | "def executor(action: str, parameters: Dict[str, Any], user_query: str, memory: List[Dict[str, Any]]) -> Any:\n",

858 | " if action == \"generate_code\":\n",

859 | " print(f\"Generating code for: {parameters['prompt']}\")\n",

860 | " return generate_and_execute_code(parameters[\"prompt\"], user_query, memory)\n",

861 | " elif action == \"reasoning\":\n",

862 | " return parameters[\"prompt\"]\n",

863 | " else:\n",

864 | " return f\"Action '{action}' not implemented\"\n",

865 | "\n",

866 | "\n",

867 | "\n",

868 | "def autonomous_agent(user_query: str) -> List[Dict[str, Any]]:\n",

869 | " memory = []\n",

870 | " subtasks = planner(user_query)\n",

871 | "\n",

872 | " print(\"User Query:\", user_query)\n",

873 | " print(f\"Subtasks: {subtasks}\")\n",

874 | "\n",

875 | " for subtask in subtasks:\n",

876 | " max_retries = 1  # with 1, the evaluator's retry flag never triggers a second attempt\n",

877 | " for attempt in range(max_retries):\n",

878 | "\n",

879 | " reasoning = reasoner(user_query, subtasks, subtask, memory)\n",

880 | " action_info = actioner(user_query, subtasks, subtask, reasoning, memory)\n",

881 | "\n",

882 | " print(f\"\\n\\n ****** Action Info: {action_info} ****** \\n\\n\")\n",

883 | "\n",

884 | " execution_result = executor(action_info[\"action\"], action_info[\"parameters\"], user_query, memory)\n",

885 | "\n",

886 | " print(f\"\\n\\n ****** Execution Result: {execution_result} ****** \\n\\n\")\n",

887 | " evaluation = evaluator(user_query, subtasks, subtask, action_info, execution_result, memory)\n",

888 | "\n",

889 | " step = {\n",

890 | " \"subtask\": subtask,\n",

891 | " \"reasoning\": reasoning,\n",

892 | " \"action\": action_info,\n",

893 | " \"evaluation\": evaluation\n",

894 | " }\n",

895 | " memory.append(step)\n",

896 | "\n",

897 | " print(f\"\\n\\nSTEP: {step}\\n\\n\")\n",

898 | "\n",

899 | " if not evaluation[\"retry\"]:\n",

900 | " break\n",

901 | "\n",

902 | " if attempt == max_retries - 1:\n",

903 | " print(f\"Max retries reached for subtask: {subtask}\")\n",

904 | "\n",

905 | " final_answer = final_answer_extractor(user_query, subtasks, memory)\n",

906 | " return final_answer\n"

907 | ],

908 | "metadata": {

909 | "id": "BpZ1hRaiD-hS"

910 | },

911 | "execution_count": 13,

912 | "outputs": []

913 | },

914 | {

915 | "cell_type": "code",

916 | "source": [

917 | "query = \"How many r's are in strawberry?\"\n",

918 | "result = autonomous_agent(query)\n",

919 | "print(\"FINAL ANSWER: \", result)"

920 | ],

921 | "metadata": {

922 | "id": "MUMjfWwcK7ig"

923 | },

924 | "execution_count": 15,

925 | "outputs": []

926 | },

927 | {

928 | "cell_type": "code",

929 | "source": [

930 | "result"

931 | ],

932 | "metadata": {

933 | "id": "BWjY2S2mxh3w",

934 | "colab": {

935 | "base_uri": "https://localhost:8080/",

936 | "height": 36

937 | },

938 | "outputId": "b020154f-e0ed-4336-fbc5-496dea0e672e"

939 | },

940 | "execution_count": 16,

941 | "outputs": [

942 | {

943 | "output_type": "execute_result",

944 | "data": {

945 | "text/plain": [

946 | "\"There are 3 r's in 'strawberry'.\""

947 | ],

948 | "application/vnd.google.colaboratory.intrinsic+json": {

949 | "type": "string"

950 | }

951 | },

952 | "metadata": {},

953 | "execution_count": 16

954 | }

955 | ]

956 | },

957 | {

958 | "cell_type": "code",

959 | "source": [],

960 | "metadata": {

961 | "id": "1BFyiCpRxh6I"

962 | },

963 | "execution_count": null,

964 | "outputs": []

965 | },

966 | {

967 | "cell_type": "markdown",

968 | "source": [

969 | "# OpenAI o1-preview model getting trivial questions wrong\n",

970 | "\n",

971 | "- [Link to Reddit post](https://www.reddit.com/r/ChatGPT/comments/1ff9w7y/new_o1_still_fails_miserably_at_trivial_questions/)\n",

972 | "- Links to ChatGPT threads: [(1)](https://chatgpt.com/share/66f21757-db2c-8012-8b0a-11224aed0c29), [(2)](https://chatgpt.com/share/66e3c1e5-ae00-8007-8820-fee9eb61eae5)\n",

973 | "- [Improving reasoning in LLMs through thoughtful prompting](https://www.reddit.com/r/singularity/comments/1fdhs2m/did_i_just_fix_the_data_overfitting_problem_in/?share_id=6DsDLJUu1qEx_bsqFDC8a&utm_content=2&utm_medium=ios_app&utm_name=ioscss&utm_source=share&utm_term=1)\n"

974 | ],

975 | "metadata": {

976 | "id": "MrSeaoPqq1qY"

977 | }

978 | },

979 | {

980 | "cell_type": "code",

981 | "source": [

982 | "query = \"The surgeon, who is the boy's father, says, 'I can't operate on this boy, he's my son!' Who is the surgeon to the boy?\"\n",

983 | "result = get_llm_response(\"openai\", query)\n",

984 | "print(result)"

985 | ],

986 | "metadata": {

987 | "colab": {

988 | "base_uri": "https://localhost:8080/"

989 | },

990 | "id": "rM4bcny1JliP",

991 | "outputId": "7a5eb793-e9ef-4eaf-8139-122983e04145"

992 | },

993 | "execution_count": null,

994 | "outputs": [

995 | {

996 | "output_type": "stream",

997 | "name": "stdout",

998 | "text": [

999 | "The surgeon is the boy's **mother**.\n",

1000 | "\n",

1001 | "This classic riddle challenges the assumption that a surgeon must be male. Here's the breakdown:\n",

1002 | "\n",

1003 | "- **The boy's father** is one person—the man who raised him.\n",

1004 | "- When the surgeon says, \"I can't operate on this boy; he's my son,\" it implies a parental relationship.\n",

1005 | "- Since we've already accounted for the father, the other parent is the **mother**.\n",

1006 | "- Therefore, the surgeon is the boy's mother.\n",

1007 | "\n",

1008 | "The riddle highlights how stereotypes can lead us to overlook obvious answers.\n"

1009 | ]

1010 | }

1011 | ]

1012 | },

1013 | {

1014 | "cell_type": "code",

1015 | "source": [

1016 | "query = \"The surgeon, who is the boy's father, says, 'I can't operate on this boy, he's my son!' Who is the surgeon to the boy?\"\n",

1017 | "result = autonomous_agent(query)\n",

1018 | "print(\"FINAL ANSWER: \", result)"

1019 | ],

1020 | "metadata": {

1021 | "colab": {

1022 | "base_uri": "https://localhost:8080/"

1023 | },

1024 | "id": "vXAPpgkpK7lG",

1025 | "outputId": "e8b18ff3-41f3-4554-a397-c1957689ecae"

1026 | },

1027 | "execution_count": null,

1028 | "outputs": [

1029 | {

1030 | "output_type": "stream",

1031 | "name": "stdout",

1032 | "text": [

1033 | "{'subtasks': ['reasoning: Determine the role of the surgeon in relation to the boy', 'generate_code: Identify the relation between the surgeon and the boy using family relationships, assuming the surgeon is the father and the boy is his son', 'reasoning: Based on the established relation, conclude who the surgeon is to the boy']}\n",

1034 | "User Query: The surgeon, who is the boy's father, says, 'I can't operate on this boy, he's my son!' Who is the surgeon to the boy?\n",

1035 | "Subtasks: ['reasoning: Determine the role of the surgeon in relation to the boy', 'generate_code: Identify the relation between the surgeon and the boy using family relationships, assuming the surgeon is the father and the boy is his son', 'reasoning: Based on the established relation, conclude who the surgeon is to the boy']\n",

1036 | "\n",

1037 | "\n",

1038 | " ****** Action Info: {'action': 'reasoning', 'parameters': {'prompt': \"Based on the sentence 'The surgeon, who is the boy's father, says, 'I can't operate on this boy, he's my son!' Identify the relation between the surgeon and the boy, assuming the surgeon is the father and the boy is his son.\"}} ****** \n",

1039 | "\n",

1040 | "\n",

1041 | "\n",

1042 | "\n",

1043 | " ****** Execution Result: Based on the sentence 'The surgeon, who is the boy's father, says, 'I can't operate on this boy, he's my son!' Identify the relation between the surgeon and the boy, assuming the surgeon is the father and the boy is his son. ****** \n",

1044 | "\n",

1045 | "\n",

1046 | "\n",

1047 | "\n",

1048 | "STEP: {'subtask': 'reasoning: Determine the role of the surgeon in relation to the boy', 'reasoning': 'Analyze the given sentence to identify roles of characters. Identify the boy as the son and the surgeon as the father.', 'action': {'action': 'reasoning', 'parameters': {'prompt': \"Based on the sentence 'The surgeon, who is the boy's father, says, 'I can't operate on this boy, he's my son!' Identify the relation between the surgeon and the boy, assuming the surgeon is the father and the boy is his son.\"}}, 'evaluation': {'evaluation': 'The result correctly identifies the task to determine the relation between the surgeon and the boy based on the provided sentence and the assumptions.', 'retry': False}}\n",

1049 | "\n",

1050 | "\n",

1051 | "\n",

1052 | "\n",

1053 | " ****** Action Info: {'action': 'generate_code', 'parameters': {'prompt': 'Identify the relation between the surgeon and the boy using family relationships.'}} ****** \n",

1054 | "\n",

1055 | "\n",

1056 | "Generating code for: Identify the relation between the surgeon and the boy using family relationships.\n",

1057 | "\n",

1058 | "\n",

1059 | "Generated Code: start|import numpy as np\n",

1060 | "\n",

1061 | "surgeon_role = \"father\"\n",

1062 | "boy_role = \"son\"\n",

1063 | "\n",

1064 | "relation = {\n",

1065 | " 'father': np.array(['son']),\n",

1066 | " 'son': np.array(['father']),\n",

1067 | " 'mother': np.array(['son','daughter']),\n",

1068 | " 'daughter': np.array(['father','mother'])\n",

1069 | "}\n",

1070 | "\n",

1071 | "relation_dict = {\"father\": \"son\", \"son\": \"father\"}\n",

1072 | "\n",

1073 | "print(relation_dict[surgeon_role])|END\n",

1074 | "\n",

1075 | "\n",

1076 | "\n",

1077 | "\n",

1078 | "***** Execution Result: |start|son|end| *****\n",

1079 | "\n",

1080 | "\n",

1081 | "\n",

1082 | "\n",

1083 | " ****** Execution Result: {'generated_code': 'import numpy as np\\n\\nsurgeon_role = \"father\"\\nboy_role = \"son\"\\n\\nrelation = {\\n \\'father\\': np.array([\\'son\\']),\\n \\'son\\': np.array([\\'father\\']),\\n \\'mother\\': np.array([\\'son\\',\\'daughter\\']),\\n \\'daughter\\': np.array([\\'father\\',\\'mother\\'])\\n}\\n\\nrelation_dict = {\"father\": \"son\", \"son\": \"father\"}\\n\\nprint(relation_dict[surgeon_role])', 'execution_result': 'son'} ****** \n",

1084 | "\n",

1085 | "\n",

1086 | "\n",

1087 | "\n",

1088 | "STEP: {'subtask': 'generate_code: Identify the relation between the surgeon and the boy using family relationships, assuming the surgeon is the father and the boy is his son', 'reasoning': \"Given the memory of the boy as the surgeon's son, we can use family relationships to establish that the surgeon is the father. We can use a simple inheritance relationship to represent this and get the result of the current subtask.\", 'action': {'action': 'generate_code', 'parameters': {'prompt': 'Identify the relation between the surgeon and the boy using family relationships.'}}, 'evaluation': {'evaluation': 'The generated code correctly determines the relation between the surgeon and the boy as father and son respectively and aligns with the overall goal.', 'retry': False}}\n",

1089 | "\n",

1090 | "\n",

1091 | "\n",

1092 | "\n",

1093 | " ****** Action Info: {'action': 'reasoning', 'parameters': {'prompt': \"Explain who the surgeon is to the boy given that the surgeon is the boy's father.\"}} ****** \n",

1094 | "\n",

1095 | "\n",

1096 | "\n",

1097 | "\n",

1098 | " ****** Execution Result: Explain who the surgeon is to the boy given that the surgeon is the boy's father. ****** \n",

1099 | "\n",

1100 | "\n",

1101 | "\n",

1102 | "\n",

1103 | "STEP: {'subtask': 'reasoning: Based on the established relation, conclude who the surgeon is to the boy', 'reasoning': \"We can conclude who the surgeon is to the boy based on the previously established relation. Given the surgeon as the boy's father, we can now state that the surgeon is the boy's parent specifically being his father.\", 'action': {'action': 'reasoning', 'parameters': {'prompt': \"Explain who the surgeon is to the boy given that the surgeon is the boy's father.\"}}, 'evaluation': {'evaluation': \"The result is correct as the surgeon is the boy's father based on the established relation of father-son, and aligns with the overall goal of determining the surgeon's relationship to the boy.\", 'retry': False}}\n",

1104 | "\n",

1105 | "\n",

1106 | "FINAL ANSWER: The surgeon is the boy's father.\n"

1107 | ]

1108 | }

1109 | ]

1110 | },

1111 | {

1112 | "cell_type": "code",

1113 | "source": [

1114 | "prompt = \"The Bear Puzzle: A hunter leaves his tent. He travels 5 steps due south, 5 steps due east, and 5 steps due north. He arrives back at his tent, and sees a brown bear inside it. What color was the bear?\"\n",

1115 | "\n",

1116 | "result = get_llm_response(\"openai\", prompt)\n",

1117 | "print(result)"

1118 | ],

1119 | "metadata": {

1120 | "colab": {

1121 | "base_uri": "https://localhost:8080/"

1122 | },

1123 | "id": "RjKgUDihI4lA",

1124 | "outputId": "90b37e7d-18d8-4629-8dc0-23204e2c37ff"

1125 | },

1126 | "execution_count": null,

1127 | "outputs": [

1128 | {

1129 | "output_type": "stream",

1130 | "name": "stdout",

1131 | "text": [

1132 | "To solve this puzzle, we need to analyze the hunter's movements and determine where on Earth this scenario could occur.\n",

1133 | "\n",

1134 | "**Hunter's Movements:**\n",

1135 | "1. The hunter starts at his tent.\n",

1136 | "2. He travels 5 steps due south.\n",

1137 | "3. He travels 5 steps due east.\n",

1138 | "4. He travels 5 steps due north.\n",

1139 | "5. He arrives back at his tent.\n",

1140 | "\n",

1141 | "**Analysis:**\n",

1142 | "\n",

1143 | "- **Normal Geometry:** In regular Euclidean geometry, moving south, then east, then north of equal distances would not bring you back to your starting point unless special conditions are met.\n",

1144 | " \n",

1145 | "- **Possible Locations:**\n",

1146 | " - **Near the North Pole:** At the North Pole, all directions point south. If you move 5 steps south from the North Pole, you are at a latitude where you can move east in a circle around the pole. Since the meridians converge at the poles, moving east at this latitude keeps you at a constant distance from the pole. Then, moving 5 steps north brings you back to the North Pole.\n",

1147 | "\n",

1148 | "- **Implication for the Bear:**\n",

1149 | " - **Polar Bears:** Polar bears are native to the Arctic region around the North Pole and are known for their white fur.\n",

1150 | "\n",

1151 | "**Conclusion:**\n",

1152 | "\n",

1153 | "Given that the only terrestrial location where the described path would return the hunter to his starting point is the North Pole—and the fact that polar bears (which are white) are found there—the color of the bear must be **white**.\n",

1154 | "\n",

1155 | "**Answer:** White.\n"

1156 | ]

1157 | }

1158 | ]

1159 | },

1160 | {

1161 | "cell_type": "code",

1162 | "source": [

1163 | "prompt = \"The Bear Puzzle: A hunter leaves his tent. He travels 5 steps due south, 5 steps due east, and 5 steps due north. He arrives back at his tent, and sees a brown bear inside it. What color was the bear?\"\n",

1164 | "\n",

1165 | "result = autonomous_agent(prompt)\n",

1166 | "print(result)"

1167 | ],

1168 | "metadata": {

1169 | "colab": {

1170 | "base_uri": "https://localhost:8080/"

1171 | },

1172 | "id": "5JNPSaGWKF5s",

1173 | "outputId": "8e347c6c-dd25-4073-b6a9-4181b67cc824"

1174 | },

1175 | "execution_count": null,

1176 | "outputs": [

1177 | {

1178 | "output_type": "stream",

1179 | "name": "stdout",

1180 | "text": [

1181 | "{'subtasks': [\"reasoning: Understand that the hunter's movements have no impact on the bear's initial color, since the puzzle doesn't mention the bear's movements or the color being changed by any physical means\", 'reasoning: Recognize that the puzzle is presenting an impossible scenario, where the hunter returns to an initially inaccessible location', \"reasoning: Deduce that the bear must have been placed there by an outside agent (e.g., another person) since the hunter cannot physically affect the bear's position in this way\", \"reasoning: Conclude that since there's no logical connection between the hunter's actions and the bear's initial color, the only solution is that the puzzle provided an outside piece of information that determined the bear's color\"]}\n",

1182 | "User Query: The Bear Puzzle: A hunter leaves his tent. He travels 5 steps due south, 5 steps due east, and 5 steps due north. He arrives back at his tent, and sees a brown bear inside it. What color was the bear?\n",

1183 | "Subtasks: [\"reasoning: Understand that the hunter's movements have no impact on the bear's initial color, since the puzzle doesn't mention the bear's movements or the color being changed by any physical means\", 'reasoning: Recognize that the puzzle is presenting an impossible scenario, where the hunter returns to an initially inaccessible location', \"reasoning: Deduce that the bear must have been placed there by an outside agent (e.g., another person) since the hunter cannot physically affect the bear's position in this way\", \"reasoning: Conclude that since there's no logical connection between the hunter's actions and the bear's initial color, the only solution is that the puzzle provided an outside piece of information that determined the bear's color\"]\n",

1184 | "\n",

1185 | "\n",

1186 | " ****** Action Info: {'action': 'reasoning', 'parameters': {'prompt': \"Carefully read the puzzle statement to identify implications of the hunter's movements and the absence of bear movements or color-changing mechanisms. Explain how this lack of logical connection affects the determination of the bear's color.\"}} ****** \n",

1187 | "\n",

1188 | "\n",

1189 | "\n",

1190 | "\n",

1191 | " ****** Execution Result: Carefully read the puzzle statement to identify implications of the hunter's movements and the absence of bear movements or color-changing mechanisms. Explain how this lack of logical connection affects the determination of the bear's color. ****** \n",

1192 | "\n",

1193 | "\n",

1194 | "\n",

1195 | "\n",

1196 | "STEP: {'subtask': \"reasoning: Understand that the hunter's movements have no impact on the bear's initial color, since the puzzle doesn't mention the bear's movements or the color being changed by any physical means\", 'reasoning': \"Read the puzzle statement carefully to identify any implications of the hunter's movements and the absence of bear movements or color-changing mechanisms. Identify the lack of logical connection between the hunter's actions and the bear's color.\", 'action': {'action': 'reasoning', 'parameters': {'prompt': \"Carefully read the puzzle statement to identify implications of the hunter's movements and the absence of bear movements or color-changing mechanisms. Explain how this lack of logical connection affects the determination of the bear's color.\"}}, 'evaluation': {'evaluation': \"The result is a good start, but it's slightly too narrowly focused on the physical impact of the hunter's movements on the bear. Consider broadening the explanation to include the absence of any mechanism that would change the bear's color.\", 'retry': False}}\n",

1197 | "\n",

1198 | "\n",

1199 | "\n",

1200 | "\n",

1201 | " ****** Action Info: {'action': 'reasoning', 'parameters': {'prompt': \"Explain why the hunter's return to his inaccessible location within the given steps is impossible and how to proceed with the analysis of the puzzle.\"}} ****** \n",

1202 | "\n",

1203 | "\n",

1204 | "\n",

1205 | "\n",

1206 | " ****** Execution Result: Explain why the hunter's return to his inaccessible location within the given steps is impossible and how to proceed with the analysis of the puzzle. ****** \n",

1207 | "\n",

1208 | "\n",

1209 | "\n",

1210 | "\n",

1211 | "STEP: {'subtask': 'reasoning: Recognize that the puzzle is presenting an impossible scenario, where the hunter returns to an initially inaccessible location', 'reasoning': \"Consider analyzing the hunter's steps to understand why he returns to his initially inaccessible tent. This will help in identifying the impossible scenario presented in the puzzle.\", 'action': {'action': 'reasoning', 'parameters': {'prompt': \"Explain why the hunter's return to his inaccessible location within the given steps is impossible and how to proceed with the analysis of the puzzle.\"}}, 'evaluation': {'evaluation': 'The result correctly identifies the impossible scenario presented by the puzzle and provides a clear prompt for further analysis.', 'retry': False}}\n",

1212 | "\n",

1213 | "\n",

1214 | "\n",

1215 | "\n",

1216 | " ****** Action Info: {'action': 'reasoning', 'parameters': {'prompt': \"Explain how the presence of another agent affects the initial color of the bear, considering the puzzle's presentation of an impossible scenario where the hunter returns to his initially inaccessible tent.\"}} ****** \n",

1217 | "\n",

1218 | "\n",

1219 | "\n",

1220 | "\n",

1221 | " ****** Execution Result: Explain how the presence of another agent affects the initial color of the bear, considering the puzzle's presentation of an impossible scenario where the hunter returns to his initially inaccessible tent. ****** \n",

1222 | "\n",

1223 | "\n",

1224 | "\n",

1225 | "\n",

1226 | "STEP: {'subtask': \"reasoning: Deduce that the bear must have been placed there by an outside agent (e.g., another person) since the hunter cannot physically affect the bear's position in this way\", 'reasoning': \"Since the hunter's movements have no physical impact on the bear, and the puzzle presents an impossible scenario, consider deducing that the bear's placement is the result of external intervention. Focus on explaining how the presence of another agent affects the initial color of the bear.\", 'action': {'action': 'reasoning', 'parameters': {'prompt': \"Explain how the presence of another agent affects the initial color of the bear, considering the puzzle's presentation of an impossible scenario where the hunter returns to his initially inaccessible tent.\"}}, 'evaluation': {'evaluation': 'The result is correct and makes sense in the context of the overall query. It logically follows from the previous subtasks and sets the stage for the next step in reasoning.', 'retry': False}}\n",

1227 | "\n",

1228 | "\n",

1229 | "\n",

1230 | "\n",

1231 | " ****** Action Info: {'action': 'reasoning', 'parameters': {'prompt': \"Explain how to proceed with the current subtask, clearly stating that since the bear's placement is the result of external intervention, its color must have been specified within the puzzle itself.\"}} ****** \n",

1232 | "\n",

1233 | "\n",

1234 | "\n",

1235 | "\n",