No speakers loaded.

24 | {% endif %}

--------------------------------------------------------------------------------

/templates/deepstory.js:

--------------------------------------------------------------------------------

```javascript
$(document).ready(function () {
    function refresh_status() {
        $("#status").load("{{ url_for('status') }}", function () {
            $("#clearCache").click(function () {
                $.ajax({
                    url: "{{ url_for('clear') }}",
                    success: function (message) {
                        alert(message);
                        refresh_status();
                        refresh_animate();
                        refresh_video();
                    },
                    error: function (response) {
                        alert(response.responseText);
                    }
                });
            });
        });
    }
    function refresh_sent() {
        $("#sentences").load("{{ url_for('sentences') }}");
    }
    function refresh_animate() {
        $("#tab-3").load("{{ url_for('animate') }}", function () {
            $("#animate").find("select").each(function () {
                let img = $('
```


19 | {% endif %}

--------------------------------------------------------------------------------

/templates/gpt2.html:

--------------------------------------------------------------------------------


17 | Generated sentences:

6 | {% for sentence in sentences %} 7 |

8 |

15 | {% endfor %}

16 |

9 |

11 | {{ sentence | replace('\n', '

') | safe }}

12 |

13 |

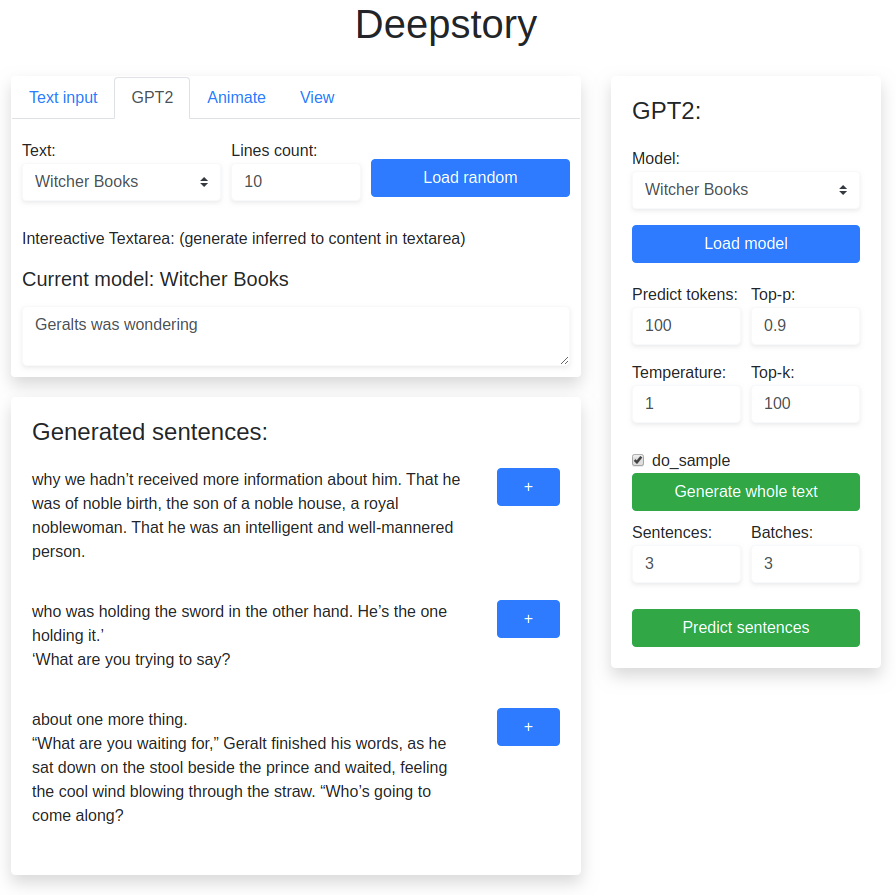

14 | Current model: {% if gpt2 %}{{ gpt2 }}{% else %}False{% endif %}

--------------------------------------------------------------------------------

/templates/index.html:

--------------------------------------------------------------------------------


--------------------------------------------------------------------------------

/templates/map.html:

--------------------------------------------------------------------------------

1 | {% if speaker_map %}

2 | Deepstory

15 |

16 |

175 |

17 |

107 |

18 |

105 |

106 |

19 |

104 |

20 |

27 |

103 |

28 |

102 |

29 |

73 |

74 |

75 |

96 |

99 |

100 |

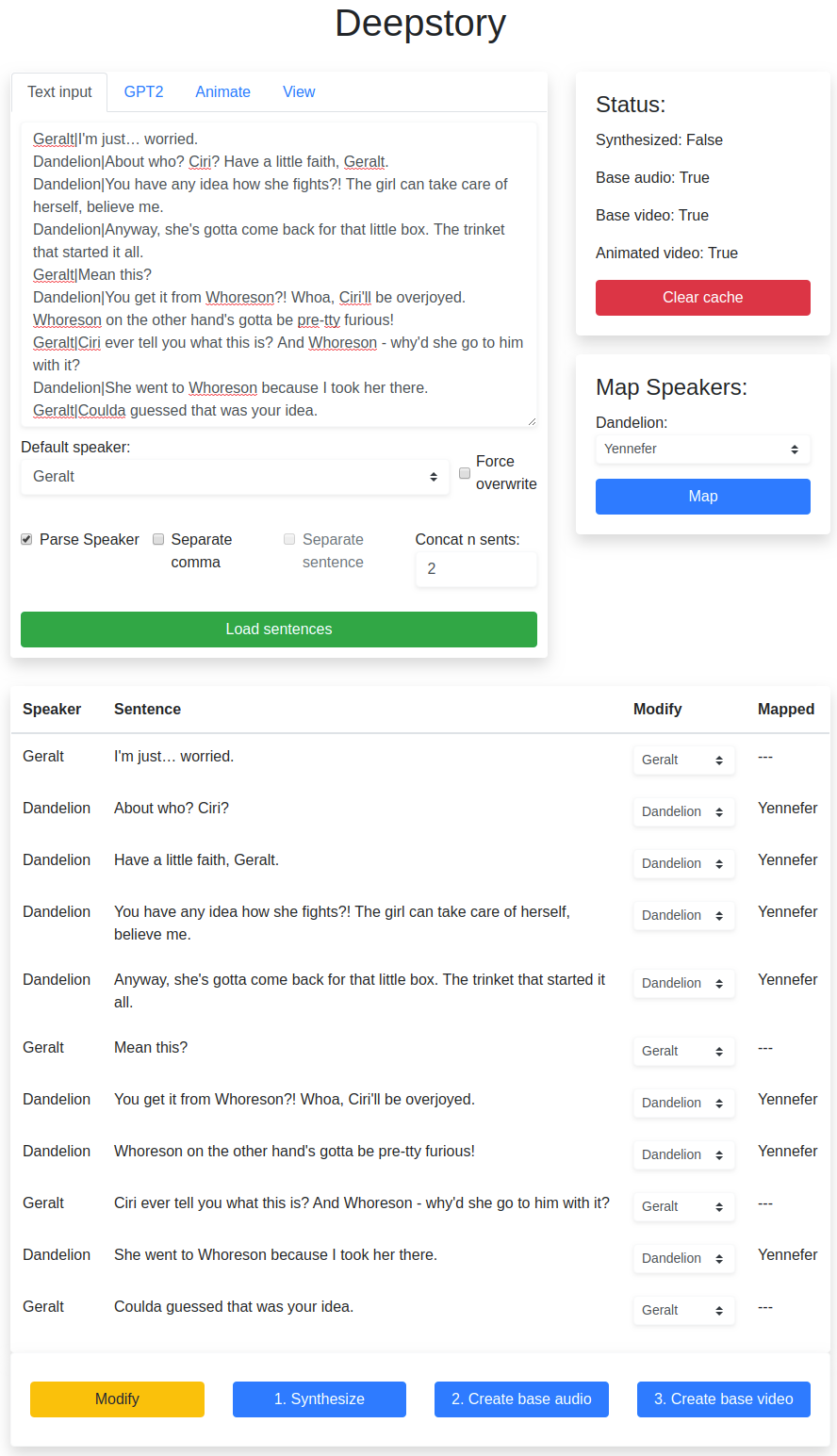

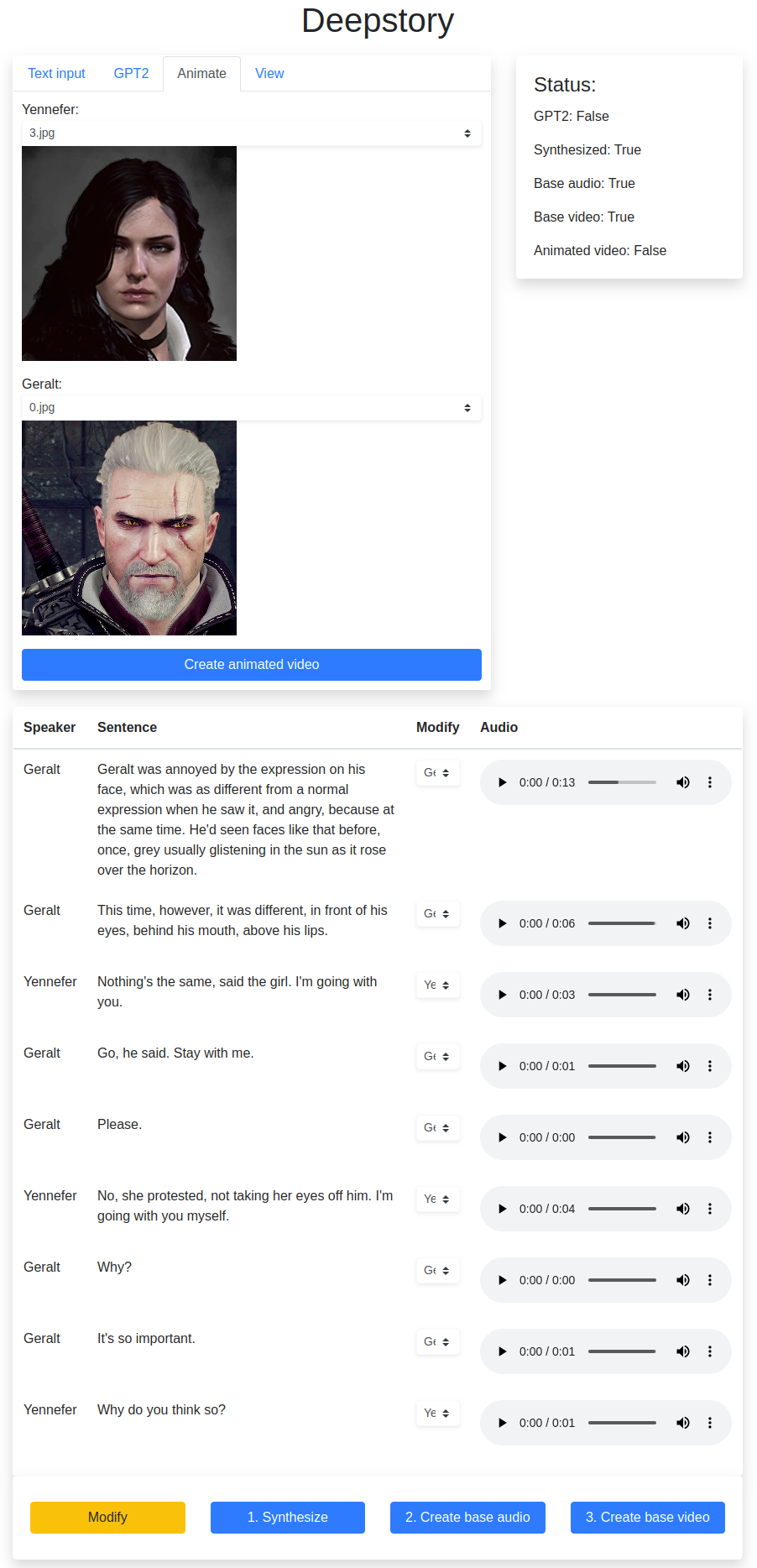

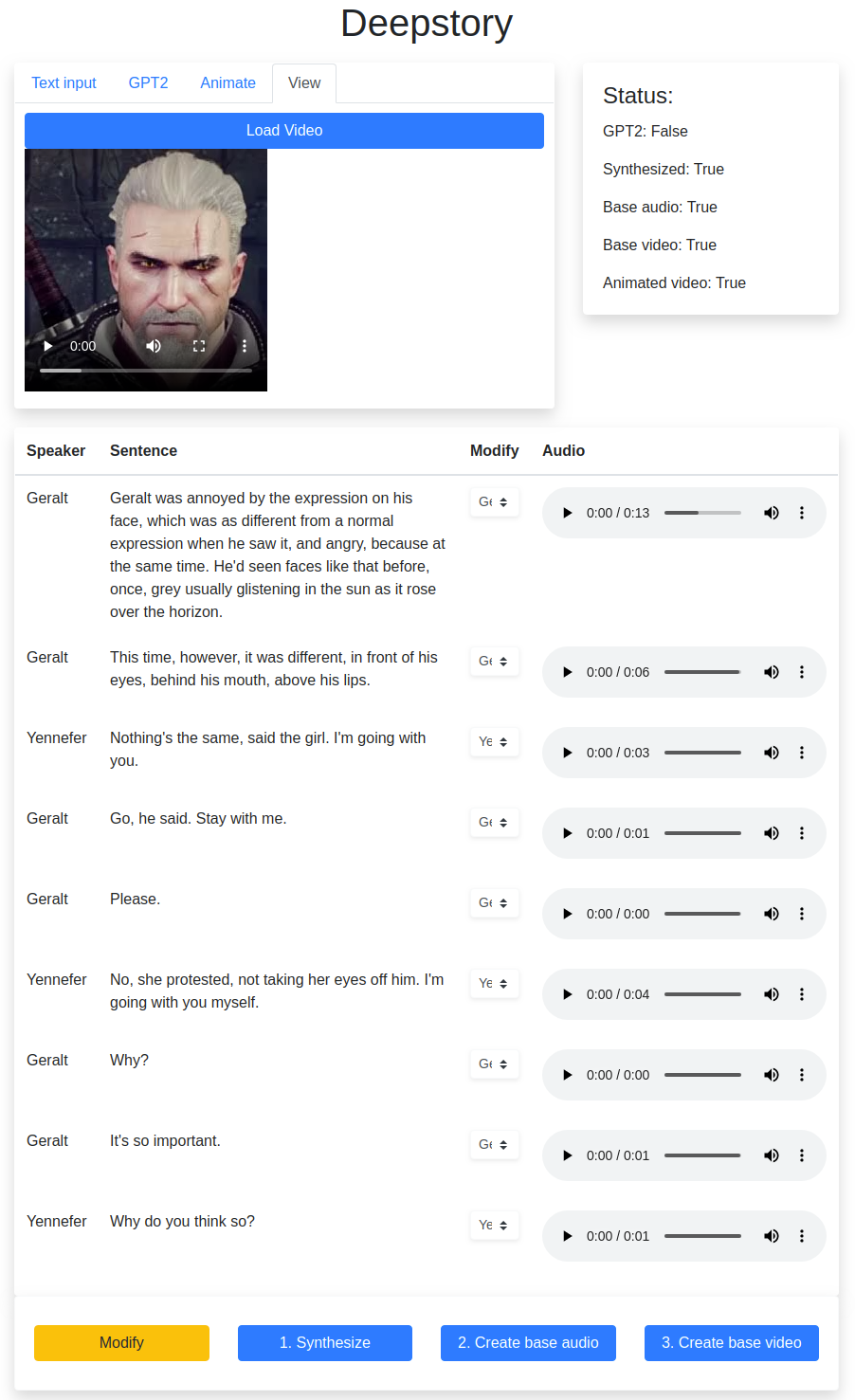

101 | Interactive Textarea: (generates text inferred from the content in the textarea)


170 | GPT2:


21 | {% endif %}

--------------------------------------------------------------------------------

/templates/models.html:

--------------------------------------------------------------------------------


19 | Map Speakers:

2 | {% for model in model_list %}

3 | - {{ model }}

4 | {% endfor %}

| Speaker | Sentence | Modify | {% if speaker_map %}Mapped | {% endif %}{% if 'wav' in sentences[0] %}Audio | {% endif %}
|---|---|---|---|---|
| {{ sentence['speaker'] }} | {{ sentence['text'] }} | | {% if speaker_map %}{% if sentence['speaker'] in speaker_map %}{{ speaker_map[sentence['speaker']] }}{% else %}---{% endif %} | {% endif %}{% if 'wav' in sentence %} | {% endif %}


--------------------------------------------------------------------------------

/templates/video.html:

--------------------------------------------------------------------------------

1 | {% if animated %}


3 | {% else %}


11 | Status:

5 |Synthesized: {% if synthsized %}True{% else %}False{% endif %}

6 |Base audio: {% if combined %}True{% else %}False{% endif %}

7 |Base video: {% if base %}True{% else %}False{% endif %}

8 |Animated video: {% if animated %}True{% else %}False{% endif %}

10 | No videos animated.

5 | {% endif %}

--------------------------------------------------------------------------------

/test.py:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/thetobysiu/deepstory/34eb7b1771479b996f361c291dc36b88ca25bd17/test.py

--------------------------------------------------------------------------------

/text.txt:

--------------------------------------------------------------------------------

```text
The taller of the women suddenly swayed, legs planted widely apart, and twisted her hips. Her sabre, which no one saw her draw, hissed sharply through the air. The spotty-faced man’s head flew upwards in an arc and fell into the gaping opening to the dungeon. His body toppled stiffly and heavily, like a tree being felled, among the crushed bricks. The crowd let out a scream. The second woman, hand on her sword hilt, whirled around nimbly, protecting her partner’s back. Needlessly. The crowd, stumbling and falling over on the rubble, fled towards the town as fast as they could. The Alderman loped at the front with impressive strides, outdistancing the huge butcher by only a few yards.
‘An excellent stroke,’ the white-haired man commented coldly, shielding his eyes from the sun with a black-gloved hand. ‘An excellent stroke from a Zerrikanian sabre. I bow before the skill and beauty of the free warriors. I’m Geralt of Rivia.’
‘And I,’ the stranger in the dark brown tunic pointed at the faded coat of arms on the front of his garment, depicting three black birds sitting in a row in the centre of a uniformly gold field, ‘am Borch, also known as Three Jackdaws. And these are my girls, Téa and Véa. That’s what I call them, because you’ll twist your tongue on their right names. They are both, as you correctly surmised, Zerrikanian.’
‘Thanks to them, it appears, I still have my horse and belongings. I thank you, warriors. My thanks to you too, sir.’
‘Three Jackdaws. And you can drop the “sir”. Does anything detain you in this little town, Geralt of Rivia?’
‘Quite the opposite.’
‘Excellent. I have a proposal. Not far from here, at the crossroads on the road to the river port, is an inn. It’s called the Pensive Dragon. The vittals there have no equal in these parts. I’m heading there with food and lodging in mind. It would be my honour should you choose to keep me company.’
‘Borch.’ The white-haired man turned around from his horse and looked into the stranger’s bright eyes. ‘I wouldn’t want anything left unclear between us. I’m a witcher.’
‘I guessed as much. But you said it as you might have said “I’m a leper”.’
‘There are those,’ Geralt said slowly, ‘who prefer the company of lepers to that of a witcher.’
‘There are also those,’ Three Jackdaws laughed, ‘who prefer sheep to girls. Ah, well, one can only sympathise with the former and the latter. I repeat my proposal.’
Geralt took off his glove and shook the hand being proffered.
```

--------------------------------------------------------------------------------

/util.py:

--------------------------------------------------------------------------------

```python
# SIU KING WAI SM4701 Deepstory
import re
import copy
import spacy
import librosa
import numpy as np

from unidecode import unidecode
from modules.dctts import hp
from pydub import AudioSegment, effects


def quote_boundaries(doc):
    for token in doc[:-1]:
        # if token.text == "“" or token.text == "”":
        #     doc[token.i + 1].is_sent_start = True
        if token.text == "“":
            doc[token.i + 1].is_sent_start = True
    return doc


# written against the spaCy 2.x pipeline API (add_pipe with a function, create_pipe)
nlp = spacy.load('en_core_web_sm')
nlp.add_pipe(quote_boundaries, before="parser")
nlp_no_comma = copy.deepcopy(nlp)
sentencizer = nlp.create_pipe("sentencizer")
sentencizer.punct_chars.add(',')  # nlp also splits sentences at commas
sentencizer_no_comma = nlp_no_comma.create_pipe("sentencizer")
nlp.add_pipe(sentencizer, first=True)
nlp_no_comma.add_pipe(sentencizer_no_comma, first=True)


def normalize_text(text):
    """Normalize text so that some punctuations that indicate pauses will be replaced as commas"""
    replace_list = [
        [r'(\.\.\.)$|…$', '.'],
        [r'\(|\)|:|;| “|(\s*-+\s+)|(\s+-+\s*)|\s*-{2,}\s*|(\.\.\.)|…|—', ', '],
        [r'\s*,[^\w]*,\s*', ', '],  # capture multiple commas
        [r'\s*,\s*', ', '],  # format commas
        [r'\.,', '.'],
        [r'[‘’“”]', '']  # strip quote
    ]
    for regex, replacement in replace_list:
        text = re.sub(regex, replacement, text)
    text = unidecode(text)  # Get rid of the accented characters
    text = text.lower()
    text = re.sub(f"[^{hp.vocab}]", " ", text)
    text = re.sub(r' +', ' ', text).strip()
    return text


def fix_text(text):
    """fix text for pasting content from the book"""
    replace_list = [
        [r'(\w)’(\w)', r"\1'\2"],  # fix apostrophe for content from books
    ]
    for regex, replacement in replace_list:
        text = re.sub(regex, replacement, text)
    text = re.sub(r' +', ' ', text)

    return text


def trim_text(generated_text, max_sentences=0, script=False):
    """trim unfinished sentence generated by GPT2"""
    # remove this replacement character for utf-8, a bug?
    generated_text = generated_text.replace(b'\xef\xbf\xbd'.decode('utf-8'), '')
    if script:
        generated_text = generated_text.replace('\n', '')
    text_list = re.findall(r'.*?[.!\?…—][’”]*', generated_text, re.DOTALL)
    if script:
        text_list = ['\n' + text if text[0].isupper() else text for text in text_list]

    # if limit the max_sentence
    if max_sentences:
        # find all sentences and parsed as list and select the first nth items and join them back
        return ''.join(text_list[:max_sentences])
    else:
        return ''.join(text_list)


# backup...
# # select until the last punctuation using regex, and create an nlp object for counting sentences
# text_list = [*nlp_no_comma(re.findall(r'.*[.!\?’”]', generated_text, re.DOTALL)[0]).sents]
# # figure out how to select max sentence(which structure)
# text_list = re.findall(r'.*?[.!\?]|.*\w+', generated_text, re.DOTALL)
# for i in reversed(range(1, len(text_list))):
#     try:
#         while not text_list[i][0].isalpha() and text_list[i][0] != '“' and text_list[i][0] != '‘' and text_list[i][0] != ' ':
#             text_list[i - 1] = text_list[i - 1] + text_list[i][0]
#             text_list[i] = text_list[i][1:]
#             if not text_list:
#                 break
#     except IndexError:
#         print('ok')
# if not any(text_list[-1][-1] == x for x in ['.', '!', '?']):
#     del text_list[-1]
# if max_sentences:
#     text_list = [text.text for i, text in enumerate(text_list) if i < max_sentences]
# else:
#     text_list = [text.text for text in text_list]
# return ' '.join(text_list)


def separate(text, n_gram, comma, max_len=30):
    _nlp = nlp if comma else nlp_no_comma
    lines = []
    line = ''
    counter = 0
    for sent in _nlp(text).sents:
        if sent.text:
            if counter == 0:
                line = sent.text
            else:
                line = f'{line} {sent.text}'
            counter += 1

            if counter == n_gram:
                lines.append(_nlp(line))
                line = ''
                counter = 0

    # for remaining sentences
    if line:
        lines.append(_nlp(line))

    return lines


def get_duration(second):
    return int(hp.sr * second)


def normalize_audio(wav):
    # normalize the audio with pydub; assumes 16-bit mono PCM samples (sample_width=2)
    audioseg = AudioSegment(wav.tobytes(), sample_width=2, frame_rate=hp.sr, channels=1)
    # normalized = effects.normalize(audioseg, self.norm_factor)
    # a gain of (-30 - dBFS) brings the clip's average loudness to -30 dBFS
    normalized = audioseg.apply_gain(-30 - audioseg.dBFS)
    wav = np.array(normalized.get_array_of_samples())
    return wav


# from my audio processing project
def split_audio_to_list(source, preemph=True, preemphasis=0.8, min_diff=1500, min_size=get_duration(1), db=80):
    if preemph:
        # pre-emphasis filter y[n] = x[n] - a * x[n - 1] before silence detection
        source = np.append(source[0], source[1:] - preemphasis * source[:-1])
    split_list = librosa.effects.split(source, top_db=db).tolist()
    # merge segments separated by short pauses and drop segments shorter than min_size
    i = len(split_list) - 1
    while i > 0:
        if split_list[i][-1] - split_list[i][0] > min_size:
            now = split_list[i][0]
            prev = split_list[i - 1][1]
            diff = now - prev
            if diff < min_diff:
                split_list[i - 1] = [split_list[i - 1][0], split_list.pop(i)[1]]
        else:
            split_list.pop(i)
        i -= 1

    # make sure nothing is trimmed away
    split_list[0][0] = 0
    split_list[-1][1] = len(source)
    for i in reversed(range(len(split_list))):
        if i != 0:
            split_list[i][0] = split_list[i - 1][1]

    return split_list
```

--------------------------------------------------------------------------------

/voice.py:

--------------------------------------------------------------------------------

```python
# SIU KING WAI SM4701 Deepstory
import numpy as np
import torch
import glob

from util import normalize_text, normalize_audio
from modules.dctts import Text2Mel, SSRN, hp, spectrogram2wav

torch.set_grad_enabled(False)
device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")


class Voice:
    def __init__(self, speaker):
        self.speaker = speaker
        self.text2mel = None
        self.ssrn = None

    def __enter__(self):
        self.load()
        return self

    def __exit__(self, exc_type, exc_val, exc_tb):
        self.close()

    def load(self):
        t2m_paths = glob.glob(f'data/dctts/{self.speaker}/t2m*.pth')
        if not t2m_paths:
            # glob() returns [] instead of raising; raise FileNotFoundError so callers that
            # catch it (e.g. the /synthesize route in app.py) can report a missing model
            raise FileNotFoundError(f'No Text2Mel checkpoint found for {self.speaker}')
        self.text2mel = Text2Mel(hp.vocab).to(device).eval()
        # map_location lets checkpoints saved on a GPU load on whichever device is available
        self.text2mel.load_state_dict(torch.load(t2m_paths[0], map_location=device)['state_dict'])
        self.ssrn = SSRN().to(device).eval()
        self.ssrn.load_state_dict(torch.load(f'data/dctts/{self.speaker}/ssrn.pth', map_location=device)['state_dict'])

    def close(self):
        del self.text2mel
        del self.ssrn
        torch.cuda.empty_cache()

    # referenced from original repo
    def synthesize(self, text, timeout=10000):
        with torch.no_grad():  # no grad to save memory
            normalized_text = normalize_text(text) + "E"  # text normalization, E: EOS
            # explicit int64: np.long is missing from NumPy 1.24-1.26 and platform-dependent elsewhere
            L = torch.from_numpy(np.array([[hp.char2idx[char] for char in normalized_text]], np.int64)).to(device)
            zeros = torch.from_numpy(np.zeros((1, hp.n_mels, 1), np.float32)).to(device)
            Y = zeros

            for i in range(timeout):
                _, Y_t, A = self.text2mel(L, Y, monotonic_attention=True)
                Y = torch.cat((zeros, Y_t), -1)
                _, attention = torch.max(A[0, :, -1], 0)
                attention = attention.item()
                if L[0, attention] == hp.vocab.index('E'):  # EOS
                    break

            _, Z = self.ssrn(Y)  # batch ssrn instead?
            Z = Z.cpu().detach().numpy()

        wav = spectrogram2wav(Z[0, :, :].T)
        wav = normalize_audio(wav)
        return wav
```

--------------------------------------------------------------------------------
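The interval post-processing in `split_audio_to_list` is easier to follow in isolation. The sketch below re-implements only that step as a pure function over pre-computed `[start, end]` sample intervals, standing in for the output of `librosa.effects.split`, so it runs without any audio. `merge_intervals` is a hypothetical name, and the intervals in the usage line are made-up numbers chosen to exercise each branch.

```python
from typing import List


def merge_intervals(intervals: List[List[int]], total_len: int,
                    min_diff: int, min_size: int) -> List[List[int]]:
    """Sketch of the post-processing step in util.split_audio_to_list."""
    split_list = [list(pair) for pair in intervals]  # copy so the caller's list is untouched
    i = len(split_list) - 1
    # walk backwards: popping index i only shifts entries that were already visited
    while i > 0:
        start, end = split_list[i]
        if end - start > min_size:
            gap = start - split_list[i - 1][1]
            if gap < min_diff:
                # short pause: absorb segment i into segment i - 1
                split_list[i - 1] = [split_list[i - 1][0], split_list.pop(i)[1]]
        else:
            # segment too short to stand on its own: drop it
            split_list.pop(i)
        i -= 1

    # stretch the segments so they tile [0, total_len) with no gaps
    split_list[0][0] = 0
    split_list[-1][1] = total_len
    for i in reversed(range(1, len(split_list))):
        split_list[i][0] = split_list[i - 1][1]
    return split_list


# made-up sample positions: a 100-sample blip at 1100-1200 and a 50-sample pause at 100-150
segments = merge_intervals([[0, 100], [150, 1000], [1100, 1200], [3000, 5000]],
                           total_len=6000, min_diff=200, min_size=500)
print(segments)  # [[0, 1000], [1000, 6000]]
```

As in the original, the first segment is never tested against `min_size`, and samples from dropped segments are not lost: the final stretching pass hands them to the neighbouring segments.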


## Folder structure
```
Deepstory
├── animator.py
├── app.py
├── data
│   ├── dctts
│   │   ├── Geralt
│   │   │   ├── ssrn.pth
│   │   │   └── t2m.pth
│   │   ├── LJ
│   │   │   ├── ssrn.pth
│   │   │   └── t2m.pth
│   │   └── Yennefer
│   │       ├── ssrn.pth
│   │       └── t2m.pth
│   ├── fom
│   │   ├── vox-256.yaml
│   │   ├── vox-adv-256.yaml
│   │   ├── vox-adv-cpk.pth.tar
│   │   └── vox-cpk.pth.tar
│   ├── gpt2
│   │   ├── Waiting for Godot
│   │   │   ├── config.json
│   │   │   ├── default.txt
│   │   │   ├── merges.txt
│   │   │   ├── pytorch_model.bin
│   │   │   ├── special_tokens_map.json
│   │   │   ├── text.txt
│   │   │   ├── tokenizer_config.json
│   │   │   └── vocab.json
│   │   └── Witcher Books
│   │       ├── config.json
│   │       ├── default.txt
│   │       ├── merges.txt
│   │       ├── pytorch_model.bin
│   │       ├── special_tokens_map.json
│   │       ├── text.txt
│   │       ├── tokenizer_config.json
│   │       └── vocab.json
│   ├── images
│   │   ├── Geralt
│   │   │   ├── 0.jpg
│   │   │   └── fx.jpg
│   │   └── Yennefer
│   │       ├── 0.jpg
│   │       ├── 1.jpg
│   │       ├── 2.jpg
│   │       ├── 3.jpg
│   │       ├── 4.jpg
│   │       └── 5.jpg
│   └── sda
│       ├── grid.dat
│       └── image.bmp
├── deepstory.py
├── generate.py
├── modules
│   ├── dctts
│   │   ├── audio.py
│   │   ├── hparams.py
│   │   ├── __init__.py
│   │   ├── layers.py
│   │   ├── ssrn.py
│   │   └── text2mel.py
│   ├── fom
│   │   ├── animate.py
│   │   ├── dense_motion.py
│   │   ├── generator.py
│   │   ├── __init__.py
│   │   ├── keypoint_detector.py
│   │   ├── sync_batchnorm
│   │   │   ├── batchnorm.py
│   │   │   ├── comm.py
│   │   │   ├── __init__.py
│   │   │   └── replicate.py
│   │   └── util.py
│   └── sda
│       ├── encoder_audio.py
│       ├── encoder_image.py
│       ├── img_generator.py
│       ├── __init__.py
│       ├── rnn_audio.py
│       ├── sda.py
│       └── utils.py
├── README.md
├── requirements.txt
├── static
│   ├── bootstrap
│   │   ├── css
│   │   │   └── bootstrap.min.css
│   │   └── js
│   │       └── bootstrap.min.js
│   ├── css
│   │   └── styles.css
│   └── js
│       └── jquery.min.js
├── templates
│   ├── animate.html
│   ├── deepstory.js
│   ├── gen_sentences.html
│   ├── gpt2.html
│   ├── index.html
│   ├── map.html
│   ├── models.html
│   ├── sentences.html
│   ├── status.html
│   └── video.html
├── test.py
├── text.txt
├── util.py
└── voice.py
```
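`voice.py` loads `data/dctts/<speaker>/t2m*.pth` and `ssrn.pth`, and `animator.py` loads `data/fom/vox-256.yaml` and `vox-cpk.pth.tar`, so a quick check against the tree above can catch an incomplete download before the app starts. The helper below is a hypothetical sketch, not part of the repository; it only uses the paths named in the tree and in those two files.

```python
import glob
import os
from typing import List


def missing_files(root: str, speakers: List[str]) -> List[str]:
    """Return the expected model files that are missing under root (sketch)."""
    missing = []
    for speaker in speakers:
        speaker_dir = os.path.join(root, 'data', 'dctts', speaker)
        # voice.py globs t2m*.pth, so any matching Text2Mel checkpoint is accepted
        if not glob.glob(os.path.join(speaker_dir, 't2m*.pth')):
            missing.append(os.path.join(speaker_dir, 't2m*.pth'))
        ssrn = os.path.join(speaker_dir, 'ssrn.pth')
        if not os.path.isfile(ssrn):
            missing.append(ssrn)
    # first order model config and checkpoint loaded by animator.py
    for name in ('vox-256.yaml', 'vox-cpk.pth.tar'):
        path = os.path.join(root, 'data', 'fom', name)
        if not os.path.isfile(path):
            missing.append(path)
    return missing


if __name__ == '__main__':
    print(missing_files('.', ['Geralt', 'LJ', 'Yennefer']) or 'All model files found.')
```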

## Complete project download
The complete project files are available in the Google Drive version of this project (link below). All the models, including Geralt and Yennefer, are included.

You must download the spaCy English model (`en_core_web_sm`) first.

Make sure FFmpeg is installed on your computer, along with the `ffmpeg-python` package.

https://drive.google.com/drive/folders/1AxORLF-QFd2wSORzMOKlvCQSFhdZSODJ?usp=sharing

To simplify setup, a Google Colab version will be released soon.
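A minimal preflight check for the prerequisites above might look like this. It assumes the spaCy model is the `en_core_web_sm` package that `util.py` loads, and that `ffmpeg-python` is importable under its module name `ffmpeg`; `check_prerequisites` is a hypothetical helper that uses only the standard library, so it can run before the project's heavier dependencies are installed.

```python
import importlib.util
import shutil
from typing import Dict


def check_prerequisites() -> Dict[str, bool]:
    """Report which external prerequisites are available (sketch)."""
    return {
        # spaCy itself and the English model package loaded in util.py
        'spacy': importlib.util.find_spec('spacy') is not None,
        'en_core_web_sm': importlib.util.find_spec('en_core_web_sm') is not None,
        # the ffmpeg-python package installs a module named "ffmpeg"
        'ffmpeg-python': importlib.util.find_spec('ffmpeg') is not None,
        # the ffmpeg executable itself must be on PATH
        'ffmpeg binary': shutil.which('ffmpeg') is not None,
    }


if __name__ == '__main__':
    for name, ok in check_prerequisites().items():
        print(f'{name:15} {"OK" if ok else "MISSING"}')
```

If `en_core_web_sm` is reported missing, spaCy's `python -m spacy download en_core_web_sm` command installs it.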

## Requirements
An NVIDIA GPU with at least 4 GB of VRAM is required to run this project.
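To check the 4 GB requirement on a particular machine, PyTorch (already a project dependency) reports the total memory of each CUDA device. The sketch below is a hypothetical helper; it measures in decimal gigabytes (10^9 bytes), since cards sold as "4 GB" can report slightly less than 4 GiB, and it treats a missing PyTorch install or a machine without CUDA as not meeting the requirement.

```python
def has_enough_vram(min_gb: float = 4.0, device_index: int = 0) -> bool:
    """Return True if CUDA device `device_index` reports at least `min_gb` GB (sketch)."""
    try:
        import torch  # imported lazily so the check also runs where torch is absent
    except ImportError:
        return False
    if not torch.cuda.is_available() or device_index >= torch.cuda.device_count():
        return False
    total_bytes = torch.cuda.get_device_properties(device_index).total_memory
    return total_bytes >= min_gb * 10 ** 9


if __name__ == '__main__':
    print('VRAM requirement met:', has_enough_vram())
```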

## Credits
https://github.com/tugstugi/pytorch-dc-tts

https://github.com/DinoMan/speech-driven-animation

https://github.com/AliaksandrSiarohin/first-order-model

https://github.com/huggingface/transformers

## Notes
The whole project uses PyTorch. TensorFlow is listed in requirements.txt only because transformers needed it to convert a model trained with gpt-2-simple into a PyTorch model.
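The conversion mentioned above can be sketched with the `transformers` API. This is an assumption about how it could be done, not the exact script used for this project: it expects a gpt-2-simple run directory containing `hparams.json`, `encoder.json`, `vocab.bpe` and a TensorFlow checkpoint. It also needs TensorFlow, because `load_tf_weights_in_gpt2` reads the checkpoint through it, and a `transformers` version that still ships TensorFlow conversion support. The output directory should then hold files like the `config.json`, `vocab.json` and `merges.txt` listed under `data/gpt2/` in the folder structure; newer `transformers` releases may write `model.safetensors` instead of `pytorch_model.bin`.

```python
import json
import os


def convert_gpt2_simple(run_dir: str, output_dir: str) -> None:
    """Convert a gpt-2-simple TensorFlow checkpoint into a transformers model directory (sketch)."""
    with open(os.path.join(run_dir, 'hparams.json')) as f:
        hparams = json.load(f)  # OpenAI GPT-2 hyperparameter names: n_vocab, n_ctx, ...

    # imported lazily so this module loads without transformers/TensorFlow installed
    from transformers import GPT2Config, GPT2LMHeadModel, GPT2Tokenizer, load_tf_weights_in_gpt2

    config = GPT2Config(vocab_size=hparams['n_vocab'], n_positions=hparams['n_ctx'],
                        n_embd=hparams['n_embd'], n_head=hparams['n_head'],
                        n_layer=hparams['n_layer'])
    model = GPT2LMHeadModel(config)
    # reads the latest TensorFlow checkpoint in run_dir into the PyTorch model
    load_tf_weights_in_gpt2(model, config, run_dir)
    model.save_pretrained(output_dir)

    # encoder.json / vocab.bpe are the vocabulary and merges files of the GPT-2 BPE tokenizer
    tokenizer = GPT2Tokenizer(vocab_file=os.path.join(run_dir, 'encoder.json'),
                              merges_file=os.path.join(run_dir, 'vocab.bpe'))
    tokenizer.save_pretrained(output_dir)
```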

Only the files inside the modules folder come from the original repositories, with slight modifications. All remaining files were written by me, except for some parts that are marked as referenced.

## Bugs
There are still some memory issues if you synthesize sentences over and over within one session, although it takes at least 10 runs to cause a memory overflow.
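Until the leak is tracked down, one mitigation worth trying (an untested assumption, not a confirmed fix) is to keep each model inside a `with` block, which `Voice` and `ImageAnimator` already support through `__enter__`/`__exit__`, and to run Python's garbage collector before asking PyTorch to release its cached GPU memory. The context manager below is a hypothetical helper showing that pattern; it falls back to `gc.collect()` alone when PyTorch or CUDA is unavailable.

```python
import gc
from contextlib import contextmanager
from typing import Iterator


def release_gpu_memory() -> None:
    """Collect unreachable objects, then release PyTorch's unused cached CUDA memory (sketch)."""
    gc.collect()  # break reference cycles that may still hold tensors
    try:
        import torch
    except ImportError:
        return
    if torch.cuda.is_available():
        torch.cuda.empty_cache()


@contextmanager
def gpu_scope() -> Iterator[None]:
    """Release memory when the block exits, even if it raises."""
    try:
        yield
    finally:
        release_gpu_memory()


# usage with the project's Voice class (needs the trained models, so shown as a comment):
# with gpu_scope(), Voice('Geralt') as voice:
#     wav = voice.synthesize('Hello there.')
```

Listing `gpu_scope()` first means `Voice.close()` runs before the memory is released on exit.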

## Training models
The preprocessing tools I created are in separate repositories, which can be found on my profile.

--------------------------------------------------------------------------------

/animator.py:

--------------------------------------------------------------------------------

```python
# SIU KING WAI SM4701 Deepstory
# mostly referenced from demo.py of first order model github repo, optimized loading in gpu vram
import imageio
import yaml
import torch
import torch.nn.functional as F
import numpy as np

from modules.fom import OcclusionAwareGenerator, KPDetector, DataParallelWithCallback, normalize_kp
device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")


class ImageAnimator:
    def __init__(self):
        self.config_path = 'data/fom/vox-256.yaml'
        self.checkpoint_path = 'data/fom/vox-cpk.pth.tar'
        self.generator = None
        self.kp_detector = None

    def __enter__(self):
        self.load()
        return self

    def __exit__(self, exc_type, exc_val, exc_tb):
        self.close()

    def load(self):
        with open(self.config_path) as f:
            # yaml.load() without a Loader fails on PyYAML >= 6; safe_load is enough for this config
            config = yaml.safe_load(f)

        self.generator = OcclusionAwareGenerator(**config['model_params']['generator_params'],
                                                 **config['model_params']['common_params']).to(device)

        self.kp_detector = KPDetector(**config['model_params']['kp_detector_params'],
                                      **config['model_params']['common_params']).to(device)

        # map_location lets a checkpoint saved on a GPU load on whichever device is available
        checkpoint = torch.load(self.checkpoint_path, map_location=device)

        self.generator.load_state_dict(checkpoint['generator'])
        self.kp_detector.load_state_dict(checkpoint['kp_detector'])

        del checkpoint

        self.generator = DataParallelWithCallback(self.generator)
        self.kp_detector = DataParallelWithCallback(self.kp_detector)

        self.generator.eval()
        self.kp_detector.eval()

    def close(self):
        del self.generator
        del self.kp_detector
        torch.cuda.empty_cache()

    def animate_image(self, source_image, driving_video, output_path, relative=True, adapt_movement_scale=True):
        with torch.no_grad():
            predictions = []
            # ====================================================================================
            # adapted from original to optimize memory load in gpu instead of cpu
            source_image = imageio.imread(source_image)
            # normalize color to float 0-1 (use the module-level device instead of hard-coded 'cuda')
            source = torch.from_numpy(source_image[np.newaxis].astype(np.float32)).to(device) / 255
            del source_image
            source = source.permute(0, 3, 1, 2)
            # resize
            source = F.interpolate(source, size=(256, 256), mode='area')

            # modified to fit speech driven animation
            driving = torch.from_numpy(driving_video).to(device) / 255
            del driving_video
            driving = F.interpolate(driving, scale_factor=2, mode='bilinear', align_corners=False)
            # pad the left and right side of the scaled 128x96->256x192 to fit 256x256
            driving = F.pad(input=driving, pad=(32, 32, 0, 0, 0, 0, 0, 0), mode='constant', value=0)
            driving = driving.permute(1, 0, 2, 3).unsqueeze(0)
            # ====================================================================================
            kp_source = self.kp_detector(source)
            kp_driving_initial = self.kp_detector(driving[:, :, 0])

            for frame_idx in range(driving.shape[2]):
                driving_frame = driving[:, :, frame_idx]
                kp_driving = self.kp_detector(driving_frame)
                kp_norm = normalize_kp(kp_source=kp_source, kp_driving=kp_driving,
                                       kp_driving_initial=kp_driving_initial, use_relative_movement=relative,
                                       use_relative_jacobian=relative, adapt_movement_scale=adapt_movement_scale)
                out = self.generator(source, kp_source=kp_source, kp_driving=kp_norm)
                out['prediction'] *= 255
                out['prediction'] = out['prediction'].byte()
                # predictions.append(out['prediction'][0].cpu().numpy())
                predictions.append(out['prediction'].permute(0, 2, 3, 1)[0].cpu().numpy())
            imageio.mimsave(output_path, predictions, fps=25)
```
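The driving-video preprocessing in `animate_image` is mostly shape bookkeeping: 128x96 frames from speech-driven animation are upscaled 2x to 256x192, padded with 32 zero columns on each side to 256x256, then rearranged from `(frames, channels, H, W)` into the `(1, channels, frames, H, W)` layout that the loop indexes with `driving[:, :, frame_idx]`. The NumPy sketch below reproduces just those shapes. It assumes the `(frames, channels, H, W)` input layout implied by the `permute(1, 0, 2, 3)` call, and it substitutes nearest-neighbour repetition for the bilinear interpolation in the real code; `prepare_driving` is a hypothetical name.

```python
import numpy as np


def prepare_driving(frames: np.ndarray) -> np.ndarray:
    """Shape-only sketch of animate_image's driving-video preprocessing.

    frames: uint8 array of shape (T, C, 128, 96).
    Returns a float32 array of shape (1, C, T, 256, 256) scaled to [0, 1].
    """
    driving = frames.astype(np.float32) / 255
    # 2x upscale (nearest-neighbour stand-in for F.interpolate(..., mode='bilinear'))
    driving = driving.repeat(2, axis=2).repeat(2, axis=3)          # (T, C, 256, 192)
    # zero-pad only the width, 32 columns left and right, like F.pad(pad=(32, 32, 0, 0, ...))
    driving = np.pad(driving, ((0, 0), (0, 0), (0, 0), (32, 32)))  # (T, C, 256, 256)
    # (T, C, H, W) -> (C, T, H, W) -> (1, C, T, H, W)
    return driving.transpose(1, 0, 2, 3)[np.newaxis]


video = np.full((10, 3, 128, 96), 255, dtype=np.uint8)  # 10 white frames
driving = prepare_driving(video)
print(driving.shape)  # (1, 3, 10, 256, 256)
```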

--------------------------------------------------------------------------------

/app.py:

--------------------------------------------------------------------------------

```python
# SIU KING WAI SM4701 Deepstory
from flask import Flask, render_template, request, make_response, send_from_directory
from deepstory import Deepstory
app = Flask(__name__)
deepstory = Deepstory()
print('Deepstory instance created')


def send_message(message, status=200):
    response = make_response(message, status)
    response.mimetype = "text/plain"
    return response


@app.route('/')
def index():
    return render_template('index.html', model_list=deepstory.model_list, gpt2_list=deepstory.gpt2_list,
                           image_dict=deepstory.image_dict, speaker_map=deepstory.speaker_map_dict)


@app.route('/map')
def map_page():
    return render_template('map.html', model_list=deepstory.model_list, speaker_map=deepstory.speaker_map_dict)


@app.route('/deepstory.js')
def deepstoryjs():
    response = make_response(render_template('deepstory.js'))
    response.mimetype = "text/javascript"
    return response


@app.route('/status')
def status():
    return render_template('status.html', synthsized=deepstory.is_synthesized,
                           combined=deepstory.is_processed, base=deepstory.is_base, animated=deepstory.is_animated)


@app.route('/gpt2')
def gpt2():
    return render_template('gpt2.html', gpt2=deepstory.current_gpt2, generated_text=deepstory.generated_text)


@app.route('/gen_sents')
def gen_sents():
    return render_template('gen_sentences.html', sentences=deepstory.generated_sentences)


@app.route('/sentences')
def sentences():
    return render_template('sentences.html',
                           sentences=deepstory.sentence_dicts, model_list=deepstory.model_list,
                           speaker_map=deepstory.speaker_map_dict)


@app.route('/load_text')
def load_text():
    model = request.args.get('model')
    lines_no = int(request.args.get('lines'))
    try:
        deepstory.load_text(model, lines_no)
        return send_message(f'{lines_no} in {model} loaded.')
    except FileNotFoundError:
        return send_message(f'Text file not found.', 403)


@app.route('/load_gpt2', methods=['GET'])
def load_gpt2():
    model = request.args.get('model')
    if deepstory.gpt2:
        if deepstory.current_gpt2 == model:
            return send_message(f'{model} is already loaded.', 403)
    deepstory.load_gpt2(model)
    return send_message(f'{model} loaded.')


@app.route('/generate_text', methods=['POST'])
def generate_text():
    if deepstory.gpt2:
        text = request.form.get('text')
        predict_length = int(request.form.get('predict_length'))
        top_p = float(request.form.get('top_p'))
        top_k = int(request.form.get('top_k'))
        temperature = float(request.form.get('temperature'))
        do_sample = bool(request.form.get('do_sample'))
        deepstory.generate_text_gpt2(text, predict_length, top_p, top_k, temperature, do_sample)
        return send_message(f'Generated.')
    else:
        return send_message(f'Please load a GPT2 model first.', 403)


@app.route('/generate_sents', methods=['POST'])
def generate_sents():
    if deepstory.gpt2:
        text = request.form.get('text')
        predict_length = int(request.form.get('predict_length'))
        top_p = float(request.form.get('top_p'))
        top_k = int(request.form.get('top_k'))
        temperature = float(request.form.get('temperature'))
        do_sample = bool(request.form.get('do_sample'))
        batches = int(request.form.get('batches'))
        max_sentences = int(request.form.get('max_sentences'))
        deepstory.generate_sents_gpt2(
            text, predict_length, top_p, top_k, temperature, do_sample, batches, max_sentences)
        return send_message(f'Generated.')
    else:
        return send_message(f'Please load a GPT2 model first.', 403)


@app.route('/add_sent', methods=['GET'])
def add_sent():
    sent_id = int(request.args.get('id'))
    deepstory.add_sent(sent_id)
    return send_message(f'Sentence {sent_id} added.')


@app.route('/load_sentence', methods=['POST'])
def load_sentence():
    text = request.form.get('text')
    speaker = request.form.get('speaker')
    if text:
        is_comma = bool(request.form.get('isComma'))
        is_chopped = bool(request.form.get('isChopped'))
        is_speaker = bool(request.form.get('isSpeaker'))
        force = bool(request.form.get('force'))
        n = int(request.form.get('n'))
        deepstory.parse_text(text,
                             n_gram=n,
                             default_speaker=speaker,
                             separate_comma=is_comma,
                             separate_sentence=is_chopped,
                             parse_speaker=is_speaker,
                             force_parse=force)
        return send_message(f'Sentences loaded.')
    else:
        return send_message('Please enter text.', 403)


@app.route('/animate', methods=['GET', 'POST'])
def animate():
    if request.method == 'POST':
        deepstory.animate_image(request.form)
        return send_message(f'Images animated.')
    elif request.method == 'GET':
        return render_template('animate.html',
                               image_dict=deepstory.image_dict,
                               loaded_speakers=deepstory.get_base_speakers())


@app.route('/modify', methods=['POST'])
def modify():
    deepstory.modify_speaker(request.json)
    return send_message(f'Speaker updated.')


@app.route('/clear')
def clear():
    deepstory.clear_cache()
    return send_message(f'Cache cleared.')


@app.route('/update_map', methods=['POST'])
def update_map():
    deepstory.update_mapping(request.form)
    return send_message(f'Mapping updated.')


@app.route('/synthesize')
def synthesize():
    if deepstory.sentence_dicts:
        try:
            deepstory.synthesize_wavs()
            return send_message(f'Sentences synthesized.')
        except FileNotFoundError:
            return send_message("One of the model doesn't exist, please modify to something else.", 403)
    else:
        return send_message('Please enter text.', 403)


@app.route('/combine')
def combine():
    try:
        deepstory.process_wavs()
        return send_message(f'Clip created.')
    except (KeyError, ValueError):
        return send_message('No audio is synthesized to be combined', 403)
    # except:
    #     return send_message('Unknown Error.', 403)


@app.route('/create_base')
def create_base():
    if deepstory.is_processed:
        deepstory.wav_to_vid()
        return send_message(f'Base video created.')
    else:
        return send_message('No audio is synthesized to be processed', 403)


@app.route("/wav/
```
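One subtlety in the handlers above: `bool(request.form.get(...))` is `True` for any non-empty string, including `'false'` and `'0'`, and `False` only when the field is missing or empty. That works if the front end simply omits unchecked options, but not if it posts an explicit false value. A stricter parser is sketched below; `parse_flag` is a hypothetical helper, not part of the project, and the accepted spellings are an assumption.

```python
from typing import Optional

TRUE_VALUES = {'1', 'true', 'on', 'yes'}


def parse_flag(value: Optional[str]) -> bool:
    """Interpret an HTML form value as a boolean (sketch).

    Missing fields and anything outside TRUE_VALUES count as False.
    """
    return value is not None and value.strip().lower() in TRUE_VALUES


# what the current handlers do, for comparison
print(bool('false'), parse_flag('false'))  # True False
print(bool(None), parse_flag(None))        # False False
print(bool('on'), parse_flag('on'))        # True True
```

In a handler this would read `do_sample = parse_flag(request.form.get('do_sample'))`. `'on'` is included because it is the value browsers submit for a checked checkbox that has no `value` attribute.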

## Folder structure
```
Deepstory
├── animator.py
├── app.py
├── data
│   ├── dctts
│   │   ├── Geralt
│   │   │   ├── ssrn.pth
│   │   │   └── t2m.pth
│   │   ├── LJ
│   │   │   ├── ssrn.pth
│   │   │   └── t2m.pth
│   │   └── Yennefer
│   │       ├── ssrn.pth
│   │       └── t2m.pth
│   ├── fom
│   │   ├── vox-256.yaml
│   │   ├── vox-adv-256.yaml
│   │   ├── vox-adv-cpk.pth.tar
│   │   └── vox-cpk.pth.tar
│   ├── gpt2
│   │   ├── Waiting for Godot
│   │   │   ├── config.json
│   │   │   ├── default.txt
│   │   │   ├── merges.txt
│   │   │   ├── pytorch_model.bin
│   │   │   ├── special_tokens_map.json
│   │   │   ├── text.txt
│   │   │   ├── tokenizer_config.json
│   │   │   └── vocab.json
│   │   └── Witcher Books
│   │       ├── config.json
│   │       ├── default.txt
│   │       ├── merges.txt
│   │       ├── pytorch_model.bin
│   │       ├── special_tokens_map.json
│   │       ├── text.txt
│   │       ├── tokenizer_config.json
│   │       └── vocab.json
│   ├── images
│   │   ├── Geralt
│   │   │   ├── 0.jpg
│   │   │   └── fx.jpg
│   │   └── Yennefer
│   │       ├── 0.jpg
│   │       ├── 1.jpg
│   │       ├── 2.jpg
│   │       ├── 3.jpg
│   │       ├── 4.jpg
│   │       └── 5.jpg
│   └── sda
│       ├── grid.dat
│       └── image.bmp
├── deepstory.py
├── generate.py
├── modules
│   ├── dctts
│   │   ├── audio.py
│   │   ├── hparams.py
│   │   ├── __init__.py
│   │   ├── layers.py
│   │   ├── ssrn.py
│   │   └── text2mel.py
│   ├── fom
│   │   ├── animate.py
│   │   ├── dense_motion.py
│   │   ├── generator.py
│   │   ├── __init__.py
│   │   ├── keypoint_detector.py
│   │   ├── sync_batchnorm
│   │   │   ├── batchnorm.py
│   │   │   ├── comm.py
│   │   │   ├── __init__.py
│   │   │   └── replicate.py
│   │   └── util.py
│   └── sda
│       ├── encoder_audio.py
│       ├── encoder_image.py
│       ├── img_generator.py
│       ├── __init__.py
│       ├── rnn_audio.py
│       ├── sda.py
│       └── utils.py
├── README.md
├── requirements.txt
├── static
│   ├── bootstrap
│   │   ├── css
│   │   │   └── bootstrap.min.css
│   │   └── js
│   │       └── bootstrap.min.js
│   ├── css
│   │   └── styles.css
│   └── js
│       └── jquery.min.js
├── templates
│   ├── animate.html
│   ├── deepstory.js
│   ├── gen_sentences.html
│   ├── gpt2.html
│   ├── index.html
│   ├── map.html
│   ├── models.html
│   ├── sentences.html
│   ├── status.html
│   └── video.html
├── test.py
├── text.txt
├── util.py
└── voice.py
```
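Because the app loads pretrained weights from fixed paths, checking the `data` folder before launching can save a failed run. The sketch below assumes only the layout shown in the tree: each voice folder under `data/dctts` holds `t2m.pth` and `ssrn.pth`, and `data/fom` holds the `vox-256.yaml` config and `vox-cpk.pth.tar` checkpoint that `animator.py` loads. The function `missing_model_files` is hypothetical and not part of the project.

```python
from pathlib import Path

# Files each DC-TTS voice folder is expected to contain (per the folder structure)
DCTTS_FILES = ("t2m.pth", "ssrn.pth")
# First-order-model files that ImageAnimator in animator.py loads by default
FOM_FILES = ("vox-256.yaml", "vox-cpk.pth.tar")


def missing_model_files(root="."):
    """Return expected model files (as paths relative to root) that do not exist."""
    root = Path(root)
    missing = []

    dctts = root / "data" / "dctts"
    voices = sorted(p.name for p in dctts.iterdir() if p.is_dir()) if dctts.is_dir() else []
    if not voices:
        # No voice folders at all: report the directory itself
        missing.append("data/dctts/")
    for voice in voices:
        for name in DCTTS_FILES:
            if not (dctts / voice / name).is_file():
                missing.append(f"data/dctts/{voice}/{name}")

    for name in FOM_FILES:
        if not (root / "data" / "fom" / name).is_file():
            missing.append(f"data/fom/{name}")
    return missing
```

Running `missing_model_files()` from the project root returns an empty list when every expected file is in place.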

## Complete project download
The complete project is available in the Google Drive version linked below. All the models (including Geralt and Yennefer) are included.

https://drive.google.com/drive/folders/1AxORLF-QFd2wSORzMOKlvCQSFhdZSODJ?usp=sharing

You must first download the spaCy English model.

Make sure FFmpeg is installed on your computer, along with the ffmpeg-python package.

To simplify things, a Google Colab version will be released soon.
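A quick way to confirm these prerequisites is to look for the `ffmpeg` executable on the `PATH` and for the Python packages on the import path. This is a minimal sketch: `check_prerequisites` is a hypothetical helper, and the model name `en_core_web_sm` is an assumption, since the README only says "the spaCy English model". Pip-installed spaCy models are importable packages, so `importlib.util.find_spec` can detect one without loading it; ffmpeg-python is imported as `ffmpeg`.

```python
import importlib.util
import shutil


def check_prerequisites(spacy_model="en_core_web_sm"):
    """Report which external prerequisites appear to be installed (True/False each)."""
    return {
        # The FFmpeg command-line tool must be reachable on PATH
        "ffmpeg binary": shutil.which("ffmpeg") is not None,
        # ffmpeg-python provides the importable module named "ffmpeg"
        "ffmpeg-python": importlib.util.find_spec("ffmpeg") is not None,
        "spacy": importlib.util.find_spec("spacy") is not None,
        # Assumed model name; spaCy models install as regular packages
        spacy_model: importlib.util.find_spec(spacy_model) is not None,
    }
```

If the model entry is `False`, `python -m spacy download en_core_web_sm` is one way to install that particular English model.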

## Requirements
An NVIDIA GPU with at least 4 GB of VRAM is required to run this project.
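To check a machine against this requirement, PyTorch reports the total memory of a CUDA device through `torch.cuda.get_device_properties(0).total_memory`. The sketch below uses hypothetical helper names and treats 4 GB as 4 × 10^9 bytes. That decimal threshold is an assumption: a card sold as "4 GB" can report slightly less than 4 GiB (4 × 2^30 bytes) of total memory.

```python
def has_enough_vram(total_bytes, min_gb=4.0):
    """True if total_bytes is at least min_gb decimal gigabytes (1 GB = 10**9 bytes)."""
    return total_bytes >= min_gb * 10**9


def detect_vram_bytes():
    """Total memory of CUDA device 0 in bytes, or None if PyTorch or CUDA is unavailable."""
    try:
        import torch
    except ImportError:
        return None
    if not torch.cuda.is_available():
        return None
    return torch.cuda.get_device_properties(0).total_memory
```

A caller would combine the two, for example `vram = detect_vram_bytes()` followed by `vram is not None and has_enough_vram(vram)`.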

## Credits
https://github.com/tugstugi/pytorch-dc-tts

https://github.com/DinoMan/speech-driven-animation

https://github.com/AliaksandrSiarohin/first-order-model

https://github.com/huggingface/transformers

## Notes
The whole project uses PyTorch. Although TensorFlow is listed in requirements.txt, it was only used by transformers to convert a model trained with gpt-2-simple into a PyTorch model.

Only the files inside the modules folder are slightly modified from the originals. All the remaining files were written by me, except for some parts that are referenced.

## Bugs
There are still some memory issues if you synthesize sentences repeatedly within a session, but it takes at least 10 runs to cause a memory overflow.

## Training models
The tools I created to preprocess the training files are in other repositories, which can be found on my profile.

--------------------------------------------------------------------------------

/animator.py:

--------------------------------------------------------------------------------

# SIU KING WAI SM4701 Deepstory
# Mostly adapted from demo.py in the first-order-model GitHub repo, with loading optimized for GPU VRAM
import imageio
import yaml
import torch
import torch.nn.functional as F
import numpy as np

from modules.fom import OcclusionAwareGenerator, KPDetector, DataParallelWithCallback, normalize_kp
device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")


class ImageAnimator:
    def __init__(self):
        self.config_path = 'data/fom/vox-256.yaml'
        self.checkpoint_path = 'data/fom/vox-cpk.pth.tar'
        self.generator = None
        self.kp_detector = None

    def __enter__(self):
        self.load()
        return self

    def __exit__(self, exc_type, exc_val, exc_tb):
        self.close()

    def load(self):
        with open(self.config_path) as f:
            # yaml.load() without an explicit Loader is deprecated and fails on PyYAML >= 6
            config = yaml.safe_load(f)

        self.generator = OcclusionAwareGenerator(**config['model_params']['generator_params'],
                                                 **config['model_params']['common_params']).to(device)

        self.kp_detector = KPDetector(**config['model_params']['kp_detector_params'],
                                      **config['model_params']['common_params']).to(device)

        # map_location loads the weights onto the same device as the models
        checkpoint = torch.load(self.checkpoint_path, map_location=device)

        self.generator.load_state_dict(checkpoint['generator'])
        self.kp_detector.load_state_dict(checkpoint['kp_detector'])

        # free the checkpoint's copy of the weights
        del checkpoint

        self.generator = DataParallelWithCallback(self.generator)
        self.kp_detector = DataParallelWithCallback(self.kp_detector)

        self.generator.eval()
        self.kp_detector.eval()

    def close(self):
        # drop the model references so their memory can be released, then empty the CUDA cache
        self.generator = None
        self.kp_detector = None
        torch.cuda.empty_cache()

    def animate_image(self, source_image, driving_video, output_path, relative=True, adapt_movement_scale=True):
        with torch.no_grad():
            predictions = []
            # ====================================================================================
            # adapted from the original to keep the tensors in GPU memory instead of CPU memory
            source_image = imageio.imread(source_image)
            # normalize color to float 0-1
            source = torch.from_numpy(source_image[np.newaxis].astype(np.float32)).to(device) / 255
            del source_image
            # NHWC -> NCHW
            source = source.permute(0, 3, 1, 2)
            # resize to the 256x256 input size of the vox model
            source = F.interpolate(source, size=(256, 256), mode='area')

            # modified to fit the speech-driven animation output, shaped (frames, C, H, W)
            driving = torch.from_numpy(driving_video).to(device) / 255
            del driving_video
            driving = F.interpolate(driving, scale_factor=2, mode='bilinear', align_corners=False)
            # pad the left and right sides of the scaled 128x96 -> 256x192 frames to fit 256x256
            driving = F.pad(input=driving, pad=(32, 32, 0, 0, 0, 0, 0, 0), mode='constant', value=0)
            # (frames, C, H, W) -> (1, C, frames, H, W)
            driving = driving.permute(1, 0, 2, 3).unsqueeze(0)
            # ====================================================================================
            kp_source = self.kp_detector(source)
            kp_driving_initial = self.kp_detector(driving[:, :, 0])

            for frame_idx in range(driving.shape[2]):
                driving_frame = driving[:, :, frame_idx]
                kp_driving = self.kp_detector(driving_frame)
                kp_norm = normalize_kp(kp_source=kp_source, kp_driving=kp_driving,
                                       kp_driving_initial=kp_driving_initial, use_relative_movement=relative,
                                       use_relative_jacobian=relative, adapt_movement_scale=adapt_movement_scale)
                out = self.generator(source, kp_source=kp_source, kp_driving=kp_norm)
                # convert the float 0-1 prediction back to 8-bit pixel values
                out['prediction'] *= 255
                out['prediction'] = out['prediction'].byte()
                # predictions.append(out['prediction'][0].cpu().numpy())
                # NCHW -> HWC for imageio
                predictions.append(out['prediction'].permute(0, 2, 3, 1)[0].cpu().numpy())
            imageio.mimsave(output_path, predictions, fps=25)
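The resize-and-pad step in `animate_image` is easy to misread, so here is a small pure-Python sketch of its shape arithmetic. It assumes, as the code comment implies, that speech-driven animation frames are 128 pixels high and 96 wide. `padded_frame_shape` is a hypothetical helper that reproduces only the sizes produced by `F.interpolate(scale_factor=2)` followed by symmetric width padding, not the tensor operations themselves.

```python
def padded_frame_shape(height, width, scale=2, target=256):
    """Return ((H, W) after scaling and padding, padding per side) for a square target.

    Mirrors ImageAnimator.animate_image: scale both sides, then pad the left and
    right edges equally so the width matches the target size.
    """
    h, w = height * scale, width * scale
    if h != target:
        raise ValueError(f"scaled height {h} does not match target {target}")
    total_pad = target - w
    if total_pad < 0 or total_pad % 2:
        raise ValueError(f"cannot pad width {w} symmetrically to {target}")
    side = total_pad // 2
    return (h, w + 2 * side), side
```

For 128x96 frames this gives 256x192 after scaling and 32 columns of padding on each side, which matches the `pad=(32, 32, ...)` argument passed to `F.pad`.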

--------------------------------------------------------------------------------

/app.py:

--------------------------------------------------------------------------------

# SIU KING WAI SM4701 Deepstory
from flask import Flask, render_template, request, make_response, send_from_directory
from deepstory import Deepstory
app = Flask(__name__)
deepstory = Deepstory()
print('Deepstory instance created')


def send_message(message, status=200):
    response = make_response(message, status)
    response.mimetype = "text/plain"
    return response


@app.route('/')
def index():
    return render_template('index.html', model_list=deepstory.model_list, gpt2_list=deepstory.gpt2_list,
                           image_dict=deepstory.image_dict, speaker_map=deepstory.speaker_map_dict)


@app.route('/map')
def map_page():
    return render_template('map.html', model_list=deepstory.model_list, speaker_map=deepstory.speaker_map_dict)


@app.route('/deepstory.js')
def deepstoryjs():
    response = make_response(render_template('deepstory.js'))
    response.mimetype = "text/javascript"
    return response


@app.route('/status')
def status():
    return render_template('status.html', synthsized=deepstory.is_synthesized,
                           combined=deepstory.is_processed, base=deepstory.is_base, animated=deepstory.is_animated)


@app.route('/gpt2')
def gpt2():
    return render_template('gpt2.html', gpt2=deepstory.current_gpt2, generated_text=deepstory.generated_text)


@app.route('/gen_sents')
def gen_sents():
    return render_template('gen_sentences.html', sentences=deepstory.generated_sentences)


@app.route('/sentences')
def sentences():
    return render_template('sentences.html',
                           sentences=deepstory.sentence_dicts, model_list=deepstory.model_list,
                           speaker_map=deepstory.speaker_map_dict)


@app.route('/load_text')
def load_text():
    model = request.args.get('model')
    lines_no = int(request.args.get('lines'))
    try:
        deepstory.load_text(model, lines_no)
        return send_message(f'{lines_no} lines in {model} loaded.')
    except FileNotFoundError:
        return send_message('Text file not found.', 403)


@app.route('/load_gpt2', methods=['GET'])
def load_gpt2():
    model = request.args.get('model')
    if deepstory.gpt2:
        if deepstory.current_gpt2 == model:
            return send_message(f'{model} is already loaded.', 403)
    deepstory.load_gpt2(model)
    return send_message(f'{model} loaded.')


@app.route('/generate_text', methods=['POST'])
def generate_text():
    if deepstory.gpt2:
        text = request.form.get('text')
        predict_length = int(request.form.get('predict_length'))
        top_p = float(request.form.get('top_p'))
        top_k = int(request.form.get('top_k'))
        temperature = float(request.form.get('temperature'))
        # any non-empty value counts as True; a missing field gives False
        do_sample = bool(request.form.get('do_sample'))
        deepstory.generate_text_gpt2(text, predict_length, top_p, top_k, temperature, do_sample)
        return send_message('Generated.')
    else:
        return send_message('Please load a GPT2 model first.', 403)


@app.route('/generate_sents', methods=['POST'])
def generate_sents():
    if deepstory.gpt2:
        text = request.form.get('text')
        predict_length = int(request.form.get('predict_length'))
        top_p = float(request.form.get('top_p'))
        top_k = int(request.form.get('top_k'))
        temperature = float(request.form.get('temperature'))
        # any non-empty value counts as True; a missing field gives False
        do_sample = bool(request.form.get('do_sample'))
        batches = int(request.form.get('batches'))
        max_sentences = int(request.form.get('max_sentences'))
        deepstory.generate_sents_gpt2(
            text, predict_length, top_p, top_k, temperature, do_sample, batches, max_sentences)
        return send_message('Generated.')
    else:
        return send_message('Please load a GPT2 model first.', 403)


@app.route('/add_sent', methods=['GET'])
def add_sent():
    sent_id = int(request.args.get('id'))
    deepstory.add_sent(sent_id)
    return send_message(f'Sentence {sent_id} added.')


@app.route('/load_sentence', methods=['POST'])
def load_sentence():
    text = request.form.get('text')
    speaker = request.form.get('speaker')
    if text:
        is_comma = bool(request.form.get('isComma'))
        is_chopped = bool(request.form.get('isChopped'))
        is_speaker = bool(request.form.get('isSpeaker'))
        force = bool(request.form.get('force'))
        n = int(request.form.get('n'))
        deepstory.parse_text(text,
                             n_gram=n,
                             default_speaker=speaker,
                             separate_comma=is_comma,
                             separate_sentence=is_chopped,
                             parse_speaker=is_speaker,
                             force_parse=force)
        return send_message('Sentences loaded.')
    else:
        return send_message('Please enter text.', 403)


@app.route('/animate', methods=['GET', 'POST'])
def animate():
    if request.method == 'POST':
        deepstory.animate_image(request.form)
        return send_message('Images animated.')
    elif request.method == 'GET':
        return render_template('animate.html',
                               image_dict=deepstory.image_dict,
                               loaded_speakers=deepstory.get_base_speakers())


@app.route('/modify', methods=['POST'])
def modify():
    deepstory.modify_speaker(request.json)
    return send_message('Speaker updated.')


@app.route('/clear')
def clear():
    deepstory.clear_cache()
    return send_message('Cache cleared.')


@app.route('/update_map', methods=['POST'])
def update_map():
    deepstory.update_mapping(request.form)
    return send_message('Mapping updated.')


@app.route('/synthesize')
def synthesize():
    if deepstory.sentence_dicts:
        try:
            deepstory.synthesize_wavs()
            return send_message('Sentences synthesized.')
        except FileNotFoundError:
            return send_message("One of the models doesn't exist; please change it to another one.", 403)
    else:
        return send_message('Please enter text.', 403)


@app.route('/combine')
def combine():
    try:
        deepstory.process_wavs()
        return send_message('Clip created.')
    except (KeyError, ValueError):
        return send_message('No audio is synthesized to be combined', 403)
    # except:
    #     return send_message('Unknown Error.', 403)


@app.route('/create_base')
def create_base():
    if deepstory.is_processed:
        deepstory.wav_to_vid()
        return send_message('Base video created.')
    else:
        return send_message('No audio is synthesized to be processed', 403)


@app.route("/wav/