get access to advanced cloud features like transparent bottomless object storage

5 |

don't waste time running high performance, highly available TimescaleDB and PostgreSQL in the cloud

6 |

7 |

8 |

9 |

--------------------------------------------------------------------------------

/_partials/_consider-cloud.md:

--------------------------------------------------------------------------------

1 |

2 | Timescale is a fully managed service with automatic backup and restore, high

3 | availability with replication, seamless scaling and resizing, and much more. You

4 | can try Timescale free for thirty days.

5 |

6 |

--------------------------------------------------------------------------------

/_partials/_create-hypertable-energy.md:

--------------------------------------------------------------------------------

1 | import OldCreateHypertable from "versionContent/_partials/_old-api-create-hypertable.mdx";

2 | import HypertableIntro from "versionContent/_partials/_tutorials_hypertable_intro.mdx";

3 |

4 | ## Optimize time-series data in hypertables

5 |

6 |

7 |

8 |

9 |

10 | 1. To create a $HYPERTABLE to store the energy consumption data, call [CREATE TABLE][hypertable-create-table].

11 |

12 | ```sql

13 | CREATE TABLE "metrics"(

14 | created timestamp with time zone default now() not null,

15 | type_id integer not null,

16 | value double precision not null

17 | ) WITH (

18 | tsdb.hypertable,

19 | tsdb.partition_column='time'

20 | );

21 | ```

22 |

23 |

24 |

25 |

26 |

27 |

28 | [hypertable-create-table]: /api/:currentVersion:/hypertable/create_table/

29 | [indexing]: /use-timescale/:currentVersion:/schema-management/indexing/

30 |

--------------------------------------------------------------------------------

/_partials/_data_model_metadata.md:

--------------------------------------------------------------------------------

1 |

2 | You might also notice that the metadata fields are missing. Because this is a

3 | relational database, metadata can be stored in a secondary table and `JOIN`ed at

4 | query time. Learn more about [Timescale's support for `JOIN`s](#joins-with-relational-data).

5 |

6 |

--------------------------------------------------------------------------------

/_partials/_datadog-data-exporter.md:

--------------------------------------------------------------------------------

1 |

2 |

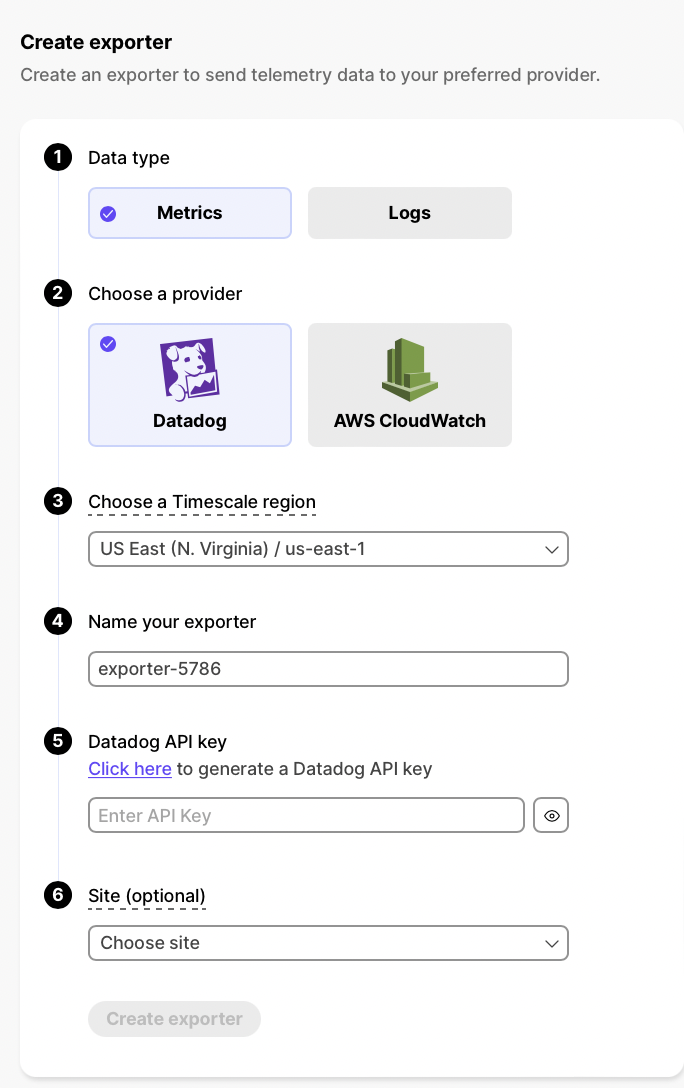

3 | 1. **In $CONSOLE, open [Exporters][console-integrations]**

4 | 1. **Click `New exporter`**

5 | 1. **Select `Metrics` for `Data type` and `Datadog` for provider**

6 |

7 |

8 |

9 | 1. **Choose your AWS region and provide the API key**

10 |

11 | The AWS region must be the same for your $CLOUD_LONG exporter and the Datadog provider.

12 |

13 | 1. **Set `Site` to your Datadog region, then click `Create exporter`**

14 |

15 |

16 |

17 | [console-integrations]: https://console.cloud.timescale.com/dashboard/integrations

--------------------------------------------------------------------------------

/_partials/_deprecated.md:

--------------------------------------------------------------------------------

1 |

2 |

3 | This section describes a feature that is deprecated on Timescale. We strongly

4 | recommend that you do not use this feature in a production environment. If you

5 | need more information, [contact us](https://www.timescale.com/contact/).

6 |

7 |

8 |

--------------------------------------------------------------------------------

/_partials/_deprecated_2_18_0.md:

--------------------------------------------------------------------------------

1 | Old API since [TimescaleDB v2.18.0](https://github.com/timescale/timescaledb/releases/tag/2.18.0)

2 |

--------------------------------------------------------------------------------

/_partials/_deprecated_2_20_0.md:

--------------------------------------------------------------------------------

1 | Old API since [TimescaleDB v2.20.0](https://github.com/timescale/timescaledb/releases/tag/2.20.0)

2 |

--------------------------------------------------------------------------------

/_partials/_deprecated_2_21_0.md:

--------------------------------------------------------------------------------

1 | Deprecated since TimescaleDB v2.21.0

2 |

--------------------------------------------------------------------------------

/_partials/_early_access.md:

--------------------------------------------------------------------------------

1 | Early access

2 |

--------------------------------------------------------------------------------

/_partials/_early_access_2_18_0.md:

--------------------------------------------------------------------------------

1 | Early access: TimescaleDB v2.18.0

2 |

--------------------------------------------------------------------------------

/_partials/_experimental-private-beta.md:

--------------------------------------------------------------------------------

1 |

2 | This feature is experimental and offered as part of a private beta. Do not use

3 | this feature in production.

4 |

5 |

--------------------------------------------------------------------------------

/_partials/_experimental-schema-upgrade.md:

--------------------------------------------------------------------------------

1 |

2 | When you upgrade the `timescaledb` extension, the experimental schema is removed

3 | by default. To use experimental features after an upgrade, you need to add the

4 | experimental schema again.

5 |

6 |

--------------------------------------------------------------------------------

/_partials/_experimental.md:

--------------------------------------------------------------------------------

1 |

2 | Experimental features could have bugs. They might not be backwards compatible,

3 | and could be removed in future releases. Use these features at your own risk, and

4 | do not use any experimental features in production.

5 |

6 |

--------------------------------------------------------------------------------

/_partials/_financial-industry-data-analysis.md:

--------------------------------------------------------------------------------

1 | The financial industry is extremely data-heavy and relies on real-time and historical data for decision-making, risk assessment, fraud detection, and market analysis. Timescale simplifies management of these large volumes of data, while also providing you with meaningful analytical insights and optimizing storage costs.

--------------------------------------------------------------------------------

/_partials/_grafana-viz-prereqs.md:

--------------------------------------------------------------------------------

1 | Before you begin, make sure you have:

2 |

3 | * Created a [Timescale][cloud-login] service.

4 | * Installed a self-managed Grafana account, or signed up for

5 | [Grafana Cloud][install-grafana].

6 | * Ingested some data to your database. You can use the stock trade data from

7 | the [Getting Started Guide][gsg-data].

8 |

9 | The examples in this section use these variables and Grafana functions:

10 |

11 | * `$symbol`: a variable used to filter results by stock symbols.

12 | * `$__timeFrom()::timestamptz` & `$__timeTo()::timestamptz`:

13 | Grafana variables. You change the values of these variables by

14 | using the dashboard's date chooser when viewing your graph.

15 | * `$bucket_interval`: the interval size to pass to the `time_bucket`

16 | function when aggregating data.

17 |

18 | [install-grafana]: https://grafana.com/get/

19 | [gsg-data]: /getting-started/:currentVersion:/

20 | [cloud-login]: https://console.cloud.timescale.com/

21 |

--------------------------------------------------------------------------------

/_partials/_high-availability-setup.md:

--------------------------------------------------------------------------------

1 |

2 |

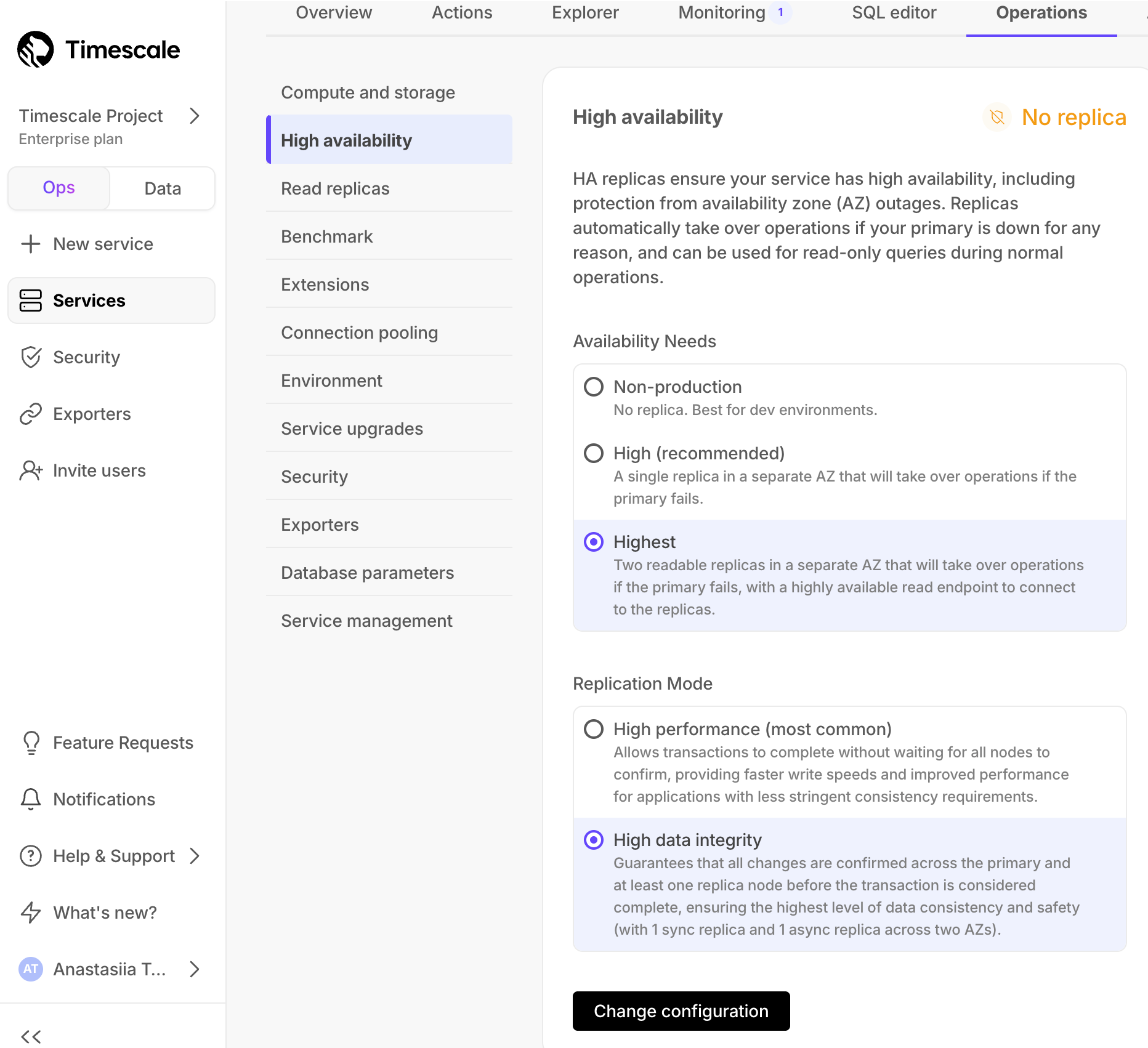

3 | 1. In [Timescale Console][cloud-login], select the service to enable replication for.

4 | 1. Click `Operations`, then select `High availability`.

5 | 1. Choose your replication strategy, then click `Change configuration`.

6 |

7 |

8 |

9 | 1. In `Change high availability configuration`, click `Change config`.

10 |

11 |

12 |

13 | [cloud-login]: https://console.cloud.timescale.com

14 |

--------------------------------------------------------------------------------

/_partials/_hypercore-conversion-overview.md:

--------------------------------------------------------------------------------

1 | When you convert chunks from the rowstore to the columnstore, multiple records are grouped into a single row.

2 | The columns of this row hold an array-like structure that stores all the data. For example, data in the following

3 | rowstore chunk:

4 |

5 | | Timestamp | Device ID | Device Type | CPU |Disk IO|

6 | |---|---|---|---|---|

7 | |12:00:01|A|SSD|70.11|13.4|

8 | |12:00:01|B|HDD|69.70|20.5|

9 | |12:00:02|A|SSD|70.12|13.2|

10 | |12:00:02|B|HDD|69.69|23.4|

11 | |12:00:03|A|SSD|70.14|13.0|

12 | |12:00:03|B|HDD|69.70|25.2|

13 |

14 | Is converted and compressed into arrays in a row in the columnstore:

15 |

16 | |Timestamp|Device ID|Device Type|CPU|Disk IO|

17 | |-|-|-|-|-|

18 | |[12:00:01, 12:00:01, 12:00:02, 12:00:02, 12:00:03, 12:00:03]|[A, B, A, B, A, B]|[SSD, HDD, SSD, HDD, SSD, HDD]|[70.11, 69.70, 70.12, 69.69, 70.14, 69.70]|[13.4, 20.5, 13.2, 23.4, 13.0, 25.2]|

19 |

20 | Because a single row takes up less disk space, you can reduce your chunk size by more than 90%, and can also

21 | speed up your queries. This saves on storage costs, and keeps your queries operating at lightning speed.

22 |

--------------------------------------------------------------------------------

/_partials/_hypershift-alternatively.md:

--------------------------------------------------------------------------------

1 |

2 | Alternatively, if you have data in an existing database, you can migrate it

3 | directly into your new Timescale database using hypershift. For more information

4 | about hypershift, including instructions for how to migrate your data, see the

5 | [hypershift documentation](https://docs.timescale.com/use-timescale/latest/migration/).

6 |

7 |

--------------------------------------------------------------------------------

/_partials/_hypershift-intro.md:

--------------------------------------------------------------------------------

1 | You can use hypershift to migrate existing PostgreSQL databases in one step, and

2 | enable compression and create hypertables instantly.

3 |

4 | Use Hypershift to migrate your data to Timescale from these sources:

5 |

6 | * Standard PostgreSQL databases

7 | * Amazon RDS databases

8 | * Other Timescale databases, including Managed Service for TimescaleDB, and

9 | self-hosted TimescaleDB

10 |

11 |

--------------------------------------------------------------------------------

/_partials/_integration-debezium-cloud-config-service.md:

--------------------------------------------------------------------------------

1 |

2 |

3 | 1. **Connect to your $SERVICE_LONG**

4 |

5 | For $CLOUD_LONG, open an [SQL editor][run-queries] in [$CONSOLE][open-console]. For self-hosted, use [`psql`][psql].

6 |

7 | 1. **Enable logical replication for your $SERVICE_LONG**

8 |

9 | 1. Run the following command to enable logical replication:

10 |

11 | ```sql

12 | ALTER SYSTEM SET wal_level = logical;

13 | SELECT pg_reload_conf();

14 | ```

15 |

16 | 1. Restart your $SERVICE_SHORT.

17 |

18 | 1. **Create a table**

19 |

20 | Create a table to test the integration. For example:

21 |

22 | ```sql

23 | CREATE TABLE sensor_data (

24 | id SERIAL PRIMARY KEY,

25 | device_id TEXT NOT NULL,

26 | temperature FLOAT NOT NULL,

27 | recorded_at TIMESTAMPTZ DEFAULT now()

28 | );

29 | ```

30 |

--------------------------------------------------------------------------------

/_partials/_integration-prereqs-cloud-only.md:

--------------------------------------------------------------------------------

1 |

2 | To follow the steps on this page:

3 |

4 | * Create a target [$SERVICE_LONG][create-service] with time-series and analytics enabled.

5 |

6 | You need your [connection details][connection-info].

7 |

8 |

9 | [create-service]: /getting-started/:currentVersion:/services/

10 | [connection-info]: /integrations/:currentVersion:/find-connection-details/

11 |

--------------------------------------------------------------------------------

/_partials/_integration-prereqs-self-only.md:

--------------------------------------------------------------------------------

1 |

2 | To follow the steps on this page:

3 |

4 | * Create a target [self-hosted $TIMESCALE_DB][enable-timescaledb] instance.

5 |

6 |

7 | [enable-timescaledb]: /self-hosted/:currentVersion:/install/

8 |

--------------------------------------------------------------------------------

/_partials/_integration-prereqs.md:

--------------------------------------------------------------------------------

1 | To follow the steps on this page:

2 |

3 | * Create a target [$SERVICE_LONG][create-service] with time-series and analytics enabled.

4 |

5 | You need [your connection details][connection-info]. This procedure also

6 | works for [$SELF_LONG][enable-timescaledb].

7 |

8 | [create-service]: /getting-started/:currentVersion:/services/

9 | [enable-timescaledb]: /self-hosted/:currentVersion:/install/

10 | [connection-info]: /integrations/:currentVersion:/find-connection-details/

11 |

--------------------------------------------------------------------------------

/_partials/_kubernetes-prereqs.md:

--------------------------------------------------------------------------------

1 | - Install [self-managed Kubernetes][kubernetes-install] or sign up for a Kubernetes [Turnkey Cloud Solution][kubernetes-managed].

2 | - Install [kubectl][kubectl] for command-line interaction with your cluster.

3 |

4 | [kubernetes-install]: https://kubernetes.io/docs/setup/

5 | [kubectl]: https://kubernetes.io/docs/tasks/tools/

6 | [kubernetes-managed]: https://kubernetes.io/docs/setup/production-environment/turnkey-solutions/

--------------------------------------------------------------------------------

/_partials/_livesync-limitations.md:

--------------------------------------------------------------------------------

1 | * Schema changes must be co-ordinated.

2 |

3 | Make compatible changes to the schema in your $SERVICE_LONG first, then make

4 | the same changes to the source PostgreSQL instance.

5 | * Ensure that the source $PG instance and the target $SERVICE_LONG have the same extensions installed.

6 |

7 | $LIVESYNC_CAP does not create extensions on the target. If the table uses column types from an extension,

8 | first create the extension on the target $SERVICE_LONG before syncing the table.

9 | * There is WAL volume growth on the source PostgreSQL instance during large table copy.

10 | * This works for PostgreSQL databases only as source. TimescaleDB is not yet supported.

11 |

--------------------------------------------------------------------------------

/_partials/_migrate_dual_write_6e_turn_on_compression_policies.md:

--------------------------------------------------------------------------------

1 | ### 6e. Enable policies that compress data in the target hypertable

2 |

3 | In the following command, replace `` with the fully qualified table

4 | name of the target hypertable, for example `public.metrics`:

5 |

6 | ```bash

7 | psql -d $TARGET -f -v hypertable= - <<'EOF'

8 | SELECT public.alter_job(j.id, scheduled=>true)

9 | FROM _timescaledb_config.bgw_job j

10 | JOIN _timescaledb_catalog.hypertable h ON h.id = j.hypertable_id

11 | WHERE j.proc_schema IN ('_timescaledb_internal', '_timescaledb_functions')

12 | AND j.proc_name = 'policy_compression'

13 | AND j.id >= 1000

14 | AND format('%I.%I', h.schema_name, h.table_name)::text::regclass = :'hypertable'::text::regclass;

15 | EOF

16 | ```

17 |

--------------------------------------------------------------------------------

/_partials/_migrate_dual_write_backfill_getting_help.md:

--------------------------------------------------------------------------------

1 | import OpenSupportRequest from "versionContent/_partials/_migrate_open_support_request.mdx"

2 |

3 |

4 |

5 | If you get stuck, you can get help by either opening a support request, or take

6 | your issue to the `#migration` channel in the [community slack](https://slack.timescale.com/),

7 | where the developers of this migration method are there to help.

8 |

9 |

10 |

11 |

12 |

--------------------------------------------------------------------------------

/_partials/_migrate_dual_write_step1.md:

--------------------------------------------------------------------------------

1 | import OpenSupportRequest from "versionContent/_partials/_migrate_open_support_request.mdx"

2 |

3 | ## 1. Set up a target database instance in Timescale

4 |

5 | [Create a database service in Timescale][create-service].

6 |

7 | If you intend on migrating more than 400 GB, open a support request to

8 | ensure that enough disk is pre-provisioned on your Timescale instance.

9 |

10 |

11 |

12 | [create-service]: /getting-started/:currentVersion:/services/

13 |

--------------------------------------------------------------------------------

/_partials/_migrate_dual_write_step4.md:

--------------------------------------------------------------------------------

1 | ## 4. Start application in dual-write mode

2 |

3 | With the target database set up, your application can now be started in

4 | dual-write mode.

5 |

--------------------------------------------------------------------------------

/_partials/_migrate_dual_write_switch_production_workload.md:

--------------------------------------------------------------------------------

1 | Once you've validated that all the data is present, and that the target

2 | database can handle the production workload, the final step is to switch to the

3 | target database as your primary. You may want to continue writing to the source

4 | database for a period, until you are certain that the target database is

5 | holding up to all production traffic.

6 |

--------------------------------------------------------------------------------

/_partials/_migrate_dual_write_validate_production_load.md:

--------------------------------------------------------------------------------

1 | Now that dual-writes have been in place for a while, the target database should

2 | be holding up to production write traffic. Now would be the right time to

3 | determine if the target database can serve all production traffic (both reads

4 | _and_ writes). How exactly this is done is application-specific and up to you

5 | to determine.

6 |

7 |

--------------------------------------------------------------------------------

/_partials/_migrate_dump_awsrds.md:

--------------------------------------------------------------------------------

1 | import MigrateAWSRDSConnectIntermediary from "versionContent/_partials/_migrate_awsrds_connect_intermediary.mdx";

2 | import MigrateAWSRDSMigrateData from "versionContent/_partials/_migrate_awsrds_migrate_data_downtime.mdx";

3 | import MigrationValidateRestartApp from "versionContent/_partials/_migrate_validate_and_restart_app.mdx";

4 |

5 |

6 |

7 | ## Migrate your data to your Timescale Cloud service

8 |

9 | To securely migrate data from your RDS instance:

10 |

11 |

12 |

13 |

14 | ## Validate your Timescale Cloud service and restart your app

15 |

16 |

17 |

18 |

19 |

20 |

--------------------------------------------------------------------------------

/_partials/_migrate_explain_pg_dump_flags.md:

--------------------------------------------------------------------------------

1 | - `--no-tablespaces` is required because Timescale does not support

2 | tablespaces other than the default. This is a known limitation.

3 |

4 | - `--no-owner` is required because Timescale's `tsdbadmin` user is not a

5 | superuser and cannot assign ownership in all cases. This flag means that

6 | everything is owned by the user used to connect to the target, regardless of

7 | ownership in the source. This is a known limitation.

8 |

9 | - `--no-privileges` is required because Timescale's `tsdbadmin` user is not a

10 | superuser and cannot assign privileges in all cases. This flag means that

11 | privileges assigned to other users must be reassigned in the target database

12 | as a manual clean-up task. This is a known limitation.

13 |

--------------------------------------------------------------------------------

/_partials/_migrate_from_timescaledb_version.md:

--------------------------------------------------------------------------------

1 | It is very important that the version of the TimescaleDB extension is the same

2 | in the source and target databases. This requires upgrading the TimescaleDB

3 | extension in the source database before migrating.

4 |

5 | You can determine the version of TimescaleDB in the target database with the

6 | following command:

7 |

8 | ```bash

9 | psql $TARGET -c "SELECT extversion FROM pg_extension WHERE extname = 'timescaledb';"

10 | ```

11 |

12 | To update the TimescaleDB extension in your source database, first ensure that

13 | the desired version is installed from your package repository. Then you can

14 | upgrade the extension with the following query:

15 |

16 | ```bash

17 | psql $SOURCE -c "ALTER EXTENSION timescaledb UPDATE TO '';"

18 | ```

19 |

20 | For more information and guidance, consult the [Upgrade TimescaleDB] page.

21 |

22 | [Upgrade TimescaleDB]: https://docs.timescale.com/self-hosted/latest/upgrades/

23 |

--------------------------------------------------------------------------------

/_partials/_migrate_import_setup_connection_strings_parquet.md:

--------------------------------------------------------------------------------

1 | This variable hold the connection information for the target Timescale Cloud service.

2 |

3 | In Terminal on the source machine, set the following:

4 |

5 | ```bash

6 | export TARGET=postgres://tsdbadmin:@:/tsdb?sslmode=require

7 | ```

8 | You find the connection information for your Timescale Cloud service in the configuration file you

9 | downloaded when you created the service.

10 |

--------------------------------------------------------------------------------

/_partials/_migrate_live_migrate_data.md:

--------------------------------------------------------------------------------

1 | import MigrateData from "versionContent/_partials/_migrate_live_run_live_migration.mdx";

2 | import CleanupData from "versionContent/_partials/_migrate_live_run_cleanup.mdx";

3 |

4 | ## Migrate your data, then start downtime

5 |

6 |

7 |

8 |

9 |

10 |

11 | [modify-parameters]: /use-timescale/:currentVersion/configuration/customize-configuration/#modify-basic-parameters

12 | [mst-portal]: https://portal.managed.timescale.com/login

13 | [tsc-portal]: https://console.cloud.timescale.com/

14 | [configure-instance-parameters]: /use-timescale/:currentVersion/configuration/customize-configuration/#configure-database-parameters

--------------------------------------------------------------------------------

/_partials/_migrate_live_migrate_data_timescaledb.md:

--------------------------------------------------------------------------------

1 | import MigrateDataTimescaleDB from "versionContent/_partials/_migrate_live_run_live_migration_timescaledb.mdx";

2 |

3 |

4 | ## Migrate your data, then start downtime

5 |

6 |

7 |

8 |

9 |

10 |

11 |

--------------------------------------------------------------------------------

/_partials/_migrate_live_migration_cleanup.md:

--------------------------------------------------------------------------------

1 | To clean up resources associated with live migration, use the following command:

2 |

3 | ```sh

4 | docker run --rm -it --name live-migration-clean \

5 | -e PGCOPYDB_SOURCE_PGURI=$SOURCE \

6 | -e PGCOPYDB_TARGET_PGURI=$TARGET \

7 | --pid=host \

8 | -v ~/live-migration:/opt/timescale/ts_cdc \

9 | timescale/live-migration:latest clean --prune

10 | ```

11 |

12 | The `--prune` flag is used to delete temporary files in the `~/live-migration` directory

13 | that were needed for the migration process. It's important to note that executing the

14 | `clean` command means you cannot resume the interrupted live migration.

15 |

--------------------------------------------------------------------------------

/_partials/_migrate_live_run_cleanup.md:

--------------------------------------------------------------------------------

1 | 1. **Validate the migrated data**

2 |

3 | The contents of both databases should be the same. To check this you could compare

4 | the number of rows, or an aggregate of columns. However, the best validation method

5 | depends on your app.

6 |

7 | 1. **Stop app downtime**

8 |

9 | Once you are confident that your data is successfully replicated, configure your apps

10 | to use your Timescale Cloud service.

11 |

12 | 1. **Cleanup resources associated with live-migration from your migration machine**

13 |

14 | This command removes all resources and temporary files used in the migration process.

15 | When you run this command, you can no longer resume live-migration.

16 |

17 | ```shell

18 | docker run --rm -it --name live-migration-clean \

19 | -e PGCOPYDB_SOURCE_PGURI=$SOURCE \

20 | -e PGCOPYDB_TARGET_PGURI=$TARGET \

21 | --pid=host \

22 | -v ~/live-migration:/opt/timescale/ts_cdc \

23 | timescale/live-migration:latest clean --prune

24 | ```

25 |

--------------------------------------------------------------------------------

/_partials/_migrate_live_run_cleanup_postgres.md:

--------------------------------------------------------------------------------

1 | 1. **Validate the migrated data**

2 |

3 | The contents of both databases should be the same. To check this you could compare

4 | the number of rows, or an aggregate of columns. However, the best validation method

5 | depends on your app.

6 |

7 | 1. **Stop app downtime**

8 |

9 | Once you are confident that your data is successfully replicated, configure your apps

10 | to use your Timescale Cloud service.

11 |

12 | 1. **Cleanup resources associated with live-migration from your migration machine**

13 |

14 | This command removes all resources and temporary files used in the migration process.

15 | When you run this command, you can no longer resume live-migration.

16 |

17 | ```shell

18 | docker run --rm -it --name live-migration-clean \

19 | -e PGCOPYDB_SOURCE_PGURI=$SOURCE \

20 | -e PGCOPYDB_TARGET_PGURI=$TARGET \

21 | --pid=host \

22 | -v ~/live-migration:/opt/timescale/ts_cdc \

23 | timescale/live-migration:latest1 clean --prune

24 | ```

25 |

--------------------------------------------------------------------------------

/_partials/_migrate_live_setup_connection_strings.md:

--------------------------------------------------------------------------------

1 | These variables hold the connection information for the source database and target Timescale Cloud service.

2 | In Terminal on your migration machine, set the following:

3 |

4 | ```bash

5 | export SOURCE="postgres://:@:/"

6 | export TARGET="postgres://tsdbadmin:@:/tsdb?sslmode=require"

7 | ```

8 | You find the connection information for your Timescale Cloud service in the configuration file you

9 | downloaded when you created the service.

10 |

11 |

12 | Avoid using connection strings that route through connection poolers like PgBouncer or similar tools. This tool requires a direct connection to the database to function properly.

13 |

14 |

15 |

16 |

--------------------------------------------------------------------------------

/_partials/_migrate_live_setup_environment.md:

--------------------------------------------------------------------------------

1 | import SetupConnectionStrings from "versionContent/_partials/_migrate_live_setup_connection_strings.mdx";

2 | import MigrationSetupDBConnectionTimescaleDB from "versionContent/_partials/_migrate_set_up_align_db_extensions_timescaledb.mdx";

3 | import TuneSourceDatabase from "versionContent/_partials/_migrate_live_tune_source_database.mdx";

4 |

5 |

6 | ## Set your connection strings

7 |

8 |

9 |

10 | ## Align the version of TimescaleDB on the source and target

11 |

12 |

13 |

14 |

15 |

16 |

17 | ## Tune your source database

18 |

19 |

20 |

21 |

22 |

23 |

24 | [modify-parameters]: /use-timescale/:currentVersion/configuration/customize-configuration/#modify-basic-parameters

25 | [mst-portal]: https://portal.managed.timescale.com/login

26 | [tsc-portal]: https://console.cloud.timescale.com/

27 | [configure-instance-parameters]: /use-timescale/:currentVersion/configuration/customize-configuration/#configure-database-parameters

28 |

--------------------------------------------------------------------------------

/_partials/_migrate_live_setup_environment_awsrds.md:

--------------------------------------------------------------------------------

1 | import SetupConnectionStrings from "versionContent/_partials/_migrate_live_setup_connection_strings.mdx";

2 | import MigrationSetupDBConnectionPostgresql from "versionContent/_partials/_migrate_set_up_align_db_extensions_postgres_based.mdx";

3 | import TuneSourceDatabaseAWSRDS from "versionContent/_partials/_migrate_live_tune_source_database_awsrds.mdx";

4 |

5 | ## Set your connection strings

6 |

7 |

8 |

9 | ## Align the extensions on the source and target

10 |

11 |

12 |

13 |

14 |

15 |

16 | ## Tune your source database

17 |

18 |

19 |

20 |

21 |

22 |

23 |

24 |

25 | [modify-parameters]: /use-timescale/:currentVersion:/configuration/customize-configuration/#modify-basic-parameters

26 | [mst-portal]: https://portal.managed.timescale.com/login

27 |

--------------------------------------------------------------------------------

/_partials/_migrate_live_setup_environment_mst.md:

--------------------------------------------------------------------------------

1 | import SetupConnectionStrings from "versionContent/_partials/_migrate_live_setup_connection_strings.mdx";

2 | import MigrationSetupDBConnectionTimescaleDB from "versionContent/_partials/_migrate_set_up_align_db_extensions_timescaledb.mdx";

3 | import TuneSourceDatabaseMST from "versionContent/_partials/_migrate_live_tune_source_database_mst.mdx";

4 |

5 | ## Set your connection strings

6 |

7 |

8 |

9 | ## Align the version of TimescaleDB on the source and target

10 |

11 |

12 |

13 |

14 |

15 |

16 | ## Tune your source database

17 |

18 |

19 |

20 |

21 |

22 |

23 |

24 |

25 | [modify-parameters]: /use-timescale/:currentVersion:/configuration/customize-configuration/#modify-basic-parameters

26 | [mst-portal]: https://portal.managed.timescale.com/login

27 |

--------------------------------------------------------------------------------

/_partials/_migrate_live_setup_environment_postgres.md:

--------------------------------------------------------------------------------

1 | import SetupConnectionStrings from "versionContent/_partials/_migrate_live_setup_connection_strings.mdx";

2 | import MigrationSetupDBConnectionPostgresql from "versionContent/_partials/_migrate_set_up_align_db_extensions_postgres_based.mdx";

3 | import TuneSourceDatabasePostgres from "versionContent/_partials/_migrate_live_tune_source_database_postgres.mdx";

4 |

5 |

6 | ## Set your connection strings

7 |

8 |

9 |

10 |

11 | ## Align the extensions on the source and target

12 |

13 |

14 |

15 |

16 |

17 |

18 | ## Tune your source database

19 |

20 |

21 |

22 |

23 |

24 |

25 |

26 | [modify-parameters]: /use-timescale/:currentVersion/configuration/customize-configuration/#modify-basic-parameters

27 | [mst-portal]: https://portal.managed.timescale.com/login

28 | [tsc-portal]: https://console.cloud.timescale.com/

29 | [configure-instance-parameters]: /use-timescale/:currentVersion/configuration/customize-configuration/#configure-database-parameters

30 |

--------------------------------------------------------------------------------

/_partials/_migrate_live_tune_source_database_mst.md:

--------------------------------------------------------------------------------

1 | import EnableReplication from "versionContent/_partials/_migrate_live_setup_enable_replication.mdx";

2 |

3 | 1. **Enable live-migration to replicate `DELETE` and`UPDATE` operations**

4 |

5 |

6 |

7 | [mst-portal]: https://portal.managed.timescale.com/login

8 |

--------------------------------------------------------------------------------

/_partials/_migrate_live_validate_data.md:

--------------------------------------------------------------------------------

1 | import CleanupData from "versionContent/_partials/_migrate_live_run_cleanup.mdx";

2 |

3 | ## Validate your data, then restart your app

4 |

5 |

6 |

7 |

8 |

9 |

10 | [modify-parameters]: /use-timescale/:currentVersion/configuration/customize-configuration/#modify-basic-parameters

11 | [mst-portal]: https://portal.managed.timescale.com/login

12 | [tsc-portal]: https://console.cloud.timescale.com/

13 | [configure-instance-parameters]: /use-timescale/:currentVersion/configuration/customize-configuration/#configure-database-parameters

--------------------------------------------------------------------------------

/_partials/_migrate_open_support_request.md:

--------------------------------------------------------------------------------

1 | You can open a support request directly from the [Timescale console][support-link],

2 | or by email to [support@timescale.com](mailto:support@timescale.com).

3 |

4 | [support-link]: https://console.cloud.timescale.com/dashboard/support

5 |

--------------------------------------------------------------------------------

/_partials/_migrate_pg_dump_do_not_recommend_for_large_migration.md:

--------------------------------------------------------------------------------

1 |

2 |

3 | If you want to migrate more than 400GB of data, create a [Timescale Console support request](https://console.cloud.timescale.com/dashboard/support), or

4 | send us an email at [support@timescale.com](mailto:support@timescale.com) saying how much data you want to migrate. We will pre-provision

5 | your Timescale Cloud instance for you.

6 |

7 |

8 |

--------------------------------------------------------------------------------

/_partials/_migrate_pg_dump_minimal_downtime.md:

--------------------------------------------------------------------------------

1 | For minimal downtime, run the migration commands from a machine with a low-latency,

2 | high-throughput link to the source and target databases. If you are using an AWS

3 | EC2 instance to run the migration commands, use one in the same region as your target

4 | Timescale Cloud instance.

5 |

--------------------------------------------------------------------------------

/_partials/_migrate_post_data_dump_source_schema.md:

--------------------------------------------------------------------------------

1 | import ExplainPgDumpFlags from "versionContent/_partials/_migrate_explain_pg_dump_flags.mdx";

2 |

3 | ```shell

4 | pg_dump -d "$SOURCE" \

5 | --format=plain \

6 | --quote-all-identifiers \

7 | --no-tablespaces \

8 | --no-owner \

9 | --no-privileges \

10 | --section=post-data \

11 | --file=post-data-dump.sql \

12 | --snapshot=$(cat /tmp/pgcopydb/snapshot)

13 | ```

14 |

15 | - `--section=post-data` is used to dump post-data items include definitions of

16 | indexes, triggers, rules, and constraints other than validated check

17 | constraints.

18 |

19 | - `--snapshot` is used to specified the synchronized [snapshot][snapshot] when

20 | making a dump of the database.

21 |

22 |

23 |

24 | [snapshot]: https://www.postgresql.org/docs/current/functions-admin.html#FUNCTIONS-SNAPSHOT-SYNCHRONIZATION

25 |

--------------------------------------------------------------------------------

/_partials/_migrate_pre_data_dump_source_schema.md:

--------------------------------------------------------------------------------

1 | import ExplainPgDumpFlags from "versionContent/_partials/_migrate_explain_pg_dump_flags.mdx";

2 |

3 | ```sh

4 | pg_dump -d "$SOURCE" \

5 | --format=plain \

6 | --quote-all-identifiers \

7 | --no-tablespaces \

8 | --no-owner \

9 | --no-privileges \

10 | --section=pre-data \

11 | --file=pre-data-dump.sql \

12 | --snapshot=$(cat /tmp/pgcopydb/snapshot)

13 | ```

14 |

15 | - `--section=pre-data` is used to dump only the definition of tables,

16 | sequences, check constraints and inheritance hierarchy. It excludes

17 | indexes, foreign key constraints, triggers and rules.

18 |

19 | - `--snapshot` is used to specified the synchronized [snapshot][snapshot] when

20 | making a dump of the database.

21 |

22 |

23 |

24 | [snapshot]: https://www.postgresql.org/docs/current/functions-admin.html#FUNCTIONS-SNAPSHOT-SYNCHRONIZATION

25 |

--------------------------------------------------------------------------------

/_partials/_migrate_set_up_database_first_steps.md:

--------------------------------------------------------------------------------

1 | 1. **Take the applications that connect to the source database offline**

2 |

3 | The duration of the migration is proportional to the amount of data stored in your database. By

4 | disconnection your app from your database you avoid and possible data loss.

5 |

6 | 1. **Set your connection strings**

7 |

8 | These variables hold the connection information for the source database and target Timescale Cloud service:

9 |

10 | ```bash

11 | export SOURCE="postgres://:@:/"

12 | export TARGET="postgres://tsdbadmin:@:/tsdb?sslmode=require"

13 | ```

14 | You find the connection information for your Timescale Cloud Service in the configuration file you

15 | downloaded when you created the service.

16 |

--------------------------------------------------------------------------------

/_partials/_migrate_set_up_source_and_target.md:

--------------------------------------------------------------------------------

1 |

2 | For the sake of convenience, connection strings to the source and target

3 | databases are referred to as `$SOURCE` and `$TARGET` throughout this guide.

4 |

5 | This can be set in your shell, for example:

6 |

7 | ```bash

8 | export SOURCE="postgres://:@:/"

9 | export TARGET="postgres://:@:/"

10 | ```

11 |

12 |

--------------------------------------------------------------------------------

/_partials/_migrate_source_target_note.md:

--------------------------------------------------------------------------------

1 |

2 | In the context of migrations, your existing production database is referred to

3 | as the "source" database, while the new Timescale database that you intend to

4 | migrate your data to is referred to as the "target" database.

5 |

6 |

--------------------------------------------------------------------------------

/_partials/_migrate_using_parallel_copy.md:

--------------------------------------------------------------------------------

1 |

2 |

3 | ### Restoring data into Timescale with timescaledb-parallel-copy

4 |

5 | 1. At the command prompt, install `timescaledb-parallel-copy`:

6 |

7 | ```bash

8 | go get github.com/timescale/timescaledb-parallel-copy/cmd/timescaledb-parallel-copy

9 | ```

10 |

11 | 1. Use `timescaledb-parallel-copy` to import data into

12 | your Timescale database. Set `` to twice the number of CPUs in your

13 | database. For example, if you have 4 CPUs, `` should be `8`.

14 |

15 | ```bash

16 | timescaledb-parallel-copy \

17 | --connection "host= \

18 | user=tsdbadmin password= \

19 | port= \

20 | dbname=tsdb \

21 | sslmode=require

22 | " \

23 | --table \

24 | --file .csv \

25 | --workers \

26 | --reporting-period 30s

27 | ```

28 |

29 | Repeat for each table and hypertable you want to migrate.

30 |

31 |

32 |

--------------------------------------------------------------------------------

/_partials/_migrate_using_postgres_copy.md:

--------------------------------------------------------------------------------

1 |

2 |

3 | ### Restoring data into Timescale with COPY

4 |

5 | 1. Connect to your Timescale database:

6 |

7 | ```sql

8 | psql "postgres://tsdbadmin:@:/tsdb?sslmode=require"

9 | ```

10 |

11 | 1. Restore the data to your Timescale database:

12 |

13 | ```sql

14 | \copy FROM '.csv' WITH (FORMAT CSV);

15 | ```

16 |

17 | Repeat for each table and hypertable you want to migrate.

18 |

19 |

20 |

--------------------------------------------------------------------------------

/_partials/_migrate_validate_and_restart_app.md:

--------------------------------------------------------------------------------

1 | 1. Update the table statistics.

2 |

3 | ```bash

4 | psql $TARGET -c "ANALYZE;"

5 | ```

6 |

7 | 1. Verify the data in the target Timescale Cloud service.

8 |

9 | Check that your data is correct, and returns the results that you expect,

10 |

11 | 1. Enable any Timescale Cloud features you want to use.

12 |

13 | Migration from PostgreSQL moves the data only. Now manually enable Timescale Cloud features like

14 | [hypertables][about-hypertables], [hypercore][data-compression] or [data retention][data-retention]

15 | while your database is offline.

16 |

17 | 1. Reconfigure your app to use the target database, then restart it.

18 |

19 |

20 | [about-hypertables]: /use-timescale/:currentVersion:/hypertables/

21 | [data-compression]: /use-timescale/:currentVersion:/hypercore/

22 | [data-retention]: /use-timescale/:currentVersion:/data-retention/about-data-retention/

23 |

--------------------------------------------------------------------------------

/_partials/_mst-intro.md:

--------------------------------------------------------------------------------

1 | Managed Service for TimescaleDB (MST) is [TimescaleDB](https://github.com/timescale/timescaledb) hosted on Azure and GCP.

2 | MST is offered in partnership with Aiven.

3 |

--------------------------------------------------------------------------------

/_partials/_multi-node-deprecation.md:

--------------------------------------------------------------------------------

1 |

2 |

3 | [Multi-node support is sunsetted][multi-node-deprecation].

4 |

5 | TimescaleDB v2.13 is the last release that includes multi-node support for PostgreSQL

6 | versions 13, 14, and 15.

7 |

8 |

9 |

10 | [multi-node-deprecation]: https://github.com/timescale/timescaledb/blob/main/docs/MultiNodeDeprecation.md

11 |

--------------------------------------------------------------------------------

/_partials/_old-api-create-hypertable.md:

--------------------------------------------------------------------------------

1 | If you are self-hosting $TIMESCALE_DB v2.19.3 and below, create a [$PG relational table][pg-create-table],

2 | then convert it using [create_hypertable][create_hypertable]. You then enable $HYPERCORE with a call

3 | to [ALTER TABLE][alter_table_hypercore].

4 |

5 |

6 | [pg-create-table]: https://www.postgresql.org/docs/current/sql-createtable.html

7 | [create_hypertable]: /api/:currentVersion:/hypertable/create_hypertable/

8 | [alter_table_hypercore]: /api/:currentVersion:/hypercore/alter_table/

9 |

--------------------------------------------------------------------------------

/_partials/_plan_upgrade.md:

--------------------------------------------------------------------------------

1 |

2 | - Install the PostgreSQL client tools on your migration machine. This includes `psql`, and `pg_dump`.

3 | - Read [the release notes][relnotes] for the version of TimescaleDB that you are upgrading to.

4 | - [Perform a backup][backup] of your database. While Timescale

5 | upgrades are performed in-place, upgrading is an intrusive operation. Always

6 | make sure you have a backup on hand, and that the backup is readable in the

7 | case of disaster.

8 |

9 | [relnotes]: https://github.com/timescale/timescaledb/releases

10 | [upgrade-pg]: /self-hosted/:currentVersion:/upgrade-pg/#upgrade-your-postgresql-instance

11 | [backup]: /self-hosted/:currentVersion:/backup-and-restore/

12 |

--------------------------------------------------------------------------------

/_partials/_preloaded-data.md:

--------------------------------------------------------------------------------

1 |

2 | If you have been provided with a pre-loaded dataset on your Timescale service,

3 | go directly to the

4 | [queries section](https://docs.timescale.com/tutorials/latest/nyc-taxi-geospatial/plot-nyc/).

5 |

6 |

--------------------------------------------------------------------------------

/_partials/_prereqs-cloud-and-self.md:

--------------------------------------------------------------------------------

1 | To follow the procedure on this page you need to:

2 |

3 | * Create a [target $SERVICE_LONG][create-service].

4 |

5 | This procedure also works for [self-hosted $TIMESCALE_DB][enable-timescaledb].

6 |

7 | [create-service]: /getting-started/:currentVersion:/services/

8 | [enable-timescaledb]: /self-hosted/:currentVersion:/install/

9 |

--------------------------------------------------------------------------------

/_partials/_prereqs-cloud-no-connection.md:

--------------------------------------------------------------------------------

1 | To follow the steps on this page:

2 |

3 | * Create a target [$SERVICE_LONG][create-service] with time-series and analytics enabled.

4 |

5 | [create-service]: /getting-started/:currentVersion:/services/

--------------------------------------------------------------------------------

/_partials/_prereqs-cloud-only.md:

--------------------------------------------------------------------------------

1 | To follow the steps on this page:

2 |

3 | * Create a target [$SERVICE_LONG][create-service] with time-series and analytics enabled.

4 |

5 | You need your [connection details][connection-info].

6 |

7 |

8 | [create-service]: /getting-started/:currentVersion:/services/

9 | [connection-info]: /integrations/:currentVersion:/find-connection-details/

10 |

--------------------------------------------------------------------------------

/_partials/_psql-installation-homebrew.md:

--------------------------------------------------------------------------------

1 |

2 |

3 | #### Installing psql using Homebrew

4 |

5 | 1. Install `psql`:

6 |

7 | ```bash

8 | brew install libpq

9 | ```

10 |

11 | 1. Update your path to include the `psql` tool.

12 |

13 | ```bash

14 | brew link --force libpq

15 | ```

16 |

17 | On Intel chips, the symbolic link is added to `/usr/local/bin`. On Apple

18 | Silicon, the symbolic link is added to `/opt/homebrew/bin`.

19 |

20 |

21 |

--------------------------------------------------------------------------------

/_partials/_psql-installation-macports.md:

--------------------------------------------------------------------------------

1 |

2 |

3 | #### Installing psql using MacPorts

4 |

5 | 1. Install the latest version of `libpqxx`:

6 |

7 | ```bash

8 | sudo port install libpqxx

9 | ```

10 |

11 | 1. [](#)View the files that were installed by `libpqxx`:

12 |

13 | ```bash

14 | port contents libpqxx

15 | ```

16 |

17 |

18 |

--------------------------------------------------------------------------------

/_partials/_psql-installation-windows.md:

--------------------------------------------------------------------------------

1 | ## Install psql on Windows

2 |

3 | The `psql` tool is installed by default on Windows systems when you install

4 | PostgreSQL, and this is the most effective way to install the tool. These

5 | instructions use the interactive installer provided by PostgreSQL and

6 | EnterpriseDB.

7 |

8 |

9 |

10 | ### Installing psql on Windows

11 |

12 | 1. Download and run the PostgreSQL installer from

13 | [www.enterprisedb.com][windows-installer].

14 | 1. In the `Select Components` dialog, check `Command Line Tools`, along with

15 | any other components you want to install, and click `Next`.

16 | 1. Complete the installation wizard to install the package.

17 |

18 |

19 |

20 | [windows-installer]: https://www.postgresql.org/download/windows/

21 |

--------------------------------------------------------------------------------

/_partials/_quickstart-intro.md:

--------------------------------------------------------------------------------

1 | Easily integrate your app with $CLOUD_LONG. Use your favorite programming language to connect to your

2 | $SERVICE_LONG, create and manage hypertables, then ingest and query data.

3 |

--------------------------------------------------------------------------------

/_partials/_real-time-aggregates.md:

--------------------------------------------------------------------------------

1 | In $TIMESCALE_DB v2.13 and later, real-time aggregates are **DISABLED** by default. In earlier versions, real-time aggregates are **ENABLED** by default; when you create a continuous aggregate, queries to that view include the results from the most recent raw data.

2 |

--------------------------------------------------------------------------------

/_partials/_release_notification.md:

--------------------------------------------------------------------------------

1 |

2 |

3 | To be notified about the latest releases, in [Github](https://github.com/timescale/timescaledb)

4 | click `Watch` > `Custom`, then enable `Releases`.

5 |

6 |

--------------------------------------------------------------------------------

/_partials/_selfhosted_cta.md:

--------------------------------------------------------------------------------

1 |

2 | Deploy a Timescale service in the cloud. We tune your database for performance and handle scalability, high availability, backups and management so you can relax.

3 |

4 |

5 |

--------------------------------------------------------------------------------

/_partials/_selfhosted_production_alert.md:

--------------------------------------------------------------------------------

1 |

2 |

3 | The following instructions are for development and testing installations. For a production environment, we strongly recommend

4 | that you implement the following, many of which you can achieve using PostgreSQL tooling.

5 |

6 | - Incremental backup and database snapshots, with efficient point-in-time recovery.

7 | - High availability replication, ideally with nodes across multiple availability zones.

8 | - Automatic failure detection with fast restarts, for both non-replicated and replicated deployments.

9 | - Asynchronous replicas for scaling reads when needed.

10 | - Connection poolers for scaling client connections.

11 | - Zero-down-time minor version and extension upgrades.

12 | - Forking workflows for major version upgrades and other feature testing.

13 | - Monitoring and observability.

14 |

15 | Deploying for production? With a $SERVICE_LONG we tune your database for performance and handle scalability, high

16 | availability, backups and management so you can relax.

17 |

18 |

19 |

20 |

--------------------------------------------------------------------------------

/_partials/_since_2_18_0.md:

--------------------------------------------------------------------------------

1 | Since [TimescaleDB v2.18.0](https://github.com/timescale/timescaledb/releases/tag/2.18.0)

2 |

--------------------------------------------------------------------------------

/_partials/_since_2_20_0.md:

--------------------------------------------------------------------------------

1 | Since [TimescaleDB v2.20.0](https://github.com/timescale/timescaledb/releases/tag/2.20.0)

2 |

--------------------------------------------------------------------------------

/_partials/_tiered-storage-billing.md:

--------------------------------------------------------------------------------

1 | $COMPANY charges only for the storage that your data occupies in S3 in the Apache Parquet format, regardless of whether it was compressed in $CLOUD_LONG before tiering. There are no additional expenses, such as data transfer or compute.

--------------------------------------------------------------------------------

/_partials/_timescale-intro.md:

--------------------------------------------------------------------------------

1 | $COMPANY extends $PG for all of your resource-intensive production workloads, so you

2 | can build faster, scale further, and stay under budget.

3 |

--------------------------------------------------------------------------------

/_partials/_timescaledb.md:

--------------------------------------------------------------------------------

1 | TimescaleDB is an extension for PostgreSQL that enables time-series workloads,

2 | increasing ingest, query, storage and analytics performance.

3 |

4 | Best practice is to run TimescaleDB in a [Timescale Service](https://console.cloud.timescale.com/signup), but if you want to

5 | self-host you can run TimescaleDB yourself.

6 |

7 |

--------------------------------------------------------------------------------

/_partials/_tutorials_hypertable_intro.md:

--------------------------------------------------------------------------------

1 | import HypercoreIntroShort from "versionContent/_partials/_hypercore-intro-short.mdx";

2 |

3 | Time-series data represents the way a system, process, or behavior changes over time. $HYPERTABLE_CAPs enable

4 | $TIMESCALE_DB to work efficiently with time-series data. $HYPERTABLE_CAPs are $PG tables that automatically partition

5 | your time-series data by time. Each $HYPERTABLE is made up of child tables called chunks. Each chunk is assigned a range

6 | of time, and only contains data from that range. When you run a query, $TIMESCALE_DB identifies the correct chunk and

7 | runs the query on it, instead of going through the entire table.

8 |

9 |

10 |

11 | Because $TIMESCALE_DB is 100% $PG, you can use all the standard PostgreSQL tables, indexes, stored

12 | procedures, and other objects alongside your $HYPERTABLEs. This makes creating and working with $HYPERTABLEs similar

13 | to standard $PG.

14 |

15 |

--------------------------------------------------------------------------------

/_partials/_usage-based-storage-intro.md:

--------------------------------------------------------------------------------

1 | $CLOUD_LONG charges are based on the amount of storage you use. You don't pay for

2 | fixed storage size, and you don't need to worry about scaling disk size as your

3 | data grows—we handle it all for you. To reduce your data costs further,

4 | combine [$HYPERCORE][hypercore], a [data retention policy][data-retention], and

5 | [tiered storage][data-tiering].

6 |

7 | [hypercore]: /api/:currentVersion:/hypercore/

8 | [data-retention]: /use-timescale/:currentVersion:/data-retention/

9 | [data-tiering]: /use-timescale/:currentVersion:/data-tiering/

10 |

--------------------------------------------------------------------------------

/_partials/_use-case-setup-blockchain-dataset.md:

--------------------------------------------------------------------------------

1 |

2 |

3 | import CreateHypertableBlockchain from "versionContent/_partials/_create-hypertable-blockchain.mdx";

4 | import AddDataBlockchain from "versionContent/_partials/_add-data-blockchain.mdx";

5 | import IntegrationPrereqs from "versionContent/_partials/_integration-prereqs.mdx";

6 |

7 | # Ingest data into a $SERVICE_LONG

8 |

9 | This tutorial uses a dataset that contains Bitcoin blockchain data for

10 | the past five days, in a $HYPERTABLE named `transactions`.

11 |

12 | ## Prerequisites

13 |

14 |

15 |

16 |

17 |

18 |

19 |

--------------------------------------------------------------------------------

/_partials/_vpc-limitations.md:

--------------------------------------------------------------------------------

1 | * You **can attach**:

2 | * Up to 50 Customer $VPCs to a Peering $VPC.

3 | * A $SERVICE_LONG to a single Peering $VPC at a time.

4 | The $SERVICE_SHORT and the Peering $VPC must be in the same AWS region. However, you can peer a Customer $VPC and a Peering $VPC that are in different regions.

5 | * Multiple $SERVICE_LONGs to the same Peering $VPC.

6 | * You **cannot attach** a $SERVICE_LONG to multiple Peering $VPCs at the same time.

7 |

8 | The number of Peering $VPCs you can create in your project depends on your [$PRICING_PLAN][pricing-plans].

9 | If you need another Peering $VPC, either contact [support@timescale.com](mailto:support@timescale.com) or change your $PRICING_PLAN in [$CONSOLE][console-login].

10 |

11 | [console-login]: https://console.cloud.timescale.com/

12 | [pricing-plans]: /about/:currentVersion:/pricing-and-account-management/

--------------------------------------------------------------------------------

/_partials/_where-to-next.md:

--------------------------------------------------------------------------------

1 | What next? [Try the main features offered by Timescale][try-timescale-features], see the [use case tutorials][tutorials],

2 | interact with the data in your $SERVICE_LONG using [your favorite programming language][connect-with-code], integrate

3 | your $SERVICE_LONG with a range of [third-party tools][integrations], plain old [Use Timescale][use-timescale], or dive

4 | into [the API][use-the-api].

5 |

6 | [tutorials]: /tutorials/:currentVersion:/

7 | [integrations]: /integrations/:currentVersion:/

8 | [connect-with-code]: /getting-started/:currentVersion:/start-coding-with-timescale/

9 | [use-the-api]: /api/:currentVersion:/

10 | [use-timescale]: /use-timescale/:currentVersion:/

11 | [try-timescale-features]: /getting-started/:currentVersion:/try-key-features-timescale-products/

12 |

--------------------------------------------------------------------------------

/_queries/getting-started-cagg-tesla.md:

--------------------------------------------------------------------------------

1 | SELECT * FROM stock_candlestick_daily

2 | WHERE symbol='TSLA'

3 | ORDER BY day DESC

4 | LIMIT 10;

5 |

6 | -- Output

7 |

8 | day | symbol | high | open | close | low

9 | -----------------------+--------+----------+----------+----------+----------

10 | 2023-07-31 00:00:00+00 | TSLA | 269 | 266.42 | 266.995 | 263.8422

11 | 2023-07-28 00:00:00+00 | TSLA | 267.4 | 259.32 | 266.8 | 258.06

12 | 2023-07-27 00:00:00+00 | TSLA | 269.98 | 268.3 | 256.8 | 241.5539

13 | 2023-07-26 00:00:00+00 | TSLA | 271.5168 | 265.48 | 265.3283 | 258.0418

14 | 2023-07-25 00:00:00+00 | TSLA | 270.22 | 267.5099 | 264.55 | 257.21

15 | 2023-07-20 00:00:00+00 | TSLA | 267.58 | 267.34 | 260.6 | 247.4588

16 | 2023-07-14 00:00:00+00 | TSLA | 285.27 | 277.29 | 281.7 | 264.7567

17 | 2023-07-13 00:00:00+00 | TSLA | 290.0683 | 274.07 | 277.4509 | 270.6127

18 | 2023-07-12 00:00:00+00 | TSLA | 277.68 | 271.26 | 272.94 | 258.0418

19 | 2023-07-11 00:00:00+00 | TSLA | 271.44 | 270.83 | 269.8303 | 266.3885

20 | (10 rows)

21 |

--------------------------------------------------------------------------------

/_queries/getting-started-cagg.md:

--------------------------------------------------------------------------------

1 | SELECT * FROM stock_candlestick_daily

2 | ORDER BY day DESC, symbol

3 | LIMIT 10;

4 |

5 | -- Output

6 |

7 | day | symbol | high | open | close | low

8 | -----------------------+--------+----------+--------+----------+----------

9 | 2023-07-31 00:00:00+00 | AAPL | 196.71 | 195.9 | 196.1099 | 195.2699

10 | 2023-07-31 00:00:00+00 | ABBV | 151.25 | 151.25 | 148.03 | 148.02

11 | 2023-07-31 00:00:00+00 | ABNB | 154.95 | 153.43 | 152.95 | 151.65

12 | 2023-07-31 00:00:00+00 | ABT | 113 | 112.4 | 111.49 | 111.44

13 | 2023-07-31 00:00:00+00 | ADBE | 552.87 | 536.74 | 550.835 | 536.74

14 | 2023-07-31 00:00:00+00 | AMAT | 153.9786 | 152.5 | 151.84 | 150.52

15 | 2023-07-31 00:00:00+00 | AMD | 114.57 | 113.47 | 113.15 | 112.35

16 | 2023-07-31 00:00:00+00 | AMGN | 237 | 236.61 | 233.6 | 233.515

17 | 2023-07-31 00:00:00+00 | AMT | 191.69 | 189.75 | 190.55 | 188.97

18 | 2023-07-31 00:00:00+00 | AMZN | 133.89 | 132.42 | 133.055 | 132.32

19 | (10 rows)

20 |

--------------------------------------------------------------------------------

/_queries/getting-started-crypto-cagg.md:

--------------------------------------------------------------------------------

1 | SELECT * FROM assets_candlestick_daily

2 | ORDER BY day DESC, symbol

3 | LIMIT 10;

4 |

5 | -- Output

6 |

7 | day | symbol | high | open | close | low

8 | -----------------------+--------+----------+--------+----------+----------

9 | 2025-01-30 00:00:00+00 | ADA/USD | 0.9708 | 0.9396 | 0.9607 | 0.9365

10 | 2025-01-30 00:00:00+00 | ATOM/USD | 6.114 | 5.825 | 6.063 | 5.776

11 | 2025-01-30 00:00:00+00 | AVAX/USD | 34.1 | 32.8 | 33.95 | 32.44

12 | 2025-01-30 00:00:00+00 | BNB/USD | 679.3 | 668.12 | 677.81 | 666.08

13 | 2025-01-30 00:00:00+00 | BTC/USD | 105595.65 | 103735.84 | 105157.21 | 103298.84

14 | 2025-01-30 00:00:00+00 | CRO/USD | 0.13233 | 0.12869 | 0.13138 | 0.12805

15 | 2025-01-30 00:00:00+00 | DAI/USD | 1 | 1 | 0.9999 | 0.99989998

16 | 2025-01-30 00:00:00+00 | DOGE/USD | 0.33359 | 0.32392 | 0.33172 | 0.32231

17 | 2025-01-30 00:00:00+00 | DOT/USD | 6.01 | 5.779 | 6.004 | 5.732

18 | 2025-01-30 00:00:00+00 | ETH/USD | 3228.9 | 3113.36 | 3219.25 | 3092.92

19 | (10 rows)

20 |

--------------------------------------------------------------------------------

/_queries/getting-started-crypto-srt-orderby.md:

--------------------------------------------------------------------------------

1 | SELECT * FROM crypto_ticks srt

2 | WHERE symbol='ETH/USD'

3 | ORDER BY time DESC

4 | LIMIT 10;

5 |

6 | -- Output

7 |

8 | time | symbol | price | day_volume

9 | -----------------------+--------+----------+------------

10 | 2025-01-30 12:05:09+00 | ETH/USD | 3219.25 | 39425

11 | 2025-01-30 12:05:00+00 | ETH/USD | 3219.26 | 39425

12 | 2025-01-30 12:04:42+00 | ETH/USD | 3219.26 | 39459

13 | 2025-01-30 12:04:33+00 | ETH/USD | 3219.91 | 39458

14 | 2025-01-30 12:04:15+00 | ETH/USD | 3219.6 | 39458

15 | 2025-01-30 12:04:06+00 | ETH/USD | 3220.68 | 39458

16 | 2025-01-30 12:03:57+00 | ETH/USD | 3220.68 | 39483

17 | 2025-01-30 12:03:48+00 | ETH/USD | 3220.12 | 39483

18 | 2025-01-30 12:03:20+00 | ETH/USD | 3219.79 | 39482

19 | 2025-01-30 12:03:11+00 | ETH/USD | 3220.06 | 39472

20 | (10 rows)

21 |

--------------------------------------------------------------------------------

/_queries/getting-started-srt-4-days.md:

--------------------------------------------------------------------------------

1 | SELECT * FROM stocks_real_time srt

2 | LIMIT 10;

3 |

4 | -- Output

5 |

6 | time | symbol | price | day_volume

7 | -----------------------+--------+----------+------------

8 | 2023-07-31 16:32:16+00 | PEP | 187.755 | 1618189

9 | 2023-07-31 16:32:16+00 | TSLA | 268.275 | 51902030

10 | 2023-07-31 16:32:16+00 | INTC | 36.035 | 22736715

11 | 2023-07-31 16:32:15+00 | CHTR | 402.27 | 626719

12 | 2023-07-31 16:32:15+00 | TSLA | 268.2925 | 51899210

13 | 2023-07-31 16:32:15+00 | AMD | 113.72 | 29136618

14 | 2023-07-31 16:32:15+00 | NVDA | 467.72 | 13951198

15 | 2023-07-31 16:32:15+00 | AMD | 113.72 | 29137753

16 | 2023-07-31 16:32:15+00 | RTX | 87.74 | 4295687

17 | 2023-07-31 16:32:15+00 | RTX | 87.74 | 4295907

18 | (10 rows)

19 |

--------------------------------------------------------------------------------

/_queries/getting-started-srt-aggregation.md:

--------------------------------------------------------------------------------

1 | SELECT

2 | time_bucket('1 day', time) AS bucket,

3 | symbol,

4 | max(price) AS high,

5 | first(price, time) AS open,

6 | last(price, time) AS close,

7 | min(price) AS low

8 | FROM stocks_real_time srt

9 | WHERE time > now() - INTERVAL '1 week'

10 | GROUP BY bucket, symbol

11 | ORDER BY bucket, symbol

12 | LIMIT 10;

13 |

14 | -- Output

15 |

16 | day | symbol | high | open | close | low

17 | -----------------------+--------+--------------+----------+----------+--------------

18 | 2023-06-07 00:00:00+00 | AAPL | 179.25 | 178.91 | 179.04 | 178.17

19 | 2023-06-07 00:00:00+00 | ABNB | 117.99 | 117.4 | 117.9694 | 117

20 | 2023-06-07 00:00:00+00 | AMAT | 134.8964 | 133.73 | 134.8964 | 133.13

21 | 2023-06-07 00:00:00+00 | AMD | 125.33 | 124.11 | 125.13 | 123.82

22 | 2023-06-07 00:00:00+00 | AMZN | 127.45 | 126.22 | 126.69 | 125.81

23 | ...

24 |

--------------------------------------------------------------------------------

/_queries/getting-started-srt-bucket-first-last.md:

--------------------------------------------------------------------------------

1 | SELECT time_bucket('1 hour', time) AS bucket,

2 | first(price,time),

3 | last(price, time)

4 | FROM stocks_real_time srt

5 | WHERE time > now() - INTERVAL '4 days'

6 | GROUP BY bucket;

7 |

8 | -- Output

9 |

10 | bucket | first | last

11 | ------------------------+--------+--------

12 | 2023-08-07 08:00:00+00 | 88.75 | 182.87

13 | 2023-08-07 09:00:00+00 | 140.85 | 35.16

14 | 2023-08-07 10:00:00+00 | 182.89 | 52.58

15 | 2023-08-07 11:00:00+00 | 86.69 | 255.15

16 |

--------------------------------------------------------------------------------

/_queries/getting-started-srt-candlestick.md:

--------------------------------------------------------------------------------

1 | SELECT

2 | time_bucket('1 day', "time") AS day,

3 | symbol,

4 | max(price) AS high,

5 | first(price, time) AS open,

6 | last(price, time) AS close,

7 | min(price) AS low

8 | FROM stocks_real_time srt

9 | GROUP BY day, symbol

10 | ORDER BY day DESC, symbol

11 | LIMIT 10;

12 |

13 | -- Output

14 |

15 | day | symbol | high | open | close | low

16 | -----------------------+--------+--------------+----------+----------+--------------

17 | 2023-06-07 00:00:00+00 | AAPL | 179.25 | 178.91 | 179.04 | 178.17

18 | 2023-06-07 00:00:00+00 | ABNB | 117.99 | 117.4 | 117.9694 | 117

19 | 2023-06-07 00:00:00+00 | AMAT | 134.8964 | 133.73 | 134.8964 | 133.13

20 | 2023-06-07 00:00:00+00 | AMD | 125.33 | 124.11 | 125.13 | 123.82

21 | 2023-06-07 00:00:00+00 | AMZN | 127.45 | 126.22 | 126.69 | 125.81

22 | ...

23 |

--------------------------------------------------------------------------------

/_queries/getting-started-srt-first-last.md:

--------------------------------------------------------------------------------

1 | SELECT symbol, first(price,time), last(price, time)

2 | FROM stocks_real_time srt

3 | WHERE time > now() - INTERVAL '4 days'

4 | GROUP BY symbol

5 | ORDER BY symbol

6 | LIMIT 10;

7 |

8 | -- Output

9 |

10 | symbol | first | last

11 | -------+----------+----------

12 | AAPL | 179.0507 | 179.04

13 | ABNB | 118.83 | 117.9694

14 | AMAT | 133.55 | 134.8964

15 | AMD | 122.6476 | 125.13

16 | AMZN | 126.5599 | 126.69

17 | ...

18 |

--------------------------------------------------------------------------------

/_queries/getting-started-srt-orderby.md:

--------------------------------------------------------------------------------

1 | SELECT * FROM stocks_real_time srt

2 | WHERE symbol='TSLA'

3 | ORDER BY time DESC

4 | LIMIT 10;

5 |

6 | -- Output

7 |

8 | time | symbol | price | day_volume

9 | -----------------------+--------+----------+------------

10 | 2025-01-30 00:51:00+00 | TSLA | 405.32 | NULL

11 | 2025-01-30 00:41:00+00 | TSLA | 406.05 | NULL

12 | 2025-01-30 00:39:00+00 | TSLA | 406.25 | NULL

13 | 2025-01-30 00:32:00+00 | TSLA | 406.02 | NULL

14 | 2025-01-30 00:32:00+00 | TSLA | 406.10 | NULL

15 | 2025-01-30 00:25:00+00 | TSLA | 405.95 | NULL

16 | 2025-01-30 00:24:00+00 | TSLA | 406.04 | NULL

17 | 2025-01-30 00:24:00+00 | TSLA | 406.04 | NULL

18 | 2025-01-30 00:22:00+00 | TSLA | 406.38 | NULL

19 | 2025-01-30 00:21:00+00 | TSLA | 405.77 | NULL

20 | (10 rows)

21 |

--------------------------------------------------------------------------------

/_queries/getting-started-week-average.md:

--------------------------------------------------------------------------------

1 | SELECT

2 | time_bucket('1 day', time) AS bucket,

3 | symbol,

4 | avg(price)

5 | FROM stocks_real_time srt

6 | WHERE time > now() - INTERVAL '1 week'

7 | GROUP BY bucket, symbol

8 | ORDER BY bucket, symbol

9 | LIMIT 10;

10 |

11 | -- Output

12 |

13 | bucket | symbol | avg

14 | -----------------------+--------+--------------------

15 | 2023-06-01 00:00:00+00 | AAPL | 179.3242530284364

16 | 2023-06-01 00:00:00+00 | ABNB | 112.05498586371293

17 | 2023-06-01 00:00:00+00 | AMAT | 134.41263567849518

18 | 2023-06-01 00:00:00+00 | AMD | 119.43332772033834

19 | 2023-06-01 00:00:00+00 | AMZN | 122.3446364966392

20 | ...

21 |

--------------------------------------------------------------------------------

/_queries/test.md:

--------------------------------------------------------------------------------

1 | SELECT time,

2 | symbol,

3 | price

4 | FROM stocks_real_time

5 | ORDER BY time DESC

6 | LIMIT 10;

7 |

8 | -- Output

9 |

10 | here | is | some | fake | data

11 | -----|----|------|------|-----

12 | 25 | 2 | yes | no | 1.25

13 | 25 | 2 | yes | no | 1.25

14 | 25 | 2 | yes | no | 1.25

15 | 25 | 2 | yes | no | 1.25

16 | 25 | 2 | yes | no | 1.25

17 |

--------------------------------------------------------------------------------

/_troubleshooting/caggs-hypertable-retention-policy-not-applying.md:

--------------------------------------------------------------------------------

1 | ---

2 | title: Hypertable retention policy isn't applying to continuous aggregates

3 | section: troubleshooting

4 | products: [cloud, mst, self_hosted]

5 | topics: [continuous aggregates, data retention]

6 | apis:

7 | - [data retention, add_retention_policy()]

8 | keywords: [continuous aggregates, data retention]

9 | tags: [continuous aggregates, data retention]

10 | ---

11 |

12 |

20 |

21 | A retention policy set on a hypertable does not apply to any continuous

22 | aggregates made from the hypertable. This allows you to set different retention

23 | periods for raw and summarized data. To apply a retention policy to a continuous

24 | aggregate, set the policy on the continuous aggregate itself.

25 |

--------------------------------------------------------------------------------

/_troubleshooting/caggs-migrate-permissions.md:

--------------------------------------------------------------------------------

1 | ---

2 | title: Permissions error when migrating a continuous aggregate

3 | section: troubleshooting

4 | products: [cloud, mst, self_hosted]

5 | topics: [continuous aggregates]

6 | apis:

7 | - [continuous aggregates, cagg_migrate()]

8 | keywords: [continuous aggregates]

9 | tags: [continuous aggregates, migrate]

10 | ---

11 |

12 | import CaggMigratePermissions from 'versionContent/_partials/_caggs-migrate-permissions.mdx';

13 |

14 |

22 |

23 |

24 |

--------------------------------------------------------------------------------

/_troubleshooting/caggs-queries-fail.md:

--------------------------------------------------------------------------------