--------------------------------------------------------------------------------

/Documentation/ExecutingTheQueue/RunViaBackend/Index.rst:

--------------------------------------------------------------------------------

1 | .. include:: /Includes.rst.txt

2 |

3 | .. _run-backend:

4 |

5 | ===============

6 | Run via backend

7 | ===============

8 |

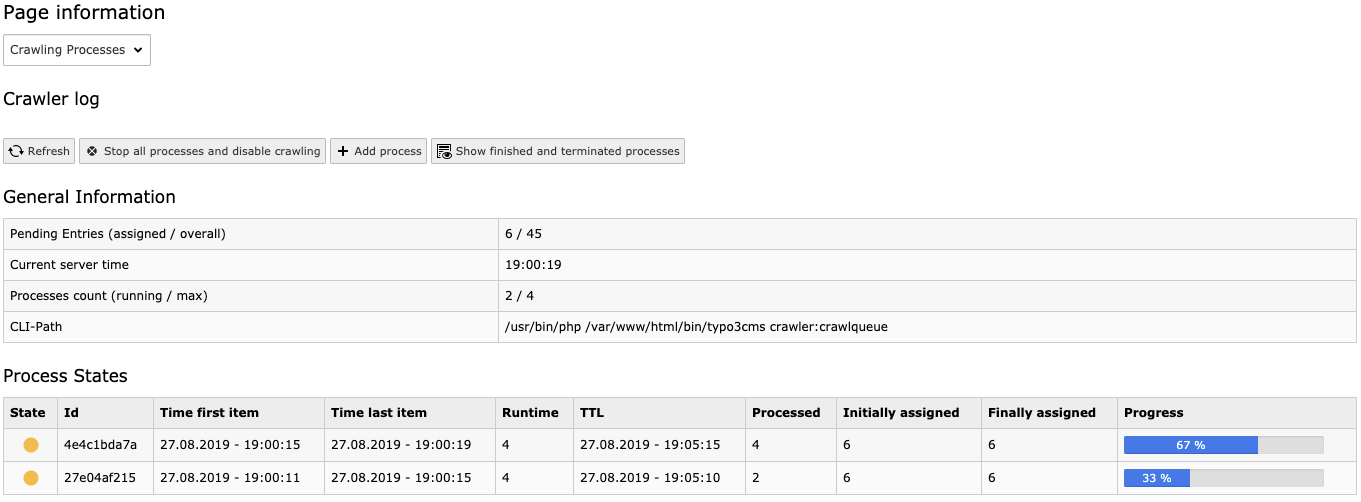

9 | To process the queue you must either set up a cron-job on your server

10 | or use the backend to process the queue:

11 |

12 | .. figure:: /Images/backend_processlist_add_process.png

13 | :alt: Process the queue via backend

14 |

15 | Process the queue via backend

16 |

17 | You can also (re-)crawl single URLs manually from within the :guilabel:`Crawler

18 | log` view in the info module:

19 |

20 | .. figure:: /Images/backend_crawlerlog_recrawl.png

21 | :alt: Crawl single URLs via backend

22 |

23 | Crawl single URLs via backend

24 |

--------------------------------------------------------------------------------

/Classes/EventListener/ShouldUseCachedPageDataIfAvailableEventListener.php:

--------------------------------------------------------------------------------

1 | getRequest()->getAttribute('tx_crawler') === null) {

18 | return;

19 | }

20 | $event->setShouldUseCachedPageData(false);

21 | }

22 | }

23 |

--------------------------------------------------------------------------------

/Classes/Exception/ProcessException.php:

--------------------------------------------------------------------------------

1 |

9 | *

10 | * This file is part of the TYPO3 Crawler Extension.

11 | *

12 | * It is free software; you can redistribute it and/or modify it under

13 | * the terms of the GNU General Public License, either version 2

14 | * of the License, or any later version.

15 | *

16 | * For the full copyright and license information, please read the

17 | * LICENSE.txt file that was distributed with this source code.

18 | *

19 | * The TYPO3 project - inspiring people to share!

20 | */

21 |

22 | /**

23 | * @internal since v12.0.0

24 | */

25 | class ProcessException extends \Exception

26 | {

27 | }

28 |

--------------------------------------------------------------------------------

/Documentation/Features/Hooks/Index.rst:

--------------------------------------------------------------------------------

1 | .. include:: /Includes.rst.txt

2 |

3 | .. _hooks:

4 |

5 | =====

6 | Hooks

7 | =====

8 |

9 | Register the following hooks in :file:`ext_localconf.php` of your extension.

10 |

11 | .. _hooks-excludeDoktype:

12 |

13 | excludeDoktype Hook

14 | ===================

15 |

16 | By adding doktype IDs to the following array, you can exclude them from

17 | being crawled:

18 |

19 | .. code-block:: php

20 | :caption: packages/my_extension/ext_localconf.php

21 |

22 | $GLOBALS['TYPO3_CONF_VARS']['EXTCONF']['crawler']['excludeDoktype'][] =

23 |

24 | pageVeto Hook

25 | =============

26 |

27 | .. deprecated:: 11.0.0

28 | Removed in 13.0, please migrate to the PSR-14 Event :ref:`psr14-modify-skip-page-event`!

29 |

--------------------------------------------------------------------------------

/ext_emconf.php:

--------------------------------------------------------------------------------

1 | 'Site Crawler',

4 | 'description' => 'TYPO3 Crawler crawls the TYPO3 page tree. Used for cache warmup, indexing, publishing applications etc.',

5 | 'category' => 'module',

6 | 'state' => 'stable',

7 | 'uploadfolder' => 0,

8 | 'createDirs' => '',

9 | 'clearCacheOnLoad' => 0,

10 | 'author' => 'Tomas Norre Mikkelsen',

11 | 'author_email' => 'tomasnorre@gmail.com',

12 | 'author_company' => '',

13 | 'version' => '12.0.10',

14 | 'constraints' => [

15 | 'depends' => [

16 | 'php' => '8.1.0-8.99.99',

17 | 'typo3' => '12.4.0-13.4.99',

18 | ],

19 | 'conflicts' => [],

20 | 'suggests' => [],

21 | ]

22 | ];

23 |

--------------------------------------------------------------------------------

/Classes/Exception/NoIndexFoundException.php:

--------------------------------------------------------------------------------

1 |

9 | *

10 | * This file is part of the TYPO3 Crawler Extension.

11 | *

12 | * It is free software; you can redistribute it and/or modify it under

13 | * the terms of the GNU General Public License, either version 2

14 | * of the License, or any later version.

15 | *

16 | * For the full copyright and license information, please read the

17 | * LICENSE.txt file that was distributed with this source code.

18 | *

19 | * The TYPO3 project - inspiring people to share!

20 | */

21 |

22 | /**

23 | * @internal since v12.0.0

24 | */

25 | class NoIndexFoundException extends \Exception

26 | {

27 | }

28 |

--------------------------------------------------------------------------------

/Classes/EventListener/AfterQueueItemAddedEventListener.php:

--------------------------------------------------------------------------------

1 | getConnectionForTable(QueueRepository::TABLE_NAME)

17 | ->update(QueueRepository::TABLE_NAME, $event->getFieldArray(), [

18 | 'qid' => (int) $event->getQueueId(),

19 | ]);

20 | }

21 | }

22 |

--------------------------------------------------------------------------------

/Classes/Exception/CommandNotFoundException.php:

--------------------------------------------------------------------------------

1 |

9 | *

10 | * This file is part of the TYPO3 Crawler Extension.

11 | *

12 | * It is free software; you can redistribute it and/or modify it under

13 | * the terms of the GNU General Public License, either version 2

14 | * of the License, or any later version.

15 | *

16 | * For the full copyright and license information, please read the

17 | * LICENSE.txt file that was distributed with this source code.

18 | *

19 | * The TYPO3 project - inspiring people to share!

20 | */

21 |

22 | /**

23 | * @internal since v12.0.0

24 | */

25 | class CommandNotFoundException extends \Exception

26 | {

27 | }

28 |

--------------------------------------------------------------------------------

/Classes/Exception/ExtensionSettingsException.php:

--------------------------------------------------------------------------------

1 |

9 | *

10 | * This file is part of the TYPO3 Crawler Extension.

11 | *

12 | * It is free software; you can redistribute it and/or modify it under

13 | * the terms of the GNU General Public License, either version 2

14 | * of the License, or any later version.

15 | *

16 | * For the full copyright and license information, please read the

17 | * LICENSE.txt file that was distributed with this source code.

18 | *

19 | * The TYPO3 project - inspiring people to share!

20 | */

21 |

22 | /**

23 | * @internal since v12.0.0

24 | */

25 | class ExtensionSettingsException extends \Exception

26 | {

27 | }

28 |

--------------------------------------------------------------------------------

/Documentation/UseCases/CacheWarmup/_commands.bash:

--------------------------------------------------------------------------------

1 | # Flush the crawler queue first, so that only the important pages are crawled.

2 | $ vendor/bin/typo3 crawler:flushQueue all

3 |

4 | # Now fill the crawler queue.
 
5 | # This starts on page uid 1 with the deployment configuration and depth 99.
 
6 | # --mode exec crawls the pages instantly, so no secondary process is needed for that.

7 | $ vendor/bin/typo3 crawler:buildQueue 1 deployment --depth 99 --mode exec

8 |

9 | # Add the rest of the pages to the crawler queue and have them processed by the scheduler.
 
10 | # --mode queue is the default, but it is added here for visibility.
 
11 | # This assumes that you have a crawler configuration called default.

12 | $ vendor/bin/typo3 crawler:buildQueue 1 default --depth 99 --mode queue

13 |

--------------------------------------------------------------------------------

/Resources/Public/Icons/crawler_stop.svg:

--------------------------------------------------------------------------------

1 |

--------------------------------------------------------------------------------

/Documentation/ExecutingTheQueue/BuildingAndExecutingQueueRightAway(fromCli)/_output_buildQueue_6_default_mode_url.txt:

--------------------------------------------------------------------------------

1 | $ bin/typo3 crawler:buildQueue 6 default --depth 2 --mode url

2 | https://crawler-devbox.ddev.site/content-examples/overview

3 | https://crawler-devbox.ddev.site/content-examples/text/rich-text

4 | https://crawler-devbox.ddev.site/content-examples/text/headers

5 | https://crawler-devbox.ddev.site/content-examples/text/bullet-list

6 | https://crawler-devbox.ddev.site/content-examples/text/text-with-teaser

7 | https://crawler-devbox.ddev.site/content-examples/text/text-and-icon

8 | https://crawler-devbox.ddev.site/content-examples/text/text-in-columns

9 | https://crawler-devbox.ddev.site/content-examples/text/list-group

10 | https://crawler-devbox.ddev.site/content-examples/text/panel

11 |

--------------------------------------------------------------------------------

/ext_localconf.php:

--------------------------------------------------------------------------------

1 | isPackageActive('indexed_search')) {

13 | // Register with "indexed_search" extension

14 | $GLOBALS['TYPO3_CONF_VARS']['EXTCONF']['crawler']['procInstructions']['indexed_search'] = [

15 | 'key' => 'tx_indexedsearch_reindex',

16 | 'value' => 'Re-indexing'

17 | ];

18 | }

19 |

20 |

21 |

--------------------------------------------------------------------------------

/Classes/Exception/TimeStampException.php:

--------------------------------------------------------------------------------

1 |

9 | *

10 | * This file is part of the TYPO3 Crawler Extension.

11 | *

12 | * It is free software; you can redistribute it and/or modify it under

13 | * the terms of the GNU General Public License, either version 2

14 | * of the License, or any later version.

15 | *

16 | * For the full copyright and license information, please read the

17 | * LICENSE.txt file that was distributed with this source code.

18 | *

19 | * The TYPO3 project - inspiring people to share!

20 | */

21 |

22 | /**

23 | * @internal since v12.0.0

24 | * @deprecated since 12.0.5 will be removed in v14.x

25 | */

26 | class TimeStampException extends \Exception

27 | {

28 | }

29 |

--------------------------------------------------------------------------------

/Classes/Exception/CrawlerObjectException.php:

--------------------------------------------------------------------------------

1 |

9 | *

10 | * This file is part of the TYPO3 Crawler Extension.

11 | *

12 | * It is free software; you can redistribute it and/or modify it under

13 | * the terms of the GNU General Public License, either version 2

14 | * of the License, or any later version.

15 | *

16 | * For the full copyright and license information, please read the

17 | * LICENSE.txt file that was distributed with this source code.

18 | *

19 | * The TYPO3 project - inspiring people to share!

20 | */

21 |

22 | /**

23 | * @internal since v12.0.0

24 | * @deprecated since 12.0.5 will be removed in v14.x

25 | */

26 | class CrawlerObjectException extends \Exception

27 | {

28 | }

29 |

--------------------------------------------------------------------------------

/Classes/Helper/Sleeper/SleeperInterface.php:

--------------------------------------------------------------------------------

1 |

9 | *

10 | * This file is part of the TYPO3 Crawler Extension.

11 | *

12 | * It is free software; you can redistribute it and/or modify it under

13 | * the terms of the GNU General Public License, either version 2

14 | * of the License, or any later version.

15 | *

16 | * For the full copyright and license information, please read the

17 | * LICENSE.txt file that was distributed with this source code.

18 | *

19 | * The TYPO3 project - inspiring people to share!

20 | */

21 |

22 | /**

23 | * @internal since v12.0.0

24 | */

25 | interface SleeperInterface

26 | {

27 | public function sleep(int $seconds): void;

28 | }

29 |

--------------------------------------------------------------------------------

/Configuration/Icons.php:

--------------------------------------------------------------------------------

1 | [

9 | 'provider' => SvgIconProvider::class,

10 | 'source' => 'EXT:crawler/Resources/Public/Icons/crawler_configuration.svg',

11 | ],

12 | 'tx-crawler-start' => [

13 | 'provider' => SvgIconProvider::class,

14 | 'source' => 'EXT:crawler/Resources/Public/Icons/crawler_start.svg',

15 | ],

16 | 'tx-crawler-stop' => [

17 | 'provider' => SvgIconProvider::class,

18 | 'source' => 'EXT:crawler/Resources/Public/Icons/crawler_stop.svg',

19 | ],

20 | 'tx-crawler-icon' => [

21 | 'provider' => SvgIconProvider::class,

22 | 'source' => 'EXT:crawler/Resources/Public/Icons/Extension.svg',

23 | ],

24 | ];

25 |

--------------------------------------------------------------------------------

/Documentation/ExecutingTheQueue/Index.rst:

--------------------------------------------------------------------------------

1 | .. include:: /Includes.rst.txt

2 |

3 | .. _executing-the-queue-label:

4 |

5 | ===================

6 | Executing the queue

7 | ===================

8 |

9 | The idea of the queue is that a large number of tasks can be submitted
 
10 | to the queue and processed over a longer period of time. This is useful
 
11 | for several reasons:

12 |

13 | - To spread server load over time.

14 |

15 | - To time the requests for nightly processing.

16 |

17 | - To avoid PHP's `max_execution_time` cutting processing short
 
18 | after 30 seconds.

19 |

20 |

21 | .. toctree::

22 | :maxdepth: 5

23 | :titlesonly:

24 | :glob:

25 |

26 | RunningViaCommandController/Index

27 | ExecutingQueueWithCron-job/Index

28 | RunViaBackend/Index

29 | BuildingAndExecutingQueueRightAway(fromCli)/Index

30 |

--------------------------------------------------------------------------------

/Makefile:

--------------------------------------------------------------------------------

1 | .PHONY: help

2 | help: ## Displays this list of targets with descriptions

3 | @echo "The following commands are available:\n"

4 | @grep -E '^[a-zA-Z0-9_-]+:.*?## .*$$' $(MAKEFILE_LIST) | sort | awk 'BEGIN {FS = ":.*?## "}; {printf "\033[32m%-30s\033[0m %s\n", $$1, $$2}'

5 |

6 |

7 | .PHONY: docs

8 | docs: ## Generate project docs (from "Documentation" directory)

9 | mkdir -p Documentation-GENERATED-temp

10 | docker run --rm --pull always -v "$(shell pwd)":/project -t ghcr.io/typo3-documentation/render-guides:latest --config=Documentation

11 |

12 |

13 | .PHONY: test-docs

14 | test-docs: ## Test the documentation rendering

15 | mkdir -p Documentation-GENERATED-temp

16 | docker run --rm --pull always -v "$(shell pwd)":/project -t ghcr.io/typo3-documentation/render-guides:latest --config=Documentation --no-progress --minimal-test

17 |

--------------------------------------------------------------------------------

/Classes/Writer/FileWriter/CsvWriter/CsvWriterInterface.php:

--------------------------------------------------------------------------------

1 |

9 | *

10 | * This file is part of the TYPO3 Crawler Extension.

11 | *

12 | * It is free software; you can redistribute it and/or modify it under

13 | * the terms of the GNU General Public License, either version 2

14 | * of the License, or any later version.

15 | *

16 | * For the full copyright and license information, please read the

17 | * LICENSE.txt file that was distributed with this source code.

18 | *

19 | * The TYPO3 project - inspiring people to share!

20 | */

21 |

22 | /**

23 | * @internal since v12.0.0

24 | */

25 | interface CsvWriterInterface

26 | {

27 | public function arrayToCsv(array $records): string;

28 | }

29 |

--------------------------------------------------------------------------------

/Classes/Hooks/CrawlerHookInterface.php:

--------------------------------------------------------------------------------

1 |

9 | * (c) 2021- Tomas Norre Mikkelsen

10 | *

11 | * This file is part of the TYPO3 Crawler Extension.

12 | *

13 | * It is free software; you can redistribute it and/or modify it under

14 | * the terms of the GNU General Public License, either version 2

15 | * of the License, or any later version.

16 | *

17 | * For the full copyright and license information, please read the

18 | * LICENSE.txt file that was distributed with this source code.

19 | *

20 | * The TYPO3 project - inspiring people to share!

21 | */

22 |

23 | /**

24 | * @internal since v12.0.0

25 | */

26 | interface CrawlerHookInterface

27 | {

28 | public function crawler_init(): void;

29 | }

30 |

--------------------------------------------------------------------------------

/Classes/Helper/Sleeper/NullSleeper.php:

--------------------------------------------------------------------------------

1 |

9 | *

10 | * This file is part of the TYPO3 Crawler Extension.

11 | *

12 | * It is free software; you can redistribute it and/or modify it under

13 | * the terms of the GNU General Public License, either version 2

14 | * of the License, or any later version.

15 | *

16 | * For the full copyright and license information, please read the

17 | * LICENSE.txt file that was distributed with this source code.

18 | *

19 | * The TYPO3 project - inspiring people to share!

20 | */

21 |

22 | /*

23 | * @internal

24 | * @codeCoverageIgnore

25 | */

26 | final class NullSleeper implements SleeperInterface

27 | {

28 | #[\Override]

29 | public function sleep(int $seconds): void

30 | {

31 | }

32 | }

33 |

--------------------------------------------------------------------------------

/Classes/Helper/Sleeper/SystemSleeper.php:

--------------------------------------------------------------------------------

1 |

9 | *

10 | * This file is part of the TYPO3 Crawler Extension.

11 | *

12 | * It is free software; you can redistribute it and/or modify it under

13 | * the terms of the GNU General Public License, either version 2

14 | * of the License, or any later version.

15 | *

16 | * For the full copyright and license information, please read the

17 | * LICENSE.txt file that was distributed with this source code.

18 | *

19 | * The TYPO3 project - inspiring people to share!

20 | */

21 |

22 | /*

23 | * @internal

24 | */

25 | final class SystemSleeper implements SleeperInterface

26 | {

27 | #[\Override]

28 | public function sleep(int $seconds): void

29 | {

30 | \sleep($seconds);

31 | }

32 | }

33 |
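
Editor's sketch (not part of the repository): the two implementations above suggest that `SleeperInterface` exists so that waiting can be swapped out, for example in tests. The following minimal example illustrates that idea under stated assumptions. The interface is redeclared without a namespace so the snippet runs on its own; `RecordingSleeper` and `processItems()` are hypothetical names invented for illustration and do not exist in the extension.

```php
<?php

declare(strict_types=1);

// Copy of the interface shown above, redeclared without a namespace
// so that this sketch is self-contained.
interface SleeperInterface
{
    public function sleep(int $seconds): void;
}

// Hypothetical test double: records the requested pauses instead of waiting.
final class RecordingSleeper implements SleeperInterface
{
    /** @var int[] */
    public array $calls = [];

    public function sleep(int $seconds): void
    {
        $this->calls[] = $seconds;
    }
}

// Hypothetical consumer: pauses for $sleepTime seconds after every
// $sleepAfter processed items. $sleepAfter must be greater than zero.
function processItems(array $items, SleeperInterface $sleeper, int $sleepAfter, int $sleepTime): int
{
    $processed = 0;
    foreach ($items as $item) {
        $processed++;
        if ($processed % $sleepAfter === 0) {
            $sleeper->sleep($sleepTime);
        }
    }

    return $processed;
}

$sleeper = new RecordingSleeper();
$count = processItems(['a', 'b', 'c', 'd', 'e'], $sleeper, 2, 3);
```

With five items and a pause after every second item, the recording double is called twice and no real time passes. Passing a sleeper that calls `\sleep()` instead would make the same loop wait for real.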

--------------------------------------------------------------------------------

/Resources/Public/Icons/crawler_start.svg:

--------------------------------------------------------------------------------

1 |

--------------------------------------------------------------------------------

/Documentation/guides.xml:

--------------------------------------------------------------------------------

1 |

2 |

3 |

11 |

17 |

18 |

--------------------------------------------------------------------------------

/Documentation/Features/MultiprocessSupport/Index.rst:

--------------------------------------------------------------------------------

1 | .. include:: /Includes.rst.txt

2 |

3 | .. _multi-process:

4 |

5 | =====================

6 | Multi process support

7 | =====================

8 |

9 | If you want to optimize the crawling process for speed (instead of low
 
10 | server stress), for example because the machine is a dedicated staging
 
11 | machine, you should experiment with the multi-process features.

12 |

13 | In the extension settings you can set how many processes are allowed to

14 | run at the same time, how many queue entries a process should grab and

15 | how long a process is allowed to run. Then run one (or even more)

16 | crawling processes per minute. You'll be able to speed up the crawler quite a lot.

17 |

18 | Choose your settings carefully, though, as this puts additional load on the server.

19 |

20 | .. figure:: /Images/crawler_settings_processLimit.png

21 | :alt: Backend configuration: Processing

22 |

23 | Backend configuration: Processing

24 |

--------------------------------------------------------------------------------

/Classes/Process/ProcessManagerInterface.php:

--------------------------------------------------------------------------------

1 |

9 | *

10 | * This file is part of the TYPO3 Crawler Extension.

11 | *

12 | * It is free software; you can redistribute it and/or modify it under

13 | * the terms of the GNU General Public License, either version 2

14 | * of the License, or any later version.

15 | *

16 | * For the full copyright and license information, please read the

17 | * LICENSE.txt file that was distributed with this source code.

18 | *

19 | * The TYPO3 project - inspiring people to share!

20 | */

21 |

22 | /**

23 | * @codeCoverageIgnore

24 | * @internal since v12.0.10

25 | */

26 | interface ProcessManagerInterface

27 | {

28 | public function processExists(int $pid): bool;

29 |

30 | public function killProcess(int $pid): void;

31 |

32 | public function findDispatcherProcesses(): array;

33 | }

34 |

--------------------------------------------------------------------------------

/SECURITY.md:

--------------------------------------------------------------------------------

1 | # Security Policy

2 |

3 | ## Supported Versions

4 |

5 | | Release | TYPO3 | PHP | Fixes will contain |
 
6 | |---------|-----------|---------|--------------------|
 
7 | | 12.x.y | 12.4-13.3 | 8.1-8.4 | Features, Bugfixes, Security Updates, Since 12.0.6 TYPO3 13.4, Since 12.0.7 PHP 8.4 |
 
8 | | 11.x.y | 10.4-11.5 | 7.4-8.1 | Security Updates, Since 11.0.3 PHP 8.1 |
 
9 | | 10.x.y | 9.5-11.0 | 7.2-7.4 | Security Updates |
 
10 | | 9.x.y | 9.5-11.0 | 7.2-7.4 | As this version has the same requirements as 10.x.y, there will be no further releases of this version; please update instead. |
 
11 | | 8.x.y | | | Releases do not exist |
 
12 | | 7.x.y | | | Releases do not exist |
 
13 | | 6.x.y | 7.6-8.7 | 5.6-7.3 | Security Updates |

14 |

15 | ## Reporting a Vulnerability

16 |

17 | In case you find a security issue, please write an email to [tomasnorre@gmail.com](mailto:tomasnorre@gmail.com) or reach out to the [TYPO3 Security Team](https://typo3.org/community/teams/security).

18 |

--------------------------------------------------------------------------------

/Classes/Process/ProcessManagerFactory.php:

--------------------------------------------------------------------------------

1 |

7 | *

8 | * This file is part of the TYPO3 Crawler Extension.

9 | *

10 | * It is free software; you can redistribute it and/or modify it under

11 | * the terms of the GNU General Public License, either version 2

12 | * of the License, or any later version.

13 | *

14 | * For the full copyright and license information, please read the

15 | * LICENSE.txt file that was distributed with this source code.

16 | *

17 | * The TYPO3 project - inspiring people to share!

18 | */

19 |

20 | use TYPO3\CMS\Core\Core\Environment;

21 |

22 | /**

23 | * @internal since v12.0.10

24 | */

25 | class ProcessManagerFactory

26 | {

27 | public static function create(): ProcessManagerInterface

28 | {

29 | if (Environment::isWindows()) {

30 | return new WindowsProcessManager();

31 | }

32 |

33 | return new UnixProcessManager();

34 | }

35 | }

36 |

--------------------------------------------------------------------------------

/Classes/Event/AfterUrlCrawledEvent.php:

--------------------------------------------------------------------------------

1 |

9 | *

10 | * This file is part of the TYPO3 Crawler Extension.

11 | *

12 | * It is free software; you can redistribute it and/or modify it under

13 | * the terms of the GNU General Public License, either version 2

14 | * of the License, or any later version.

15 | *

16 | * For the full copyright and license information, please read the

17 | * LICENSE.txt file that was distributed with this source code.

18 | *

19 | * The TYPO3 project - inspiring people to share!

20 | */

21 |

22 | /**

23 | * @internal since v12.0.0

24 | */

25 | final class AfterUrlCrawledEvent

26 | {

27 | public function __construct(

28 | private readonly string $url,

29 | private readonly array $result

30 | ) {

31 | }

32 |

33 | public function getUrl(): string

34 | {

35 | return $this->url;

36 | }

37 |

38 | public function getResult(): array

39 | {

40 | return $this->result;

41 | }

42 | }

43 |
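
Editor's sketch (not part of the repository): the event above only exposes `getUrl()` and `getResult()`, so a consumer would typically be an invokable listener that reads those two values. The example below is a minimal illustration under stated assumptions. The event class is redeclared without a namespace so the snippet runs on its own, `CollectCrawledUrlsListener` is a hypothetical name, and the array passed as `$result` is placeholder data because the real structure of the result is not shown in this file. Note also that the class is marked `@internal`.

```php
<?php

declare(strict_types=1);

// Copy of the event class shown above, redeclared without a namespace
// so that this sketch is self-contained.
final class AfterUrlCrawledEvent
{
    public function __construct(
        private readonly string $url,
        private readonly array $result
    ) {
    }

    public function getUrl(): string
    {
        return $this->url;
    }

    public function getResult(): array
    {
        return $this->result;
    }
}

// Hypothetical listener: collects every crawled URL it is notified about.
final class CollectCrawledUrlsListener
{
    /** @var string[] */
    public array $urls = [];

    public function __invoke(AfterUrlCrawledEvent $event): void
    {
        $this->urls[] = $event->getUrl();
    }
}

// Simulate a dispatch by calling the listener directly.
// The result array is placeholder data, not the extension's real format.
$listener = new CollectCrawledUrlsListener();
$listener(new AfterUrlCrawledEvent('https://example.org/', ['placeholder' => true]));
```

In a TYPO3 installation the listener would not be called by hand. It would be registered as a service tagged `event.listener`, and the event dispatcher would invoke it.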

--------------------------------------------------------------------------------

/Classes/Event/AfterUrlAddedToQueueEvent.php:

--------------------------------------------------------------------------------

1 |

9 | *

10 | * This file is part of the TYPO3 Crawler Extension.

11 | *

12 | * It is free software; you can redistribute it and/or modify it under

13 | * the terms of the GNU General Public License, either version 2

14 | * of the License, or any later version.

15 | *

16 | * For the full copyright and license information, please read the

17 | * LICENSE.txt file that was distributed with this source code.

18 | *

19 | * The TYPO3 project - inspiring people to share!

20 | */

21 |

22 | /**

23 | * @internal since v12.0.0

24 | */

25 | final class AfterUrlAddedToQueueEvent

26 | {

27 | public function __construct(

28 | private readonly string $uid,

29 | private readonly array $fieldArray

30 | ) {

31 | }

32 |

33 | public function getUid(): string

34 | {

35 | return $this->uid;

36 | }

37 |

38 | public function getFieldArray(): array

39 | {

40 | return $this->fieldArray;

41 | }

42 | }

43 |

--------------------------------------------------------------------------------

/Documentation/ExecutingTheQueue/RunningViaCommandController/Index.rst:

--------------------------------------------------------------------------------

1 | .. include:: /Includes.rst.txt

2 |

3 | .. _command-controller:

4 |

5 | ==========================

6 | Run via command controller

7 | ==========================

8 |

9 | .. _command-controller-buildqueue:

10 |

11 | Create queue

12 | ------------

13 |

14 | .. code-block:: bash

15 | :caption: replace vendor/bin/typo3 with your own cli runner

16 |

17 | $ vendor/bin/typo3 crawler:buildQueue [--depth ] [--number ] [--mode ]

18 |

19 | .. _command-controller-processqueue:

20 |

21 | Run queue

22 | ---------

23 |

24 | .. code-block:: bash

25 | :caption: replace vendor/bin/typo3 with your own cli runner

26 |

27 | $ vendor/bin/typo3 crawler:processQueue [--amount ] [--sleeptime ] [--sleepafter ]

28 |

29 | .. _command-controller-flushqueue:

30 |

31 | Flush queue

32 | -----------

33 |

34 | .. code-block:: bash

35 | :caption: replace vendor/bin/typo3 with your own cli runner

36 |

37 | $ vendor/bin/typo3 crawler:flushQueue

38 |

--------------------------------------------------------------------------------

/Documentation/Index.rst:

--------------------------------------------------------------------------------

1 | .. include:: /Includes.rst.txt

2 |

3 | .. _start:

4 |

5 | ======================

6 | Site Crawler Extension

7 | ======================

8 |

9 | :Extension key:

10 | crawler

11 |

12 | :Package name:

13 | tomasnorre/crawler

14 |

15 | :Version:

16 | |release|

17 |

18 | :Language:

19 | en

20 |

21 | :Author:

22 | Tomas Norre Mikkelsen

23 |

24 | :Copyright:

25 | 2005-2021 AOE GmbH, since 2021 Tomas Norre Mikkelsen

26 |

27 | :License:

28 | This document is published under the `Open Content License

29 | `_.

30 |

31 | :Rendered:

32 | |today|

33 |

34 | ----

35 |

36 | Libraries and scripts for crawling the TYPO3 page tree. Used for re-caching, re-indexing, publishing applications etc.

37 |

38 | ----

39 |

40 | **Table of Contents:**

41 |

42 | .. toctree::

43 | :maxdepth: 2

44 | :titlesonly:

45 |

46 | Introduction/Index

47 | Configuration/Index

48 | ExecutingTheQueue/Index

49 | Scheduler/Index

50 | UseCases/Index

51 | Features/Index

52 | Troubleshooting/Index

53 | Links/Links

54 |

55 | .. toctree::

56 | :hidden:

57 |

58 | Sitemap

59 |

--------------------------------------------------------------------------------

/Classes/Event/InvokeQueueChangeEvent.php:

--------------------------------------------------------------------------------

1 |

9 | *

10 | * This file is part of the TYPO3 Crawler Extension.

11 | *

12 | * It is free software; you can redistribute it and/or modify it under

13 | * the terms of the GNU General Public License, either version 2

14 | * of the License, or any later version.

15 | *

16 | * For the full copyright and license information, please read the

17 | * LICENSE.txt file that was distributed with this source code.

18 | *

19 | * The TYPO3 project - inspiring people to share!

20 | */

21 |

22 | use AOE\Crawler\Domain\Model\Reason;

23 |

24 | /**

25 | * @internal since v12.0.0

26 | */

27 | final class InvokeQueueChangeEvent

28 | {

29 | public function __construct(

30 | private readonly Reason $reason

31 | ) {

32 | }

33 |

34 | public function getReasonDetailedText(): string

35 | {

36 | return $this->reason->getDetailText();

37 | }

38 |

39 | public function getReasonText(): string

40 | {

41 | return $this->reason->getReason();

42 | }

43 | }

44 |

--------------------------------------------------------------------------------

/Classes/Service/UserService.php:

--------------------------------------------------------------------------------

1 |

9 | *

10 | * This file is part of the TYPO3 Crawler Extension.

11 | *

12 | * It is free software; you can redistribute it and/or modify it under

13 | * the terms of the GNU General Public License, either version 2

14 | * of the License, or any later version.

15 | *

16 | * For the full copyright and license information, please read the

17 | * LICENSE.txt file that was distributed with this source code.

18 | *

19 | * The TYPO3 project - inspiring people to share!

20 | */

21 |

22 | use TYPO3\CMS\Core\Utility\GeneralUtility;

23 |

24 | /**

25 | * @internal since v9.2.5

26 | */

27 | class UserService

28 | {

29 | public static function hasGroupAccess(string $groupList, string $accessList): bool

30 | {

31 | if (empty($accessList)) {

32 | return true;

33 | }

34 | foreach (explode(',', $groupList) as $groupUid) {

35 | if (GeneralUtility::inList($accessList, $groupUid)) {

36 | return true;

37 | }

38 | }

39 | return false;

40 | }

41 | }

42 |
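
Editor's sketch (not part of the repository): the behaviour of `hasGroupAccess()` is easiest to see with a few inputs. The example below repeats the same control flow as a plain function so that it runs without TYPO3. The `inList()` helper is a stand-in for `GeneralUtility::inList()` and is assumed to match its comma-separated membership check. Both function names are local to this sketch.

```php
<?php

declare(strict_types=1);

// Stand-in for GeneralUtility::inList(): true if $item is one of the
// comma-separated values in $list.
function inList(string $list, string $item): bool
{
    return str_contains(',' . $list . ',', ',' . $item . ',');
}

// Same control flow as UserService::hasGroupAccess() above,
// using the stand-in instead of the TYPO3 utility.
function hasGroupAccess(string $groupList, string $accessList): bool
{
    if (empty($accessList)) {
        return true;
    }
    foreach (explode(',', $groupList) as $groupUid) {
        if (inList($accessList, $groupUid)) {
            return true;
        }
    }

    return false;
}

$open = hasGroupAccess('1,2', '');      // no restriction configured
$match = hasGroupAccess('1,2', '2,5');  // group 2 is in the access list
$denied = hasGroupAccess('1,2', '3,4'); // no overlap between the lists
$zero = hasGroupAccess('1', '0');       // empty('0') is true in PHP
```

The first two calls and the last call return `true`, and the third returns `false`. The last case is worth knowing: because PHP's `empty()` treats the string `'0'` as empty, an access list of `'0'` behaves like no restriction at all.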

--------------------------------------------------------------------------------

/Classes/Value/CrawlAction.php:

--------------------------------------------------------------------------------

1 |

9 | *

10 | * This file is part of the TYPO3 Crawler Extension.

11 | *

12 | * It is free software; you can redistribute it and/or modify it under

13 | * the terms of the GNU General Public License, either version 2

14 | * of the License, or any later version.

15 | *

16 | * For the full copyright and license information, please read the

17 | * LICENSE.txt file that was distributed with this source code.

18 | *

19 | * The TYPO3 project - inspiring people to share!

20 | */

21 |

22 | use Assert\Assert;

23 |

24 | /**

25 | * @internal since v9.2.5

26 | */

27 | final class CrawlAction implements \Stringable

28 | {

29 | private readonly string $crawlAction;

30 |

31 | public function __construct(string $crawlAction)

32 | {

33 | Assert::that($crawlAction)

34 | ->inArray(['start', 'log', 'multiprocess']);

35 |

36 | $this->crawlAction = $crawlAction;

37 | }

38 |

39 | #[\Override]

40 | public function __toString(): string

41 | {

42 | return $this->crawlAction;

43 | }

44 | }

45 |

--------------------------------------------------------------------------------

/Classes/Value/QueueFilter.php:

--------------------------------------------------------------------------------

1 |

9 | *

10 | * This file is part of the TYPO3 Crawler Extension.

11 | *

12 | * It is free software; you can redistribute it and/or modify it under

13 | * the terms of the GNU General Public License, either version 2

14 | * of the License, or any later version.

15 | *

16 | * For the full copyright and license information, please read the

17 | * LICENSE.txt file that was distributed with this source code.

18 | *

19 | * The TYPO3 project - inspiring people to share!

20 | */

21 |

22 | use Assert\Assert;

23 |

24 | /**

25 | * @internal since v9.2.5

26 | */

27 | class QueueFilter implements \Stringable

28 | {

29 | private readonly string $queueFilter;

30 |

31 | public function __construct(string $queueFilter = 'all')

32 | {

33 | Assert::that($queueFilter)

34 | ->inArray(['all', 'pending', 'finished']);

35 |

36 | $this->queueFilter = $queueFilter;

37 | }

38 |

39 | #[\Override]

40 | public function __toString(): string

41 | {

42 | return $this->queueFilter;

43 | }

44 | }

45 |

--------------------------------------------------------------------------------

/Resources/Private/Language/locallang_csh_tx_crawler_configuration.xlf:

--------------------------------------------------------------------------------

1 |

2 |

3 |

4 |

5 |

6 |

7 | Page Id the crawler will use for initializing the TSFE (required)

8 |

9 |

10 | When crawling a page directly from the TYPO3 backend, e.g. by using the "read" functionality of the "Crawler Log" module, the selected page id will be used to initialize the frontend rendering.

11 | Access to the selected page <strong>MUST NOT</strong> be restricted; crawling will fail otherwise.

12 |

13 |

14 |

15 |

16 |

--------------------------------------------------------------------------------

/Classes/Service/ProcessInstructionService.php:

--------------------------------------------------------------------------------

1 |

9 | *

10 | * This file is part of the TYPO3 Crawler Extension.

11 | *

12 | * It is free software; you can redistribute it and/or modify it under

13 | * the terms of the GNU General Public License, either version 2

14 | * of the License, or any later version.

15 | *

16 | * For the full copyright and license information, please read the

17 | * LICENSE.txt file that was distributed with this source code.

18 | *

19 | * The TYPO3 project - inspiring people to share!

20 | */

21 |

22 | use TYPO3\CMS\Core\Utility\GeneralUtility;

23 |

24 | /**

25 | * @internal since v11.0.3

26 | */

27 | class ProcessInstructionService

28 | {

29 | public function isAllowed(string $processInstruction, array $incoming): bool

30 | {

31 | if (empty($incoming)) {

32 | return true;

33 | }

34 |

35 | foreach ($incoming as $pi) {

36 | if (GeneralUtility::inList($processInstruction, $pi)) {

37 | return true;

38 | }

39 | }

40 | return false;

41 | }

42 | }

43 |

--------------------------------------------------------------------------------

/Classes/Event/ModifySkipPageEvent.php:

--------------------------------------------------------------------------------

1 |

9 | *

10 | * This file is part of the TYPO3 Crawler Extension.

11 | *

12 | * It is free software; you can redistribute it and/or modify it under

13 | * the terms of the GNU General Public License, either version 2

14 | * of the License, or any later version.

15 | *

16 | * For the full copyright and license information, please read the

17 | * LICENSE.txt file that was distributed with this source code.

18 | *

19 | * The TYPO3 project - inspiring people to share!

20 | */

21 |

22 | /**

23 | * @internal since v12.0.0

24 | */

25 | final class ModifySkipPageEvent

26 | {

27 | private false|string $skipped = false;

28 |

29 | public function __construct(

30 | private readonly array $pageRow

31 | ) {

32 | }

33 |

34 | public function isSkipped(): false|string

35 | {

36 | return $this->skipped;

37 | }

38 |

39 | public function setSkipped(false|string $skipped): void

40 | {

41 | $this->skipped = $skipped;

42 | }

43 |

44 | public function getPageRow(): array

45 | {

46 | return $this->pageRow;

47 | }

48 | }

49 |

--------------------------------------------------------------------------------

/Classes/Event/BeforeQueueItemAddedEvent.php:

--------------------------------------------------------------------------------

1 |

9 | *

10 | * This file is part of the TYPO3 Crawler Extension.

11 | *

12 | * It is free software; you can redistribute it and/or modify it under

13 | * the terms of the GNU General Public License, either version 2

14 | * of the License, or any later version.

15 | *

16 | * For the full copyright and license information, please read the

17 | * LICENSE.txt file that was distributed with this source code.

18 | *

19 | * The TYPO3 project - inspiring people to share!

20 | */

21 |

22 | /**

23 | * @internal since v12.0.0

24 | */

25 | final class BeforeQueueItemAddedEvent

26 | {

27 | public function __construct(

28 | private readonly int $queueId,

29 | private array $queueRecord

30 | ) {

31 | }

32 |

33 | public function getQueueId(): int

34 | {

35 | return $this->queueId;

36 | }

37 |

38 | public function getQueueRecord(): array

39 | {

40 | return $this->queueRecord;

41 | }

42 |

43 | public function setQueueRecord(array $queueRecord): void

44 | {

45 | $this->queueRecord = $queueRecord;

46 | }

47 | }

48 |

--------------------------------------------------------------------------------

/Documentation/ExecutingTheQueue/BuildingAndExecutingQueueRightAway(fromCli)/_output_buildQueue_6_default_mode_exec.txt:

--------------------------------------------------------------------------------

1 | $ bin/typo3 crawler:buildQueue 6 default --depth 2 --mode exec

2 | https://crawler-devbox.ddev.site/content-examples/overview

3 | https://crawler-devbox.ddev.site/content-examples/text/rich-text

4 | https://crawler-devbox.ddev.site/content-examples/text/headers

5 | https://crawler-devbox.ddev.site/content-examples/text/bullet-list

6 | https://crawler-devbox.ddev.site/content-examples/text/text-with-teaser

7 | https://crawler-devbox.ddev.site/content-examples/text/text-and-icon

8 | https://crawler-devbox.ddev.site/content-examples/text/text-in-columns

9 | https://crawler-devbox.ddev.site/content-examples/text/list-group

10 | https://crawler-devbox.ddev.site/content-examples/text/panel

11 | ...

12 | Processing

13 |

14 | https://crawler-devbox.ddev.site/content-examples/overview () =>

15 |

16 | OK:

17 | User Groups:

18 |

19 | https://crawler-devbox.ddev.site/content-examples/text/rich-text () =>

20 |

21 | OK:

22 | User Groups:

23 |

24 | https://crawler-devbox.ddev.site/content-examples/text/headers () =>

25 |

26 | OK:

27 | User Groups:

28 |

29 | https://crawler-devbox.ddev.site/content-examples/text/bullet-list () =>

30 |

31 | OK:

32 | User Groups:

33 | ...

34 |

--------------------------------------------------------------------------------

/Classes/Hooks/ProcessCleanUpHook.php:

--------------------------------------------------------------------------------

1 |

9 | * (c) 2021- Tomas Norre Mikkelsen

10 | *

11 | * This file is part of the TYPO3 Crawler Extension.

12 | *

13 | * It is free software; you can redistribute it and/or modify it under

14 | * the terms of the GNU General Public License, either version 2

15 | * of the License, or any later version.

16 | *

17 | * For the full copyright and license information, please read the

18 | * LICENSE.txt file that was distributed with this source code.

19 | *

20 | * The TYPO3 project - inspiring people to share!

21 | */

22 |

23 | use AOE\Crawler\Process\Cleaner\OldProcessCleaner;

24 | use AOE\Crawler\Process\Cleaner\OrphanProcessCleaner;

25 |

26 | /**

27 | * @internal since v9.2.5

28 | */

29 | class ProcessCleanUpHook implements CrawlerHookInterface

30 | {

31 | public function __construct(

32 | private readonly OrphanProcessCleaner $orphanCleaner,

33 | private readonly OldProcessCleaner $oldCleaner

34 | ) {

35 | }

36 |

37 | #[\Override]

38 | public function crawler_init(): void

39 | {

40 | $this->orphanCleaner->clean();

41 | $this->oldCleaner->clean();

42 | }

43 | }

44 |

--------------------------------------------------------------------------------

/Classes/Event/AfterQueueItemAddedEvent.php:

--------------------------------------------------------------------------------

1 |

9 | *

10 | * This file is part of the TYPO3 Crawler Extension.

11 | *

12 | * It is free software; you can redistribute it and/or modify it under

13 | * the terms of the GNU General Public License, either version 2

14 | * of the License, or any later version.

15 | *

16 | * For the full copyright and license information, please read the

17 | * LICENSE.txt file that was distributed with this source code.

18 | *

19 | * The TYPO3 project - inspiring people to share!

20 | */

21 |

22 | /**

23 | * @internal since v12.0.0

24 | */

25 | final class AfterQueueItemAddedEvent

26 | {

27 | /**

28 | * @param int|string $queueId

29 | */

30 | public function __construct(

31 | private $queueId,

32 | private array $fieldArray

33 | ) {

34 | }

35 |

36 | public function getQueueId(): int|string

37 | {

38 | return $this->queueId;

39 | }

40 |

41 | public function getFieldArray(): array

42 | {

43 | return $this->fieldArray;

44 | }

45 |

46 | public function setFieldArray(array $fieldArray): void

47 | {

48 | $this->fieldArray = $fieldArray;

49 | }

50 | }

51 |

--------------------------------------------------------------------------------

/Classes/CrawlStrategy/CrawlStrategyFactory.php:

--------------------------------------------------------------------------------

1 | configurationProvider = $configurationProvider ?? GeneralUtility::makeInstance(

20 | ExtensionConfigurationProvider::class

21 | );

22 | }

23 |

24 | public function create(): CrawlStrategyInterface

25 | {

26 | $extensionSettings = $this->configurationProvider->getExtensionConfiguration();

27 | if ($extensionSettings['makeDirectRequests'] ?? false) {

28 | /** @var CrawlStrategyInterface $instance */

29 | $instance = GeneralUtility::makeInstance(SubProcessExecutionStrategy::class, $this->configurationProvider);

30 | } else {

31 | $instance = GeneralUtility::makeInstance(GuzzleExecutionStrategy::class, $this->configurationProvider);

32 | }

33 |

34 | return $instance;

35 | }

36 | }

37 |

--------------------------------------------------------------------------------

/Documentation/ExecutingTheQueue/BuildingAndExecutingQueueRightAway(fromCli)/_output_buildQueue_6_default.txt:

--------------------------------------------------------------------------------

1 | 38 entries found for processing. (Use "mode" to decide action):

2 |

3 | [10-04-20 10:35] https://crawler-devbox.ddev.site/content-examples/overview

4 | [10-04-20 10:35] https://crawler-devbox.ddev.site/content-examples/text/rich-text

5 | [10-04-20 10:35] https://crawler-devbox.ddev.site/content-examples/text/headers

6 | [10-04-20 10:35] https://crawler-devbox.ddev.site/content-examples/text/bullet-list

7 | [10-04-20 10:35] https://crawler-devbox.ddev.site/content-examples/text/text-with-teaser

8 | [10-04-20 10:35] https://crawler-devbox.ddev.site/content-examples/text/text-and-icon

9 | [10-04-20 10:35] https://crawler-devbox.ddev.site/content-examples/text/text-in-columns

10 | [10-04-20 10:35] https://crawler-devbox.ddev.site/content-examples/text/list-group

11 | [10-04-20 10:35] https://crawler-devbox.ddev.site/content-examples/text/panel

12 | [10-04-20 10:35] https://crawler-devbox.ddev.site/content-examples/text/table

13 | [10-04-20 10:35] https://crawler-devbox.ddev.site/content-examples/text/quote

14 | [10-04-20 10:35] https://crawler-devbox.ddev.site/content-examples/media/audio

15 | [10-04-20 10:35] https://crawler-devbox.ddev.site/content-examples/media/text-and-images

16 | ...

17 | [10-04-20 10:36] https://crawler-devbox.ddev.site/content-examples/and-more/frames

18 |

--------------------------------------------------------------------------------

/Classes/CrawlStrategy/CallbackExecutionStrategy.php:

--------------------------------------------------------------------------------

1 |

9 | *

10 | * This file is part of the TYPO3 Crawler Extension.

11 | *

12 | * It is free software; you can redistribute it and/or modify it under

13 | * the terms of the GNU General Public License, either version 2

14 | * of the License, or any later version.

15 | *

16 | * For the full copyright and license information, please read the

17 | * LICENSE.txt file that was distributed with this source code.

18 | *

19 | * The TYPO3 project - inspiring people to share!

20 | */

21 |

22 | use AOE\Crawler\Controller\CrawlerController;

23 | use TYPO3\CMS\Core\Utility\GeneralUtility;

24 |

25 | /**

26 | * Used for hooks (e.g. crawling external files)

27 | * @internal since v12.0.0

28 | */

29 | class CallbackExecutionStrategy

30 | {

31 | /**

32 | * In the future, the callback should implement an interface.

33 | * @template T of object

34 | * @param class-string $callbackClassName

35 | */

36 | public function fetchByCallback(string $callbackClassName, array $parameters, CrawlerController $crawlerController)

37 | {

38 | // Calling custom object

39 | $callBackObj = GeneralUtility::makeInstance($callbackClassName);

40 | return $callBackObj->crawler_execute($parameters, $crawlerController);

41 | }

42 | }

43 |

--------------------------------------------------------------------------------

/Documentation/Features/PollableProcessingInstructions/Index.rst:

--------------------------------------------------------------------------------

1 | .. include:: /Includes.rst.txt

2 |

3 | .. _pollable-processing:

4 |

5 | ================================

6 | Pollable processing instructions

7 | ================================

8 |

9 | Some processing instructions are never executed on the "client side"

10 | (the TYPO3 frontend that is called by the crawler). This happens for

11 | example if you try to staticpub a page containing non-cacheable

12 | elements. The bad thing about this is that staticpub doesn't have

13 | any chance to tell that something went wrong and why. That's why we

14 | introduced the "pollable processing instructions" feature. You can

15 | define in the :file:`ext_localconf.php` file of your extension that this

16 | extension should be "pollable" by adding the following line:

17 |

18 | .. code-block:: php

19 | :caption: packages/my_extension/ext_localconf.php

20 |

21 | $GLOBALS['TYPO3_CONF_VARS']['EXTCONF']['crawler']['pollSuccess'][] = 'tx_staticpub';

22 |

23 | In this case the crawler expects the extension to actively report that

24 | everything was ok, and assumes that something went wrong (and displays

25 | this in the log) if no "success message" was found.

26 |

27 | In your extension, then simply write your "ok" status by calling this:

28 |

29 | .. code-block:: php

30 | :caption: packages/my_extension/ext_localconf.php

31 |

32 | $GLOBALS['TSFE']->applicationData['tx_crawler']['success']['tx_staticpub'] = true;
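
The check described above can be pictured with a small sketch. It is not the
crawler's actual implementation: the function name
``findMissingSuccessMessages`` and both of its parameters are hypothetical and
only illustrate the idea that every key registered in ``pollSuccess`` must
have a matching ``true`` entry in the reported ``success`` array.

.. code-block:: php

    <?php

    declare(strict_types=1);

    /**
     * Hypothetical helper, not part of the crawler API.
     *
     * @param string[] $pollable keys registered as "pollable"
     * @param array<string, mixed> $reported the "success" array written by extensions
     * @return string[] keys for which no success message was found
     */
    function findMissingSuccessMessages(array $pollable, array $reported): array
    {
        // Keep only the keys that did not explicitly report true.
        return array_values(array_filter(
            $pollable,
            static fn (string $key): bool => ($reported[$key] ?? false) !== true
        ));
    }

    // Assumed sample data: tx_staticpub is pollable but reported nothing.
    $missing = findMissingSuccessMessages(['tx_staticpub'], []);
    foreach ($missing as $key) {
        echo 'No success message found for ' . $key . PHP_EOL;
    }

Under these assumptions, an extension that sets its ``success`` entry to
``true`` is left out of the returned list, while one that stays silent is
treated as failed.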

33 |

34 |

--------------------------------------------------------------------------------

/Classes/Process/WindowsProcessManager.php:

--------------------------------------------------------------------------------

1 |

9 | *

10 | * This file is part of the TYPO3 Crawler Extension.

11 | *

12 | * It is free software; you can redistribute it and/or modify it under

13 | * the terms of the GNU General Public License, either version 2

14 | * of the License, or any later version.

15 | *

16 | * For the full copyright and license information, please read the

17 | * LICENSE.txt file that was distributed with this source code.

18 | *

19 | * The TYPO3 project - inspiring people to share!

20 | */

21 |

22 | /**

23 | * @codeCoverageIgnore

24 | * @internal since v12.0.10

25 | */

26 | class WindowsProcessManager implements ProcessManagerInterface

27 | {

28 | #[\Override]

29 | public function processExists(int $pid): bool

30 | {

31 | exec('tasklist | find "' . $pid . '"', $returnArray);

32 | return count($returnArray) > 0 && stripos($returnArray[0], 'php') !== false;

33 | }

34 |

35 | #[\Override]

36 | public function killProcess(int $pid): void

37 | {

38 | exec('taskkill /PID ' . $pid);

39 | }

40 |

41 | #[\Override]

42 | public function findDispatcherProcesses(): array

43 | {

44 | $returnArray = [];

45 | exec('tasklist | find \'typo3 crawler:processQueue\'', $returnArray);

46 | return $returnArray;

47 | }

48 | }

49 |

--------------------------------------------------------------------------------

/Classes/CrawlStrategy/CrawlStrategyInterface.php:

--------------------------------------------------------------------------------

1 |

9 | *

10 | * This file is part of the TYPO3 Crawler Extension.

11 | *

12 | * It is free software; you can redistribute it and/or modify it under

13 | * the terms of the GNU General Public License, either version 2

14 | * of the License, or any later version.

15 | *

16 | * For the full copyright and license information, please read the

17 | * LICENSE.txt file that was distributed with this source code.

18 | *

19 | * The TYPO3 project - inspiring people to share!

20 | */

21 |

22 | use Psr\Http\Message\UriInterface;

23 |

24 | /**

25 | * @internal since v12.0.0

26 | */

27 | interface CrawlStrategyInterface

28 | {

29 | /**

30 | * Fetch the given URL and return its textual response

31 | *

32 | * @return array|false "false" on errors without explanation.

33 | * Array may contain the following optional keys:

34 | * - errorlog: array of string error messages

35 | * - content: HTML content (string)

36 | * - running: bool

37 | * - parameters: array

38 | * - log: array of strings

39 | * - vars: array

40 | */

41 | public function fetchUrlContents(UriInterface $url, string $crawlerId);

42 | }

43 |

--------------------------------------------------------------------------------

/Classes/Utility/HookUtility.php:

--------------------------------------------------------------------------------

1 |

9 | *

10 | * This file is part of the TYPO3 Crawler Extension.

11 | *

12 | * It is free software; you can redistribute it and/or modify it under

13 | * the terms of the GNU General Public License, either version 2

14 | * of the License, or any later version.

15 | *

16 | * For the full copyright and license information, please read the

17 | * LICENSE.txt file that was distributed with this source code.

18 | *

19 | * The TYPO3 project - inspiring people to share!

20 | */

21 |

22 | use AOE\Crawler\Hooks\ProcessCleanUpHook;

23 |

24 | /**

25 | * @codeCoverageIgnore

26 | * @internal since v9.2.5

27 | */

28 | class HookUtility

29 | {

30 | /**

31 | * Registers hooks

32 | *

33 | * @param string $extKey

34 | */

35 | public static function registerHooks($extKey): void

36 | {

37 | // Activating Crawler cli_hooks

38 | $GLOBALS['TYPO3_CONF_VARS']['EXTCONF'][$extKey]['cli_hooks'][] =

39 | ProcessCleanUpHook::class;

40 |

41 | // Activating refresh hooks

42 | $GLOBALS['TYPO3_CONF_VARS']['EXTCONF'][$extKey]['refresh_hooks'][] =

43 | ProcessCleanUpHook::class;

44 |

45 | $GLOBALS['TYPO3_CONF_VARS']['SC_OPTIONS']['t3lib/class.t3lib_tcemain.php']['clearPageCacheEval'][] =

46 | "AOE\Crawler\Hooks\DataHandlerHook->addFlushedPagesToCrawlerQueue";

47 | }

48 | }

49 |

--------------------------------------------------------------------------------

/Resources/Public/Icons/Extension.svg:

--------------------------------------------------------------------------------

1 |

--------------------------------------------------------------------------------

/Resources/Public/Icons/crawler_configuration.svg:

--------------------------------------------------------------------------------

1 |

--------------------------------------------------------------------------------

/Classes/Writer/FileWriter/CsvWriter/CrawlerCsvWriter.php:

--------------------------------------------------------------------------------

1 |

9 | *

10 | * This file is part of the TYPO3 Crawler Extension.

11 | *

12 | * It is free software; you can redistribute it and/or modify it under

13 | * the terms of the GNU General Public License, either version 2

14 | * of the License, or any later version.

15 | *

16 | * For the full copyright and license information, please read the

17 | * LICENSE.txt file that was distributed with this source code.

18 | *

19 | * The TYPO3 project - inspiring people to share!

20 | */

21 |

22 | use TYPO3\CMS\Core\Utility\CsvUtility;

23 |

24 | /**

25 | * @internal since v9.2.5

26 | */

27 | final class CrawlerCsvWriter implements CsvWriterInterface

28 | {

29 | private const CARRIAGE_RETURN = 13;

30 | private const LINE_FEED = 10;

31 |

32 | #[\Override]

33 | public function arrayToCsv(array $records): string

34 | {

35 | $csvLines = [];

36 | reset($records);

37 |

38 | $csvLines[] = $this->getRowHeaders($records);

39 | foreach ($records as $row) {

40 | $csvLines[] = CsvUtility::csvValues($row);

41 | }

42 |

43 | return implode(chr(self::CARRIAGE_RETURN) . chr(self::LINE_FEED), $csvLines);

44 | }

45 |

46 | private function getRowHeaders(array $lines): string

47 | {

48 | $fieldNames = array_map(strval(...), array_keys(current($lines)));

49 | return CsvUtility::csvValues($fieldNames);

50 | }

51 | }

52 |

--------------------------------------------------------------------------------

/Documentation/Features/AutomaticAddPagesToQueue/Index.rst:

--------------------------------------------------------------------------------

1 | .. include:: /Includes.rst.txt

2 |

3 | .. _add-to-queue:

4 |

5 | ============================

6 | Automatic add pages to Queue

7 | ============================

8 |

9 | .. versionadded:: 9.1.0

10 |

11 | .. _add-to-queue-edit:

12 |

13 | Edit Pages

14 | ----------

15 |

16 | With this feature, pages are automatically added to the crawler queue

17 | when you edit content on the page. Pages edited within a workspace are

18 | not added to the queue until they are published.

19 |

20 | This functionality has the advantage that you do not need to keep track

21 | of which pages you have edited; they are handled automatically by the next crawler

22 | process task, see :ref:`executing-the-queue-label`. This ensures that

23 | your cache or e.g. Search Index is always up to date and the end-users will see

24 | the most current content as soon as possible.

25 |

26 | .. _add-to-queue-cache:

27 |

28 | Clear Page Single Cache

29 | -----------------------

30 |

31 | As the edit and clear page cache functions use the same DataHandler hooks,

32 | we get an additional feature for free. When you clear the page cache for a specific

33 | page, it is also added automatically to the crawler queue. Again, this will

34 | be processed during the next crawler process.

35 |

36 | .. figure:: /Images/backend_clear_cache.png

37 | :alt: Clearing the page cache

38 |

39 | Clearing the page cache

40 |

41 | .. figure:: /Images/backend_clear_cache_queue.png

42 | :alt: Page is added to the crawler queue

43 |

44 | Page is added to the crawler queue

45 |

--------------------------------------------------------------------------------

/Resources/Private/Language/da.locallang_csh_tx_crawler_configuration.xlf:

--------------------------------------------------------------------------------

1 |

2 |

3 |

4 |

5 |

6 |

7 | Page Id the crawler will use for initializing the TSFE (required)

8 | Side Id som crawleren vil bruge for at indlæse TSFE (påkrævet)

9 |

10 |

11 | When crawling a page directly from the TYPO3 backend, e.g. by using the "read" functionality of the "Crawler Log" module, the selected page id will be used to initialize the frontend rendering.

12 | Access to the selected page <strong>MUST NOT</strong> be restricted; crawling will fail otherwise.

13 | Når en side crawles direkte fra TYPO3 Backend. fx. ved at bruge "læs" funktionaliteten i "Crawler Log" modulet, bruges den valgte siden til at initialisere frontend renderingen. Adgang til den valgte side <strong>MÅ IKKE</strong> være begrænset, i så fald vil crawlingen fejle.

14 |

15 |

16 |

17 |

--------------------------------------------------------------------------------

/Classes/Process/UnixProcessManager.php:

--------------------------------------------------------------------------------

1 |

9 | *

10 | * This file is part of the TYPO3 Crawler Extension.

11 | *

12 | * It is free software; you can redistribute it and/or modify it under

13 | * the terms of the GNU General Public License, either version 2

14 | * of the License, or any later version.

15 | *

16 | * For the full copyright and license information, please read the

17 | * LICENSE.txt file that was distributed with this source code.

18 | *

19 | * The TYPO3 project - inspiring people to share!

20 | */

21 |

22 | /**

23 | * @codeCoverageIgnore

24 | * @internal since v12.0.10

25 | */

26 | class UnixProcessManager implements ProcessManagerInterface

27 | {

28 | #[\Override]

29 | public function processExists(int $pid): bool

30 | {

31 | return file_exists('/proc/' . $pid);

32 | }

33 |

34 | #[\Override]

35 | public function killProcess(int $pid): void

36 | {

37 | posix_kill($pid, 9);

38 | }

39 |

40 | #[\Override]

41 | public function findDispatcherProcesses(): array

42 | {

43 | $returnArray = [];

44 | if (exec('which ps')) {

45 | // ps command is defined

46 | exec("ps aux | grep 'typo3 crawler:processQueue'", $returnArray);

47 | } else {

48 | trigger_error(

49 | 'Crawler is unable to locate the ps command to clean up orphaned crawler processes.',

50 | E_USER_WARNING

51 | );

52 | }

53 |

54 | return $returnArray;

55 | }

56 | }

57 |

--------------------------------------------------------------------------------

/Resources/Private/Language/af.locallang_csh_tx_crawler_configuration.xlf:

--------------------------------------------------------------------------------

1 |

2 |

3 |

4 |

5 |

6 |

7 | Page Id the crawler will use for initializing the TSFE (required)

8 | Page Id the crawler will use for initializing the TSFE (required)

9 |

10 |

11 | When crawling a page directly from the TYPO3 backend, e.g. by using the "read" functionality of the "Crawler Log" module, the selected page id will be used to initialize the frontend rendering.

12 | Access to the selected page <strong>MUST NOT</strong> be restricted; crawling will fail otherwise.

13 | When crawling a page directly from the TYPO3 backend, e.g. by using the "read" functionality of the "Crawler Log" module, the selected page id will be used to initialize the frontend rendering.

14 | Access to the selected page <strong>MUST NOT</strong> be restricted; crawling will fail otherwise.

15 |

16 |

17 |

18 |

--------------------------------------------------------------------------------

/Resources/Private/Language/ar.locallang_csh_tx_crawler_configuration.xlf:

--------------------------------------------------------------------------------

1 |

2 |

3 |

4 |

5 |

6 |

7 | Page Id the crawler will use for initializing the TSFE (required)

8 | Page Id the crawler will use for initializing the TSFE (required)

9 |

10 |

11 | When crawling a page directly from the TYPO3 backend, e.g. by using the "read" functionality of the "Crawler Log" module, the selected page id will be used to initialize the frontend rendering.

12 | Access to the selected page <strong>MUST NOT</strong> be restricted; crawling will fail otherwise.

13 | When crawling a page directly from the TYPO3 backend, e.g. by using the "read" functionality of the "Crawler Log" module, the selected page id will be used to initialize the frontend rendering.

14 | Access to the selected page <strong>MUST NOT</strong> be restricted; crawling will fail otherwise.

15 |

16 |

17 |

18 |

--------------------------------------------------------------------------------

/Resources/Private/Language/ca.locallang_csh_tx_crawler_configuration.xlf:

--------------------------------------------------------------------------------

1 |

2 |

3 |

4 |

5 |

6 |

7 | Page Id the crawler will use for initializing the TSFE (required)

8 | Page Id the crawler will use for initializing the TSFE (required)

9 |

10 |

11 | When crawling a page directly from the TYPO3 backend, e.g. by using the "read" functionality of the "Crawler Log" module, the selected page id will be used to initialize the frontend rendering.

12 | Access to the selected page <strong>MUST NOT</strong> be restricted; crawling will fail otherwise.

13 | When crawling a page directly from the TYPO3 backend, e.g. by using the "read" functionality of the "Crawler Log" module, the selected page id will be used to initialize the frontend rendering.

14 | Access to the selected page <strong>MUST NOT</strong> be restricted; crawling will fail otherwise.

15 |

16 |

17 |

18 |

--------------------------------------------------------------------------------

/Resources/Private/Language/cs.locallang_csh_tx_crawler_configuration.xlf:

--------------------------------------------------------------------------------

1 |

2 |

3 |

4 |

5 |

6 |

7 | Page Id the crawler will use for initializing the TSFE (required)

8 | Page Id the crawler will use for initializing the TSFE (required)

9 |

10 |

11 | When crawling a page directly from the TYPO3 backend, e.g. by using the "read" functionality of the "Crawler Log" module, the selected page id will be used to initialize the frontend rendering.

12 | Access to the selected page <strong>MUST NOT</strong> be restricted; crawling will fail otherwise.

13 | When crawling a page directly from the TYPO3 backend, e.g. by using the "read" functionality of the "Crawler Log" module, the selected page id will be used to initialize the frontend rendering.

14 | Access to the selected page <strong>MUST NOT</strong> be restricted; crawling will fail otherwise.

15 |

16 |

17 |

18 |

--------------------------------------------------------------------------------

/Resources/Private/Language/el.locallang_csh_tx_crawler_configuration.xlf:

--------------------------------------------------------------------------------

1 |

2 |

3 |

4 |

5 |

6 |

7 | Page Id the crawler will use for initializing the TSFE (required)

8 | Page Id the crawler will use for initializing the TSFE (required)

9 |

10 |

11 | When crawling a page directly from the TYPO3 backend, e.g. by using the "read" functionality of the "Crawler Log" module, the selected page id will be used to initialize the frontend rendering.

12 | Access to the selected page <strong>MUST NOT</strong> be restricted; crawling will fail otherwise.

13 | When crawling a page directly from the TYPO3 backend, e.g. by using the "read" functionality of the "Crawler Log" module, the selected page id will be used to initialize the frontend rendering.

14 | Access to the selected page <strong>MUST NOT</strong> be restricted; crawling will fail otherwise.

15 |

16 |

17 |

18 |

--------------------------------------------------------------------------------

/Resources/Private/Language/fi.locallang_csh_tx_crawler_configuration.xlf:

--------------------------------------------------------------------------------

1 |

2 |

3 |

4 |

5 |

6 |

7 | Page Id the crawler will use for initializing the TSFE (required)

8 | Page Id the crawler will use for initializing the TSFE (required)

9 |

10 |

11 | When crawling a page directly from the TYPO3 backend, e.g. by using the "read" functionality of the "Crawler Log" module, the selected page id will be used to initialize the frontend rendering.

12 | Access to the selected page <strong>MUST NOT</strong> be restricted; crawling will fail otherwise.

13 | When crawling a page directly from the TYPO3 backend, e.g. by using the "read" functionality of the "Crawler Log" module, the selected page id will be used to initialize the frontend rendering.

14 | Access to the selected page <strong>MUST NOT</strong> be restricted; crawling will fail otherwise.

15 |

16 |

17 |

18 |

--------------------------------------------------------------------------------

/Resources/Private/Language/fr.locallang_csh_tx_crawler_configuration.xlf:

--------------------------------------------------------------------------------

1 |

2 |

3 |

4 |

5 |

6 |

7 | Page Id the crawler will use for initializing the TSFE (required)

8 | Page Id the crawler will use for initializing the TSFE (required)

9 |

10 |

11 | When crawling a page directly from the TYPO3 backend, e.g. by using the "read" functionality of the "Crawler Log" module, the selected page id will be used to initialize the frontend rendering.

12 | Access to the selected page <strong>MUST NOT</strong> be restricted; crawling will fail otherwise.

13 | When crawling a page directly from the TYPO3 backend, e.g. by using the "read" functionality of the "Crawler Log" module, the selected page id will be used to initialize the frontend rendering.

14 | Access to the selected page <strong>MUST NOT</strong> be restricted; crawling will fail otherwise.

15 |

16 |

17 |

18 |

--------------------------------------------------------------------------------

/Resources/Private/Language/he.locallang_csh_tx_crawler_configuration.xlf:

--------------------------------------------------------------------------------

1 |

2 |

3 |

4 |

5 |

6 |

7 | Page Id the crawler will use for initializing the TSFE (required)

8 | Page Id the crawler will use for initializing the TSFE (required)

9 |

10 |

11 | When crawling a page directly from the TYPO3 backend, e.g. by using the "read" functionality of the "Crawler Log" module, the selected page id will be used to initialize the frontend rendering.

12 | Access to the selected page <strong>MUST NOT</strong> be restricted; crawling will fail otherwise.

13 | When crawling a page directly from the TYPO3 backend, e.g. by using the "read" functionality of the "Crawler Log" module, the selected page id will be used to initialize the frontend rendering.

14 | Access to the selected page <strong>MUST NOT</strong> be restricted; crawling will fail otherwise.

15 |

16 |

17 |

18 |

--------------------------------------------------------------------------------

/Resources/Private/Language/hu.locallang_csh_tx_crawler_configuration.xlf:

--------------------------------------------------------------------------------

1 |

2 |

3 |

4 |

5 |

6 |

7 | Page Id the crawler will use for initializing the TSFE (required)

8 | Page Id the crawler will use for initializing the TSFE (required)

9 |

10 |

11 | When crawling a page directly from the TYPO3 backend, e.g. by using the "read" functionality of the "Crawler Log" module, the selected page id will be used to initialize the frontend rendering.

12 | Access to the selected page <strong>MUST NOT</strong> be restricted; crawling will fail otherwise.

13 | When crawling a page directly from the TYPO3 backend, e.g. by using the "read" functionality of the "Crawler Log" module, the selected page id will be used to initialize the frontend rendering.

14 | Access to the selected page <strong>MUST NOT</strong> be restricted; crawling will fail otherwise.

15 |

16 |

17 |

18 |

--------------------------------------------------------------------------------

/Resources/Private/Language/it.locallang_csh_tx_crawler_configuration.xlf:

--------------------------------------------------------------------------------

1 |

2 |

3 |

4 |

5 |

6 |

7 | Page Id the crawler will use for initializing the TSFE (required)

8 | Page Id the crawler will use for initializing the TSFE (required)

9 |

10 |

11 | When crawling a page directly from the TYPO3 backend, e.g. by using the "read" functionality of the "Crawler Log" module, the selected page id will be used to initialize the frontend rendering.

12 | Access to the selected page <strong>MUST NOT</strong> be restricted; crawling will fail otherwise.

13 | When crawling a page directly from the TYPO3 backend, e.g. by using the "read" functionality of the "Crawler Log" module, the selected page id will be used to initialize the frontend rendering.

14 | Access to the selected page <strong>MUST NOT</strong> be restricted; crawling will fail otherwise.

15 |

16 |

17 |

18 |

--------------------------------------------------------------------------------

/Resources/Private/Language/ja.locallang_csh_tx_crawler_configuration.xlf:

--------------------------------------------------------------------------------

1 |

2 |

3 |

4 |

5 |

6 |

7 | Page Id the crawler will use for initializing the TSFE (required)

8 | Page Id the crawler will use for initializing the TSFE (required)

9 |

10 |

11 | When crawling a page directly from the TYPO3 backend, e.g. by using the "read" functionality of the "Crawler Log" module, the selected page id will be used to initialize the frontend rendering.

12 | Access to the selected page <strong>MUST NOT</strong> be restricted; crawling will fail otherwise.

13 | When crawling a page directly from the TYPO3 backend, e.g. by using the "read" functionality of the "Crawler Log" module, the selected page id will be used to initialize the frontend rendering.

14 | Access to the selected page <strong>MUST NOT</strong> be restricted; crawling will fail otherwise.

15 |

16 |

17 |

18 |