├── .gitattributes
├── .gitignore
├── .gitmodules
├── LICENSE
├── README.md
├── docker-compose.yml
├── img_sgm
│   ├── Dockerfile
│   ├── completions
│   │   └── __DO_NOT_DELETE__
│   ├── config.json
│   ├── config_expample.xml
│   └── tasks.json
├── img_sgm_ml
│   ├── Dockerfile
│   ├── README.md
│   ├── __init__.py
│   ├── _wsgi.py
│   ├── api.py
│   ├── model
│   │   ├── __init__.py
│   │   ├── config.py
│   │   ├── dataset.py
│   │   ├── model.py
│   │   └── utils.py
│   ├── requirements.txt
│   ├── rle
│   │   ├── README.md
│   │   ├── __init__.py
│   │   ├── decode.py
│   │   ├── encode.py
│   │   ├── inputstream.py
│   │   ├── outputstream.py
│   │   └── test.py
│   ├── scripts
│   │   ├── load_completion.py
│   │   ├── resize.py
│   │   └── test_interference.py
│   ├── supervisord.conf
│   └── uwsgi.ini
├── out
│   └── _DO_NOT_DELETE_
└── rsc
    ├── Trainset.zip
    ├── checkpoints
    │   └── mask_rcnn_1610552427.313373.h5
    ├── demo.png
    └── user_interface.png

/.gitattributes:

--------------------------------------------------------------------------------

1 | *.h5 filter=lfs diff=lfs merge=lfs -text

2 | *.zip filter=lfs diff=lfs merge=lfs -text

3 |

--------------------------------------------------------------------------------

/.gitignore:

--------------------------------------------------------------------------------

1 |

2 | ### IntelliJ/PyCharm

3 | .idea/

4 |

5 | ### Project

6 | img_sgm/completions

7 | img_sgm/upload

8 | img_sgm/predictions

9 | rsc

10 | !./rsc/demo.png

11 | !./rsc/Trainset.zip

12 | !./rsc/checkpoints/mask_rcnn_1610552427.313373.h5

13 | img_sgm/config.xml

14 | img_sgm/source.json

15 | logs

16 | data

17 | out

18 | !_DO_NOT_DELETE_

19 | !img_sgm_ml/scripts

20 |

21 | # Created by .ignore support plugin (hsz.mobi)

22 | ### VirtualEnv template

23 | # Virtualenv

24 | # http://iamzed.com/2009/05/07/a-primer-on-virtualenv/

25 | .Python

26 | [Bb]in

27 | [Ii]nclude

28 | [Ll]ib

29 | [Ll]ib64

30 | [Ll]ocal

31 | [Ss]cripts

32 | pyvenv.cfg

33 | .venv

34 | pip-selfcheck.json

35 |

36 | ### Python template

37 | # Byte-compiled / optimized / DLL files

38 | __pycache__/

39 | *.py[cod]

40 | *$py.class

41 |

42 | # C extensions

43 | *.so

44 |

45 | # Distribution / packaging

46 | build/

47 | develop-eggs/

48 | dist/

49 | downloads/

50 | eggs/

51 | .eggs/

52 | lib/

53 | lib64/

54 | parts/

55 | sdist/

56 | var/

57 | wheels/

58 | share/python-wheels/

59 | *.egg-info/

60 | .installed.cfg

61 | *.egg

62 | MANIFEST

63 |

64 | # PyInstaller

65 | # Usually these files are written by a python script from a template

66 | # before PyInstaller builds the exe, so as to inject date/other infos into it.

67 | *.manifest

68 | *.spec

69 |

70 | # Installer logs

71 | pip-log.txt

72 | pip-delete-this-directory.txt

73 |

74 | # Unit test / coverage reports

75 | htmlcov/

76 | .tox/

77 | .nox/

78 | .coverage

79 | .coverage.*

80 | .cache

81 | nosetests.xml

82 | coverage.xml

83 | *.cover

84 | *.py,cover

85 | .hypothesis/

86 | .pytest_cache/

87 | cover/

88 |

89 | # Translations

90 | *.mo

91 | *.pot

92 |

93 | # Django stuff:

94 | *.log

95 | local_settings.py

96 | db.sqlite3

97 | db.sqlite3-journal

98 |

99 | # Flask stuff:

100 | instance/

101 | .webassets-cache

102 |

103 | # Scrapy stuff:

104 | .scrapy

105 |

106 | # Sphinx documentation

107 | docs/_build/

108 |

109 | # PyBuilder

110 | .pybuilder/

111 | target/

112 |

113 | # Jupyter Notebook

114 | .ipynb_checkpoints

115 |

116 | # IPython

117 | profile_default/

118 | ipython_config.py

119 |

120 | # pyenv

121 | # For a library or package, you might want to ignore these files since the code is

122 | # intended to run in multiple environments; otherwise, check them in:

123 | # .python-version

124 |

125 | # pipenv

126 | # According to pypa/pipenv#598, it is recommended to include Pipfile.lock in version control.

127 | # However, in case of collaboration, if having platform-specific dependencies or dependencies

128 | # having no cross-platform support, pipenv may install dependencies that don't work, or not

129 | # install all needed dependencies.

130 | #Pipfile.lock

131 |

132 | # PEP 582; used by e.g. github.com/David-OConnor/pyflow

133 | __pypackages__/

134 |

135 | # Celery stuff

136 | celerybeat-schedule

137 | celerybeat.pid

138 |

139 | # SageMath parsed files

140 | *.sage.py

141 |

142 | # Environments

143 | .env

144 | env/

145 | venv/

146 | ENV/

147 | env.bak/

148 | venv.bak/

149 |

150 | # Spyder project settings

151 | .spyderproject

152 | .spyproject

153 |

154 | # Rope project settings

155 | .ropeproject

156 |

157 | # mkdocs documentation

158 | /site

159 |

160 | # mypy

161 | .mypy_cache/

162 | .dmypy.json

163 | dmypy.json

164 |

165 | # Pyre type checker

166 | .pyre/

167 |

168 | # pytype static type analyzer

169 | .pytype/

170 |

171 | # Cython debug symbols

172 | cython_debug/

--------------------------------------------------------------------------------

/.gitmodules:

--------------------------------------------------------------------------------

1 | [submodule "img_sgm_ml/Mask_RCNN"]

2 | path = img_sgm_ml/Mask_RCNN

3 | url = https://github.com/matterport/Mask_RCNN.git

4 |

--------------------------------------------------------------------------------

/LICENSE:

--------------------------------------------------------------------------------

1 | Apache License

2 | Version 2.0, January 2004

3 | http://www.apache.org/licenses/

4 |

5 | TERMS AND CONDITIONS FOR USE, REPRODUCTION, AND DISTRIBUTION

6 |

7 | 1. Definitions.

8 |

9 | "License" shall mean the terms and conditions for use, reproduction,

10 | and distribution as defined by Sections 1 through 9 of this document.

11 |

12 | "Licensor" shall mean the copyright owner or entity authorized by

13 | the copyright owner that is granting the License.

14 |

15 | "Legal Entity" shall mean the union of the acting entity and all

16 | other entities that control, are controlled by, or are under common

17 | control with that entity. For the purposes of this definition,

18 | "control" means (i) the power, direct or indirect, to cause the

19 | direction or management of such entity, whether by contract or

20 | otherwise, or (ii) ownership of fifty percent (50%) or more of the

21 | outstanding shares, or (iii) beneficial ownership of such entity.

22 |

23 | "You" (or "Your") shall mean an individual or Legal Entity

24 | exercising permissions granted by this License.

25 |

26 | "Source" form shall mean the preferred form for making modifications,

27 | including but not limited to software source code, documentation

28 | source, and configuration files.

29 |

30 | "Object" form shall mean any form resulting from mechanical

31 | transformation or translation of a Source form, including but

32 | not limited to compiled object code, generated documentation,

33 | and conversions to other media types.

34 |

35 | "Work" shall mean the work of authorship, whether in Source or

36 | Object form, made available under the License, as indicated by a

37 | copyright notice that is included in or attached to the work

38 | (an example is provided in the Appendix below).

39 |

40 | "Derivative Works" shall mean any work, whether in Source or Object

41 | form, that is based on (or derived from) the Work and for which the

42 | editorial revisions, annotations, elaborations, or other modifications

43 | represent, as a whole, an original work of authorship. For the purposes

44 | of this License, Derivative Works shall not include works that remain

45 | separable from, or merely link (or bind by name) to the interfaces of,

46 | the Work and Derivative Works thereof.

47 |

48 | "Contribution" shall mean any work of authorship, including

49 | the original version of the Work and any modifications or additions

50 | to that Work or Derivative Works thereof, that is intentionally

51 | submitted to Licensor for inclusion in the Work by the copyright owner

52 | or by an individual or Legal Entity authorized to submit on behalf of

53 | the copyright owner. For the purposes of this definition, "submitted"

54 | means any form of electronic, verbal, or written communication sent

55 | to the Licensor or its representatives, including but not limited to

56 | communication on electronic mailing lists, source code control systems,

57 | and issue tracking systems that are managed by, or on behalf of, the

58 | Licensor for the purpose of discussing and improving the Work, but

59 | excluding communication that is conspicuously marked or otherwise

60 | designated in writing by the copyright owner as "Not a Contribution."

61 |

62 | "Contributor" shall mean Licensor and any individual or Legal Entity

63 | on behalf of whom a Contribution has been received by Licensor and

64 | subsequently incorporated within the Work.

65 |

66 | 2. Grant of Copyright License. Subject to the terms and conditions of

67 | this License, each Contributor hereby grants to You a perpetual,

68 | worldwide, non-exclusive, no-charge, royalty-free, irrevocable

69 | copyright license to reproduce, prepare Derivative Works of,

70 | publicly display, publicly perform, sublicense, and distribute the

71 | Work and such Derivative Works in Source or Object form.

72 |

73 | 3. Grant of Patent License. Subject to the terms and conditions of

74 | this License, each Contributor hereby grants to You a perpetual,

75 | worldwide, non-exclusive, no-charge, royalty-free, irrevocable

76 | (except as stated in this section) patent license to make, have made,

77 | use, offer to sell, sell, import, and otherwise transfer the Work,

78 | where such license applies only to those patent claims licensable

79 | by such Contributor that are necessarily infringed by their

80 | Contribution(s) alone or by combination of their Contribution(s)

81 | with the Work to which such Contribution(s) was submitted. If You

82 | institute patent litigation against any entity (including a

83 | cross-claim or counterclaim in a lawsuit) alleging that the Work

84 | or a Contribution incorporated within the Work constitutes direct

85 | or contributory patent infringement, then any patent licenses

86 | granted to You under this License for that Work shall terminate

87 | as of the date such litigation is filed.

88 |

89 | 4. Redistribution. You may reproduce and distribute copies of the

90 | Work or Derivative Works thereof in any medium, with or without

91 | modifications, and in Source or Object form, provided that You

92 | meet the following conditions:

93 |

94 | (a) You must give any other recipients of the Work or

95 | Derivative Works a copy of this License; and

96 |

97 | (b) You must cause any modified files to carry prominent notices

98 | stating that You changed the files; and

99 |

100 | (c) You must retain, in the Source form of any Derivative Works

101 | that You distribute, all copyright, patent, trademark, and

102 | attribution notices from the Source form of the Work,

103 | excluding those notices that do not pertain to any part of

104 | the Derivative Works; and

105 |

106 | (d) If the Work includes a "NOTICE" text file as part of its

107 | distribution, then any Derivative Works that You distribute must

108 | include a readable copy of the attribution notices contained

109 | within such NOTICE file, excluding those notices that do not

110 | pertain to any part of the Derivative Works, in at least one

111 | of the following places: within a NOTICE text file distributed

112 | as part of the Derivative Works; within the Source form or

113 | documentation, if provided along with the Derivative Works; or,

114 | within a display generated by the Derivative Works, if and

115 | wherever such third-party notices normally appear. The contents

116 | of the NOTICE file are for informational purposes only and

117 | do not modify the License. You may add Your own attribution

118 | notices within Derivative Works that You distribute, alongside

119 | or as an addendum to the NOTICE text from the Work, provided

120 | that such additional attribution notices cannot be construed

121 | as modifying the License.

122 |

123 | You may add Your own copyright statement to Your modifications and

124 | may provide additional or different license terms and conditions

125 | for use, reproduction, or distribution of Your modifications, or

126 | for any such Derivative Works as a whole, provided Your use,

127 | reproduction, and distribution of the Work otherwise complies with

128 | the conditions stated in this License.

129 |

130 | 5. Submission of Contributions. Unless You explicitly state otherwise,

131 | any Contribution intentionally submitted for inclusion in the Work

132 | by You to the Licensor shall be under the terms and conditions of

133 | this License, without any additional terms or conditions.

134 | Notwithstanding the above, nothing herein shall supersede or modify

135 | the terms of any separate license agreement you may have executed

136 | with Licensor regarding such Contributions.

137 |

138 | 6. Trademarks. This License does not grant permission to use the trade

139 | names, trademarks, service marks, or product names of the Licensor,

140 | except as required for reasonable and customary use in describing the

141 | origin of the Work and reproducing the content of the NOTICE file.

142 |

143 | 7. Disclaimer of Warranty. Unless required by applicable law or

144 | agreed to in writing, Licensor provides the Work (and each

145 | Contributor provides its Contributions) on an "AS IS" BASIS,

146 | WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or

147 | implied, including, without limitation, any warranties or conditions

148 | of TITLE, NON-INFRINGEMENT, MERCHANTABILITY, or FITNESS FOR A

149 | PARTICULAR PURPOSE. You are solely responsible for determining the

150 | appropriateness of using or redistributing the Work and assume any

151 | risks associated with Your exercise of permissions under this License.

152 |

153 | 8. Limitation of Liability. In no event and under no legal theory,

154 | whether in tort (including negligence), contract, or otherwise,

155 | unless required by applicable law (such as deliberate and grossly

156 | negligent acts) or agreed to in writing, shall any Contributor be

157 | liable to You for damages, including any direct, indirect, special,

158 | incidental, or consequential damages of any character arising as a

159 | result of this License or out of the use or inability to use the

160 | Work (including but not limited to damages for loss of goodwill,

161 | work stoppage, computer failure or malfunction, or any and all

162 | other commercial damages or losses), even if such Contributor

163 | has been advised of the possibility of such damages.

164 |

165 | 9. Accepting Warranty or Additional Liability. While redistributing

166 | the Work or Derivative Works thereof, You may choose to offer,

167 | and charge a fee for, acceptance of support, warranty, indemnity,

168 | or other liability obligations and/or rights consistent with this

169 | License. However, in accepting such obligations, You may act only

170 | on Your own behalf and on Your sole responsibility, not on behalf

171 | of any other Contributor, and only if You agree to indemnify,

172 | defend, and hold each Contributor harmless for any liability

173 | incurred by, or claims asserted against, such Contributor by reason

174 | of your accepting any such warranty or additional liability.

175 |

176 | END OF TERMS AND CONDITIONS

177 |

178 | APPENDIX: How to apply the Apache License to your work.

179 |

180 | To apply the Apache License to your work, attach the following

181 | boilerplate notice, with the fields enclosed by brackets "[]"

182 | replaced with your own identifying information. (Don't include

183 | the brackets!) The text should be enclosed in the appropriate

184 | comment syntax for the file format. We also recommend that a

185 | file or class name and description of purpose be included on the

186 | same "printed page" as the copyright notice for easier

187 | identification within third-party archives.

188 |

189 | Copyright 2020 Tristan Ratz

190 |

191 | Licensed under the Apache License, Version 2.0 (the "License");

192 | you may not use this file except in compliance with the License.

193 | You may obtain a copy of the License at

194 |

195 | http://www.apache.org/licenses/LICENSE-2.0

196 |

197 | Unless required by applicable law or agreed to in writing, software

198 | distributed under the License is distributed on an "AS IS" BASIS,

199 | WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

200 | See the License for the specific language governing permissions and

201 | limitations under the License.

202 |

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

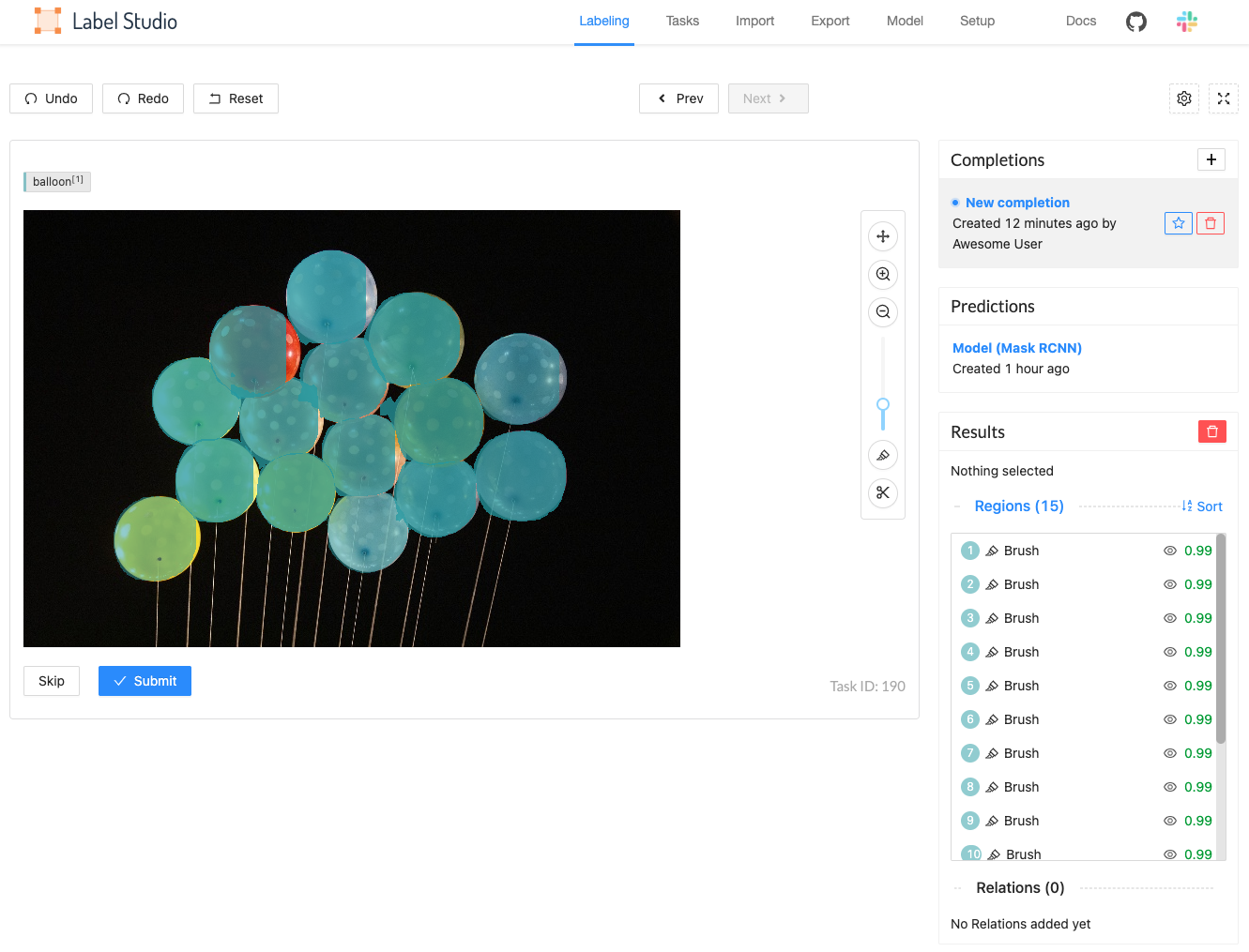

1 | # Image Segmentation Module for Label Studio

2 |

3 | This module was built to facilitate the creation of large image segmentation datasets with the help of artificial intelligence in Label Studio.

4 | Create image segmentations and get on-the-fly predictions for the dataset you're labeling.

5 |

6 | It was built with the help of Matterport's MaskRCNN implementation and is meant to help you label images.

7 | After you configure the program for your needs and label a certain number of images, the program should start to label images itself.

8 |

9 |

10 |

11 | ## Installation

12 |

13 | First clone the project

14 |

15 | ```bash

16 | git clone --recurse-submodules -j8 https://github.com/tristanratz/Interactive-Image-Segmentation.git

17 | ```

18 |

19 | Make sure you have docker and docker-compose installed to start the ml module.

20 |

21 | ## Run

22 |

23 | To start the application, change to the repository root (the folder containing ```docker-compose.yml```) and execute the following.

24 | ```bash

25 | docker-compose up -d # -d runs the containers detached (in the background)

26 | ```

27 | The ML-Image-Segmentation Application is now up and running. Open http://localhost:8080/

28 |

29 | Now you have to label about 70 images. After you are done, switch to the model tab in Label Studio and train the model.

30 | After it has finished training, you can continue labeling and should get predictions for your images.

31 |

32 | Let the pre-labeling begin...!

33 |

34 | ## Use with own data

35 |

36 | To define your own labels, open ```img_sgm_ml/model/config.py``` and enter them in the following format:

37 |

38 | ```python

39 | CLASSES = {

40 | 0: '__background__',

41 | 1: 'YOUR_FIRST_CLASS',

42 | 2: ...

43 | }

44 | ```

45 | Make sure that you reserve the first spot (index 0) for ```__background__```.

46 | After that, enter your class count (the number of your labels, +1 for the background).
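
As a rough sketch of the class-count step, the count can be derived from the mapping instead of being typed by hand. The labels `balloon` and `string` are made up for illustration, and the name `NUM_CLASSES` is an assumption (Matterport's `Config` uses an attribute of that name); check ```img_sgm_ml/model/config.py``` for the attribute this project actually reads.

```python
# Hypothetical example labels; replace them with your own.
CLASSES = {
    0: '__background__',
    1: 'balloon',
    2: 'string',
}

# The background entry is already part of the mapping, so len() yields
# "number of object classes + 1".
NUM_CLASSES = len(CLASSES)

# Sanity checks: background sits at index 0 and the ids are contiguous.
assert CLASSES[0] == '__background__'
assert sorted(CLASSES) == list(range(NUM_CLASSES))
```

Deriving the count this way keeps the two values from drifting apart when a label is added or removed.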

47 |

48 | Lastly add the images you want to label with the following command to the labeling tool:

49 |

50 | ```bash

51 | label-studio init -i ./upload/ --input-format image-dir

52 | ```

53 |

54 | or import them via the web interface (http://localhost:8080/import). Then (re)start the program.

55 | Now you can label your images and train the model under http://localhost:8080/model.

56 |

57 | #### Change of classes

58 |

59 | Make sure you delete the contents of the ```rsc/checkpoints``` directory after you decide to change the classes you train on.

60 | Otherwise the program will load the existing model and try to continue training it even if the classes do not match.
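
The cleanup could be scripted along these lines. This is a minimal sketch, not part of the project: the helper name `clear_checkpoints` is hypothetical, and it assumes the checkpoints are `.h5` files like the bundled one, so other files in the directory are left alone.

```python
from pathlib import Path


def clear_checkpoints(checkpoint_dir, pattern="*.h5"):
    """Delete files matching `pattern` in `checkpoint_dir`; return their names."""
    removed = []
    for path in sorted(Path(checkpoint_dir).glob(pattern)):
        if path.is_file():
            path.unlink()
            removed.append(path.name)
    return removed
```

Calling `clear_checkpoints("rsc/checkpoints")` from the repository root would remove the stored weights; back them up first if you may want them again.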

61 |

62 | ## Use your own model

63 |

64 | This project holds a complete implementation for communicating with Label Studio and predicting image segmentations with MaskRCNN.

65 | If you want to use a different model for image segmentation, you may have to rewrite or change the ```img_sgm_ml/model/model.py``` and

66 | ```img_sgm_ml/model/dataset.py``` classes.

67 |

68 | This project contains code to transform the Label Studio format into the Matterport/MaskRCNN format.

69 |

70 | ## Dataset and pretrained model

71 |

72 | Under ```rsc``` resides a dataset of balloon images. It is divided into three subsets, which were used to test this tool.

73 | The first subset consists of 70 labeled images of balloons, the second subset contains an additional 70 images of balloons to test semi-automatic labeling, and the last subset consists of 10 unrelated images.

74 | The model that resulted from the testing can be found under ```rsc/checkpoints```.

75 | It was trained for 5 epochs on the images of the first subset.

76 |

77 | ## Citation

78 | ```bibtex

79 | @misc{LS_IMG_SGM_MODULE,

80 | title={{Interactive Labeling}: Facilitating the creation of comprehensive datasets with artificial intelligence},

81 | url={https://github.com/tristanratz/Label-Studio-image-segmentation-ML-Module},

82 | note={Open source software available from https://github.com/tristanratz/Label-Studio-image-segmentation-ML-Module},

83 | author={

84 | Tristan Ratz

85 | },

86 | year={2020},

87 | }

88 | ```

89 |

90 | ## Disclaimer

91 |

92 | This project was created for my Bachelor Thesis "Facilitating the creation of comprehensive datasets with

93 | artificial intelligence" at the TU Darmstadt. (read it here)

94 |

95 | A special thanks to the Finanz Informatik Solutions Plus GmbH for the great support!

96 |

--------------------------------------------------------------------------------

/docker-compose.yml:

--------------------------------------------------------------------------------

1 | version: "3.5"

2 |

3 | services:

4 | redis:

5 | image: redis:alpine

6 | container_name: redis

7 | hostname: redis

8 | volumes:

9 | - "./data/redis:/data"

10 | expose:

11 | - 6379

12 | ml_modul:

13 | container_name: ml_modul

14 | build: img_sgm_ml

15 | environment:

16 | - MODEL_DIR=/data/models

17 | # - USECOCO=true comment if you dont want to use coco if no model is found

18 | - RQ_QUEUE_NAME=default

19 | - REDIS_HOST=redis

20 | - REDIS_PORT=6379

21 | - DOCKER=TRUE

22 | ports:

23 | - 9090:9090

24 | depends_on:

25 | - redis

26 | links:

27 | - redis

28 | volumes:

29 | - "./img_sgm:/app/img_sgm"

30 | - "./rsc:/app/img_sgm_ml/rsc"

31 | - "./data/server:/data"

32 | - "./logs:/tmp"

33 | labeltool:

34 | container_name: labeltool

35 | build: img_sgm

36 | depends_on:

37 | - ml_modul

38 | ports:

39 | - 8080:8080

40 | volumes:

41 | - "./img_sgm:/img_sgm"

--------------------------------------------------------------------------------

/img_sgm/Dockerfile:

--------------------------------------------------------------------------------

1 | FROM python:3.7

2 | RUN pip install label-studio==0.8.0 --no-cache-dir --default-timeout=100

3 |

4 | CMD ["label-studio", "start", "img_sgm"]

5 | #, "--host", "labeltool", "--protocol", "http://", "--port", "8080"]

--------------------------------------------------------------------------------

/img_sgm/completions/__DO_NOT_DELETE__:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/tristanratz/Interactive-Image-Segmentation/64c1bad6d7c3c32420c4b00a691210d7a8bb4b06/img_sgm/completions/__DO_NOT_DELETE__

--------------------------------------------------------------------------------

/img_sgm/config.json:

--------------------------------------------------------------------------------

1 | {

2 | "title": "Label Studio",

3 | "protocol": "http://",

4 | "host": "0.0.0.0",

5 | "port": 8080,

6 | "debug": false,

7 | "label_config": "/img_sgm/config.xml",

8 | "input_path": "/img_sgm/tasks.json",

9 | "output_dir": "/img_sgm/completions",

10 | "instruction": "Type some hypertext for label experts!",

11 | "allow_delete_completions": true,

12 | "templates_dir": "examples",

13 | "editor": {

14 | "debug": false

15 | },

16 | "sampling": "sequential",

17 | "enable_predictions_button": true,

18 | "ml_backends": [

19 | {

20 | "url": "http://ml_modul:9090",

21 | "name": "Mask RCNN"

22 | }

23 | ],

24 | "task_page_auto_update_timer": 10000,

25 | "source": {

26 | "name": "Tasks",

27 | "type": "tasks-json",

28 | "path": "/Users/tristanratz/Development/label-studio/image_polygons_project/tasks.json"

29 | },

30 | "target": {

31 | "name": "Completions",

32 | "type": "completions-dir",

33 | "path": "/Users/tristanratz/Development/label-studio/image_polygons_project/completions"

34 | },

35 | "config_path": "/img_sgm/config.json"

36 | }

--------------------------------------------------------------------------------

/img_sgm/config_expample.xml:

--------------------------------------------------------------------------------

1 |

2 |

3 |

4 |

5 |

6 |

7 |

8 |

--------------------------------------------------------------------------------

/img_sgm/tasks.json:

--------------------------------------------------------------------------------

1 | {}

--------------------------------------------------------------------------------

/img_sgm_ml/Dockerfile:

--------------------------------------------------------------------------------

1 | FROM python:3.7

2 | RUN pip install uwsgi supervisor

3 |

4 | ENV HOME=/app

5 | COPY requirements.txt ${HOME}/

6 | RUN pip install --upgrade pip

7 | RUN pip install -r ${HOME}/requirements.txt --no-cache-dir --default-timeout=100

8 |

9 | COPY uwsgi.ini /etc/uwsgi/

10 | COPY supervisord.conf /etc/supervisor/conf.d/

11 |

12 | # mount the checkpoints dir

13 |

14 | WORKDIR ${HOME}

15 |

16 | # RUN pip install git+https://github.com/heartexlabs/label-studio.git@master

17 | RUN pip install label-studio

18 |

19 | COPY ./ ${HOME}/img_sgm_ml

20 | COPY ./_wsgi.py ${HOME}/_wsgi.py

21 | COPY ./supervisord.conf ${HOME}/supervisord.conf

22 | COPY ./uwsgi.ini ${HOME}/uwsgi.ini

23 |

24 | EXPOSE 9090

25 | CMD ["supervisord"]

26 |

--------------------------------------------------------------------------------

/img_sgm_ml/README.md:

--------------------------------------------------------------------------------

1 | ## Quickstart

2 |

3 | Build and start Machine Learning backend on `http://localhost:9090`

4 |

5 | ```bash

6 | docker-compose up

7 | ```

8 |

9 | Check if it works:

10 |

11 | ```bash

12 | $ curl http://localhost:9090/health

13 | {"status":"UP"}

14 | ```
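
The same check can be done from Python, for example in a startup script. This is a minimal sketch using only the standard library; the helper names `is_up` and `check_health` are hypothetical, and the URL and response shape are taken from the `curl` example above.

```python
import json
from urllib.request import urlopen


def is_up(body):
    """Return True if a /health response body reports status UP."""
    try:
        return json.loads(body).get("status") == "UP"
    except (ValueError, AttributeError):
        # Not JSON, or JSON that is not an object.
        return False


def check_health(base_url="http://localhost:9090", timeout=5.0):
    """Fetch <base_url>/health and report whether the backend is up."""
    try:
        with urlopen(base_url + "/health", timeout=timeout) as response:
            return is_up(response.read().decode("utf-8"))
    except OSError:
        # Covers refused connections, timeouts, and HTTP errors.
        return False
```

`check_health()` returns `False` rather than raising when the backend is unreachable, which makes it convenient to poll while the containers are still starting.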

15 |

16 | Then connect the running backend to Label Studio:

17 |

18 | ```bash

19 | label-studio start --init new_project --ml-backends http://localhost:9090 --template image_classification

20 | ```

21 |

22 |

23 | ## Writing your own model

24 | 1. Place your scripts for model training & inference inside the root directory. Follow the [API guidelines](#api-guidelines) described below. You can put everything in a single file, or create two separate ones, say `my_training_module.py` and `my_inference_module.py`.

25 |

26 | 2. Write down your python dependencies in `requirements.txt`

27 |

28 | 3. Open `_wsgi.py` and make your configurations under `init_model_server` arguments:

29 | ```python

30 | from my_training_module import training_script

31 | from my_inference_module import InferenceModel

32 |

33 | init_model_server(

34 | create_model_func=InferenceModel,

35 | train_script=training_script,

36 | ...

37 | ```

38 |

39 | 4. Make sure you have docker & docker-compose installed on your system, then run

40 | ```bash

41 | docker-compose up --build

42 | ```

43 |

44 | ## API guidelines

45 |

46 |

47 | #### Inference module

48 | To create a module for inference, declare the following class:

49 |

50 | ```python

51 | from htx.base_model import BaseModel

52 |

53 | # use BaseModel inheritance provided by pyheartex SDK

54 | class MyModel(BaseModel):

55 |

56 | # Describe input types (Label Studio object tags names)

57 | INPUT_TYPES = ('Image',)

58 |

59 | # Describe output types (Label Studio control tags names)

60 | OUTPUT_TYPES = ('Choices',)

61 |

62 | def load(self, resources, **kwargs):

63 | """Here you load the model into the memory. resources is a dict returned by training script"""

64 | self.model_path = resources["model_path"]

65 | self.labels = resources["labels"]

66 |

67 | def predict(self, tasks, **kwargs):

68 | """Here you create list of model results with Label Studio's prediction format, task by task"""

69 | predictions = []

70 | for task in tasks:

71 | # do inference...

72 | predictions.append(task_prediction)

73 | return predictions

74 | ```

75 |

76 | #### Training module

77 | Training can run in a separate environment. The only convention is that the training function takes a data iterator and a working directory as input arguments and returns JSON-serializable resources, which the `load()` function in the inference module consumes later.

78 |

79 | ```python

80 | def train(input_iterator, working_dir, **kwargs):

81 | """Here you gather input examples and output labels and train your model"""

82 | resources = {"model_path": "some/model/path", "labels": ["aaa", "bbb", "ccc"]}

83 | return resources

84 | ```
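
To illustrate why the resources must be JSON-serializable, the hand-off between training and inference can be sketched as a round trip. Everything here is a stand-in: `InferenceStub` only mimics the `load()` contract and is not the SDK base class, and the `(image, label)` shape of the iterator items is an assumption made for the example.

```python
import json


def train(input_iterator, working_dir, **kwargs):
    """Stand-in training function: collects labels instead of fitting a model."""
    labels = sorted({label for _, label in input_iterator})
    return {"model_path": working_dir + "/model.h5", "labels": labels}


class InferenceStub:
    """Mimics only the load() contract described above."""

    def load(self, resources, **kwargs):
        self.model_path = resources["model_path"]
        self.labels = resources["labels"]


# The resources are serialized between training and inference, so they
# must survive a JSON round trip unchanged.
examples = [("a.png", "cat"), ("b.png", "dog"), ("c.png", "cat")]
resources = train(examples, "/tmp/run")
restored = json.loads(json.dumps(resources))

model = InferenceStub()
model.load(restored)
```

Values such as open file handles, NumPy arrays, or model objects would fail at `json.dumps`, which is why the training function returns paths and plain lists instead.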

--------------------------------------------------------------------------------

/img_sgm_ml/__init__.py:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/tristanratz/Interactive-Image-Segmentation/64c1bad6d7c3c32420c4b00a691210d7a8bb4b06/img_sgm_ml/__init__.py

--------------------------------------------------------------------------------

/img_sgm_ml/_wsgi.py:

--------------------------------------------------------------------------------

1 | import argparse

2 | import logging.config

3 | import os

4 |

5 | from img_sgm_ml.api import ModelAPI

6 | from img_sgm_ml.model.config import LabelConfig

7 | from img_sgm_ml.model.utils import generate_config

8 |

9 | logging.config.dictConfig({

10 | "version": 1,

11 | "formatters": {

12 | "standard": {

13 | "format": "[%(asctime)s] [%(levelname)s] [%(name)s::%(funcName)s::%(lineno)d] %(message)s"

14 | }

15 | },

16 | "handlers": {

17 | "console": {

18 | "class": "logging.StreamHandler",

19 | "level": "DEBUG",

20 | "stream": "ext://sys.stdout",

21 | "formatter": "standard"

22 | }

23 | },

24 | "root": {

25 | "level": "ERROR",

26 | "handlers": [

27 | "console"

28 | ],

29 | "propagate": True

30 | }

31 | })

32 |

33 | # Generate config

34 | print("Generating config.xml...")

35 | config = LabelConfig()

36 | generate_config(config, overwrite=True)

37 |

38 | from label_studio.ml import init_app

39 |

40 | if __name__ == "__main__":

41 | parser = argparse.ArgumentParser(description='Label studio')

42 | parser.add_argument(

43 | '-p', '--port', dest='port', type=int, default=9090,

44 | help='Server port')

45 | parser.add_argument(

46 | '--host', dest='host', type=str, default='0.0.0.0',

47 | help='Server host')

48 | parser.add_argument(

49 | '--kwargs', dest='kwargs', metavar='KEY=VAL', nargs='+', type=lambda kv: kv.split('='),

50 | help='Additional LabelStudioMLBase model initialization kwargs')

51 | parser.add_argument(

52 | '-d', '--debug', dest='debug', action='store_true',

53 | help='Switch debug mode')

54 | parser.add_argument(

55 | '--log-level', dest='log_level', choices=['DEBUG', 'INFO', 'WARNING', 'ERROR'], default=None,

56 | help='Logging level')

57 | parser.add_argument(

58 | '--model-dir', dest='model_dir', default=os.path.dirname(__file__),

59 | help='Directory where models are stored (relative to the project directory)')

60 | parser.add_argument(

61 | '--check', dest='check', action='store_true',

62 | help='Validate model instance before launching server')

63 |

64 | args = parser.parse_args()

65 |

66 | # setup logging level

67 | if args.log_level:

68 | logging.root.setLevel(args.log_level)

69 |

70 |

71 | def isfloat(value):

72 | try:

73 | float(value)

74 | return True

75 | except ValueError:

76 | return False

77 |

78 |

79 | def parse_kwargs():

80 | param = dict()

81 | for k, v in args.kwargs:

82 | if v.isdigit():

83 | param[k] = int(v)

84 | elif v == 'True' or v == 'true':

85 | param[k] = True

86 | elif v == 'False' or v == 'false':

87 | param[k] = False

88 | elif isfloat(v):

89 | param[k] = float(v)

90 | else:

91 | param[k] = v

92 | return param

93 |

94 |

95 | kwargs = parse_kwargs() if args.kwargs else dict()

96 |

97 | if args.check:

98 | print('Check "' + ModelAPI.__name__ + '" instance creation..')

99 | model = ModelAPI(**kwargs)

100 |

101 | app = init_app(

102 | model_class=ModelAPI,

103 | model_dir=os.environ.get('MODEL_DIR', args.model_dir),

104 | redis_queue=os.environ.get('RQ_QUEUE_NAME', 'default'),

105 | redis_host=os.environ.get('REDIS_HOST', 'localhost'),

106 | redis_port=os.environ.get('REDIS_PORT', 6379),

107 | **kwargs

108 | )

109 |

110 | app.run(host=args.host, port=args.port, debug=args.debug)

111 |

112 | else:

113 | # for uWSGI use

114 | app = init_app(

115 | model_class=ModelAPI,

116 | model_dir=os.environ.get('MODEL_DIR', os.path.dirname(__file__)),

117 | redis_queue=os.environ.get('RQ_QUEUE_NAME', 'default'),

118 | redis_host=os.environ.get('REDIS_HOST', 'localhost'),

119 | redis_port=os.environ.get('REDIS_PORT', 6379)

120 | )

121 |

--------------------------------------------------------------------------------

/img_sgm_ml/api.py:

--------------------------------------------------------------------------------

1 | import os

2 | import sys

3 | from typing import Dict

4 |

5 | from img_sgm_ml.rle.decode import decode

6 | from img_sgm_ml.rle.encode import encode

7 |

8 | MRCNN = os.path.abspath("./img_sgm_ml/Mask_RCNN/")

9 | MRCNN2 = os.path.abspath("./Mask_RCNN/")

10 | sys.path.append(MRCNN)

11 | sys.path.append(MRCNN2)

12 |

13 | from img_sgm_ml.model.utils import generate_color, devide_completions, transform_url

14 | from img_sgm_ml.model.dataset import LabelDataset

15 | from label_studio.ml import LabelStudioMLBase

16 | from img_sgm_ml.model.config import LabelConfig

17 | from label_studio.ml.utils import get_single_tag_keys

18 | from img_sgm_ml.model.model import MaskRCNNModel

19 | import matplotlib.pyplot as plt

20 | import skimage

21 | import numpy as np

22 | import logging

23 | import json

24 |

25 | logging.basicConfig(filename="./img_sgm_ml/rsc/log.log",

26 | filemode='a',

27 | format='%(asctime)s,%(msecs)d %(name)s %(levelname)s %(message)s',

28 | datefmt='%H:%M:%S',

29 | level=logging.DEBUG)

30 |

31 |

32 | class ModelAPI(LabelStudioMLBase):

33 | model = None

34 |

35 | def __init__(self, **kwargs):

36 | super(ModelAPI, self).__init__(**kwargs)

37 |

38 | if os.getenv("DOCKER"):

39 | self.from_name, self.to_name, self.value, self.classes = get_single_tag_keys(

40 | self.parsed_label_config, 'BrushLabels', 'Image')

41 | else:

42 | self.from_name = ""

43 | self.to_name = ""

44 |

45 | # weights_path = model.find_last()

46 | self.config = LabelConfig()

47 |

48 | # Load model

49 | self.model = MaskRCNNModel(self.config)

50 |

51 | def predict(self, tasks, **kwargs):

52 | """

53 | This method will call the prediction and will format the results into the format expected by label-studio

54 |

55 | Args:

56 | tasks: Array of tasks which will be predicted

57 | **kwargs:

58 |

59 | Returns: label-studio compatible results

60 |

61 | """

62 | # Array with loaded images

63 | images = []

64 |

65 | for task in tasks:

66 | # Replace localhost with docker container name, when running with docker

67 | task['data']['image'] = transform_url(task['data']['image'])

68 | # Run model detection

69 | logging.info(str("Running on {}".format(task['data']['image'])))

70 | # Read image

71 | image = skimage.io.imread(task['data']['image'])

72 | images.append(image)

73 |

74 | # Detect objects

75 | predictions = self.model.batchInterfere(images)

76 | logging.info("Inference finished. Start conversion.")

77 |

78 | # Build the detections into an array

79 | results = []

80 | for prediction in predictions:

81 | for i in range(prediction['masks'].shape[2]):

82 | shape = np.array(prediction['masks'][:, :, i]).shape

83 |

84 | # Expand mask with 3 other dimensions to get RGBa Image

85 | mask3d = np.zeros((shape[0], shape[1], 4), dtype=np.uint8)

86 | expanded_mask = np.array(prediction['masks'][:, :, i:i + 1])

87 | mask3d[:, :, :] = expanded_mask

88 |

89 | # Load color

90 | (r, g, b, a) = generate_color(self.config.CLASSES[prediction["class_ids"][i]])

91 |

92 | mask_image = np.empty((shape[0], shape[1], 4), dtype=np.uint8)

93 | mask_image[:, :] = [r, g, b, a]

94 |

95 | # Multiply mask_image by the mask to get an RGBA image in the color of the class name

96 | mask_image = np.array(mask_image * mask3d, dtype=np.uint8)

97 |

98 | # Write images to see if everything is alright

99 | if not os.getenv("DOCKER"):

100 | plt.imsave(f"./out/mask_{i}.png", prediction['masks'][:, :, i])

101 | plt.imsave(f"./out/mask_{i}_expanded.png", mask3d[:, :, 3])

102 | plt.imsave(f"./out/mask_{i}_color.png", mask_image)

103 |

104 | # Flatten and encode

105 | flat = mask_image.flatten()

106 | rle = encode(flat, len(flat))

107 |

108 | # Write images to see if everything is alright

109 | if not os.getenv("DOCKER"):

110 | logging.info("Encode and decode did work:" + str(np.array_equal(flat, decode(rle))))

111 | plt.imsave(f"./out/mask_{i}_flattened.png", np.reshape(flat, [shape[0], shape[1], 4]))

112 |

113 | # Squeeze into label studio json format

114 | results.append({

115 | 'from_name': self.from_name,

116 | 'to_name': self.to_name,

117 | 'type': 'brushlabels',

118 | 'value': {

119 | 'brushlabels': [self.config.CLASSES[prediction["class_ids"][i]]],

120 | "format": "rle",

121 | "rle": rle.tolist(),

122 | },

123 | "score": float(prediction["scores"][i]),

124 | })

125 |

126 | # Other possible way to consider: Convert to segmentation/polygon format

127 | # https://github.com/cocodataset/cocoapi/issues/131

128 | return [{"result": results}]

129 |

130 | def fit(self, completions, workdir=None, **kwargs) -> Dict:

131 | """

132 | Formats the completions and images into the expected format and trains the model

133 |

134 | Args:

135 | completions: Array of labeled images

136 | workdir:

137 | **kwargs:

138 |

139 | """

140 | logging.info("-----Divide data into training and validation sets-----")

141 | train_completions, val_completions = devide_completions(completions)

142 |

143 | logging.info("-----Prepare datasets-----")

144 | # Create training dataset

145 | train_set = LabelDataset(self.config)

146 | train_set.load_completions(train_completions)

147 |

148 | # Create validation dataset

149 | val_set = LabelDataset(self.config)

150 | val_set.load_completions(val_completions)

151 |

152 | del completions

153 |

154 | train_set.prepare()

155 | val_set.prepare()

156 |

157 | logging.info("-----Train model-----")

158 | model_path = self.model.train(train_set, val_set)

159 | logging.info("-----Training finished-----")

160 |

161 | classes = []

162 | for idx in self.config.CLASSES:

163 | if self.config.CLASSES[idx] != '__background__':

164 | classes.append(self.config.CLASSES[idx])

165 |

166 | return {'model_path': model_path, 'classes': classes}

167 |

168 |

169 | if __name__ == "__main__":

170 | m = ModelAPI()

171 | predictions = m.predict(tasks=[{

172 | "data": {

173 | # "image": "http://localhost:8080/data/upload/2ccf6fecb6406e9b3badb399f85070e3-DSC_0020.JPG"

174 | # "image": "http://localhost:8080/data/upload/0462f5361cfcd2d02f94d44760b74f0c-DSC_0296.JPG"

175 | "image": "http://localhost:8080/data/upload/a7146602a93f844dbeb3cc21f9dc1fd8-DSC_0277.JPG"

176 | }

177 | }])

178 |

179 | # print(predictions)

180 | width = 700

181 | height = 468

182 |

183 | for p in predictions:

184 | plt.imsave(f"./out/{p['result'][0]['value']['brushlabels'][0]}.png",

185 | np.reshape(decode(p["result"][0]["value"]["rle"]), [height, width, 4])[:, :, 3] / 255)

186 |

--------------------------------------------------------------------------------

/img_sgm_ml/model/__init__.py:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/tristanratz/Interactive-Image-Segmentation/64c1bad6d7c3c32420c4b00a691210d7a8bb4b06/img_sgm_ml/model/__init__.py

--------------------------------------------------------------------------------

/img_sgm_ml/model/config.py:

--------------------------------------------------------------------------------

1 | from img_sgm_ml.Mask_RCNN.mrcnn.config import Config

2 |

3 |

4 | class LabelConfig(Config):

5 | """Configuration for training on the dataset.

6 | Derives from the base Config class and overrides some values.

7 | """

8 | # Give the configuration a recognizable name

9 | NAME = "label"

10 |

11 | # We use a GPU with 12GB memory, which can fit two images.

12 | # Adjust down if you use a smaller GPU.

13 | GPU_COUNT = 1

14 | IMAGES_PER_GPU = 1

15 |

16 | # Number of training steps per epoch

17 | STEPS_PER_EPOCH = 100

18 |

19 | # Learning rate

20 | LEARNING_RATE = 0.006

21 |

22 | # Skip detections with < 90% confidence

23 | DETECTION_MIN_CONFIDENCE = 0.9

24 |

25 | # Number of classes (including background)

26 | NUM_CLASSES = 2 # Background + classes

27 |

28 | # Classes (including background)

29 | CLASSES = {0: u'__background__',

30 | 1: u'balloon',

31 | }

32 |

33 | # Coco classes (including background)

34 | # CLASSES = {0: u'__background__',

35 | # 1: u'person',

36 | # 2: u'bicycle',

37 | # 3: u'car',

38 | # 4: u'motorcycle',

39 | # 5: u'airplane',

40 | # 6: u'bus',

41 | # 7: u'train',

42 | # 8: u'truck',

43 | # 9: u'boat',

44 | # 10: u'traffic light',

45 | # 11: u'fire hydrant',

46 | # 12: u'stop sign',

47 | # 13: u'parking meter',

48 | # 14: u'bench',

49 | # 15: u'bird',

50 | # 16: u'cat',

51 | # 17: u'dog',

52 | # 18: u'horse',

53 | # 19: u'sheep',

54 | # 20: u'cow',

55 | # 21: u'elephant',

56 | # 22: u'bear',

57 | # 23: u'zebra',

58 | # 24: u'giraffe',

59 | # 25: u'backpack',

60 | # 26: u'umbrella',

61 | # 27: u'handbag',

62 | # 28: u'tie',

63 | # 29: u'suitcase',

64 | # 30: u'frisbee',

65 | # 31: u'skis',

66 | # 32: u'snowboard',

67 | # 33: u'sports ball',

68 | # 34: u'kite',

69 | # 35: u'baseball bat',

70 | # 36: u'baseball glove',

71 | # 37: u'skateboard',

72 | # 38: u'surfboard',

73 | # 39: u'tennis racket',

74 | # 40: u'bottle',

75 | # 41: u'wine glass',

76 | # 42: u'cup',

77 | # 43: u'fork',

78 | # 44: u'knife',

79 | # 45: u'spoon',

80 | # 46: u'bowl',

81 | # 47: u'banana',

82 | # 48: u'apple',

83 | # 49: u'sandwich',

84 | # 50: u'orange',

85 | # 51: u'broccoli',

86 | # 52: u'carrot',

87 | # 53: u'hot dog',

88 | # 54: u'pizza',

89 | # 55: u'donut',

90 | # 56: u'cake',

91 | # 57: u'chair',

92 | # 58: u'couch',

93 | # 59: u'potted plant',

94 | # 60: u'bed',

95 | # 61: u'dining table',

96 | # 62: u'toilet',

97 | # 63: u'tv',

98 | # 64: u'laptop',

99 | # 65: u'mouse',

100 | # 66: u'remote',

101 | # 67: u'keyboard',

102 | # 68: u'cell phone',

103 | # 69: u'microwave',

104 | # 70: u'oven',

105 | # 71: u'toaster',

106 | # 72: u'sink',

107 | # 73: u'refrigerator',

108 | # 74: u'book',

109 | # 75: u'clock',

110 | # 76: u'vase',

111 | # 77: u'scissors',

112 | # 78: u'teddy bear',

113 | # 79: u'hair drier',

114 | # 80: u'toothbrush'}

115 |

--------------------------------------------------------------------------------

/img_sgm_ml/model/dataset.py:

--------------------------------------------------------------------------------

1 | from label_studio.ml.utils import is_skipped

2 |

3 | from img_sgm_ml.Mask_RCNN.mrcnn.utils import Dataset

4 | from img_sgm_ml.model.utils import completion_to_mrnn, transform_url

5 | import logging

6 |

7 |

8 | class LabelDataset(Dataset):

9 | """ This class turns the label studio completions into readable datasets"""

10 |

11 | def __init__(self, config):

12 | super(LabelDataset, self).__init__()

13 |

14 | self.config = config

15 |

16 | # Load classes

17 | for idx in config.CLASSES:

18 | if config.CLASSES[idx] != '__background__':

19 | self.add_class("img", idx, config.CLASSES[idx])

20 |

21 | def load_completions(self, completions):

22 | """

23 | Prepare the completions

24 | Args:

25 | completions: Already labeled label studio images

26 | """

27 | print('Collecting completions...')

28 | for completion in completions:

29 | if is_skipped(completion):

30 | continue

31 |

32 | image_url = transform_url(completion['data']["image"])

33 |

34 | logging.debug("Convert completions to mrnn format for image " + image_url)

35 | # Convert completions to mrnn format

36 | bit_dict = completion_to_mrnn(completion['completions'][0], self.config)

37 |

38 | logging.debug("Add converted completion to Dataset")

39 | # Add converted completion to Dataset

40 | self.add_image(

41 | "img",

42 | image_id=completion['completions'][0]["id"], # use the completion id as a unique image id

43 | path=image_url,

44 | width=bit_dict["width"], height=bit_dict["height"],

45 | class_ids=bit_dict["class_ids"],

46 | bitmask=bit_dict["bitmaps"])

47 |

48 | def image_reference(self, image_id):

49 | """Return the path of the image."""

50 | info = self.image_info[image_id]

51 | if info["source"] == "img":

52 | return info["path"]

53 | else:

54 | return super(LabelDataset, self).image_reference(image_id)

55 |

56 | def load_mask(self, image_id):

57 | """ Return the bitmask and the accompanying class ids in the format Mask R-CNN expects """

58 | info = self.image_info[image_id]

59 | return info["bitmask"], info["class_ids"]

60 |

--------------------------------------------------------------------------------

/img_sgm_ml/model/model.py:

--------------------------------------------------------------------------------

1 | import os

2 | import time

3 | import logging

4 |

5 | import mrcnn.model as modellib

6 |

7 | from img_sgm_ml.model.utils import download_weights

8 |

9 |

10 | class MaskRCNNModel():

11 | def __init__(self, config, path=None):

12 | self.config = config

13 |

14 | if path is None:

15 | self.model_dir_path = os.path.abspath("./img_sgm_ml/rsc/checkpoints/")

16 | else:

17 | self.model_dir_path = path

18 |

19 | # Create Model

20 | self.model = modellib.MaskRCNN("inference", config, self.model_dir_path)

21 | self.train_model = modellib.MaskRCNN("training", config, self.model_dir_path)

22 | self.model_path = ""

23 |

24 | # Download weights

25 | download_weights()

26 |

27 | def train(self, train_set, test_set):

28 | """

29 | Loads the model, and then trains it on the data

30 |

31 | Returns: The path to the generated model

32 |

33 | """

34 | # Training config taken from:

35 | # https://towardsdatascience.com/object-detection-using-mask-r-cnn-on-a-custom-dataset-4f79ab692f6d

36 |

37 | # Load latest models

38 | self.reset_model(train=True)

39 | model = self.train_model

40 |

41 | # Do training

42 | model.train(train_set, test_set, learning_rate=2 * self.config.LEARNING_RATE, epochs=5, layers='heads')

43 |

44 | # history = model.keras_model.history.history

45 |

46 | model_path = os.path.join(self.model_dir_path, 'mask_rcnn_' + str(time.time()) + '.h5')

47 | model.keras_model.save_weights(model_path)

48 | return model_path

49 |

50 | def interference(self, img):

51 | """

52 | Loads the latest model and runs inference if loading was successful

53 |

54 | Args:

55 | img: An image loaded with scikit image

56 |

57 | Returns: The predictions for that image

58 |

59 | """

60 | if self.reset_model():

61 | return self.model.detect([img], verbose=1)

62 | else:

63 | return []

64 |

65 | def batchInterfere(self, imgs):

66 | """

67 | Loads the latest model and runs inference on a batch if loading was successful

68 |

69 | Args:

70 | imgs: Array of images loaded with scikit image

71 |

72 | Returns: The predictions for these images

73 |

74 | """

75 | if self.reset_model():

76 | return self.model.detect(imgs, verbose=1)

77 | else:

78 | return []

79 |

80 | def reset_model(self, train=False):

81 | """

82 | Loads the latest trained model if one is available (and the COCO environment

83 | variable is not set); otherwise loads the pretrained COCO model

84 |

85 | Args:

86 | train: training mode?

87 |

88 | Returns: False if no model loaded or true if model loaded successfully

89 |

90 | """

91 | # Path to the COCO Model

92 | coco_path = os.path.join(os.path.abspath("./img_sgm_ml/rsc/"), "mask_rcnn_coco.h5")

93 | model_path = ""

94 |

95 | # Files in the model directory

96 | model_files = [file for file in os.listdir(self.model_dir_path)

97 | if file.endswith(".h5")]

98 |

99 | if 0 < len(model_files) and not os.getenv("COCO"):

100 | # Search model

101 | model_path = self.model.find_last()

102 | if train:

103 | # Load training weight

104 | self.train_model.load_weights(model_path, by_name=True)

105 | return True

106 | else:

107 | model_path = coco_path

108 | if train:

109 | self.train_model.load_weights(coco_path,

110 | by_name=True,

111 | exclude=["mrcnn_class_logits",

112 | "mrcnn_bbox_fc",

113 | "mrcnn_bbox",

114 | "mrcnn_mask"])

115 | return True

116 |

117 | # Load inference model

118 | if self.model_path != model_path:

119 | # Load weights

120 | self.model.load_weights(model_path, by_name=True)

121 | self.model_path = model_path

122 | elif self.model_path == "":

123 | return False

124 | return True

125 |

--------------------------------------------------------------------------------

/img_sgm_ml/model/utils.py:

--------------------------------------------------------------------------------

1 | import hashlib

2 | import json

3 | import os

4 | import random

5 | import logging

6 | import xml.etree.ElementTree as ET

7 |

8 | import numpy as np

9 | from mrcnn import utils

10 |

11 | from img_sgm_ml.rle.decode import decode

12 |

13 |

14 | def transform_url(url):

15 | url = url.replace(" ", "%20")

16 | if os.getenv("DOCKER"):

17 | url = url.replace("localhost", "labeltool", 1)

18 | return url

19 |

20 |

21 | def download_weights():

22 | """Downloads coco model if not already downloaded"""

23 | if os.getenv("DOCKER"):

24 | weights_path = os.path.join(

25 | os.path.dirname(

26 | os.path.dirname(

27 | os.path.realpath(__file__)

28 | )

29 | ),

30 | "rsc/mask_rcnn_coco.h5")

31 | else:

32 | weights_path = os.path.join(

33 | os.path.dirname(

34 | os.path.dirname(

35 | os.path.dirname(

36 | os.path.realpath(__file__)

37 | )

38 | )

39 | ),

40 | "rsc/mask_rcnn_coco.h5")

41 | if not os.path.exists(weights_path):

42 | utils.download_trained_weights(weights_path)

43 |

44 |

45 | def generate_color(string: str) -> (int, int, int, int):

46 | """Create an RGBA color (with a fixed alpha of 128) for a specific name"""

47 | # Generate hash

48 | result = hashlib.md5(bytes(string.encode('utf-8')))

49 | name_hash = int(result.hexdigest(), 16)

50 | r = (name_hash & 0xFF0000) >> 16

51 | g = (name_hash & 0x00FF00) >> 8

52 | b = name_hash & 0x0000FF

53 | return r, g, b, 128

54 |

55 |

56 | def generate_config(config, overwrite: bool = False):

57 | """Generate config.xml for label-studio."""

58 |

59 | # config file

60 | file = os.path.join(

61 | os.path.dirname(

62 | os.path.dirname(

63 | os.path.dirname(

64 | os.path.realpath(__file__)

65 | )

66 | )

67 | ), "img_sgm/config.xml")

68 |

69 | if not overwrite and os.path.isfile(file):

70 | print("Using existing config.")

71 | return

72 |

73 | print("Generating XML config...")

74 | view = ET.Element('View')

75 | brushlabels = ET.SubElement(view, 'BrushLabels')

76 | brushlabels.set("name", "tag")

77 | brushlabels.set("toName", "img")

78 | img = ET.SubElement(view, 'Image')

79 | img.set("name", "img")

80 | img.set("value", "$image")

81 | img.set("zoom", "true")

82 | img.set("zoomControl", "true")

83 |

84 | for key in config.CLASSES:

85 | lclass = config.CLASSES[key]

86 | if lclass != u"__background__":

87 | label = ET.SubElement(brushlabels, 'Label')

88 | label.set("value", lclass)

89 | (r, g, b, a) = generate_color(lclass)

90 | label.set("background", f"rgba({r},{g},{b},{round((a / 255), 2)})")

91 |

92 | tree = ET.ElementTree(view)

93 | tree.write(file)

94 | print("Config generated.")

95 |

96 |

97 | def decode_completions_to_bitmap(completion):

98 | """

99 | From Label-Studio completion to dict with bitmap

100 | Args:

101 | completion: a LS completion of an image

102 |

103 | Returns:

104 | { results_count: count of labels

105 | labels: [label_1, label_2...]

106 | bitmaps: [ [numpy uint8 image (width x height)] ]

107 | }

108 |

109 | """

110 | labels = []

111 | bitmaps = []

112 | counter = 0

113 | logging.debug("Decoding completion")

114 | for result in completion['result']:

115 | if result['type'] != 'brushlabels':

116 | continue

117 |

118 | label = result['value']['brushlabels'][0]

119 | labels.append(label)

120 |

121 | # result count

122 | counter += 1

123 |

124 | rle = result['value']['rle']

125 | width = result['original_width']

126 | height = result['original_height']

127 |

128 | logging.debug("Decoding RLE")

129 | dec = decode(rle)

130 | image = np.reshape(dec, [height, width, 4])[:, :, 3]

131 | f = np.vectorize(lambda l: 1 if (float(l)/255) > 0.4 else 0)

132 | bitmap = f(image)

133 | bitmaps.append(bitmap)

134 | logging.debug("Decoding finished")

135 | return {

136 | "results_count": counter,

137 | "labels": labels,

138 | "bitmaps": bitmaps

139 | }

140 |

141 |

142 | def convert_bitmaps_to_mrnn(bitmap_object, config):

143 | """

144 | Converts a Bitmap object (function above) into a mrnn compatible format

145 |

146 | Args:

147 | bitmap_object: Object returned by decode_completions_to_bitmap

148 | config: Config with classes

149 |

150 | Returns:

151 | { class_ids: [label_1_index, label_2_index...]

152 | bitmaps: numpy bool array (height x width x instance_count)

153 | width, height: image dimensions }

154 |

155 | """

156 | bitmaps = bitmap_object["bitmaps"]

157 | counter = bitmap_object["results_count"]

158 | labels = bitmap_object["labels"]

159 | height = bitmaps[0].shape[0]

160 | width = bitmaps[0].shape[1]

161 |

162 | bms = np.zeros((height, width, counter), np.int32)

163 | for i, bm in enumerate(bitmaps):

164 | bms[:, :, i] = bm

165 |

166 | invlabels = dict(zip(config.CLASSES.values(), config.CLASSES.keys()))

167 | encoded_labels = [invlabels[l] for l in labels]

168 | print("Encoded labels:", encoded_labels)

169 |

170 | return {

171 | "class_ids": np.array(encoded_labels, np.int32),

172 | "bitmaps": bms.astype(bool),

173 | "width": width,

174 | "height": height

175 | }

176 |

177 |

178 | def completion_to_mrnn(completion, config):

179 | return convert_bitmaps_to_mrnn(

180 | decode_completions_to_bitmap(completion),

181 | config

182 | )

183 |

184 |

185 | def devide_completions(completions, train_share=0.85):

186 | """

187 | Divide completions into a training and a validation set.

188 | Load allocation from previous trainings from state file

189 |

190 | Args:

191 | completions: The complete set of completions

192 |

193 | Returns: A set of completions of the first

194 |

195 | """

196 | completions = [c for c in completions]

197 |

198 | f = os.path.join(

199 | os.path.dirname(

200 | os.path.dirname(

201 | os.path.realpath(__file__))),

202 | "rsc/data_allocation.json"

203 | )

204 |

205 | allocs = []

206 | if os.path.isfile(f):

207 | allocs = json.load(open(f, "r"))["completions"]

208 |

209 | alloc_train_ids = [x["id"] for x in allocs if x["subset"] == "train"]

210 | alloc_val_ids = [x["id"] for x in allocs if x["subset"] == "val"]

211 |

212 | # Divide into already allocated and not yet allocated elements

213 | train_set = list(filter(lambda x: x['completions'][0]["id"] in alloc_train_ids, completions))

214 | val_set = list(filter(lambda x: x['completions'][0]["id"] in alloc_val_ids, completions))

215 | unallocated = list(filter(lambda x: not (x['completions'][0]["id"] in alloc_train_ids

216 | or x['completions'][0]["id"] in alloc_val_ids), completions))

217 |

218 | # allocate yet unclassified elements

219 | random.shuffle(unallocated)

220 | add_items = int(round(len(completions) * train_share, 0)) - len(train_set)

221 |

222 | if add_items > 0:

223 | if add_items < len(completions) - 1:

224 | train_set = train_set + unallocated[:add_items]

225 | val_set = val_set + unallocated[add_items:]

226 | else:

227 | train_set = train_set + unallocated

228 | else:

229 | val_set = val_set + unallocated

230 |

231 | # Save the allocation to file

232 | allocs_train = [{"id": x['completions'][0]["id"], "subset": "train"} for x in train_set]

233 | allocs_val = [{"id": x['completions'][0]["id"], "subset": "val"} for x in val_set]

234 |

235 | allocs = allocs_train + allocs_val

236 |

237 | json.dump({"completions": allocs}, open(f, "w"))

238 | print("Allocation was written. Completions:", len(allocs))

239 |

240 | return train_set, val_set

241 |

--------------------------------------------------------------------------------

/img_sgm_ml/requirements.txt:

--------------------------------------------------------------------------------

1 | matplotlib

2 | label-studio==0.9.0

3 | opencv-python

4 |

5 | # https://github.com/neuropoly/spinalcordtoolbox/issues/2987

6 | h5py~=2.10.0

7 | # numba

8 |

9 | # MaskRCNN

10 | numpy

11 | scipy

12 | Pillow

13 | cython

14 | matplotlib

15 | scikit-image

16 | tensorflow==1.15.0

17 | keras==2.0.8

18 | opencv-python

19 | imgaug

20 | IPython[all]

--------------------------------------------------------------------------------

/img_sgm_ml/rle/README.md:

--------------------------------------------------------------------------------

1 | # Binary RLE (Run Length Encoding) Implementation

2 |

3 | This implementation of RLE is compatible with thi-ng's rle-pack JavaScript implementation.

4 | [Find it here](https://github.com/thi-ng/umbrella/tree/develop/packages/rle-pack)

5 |

6 | Just import the `encode` and `decode` functions to get a much smaller, losslessly compressed list.

7 |

8 | Learn more about the format on the site linked above.

9 | It was tested with 8-bit words, since the images in this project are stored as 8-bit RGBA maps.
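
As a rough illustration of the header that `encode.py` writes and `decode.py` reads: the stream starts with 32 bits for the number of words, followed by 5 bits for `word_size - 1` and four 4-bit fields for each `rle_sizes` entry minus 1, all most-significant bit first. The sketch below only parses that header. `read_header` is a hypothetical helper written for this example and is not part of this package, and the sample bytes were assembled by hand for `num=8`, `word_size=8` and the default `rle_sizes`.

```python
def read_header(data):
    """Parse the header of an rle-pack style byte sequence.

    Hypothetical helper for illustration only; the package itself
    exposes just encode() and decode().
    """
    # Turn the bytes into one long bit string, most-significant bit first
    bits = "".join(format(byte, "08b") for byte in data)
    pos = 0

    def take(size):
        # Read `size` bits as an unsigned integer and advance the cursor
        nonlocal pos
        value = int(bits[pos:pos + size], 2)
        pos += size
        return value

    num = take(32)                               # number of encoded words
    word_size = take(5) + 1                      # stored as word_size - 1
    rle_sizes = [take(4) + 1 for _ in range(4)]  # each stored as size - 1
    return num, word_size, rle_sizes


# Hand-built header: num=8, word_size=8, rle_sizes=[3, 4, 8, 16]
header = bytes([0x00, 0x00, 0x00, 0x08, 0x39, 0x1B, 0xF8])
print(read_header(header))  # (8, 8, [3, 4, 8, 16])
```

The run and chunk data follow directly after these 53 header bits, so a real decoder keeps reading from the same bit position instead of stopping here.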

--------------------------------------------------------------------------------

/img_sgm_ml/rle/__init__.py:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/tristanratz/Interactive-Image-Segmentation/64c1bad6d7c3c32420c4b00a691210d7a8bb4b06/img_sgm_ml/rle/__init__.py

--------------------------------------------------------------------------------

/img_sgm_ml/rle/decode.py:

--------------------------------------------------------------------------------

1 | import numpy as np

2 |

3 | from img_sgm_ml.rle.inputstream import InputStream

4 | """This file was taken from label_studio_converter to turn RLE into Numpy Arrays"""

5 |

6 | def access_bit(data, num):

7 | """ from bytes array to bits by num position

8 | """

9 | base = int(num // 8)

10 | shift = 7 - int(num % 8)

11 | return (data[base] & (1 << shift)) >> shift

12 |

13 |

14 | def bytes2bit(data):

15 | """ get bit string from bytes data

16 | """

17 | return ''.join([str(access_bit(data, i)) for i in range(len(data) * 8)])

18 |

19 |

20 | def decode(rle, verbose=0):

21 | """ from LS RLE to a flat numpy uint8 array; reshape it to [height, width, channel] to get the image

22 | """

23 | input = InputStream(bytes2bit(rle))

24 | num = input.read(32)

25 | word_size = input.read(5) + 1

26 | rle_sizes = [input.read(4) + 1 for _ in range(4)]

27 |

28 | if verbose == 1:

29 | print('RLE params:', num, 'values', word_size, 'word_size', rle_sizes, 'rle_sizes')

30 | i = 0

31 | out = np.zeros(num, dtype=np.uint8)

32 | while i < num:

33 | x = input.read(1)

34 | j = i + 1 + input.read(rle_sizes[input.read(2)])

35 | if x:

36 | val = input.read(word_size)

37 | out[i:j] = val

38 | i = j

39 | else:

40 | while i < j:

41 | val = input.read(word_size)

42 | out[i] = val

43 | i += 1

44 | return out

45 |

--------------------------------------------------------------------------------

/img_sgm_ml/rle/encode.py:

--------------------------------------------------------------------------------

1 | from img_sgm_ml.rle.outputstream import OutputStream

2 |

3 |

4 | def encode(

5 | src: [int],

6 | num: int,

7 | word_size=8,

8 | rle_sizes=None

9 | ) -> []:

10 | """

11 | Python implementation of thi-ng's rle-pack

12 | https://github.com/thi-ng/umbrella/blob/develop/packages/rle-pack/

13 |

14 | Args:

15 | src: The array to encode

16 | num: number of input words

17 | word_size: in bits, range 1 - 32

18 | rle_sizes: Four numbers [int,int,int,int]

19 |

20 | Returns: Encoded array

21 |

22 | """

23 |

24 | if rle_sizes is None:

25 | rle_sizes = [3, 4, 8, 16]

26 |

27 | if word_size < 1 or word_size > 32:

28 | print("Error: word size (1-32 bits only)")

29 | return

30 |

31 | out = OutputStream(int(round((num * word_size) / 8)) + 4 + 2 + 1)

32 | out.write(num, 32)

33 | out.write(word_size - 1, 5)

34 | for x in rle_sizes:

35 | if x < 1 or x > 16:

36 | print("Error: RLE repeat size (1-16 bits only)")

37 | return

38 | out.write(x - 1, 4)

39 |

40 | rle0: int = 1 << rle_sizes[0]

41 | rle1: int = 1 << rle_sizes[1]

42 | rle2: int = 1 << rle_sizes[2]

43 | rle3: int = 1 << rle_sizes[3]

44 |

45 | n1 = num - 1

46 | val = None

47 | tail = True

48 | i = 0

49 | chunk: [int] = []

50 | n = 0

51 |

52 | def write_rle(_n: int):

53 | t = 0 if _n < rle0 else 1 if _n < rle1 else 2 if _n < rle2 else 3

54 | out.write_bit(1)

55 | out.write(t, 2)

56 | out.write(_n, rle_sizes[t])

57 | out.write(val, word_size)

58 |

59 | def write_chunk(_chunk: [int]):

60 | m = len(_chunk) - 1

61 | t = 0 if m < rle0 else 1 if m < rle1 else 2 if m < rle2 else 3

62 | out.write_bit(0)

63 | out.write(t, 2)

64 | out.write(m, rle_sizes[t])

65 | out.write_words(_chunk, word_size)

66 |

67 | for x in src:

68 | if val is None:

69 | val = x

70 | elif x != val:

71 | if n > 0:

72 | write_rle(n)

73 | n = 0

74 | else:

75 | chunk.append(val)

76 | if len(chunk) == rle3:

77 | write_chunk(chunk)

78 | chunk = []

79 | val = x

80 | else:

81 | if len(chunk):

82 | write_chunk(chunk)

83 | chunk = []

84 | n = n + 1

85 | if n == rle3:

86 | n = n - 1

87 | write_rle(n)

88 | n = 0

89 | tail = i < n1

90 | if i == n1:

91 | break

92 | i = i + 1

93 | if len(chunk):

94 | chunk.append(val)

95 | write_chunk(chunk)

96 | chunk = []

97 | elif tail:

98 | write_rle(n)

99 | n = 0

100 | return out.bytes()

101 |

--------------------------------------------------------------------------------

/img_sgm_ml/rle/inputstream.py:

--------------------------------------------------------------------------------

1 | class InputStream:

2 | def __init__(self, data):

3 | self.data = data

4 | self.i = 0

5 |

6 | def read(self, size):

7 | out = self.data[self.i:self.i + size]

8 | self.i += size

9 | return int(out, 2)

10 |

--------------------------------------------------------------------------------

/img_sgm_ml/rle/outputstream.py:

--------------------------------------------------------------------------------

1 | import math

2 |

3 | import numpy as np

4 |

5 | U32 = int(math.pow(2, 32))

6 |

7 |

8 | class OutputStream:

9 | """

10 | Python implementation of the Output Stream by thi-ng

11 | https://github.com/thi-ng/umbrella/tree/develop/packages/bitstream/

12 | """

13 | buffer: np.array

14 | pos: int

15 | bit: int

16 | bitpos: int

17 |

18 | def __init__(self, size):

19 | self.buffer = np.empty(size, dtype=np.uint8)

20 | self.pos = 0

21 | self.bitpos = 0

22 | self.seek(0)

23 |

24 | def position(self):

25 | return self.bitpos

26 |

27 | def seek(self, pos: int):

28 | if pos >= len(self.buffer) << 3:

29 | print("seek pos out of bounds:", pos)

30 |

31 | self.pos = pos >> 3

32 | self.bit = 8 - (pos & 0x7)

33 | self.bitpos = pos

34 |

35 | def write(self, x: int, word_size: int = 1):

36 | if word_size > 32:

37 | hi = x // U32  # integer division stays exact for values above 2**53

38 | self.write(hi, word_size - 32)

39 | self.write(x - hi * U32, 32)

40 | elif word_size > 8:

41 | n: int = word_size & -8

42 | msb = word_size - n

43 | if msb > 0:

44 | self._write(x >> n, msb)

45 | n -= 8

46 | while n >= 0:

47 | self._write(x >> n, 8)

48 | n -= 8

49 | else:

50 | self._write(x, word_size)

51 |

52 | def write_bit(self, x):

53 | self.bit = self.bit - 1

54 | self.buffer[self.pos] = (self.buffer[self.pos] & ~(1 << self.bit)) | (x << self.bit)

55 |

56 | if self.bit == 0:

57 | self.ensure_size()

58 | self.bit = 8

59 | self.bitpos = self.bitpos + 1

60 |

61 | def write_words(self, data, word_size):

62 | for v in data:

63 | self.write(v, word_size)

64 |

65 | def _write(self, x: int, word_size: int):

66 | x &= (1 << word_size) - 1

67 | buf = self.buffer

68 | pos = self.pos

69 | bit = self.bit

70 | b = bit - word_size

71 | m = ~((1 << bit) - 1) if bit < 8 else 0

72 | if b >= 0:

73 | m |= (1 << b) - 1

74 | buf[pos] = (buf[pos] & m) | ((x << b) & ~m)

75 | if b == 0:

76 | self.ensure_size()

77 | self.bit = 8

78 | else:

79 | self.bit = b

80 | else:

81 | self.bit = bit = 8 + b

82 | buf[pos] = (buf[pos] & m) | ((x >> -b) & ~m)

83 | self.ensure_size()

84 | self.buffer[self.pos] = (self.buffer[self.pos] & ((1 << bit) - 1)) | ((x << bit) & 0xff)

85 | self.bitpos += word_size

86 |

87 | def bytes(self):

88 | return self.buffer[0:self.pos + (1 if self.bit & 7 else 0)]

89 |

90 | def ensure_size(self):

91 | self.pos = self.pos + 1

92 | if self.pos == len(self.buffer):

93 | b = np.zeros(len(self.buffer) << 1, dtype=np.uint8)  # grown half starts zeroed

94 | b[:len(self.buffer)] = self.buffer

95 | self.buffer = b

96 |
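The `elif word_size > 8` branch of `write` splits a word into a leading partial chunk followed by whole bytes. The sketch below mirrors that arithmetic in a standalone function so the split can be inspected without a buffer; `split_word` is a hypothetical name introduced only for this illustration, and it assumes `8 < word_size <= 32` as in that branch.

```python
def split_word(x, word_size):
    """Return the (value, bit_count) chunks for one multi-byte word.

    Illustrative only: mirrors the chunking in OutputStream.write for
    8 < word_size <= 32, including the masking that _write applies.
    """
    n = word_size & -8      # largest multiple of 8 that is <= word_size
    msb = word_size - n     # leftover high bits that do not fill a byte
    parts = []
    if msb > 0:
        parts.append(((x >> n) & ((1 << msb) - 1), msb))
    n -= 8
    while n >= 0:
        parts.append(((x >> n) & 0xFF, 8))
        n -= 8
    return parts


twelve_bit = split_word(0xABC, 12)
sixteen_bit = split_word(0x1234, 16)
print(twelve_bit, sixteen_bit)
```

Emitting the partial chunk first keeps the stream most-significant-bit first, which matches how `InputStream.read` consumes it.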

--------------------------------------------------------------------------------

/img_sgm_ml/rle/test.py:

--------------------------------------------------------------------------------

1 | import numpy as np

2 |

3 | from img_sgm_ml.rle.decode import decode

4 | from img_sgm_ml.rle.encode import encode

5 |

6 | if __name__ == "__main__":

7 | x = np.array([123, 12, 33, 123, 235, 45, 6, 57, 157, 254, 56, 67], dtype=np.uint8)

8 |

9 | enc = encode(x, len(x))

10 | dec = decode(enc)

11 |

12 | print("Round trip matches:", np.array_equal(dec, x))

13 |

--------------------------------------------------------------------------------

/img_sgm_ml/scripts/load_completion.py:

--------------------------------------------------------------------------------

1 | import label_studio_converter.brush as b

2 | import matplotlib.pyplot as plt

3 | import numpy as np

4 |

5 | if __name__ == "__main__":

6 | b.convert_task_dir("../../img_sgm/completions", "./out")

7 | arr = np.load("./out/task-82.npy")

8 | plt.imsave("out/task82.png", arr)

9 |

10 | # import sys

11 | # import os

12 | # sys.path.append(os.path.abspath("../rle/"))

13 | # from encode import encode

14 | #

15 | # rle_own = encode(image, len(image))

16 | #

17 | # print(np.array_equal(rle, rle_own))

18 | #

19 | # for i, x in enumerate(rle):

20 | # if x != rle_own[i]:

21 | # print(i, x, rle_own[i])

22 |

--------------------------------------------------------------------------------

/img_sgm_ml/scripts/resize.py:

--------------------------------------------------------------------------------

1 | import os

2 |

3 | import cv2

4 |

5 |

6 | # Resizes all the files in a directory

7 |

8 | def resize(dir,

9 | shape,

10 | ending=None): # "JPG" e.g.

11 | files = None

12 | hashes = []

13 |

14 | # shape[0] is width

15 | x = shape[0] # Target width; if only x is set, y is scaled proportionally (e.g. 100)

16 | y = shape[1] # Target height; if only y is set, x is scaled proportionally (e.g. 100)

17 |

18 | if ending is None:

19 | files = [f for f in os.listdir(dir) if not (f.startswith(".") or os.path.isdir(os.path.join(dir,f)))]

20 | else:

21 | files = [f for f in os.listdir(dir) if f.endswith(ending) and not (f.startswith(".") or os.path.isdir(os.path.join(dir,f)))]

22 |

23 | i = 0

24 | for f in files:

25 | f = os.path.join(dir, f)

26 |

27 | print(f'Working on {f}')

28 |

29 | img = cv2.imread(f)

30 |

31 | # Check for duplicates

32 | dhash = compute_hash(img)

33 | exists = None

34 | for h in hashes:

35 | if h["hash"] == dhash:

36 | exists = h["file"]

37 | if exists:

38 | print("-----DUPLICATE-----")

39 | print("Original: " + exists)

40 | print("Same: " + f)

41 | continue

42 | else:

43 | hashes.append({"hash": dhash, "file": f})

44 |

45 | if x is not None and y is not None:

46 | pass  # both dimensions given: use shape unchanged

47 | elif x is not None:

48 | shape = (x, int(float(img.shape[0]) * (float(x) / float(img.shape[1]))))

49 | elif y is not None:

50 | shape = (int(float(img.shape[1]) * (float(y) / float(img.shape[0]))), y)

51 |

52 | print("Shape: ", shape, "Hash: ",dhash)