├── .envrc
├── .github
│   └── workflows
│       ├── backpublish.yml
│       ├── ci.yml
│       └── clean.yml
├── .gitignore
├── COPYING
├── README.md
├── build.sbt
├── flake.lock
├── flake.nix
├── project
│   ├── build.properties
│   └── plugins.sbt
├── scripts
│   └── back-publish
├── src
│   ├── main
│   │   ├── resources
│   │   │   └── scalac-plugin.xml
│   │   ├── scala-newParser
│   │   │   └── StringParser.scala
│   │   ├── scala-newReporting
│   │   │   └── ReportingCompat.scala
│   │   ├── scala-oldParser
│   │   │   └── StringParser.scala
│   │   ├── scala-oldReporting
│   │   │   └── ReportingCompat.scala
│   │   └── scala
│   │       ├── Extractors.scala
│   │       └── KindProjector.scala
│   └── test
│       ├── resources
│       │   └── moo.scala
│       └── scala
│           ├── bounds.scala
│           ├── functor.scala
│           ├── hmm.scala
│           ├── issue80.scala
│           ├── nested.scala
│           ├── pc.scala
│           ├── polylambda.scala
│           ├── test.scala
│           ├── tony.scala
│           └── underscores
│               ├── functor.scala
│               ├── issue80.scala
│               ├── javawildcards.scala
│               ├── nested.scala
│               ├── polylambda.scala
│               ├── test.scala
│               └── tony.scala
└── version.sbt

/.envrc:

--------------------------------------------------------------------------------

1 | use flake

2 |

--------------------------------------------------------------------------------

/.github/workflows/backpublish.yml:

--------------------------------------------------------------------------------

1 | name: Back-Publish

2 |

3 | on:

4 | workflow_dispatch:

5 | inputs:

6 | tag:

7 | description: 'Tag to back-publish. Example: v0.13.2'

8 | required: true

9 | scala:

10 | description: 'Scala version. Example: 2.13.8'

11 | required: true

12 |

13 | env:

14 | GITHUB_TOKEN: ${{ secrets.GITHUB_TOKEN }}

15 |

16 | jobs:

17 | backpublish:

18 | name: Back-publish

19 | runs-on: ubuntu-latest

20 | steps:

21 | - name: Checkout current branch (full)

22 | uses: actions/checkout@v2

23 | with:

24 | fetch-depth: 0

25 |

26 | - name: Setup Java and Scala

27 | uses: olafurpg/setup-scala@v13

28 | with:

29 | java-version: adopt@1.8

30 |

31 | - name: Run back-publish script

32 | run: ./scripts/back-publish -t ${{ github.event.inputs.tag }} -s ${{ github.event.inputs.scala }}

33 |

--------------------------------------------------------------------------------

/.github/workflows/ci.yml:

--------------------------------------------------------------------------------

1 | # This file was automatically generated by sbt-github-actions using the

2 | # githubWorkflowGenerate task. You should add and commit this file to

3 | # your git repository. It goes without saying that you shouldn't edit

4 | # this file by hand! Instead, if you wish to make changes, you should

5 | # change your sbt build configuration to revise the workflow description

6 | # to meet your needs, then regenerate this file.

7 |

8 | name: Continuous Integration

9 |

10 | on:

11 | pull_request:

12 | branches: ['**']

13 | push:

14 | branches: ['**']

15 |

16 | env:

17 | GITHUB_TOKEN: ${{ secrets.GITHUB_TOKEN }}

18 |

19 | jobs:

20 | build:

21 | name: Build and Test

22 | strategy:

23 | matrix:

24 | os: [ubuntu-latest]

25 | scala:

26 | - 2.11.12

27 | - 2.12.8

28 | - 2.12.9

29 | - 2.12.10

30 | - 2.12.11

31 | - 2.12.12

32 | - 2.12.13

33 | - 2.12.14

34 | - 2.12.15

35 | - 2.12.16

36 | - 2.12.17

37 | - 2.12.18

38 | - 2.12.19

39 | - 2.12.20

40 | - 2.13.0

41 | - 2.13.1

42 | - 2.13.2

43 | - 2.13.3

44 | - 2.13.4

45 | - 2.13.5

46 | - 2.13.6

47 | - 2.13.7

48 | - 2.13.8

49 | - 2.13.9

50 | - 2.13.10

51 | - 2.13.11

52 | - 2.13.12

53 | - 2.13.13

54 | - 2.13.14

55 | - 2.13.15

56 | - 2.13.16

57 | java: [zulu@8]

58 | runs-on: ${{ matrix.os }}

59 | steps:

60 | - name: Checkout current branch (full)

61 | uses: actions/checkout@v4

62 | with:

63 | fetch-depth: 0

64 |

65 | - name: Setup Java (zulu@8)

66 | if: matrix.java == 'zulu@8'

67 | uses: actions/setup-java@v4

68 | with:

69 | distribution: zulu

70 | java-version: 8

71 | cache: sbt

72 |

73 | - name: Setup sbt

74 | uses: sbt/setup-sbt@v1

75 |

76 | - name: Check that workflows are up to date

77 | run: sbt '++ ${{ matrix.scala }}' githubWorkflowCheck

78 |

79 | - name: Build project

80 | run: sbt '++ ${{ matrix.scala }}' test

81 |

--------------------------------------------------------------------------------

/.github/workflows/clean.yml:

--------------------------------------------------------------------------------

1 | # This file was automatically generated by sbt-github-actions using the

2 | # githubWorkflowGenerate task. You should add and commit this file to

3 | # your git repository. It goes without saying that you shouldn't edit

4 | # this file by hand! Instead, if you wish to make changes, you should

5 | # change your sbt build configuration to revise the workflow description

6 | # to meet your needs, then regenerate this file.

7 |

8 | name: Clean

9 |

10 | on: push

11 |

12 | jobs:

13 | delete-artifacts:

14 | name: Delete Artifacts

15 | runs-on: ubuntu-latest

16 | env:

17 | GITHUB_TOKEN: ${{ secrets.GITHUB_TOKEN }}

18 | steps:

19 | - name: Delete artifacts

20 | shell: bash {0}

21 | run: |

22 | # Customize those three lines with your repository and credentials:

23 | REPO=${GITHUB_API_URL}/repos/${{ github.repository }}

24 |

25 | # A shortcut to call GitHub API.

26 | ghapi() { curl --silent --location --user _:$GITHUB_TOKEN "$@"; }

27 |

28 | # A temporary file which receives HTTP response headers.

29 | TMPFILE=$(mktemp)

30 |

31 | # An associative array, key: artifact name, value: number of artifacts of that name.

32 | declare -A ARTCOUNT

33 |

34 | # Process all artifacts on this repository, loop on returned "pages".

35 | URL=$REPO/actions/artifacts

36 | while [[ -n "$URL" ]]; do

37 |

38 | # Get current page, get response headers in a temporary file.

39 | JSON=$(ghapi --dump-header $TMPFILE "$URL")

40 |

41 | # Get URL of next page. Will be empty if we are at the last page.

42 | URL=$(grep '^Link:' "$TMPFILE" | tr ',' '\n' | grep 'rel="next"' | head -1 | sed -e 's/.*<//' -e 's/>.*//')

43 | rm -f $TMPFILE

44 |

45 | # Number of artifacts on this page:

46 | COUNT=$(( $(jq <<<$JSON -r '.artifacts | length') ))

47 |

48 | # Loop on all artifacts on this page.

49 | for ((i=0; $i < $COUNT; i++)); do

50 |

51 | # Get name of artifact and count instances of this name.

52 | name=$(jq <<<$JSON -r ".artifacts[$i].name?")

53 | ARTCOUNT[$name]=$(( $(( ${ARTCOUNT[$name]} )) + 1))

54 |

55 | id=$(jq <<<$JSON -r ".artifacts[$i].id?")

56 | size=$(( $(jq <<<$JSON -r ".artifacts[$i].size_in_bytes?") ))

57 | printf "Deleting '%s' #%d, %'d bytes\n" $name ${ARTCOUNT[$name]} $size

58 | ghapi -X DELETE $REPO/actions/artifacts/$id

59 | done

60 | done

61 |

--------------------------------------------------------------------------------

/.gitignore:

--------------------------------------------------------------------------------

1 | lib_managed

2 | project7/boot

3 | project7/plugins/src_managed

4 | project7/plugins/project

5 | project/boot

6 | project/build/target

7 | project/plugins/lib_managed

8 | project/plugins/project

9 | project/plugins/src_managed

10 | project/plugins/target

11 | target

12 | .ensime

13 | .ensime_lucene

14 | TAGS

15 | \#*#

16 | *~

17 | .#*

18 | .lib

19 | usegpg.sbt

20 | .idea

21 | .bsp

22 |

--------------------------------------------------------------------------------

/COPYING:

--------------------------------------------------------------------------------

1 | Copyright (c) 2011-2014 Erik Osheim.

2 |

3 | Permission is hereby granted, free of charge, to any person obtaining a copy of

4 | this software and associated documentation files (the "Software"), to deal in

5 | the Software without restriction, including without limitation the rights to

6 | use, copy, modify, merge, publish, distribute, sublicense, and/or sell copies

7 | of the Software, and to permit persons to whom the Software is furnished to do

8 | so, subject to the following conditions:

9 |

10 | The above copyright notice and this permission notice shall be included in all

11 | copies or substantial portions of the Software.

12 |

13 | THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

14 | IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

15 | FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

16 | AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

17 | LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

18 | OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

19 | SOFTWARE.

20 |

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 | ## Kind Projector

2 |

3 |

4 | [](https://maven-badges.herokuapp.com/maven-central/org.typelevel/kind-projector_2.13.6)

5 |

6 | ### Note on maintenance

7 |

8 | This project is only maintained for Scala 2.x. No new features are developed, but bug fix releases will still be made available. Dotty/Scala 3 has [built-in type lambda syntax](https://docs.scala-lang.org/scala3/reference/new-types/type-lambdas-spec.html) and [kind-projector compatible syntax](https://docs.scala-lang.org/scala3/reference/changed-features/wildcards.html).

9 |

10 | ### Dedication

11 |

12 | > "But I don't want to go among mad people," Alice remarked.

13 | >

14 | > "Oh, you can't help that," said the Cat: "we're all mad here. I'm mad.

15 | > You're mad."

16 | >

17 | > "How do you know I'm mad?" said Alice.

18 | >

19 | > "You must be," said the Cat, "or you wouldn't have come here."

20 | >

21 | > --Lewis Carroll, "Alice's Adventures in Wonderland"

22 |

23 | ### Overview

24 |

25 | One piece of Scala syntactic noise that often trips people up is the

26 | use of type projections to implement anonymous, partially-applied

27 | types. For example:

28 |

29 | ```scala

30 | // partially-applied type named "IntOrA"

31 | type IntOrA[A] = Either[Int, A]

32 |

33 | // type projection implementing the same type anonymously (without a name).

34 | ({type L[A] = Either[Int, A]})#L

35 | ```

36 |

37 | Many people have wished for a better way to do this.
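For comparison, here is a minimal sketch of how the two forms above are used in practice. It is written in plain Scala 2.12/2.13 and does not need the plugin. `Functor`, `Overview`, `named`, and `anonymous` are hypothetical names chosen for illustration. The only point is that the anonymous projection can stand in wherever a named unary type constructor is expected.

```scala
// Hypothetical type class over unary type constructors (illustration only).
trait Functor[F[_]] {
  def map[A, B](fa: F[A])(f: A => B): F[B]
}

object Overview {
  // Named partially-applied type, as in the example above.
  type IntOrA[A] = Either[Int, A]

  val named: Functor[IntOrA] = new Functor[IntOrA] {
    def map[A, B](fa: IntOrA[A])(f: A => B): IntOrA[B] = fa.map(f)
  }

  // The same type constructor written anonymously as a type projection.
  // This is the noisy form the plugin lets you avoid writing by hand.
  val anonymous: Functor[({ type L[A] = Either[Int, A] })#L] =
    new Functor[({ type L[A] = Either[Int, A] })#L] {
      def map[A, B](fa: Either[Int, A])(f: A => B): Either[Int, B] = fa.map(f)
    }
}
```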

38 |

39 | The goal of this plugin is to add a syntax for type lambdas. We do

40 | this by rewriting syntactically valid programs into new programs,

41 | letting us seem to add new keywords to the language. This is achieved

42 | through a compiler plugin performing an (un-typed) tree

43 | transformation.

44 |

45 | One problem with this approach is that it changes the meaning of

46 | (potentially) valid programs. In practice this means that you must

47 | avoid defining the following identifiers:

48 |

49 | 1. `Lambda` and `λ`

50 | 2. `*`, `+*`, and `-*`

51 | 3. `Λ$`

52 | 4. `α$`, `β$`, ...

53 |

54 | If you find yourself using lots of type lambdas, and you don't mind

55 | reserving those identifiers, then this compiler plugin is for you!

56 |

57 | ### Using the plugin

58 |

59 | Kind-projector supports Scala 2.11, 2.12, and 2.13.

60 |

61 | _Note_: as of version 0.11.0, the plugin is published against the full Scala version

62 | (see #15).

63 |

64 | To use this plugin in your own projects, add the following lines to

65 | your `build.sbt` file:

66 |

67 | ```scala

68 | addCompilerPlugin("org.typelevel" % "kind-projector" % "0.13.2" cross CrossVersion.full)

69 | ```

70 | _Note_: for multi-project builds, put the `addCompilerPlugin` clause into the settings section of each sub-project.

71 |

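72 | For maven projects, add the plugin to the configuration of the

73 | scala-maven-plugin (remember to use `_2.11.12`, `_2.12.15`, `_2.13.6`, etc., as appropriate):

74 |

75 |     <plugin>

76 |       <groupId>net.alchim31.maven</groupId>

77 |       <artifactId>scala-maven-plugin</artifactId>

78 |       ...

79 |       <configuration>

80 |         <compilerPlugins>

81 |           <compilerPlugin>

82 |             <groupId>org.typelevel</groupId>

83 |             <artifactId>kind-projector_2.13.6</artifactId>

84 |             <version>0.13.2</version>

85 |           </compilerPlugin>

86 |         </compilerPlugins>

87 |       </configuration>

88 |     </plugin>

89 |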

90 | For mill projects, add the plugin to `scalacPluginIvyDeps`.

91 | Note the triple colons (`:::`), which ensure that the full Scala version is used.

92 |

93 |     override def scalacPluginIvyDeps = Agg(

94 |       ivy"org.typelevel:::kind-projector:0.13.2"

95 |     )

96 |

97 | That's it!

98 |

99 | Versions of the plugin earlier than 0.10.0 were released under a

100 | different organization (`org.spire-math`).

101 |

102 | ### Inline Syntax

103 |

104 | The simplest syntax to use is the inline syntax. This syntax resembles

105 | Scala's use of underscores to define anonymous functions like `_ + _`.

106 |

107 | Since underscore is used for existential types in Scala (and it is

108 | probably too late to change this syntax), we use `*` for the same

109 | purpose. We also use `+*` and `-*` to handle covariant and

110 | contravariant type parameters.

111 |

112 | Here are a few examples:

113 |

114 | ```scala

115 | Tuple2[*, Double] // equivalent to: type R[A] = Tuple2[A, Double]

116 | Either[Int, +*] // equivalent to: type R[+A] = Either[Int, A]

117 | Function2[-*, Long, +*] // equivalent to: type R[-A, +B] = Function2[A, Long, B]

118 | EitherT[*[_], Int, *] // equivalent to: type R[F[_], B] = EitherT[F, Int, B]

119 | ```

120 |

121 | As you can see, this syntax works when each type parameter in the type

122 | lambda is only used in the body once, and in the same order. For more

123 | complex type lambda expressions, you will need to use the function

124 | syntax.
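As a rough illustration of the ordering rule, the first three inline examples above correspond to the named aliases below. This is plain Scala 2.13 with no plugin. `InlineEquivalents` and the names `R1`, `R2`, `R3` are hypothetical. Each `*` becomes a fresh parameter, and the parameters are consumed left to right, exactly once.

```scala
object InlineEquivalents {
  // Tuple2[*, Double]
  type R1[A] = Tuple2[A, Double]
  // Either[Int, +*]
  type R2[+A] = Either[Int, A]
  // Function2[-*, Long, +*]: the first placeholder becomes A, the second becomes B
  type R3[-A, +B] = Function2[A, Long, B]

  val t: R1[String] = ("pi", 3.14)
  val e: R2[String] = Right("ok")
  // Parameter types (String, Long) are inferred through the alias.
  val f: R3[String, Int] = (s, n) => s.length + n.toInt
}
```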

125 |

126 | #### Inline Underscore Syntax

127 |

128 | Since version `0.13.0`, kind-projector adds an option to use the underscore symbol `_` instead of `*` to define anonymous type lambdas.

129 | The syntax roughly follows the [proposed new syntax for wildcards and placeholders](https://docs.scala-lang.org/scala3/reference/changed-features/wildcards.html#migration-strategy) for Scala 3.2+ and is designed to allow cross-compilation of libraries between Scala 2 and Scala 3 while using the new Scala 3 syntax for both versions.

130 |

131 | To enable this mode, add `-P:kind-projector:underscore-placeholders` to your scalac command-line. In sbt you may do this as follows:

132 |

133 | ```scala

134 | ThisBuild / scalacOptions += "-P:kind-projector:underscore-placeholders"

135 | ```

136 |

137 | This mode is designed to be used with scalac versions `2.12.14`+ and `2.13.6`+. These versions add the ability to use `?` as the existential type wildcard ([scala/scala#9560](https://github.com/scala/scala/pull/9560)), which allows the underscore to be repurposed without losing the ability to write existential types. Using this mode with older versions of scalac, or without the `-Xsource:3` flag, is not advised, since you will lose the wildcard syntax for existential types entirely.

138 |

139 | Here are a few examples:

140 |

141 | ```scala

142 | Tuple2[_, Double] // equivalent to: type R[A] = Tuple2[A, Double]

143 | Either[Int, +_] // equivalent to: type R[+A] = Either[Int, A]

144 | Function2[-_, Long, +_] // equivalent to: type R[-A, +B] = Function2[A, Long, B]

145 | EitherT[_[_], Int, _] // equivalent to: type R[F[_], B] = EitherT[F, Int, B]

146 | ```

147 |

148 | Examples with `-Xsource:3`'s `?`-wildcard:

149 |

150 | ```scala

151 | Tuple2[_, ?] // equivalent to: type R[A] = Tuple2[A, x] forSome { type x }

152 | Either[?, +_] // equivalent to: type R[+A] = Either[x, A] forSome { type x }

153 | Function2[-_, ?, +_] // equivalent to: type R[-A, +B] = Function2[A, x, B] forSome { type x }

154 | EitherT[_[_], ?, _] // equivalent to: type R[F[_], B] = EitherT[F, x, B] forSome { type x }

155 | ```

156 |

157 | ### Function Syntax

158 |

159 | The more powerful syntax to use is the function syntax. This syntax

160 | resembles anonymous functions like `x => x + 1` or `(x, y) => x + y`.

161 | In the case of type lambdas, we wrap the entire function type in a

162 | `Lambda` or `λ` type. Both names are equivalent: the former may be

163 | easier to type or say, and the latter is less verbose.

164 |

165 | Here are some examples:

166 |

167 | ```scala

168 | Lambda[A => (A, A)] // equivalent to: type R[A] = (A, A)

169 | Lambda[(A, B) => Either[B, A]] // equivalent to: type R[A, B] = Either[B, A]

170 | Lambda[A => Either[A, List[A]]] // equivalent to: type R[A] = Either[A, List[A]]

171 | ```

172 |

173 | Since types like `(+A, +B) => Either[A, B]` are not syntactically

174 | valid, we provide two alternate methods to specify variance when using

175 | function syntax:

176 |

177 | * Plus/minus: `(+[A], +[B]) => Either[A, B]`

178 | * Backticks: ``(`+A`, `+B`) => Either[A, B]``

179 |

180 | (Note that unlike names like `*`, `+` and `-` do not have to be

181 | reserved. They will only be interpreted this way when used in

182 | parameters to `Lambda[...]` types, which should never conflict with

183 | other usage.)

184 |

185 | Here are some examples with variance:

186 |

187 | ```scala

188 | λ[`-A` => Function1[A, Double]] // equivalent to: type R[-A] = Function1[A, Double]

189 | λ[(-[A], +[B]) => Function2[A, Int, B]] // equivalent to: type R[-A, +B] = Function2[A, Int, B]

190 | λ[`+A` => Either[List[A], List[A]]] // equivalent to: type R[+A] = Either[List[A], List[A]]

191 | ```
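To see why the variance markers matter, here is the first example above expanded by hand into a type projection. This sketch is plain Scala 2.13 with no plugin. `Contravariant` and `VarianceExample` are hypothetical names, and `L` stands in for the generated `Λ$`. Because the trait's parameter is declared `F[-_]`, scalac only accepts a type constructor whose parameter is contravariant. Dropping the `-` from `L[-A]` should therefore make this fail to compile.

```scala
// Hypothetical type class that requires a contravariant type constructor.
trait Contravariant[F[-_]] {
  def contramap[A, B](fa: F[A])(f: B => A): F[B]
}

object VarianceExample {
  // Hand-written equivalent of λ[`-A` => Function1[A, Double]].
  val instance: Contravariant[({ type L[-A] = Function1[A, Double] })#L] =
    new Contravariant[({ type L[-A] = Function1[A, Double] })#L] {
      // Pre-compose: adapt a B into the A that fa already accepts.
      def contramap[A, B](fa: A => Double)(f: B => A): B => Double =
        f.andThen(fa)
    }
}
```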

192 |

193 | The function syntax also supports higher-kinded types as type

194 | parameters. The syntax overloads the existential syntax in this case

195 | (since the type parameters to a type lambda should never contain an

196 | existential).

197 |

198 | Here are a few examples with higher-kinded types:

199 |

200 | ```scala

201 | Lambda[A[_] => List[A[Int]]] // equivalent to: type R[A[_]] = List[A[Int]]

202 | Lambda[(A, B[_]) => B[A]] // equivalent to: type R[A, B[_]] = B[A]

203 | ```

204 |

205 | Finally, variance annotations on higher-kinded sub-parameters are

206 | supported using backticks:

207 |

208 | ```scala

209 | Lambda[`x[+_]` => Q[x, List]] // equivalent to: type R[x[+_]] = Q[x, List]

210 | Lambda[`f[-_, +_]` => B[f]] // equivalent to: type R[f[-_, +_]] = B[f]

211 | ```

212 |

213 | The function syntax with backtick type parameters is the most

214 | expressive syntax kind-projector supports. The other syntaxes are

215 | easier to read at the cost of being unable to express certain

216 | (hopefully rare) type lambdas.

217 |

218 | ### Type lambda gotchas

219 |

220 | The inline syntax is the tersest and is often preferable when

221 | possible. However, there are some type lambdas which it cannot

222 | express.

223 |

224 | For example, imagine that we have `trait Functor[F[_]]`.

225 |

226 | You might want to write `Functor[Future[List[*]]]`, expecting to get

227 | something like:

228 |

229 | ```scala

230 | type X[a] = Future[List[a]]

231 | Functor[X]

232 | ```

233 |

234 | However, `*` always binds at the tightest level, meaning that

235 | `List[*]` is interpreted as `type X[a] = List[a]`, and that

236 | `Future[List[*]]` is invalid.

237 |

238 | In these cases you should prefer the lambda syntax, which would be

239 | written as:

240 |

241 | ```scala

242 | Functor[Lambda[a => Future[List[a]]]]

243 | ```

244 |

245 | Other types which cannot be written correctly using inline syntax are:

246 |

247 | * `Lambda[a => (a, a)]` (repeated use of `a`).

248 | * `Lambda[(a, b) => Either[b, a]]` (reverse order of type params).

249 | * `Lambda[(a, b) => Function1[a, Option[b]]]` (similar to example).

250 |

251 | (And of course, you can use `λ[...]` instead of `Lambda[...]` in any

252 | of these expressions.)

253 |

254 | ### Under The Hood

255 |

256 | This section shows the exact code produced for a few type lambda

257 | expressions.

258 |

259 | ```scala

260 | Either[Int, *]

261 | ({type Λ$[β$0$] = Either[Int, β$0$]})#Λ$

262 |

263 | Function2[-*, String, +*]

264 | ({type Λ$[-α$0$, +γ$0$] = Function2[α$0$, String, γ$0$]})#Λ$

265 |

266 | Lambda[A => (A, A)]

267 | ({type Λ$[A] = (A, A)})#Λ$

268 |

269 | Lambda[(`+A`, B) => Either[A, Option[B]]]

270 | ({type Λ$[+A, B] = Either[A, Option[B]]})#Λ$

271 |

272 | Lambda[(A, B[_]) => B[A]]

273 | ({type Λ$[A, B[_]] = B[A]})#Λ$

274 | ```

275 |

276 | As you can see, names like `Λ$` and `α$` are forbidden because they

277 | might conflict with names the plugin generates.

278 |

279 | If you dislike these unicode names, pass `-Dkp:genAsciiNames=true` to

280 | scalac to use munged ASCII names. This will use `L_kp` in place of

281 | `Λ$`, `X_kp0$` in place of `α$`, and so on.

282 |

283 | ### Polymorphic lambda values

284 |

285 | Scala does not have built-in syntax or types for anonymous function

286 | values which are polymorphic (i.e. which can be parameterized with

287 | types). To illustrate that consider both of these methods:

288 |

289 | ```scala

290 | def firstInt(xs: List[Int]): Option[Int] = xs.headOption

291 | def firstGeneric[A](xs: List[A]): Option[A] = xs.headOption

292 | ```

293 |

294 | Having implemented these methods, we can see that the second just

295 | generalizes the first to work with any type: the function bodies are

296 | identical. We'd like to be able to rewrite each of these methods as a

297 | function value, but we can only represent the first method

298 | (`firstInt`) this way:

299 |

300 | ```scala

301 | val firstInt0: List[Int] => Option[Int] = _.headOption

302 | val firstGeneric0 <what to put here???>

303 | ```

304 |

305 | (One reason to want to do this rewrite is that we might have a method

306 | like `.map` which we'd like to pass an anonymous function value.)

307 |

308 | Several libraries define their own polymorphic function types, such as

309 | the following polymorphic version of `Function1` (which we can use to

310 | implement `firstGeneric0`):

311 |

312 | ```scala

313 | trait PolyFunction1[-F[_], +G[_]] {

314 | def apply[A](fa: F[A]): G[A]

315 | }

316 |

317 | val firstGeneric0: PolyFunction1[List, Option] =

318 | new PolyFunction1[List, Option] {

319 | def apply[A](xs: List[A]): Option[A] = xs.headOption

320 | }

321 | ```

322 |

323 | It's nice that `PolyFunction1` enables us to express polymorphic

324 | function values, but at the level of syntax it's not clear that we've

325 | saved much over defining a polymorphic method (i.e. `firstGeneric`).

326 |

327 | Since 0.9.0, Kind-projector provides a value-level rewrite to fix this

328 | issue and make polymorphic functions (and other types that share their

329 | general shape) easier to work with:

330 |

331 | ```scala

332 | val firstGeneric0 = λ[PolyFunction1[List, Option]](_.headOption)

333 | ```

334 |

335 | Either `λ` or `Lambda` can be used (in a value position) to trigger

336 | this rewrite. By default, the rewrite assumes that the "target method"

337 | to define is called `apply` (as in the previous example), but a

338 | different method can be selected via an explicit call.

339 |

340 | In the following example we are using the polymorphic lambda syntax to

341 | define a `run` method on an instance of the `PF` trait:

342 |

343 | ```scala

344 | trait PF[-F[_], +G[_]] {

345 | def run[A](fa: F[A]): G[A]

346 | }

347 |

348 | val f = Lambda[PF[List, Option]].run(_.headOption)

349 | ```
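For comparison, this is roughly the code that `Lambda[PF[List, Option]].run(_.headOption)` stands for. It is written by hand so that it compiles without the plugin. The plugin generates fresh names instead of `A` and `x`, and `RunExample` is a hypothetical wrapper.

```scala
trait PF[-F[_], +G[_]] {
  def run[A](fa: F[A]): G[A]
}

object RunExample {
  // Hand-written expansion: the lambda body becomes the body of `run`.
  val f: PF[List, Option] = new PF[List, Option] {
    def run[A](x: List[A]): Option[A] = x.headOption
  }
}
```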

350 |

351 | It's possible to nest this syntax. Here's an example taken from

352 | [the wild](http://www.slideshare.net/timperrett/enterprise-algebras-scala-world-2016/49)

353 | of using nested polymorphic lambdas to remove boilerplate:

354 |

355 | ```scala

356 | // without polymorphic lambdas, as in the slide

357 | def injectFC[F[_], G[_]](implicit I: Inject[F, G]) =

358 | new (FreeC[F, *] ~> FreeC[G, *]) {

359 | def apply[A](fa: FreeC[F, A]): FreeC[G, A] =

360 | fa.mapSuspension[Coyoneda[G, *]](

361 | new (Coyoneda[F, *] ~> Coyoneda[G, *]) {

362 | def apply[B](fb: Coyoneda[F, B]): Coyoneda[G, B] = fb.trans(I)

363 | }

364 | )

365 | }

366 |

367 | // with polymorphic lambdas

368 | def injectFC[F[_], G[_]](implicit I: Inject[F, G]) =

369 | λ[FreeC[F, *] ~> FreeC[G, *]](

370 | _.mapSuspension(λ[Coyoneda[F, *] ~> Coyoneda[G, *]](_.trans(I)))

371 | )

372 | ```

373 |

374 | Kind-projector's support for type lambdas operates at the *type level*

375 | (in type positions), whereas this feature operates at the *value

376 | level* (in value positions). To avoid reserving too many names the `λ`

377 | and `Lambda` names were overloaded to do both (mirroring the

378 | relationship between types and their companion objects).

379 |

380 | Here are some examples of expressions, along with whether the lambda

381 | symbol involved represents a type (traditional type lambda) or a value

382 | (polymorphic lambda):

383 |

384 | ```scala

385 | // type lambda (type level)

386 | val functor: Functor[λ[a => Either[Int, a]]] = implicitly

387 |

388 | // polymorphic lambda (value level)

389 | val f = λ[Vector ~> List](_.toList)

390 |

391 | // type lambda (type level)

392 | trait CF2 extends Contravariant[λ[a => Function2[a, a, Double]]] {

393 | ...

394 | }

395 |

396 | // polymorphic lambda (value level)

397 | xyz.translate(λ[F ~> G](fx => fx.flatMap(g)))

398 | ```

399 |

400 | One pattern you might notice is that when `λ` occurs immediately

401 | within `[]` it is referring to a type lambda (since `[]` signals a

402 | type application), whereas when it occurs after `=` or within `()` it

403 | usually refers to a polymorphic lambda, since those tokens usually

404 | signal a value. (The `()` syntax for tuple and function types is an

405 | exception to this pattern.)

406 |

407 | The bottom line is that if you could replace a λ-expression with a

408 | type constructor, it's a type lambda, and if you could replace it with

409 | a value (e.g. `new Xyz[...] { ... }`) then it's a polymorphic lambda.

410 |

411 | ### Polymorphic lambdas under the hood

412 |

413 | What follows are the gory details of the polymorphic lambda rewrite.

414 |

415 | Polymorphic lambdas are a syntactic transformation that occurs just

416 | after parsing (before name resolution or typechecking). Your code will

417 | be typechecked *after* the rewrite.

418 |

419 | Written in its most explicit form, a polymorphic lambda looks like

420 | this:

421 |

422 | ```scala

423 | λ[Op[F, G]].someMethod(<expr>)

424 | ```

425 |

426 | and is rewritten into something like this:

427 |

428 | ```scala

429 | new Op[F, G] {

430 | def someMethod[A](x: F[A]): G[A] = <expr>(x)

431 | }

432 | ```

433 |

434 | (The names `A` and `x` are used for clarity; in practice unique

435 | names will be used for both.)

436 |

437 | This rewrite requires that the following are true:

438 |

439 | * `F` and `G` are unary type constructors (i.e. of shape `F[_]` and `G[_]`).

440 | * `<expr>` is an expression of type `Function1[_, _]`.

441 | * `Op` is parameterized on two unary type constructors.

442 | * `someMethod` is parametric (for any type `A` it takes `F[A]` and returns `G[A]`).

443 |

444 | For example, `Op` might be defined like this:

445 |

446 | ```scala

447 | trait Op[M[_], N[_]] {

448 | def someMethod[A](x: M[A]): N[A]

449 | }

450 | ```

451 |

452 | The entire λ-expression will be rewritten immediately after parsing

453 | (and before name resolution or typechecking). If any of these

454 | constraints are not met, then a compiler error will occur during a

455 | later phase (likely type-checking).

456 |

457 | Here are some polymorphic lambdas along with the corresponding code

458 | after the rewrite:

459 |

460 | ```scala

461 | val f = Lambda[NaturalTransformation[Stream, List]](_.toList)

462 | val f = new NaturalTransformation[Stream, List] {

463 | def apply[A](x: Stream[A]): List[A] = x.toList

464 | }

465 |

466 | type Id[A] = A

467 | val g = λ[Id ~> Option].run(x => Some(x))

468 | val g = new (Id ~> Option) {

469 | def run[A](x: Id[A]): Option[A] = Some(x)

470 | }

471 |

472 | val h = λ[Either[Unit, *] Convert Option](_.fold(_ => None, a => Some(a)))

473 | val h = new Convert[Either[Unit, *], Option] {

474 | def apply[A](x: Either[Unit, A]): Option[A] =

475 | x.fold(_ => None, a => Some(a))

476 | }

477 |

478 | // that last example also includes a type lambda.

479 | // the full expansion would be:

480 | val h = new Convert[({type Λ$[β$0$] = Either[Unit, β$0$]})#Λ$, Option] {

481 | def apply[A](x: ({type Λ$[β$0$] = Either[Unit, β$0$]})#Λ$[A]): Option[A] =

482 | x.fold(_ => None, a => Some(a))

483 | }

484 | ```
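The last expansion can be checked in plain Scala 2.13 without the plugin. `Convert` is not defined in this README, so the trait below is a hypothetical stand-in with the shape the rewrite requires. `L` replaces the generated `Λ$`. Note that the parameter type applies the projection to `A`; without `[A]`, the method would not type-check. The sketch also uses `Option.empty[A]` to pin the result type explicitly.

```scala
// Hypothetical stand-in: the README does not show Convert's definition.
trait Convert[F[_], G[_]] {
  def apply[A](x: F[A]): G[A]
}

object ConvertExample {
  // Hand-expanded form of
  //   λ[Either[Unit, *] Convert Option](_.fold(_ => None, a => Some(a)))
  val h = new Convert[({ type L[b] = Either[Unit, b] })#L, Option] {
    def apply[A](x: ({ type L[b] = Either[Unit, b] })#L[A]): Option[A] =
      x.fold(_ => Option.empty[A], a => Some(a))
  }
}
```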

485 |

486 | Unfortunately the type errors produced by invalid polymorphic lambdas

487 | are likely to be difficult to read. This is an unavoidable consequence

488 | of doing this transformation at the syntactic level.

489 |

490 | ### Building the plugin

491 |

492 | You can build kind-projector using SBT 0.13.0 or newer.

493 |

494 | Here are some useful targets:

495 |

496 | * `compile`: compile the code

497 | * `package`: build the plugin jar

498 | * `test`: compile the test files (no tests run; compilation is the test)

499 | * `console`: launch a REPL with the plugin loaded so you can play around

500 |

501 | You can use the plugin with `scalac` by specifying it on the

502 | command-line. For instance:

503 |

504 | ```

505 | scalac -Xplugin:kind-projector_2.13.6-0.13.2.jar test.scala

506 | ```

507 |

508 | ### Releasing the plugin

509 |

510 | This project must use full cross-versioning and thus needs to be

511 | republished for each new release of Scala, but if the code doesn't

512 | change, we prefer not to ripple downstream with a version bump. Thus,

513 | we typically republish from a tag.

514 |

515 | 1. Be sure you're on Java 8.

516 |

517 | 2. Run `./scripts/back-publish` with the tag and Scala version:

518 |

519 | ```console

520 | $ ./scripts/back-publish

521 | Usage: ./scripts/back-publish [-t <tag>] [-s <scala version>]

522 | $ ./scripts/back-publish -t v0.13.2 -s 2.13.8

523 | ```

524 |

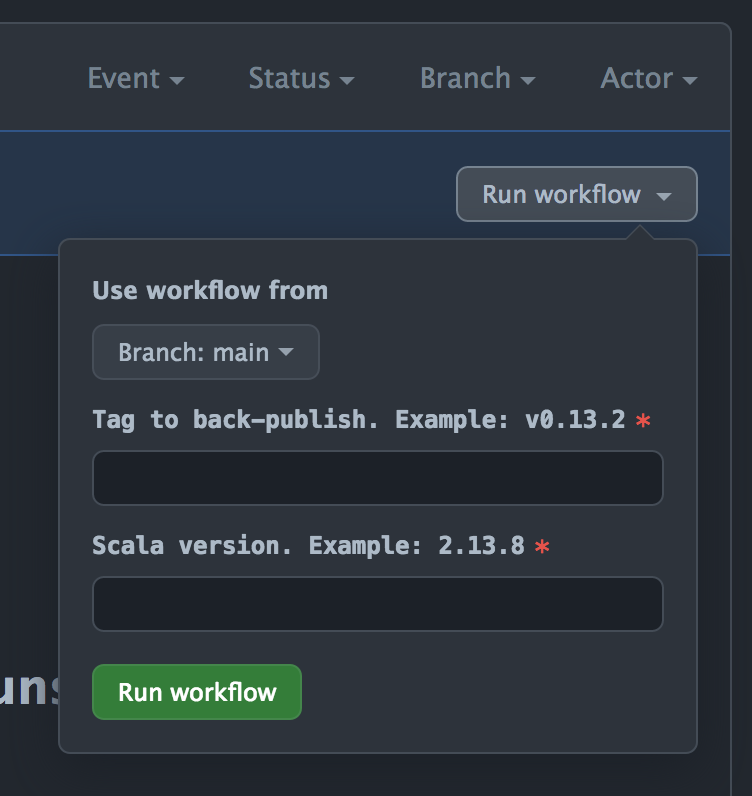

525 | You can also run the above steps in CI by manually triggering the

526 | workflow in `backpublish.yml`. To do so, navigate to the **Actions**

527 | tab, select the **Back-Publish** workflow from the left side-bar, and

528 | click **Run workflow** to access a drop-down menu to run the workflow.

529 |

530 |

531 |

532 | ### Known issues & errata

533 |

534 | When dealing with type parameters that take covariant or contravariant

535 | type parameters, only the function syntax is supported. Huh???

536 |

537 | Here's an example that highlights this issue:

538 |

539 | ```scala

540 | def xyz[F[_[+_]]] = 12345

541 | trait Q[A[+_], B[+_]]

542 |

543 | // we can use kind-projector to adapt Q for xyz

544 | xyz[λ[`x[+_]` => Q[x, List]]] // ok

545 |

546 | // but these don't work (although support for the second form

547 | // could be added in a future release).

548 | xyz[Q[*[+_], List]] // invalid syntax

549 | xyz[Q[*[`+_`], List]] // unsupported

550 | ```
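The working case can also be written by hand as a plain type projection, which compiles without the plugin on Scala 2.13. `KnownIssuesExample` and `L` are hypothetical names; the plugin would generate its own. This is roughly what the backticked `` `x[+_]` `` parameter expands to: a higher-kinded parameter whose own parameter is covariant.

```scala
object KnownIssuesExample {
  def xyz[F[_[+_]]] = 12345
  trait Q[A[+_], B[+_]]

  // Hand-written equivalent of xyz[λ[`x[+_]` => Q[x, List]]].
  // List is covariant, so it fits Q's B[+_] slot.
  val ok: Int = xyz[({ type L[x[+_]] = Q[x, List] })#L]
}
```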

551 |

552 | There have been suggestions for better syntax, like

553 | `[A, B]Either[B, A]` or `[A, B] => Either[B, A]` instead of

554 | `Lambda[(A, B) => Either[B, A]]`. Unfortunately this would actually

555 | require modifying the parser (i.e. the language itself) which is

556 | outside the scope of this project (at least, until there is an earlier

557 | compiler phase to plug into).

558 |

559 | Others have noted that it would be nicer to be able to use `_` for

560 | types the way we do for values, so that we could use `Either[Int, _]`

561 | to define a type lambda the way we use `3 + _` to define a

562 | function. Unfortunately, it's probably too late to modify the meaning

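563 | of `_`, which is why we chose to use `*` instead. (Since 0.13.0, the opt-in `underscore-placeholders` mode described under Inline Underscore Syntax offers this on recent Scala 2 versions.)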

564 |

565 | ### Future Work

566 |

567 | As of 0.5.3, kind-projector should be able to support any type lambda

568 | that can be expressed via type projections, at least using the

569 | function syntax. If you come across a type for which kind-projector

570 | lacks a syntax, please report it.

571 |

572 | ### Disclaimers

573 |

574 | Kind-projector is an unusual compiler plugin in that it runs right after the `parser` phase,

575 | *before* `namer` and `typer`. This means that the rewrites and renaming we are doing operate on

576 | untyped syntax trees and are relatively fragile, and the author disclaims all warranty or

577 | liability of any kind.

578 |

579 | (That said, there are currently no known bugs.)
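Because the rewrite happens on untyped trees, it can help to look at what the plugin actually produced. One way, assuming `-Xprint` accepts the plugin's phase name (declared as `phaseName = "kind-projector"` in `KindProjector.scala`), is a build.sbt setting like this sketch:

```scala
// build.sbt (sketch): print each compilation unit's trees as they look
// right after the kind-projector phase, i.e. after the type lambda rewrites.
Compile / scalacOptions += "-Xprint:kind-projector"
```

Expect synthetic names such as `Λ$` for the lambda and Greek-letter names like `α$0$` for placeholder parameters.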

580 |

581 | If you are using kind-projector in one of your projects, please feel

582 | free to get in touch to report problems (or a lack of problems)!

583 |

584 | ### Community

585 |

586 | People are expected to follow the

587 | [Scala Code of Conduct](https://scala-lang.org/conduct/)

588 | when discussing Kind-projector on GitHub or other venues.

589 |

590 | The project's current maintainers are:

591 |

592 | * [Erik Osheim](http://github.com/non)

593 | * [Tomas Mikula](http://github.com/tomasmikula)

594 | * [Seth Tisue](https://github.com/sethtisue)

595 | * [Miles Sabin](https://github.com/milessabin)

596 |

597 | ### Copyright and License

598 |

599 | All code is available to you under the MIT license, available at

600 | http://opensource.org/licenses/mit-license.php and also in the COPYING

601 | file.

602 |

603 | Copyright Erik Osheim, 2011-2021.

604 |

--------------------------------------------------------------------------------

/build.sbt:

--------------------------------------------------------------------------------

1 | inThisBuild {

2 | Seq(

3 | Global / resolvers += "scala-integration" at "https://scala-ci.typesafe.com/artifactory/scala-integration/",

4 | githubWorkflowPublishTargetBranches := Seq(),

5 | crossScalaVersions := Seq(

6 | "2.11.12",

7 | "2.12.8",

8 | "2.12.9",

9 | "2.12.10",

10 | "2.12.11",

11 | "2.12.12",

12 | "2.12.13",

13 | "2.12.14",

14 | "2.12.15",

15 | "2.12.16",

16 | "2.12.17",

17 | "2.12.18",

18 | "2.12.19",

19 | "2.12.20",

20 | "2.13.0",

21 | "2.13.1",

22 | "2.13.2",

23 | "2.13.3",

24 | "2.13.4",

25 | "2.13.5",

26 | "2.13.6",

27 | "2.13.7",

28 | "2.13.8",

29 | "2.13.9",

30 | "2.13.10",

31 | "2.13.11",

32 | "2.13.12",

33 | "2.13.13",

34 | "2.13.14",

35 | "2.13.15",

36 | "2.13.16",

37 | ),

38 | organization := "org.typelevel",

39 | licenses += ("MIT", url("http://opensource.org/licenses/MIT")),

40 | homepage := Some(url("http://github.com/typelevel/kind-projector")),

41 | Test / publishArtifact := false,

42 | pomExtra := (

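43 | <scm>

44 | <url>git@github.com:typelevel/kind-projector.git</url>

45 | <connection>scm:git:git@github.com:typelevel/kind-projector.git</connection>

46 | </scm>

47 | <developers>

48 | <developer>

49 | <id>d_m</id>

50 | <name>Erik Osheim</name>

51 | <url>http://github.com/non/</url>

52 | </developer>

53 | <developer>

54 | <id>tomasmikula</id>

55 | <name>Tomas Mikula</name>

56 | <url>http://github.com/tomasmikula/</url>

57 | </developer>

58 | </developers>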

59 | ),

60 | )

61 | }

62 |

63 | val HasScalaVersion = {

64 | object Matcher {

65 | def unapply(versionString: String) =

66 | versionString.takeWhile(ch => ch != '-').split('.').toList.map(str => scala.util.Try(str.toInt).toOption) match {

67 | case List(Some(epoch), Some(major), Some(minor)) => Some((epoch, major, minor))

68 | case _ => None

69 | }

70 | }

71 | Matcher

72 | }

73 |

74 | def hasNewReporting(versionString: String) = versionString match {

75 | case HasScalaVersion(2, 12, minor) => minor >= 13

76 | case HasScalaVersion(2, 13, minor) => minor >= 2

77 | case _ => false

78 | }

79 |

80 | def hasNewParser(versionString: String) = versionString match {

81 | case HasScalaVersion(2, 12, minor) => minor >= 13

82 | case HasScalaVersion(2, 13, minor) => minor >= 1

83 | case _ => false

84 | }

85 |

86 | lazy val `kind-projector` = project

87 | .in(file("."))

88 | .settings(

89 | name := "kind-projector",

90 | crossTarget := target.value / s"scala-${scalaVersion.value}", // workaround for https://github.com/sbt/sbt/issues/5097

91 | crossVersion := CrossVersion.full,

92 | crossScalaVersions := (ThisBuild / crossScalaVersions).value,

93 | publishMavenStyle := true,

94 | sonatypeProfileName := organization.value,

95 | publishTo := sonatypePublishToBundle.value,

96 | sonatypeCredentialHost := "s01.oss.sonatype.org",

97 | Compile / unmanagedSourceDirectories ++= {

98 | (Compile / unmanagedSourceDirectories).value.flatMap { dir =>

99 | val sv = scalaVersion.value

100 | val suffices =

101 | (if (hasNewParser(sv)) "-newParser" else "-oldParser") ::

102 | (if (hasNewReporting(sv)) "-newReporting" else "-oldReporting") ::

103 | Nil

104 | suffices.map(suffix => file(dir.getPath + suffix))

105 | }

106 | },

107 | libraryDependencies += scalaOrganization.value % "scala-compiler" % scalaVersion.value,

108 | scalacOptions ++= Seq(

109 | "-Xlint",

110 | "-feature",

111 | "-language:higherKinds",

112 | "-deprecation",

113 | "-unchecked",

114 | ),

115 | Test / scalacOptions ++= {

116 | val jar = (Compile / packageBin).value

117 | Seq("-Yrangepos", s"-Xplugin:${jar.getAbsolutePath}", s"-Jdummy=${jar.lastModified}") // ensures recompile

118 | },

119 | Test / scalacOptions ++= (scalaVersion.value match {

120 | case HasScalaVersion(2, 13, n) if n >= 2 => List("-Wconf:src=WarningSuppression.scala:error")

121 | case _ => Nil

122 | }) ++ List("-P:kind-projector:underscore-placeholders"),

123 | console / initialCommands := "import d_m._",

124 | Compile / console / scalacOptions := Seq("-language:_", "-Xplugin:" + (Compile / packageBin).value),

125 | Test / console / scalacOptions := (Compile / console / scalacOptions).value,

126 | libraryDependencies += "com.novocode" % "junit-interface" % "0.11" % Test,

127 | testOptions += Tests.Argument(TestFrameworks.JUnit, "-a", "-v"),

128 | Test / fork := true,

129 | //Test / scalacOptions ++= Seq("-Xprint:typer", "-Xprint-pos"), // Useful for debugging

130 | )

131 |

--------------------------------------------------------------------------------

/flake.lock:

--------------------------------------------------------------------------------

1 | {

2 | "nodes": {

3 | "devshell": {

4 | "inputs": {

5 | "nixpkgs": [

6 | "typelevel-nix",

7 | "nixpkgs"

8 | ]

9 | },

10 | "locked": {

11 | "lastModified": 1741473158,

12 | "narHash": "sha256-kWNaq6wQUbUMlPgw8Y+9/9wP0F8SHkjy24/mN3UAppg=",

13 | "owner": "numtide",

14 | "repo": "devshell",

15 | "rev": "7c9e793ebe66bcba8292989a68c0419b737a22a0",

16 | "type": "github"

17 | },

18 | "original": {

19 | "owner": "numtide",

20 | "repo": "devshell",

21 | "type": "github"

22 | }

23 | },

24 | "flake-utils": {

25 | "inputs": {

26 | "systems": "systems"

27 | },

28 | "locked": {

29 | "lastModified": 1731533236,

30 | "narHash": "sha256-l0KFg5HjrsfsO/JpG+r7fRrqm12kzFHyUHqHCVpMMbI=",

31 | "owner": "numtide",

32 | "repo": "flake-utils",

33 | "rev": "11707dc2f618dd54ca8739b309ec4fc024de578b",

34 | "type": "github"

35 | },

36 | "original": {

37 | "owner": "numtide",

38 | "repo": "flake-utils",

39 | "type": "github"

40 | }

41 | },

42 | "nixpkgs": {

43 | "locked": {

44 | "lastModified": 1747467164,

45 | "narHash": "sha256-JBXbjJ0t6T6BbVc9iPVquQI9XSXCGQJD8c8SgnUquus=",

46 | "owner": "nixos",

47 | "repo": "nixpkgs",

48 | "rev": "3fcbdcfc707e0aa42c541b7743e05820472bdaec",

49 | "type": "github"

50 | },

51 | "original": {

52 | "owner": "nixos",

53 | "ref": "nixpkgs-unstable",

54 | "repo": "nixpkgs",

55 | "type": "github"

56 | }

57 | },

58 | "root": {

59 | "inputs": {

60 | "flake-utils": [

61 | "typelevel-nix",

62 | "flake-utils"

63 | ],

64 | "nixpkgs": [

65 | "typelevel-nix",

66 | "nixpkgs"

67 | ],

68 | "typelevel-nix": "typelevel-nix"

69 | }

70 | },

71 | "systems": {

72 | "locked": {

73 | "lastModified": 1681028828,

74 | "narHash": "sha256-Vy1rq5AaRuLzOxct8nz4T6wlgyUR7zLU309k9mBC768=",

75 | "owner": "nix-systems",

76 | "repo": "default",

77 | "rev": "da67096a3b9bf56a91d16901293e51ba5b49a27e",

78 | "type": "github"

79 | },

80 | "original": {

81 | "owner": "nix-systems",

82 | "repo": "default",

83 | "type": "github"

84 | }

85 | },

86 | "typelevel-nix": {

87 | "inputs": {

88 | "devshell": "devshell",

89 | "flake-utils": "flake-utils",

90 | "nixpkgs": "nixpkgs"

91 | },

92 | "locked": {

93 | "lastModified": 1747667039,

94 | "narHash": "sha256-jlnFJGbksMcOYrTcyYBqmc53sHKxPa9Dq11ahze+Qf8=",

95 | "owner": "rossabaker",

96 | "repo": "typelevel-nix",

97 | "rev": "e2a19a65a9dd14b17120856f350c51fd8652cdde",

98 | "type": "github"

99 | },

100 | "original": {

101 | "owner": "rossabaker",

102 | "repo": "typelevel-nix",

103 | "type": "github"

104 | }

105 | }

106 | },

107 | "root": "root",

108 | "version": 7

109 | }

110 |

--------------------------------------------------------------------------------

/flake.nix:

--------------------------------------------------------------------------------

1 | {

2 | description = "Provision a dev environment";

3 |

4 | inputs = {

5 | typelevel-nix.url = "github:rossabaker/typelevel-nix";

6 | nixpkgs.follows = "typelevel-nix/nixpkgs";

7 | flake-utils.follows = "typelevel-nix/flake-utils";

8 | };

9 |

10 | outputs = { self, nixpkgs, flake-utils, typelevel-nix }:

11 | flake-utils.lib.eachDefaultSystem (system:

12 | let

13 | pkgs = import nixpkgs {

14 | inherit system;

15 | overlays = [ typelevel-nix.overlays.default ];

16 | };

17 | in

18 | {

19 | devShell = pkgs.devshell.mkShell {

20 | imports = [ typelevel-nix.typelevelShell ];

21 | name = "kind-projector";

22 | typelevelShell = {

23 | jdk.package = pkgs.jdk8;

24 | };

25 | commands = [{

26 | name = "back-publish";

27 | help = "back publishes a tag to Sonatype with a specific Scala version";

28 | command = "${./scripts/back-publish} $@";

29 | }];

30 | };

31 | }

32 | );

33 | }

34 |

--------------------------------------------------------------------------------

/project/build.properties:

--------------------------------------------------------------------------------

1 | sbt.version=1.10.4

2 |

--------------------------------------------------------------------------------

/project/plugins.sbt:

--------------------------------------------------------------------------------

1 | addSbtPlugin("io.crashbox" % "sbt-gpg" % "0.2.1")

2 | addSbtPlugin("org.xerial.sbt" % "sbt-sonatype" % "3.9.10")

3 | addSbtPlugin("com.github.sbt" % "sbt-github-actions" % "0.24.0")

4 |

--------------------------------------------------------------------------------

/scripts/back-publish:

--------------------------------------------------------------------------------

1 | #!/usr/bin/env bash

2 |

3 | set -e

4 |

5 | usage() {

6 | echo "Usage: $0 -t <tag> -s <scala_version>" 1>&2

7 | echo "Example: $0 -t v0.13.3 -s 2.13.14"

8 | }

9 |

10 | while getopts "s:t:" OPTION; do

11 | case $OPTION in

12 | s)

13 | SCALA_VERSION=$OPTARG

14 | ;;

15 | t)

16 | TAG=$OPTARG

17 | ;;

18 | *)

19 | usage

20 | exit 1

21 | ;;

22 | esac

23 | done

24 |

25 | if [ -z "$SCALA_VERSION" ] || [ -z "$TAG" ]; then

26 | usage

27 | exit 1

28 | fi

29 |

30 | JAVA_VERSION=$(java -version 2>&1 | head -1 | cut -d'"' -f2 | sed '/^1\./s///' | cut -d'.' -f1)

31 | if [ "$JAVA_VERSION" -ne 8 ]; then

32 | echo "Detected Java ${JAVA_VERSION}, must be 8."

33 | exit 1

34 | fi

35 |

36 | git checkout "$TAG"

37 | sbt "++$SCALA_VERSION" clean test publish sonatypeBundleRelease

38 |

--------------------------------------------------------------------------------

/src/main/resources/scalac-plugin.xml:

--------------------------------------------------------------------------------

1 | <plugin>

2 | <name>kind-projector</name>

3 | <classname>d_m.KindProjector</classname>

4 | </plugin>

5 |

--------------------------------------------------------------------------------

/src/main/scala-newParser/StringParser.scala:

--------------------------------------------------------------------------------

1 | package d_m

2 |

3 | import scala.tools.nsc.Global

4 | import scala.tools.nsc.Settings

5 | import scala.tools.nsc.reporters.StoreReporter

6 |

7 | class StringParser[G <: Global](val global: G) {

8 | import global._

9 | def parse(code: String): Option[Tree] = {

10 | val oldReporter = global.reporter

11 | try {

12 | val r = new StoreReporter(new Settings)

13 | global.reporter = r

14 | val tree = newUnitParser(code).templateStats().headOption

15 | if (r.infos.isEmpty) tree else None

16 | } finally {

17 | global.reporter = oldReporter

18 | }

19 | }

20 | }

21 |

22 |

--------------------------------------------------------------------------------

/src/main/scala-newReporting/ReportingCompat.scala:

--------------------------------------------------------------------------------

1 | package d_m

2 |

3 | //compatibility stub

4 | trait ReportingCompat

--------------------------------------------------------------------------------

/src/main/scala-oldParser/StringParser.scala:

--------------------------------------------------------------------------------

1 | package d_m

2 |

3 | import scala.tools.nsc.Global

4 | import scala.tools.nsc.reporters.StoreReporter

5 |

6 | class StringParser[G <: Global](val global: G) {

7 | import global._

8 | def parse(code: String): Option[Tree] = {

9 | val oldReporter = global.reporter

10 | try {

11 | val r = new StoreReporter()

12 | global.reporter = r

13 | val tree = newUnitParser(code).templateStats().headOption

14 | if (r.infos.isEmpty) tree else None

15 | } finally {

16 | global.reporter = oldReporter

17 | }

18 | }

19 | }

20 |

21 |

--------------------------------------------------------------------------------

/src/main/scala-oldReporting/ReportingCompat.scala:

--------------------------------------------------------------------------------

1 | package d_m

2 |

3 | import scala.tools.nsc._

4 |

5 | /** Taken from ScalaJS, copyright Sébastien Doeraene

6 | *

7 | * Original at

8 | * https://github.com/scala-js/scala-js/blob/950fcda1e480d54b50e75f4cce5bd4135cb58a53/compiler/src/main/scala/org/scalajs/nscplugin/CompatComponent.scala

9 | *

10 | * Modified with only superficial understanding of the code, taking only the bits

11 | * that are needed here for configurable warnings

12 | *

13 | */

14 | trait ReportingCompat {

15 |

16 | val global: Global

17 |

18 | implicit final class GlobalCompat(self: global.type) {

19 |

20 | object runReporting {

21 | def warning(pos: global.Position, msg: String, cat: Any, site: global.Symbol): Unit =

22 | global.reporter.warning(pos, msg)

23 | }

24 | }

25 |

26 | object Reporting {

27 | object WarningCategory {

28 | val Deprecation: Any = null

29 | }

30 | }

31 | }

--------------------------------------------------------------------------------

/src/main/scala/Extractors.scala:

--------------------------------------------------------------------------------

1 | package d_m

2 |

3 | import scala.reflect.internal.SymbolTable

4 |

5 | trait Extractors {

6 | val global: SymbolTable

7 | import global._

8 |

9 | def isTermLambdaMarker(tree: Tree): Boolean

10 | def freshName(s: String): String

11 |

12 | object TermLambdaMarker {

13 | def unapply(tree: Tree): Boolean = isTermLambdaMarker(tree)

14 | }

15 | object UnappliedType {

16 | def unapply(tree: Tree): Option[List[Tree]] = tree match {

17 | case AppliedTypeTree(fun, args) => Some(fun :: args)

18 | case _ => None

19 | }

20 | }

21 | object TermLambdaTypeArgs {

22 | def unapply(tree: Tree): Option[List[Tree]] = tree match {

23 | case TypeApply(TermLambdaMarker(), targs) => Some(targs)

24 | case _ => None

25 | }

26 | }

27 | object Function1Tree {

28 | private def freshTerm(): TermName = newTermName(freshName("pf"))

29 | private def casesFunction(name: TermName, cases: List[CaseDef]) = name -> Match(Ident(name), cases)

30 |

31 | def unapply(tree: Tree): Option[(TermName, Tree)] = tree match {

32 | case Function(ValDef(_, name, _, _) :: Nil, body) => Some(name -> body)

33 | case Match(EmptyTree, cases) => Some(casesFunction(freshTerm(), cases))

34 | case _ => None

35 | }

36 | }

37 | object PolyLambda {

38 | def unapply(tree: Tree): Option[(TermName, List[Tree], Tree)] = tree match {

39 | case Apply(Select(TermLambdaTypeArgs(targs), method), arg :: Nil) => Some((method.toTermName, targs, arg))

40 | case Apply(TermLambdaTypeArgs(targs), arg :: Nil) => Some((nme.apply, targs, arg))

41 | case _ => None

42 | }

43 | }

44 | }

45 |

--------------------------------------------------------------------------------

/src/main/scala/KindProjector.scala:

--------------------------------------------------------------------------------

1 | package d_m

2 |

3 | import scala.tools.nsc

4 | import nsc._

5 | import nsc.plugins.Plugin

6 | import nsc.plugins.PluginComponent

7 | import nsc.transform.Transform

8 | import nsc.transform.TypingTransformers

9 | import nsc.symtab.Flags._

10 | import nsc.ast.TreeDSL

11 |

12 | import scala.reflect.NameTransformer

13 | import scala.collection.mutable

14 |

15 | class KindProjector(val global: Global) extends Plugin {

16 | val name = "kind-projector"

17 | val description = "Expand type lambda syntax"

18 |

19 | override val optionsHelp = Some(Seq(

20 | "-P:kind-projector:underscore-placeholders - treat underscore as a type lambda placeholder.",

21 | "This disables Scala 2 wildcards; to write wildcards with the `?` symbol, also enable",

22 | "the `-Xsource:3` option.").mkString(" "))

23 |

24 |

25 | override def init(options: List[String], error: String => Unit): Boolean = {

26 | if (options.exists(_ != "underscore-placeholders")) {

27 | error(s"Error: $name takes no options except `-P:kind-projector:underscore-placeholders`, but got ${options.mkString(",")}")

28 | }

29 | true

30 | }

31 |

32 | val components = new KindRewriter(this, global) :: Nil

33 | }

34 |

35 | class KindRewriter(plugin: Plugin, val global: Global)

36 | extends PluginComponent with Transform with TypingTransformers with TreeDSL with ReportingCompat {

37 |

38 | import global._

39 |

40 | val sp = new StringParser[global.type](global)

41 |

42 | val runsAfter = "parser" :: Nil

43 | override val runsBefore = "namer" :: Nil

44 | val phaseName = "kind-projector"

45 |

46 | lazy val useAsciiNames: Boolean =

47 | System.getProperty("kp:genAsciiNames") == "true"

48 |

49 | lazy val useUnderscoresForTypeLambda: Boolean = {

50 | plugin.options.contains("underscore-placeholders")

51 | }

52 |

53 | def newTransformer(unit: CompilationUnit): MyTransformer =

54 | new MyTransformer(unit)

55 |

56 | class MyTransformer(unit: CompilationUnit) extends TypingTransformer(unit) with Extractors {

57 | val global: KindRewriter.this.global.type = KindRewriter.this.global

58 |

59 | // we use this to avoid expensively recomputing the same tree

60 | // multiple times. this was introduced to fix a situation where

61 | // using kp with hlists was too costly.

62 | val treeCache = mutable.Map.empty[Tree, Tree]

63 |

64 | sealed abstract class Variance(val modifiers: Long)

65 | case object Invariant extends Variance(0)

66 | case object Covariant extends Variance(COVARIANT)

67 | case object Contravariant extends Variance(CONTRAVARIANT)

68 |

69 | // Reserve some names

70 | val TypeLambda1 = newTypeName("Lambda")

71 | val TypeLambda2 = newTypeName("λ")

72 | val InvPlaceholder = newTypeName("$times")

73 | val CoPlaceholder = newTypeName("$plus$times")

74 | val ContraPlaceholder = newTypeName("$minus$times")

75 |

76 | val TermLambda1 = TypeLambda1.toTermName

77 | val TermLambda2 = TypeLambda2.toTermName

78 |

79 | object InvPlaceholderScala3 {

80 | def apply(n: Name): Boolean = n match { case InvPlaceholderScala3() => true; case _ => false }

81 | def unapply(t: TypeName): Boolean = t.startsWith("_$") && t.drop(2).decoded.forall(_.isDigit)

82 | }

83 | val CoPlaceholderScala3 = newTypeName("$plus_")

84 | val ContraPlaceholderScala3 = newTypeName("$minus_")

85 |

86 | object Placeholder {

87 | def unapply(name: TypeName): Option[Variance] = name match {

88 | case InvPlaceholder => Some(Invariant)

89 | case CoPlaceholder => Some(Covariant)

90 | case ContraPlaceholder => Some(Contravariant)

91 | case _ if useUnderscoresForTypeLambda => name match {

92 | case InvPlaceholderScala3() => Some(Invariant)

93 | case CoPlaceholderScala3 => Some(Covariant)

94 | case ContraPlaceholderScala3 => Some(Contravariant)

95 | case _ => None

96 | }

97 | case _ => None

98 | }

99 | }

100 |

101 | // the name to use for the type lambda itself.

102 | // e.g. the L in ({ type L[x] = Either[x, Int] })#L.

103 | val LambdaName = newTypeName(if (useAsciiNames) "L_kp" else "Λ$")

104 |

105 | // these will be used for matching but aren't reserved

106 | val Plus = newTypeName("$plus")

107 | val Minus = newTypeName("$minus")

108 |

109 | def isTermLambdaMarker(tree: Tree): Boolean = tree match {

110 | case Ident(TermLambda1 | TermLambda2) => true

111 | case _ => false

112 | }

113 |

114 | private var cnt = -1 // So we actually start naming from 0

115 |

116 | @inline

117 | final def freshName(s: String): String = {

118 | cnt += 1

119 | s + cnt + "$"

120 | }

121 |

122 | /**

123 | * Produce type lambda param names.

124 | *

125 | * The name is always appended with a unique number for the compilation

126 | * unit to prevent shadowing of names in a nested context.

127 | *

128 | * If useAsciiNames is set, the legacy names (X_kp0$, X_kp1$, etc)

129 | * will be used.

130 | *

131 | * Otherwise:

132 | *

133 | * The first parameter (i=0) will be α$, the second β$, and so on.

134 | * After producing ω$ (for i=24), the letters wrap back around with

135 | * a number appended.

136 | */

137 | def newParamName(i: Int): TypeName = {

138 | require(i >= 0, s"negative param name index $i")

139 | val name = {

140 | if (useAsciiNames) {

141 | "X_kp%d".format(i) + "$"

142 | } else {

143 | val j = i % 25

144 | val k = i / 25

145 | val c = ('α' + j).toChar

146 | if (k == 0) s"$c$$" else s"$c$$$k$$"

147 | }

148 | }

149 | newTypeName(freshName(name))

150 | }

151 |

152 | // Define some names (and bounds) that will come in handy.

153 | val NothingLower = gen.rootScalaDot(tpnme.Nothing)

154 | val AnyUpper = gen.rootScalaDot(tpnme.Any)

155 | val AnyRefBase = gen.rootScalaDot(tpnme.AnyRef)

156 | val DefaultBounds = TypeBoundsTree(NothingLower, AnyUpper)

157 |

158 | // Handy way to make a TypeName from a Name.

159 | def makeTypeName(name: Name): TypeName =

160 | newTypeName(name.toString)

161 |

162 | // We use this to create type parameters inside our type projection, e.g.

163 | // the A in: ({type L[A] = (A, Int) => A})#L.

164 | def makeTypeParam(name: Name, variance: Variance, bounds: TypeBoundsTree = DefaultBounds): TypeDef =

165 | TypeDef(Modifiers(PARAM | variance.modifiers), makeTypeName(name), Nil, bounds)

166 |

167 | def polyLambda(tree: Tree): Tree = tree match {

168 | case PolyLambda(methodName, (arrowType @ UnappliedType(_ :: targs)) :: Nil, Function1Tree(name, body)) =>

169 | val (f, g) = targs match {

170 | case a :: b :: Nil => (a, b)

171 | case a :: Nil => (a, a)

172 | case _ => return tree

173 | }

174 | val TParam = newTypeName(freshName("A"))

175 | atPos(tree.pos.makeTransparent)(

176 | q"new $arrowType { def $methodName[$TParam]($name: $f[$TParam]): $g[$TParam] = $body }"

177 | )

178 | case _ => tree

179 | }

180 |

181 | // The transform method -- this is where the magic happens.

182 | override def transform(tree: Tree): Tree = {

183 |

184 | // Given a name, e.g. A or `+A` or `A <: Foo`, build a type

185 | // parameter tree using the given name, bounds, variance, etc.

186 | def makeTypeParamFromName(name: Name): TypeDef = {

187 | val decoded = NameTransformer.decode(name.toString)

188 | val src = s"type _X_[$decoded] = Unit"

189 | sp.parse(src) match {

190 | case Some(TypeDef(_, _, List(tpe), _)) => tpe

191 | case _ => reporter.error(tree.pos, s"Can't parse param: $name"); null

192 | }

193 | }

194 |

195 | // Like makeTypeParam, but can be used recursively in the case of types

196 | // that are themselves parameterized.

197 | def makeComplexTypeParam(t: Tree): TypeDef = t match {

198 | case Ident(name) =>

199 | makeTypeParamFromName(name)

200 |

201 | case AppliedTypeTree(Ident(name), ps) =>

202 | val tparams = ps.map(makeComplexTypeParam)

203 | TypeDef(Modifiers(PARAM), makeTypeName(name), tparams, DefaultBounds)

204 |

205 | case ExistentialTypeTree(AppliedTypeTree(Ident(name), ps), _) =>

206 | val tparams = ps.map(makeComplexTypeParam)

207 | TypeDef(Modifiers(PARAM), makeTypeName(name), tparams, DefaultBounds)

208 |

209 | case x =>

210 | reporter.error(x.pos, "Can't parse %s (%s)".format(x, x.getClass.getName))

211 | null.asInstanceOf[TypeDef]

212 | }

213 |

214 | // Given the list a::as, this method finds the last argument in the list

215 | // (the "subtree") and returns that separately from the other arguments.

216 | // The stack is just used to enable tail recursion, and a and as are

217 | // passed separately to avoid dealing with empty lists.

218 | def parseLambda(a: Tree, as: List[Tree], stack: List[Tree]): (List[Tree], Tree) =

219 | as match {

220 | case Nil => (stack.reverse, a)

221 | case h :: t => parseLambda(h, t, a :: stack)

222 | }

223 |

224 | // Builds the horrendous type projection tree. To remind the reader,

225 | // given List("A", "B") and <(A, Int, B)> we are generating a tree for

226 | // ({ type L[A, B] = (A, Int, B) })#L.

227 | def makeTypeProjection(innerTypes: List[TypeDef], subtree: Tree): Tree =

228 | SelectFromTypeTree(

229 | CompoundTypeTree(

230 | Template(

231 | AnyRefBase :: Nil,

232 | ValDef(NoMods, nme.WILDCARD, TypeTree(), EmptyTree),

233 | TypeDef(

234 | NoMods,

235 | LambdaName,

236 | innerTypes,

237 | super.transform(subtree)) :: Nil)),

238 | LambdaName)

239 |

240 | // This method handles the explicit type lambda case, e.g.

241 | // Lambda[(A, B) => Function2[A, Int, B]] case.

242 | def handleLambda(a: Tree, as: List[Tree]): Tree = {

243 | val (args, subtree) = parseLambda(a, as, Nil)

244 | val innerTypes = args.map {

245 | case Ident(name) =>

246 | makeTypeParamFromName(name)

247 |

248 | case AppliedTypeTree(Ident(Plus), Ident(name) :: Nil) =>

249 | makeTypeParam(name, Covariant)

250 |

251 | case AppliedTypeTree(Ident(Minus), Ident(name) :: Nil) =>

252 | makeTypeParam(name, Contravariant)

253 |

254 | case AppliedTypeTree(Ident(name), ps) =>

255 | val tparams = ps.map(makeComplexTypeParam)

256 | TypeDef(Modifiers(PARAM), makeTypeName(name), tparams, DefaultBounds)

257 |

258 | case ExistentialTypeTree(AppliedTypeTree(Ident(name), ps), _) =>

259 | val tparams = ps.map(makeComplexTypeParam)

260 | TypeDef(Modifiers(PARAM), makeTypeName(name), tparams, DefaultBounds)

261 |

262 | case x =>

263 | reporter.error(x.pos, "Can't parse %s (%s)".format(x, x.getClass.getName))

264 | null.asInstanceOf[TypeDef]

265 | }

266 | makeTypeProjection(innerTypes, subtree)

267 | }

268 |

269 | // This method handles the implicit type lambda case, e.g.

270 | // Function2[*, Int, *].

271 | def handlePlaceholders(t: Tree, as: List[Tree]) = {

272 | // create a new type argument list, catching placeholders and creating

273 | // individual identifiers for them.

274 | val xyz = as.zipWithIndex.map {

275 | case (Ident(Placeholder(variance)), i) =>

276 | (Ident(newParamName(i)), Some(Right(variance)))

277 | case (AppliedTypeTree(Ident(Placeholder(variance)), ps), i) =>

278 | (Ident(newParamName(i)), Some(Left((variance, ps.map(makeComplexTypeParam)))))

279 | case (ExistentialTypeTree(AppliedTypeTree(Ident(Placeholder(variance)), ps), _), i) =>

280 | (Ident(newParamName(i)), Some(Left((variance, ps.map(makeComplexTypeParam)))))

281 | case (a, i) =>

282 | // Using super.transform in existential type case in underscore mode

283 | // skips the outer `ExistentialTypeTree` (reproduces in nested.scala test)

284 | // and produces invalid trees where the unused underscore variables are not cleaned up

285 | // by the current transformer

286 | // I do not know why! Using `this.transform` instead works around the issue,

287 | // however it seems to have worked correctly all this time in non-underscore mode, so

288 | // we keep calling super.transform to not change anything for existing code in classic mode.

289 | val transformedA =

290 | if (useUnderscoresForTypeLambda) this.transform(a)

291 | else super.transform(a)

292 | (transformedA, None)

293 | }

294 |

295 |

296 | // for each placeholder, create a type parameter

297 | val innerTypes = xyz.collect {

298 | case (Ident(name), Some(Right(variance))) =>

299 | makeTypeParam(name, variance)

300 | case (Ident(name), Some(Left((variance, tparams)))) =>

301 | TypeDef(Modifiers(PARAM | variance.modifiers), makeTypeName(name), tparams, DefaultBounds)

302 | }

303 |

304 | val args = xyz.map(_._1)

305 |

306 | // if we didn't have any placeholders use the normal transformation.

307 | // otherwise build a type projection.

308 | if (innerTypes.isEmpty) None

309 | else Some(makeTypeProjection(innerTypes, AppliedTypeTree(t, args)))

310 | }

311 |

312 | // confirm that the type argument to a Lambda[...] expression is

313 | // valid. valid means that it is scala.FunctionN for N >= 1.

314 | //

315 | // note that it is possible to confuse the plugin using imports.

316 | // for example:

317 | //

318 | // import scala.{Function1 => Junction1}

319 | // def sink[F[_]] = ()

320 | //

321 | // sink[Lambda[A => Either[Int, A]]] // ok

322 | // sink[Lambda[Function1[A, Either[Int, A]]]] // also ok

323 | // sink[Lambda[Junction1[A, Either[Int, A]]]] // fails

324 | //

325 | // however, since the plugin encourages users to use syntactic

326 | // functions (i.e. with the => syntax) this isn't that big a

327 | // deal.

328 | //

329 | // on 2.11+ we could use quasiquotes' implementation to check

330 | // this via:

331 | //

332 | // internal.reificationSupport.SyntacticFunctionType.unapply

333 | //

334 | // but for now let's just do this.

335 | def validateLambda(pos: Position, target: Tree, a: Tree, as: List[Tree]): Tree = {

336 | def validateArgs: Tree =

337 | if (as.isEmpty) {

338 | reporter.error(tree.pos, s"Function0 cannot be used in type lambdas"); target

339 | } else {

340 | atPos(tree.pos.makeTransparent)(handleLambda(a, as))

341 | }

342 | target match {

343 | case Ident(n) if n.startsWith("Function") =>

344 | validateArgs

345 | case Select(Ident(nme.scala_), n) if n.startsWith("Function") =>

346 | validateArgs

347 | case Select(Select(Ident(nme.ROOTPKG), nme.scala_), n) if n.startsWith("Function") =>

348 | validateArgs

349 | case _ =>

350 | reporter.error(tree.pos, s"Lambda requires a literal function (found $target)"); target

351 | }

352 | }

353 |

354 | // if we've already handled this tree, let's just use the

355 | // previous result and be done now!

356 | treeCache.get(tree) match {

357 | case Some(cachedResult) => return cachedResult

358 | case None => ()

359 | }

360 |

361 | // this is where it all starts.

362 | //

363 | // given a tree, see if it could possibly be a type lambda

364 | // (either placeholder syntax or lambda syntax). if so, handle

365 | // it, and if not, transform it in the normal way.

366 | val result = polyLambda(tree match {

367 |

368 | // Lambda[A => Either[A, Int]] case.

369 | case AppliedTypeTree(Ident(TypeLambda1), AppliedTypeTree(target, a :: as) :: Nil) =>

370 | validateLambda(tree.pos, target, a, as)

371 |

372 | // λ[A => Either[A, Int]] case.

373 | case AppliedTypeTree(Ident(TypeLambda2), AppliedTypeTree(target, a :: as) :: Nil) =>

374 | validateLambda(tree.pos, target, a, as)

375 |

376 | // Either[_, Int] case (if `underscore-placeholders` is enabled)

377 | case ExistentialTypeTree(AppliedTypeTree(t, as), params) if useUnderscoresForTypeLambda =>

378 | handlePlaceholders(t, as) match {

379 | case Some(nt) =>

380 | val nonUnderscoreExistentials = params.filter(p => !InvPlaceholderScala3(p.name))

381 | atPos(tree.pos.makeTransparent)(if (nonUnderscoreExistentials.isEmpty) nt else ExistentialTypeTree(nt, nonUnderscoreExistentials))

382 | case None => super.transform(tree)

383 | }

384 |

385 | // Either[*, Int] case (if no * is present this is a no-op)

386 | case AppliedTypeTree(t, as) =>

387 | handlePlaceholders(t, as) match {

388 | case Some(nt) => atPos(tree.pos.makeTransparent)(nt)

389 | case None => super.transform(tree)

390 | }

391 |

392 | // Otherwise, carry on as normal.

393 | case _ =>

394 | super.transform(tree)

395 | })

396 |

397 | // cache the result so we don't have to recompute it again later.

398 | treeCache(tree) = result

399 | result

400 | }

401 | }

402 | }

403 |

--------------------------------------------------------------------------------
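The dispatch in `transform` above recognises several spellings of one idea: `Lambda[A => Either[A, Int]]`, `λ[A => Either[A, Int]]`, `Either[*, Int]` (or `?`), and `Either[_, Int]` when `underscore-placeholders` is enabled. Each denotes an anonymous type constructor. The sketch below is plugin-free and written for Scala 2.13. It spells that constructor out by hand as a projection from a structural type, the same encoding used by the expected type of `test` in `issue80.scala`. The object and method names are hypothetical, and the plugin's real output uses its own fresh names.

```scala
// Hypothetical, plugin-free illustration of what the recognised
// type-lambda spellings denote.
object LambdaExpansion {
  // Accepts any unary type constructor F and a value of type F[String].
  def unary[F[_]](value: F[String]): F[String] = value

  // Accepts any binary type constructor F and a value of type F[Int, String].
  def binary[F[_, _]](value: F[Int, String]): F[Int, String] = value

  // Lambda[A => Either[A, Int]], λ[A => Either[A, Int]] and Either[*, Int]
  // all stand for this unary constructor, written as a projection.
  val left: Either[String, Int] =
    unary[({ type L[A] = Either[A, Int] })#L](Left("boom"))

  // Lambda[(A, B) => Map[B, A]]: the arity of the function type fixes
  // the arity of the resulting type constructor.
  val swapped: Map[String, Int] =
    binary[({ type L[A, B] = Map[B, A] })#L](Map("one" -> 1))

  // Applying the projected constructor gives back an ordinary Either type.
  val sameType = implicitly[({ type L[A] = Either[A, Int] })#L[String] =:= Either[String, Int]]
}
```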

/src/test/resources/moo.scala:

--------------------------------------------------------------------------------

1 | object Moo {

2 | def test[F[_]](): Unit = {}

3 | t@test@est[E@either@ither[Thr@throwable@owable, *]]()

4 | }

5 |

--------------------------------------------------------------------------------

/src/test/scala/bounds.scala:

--------------------------------------------------------------------------------

1 | package bounds

2 |

3 | trait Leibniz[-L, +H >: L, A >: L <: H, B >: L <: H]

4 |

5 | object Test {

6 | trait Foo

7 | trait Bar extends Foo

8 |

9 | def outer[A >: Bar <: Foo] = {

10 | def test[F[_ >: Bar <: Foo]] = 999

11 | test[λ[`b >: Bar <: Foo` => Leibniz[Bar, Foo, A, b]]]

12 | }

13 | }

14 |

--------------------------------------------------------------------------------
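This test passes a bounded type lambda. The backquoted parameter `` `b >: Bar <: Foo` `` carries bounds that have to fit the declared parameter `F[_ >: Bar <: Foo]` of `test`. A hand-written sketch of the same instantiation (Scala 2.13, no plugin) moves those bounds onto the projected member's type parameter. The object name is hypothetical, and the variance annotations on `Leibniz` are dropped to keep the sketch minimal.

```scala
// Hypothetical, plugin-free version of the bounds test.
object BoundsExpansion {
  // Same shape as the test's Leibniz, minus the variance annotations.
  trait Leibniz[L, H >: L, A >: L <: H, B >: L <: H]
  trait Foo
  trait Bar extends Foo

  def outer[A >: Bar <: Foo]: Int = {
    def test[F[_ >: Bar <: Foo]]: Int = 999
    // Hand-written form of λ[`b >: Bar <: Foo` => Leibniz[Bar, Foo, A, b]]:
    // the bounds on `b` match the bounds required by test's F.
    test[({ type L[b >: Bar <: Foo] = Leibniz[Bar, Foo, A, b] })#L]
  }
}
```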

/src/test/scala/functor.scala:

--------------------------------------------------------------------------------

1 | package functor

2 |

3 | trait Functor[M[_]] {

4 | def fmap[A, B](fa: M[A])(f: A => B): M[B]

5 | }

6 |

7 | class EitherRightFunctor[L] extends Functor[Either[L, *]] {

8 | def fmap[A, B](fa: Either[L, A])(f: A => B): Either[L, B] =

9 | fa match {

10 | case Right(a) => Right(f(a))

11 | case Left(l) => Left(l)

12 | }

13 | }

14 |

15 | class EitherLeftFunctor[R] extends Functor[Lambda[A => Either[A, R]]] {

16 | def fmap[A, B](fa: Either[A, R])(f: A => B): Either[B, R] =

17 | fa match {

18 | case Right(r) => Right(r)

19 | case Left(a) => Left(f(a))

20 | }

21 | }

22 |

--------------------------------------------------------------------------------
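Both instances in this test can also be written without the plugin by spelling out each type lambda as a projection. The following is a minimal sketch for Scala 2.13. The wrapper object `FunctorExpansion` is hypothetical, and the method bodies are copied from the test.

```scala
// Hypothetical, plugin-free equivalents of the two Functor instances.
object FunctorExpansion {
  trait Functor[M[_]] {
    def fmap[A, B](fa: M[A])(f: A => B): M[B]
  }

  // Hand-written form of Functor[Either[L, *]]: maps over the Right side.
  class EitherRightFunctor[L] extends Functor[({ type F[A] = Either[L, A] })#F] {
    def fmap[A, B](fa: Either[L, A])(f: A => B): Either[L, B] =
      fa match {
        case Right(a) => Right(f(a))
        case Left(l)  => Left(l)
      }
  }

  // Hand-written form of Functor[Lambda[A => Either[A, R]]]: maps over the Left side.
  class EitherLeftFunctor[R] extends Functor[({ type F[A] = Either[A, R] })#F] {
    def fmap[A, B](fa: Either[A, R])(f: A => B): Either[B, R] =
      fa match {
        case Right(r) => Right(r)
        case Left(a)  => Left(f(a))
      }
  }
}
```

Usage: `new FunctorExpansion.EitherLeftFunctor[Int].fmap(Left("ab"): Either[String, Int])(_.length)` applies the function to the `Left` value and leaves any `Right` untouched.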

/src/test/scala/hmm.scala:

--------------------------------------------------------------------------------

1 | package hmm

2 |

3 | class TC[A]

4 |

5 | object TC {

6 | def apply[A]: Unit = ()

7 | }

8 |

9 | object test {

10 |

11 | sealed trait HList extends Product with Serializable

12 | case class ::[+H, +T <: HList](head : H, tail : T) extends HList

13 | sealed trait HNil extends HList

14 | case object HNil extends HNil

15 |

16 | TC[Int :: Int :: Int :: Int :: Int :: Int :: Int :: Int ::

17 | Int :: Int :: Int :: Int :: Int :: Int :: Int :: Int ::

18 | Int :: Int :: Int :: Int :: Int :: Int :: Int :: Int :: HNil]

19 |

20 | TC[Int :: Int :: Int :: Int :: Int :: Int :: Int :: Int ::

21 | Int :: Int :: Int :: Int :: Int :: Int :: Int :: Int ::

22 | Int :: Int :: Int :: Int :: Int :: Int :: Int :: Int ::

23 | Int :: Int :: Int :: Int :: Int :: Int :: Int :: Int ::

24 | Int :: Int :: Int :: Int :: Int :: Int :: Int :: Int ::

25 | Int :: Int :: Int :: Int :: Int :: Int :: Int :: Int ::

26 | Int :: Int :: Int :: Int :: Int :: Int :: Int :: Int ::

27 | Int :: Int :: Int :: Int :: Int :: Int :: Int :: Int :: HNil]

28 |

29 | TC[Int :: Int :: Int :: Int :: Int :: Int :: Int :: Int ::

30 | Int :: Int :: Int :: Int :: Int :: Int :: Int :: Int ::

31 | Int :: Int :: Int :: Int :: Int :: Int :: Int :: Int ::

32 | Int :: Int :: Int :: Int :: Int :: Int :: Int :: Int ::

33 | Int :: Int :: Int :: Int :: Int :: Int :: Int :: Int ::

34 | Int :: Int :: Int :: Int :: Int :: Int :: Int :: Int ::

35 | Int :: Int :: Int :: Int :: Int :: Int :: Int :: Int ::

36 | Int :: Int :: Int :: Int :: Int :: Int :: Int :: Int ::

37 | Int :: Int :: Int :: Int :: Int :: Int :: Int :: Int ::

38 | Int :: Int :: Int :: Int :: Int :: Int :: Int :: Int ::

39 | Int :: Int :: Int :: Int :: Int :: Int :: Int :: Int ::

40 | Int :: Int :: Int :: Int :: Int :: Int :: Int :: Int ::

41 | Int :: Int :: Int :: Int :: Int :: Int :: Int :: Int :: HNil]

42 | }

43 |

--------------------------------------------------------------------------------
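This test applies `TC` to very long chains of the infix type `::`. Because `::` ends in a colon, such chains associate to the right, so each one is parsed as a deeply nested type application. The test presumably guards against the plugin's traversal becoming slow on this kind of input, though that intent is an inference from the test's shape. The sketch below is plugin-free, reuses the test's definitions inside a hypothetical object `InfixHList`, and checks the associativity on a two-element chain.

```scala
// Hypothetical, plugin-free check of how the test's infix HList types associate.
object InfixHList {
  sealed trait HList extends Product with Serializable
  final case class ::[+H, +T <: HList](head: H, tail: T) extends HList
  sealed trait HNil extends HList
  case object HNil extends HNil

  // `::` ends in a colon, so this infix type is right-associative:
  // Int :: String :: HNil means ::[Int, ::[String, HNil]].
  type Pair = Int :: String :: HNil
  val sameType = implicitly[Pair =:= ::[Int, ::[String, HNil]]]

  // A value of that type, built with the case-class constructor.
  val value: Pair = ::(1, ::("two", HNil))
}
```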

/src/test/scala/issue80.scala:

--------------------------------------------------------------------------------

1 | trait ~~>[A[_[_]], B[_[_]]] {

2 | def apply[X[_]](a: A[X]): B[X]

3 | }

4 |

5 | trait Bifunctor[F[_[_[_]], _[_[_]]]] {

6 | def bimap[A[_[_]], B[_[_]], C[_[_]], D[_[_]]](fab: F[A, B])(f: A ~~> C, g: B ~~> D): F[C, D]

7 | }

8 |

9 | final case class Coproduct[A[_[_]], B[_[_]], X[_]](run: Either[A[X], B[X]])

10 |

11 | object Coproduct {

12 | def coproductBifunctor[X[_]]: Bifunctor[Coproduct[*[_[_]], *[_[_]], X]] =

13 | new Bifunctor[Coproduct[*[_[_]], *[_[_]], X]] {

14 | def bimap[A[_[_]], B[_[_]], C[_[_]], D[_[_]]](abx: Coproduct[A, B, X])(f: A ~~> C, g: B ~~> D): Coproduct[C, D, X] =

15 | abx.run match {

16 | case Left(ax) => Coproduct(Left(f(ax)))

17 | case Right(bx) => Coproduct(Right(g(bx)))

18 | }

19 | }

20 | def test[X[_]]: Bifunctor[({ type L[F[_[_]], G[_[_]]] = Coproduct[F, G, X] })#L] = coproductBifunctor[X]

21 | }

22 |

--------------------------------------------------------------------------------
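In this test the placeholders themselves are higher-kinded. Each `*[_[_]]` stands for a parameter of shape `F[_[_]]`, and the expected type of `test` spells out the equivalent projection. The sketch below (Scala 2.13, no plugin) writes the instance with that projection and runs `bimap` once. `Named`, `Sized`, the two transformations and the wrapper object are hypothetical and exist only so there is something to map. Type arguments are given explicitly to avoid relying on higher-kinded inference.

```scala
// Hypothetical, plugin-free exercise of the issue80 Bifunctor instance.
object HigherOrderCoproduct {
  trait ~~>[A[_[_]], B[_[_]]] {
    def apply[X[_]](a: A[X]): B[X]
  }

  trait Bifunctor[F[_[_[_]], _[_[_]]]] {
    def bimap[A[_[_]], B[_[_]], C[_[_]], D[_[_]]](fab: F[A, B])(f: A ~~> C, g: B ~~> D): F[C, D]
  }

  final case class Coproduct[A[_[_]], B[_[_]], X[_]](run: Either[A[X], B[X]])

  // Hand-written form of Bifunctor[Coproduct[*[_[_]], *[_[_]], X]].
  def coproductBifunctor[X[_]]: Bifunctor[({ type L[F[_[_]], G[_[_]]] = Coproduct[F, G, X] })#L] =
    new Bifunctor[({ type L[F[_[_]], G[_[_]]] = Coproduct[F, G, X] })#L] {
      def bimap[A[_[_]], B[_[_]], C[_[_]], D[_[_]]](abx: Coproduct[A, B, X])(f: A ~~> C, g: B ~~> D): Coproduct[C, D, X] =
        abx.run match {
          case Left(ax)  => Coproduct[C, D, X](Left(f.apply[X](ax)))
          case Right(bx) => Coproduct[C, D, X](Right(g.apply[X](bx)))
        }
    }

  // Hypothetical types of shape F[_[_]] to map between.
  final case class Named[F[_]](name: String)
  final case class Sized[F[_]](size: Int)

  val nameToSize: Named ~~> Sized = new (Named ~~> Sized) {
    def apply[X[_]](a: Named[X]): Sized[X] = Sized[X](a.name.length)
  }
  val sizeToName: Sized ~~> Named = new (Sized ~~> Named) {
    def apply[X[_]](a: Sized[X]): Named[X] = Named[X]("x" * a.size)
  }

  val input: Coproduct[Named, Sized, Option] =
    Coproduct[Named, Sized, Option](Left(Named[Option]("abc")))

  // bimap swaps the roles: the Left side goes through nameToSize.
  val result: Coproduct[Sized, Named, Option] =
    coproductBifunctor[Option].bimap[Named, Sized, Sized, Named](input)(nameToSize, sizeToName)
}
```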

/src/test/scala/nested.scala:

--------------------------------------------------------------------------------

1 | package nested

2 |

3 | // // From https://github.com/non/kind-projector/issues/20

4 | // import scala.language.higherKinds

5 |

6 | object KindProjectorWarnings {

7 | trait Foo[F[_], A]

8 | trait Bar[A, B]

9 |

10 | def f[G[_]]: Unit = ()