├── 06 Getting a Little Jumpy

├── Figure 6-1-2-R.png

├── Figure 6-2-1-R.png

├── Figure 6-1-1-Stata.png

├── Figure 6-1-2-Stata.png

├── Figure 6-2-1-Julia.png

├── 06 Getting a Little Jumpy.md

├── Table 6-2-1.do

├── Figure 6-2-1.py

├── Figure 6-2-1.jl

├── Figure 6-1-1.py

├── Figure 6-1-1.r

├── Figure 6-2-1.r

├── Figure 6-2-1.do

├── Figure 6-1-1.jl

├── Figure 6-1-1.do

├── Figure 6-1-2.py

├── Figure 6-1-2.r

├── Figure 6-1-2.do

└── Figure 6-1-2.jl

├── 03 Making Regression Make Sense

├── Figure 3-1-2-Julia.png

├── Table 3-3-3.do

├── Figure 3-1-3.do

├── Figure 3-1-2.jl

├── Figure 3-1-2.do

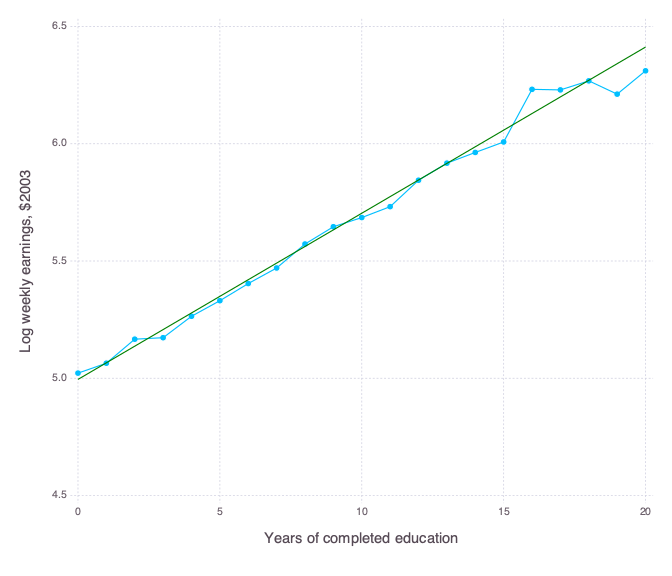

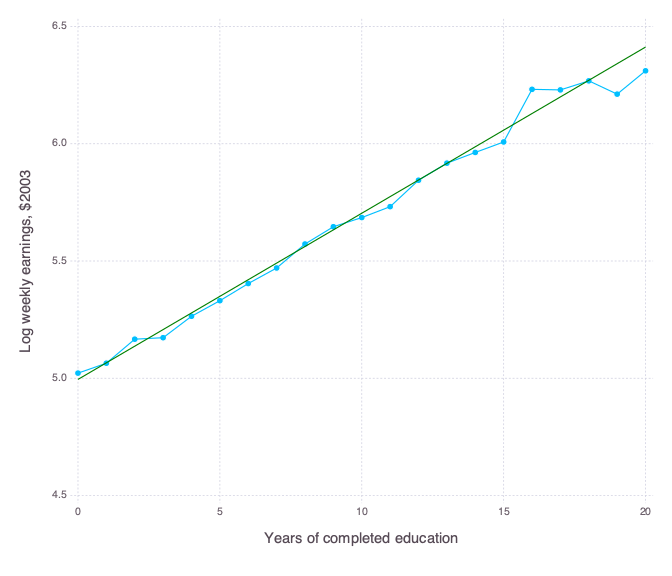

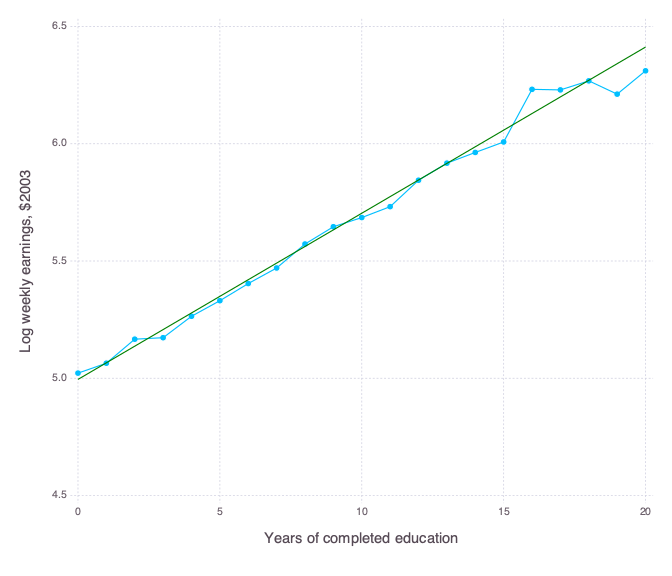

├── Figure 3-1-2.r

├── Figure 3-1-2.py

├── Figure 3-1-3.py

├── Figure 3-1-3.r

├── Table 3-3-2.do

├── Table 3-3-2.py

├── Table 3-3-2.jl

├── Table 3-3-3.r

├── Table 3-3-2.r

└── 03 Making Regression Make Sense.md

├── 04 Instrumental Variables in Action

├── Figure 4-1-1-R.png

├── Figure 4-6-1-R.png

├── Table 4-4-1.do

├── Figure 4-1-1.jl

├── Table 4-1-2.do

├── Table 4-1-2.py

├── Table 4-1-1.do

├── Figure 4-6-1.py

├── 04 Instrumental Variables in Action.md

├── Figure 4-6-1.jl

├── Table 4-1-1.r

├── Figure 4-1-1.py

├── Table 4-1-2.r

├── Figure 4-1-1.r

├── Figure 4-6-1.do

├── Figure 4-6-1.r

├── Figure 4-1-1.do

└── Table 4-6-2.do

├── 05 Fixed Effects, DD and Panel Data

├── Figure 5-2-4-Stata.png

├── Table 5-2-2.do

├── 05 Fixed Effects, DD and Panel Data.md

├── Table 5-2-1.do

├── Table 5-2-1.r

├── Figure 5-2-4.do

├── Figure 5-2-4.r

├── Figure 5-2-4.jl

├── Figure 5-2-4.py

└── Table 5-2-3.do

├── .gitignore

├── 02 The Experimental Ideal

└── Table 2-2-1.do

├── 07 Quantile Regression

├── 07 Quantile Regression.md

├── Table 7-1-1.jl

├── Table 7-1-1.do

├── Table 7-1-1.r

└── Table 7-1-1.py

├── 08 Nonstandard Standard Error Issues

├── Table 8-1-1-alt.r

├── 08 Nonstanard Standard Error Issues.md

├── Table 8-1-1.jl

├── Table 8-1-1.py

├── Table 8-1-1.r

├── Table 8-1-1.do

└── Table-8-1-1.do

└── README.md

/06 Getting a Little Jumpy/Figure 6-1-2-R.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/vikjam/mostly-harmless-replication/HEAD/06 Getting a Little Jumpy/Figure 6-1-2-R.png

--------------------------------------------------------------------------------

/06 Getting a Little Jumpy/Figure 6-2-1-R.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/vikjam/mostly-harmless-replication/HEAD/06 Getting a Little Jumpy/Figure 6-2-1-R.png

--------------------------------------------------------------------------------

/06 Getting a Little Jumpy/Figure 6-1-1-Stata.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/vikjam/mostly-harmless-replication/HEAD/06 Getting a Little Jumpy/Figure 6-1-1-Stata.png

--------------------------------------------------------------------------------

/06 Getting a Little Jumpy/Figure 6-1-2-Stata.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/vikjam/mostly-harmless-replication/HEAD/06 Getting a Little Jumpy/Figure 6-1-2-Stata.png

--------------------------------------------------------------------------------

/06 Getting a Little Jumpy/Figure 6-2-1-Julia.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/vikjam/mostly-harmless-replication/HEAD/06 Getting a Little Jumpy/Figure 6-2-1-Julia.png

--------------------------------------------------------------------------------

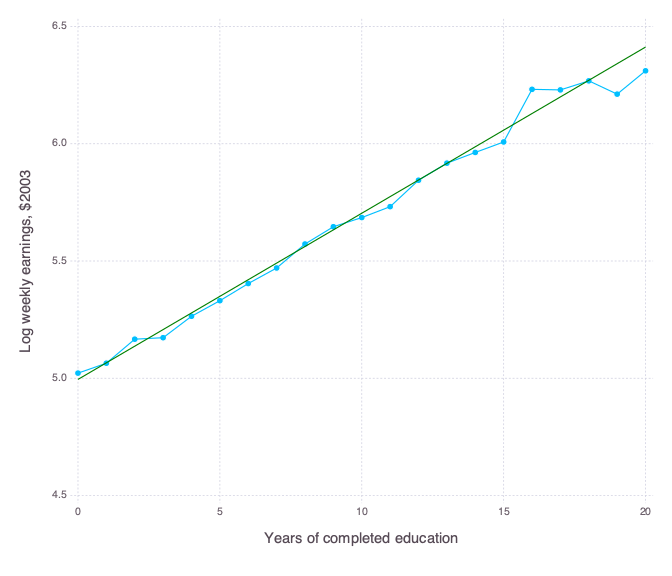

/03 Making Regression Make Sense/Figure 3-1-2-Julia.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/vikjam/mostly-harmless-replication/HEAD/03 Making Regression Make Sense/Figure 3-1-2-Julia.png

--------------------------------------------------------------------------------

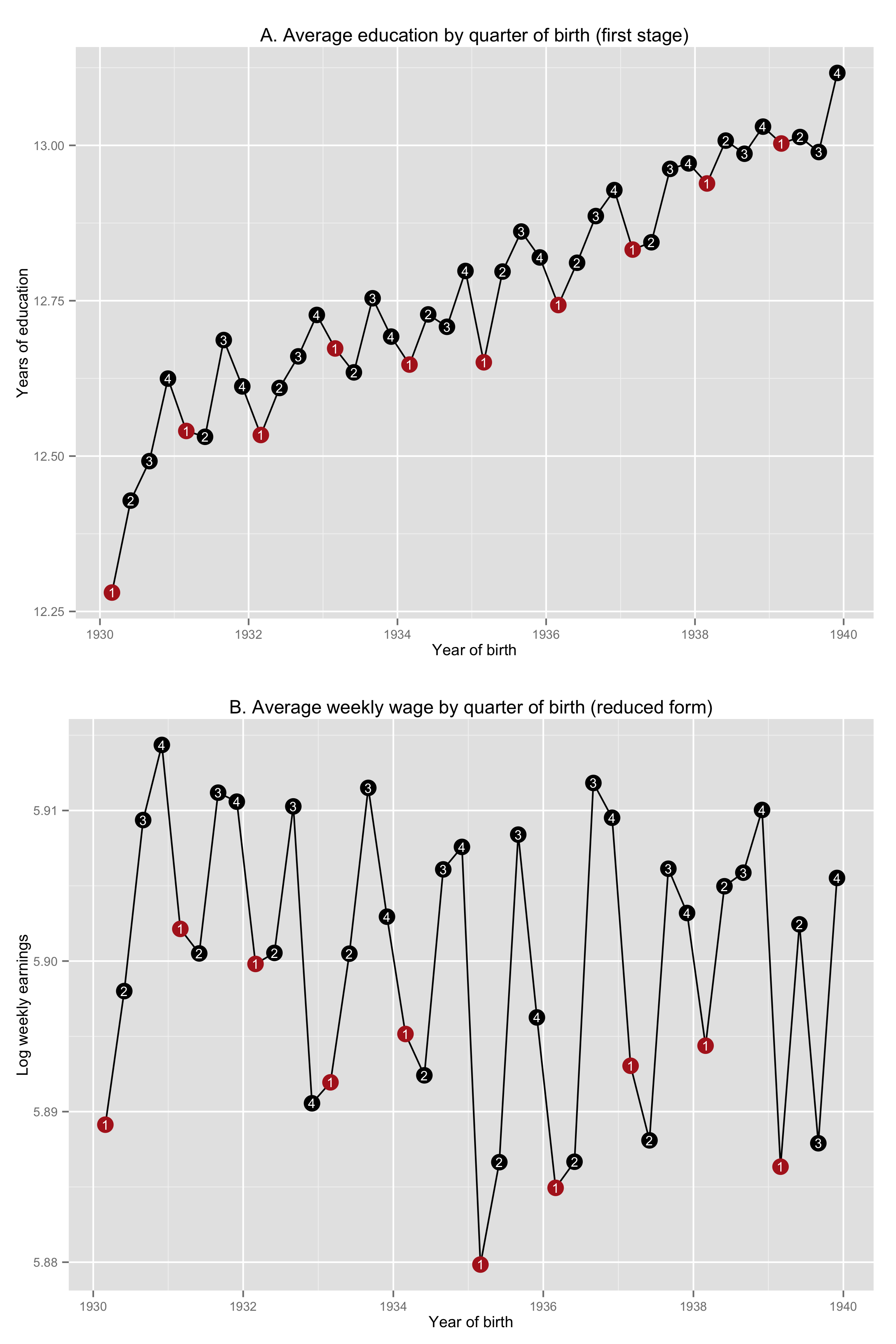

/04 Instrumental Variables in Action/Figure 4-1-1-R.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/vikjam/mostly-harmless-replication/HEAD/04 Instrumental Variables in Action/Figure 4-1-1-R.png

--------------------------------------------------------------------------------

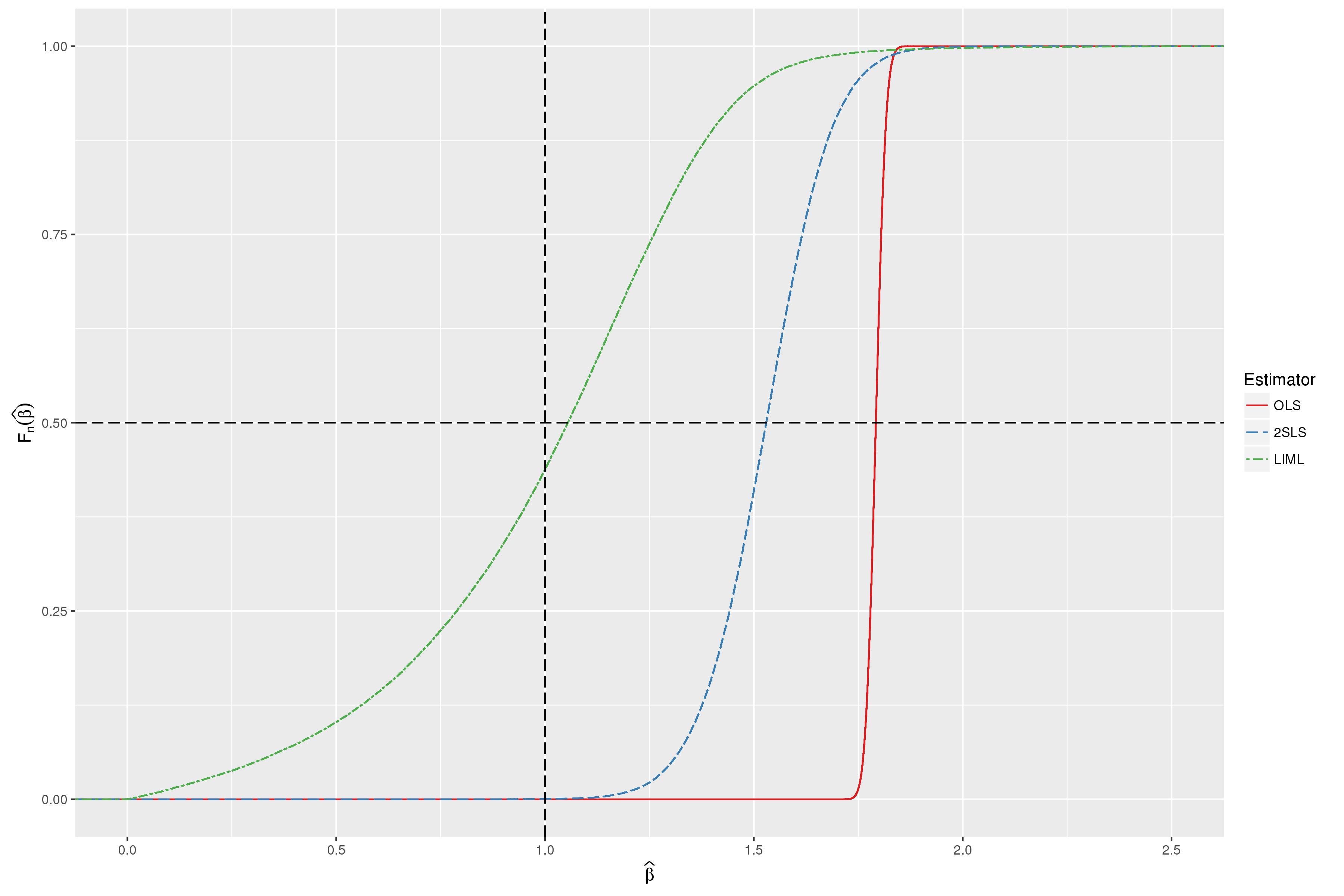

/04 Instrumental Variables in Action/Figure 4-6-1-R.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/vikjam/mostly-harmless-replication/HEAD/04 Instrumental Variables in Action/Figure 4-6-1-R.png

--------------------------------------------------------------------------------

/05 Fixed Effects, DD and Panel Data/Figure 5-2-4-Stata.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/vikjam/mostly-harmless-replication/HEAD/05 Fixed Effects, DD and Panel Data/Figure 5-2-4-Stata.png

--------------------------------------------------------------------------------

/.gitignore:

--------------------------------------------------------------------------------

1 | # System files

2 | *.DS_Store

3 |

4 | # Data and logs

5 | *.dat

6 | *.nj

7 | *.zip

8 | *.txt

9 |

10 | # Output

11 | *.pdf

12 |

13 | # R

14 | *.Rhistory

15 | *.Rdata

16 |

17 | # Stata

18 | *.dta

19 |

20 |

--------------------------------------------------------------------------------

/05 Fixed Effects, DD and Panel Data/Table 5-2-2.do:

--------------------------------------------------------------------------------

1 | clear all

2 | set more off

3 | eststo clear

4 | capture version 13

5 |

6 | /* Pull data from the 'Mostly Harmless' website */

7 | /* http://economics.mit.edu/faculty/angrist/data1/mhe/card */

8 | shell curl -o njmin.zip http://economics.mit.edu/files/3845

9 | shell unzip -j njmin.zip

10 |

11 | /* End of script*/

12 | exit

13 |

--------------------------------------------------------------------------------

/04 Instrumental Variables in Action/Table 4-4-1.do:

--------------------------------------------------------------------------------

1 | clear all

2 | set more off

3 |

4 | /* Stata code for Table 4.4.1*/

5 | * shell curl -o jtpa.raw http://economics.mit.edu/files/614

6 |

7 | /* Import data */

8 | infile ym zm dm sex xm6 xm7 xm8 xm9 xm10 ///

9 | xm17 xm18 xm12 xm13 xm14 xm15 xm16 xm19 using jtpa.raw, clear

10 |

11 | reg sex xm6

12 |

13 |

14 | /* End of file */

15 | exit

16 |

--------------------------------------------------------------------------------

/05 Fixed Effects, DD and Panel Data/05 Fixed Effects, DD and Panel Data.md:

--------------------------------------------------------------------------------

1 | # 05 Fixed Effects, DD and Panel Data

2 | ## 5.2 Differences-in-differences

3 |

4 | ### Figure 5-2-4

5 |

6 | Completed in [Stata](Figure%205-2-4.do), [R](Figure%205-2-4.r), [Python](Figure%205-2-4.py) and [Julia](Figure%205-2-4.jl).

7 |

8 |

9 |

--------------------------------------------------------------------------------

/03 Making Regression Make Sense/Table 3-3-3.do:

--------------------------------------------------------------------------------

1 | clear all

2 | set more off

3 | eststo clear

4 | capture version 13

5 | /* Required programs */

6 | /* - estout: output table */

7 |

8 | /* Stata code for Table 3.3.2*/

9 |

10 | /* Download data */

11 | shell curl -o nswre74.dta http://economics.mit.edu/files/3828

12 | shell curl -o cps1re74.dta http://economics.mit.edu/files/3824

13 | shell curl -o cps3re74.dta http://economics.mit.edu/files/3825

14 |

15 | /* End of script */

16 |

--------------------------------------------------------------------------------

/02 The Experimental Ideal/Table 2-2-1.do:

--------------------------------------------------------------------------------

1 | clear all

2 | set more off

3 | eststo clear

4 | capture version 13

5 |

6 | /* Pull data from the 'Mostly Harmless' website */

7 | /* http://economics.mit.edu/faculty/angrist/data1/mhe/krueger */

8 | shell curl -o webstar.dta http://economics.mit.edu/files/3827/

9 |

10 | /* Load downloaded data */

11 | use webstar.dta, clear

12 |

13 | /* Create variables in table */

14 | gen white_asian = (inlist(srace, 1, 3)) if !missing(srace)

15 | label var white_asian "White/Asian"

16 |

17 | /* Calculate percentiles of test scores */

18 | local testscores "treadssk tmathssk treadss1 tmathss1 treadss2 tmathss2 treadss3 tmathss3"

19 | foreach var of varlist `testscores' {

20 | xtile pct_`var' = `var', nq(100)

21 | }

22 | egen avg_pct = rowmean(pct_*)

23 | label var avg_pct "Percentile score in kindergarten"

24 |

25 | /* End of file */

26 | exit

27 |

--------------------------------------------------------------------------------

/04 Instrumental Variables in Action/Figure 4-1-1.jl:

--------------------------------------------------------------------------------

1 | # Load packages

2 | using DataFrames

3 | using Gadfly

4 | using GLM

5 |

6 | # Download the data and unzip it

7 | download("http://economics.mit.edu/files/397", "asciiqob.zip")

8 | run(`unzip -o asciiqob.zip`)

9 |

10 | # Import data

11 | pums = readtable("asciiqob.txt",

12 | header = false,

13 | separator = ' ')

14 | names!(pums, [:lwklywge, :educ, :yob, :qob, :pob])

15 |

16 | # Aggregate into means for figure

17 | means = aggregate(pums, [:yob, :qob], [mean])

18 |

19 | # Create dates

20 | means[:date] = [Date(1900 + y, m * 3, 1) for (y, m) in zip(means[:yob], means[:qob])]

21 |

22 | # Plot

23 | p = plot(means,

24 | layer(x = "date", y = "educ_mean", Geom.point, Geom.line))

25 | p = plot(means,

26 | layer(x = "date", y = "lwklywge_mean", Geom.point, Geom.line))

27 |

28 | # End of file

29 |

--------------------------------------------------------------------------------

/07 Quantile Regression/07 Quantile Regression.md:

--------------------------------------------------------------------------------

1 | # 07 Quantile Regression

2 | ## 7.1 The Quantile Regression Model

3 |

4 | ### Table 7.1.1

5 | Completed in [Stata](Table%207-1-1.do), [R](Table%207-1-1.r) and [Python](Table%207-1-1.py)

6 |

7 | | |Obs |Mean |Std Dev |0.1 |0.25 |0.5 |0.75 |0.9 |OLS |RMSE |

8 | |:----|:------|:----|:-------|:-----|:-----|:-----|:-----|:-----|:-----|:----|

9 | |1980 |65,023 |6.4 |0.671 |0.073 |0.073 |0.068 |0.07 |0.079 |0.072 |0.63 |

10 | | | | | |0.002 |0.001 |0.001 |0.001 |0.002 |0.001 | |

11 | |1990 |86,785 |6.46 |0.694 |0.112 |0.11 |0.106 |0.111 |0.137 |0.114 |0.64 |

12 | | | | | |0.003 |0.001 |0.001 |0.001 |0.002 |0.001 | |

13 | |2000 |97,397 |6.47 |0.746 |0.092 |0.105 |0.111 |0.119 |0.157 |0.114 |0.69 |

14 | | | | | |0.003 |0.001 |0.001 |0.001 |0.002 |0.001 | |

15 |

--------------------------------------------------------------------------------

/04 Instrumental Variables in Action/Table 4-1-2.do:

--------------------------------------------------------------------------------

1 | clear all

2 | set more off

3 | /* Stata code for Table 4-1-2 */

4 | /* Required additional packages */

5 | /* - estout: output results */

6 |

7 | * /* Download data */

8 | * shell curl -o asciiqob.zip http://economics.mit.edu/files/397

9 | * unzipfile asciiqob.zip, replace

10 |

11 | /* Import data */

12 | infile lwklywge educ yob qob pob using asciiqob.txt, clear

13 |

14 | /* Create binary instrument */

15 | recode qob (1/2 = 0 "Born in the 1st or 2nd quarter of year") ///

16 | (3/4 = 1 "Born in the 3rd or 4th quarter of year") ///

17 | (else = .), gen(z)

18 |

19 | /* Compare means (and differences) */

20 | ttest lwklywge, by(z)

21 | ttest educ, by(z)

22 |

23 | /* Compute Wald estimate */

24 | sureg (educ z) (lwklywge z) if !missing(z)

25 | nlcom [lwklywge]_b[z] / [educ]_b[z]

26 |

27 | /* OLS estimate */

28 | regress lwklywge educ if !missing(z)

29 |

30 | /* End of script */

31 |

--------------------------------------------------------------------------------

/03 Making Regression Make Sense/Figure 3-1-3.do:

--------------------------------------------------------------------------------

1 | clear all

2 | set more off

3 | eststo clear

4 | capture log close _all

5 | capture version 13

6 |

7 | /* Stata code for Table 3.3.2*/

8 | /* !! Can't find right data !! */

9 |

10 | /* Download data */

11 | * shell curl -o asciiqob.zip http://economics.mit.edu/files/397

12 | * unzipfile asciiqob.zip, replace

13 |

14 | /* Import data */

15 | infile lwklywge educ yob qob pob using asciiqob.txt, clear

16 |

17 | /* Panel A */

18 | /* Old-fashioned standard errors */

19 | regress lwklywge educ

20 | /* Robust standard errors */

21 | regress lwklywge educ, robust

22 |

23 | /* Collapse data for Panel B (counting only if in sample) */

24 | gen count = 1 if e(sample)

25 | collapse (sum) count (mean) lwklywge, by(educ)

26 |

27 | /* Old-fashioned standard errors */

28 | regress lwklywge educ [aweight = count]

29 | /* Robust standard errors */

30 | regress lwklywge educ [aweight = count], robust

31 |

32 | /* End of file */

33 | exit

34 |

--------------------------------------------------------------------------------

/06 Getting a Little Jumpy/06 Getting a Little Jumpy.md:

--------------------------------------------------------------------------------

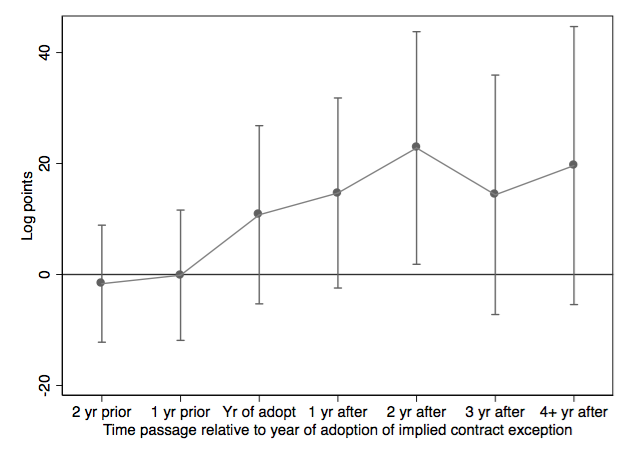

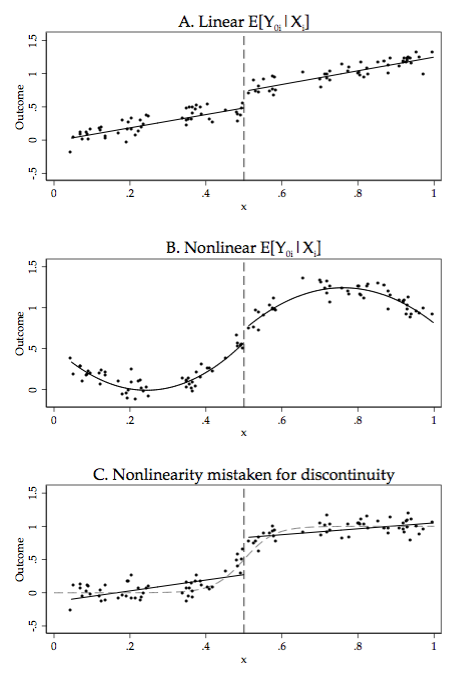

1 | # 06 Getting a Little Jumpy

2 | ## 6.1 Sharp RD

3 |

4 | ### Figure 6-1-1

5 |

6 | Completed in [Stata](Figure%206-1-1.do), [R](Figure%206-1-1.r), [Python](Figure%206-1-1.py) and [Julia](Figure%206-1-1.jl)

7 |

8 |

9 |

10 | ### Figure 6-1-2

11 |

12 | Completed in [Stata](Figure%206-1-2.do), [R](Figure%206-1-2.r), [Python](Figure%206-1-2.py) and [Julia](Figure%206-1-2.jl)

13 |

14 |

15 |

16 | ### Figure 6-2-1

17 |

18 | Completed in [Stata](Figure%206-2-1.do), [R](Figure%206-2-1.r), [Python](Figure%206-2-1.py) and [Julia](Figure%206-1-1.jl)

19 |

20 |

21 |

--------------------------------------------------------------------------------

/07 Quantile Regression/Table 7-1-1.jl:

--------------------------------------------------------------------------------

1 | # Julia code for Table 8-1-1 #

2 | # Required packages #

3 | # - DataRead: import Stata datasets #

4 | # - DataFrames: data manipulation / storage #

5 | # - QuantileRegression: quantile regression #

6 | # - GLM: OLS regression #

7 | using DataRead

8 | using DataFrames

9 | using QuantileRegression

10 | using GLM

11 |

12 | # Download the data and unzip it

13 | download("http://economics.mit.edu/files/384", "angcherfer06.zip")

14 | run(`unzip angcherfer06.zip`)

15 |

16 | # Load the data

17 | dta_path = string("Data/census", "80", ".csv")

18 | df = readtable(dta_path)

19 |

20 | # Summary statistics

21 | obs = size(df[:logwk], 1)

22 | μ = mean(df[:logwk])

23 | σ = std(df[:logwk])

24 |

25 | # Run OLS

26 | wls = glm(logwk ~ educ + black + exper + exper2, df,

27 | Normal(), IdentityLink(),

28 | wts = convert(Array, (df[:perwt])))

29 | wls_coef = coef(wls)[2]

30 | wls_se = stderr(wls)[2]

31 | wls_rmse = sqrt(sum((df[:logwk] - predict(wls)).^2) / df_residual(wls))

32 |

33 | # Print results

34 | print(obs, μ, σ, wls_coef, wls_se, wls_rmse)

35 |

36 | # End of script

37 |

--------------------------------------------------------------------------------

/03 Making Regression Make Sense/Figure 3-1-2.jl:

--------------------------------------------------------------------------------

1 | # Load packages

2 | using CSV

3 | using DataFrames

4 | using GLM

5 | using Statistics

6 | using Gadfly

7 | using Cairo

8 |

9 | # Download the data and unzip it

10 | download("http://economics.mit.edu/files/397", "asciiqob.zip")

11 | run(`unzip asciiqob.zip`)

12 |

13 | # Import data

14 | pums = DataFrame(CSV.File("asciiqob.txt", header = false, delim = " ", ignorerepeated = true))

15 | rename!(pums, [:lwklywge, :educ, :yob, :qob, :pob])

16 |

17 | # Run OLS and save predicted values

18 | OLS = lm(@formula(lwklywge ~ educ), pums)

19 | pums.predicted = predict(OLS)

20 |

21 | # Aggregate into means for figure

22 | means = combine(groupby(pums, :educ), [:lwklywge, :predicted] .=> mean)

23 |

24 | # Plot figure and export figure using Gadfly

25 | figure = plot(means,

26 | layer(x = "educ", y = "predicted_mean", Geom.line, Theme(default_color = colorant"green")),

27 | layer(x = "educ", y = "lwklywge_mean", Geom.line, Geom.point),

28 | Guide.xlabel("Years of completed education"),

29 | Guide.ylabel("Log weekly earnings, \$2003"))

30 |

31 | draw(PNG("Figure 3-1-2-Julia.png", 7inch, 6inch), figure)

32 |

33 | # End of script

34 |

--------------------------------------------------------------------------------

/05 Fixed Effects, DD and Panel Data/Table 5-2-1.do:

--------------------------------------------------------------------------------

1 | clear all

2 | set more off

3 | eststo clear

4 | capture version 13

5 |

6 | /* Stata code for Table 5.2.1*/

7 | shell curl -o njmin.zip http://economics.mit.edu/files/3845

8 | unzipfile njmin.zip, replace

9 |

10 | /* Import data */

11 | infile SHEET CHAIN CO_OWNED STATE SOUTHJ CENTRALJ NORTHJ PA1 PA2 ///

12 | SHORE NCALLS EMPFT EMPPT NMGRS WAGE_ST INCTIME FIRSTINC BONUS ///

13 | PCTAFF MEALS OPEN HRSOPEN PSODA PFRY PENTREE NREGS NREGS11 ///

14 | TYPE2 STATUS2 DATE2 NCALLS2 EMPFT2 EMPPT2 NMGRS2 WAGE_ST2 ///

15 | INCTIME2 FIRSTIN2 SPECIAL2 MEALS2 OPEN2R HRSOPEN2 PSODA2 PFRY2 ///

16 | PENTREE2 NREGS2 NREGS112 using "public.dat", clear

17 |

18 | /* Label the state variables and values */

19 | label var STATE "State"

20 | label define state_labels 0 "PA" 1 "NJ"

21 | label values STATE state_labels

22 |

23 | /* Calculate FTE employement */

24 | gen FTE = EMPFT + 0.5 * EMPPT + NMGRS

25 | label var FTE "FTE employment before"

26 | gen FTE2 = EMPFT2 + 0.5 * EMPPT2 + NMGRS2

27 | label var FTE2 "FTE employment after"

28 |

29 | /* Calculate means */

30 | tabstat FTE FTE2, by(STATE) stat(mean semean)

31 |

32 | /* End of script */

33 |

--------------------------------------------------------------------------------

/06 Getting a Little Jumpy/Table 6-2-1.do:

--------------------------------------------------------------------------------

1 | clear all

2 | set more off

3 | /* Stata code for Figure 5.2.4 */

4 |

5 | /* Download data */

6 | shell curl -o final4.dta http://economics.mit.edu/files/1359

7 | shell curl -o final5.dta http://economics.mit.edu/files/1358

8 |

9 | /* Import data */

10 | use "final5.dta", clear

11 |

12 | replace avgverb= avgverb-100 if avgverb>100

13 | replace avgmath= avgmath-100 if avgmath>100

14 |

15 | gen func1 = c_size / (floor((c_size - 1) / 40) + 1)

16 | gen func2 = cohsize / (floor(cohsize / 40) + 1)

17 |

18 | replace avgverb = . if verbsize == 0

19 | replace passverb = . if verbsize == 0

20 |

21 | replace avgmath = . if mathsize == 0

22 | replace passmath = . if mathsize == 0

23 |

24 | /* Sample restrictions */

25 | keep if 1 < classize & classize < 45 & c_size > 5

26 | keep if c_leom == 1 & c_pik < 3

27 |

28 | sum avgverb

29 | sum avgmath

30 |

31 | mmoulton avgverb classize, cluvar(schlcode)

32 | mmoulton avgverb classize tipuach, cluvar(schlcode)

33 | mmoulton avgverb classize tipuach c_size, clu(schlcode)

34 | mmoulton avgmath classize, cluvar(schlcode)

35 | mmoulton avgmath classize tipuach, cluvar(schlcode)

36 | mmoulton avgmath classize tipuach c_size, clu(schlcode)

37 |

38 | /* End of script */

39 | exit

40 |

--------------------------------------------------------------------------------

/03 Making Regression Make Sense/Figure 3-1-2.do:

--------------------------------------------------------------------------------

1 | clear all

2 | set more off

3 | eststo clear

4 | capture log close _all

5 | capture version 13

6 |

7 | /* Stata code for Table 3.1.2 */

8 | /* Required additional packages */

9 | log using "Table 3-1-2-Stata.txt", name(table030102) text replace

10 |

11 | /* Download data */

12 | shell curl -o asciiqob.zip http://economics.mit.edu/files/397

13 | unzipfile asciiqob.zip, replace

14 |

15 | /* Import data */

16 | infile lwklywge educ yob qob pob using asciiqob.txt, clear

17 |

18 | /* Get fitted line */

19 | regress lwklywge educ

20 | predict yhat, xb

21 |

22 | /* Calculate means by collapsing the data */

23 | collapse lwklywge yhat, by(educ)

24 |

25 | /* Graph the figures */

26 | graph twoway (connected lwklywge educ, lcolor(black) mcolor(black)) ///

27 | (line yhat educ, lcolor(black) lpattern("-")), ///

28 | ylabel(4.8(0.2)6.6) ymtick(4.9(0.2)6.5) ///

29 | xlabel(0(2)20) xmtick(1(2)19) ///

30 | ytitle("Log weekly earnings, $2003") ///

31 | xtitle("Years of completed education") ///

32 | legend(off) ///

33 | scheme(s1mono)

34 |

35 | graph export "Figure 3-1-2-Stata.pdf", replace

36 |

37 | log close table030102

38 | /* End of file */

39 |

--------------------------------------------------------------------------------

/03 Making Regression Make Sense/Figure 3-1-2.r:

--------------------------------------------------------------------------------

1 | # R code for Figure 3.1.2 #

2 | # Required packages #

3 | # - ggplot2: making pretty graphs #

4 | # - data.table: simple way to aggregate #

5 | library(ggplot2)

6 | library(data.table)

7 |

8 | # Download data and unzip the data

9 | download.file('http://economics.mit.edu/files/397', 'asciiqob.zip')

10 | unzip('asciiqob.zip')

11 |

12 | # Read the data into a dataframe

13 | pums <- read.table('asciiqob.txt',

14 | header = FALSE,

15 | stringsAsFactors = FALSE)

16 | names(pums) <- c('lwklywge', 'educ', 'yob', 'qob', 'pob')

17 |

18 | # Estimate OLS regression

19 | reg.model <- lm(lwklywge ~ educ, data = pums)

20 |

21 | # Calculate means by educ attainment and predicted values

22 | pums.data.table <- data.table(pums)

23 | educ.means <- pums.data.table[ , list(mean = mean(lwklywge)), by = educ]

24 | educ.means$yhat <- predict(reg.model, educ.means)

25 |

26 | # Create plot

27 | p <- ggplot(data = educ.means, aes(x = educ)) +

28 | geom_point(aes(y = mean)) +

29 | geom_line(aes(y = mean)) +

30 | geom_line(aes(y = yhat)) +

31 | ylab("Log weekly earnings, $2003") +

32 | xlab("Years of completed education")

33 |

34 | ggsave(filename = "Figure 3-1-2-R.pdf")

35 |

36 |

37 | # End of file

38 |

--------------------------------------------------------------------------------

/04 Instrumental Variables in Action/Table 4-1-2.py:

--------------------------------------------------------------------------------

1 | #!/usr/bin/env python

2 | """

3 | Create Table 4-1-2 in MHE

4 | Tested on Python 3.4

5 | """

6 |

7 | import zipfile

8 | import urllib.request

9 | import pandas as pd

10 | import scipy.stats

11 | import statsmodels.api as sm

12 |

13 | # Download data and unzip the data

14 | urllib.request.urlretrieve('http://economics.mit.edu/files/397', 'asciiqob.zip')

15 | with zipfile.ZipFile('asciiqob.zip', "r") as z:

16 | z.extractall()

17 |

18 | # Read the data into a pandas dataframe

19 | pums = pd.read_csv('asciiqob.txt',

20 | header = None,

21 | delim_whitespace = True)

22 | pums.columns = ['lwklywge', 'educ', 'yob', 'qob', 'pob']

23 |

24 | # Create binary variable

25 | pums['z'] = ((pums.educ == 3) | (pums.educ == 4)) * 1

26 |

27 | # Compare means (and differences)

28 | ttest_lwklywge = scipy.stats.ttest_ind(pums.lwklywge[pums.z == 1], pums.lwklywge[pums.z == 0])

29 | ttest_educ = scipy.stats.ttest_ind(pums.educ[pums.z == 1], pums.educ[pums.z == 0])

30 |

31 | # Compute Wald estimate (need to use arrays to use SUR in statsmodels)

32 | wald_estimate = (np.mean(pums.lwklywge[pums.z == 1]) - np.mean(pums.lwklywge[pums.z == 0])) / \

33 | (np.mean(pums.educ[pums.z == 1]) - np.mean(pums.educ[pums.z == 0]))

34 |

35 | # OLS estimate

36 | ols = sm.OLS(y, X).fit()

37 |

38 | # End of script

39 |

--------------------------------------------------------------------------------

/04 Instrumental Variables in Action/Table 4-1-1.do:

--------------------------------------------------------------------------------

1 | clear all

2 | set more off

3 | /* Stata code for Table 4-1-1 */

4 |

5 | /* Download data */

6 | shell curl -o asciiqob.zip http://economics.mit.edu/files/397

7 | unzipfile asciiqob.zip, replace

8 |

9 | /* Import data */

10 | infile lwklywge educ yob qob pob using asciiqob.txt, clear

11 |

12 | /* Column 1: OLS */

13 | regress lwklywge educ, robust

14 |

15 | /* Column 2: OLS with YOB, POB dummies */

16 | regress lwklywge educ i.yob i.pob, robust

17 |

18 | /* Column 3: 2SLS with instrument QOB = 1 */

19 | tabulate qob, gen(qob)

20 | ivregress 2sls lwklywge (educ = qob1), robust

21 |

22 | /* Column 4: 2SLS with YOB, POB dummies and instrument QOB = 1 */

23 | ivregress 2sls lwklywge i.yob i.pob (educ = qob1), robust

24 |

25 | /* Column 5: 2SLS with YOB, POB dummies and instrument (QOB = 1 | QOB = 2) */

26 | gen qob1or2 = (inlist(qob, 1, 2)) if !missing(qob)

27 | ivregress 2sls lwklywge i.yob i.pob (educ = qob1or2), robust

28 |

29 | /* Column 6: 2SLS with YOB, POB dummies and full QOB dummies */

30 | ivregress 2sls lwklywge i.yob i.pob (educ = i.qob), robust

31 |

32 | /* Column 7: 2SLS with YOB, POB dummies and full QOB dummies interacted with YOB */

33 | ivregress 2sls lwklywge i.yob i.pob (educ = i.qob#i.yob), robust

34 |

35 | /* Column 8: 2SLS with age, YOB, POB dummies and with full QOB dummies interacted with YOB */

36 | ivregress 2sls lwklywge i.yob i.pob (educ = i.qob#i.yob), robust

37 |

38 | /* End of script */

39 |

--------------------------------------------------------------------------------

/03 Making Regression Make Sense/Figure 3-1-2.py:

--------------------------------------------------------------------------------

1 | #!/usr/bin/env python

2 | """

3 | Tested on Python 3.4

4 | """

5 |

6 | import urllib

7 | import zipfile

8 | import urllib.request

9 | import pandas as pd

10 | import statsmodels.api as sm

11 | import matplotlib.pyplot as plt

12 |

13 | # Download data and unzip the data

14 | urllib.request.urlretrieve('http://economics.mit.edu/files/397', 'asciiqob.zip')

15 | with zipfile.ZipFile('asciiqob.zip', "r") as z:

16 | z.extractall()

17 |

18 | # Read the data into a pandas dataframe

19 | pums = pd.read_csv("asciiqob.txt", header=None, delim_whitespace=True)

20 | pums.columns = ["lwklywge", "educ", "yob", "qob", "pob"]

21 |

22 | # Set up the model

23 | y = pums.lwklywge

24 | X = pums.educ

25 | X = sm.add_constant(X)

26 |

27 | # Save coefficient on education

28 | model = sm.OLS(y, X)

29 | results = model.fit()

30 | educ_coef = results.params[1]

31 | intercept = results.params[0]

32 |

33 | # Calculate means by educ attainment and predicted values

34 | groupbyeduc = pums.groupby("educ")

35 | educ_means = groupbyeduc["lwklywge"].mean().reset_index()

36 | yhat = pd.Series(

37 | intercept + educ_coef * educ_means.index.values, index=educ_means.index.values

38 | )

39 |

40 | # Create plot

41 | plt.figure()

42 | educ_means.plot(kind="line", x="educ", y="lwklywge", style="-o")

43 | yhat.plot()

44 | plt.xlabel("Years of completed education")

45 | plt.ylabel("Log weekly earnings, \\$2003")

46 | plt.legend().set_visible(False)

47 | plt.savefig("Figure 3-1-2-Python.pdf")

48 |

49 | # End of script

50 |

--------------------------------------------------------------------------------

/08 Nonstandard Standard Error Issues/Table 8-1-1-alt.r:

--------------------------------------------------------------------------------

1 | # R code for Table 8-1-1 #

2 | # Required packages #

3 | # - sandwich: robust standard error #

4 | library(sandwich)

5 | library(data.table)

6 | library(knitr)

7 |

8 | # Set seed for replication

9 | set.seed(1984, "L'Ecuyer")

10 |

11 | # Set parameters

12 | NSIMS = 25000

13 | N = 30

14 | r = 0.9

15 | N1 = r * N

16 | sigma = 1

17 |

18 | # Generate random data

19 | dvec <- c(rep(0, N1), rep(1, N - N1))

20 | simulated.data <- data.table(sim = rep(1:NSIMS, each = N),

21 | y = NA,

22 | d = rep(dvec, NSIMS),

23 | epsilon = NA)

24 | simulated.data[ , epsilon := ifelse(d == 1,

25 | rnorm((N - N1) * 25),

26 | rnorm(N1 * NSIMS, sd = sigma))]

27 | simulated.data[ , y := 0 * d + epsilon]

28 |

29 | # Store a list of the standard error types

30 | se.types <- c("const", paste0("HC", 0:3))

31 |

32 | # Create a function to extract standard errors

33 | calculate.se <- function(lm.obj, type) {

34 | sqrt(vcovHC(lm.obj, type = type)[2, 2])

35 | }

36 |

37 | # Function to calculate results

38 | calculateBias <- function(formula) {

39 | lm.sim <- lm(formula)

40 | b1 <- coef(lm.sim)[2]

41 | se.sim <- sapply(se.types, calculate.se, lm.obj = lm.sim)

42 | c(b1, se.sim)

43 | }

44 | simulated.results <- simulated.data[ , as.list(calculateBias(y ~ d)), by = sim]

45 |

46 | # End of script

47 |

--------------------------------------------------------------------------------

/07 Quantile Regression/Table 7-1-1.do:

--------------------------------------------------------------------------------

1 | clear all

2 | set more off

3 |

4 | /* Stata code for Table 7.1.1 */

5 |

6 | /* Download data */

7 | shell curl -o angcherfer06.zip http://economics.mit.edu/files/384

8 | unzipfile angcherfer06.zip, replace

9 |

10 | /* Create matrix to store all the results */

11 | matrix R = J(6, 10, .)

12 | matrix rownames R = 80 80se 90 90se 00 00se

13 | matrix colnames R = Obs Mean SD 10 25 50 75 90 Coef MSE

14 |

15 | /* Loop through the years to get the results */

16 | foreach year in "80" "90" "00" {

17 | /* Load data */

18 | use "Data/census`year'.dta", clear

19 |

20 | /* Summary statistics */

21 | summ logwk

22 | matrix R[rownumb(R, "`year'"), colnumb(R, "Obs")] = r(N)

23 | matrix R[rownumb(R, "`year'"), colnumb(R, "Mean")] = r(mean)

24 | matrix R[rownumb(R, "`year'"), colnumb(R, "SD")] = r(sd)

25 |

26 | /* Define education variables */

27 | gen highschool = 1 if (educ == 12)

28 | gen college = 1 if (educ == 16)

29 |

30 | /* Run quantile regressions */

31 | foreach tau of numlist 10 25 50 75 90 {

32 | qreg logwk educ black exper exper2 [pweight = perwt], q(`tau')

33 | matrix R[rownumb(R, "`year'"), colnumb(R, "`tau'")] = _b[edu]

34 | matrix R[rownumb(R, "`year'se"), colnumb(R, "`tau'")] = _se[edu]

35 | }

36 |

37 | /* Run OLS */

38 | regress logwk educ black exper exper2 [pweight = perwt]

39 | matrix R[rownumb(R, "`year'"), colnumb(R, "Coef")] = _b[edu]

40 | matrix R[rownumb(R, "`year'se"), colnumb(R, "Coef")] = _se[edu]

41 | matrix R[rownumb(R, "`year'"), colnumb(R, "MSE")] = e(rmse)

42 | }

43 |

44 | /* List results */

45 | matlist R

46 |

47 | /* End of file */

48 |

--------------------------------------------------------------------------------

/04 Instrumental Variables in Action/Figure 4-6-1.py:

--------------------------------------------------------------------------------

1 | #!/usr/bin/env python

2 | """

3 | Create Figure 4-6-1 in MHE

4 | Tested on Python 3.4

5 | """

6 |

7 | import pandas as pd

8 | import numpy as np

9 | import statsmodels.api as sm

10 | from statsmodels.sandbox.regression import gmm

11 | import matplotlib.pyplot as plt

12 | import random

13 | import math

14 | from scipy.linalg import eigh

15 |

16 | # Number of simulations

17 | nsims = 10

18 |

19 | # Set seed

20 | random.seed(461)

21 |

22 | # Set parameters

23 | Sigma = [[1.0, 0.8],

24 | [0.8, 1.0]]

25 | mu = [0, 0]

26 | errors = np.random.multivariate_normal(mu, Sigma, 1000)

27 | eta = errors[:, 0]

28 | xi = errors[:, 1]

29 |

30 | # Create Z, x, y

31 | Z = np.random.multivariate_normal([0] * 20, np.identity(20), 1000)

32 | x = 0.1 * Z[: , 0] + xi

33 | y = x + eta

34 | x = sm.add_constant(x)

35 | Z = sm.add_constant(x)

36 |

37 | ols = sm.OLS(y, x).fit().params[1]

38 | # tsls = np.linalg.inv(np.transpose(Z).dot(x)).dot(np.transpose(Z).dot(y))[1]

39 | tsls = gmm.IV2SLS(y, x, Z).fit().params[1]

40 |

41 | def LIML(exogenous, endogenous, instruments):

42 | y = exogenous

43 | x = endogenous

44 | Z = instruments

45 | I = np.eye(y.shape[0])

46 | Mz = I - Z.dot(np.linalg.inv(np.transpose(Z).dot(Z))).dot(np.transpose(Z))

47 | Mx = I - x.dot(np.linalg.inv(np.transpose(x).dot(x))).dot(np.transpose(x))

48 | A = np.transpose(np.hstack((y, x[:,1]))).dot(Mz).dot(np.hstack((y, x[:,1])))

49 | k = 1

50 | beta = np.linalg.inv(np.transpose(Z).dot(I - k * M).dot(Z)).dot(np.transpose(Z).dot(I - k * M)).dot(y)

51 | return

52 |

53 | # End of script

54 |

--------------------------------------------------------------------------------

/06 Getting a Little Jumpy/Figure 6-2-1.py:

--------------------------------------------------------------------------------

1 | #!/usr/bin/env python

2 | """

3 | Create Figure 6.2.1 in MHE

4 | Tested on Python 3.4

5 | numpy: math and stat functions, array

6 | matplotlib: plot figures

7 | """

8 | import numpy as np

9 | import matplotlib.pyplot as plt

10 | import pandas as pd

11 |

12 | # Download data

13 | urllib.request.urlretrieve('http://economics.mit.edu/files/1359', 'final4.dta')

14 | urllib.request.urlretrieve('http://economics.mit.edu/files/1358', 'final5.dta')

15 |

16 | # Read the data into a pandas dataframe

17 | grade4 = pd.read_csv('final4.csv', encoding = 'iso8859_8')

18 | grade5 = pd.read_csv('final5.csv', encoding = 'iso8859_8')

19 |

20 | # Find means class size by grade size

21 | grade4means = grade4.groupby('c_size')['classize'].mean()

22 | grade5means = grade5.groupby('c_size')['classize'].mean()

23 |

24 | # Create grid and function for Maimonides Rule

25 | def maimonides_rule(x):

26 | return x / (np.floor((x - 1)/40) + 1)

27 |

28 | x = np.arange(0, 220, 1)

29 |

30 | # Plot figures

31 | fig = plt.figure()

32 |

33 | ax1 = fig.add_subplot(211)

34 | ax1.plot(grade4means)

35 | ax1.plot(x, maimonides_rule(x), '--')

36 | ax1.set_xticks(range(0, 221, 20))

37 | ax1.set_xlabel("Enrollment count")

38 | ax1.set_ylabel("Class size")

39 | ax1.set_title('B. Fourth grade')

40 |

41 | ax2 = fig.add_subplot(212)

42 | ax2.plot(grade5means)

43 | ax2.plot(x, maimonides_rule(x), '--')

44 | ax2.set_xticks(range(0, 221, 20))

45 | ax2.set_xlabel("Enrollment count")

46 | ax2.set_ylabel("Class size")

47 | ax2.set_title('A. Fifth grade')

48 |

49 | plt.tight_layout()

50 | plt.savefig('Figure 6-2-1-Python.png', dpi = 300)

51 |

52 | # End of script

53 |

--------------------------------------------------------------------------------

/06 Getting a Little Jumpy/Figure 6-2-1.jl:

--------------------------------------------------------------------------------

1 | # Load packages

2 | using DataFrames

3 | using Gadfly

4 |

5 | # Download the data

6 | download("http://economics.mit.edu/files/1359", "final4.dta")

7 | download("http://economics.mit.edu/files/1358", "final5.dta")

8 |

9 | # Load the data

10 | grade4 = readtable("final4.csv");

11 | grade5 = readtable("final5.csv");

12 |

13 | # Find means class size by grade size

14 | grade4 = grade4[[:c_size, :classize]];

15 | grade4means = aggregate(grade4, :c_size, [mean])

16 |

17 | grade5 = grade5[[:c_size, :classize]];

18 | grade5means = aggregate(grade5, :c_size, [mean])

19 |

20 | # Create function for Maimonides Rule

21 | function maimonides_rule(x)

22 | x / (floor((x - 1)/40) + 1)

23 | end

24 |

25 | ticks = collect(0:20:220)

26 | p_grade4 = plot(layer(x = grade4means[:c_size], y = grade4means[:classize_mean], Geom.line),

27 | layer(maimonides_rule, 1, 220, Theme(line_style = Gadfly.get_stroke_vector(:dot))),

28 | Guide.xticks(ticks = ticks),

29 | Guide.xlabel("Enrollment count"),

30 | Guide.ylabel("Class size"),

31 | Guide.title("B. Fourth grade"))

32 |

33 | p_grade5 = plot(layer(x = grade5means[:c_size], y = grade5means[:classize_mean], Geom.line),

34 | layer(maimonides_rule, 1, 220, Theme(line_style = Gadfly.get_stroke_vector(:dot))),

35 | Guide.xticks(ticks = ticks),

36 | Guide.xlabel("Enrollment count"),

37 | Guide.ylabel("Class size"),

38 | Guide.title("A. Fifth grade"))

39 |

40 | draw(PNG("Figure 6-2-1-Julia.png", 6inch, 8inch), vstack(p_grade5, p_grade4))

41 |

42 | # End of script

43 |

--------------------------------------------------------------------------------

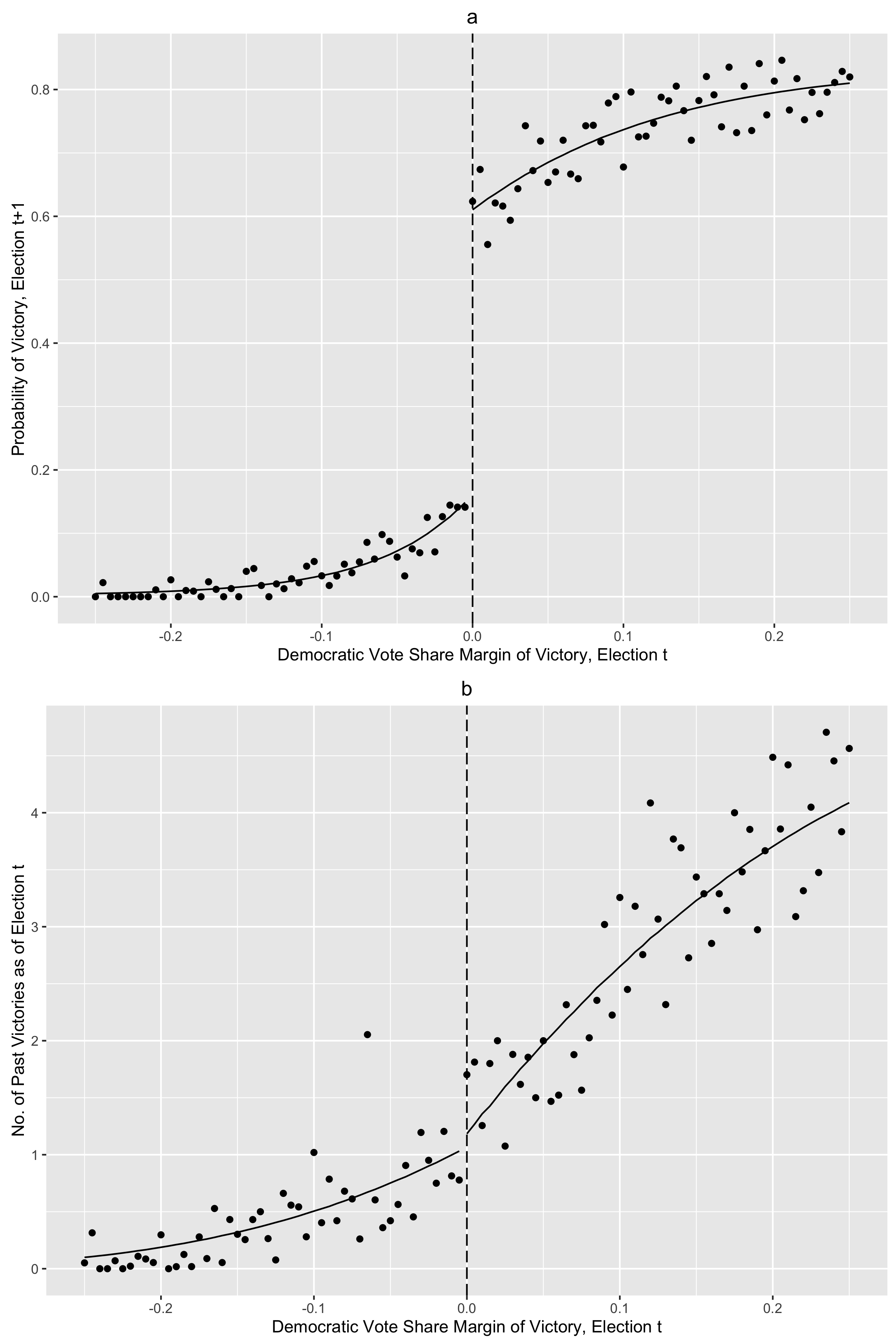

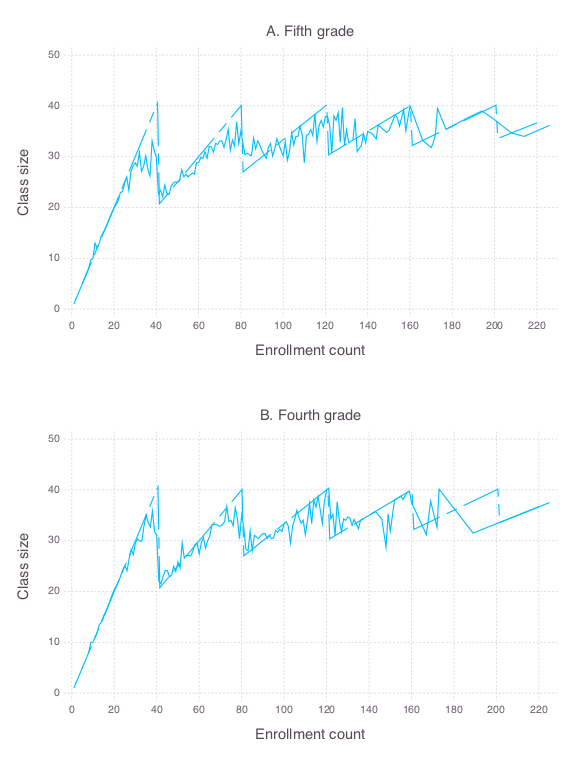

/04 Instrumental Variables in Action/04 Instrumental Variables in Action.md:

--------------------------------------------------------------------------------

1 | # 04 Instrumental Variables in Action

2 | ## 4.1 IV and causality

3 |

4 | ### Figure 4-1-1

5 |

6 | Completed in [Stata](Figure%204-1-1.do), [R](Figure%204-1-1.r) and [Python](Figure%204-1-1.py)

7 |

8 |

9 |

10 | ### Table 4-1-2

11 |

12 | Completed in [Stata](Table%204-1-2.do) and [R](Table%204-1-2.r)

13 |

14 | | | Born in the 1st or 2nd quarter of year| Born in the 3rd or 4th quarter of year| Difference|

15 | |:------------------|--------------------------------------:|--------------------------------------:|----------:|

16 | |ln(weekly wage) | 5.893844| 5.905829| 0.0119847|

17 | |Years of education | 12.716122| 12.821813| 0.1056907|

18 | |Wald estimate | NA| NA| 0.1133937|

19 | |Wald std error | NA| NA| 0.0215257|

20 | |OLS estimate | NA| NA| 0.0708510|

21 | |OLS std error | NA| NA| 0.0003386|

22 |

23 | ### Figure 4-6-1

24 |

25 | Completed in [Stata](Figure%204-6-1.do) and [R](Figure%204-6-1.r)

26 |

27 |

28 |

--------------------------------------------------------------------------------

/03 Making Regression Make Sense/Figure 3-1-3.py:

--------------------------------------------------------------------------------

1 | #!/usr/bin/env python

2 | """

3 | Tested on Python 3.11.5

4 | """

5 |

6 | import urllib

7 | import zipfile

8 | import urllib.request

9 | import pandas as pd

10 | import statsmodels.api as sm

11 | import statsmodels.formula.api as smf

12 |

13 | # Read the data into a pandas.DataFrame

14 | angrist_archive_url = (

15 | 'https://economics.mit.edu/sites/'

16 | 'default/files/publications/asciiqob.zip'

17 | )

18 | pums = pd.read_csv(

19 | angrist_archive_url,

20 | compression = 'zip',

21 | header = None,

22 | sep = '\s+'

23 | )

24 | pums.columns = ['lwklywge', 'educ', 'yob', 'qob', 'pob']

25 |

26 | # Panel A

27 | # Set up the model and fit it

28 | mod_a = smf.ols(

29 | formula = 'lwklywge ~ educ',

30 | data = pums

31 | )

32 | res_a = mod_a.fit()

33 | # Old-fashioned standard errors

34 | print(res_a.summary(title='Old-fashioned standard errors'))

35 | # Robust standard errors

36 | res_a_robust = res_a.get_robustcov_results(cov_type='HC1')

37 | print(

38 | res_a_robust.summary(title='Robust standard errors')

39 | )

40 | # Panel B

41 | # Calculate means and count by educ attainment

42 | pums_agg = pums.groupby('educ').agg(

43 | lwklywge = ('lwklywge', 'mean'),

44 | count = ('lwklywge', 'count')

45 | ).reset_index()

46 | # Set up the model and fit it

47 | mod_b = smf.wls(

48 | formula = 'lwklywge ~ educ',

49 | weights = pums_agg['count'],

50 | data = pums_agg

51 | )

52 | res_b = mod_b.fit()

53 | # Old-fashioned standard errors

54 | print(res_b.summary(title='Old-fashioned standard errors'))

55 | # Robust standard errors

56 | res_b_robust = res_b.get_robustcov_results(cov_type='HC1')

57 | print(

58 | res_b_robust.summary(title='Robust standard errors')

59 | )

60 |

61 | # End of script

62 |

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 | # Mostly Harmless Replication

2 |  3 |

4 | ## Synopsis

5 |

6 | A bold attempt to replicate the tables and figures from the book [_Mostly Harmless Econometrics_](http://www.mostlyharmlesseconometrics.com/) in the following languages:

7 | * Stata

8 | * R

9 | * Python

10 | * Julia

11 |

12 | Why undertake this madness? My primary motivation was to see if I could replace Stata with either R, Python, or Julia in my workflow, so I tried to replicate _Mostly Harmless Econometrics_ in each of these languages.

13 |

14 | ## Chapters

15 | 1. Questions about _Questions_

16 | 2. The Experimental Ideal

17 | 3. [Making Regression Make Sense](03%20Making%20Regression%20Make%20Sense/03%20Making%20Regression%20Make%20Sense.md)

18 | 4. [Instrumental Variables in Action](04%20Instrumental%20Variables%20in%20Action/04%20Instrumental%20Variables%20in%20Action.md)

19 | 5. [Parallel Worlds](05%20Fixed%20Effects%2C%20DD%20and%20Panel%20Data/05%20Fixed%20Effects%2C%20DD%20and%20Panel%20Data.md)

20 | 6. [Getting a Little Jumpy](06%20Getting%20a%20Little%20Jumpy/06%20Getting%20a%20Little%20Jumpy.md)

21 | 7. [Quantile Regression](07%20Quantile%20Regression/07%20Quantile%20Regression.md)

22 | 8. [Nonstandard Standard Error Issues](08%20Nonstandard%20Standard%20Error%20Issues/08%20Nonstanard%20Standard%20Error%20Issues.md)

23 |

24 | ## Getting started

25 | Check out [Getting Started](https://github.com/vikjam/mostly-harmless-replication/wiki/Getting-started) in the Wiki for tips on setting up your machine with each of these languages.

26 |

27 | ## Contributions

28 | Feel free to submit [pull requests](https://github.com/blog/1943-how-to-write-the-perfect-pull-request)!

29 |

30 |

--------------------------------------------------------------------------------

/03 Making Regression Make Sense/Figure 3-1-3.r:

--------------------------------------------------------------------------------

1 | # R code for Figure 3.1.3 #

2 | # Required packages #

3 | # - sandwhich: robust standard errors #

4 | # - lmtest: print table with robust standard errors #

5 | # - data.table: aggregate function #

6 | library(sandwich)

7 | library(lmtest)

8 |

9 | # Download data and unzip the data

10 | download.file('http://economics.mit.edu/files/397', 'asciiqob.zip')

11 | unzip('asciiqob.zip')

12 |

13 | # Read the data into a dataframe

14 | pums <- read.table('asciiqob.txt',

15 | header = FALSE,

16 | stringsAsFactors = FALSE)

17 | names(pums) <- c('lwklywge', 'educ', 'yob', 'qob', 'pob')

18 |

19 | # Panel A

20 | # Estimate OLS regression

21 | reg.model <- lm(lwklywge ~ educ, data = pums)

22 | # Robust standard errors

23 | robust.reg.vcov <- vcovHC(reg.model, "HC1")

24 | # Print results

25 | print(summary(reg.model))

26 | print(coeftest(reg.model, vcov = robust.reg.vcov))

27 |

28 | # Panel B

29 | # Figure out which observations appear in the regression

30 | sample <- !is.na(predict(reg.model, data = pums))

31 | pums.data.table <- data.table(pums[sample, ])

32 | # Aggregate

33 | educ.means <- pums.data.table[ , list(mean = mean(lwklywge),

34 | count = length(lwklywge)),

35 | by = educ]

36 | # Estimate weighted OLS regression

37 | wgt.reg.model <- lm(lwklywge ~ educ,

38 | weights = pums.data.table$count,

39 | data = pums.data.table)

40 | # Robust standard errors with weighted OLS regression

41 | wgt.robust.reg.vcov <- vcovHC(wgt.reg.model, "HC1")

42 | # Print results

43 | print(summary(wgt.reg.model))

44 | print(coeftest(wgt.reg.model, vcov = wgt.reg.vcov))

45 |

46 | # End of file

47 |

--------------------------------------------------------------------------------

/03 Making Regression Make Sense/Table 3-3-2.do:

--------------------------------------------------------------------------------

1 | clear all

2 | set more off

3 | eststo clear

4 | /* Required programs */

5 | /* - estout: output table */

6 |

7 | /* Stata code for Table 3.3.2 */

8 |

9 | * Store URL to MHE Data Archive in local

10 | local base_url = "https://economics.mit.edu/sites/default/files/inline-files"

11 |

12 | /* Store variable list in local */

13 | local summary_var "age ed black hisp nodeg married re74 re75"

14 | local pscore_var "age age2 ed black hisp married nodeg re74 re75"

15 |

16 | /* Columns 1 and 2 */

17 | use "`base_url'/nswre74.dta", clear

18 | eststo column_1, title("NSW Treat"): estpost summarize `summary_var' if treat == 1

19 | eststo column_2, title("NSW Control"): estpost summarize `summary_var' if treat == 0

20 |

21 | /* Column 3 */

22 | use "`base_url'/cps1re74.dta", clear

23 | eststo column_3, title("Full CPS-1"): estpost summarize `summary_var' if treat == 0

24 |

25 | /* Column 5 */

26 | probit treat `pscore_var'

27 | predict p_score, pr

28 | keep if p_score > 0.1 & p_score < 0.9

29 |

30 | eststo column_5, title("P-score CPS-1"): estpost summarize `summary_var' if treat == 0

31 |

32 | /* Column 4 */

33 | use "`base_url'/cps3re74.dta", clear

34 | eststo column_4, title("Full CPS-3"): estpost summarize `summary_var' if treat == 0

35 |

36 | /* Column 6 */

37 | probit treat `pscore_var'

38 | predict p_score, pr

39 | keep if p_score > 0.1 & p_score < 0.9

40 |

41 | eststo column_6, title("P-score CPS-3"): estpost summarize `summary_var' if treat == 0

42 |

43 | /* Label variables */

44 | label var age "Age"

45 | label var ed "Years of Schooling"

46 | label var black "Black"

47 | label var hisp "Hispanic"

48 | label var nodeg "Dropout"

49 | label var married "Married"

50 | label var re74 "1974 earnings"

51 | label var re75 "1975 earnings"

52 |

53 | /* Output Table */

54 | esttab column_1 column_2 column_3 column_4 column_5 column_6, ///

55 | label mtitle ///

56 | cells(mean(label(Mean) fmt(2 2 2 2 2 2 0 0)))

57 |

58 | /* End of script */

59 | exit

60 |

--------------------------------------------------------------------------------

/04 Instrumental Variables in Action/Figure 4-6-1.jl:

--------------------------------------------------------------------------------

1 | # Julia code for Table 4-6-1 #

2 | # Required packages #

3 | # - DataFrames: data manipulation / storage #

4 | # - Distributions: extended stats functions #

5 | # - FixedEffectModels: IV regression #

6 | using DataFrames

7 | using Distributions

8 | using FixedEffectModels

9 | using GLM

10 | using Gadfly

11 |

12 | # Number of simulations

13 | nsims = 1000

14 |

15 | # Set seed

16 | srand(113643)

17 |

18 | # Set parameters

19 | Sigma = [1.0 0.8;

20 | 0.8 1.0]

21 | N = 1000

22 |

23 | function irrelevantInstrMC()

24 | # Create Z, xi and eta

25 | Z = DataFrame(transpose(rand(MvNormal(eye(20)), N)))

26 | errors = DataFrame(transpose(rand(MvNormal(Sigma), N)))

27 |

28 | # Rename columns of Z and errors

29 | names!(Z, [Symbol("z$i") for i in 1:20])

30 | names!(errors, [:eta, :xi])

31 |

32 | # Create y and x

33 | df = hcat(Z, errors);

34 | df[:x] = 0.1 .* df[:z1] .+ df[:xi]

35 | df[:y] = df[:x] .+ df[:eta]

36 |

37 | # Run regressions

38 | ols = coef(lm(@formula(y ~ x), df))[2]

39 | tsls = coef(reg(df, @model(y ~ z1 + z2 + z3 + z4 + z5 + z6 + z7 + z8 + z9 + z10 +

40 | z11 + z12 + z13 + z14 + z15 + z16 + z17 + z18 + z19 + z20)))[2]

41 | return([ols tsls])

42 | end

43 |

44 | # Simulate IV regressions

45 | simulation_results = zeros(nsims, 2);

46 | for i = 1:nsims

47 | simulation_results[i, :] = irrelevantInstrMC()

48 | end

49 |

50 | # Create empirical CDFs from simulated results

51 | ols_ecdf = ecdf(simulation_results[:, 1])

52 | tsls_ecdf = ecdf(simulation_results[:, 2])

53 |

54 | # Plot the empirical CDFs of each estimator

55 | p = plot(layer(ols_ecdf, 0, 2.5, Theme(default_color = colorant"red")),

56 | layer(tsls_ecdf, 0, 2.5, Theme(line_style = :dot)),

57 | layer(xintercept = [0.5], Geom.vline,

58 | Theme(default_color = colorant"black", line_style = :dot)),

59 | layer(yintercept = [0.5], Geom.hline,

60 | Theme(default_color = colorant"black", line_style = :dot)),

61 | Guide.xlabel("Estimated β"),

62 | Guide.ylabel("Fn(Estimated β)"))

63 |

64 | # Export figure as .png

65 | draw(PNG("Figure 4-6-1-Julia.png", 7inch, 6inch), p)

66 |

67 | # End of script

68 |

--------------------------------------------------------------------------------

/06 Getting a Little Jumpy/Figure 6-1-1.py:

--------------------------------------------------------------------------------

1 | #!/usr/bin/env python

2 | """

3 | Create Figure 4-6-1 in MHE

4 | Tested on Python 3.4

5 | numpy: math and stat functions, array

6 | matplotlib: plot figures

7 | """

8 |

9 | import numpy as np

10 | import matplotlib.pyplot as plt

11 |

12 | # Set seed

13 | np.random.seed(10633)

14 |

15 | # Set number of simulations

16 | nobs = 100

17 |

18 | # Generate series

19 | x = np.random.uniform(0, 1, nobs)

20 | x = np.sort(x)

21 | y_linear = x + (x > 0.5) * 0.25 + np.random.normal(0, 0.1, nobs)

22 | y_nonlin = 0.5 * np.sin(6 * (x - 0.5)) + 0.5 + (x > 0.5) * 0.25 + np.random.normal(0, 0.1, nobs)

23 | y_mistake = 1 / (1 + np.exp(-25 * (x - 0.5))) + np.random.normal(0, 0.1, nobs)

24 |

25 | # Fit lines using user-created function

26 | def rdfit(x, y, cutoff, degree):

27 | coef_0 = np.polyfit(x[cutoff >= x], y[cutoff >= x], degree)

28 | fit_0 = np.polyval(coef_0, x[cutoff >= x])

29 |

30 | coef_1 = np.polyfit(x[x > cutoff], y[x > cutoff], degree)

31 | fit_1 = np.polyval(coef_1, x[x > cutoff])

32 |

33 | return coef_0, fit_0, coef_1, fit_1

34 |

35 | coef_y_linear_0 , fit_y_linear_0 , coef_y_linear_1 , fit_y_linear_1 = rdfit(x, y_linear, 0.5, 1)

36 | coef_y_nonlin_0 , fit_y_nonlin_0 , coef_y_nonlin_1 , fit_y_nonlin_1 = rdfit(x, y_nonlin, 0.5, 2)

37 | coef_y_mistake_0, fit_y_mistake_0, coef_y_mistake_1, fit_y_mistake_1 = rdfit(x, y_mistake, 0.5, 1)

38 |

39 | # Plot figures

40 | fig = plt.figure()

41 |

42 | ax1 = fig.add_subplot(311)

43 | ax1.scatter(x, y_linear, edgecolors = 'none')

44 | ax1.plot(x[0.5 >= x], fit_y_linear_0)

45 | ax1.plot(x[x > 0.5], fit_y_linear_1)

46 | ax1.axvline(0.5)

47 | ax1.set_title(r'A. Linear $E[Y_{0i} | X_i]$')

48 |

49 | ax2 = fig.add_subplot(312)

50 | ax2.scatter(x, y_nonlin, edgecolors = 'none')

51 | ax2.plot(x[0.5 >= x], fit_y_nonlin_0)

52 | ax2.plot(x[x > 0.5], fit_y_nonlin_1)

53 | ax2.axvline(0.5)

54 | ax2.set_title(r'B. Nonlinear $E[Y_{0i} | X_i]$')

55 |

56 | ax3 = fig.add_subplot(313)

57 | ax3.scatter(x, y_mistake, edgecolors = 'none')

58 | ax3.plot(x[0.5 >= x], fit_y_mistake_0)

59 | ax3.plot(x[x > 0.5], fit_y_mistake_1)

60 | ax3.plot(x, 1 / (1 + np.exp(-25 * (x - 0.5))), '--')

61 | ax3.axvline(0.5)

62 | ax3.set_title('C. Nonlinearity mistaken for discontinuity')

63 |

64 | plt.tight_layout()

65 | plt.savefig('Figure 6-1-1-Python.png', dpi = 300)

66 |

67 | # End of script

68 |

--------------------------------------------------------------------------------

/04 Instrumental Variables in Action/Table 4-1-1.r:

--------------------------------------------------------------------------------

1 | # R code for Table 4-1-1 #

2 | # Required packages #

3 | # - data.table: data management #

4 | # - sandwich: standard errors #

5 | # - AER: running IV regressions #

6 |

7 | library(data.table)

8 | library(sandwich)

9 | library(AER)

10 |

11 | # Download data and unzip the data

12 | download.file('http://economics.mit.edu/files/397', 'asciiqob.zip')

13 | unzip('asciiqob.zip')

14 |

15 | # Read the data into a data.table

16 | pums <- fread('asciiqob.txt',

17 | header = FALSE,

18 | stringsAsFactors = FALSE)

19 | names(pums) <- c('lwklywge', 'educ', 'yob', 'qob', 'pob')

20 |

21 | # Column 1: OLS

22 | col1 <- lm(lwklywge ~ educ, pums)

23 |

24 | # Column 2: OLS with YOB, POB dummies

25 | col2 <- lm(lwklywge ~ educ + factor(yob) + factor(pob), pums)

26 |

27 | # Create dummies for quarter of birth

28 | qobs <- unique(pums$qob)

29 | qobs.vars <- sapply(qobs, function(x) paste0('qob', x))

30 | pums[, (qobs.vars) := lapply(qobs, function(x) qob == x)]

31 |

32 | # Column 3: 2SLS with instrument QOB = 1

33 | col3 <- ivreg(lwklywge ~ educ, ~ qob1, pums)

34 |

35 | # Column 4: 2SLS with YOB, POB dummies and instrument QOB = 1

36 | col4 <- ivreg(lwklywge ~ factor(yob) + factor(pob) + educ,

37 | ~ factor(yob) + factor(pob) + qob1,

38 | pums)

39 |

40 | # Create dummy for quarter 1 or 2

41 | pums[, qob1or2 := qob == 1 | qob == 2]

42 |

43 | # Column 5: 2SLS with YOB, POB dummies and instrument (QOB = 1 | QOB = 2)

44 | col5 <- ivreg(lwklywge ~ factor(yob) + factor(pob) + educ,

45 | ~ factor(yob) + factor(pob) + qob1or2,

46 | pums)

47 |

48 | # Column 6: 2SLS with YOB, POB dummies and full QOB dummies

49 | col6 <- ivreg(lwklywge ~ factor(yob) + factor(pob) + educ,

50 | ~ factor(yob) + factor(pob) + factor(qob),

51 | pums)

52 |

53 | # Column 7: 2SLS with YOB, POB dummies and full QOB dummies interacted with YOB

54 | col7 <- ivreg(lwklywge ~ factor(yob) + factor(pob) + educ,

55 | ~ factor(pob) + factor(qob) * factor(yob),

56 | pums)

57 |

58 | # Column 8: 2SLS with age, YOB, POB dummies and with full QOB dummies interacted with YOB

59 | col8 <- ivreg(lwklywge ~ factor(yob) + factor(pob) + educ,

60 | ~ factor(pob) + factor(qob) * factor(yob),

61 | pums)

62 |

63 | # End of script

64 |

--------------------------------------------------------------------------------

/04 Instrumental Variables in Action/Figure 4-1-1.py:

--------------------------------------------------------------------------------

1 | #!/usr/bin/env python

2 | """

3 | Create Figure 4-6-1 in MHE

4 | Tested on Python 3.4

5 | """

6 |

7 | import zipfile

8 | import urllib.request

9 | import pandas as pd

10 | import statsmodels.api as sm

11 | import matplotlib.pyplot as plt

12 | from matplotlib.ticker import FormatStrFormatter

13 |

14 | # Download data and unzip the data

15 | urllib.request.urlretrieve('http://economics.mit.edu/files/397', 'asciiqob.zip')

16 | with zipfile.ZipFile('asciiqob.zip', "r") as z:

17 | z.extractall()

18 |

19 | # Read the data into a pandas dataframe

20 | pums = pd.read_csv('asciiqob.txt',

21 | header = None,

22 | delim_whitespace = True)

23 | pums.columns = ['lwklywge', 'educ', 'yob', 'qob', 'pob']

24 |

25 | # Calculate means by educ and lwklywge

26 | groupbybirth = pums.groupby(['yob', 'qob'])

27 | birth_means = groupbybirth['lwklywge', 'educ'].mean()

28 |

29 | # Create function to plot figures

30 | def plot_qob(yvar, ax, title, ylabel):

31 | values = yvar.values

32 | ax.plot(values, color = 'k')

33 |

34 | for i, y in enumerate(yvar):

35 | qob = yvar.index.get_level_values('qob')[i]

36 | ax.annotate(qob,

37 | (i, y),

38 | xytext = (-5, 5),

39 | textcoords = 'offset points')

40 | if qob == 1:

41 | ax.scatter(i, y, marker = 's', facecolors = 'none', edgecolors = 'k')

42 | else:

43 | ax.scatter(i, y, marker = 's', color = 'k')

44 |

45 | ax.set_xticks(range(0, len(yvar), 4))

46 | ax.set_xticklabels(yvar.index.get_level_values('yob')[1::4])

47 | ax.set_title(title)

48 | ax.set_ylabel(ylabel)

49 | ax.yaxis.set_major_formatter(FormatStrFormatter('%.2f'))

50 | ax.set_xlabel("Year of birth")

51 | ax.margins(0.1)

52 |

53 | fig, (ax1, ax2) = plt.subplots(2, sharex = True)

54 |

55 | plot_qob(yvar = birth_means['educ'],

56 | ax = ax1,

57 | title = 'A. Average education by quarter of birth (first stage)',

58 | ylabel = 'Years of education')

59 |

60 | plot_qob(yvar = birth_means['lwklywge'],

61 | ax = ax2,

62 | title = 'B. Average weekly wage by quarter of birth (reduced form)',

63 | ylabel = 'Log weekly earnings')

64 |

65 | fig.tight_layout()

66 | fig.savefig('Figure 4-1-1-Python.pdf', format = 'pdf')

67 |

68 | # End of file

69 |

--------------------------------------------------------------------------------

/06 Getting a Little Jumpy/Figure 6-1-1.r:

--------------------------------------------------------------------------------

1 | # R code for Figure 6-1-1 #

2 | # Required packages #

3 | # - ggplot2: making pretty graphs #

4 | # - gridExtra: combine graphs #

5 | library(ggplot2)

6 | library(gridExtra)

7 |

8 | # Generate series

9 | nobs = 100

10 | x <- runif(nobs)

11 | y.linear <- x + (x > 0.5) * 0.25 + rnorm(n = nobs, mean = 0, sd = 0.1)

12 | y.nonlin <- 0.5 * sin(6 * (x - 0.5)) + 0.5 + (x > 0.5) * 0.25 + rnorm(n = nobs, mean = 0, sd = 0.1)

13 | y.mistake <- 1 / (1 + exp(-25 * (x - 0.5))) + rnorm(n = nobs, mean = 0, sd = 0.1)

14 | rd.series <- data.frame(x, y.linear, y.nonlin, y.mistake)

15 |

16 | # Make graph with ggplot2

17 | g.data <- ggplot(rd.series, aes(x = x, group = x > 0.5))

18 |

19 | p.linear <- g.data + geom_point(aes(y = y.linear)) +

20 | stat_smooth(aes(y = y.linear),

21 | method = "lm",

22 | se = FALSE) +

23 | geom_vline(xintercept = 0.5) +

24 | ylab("Outcome") +

25 | ggtitle(bquote('A. Linear E[' * Y["0i"] * '|' * X[i] * ']'))

26 |

27 | p.nonlin <- g.data + geom_point(aes(y = y.nonlin)) +

28 | stat_smooth(aes(y = y.nonlin),

29 | method = "lm",

30 | formula = y ~ poly(x, 2),

31 | se = FALSE) +

32 | geom_vline(xintercept = 0.5) +

33 | ylab("Outcome") +

34 | ggtitle(bquote('B. Nonlinear E[' * Y["0i"] * '|' * X[i] * ']'))

35 |

36 | f.mistake <- function(x) {1 / (1 + exp(-25 * (x - 0.5)))}

37 | p.mistake <- g.data + geom_point(aes(y = y.mistake)) +

38 | stat_smooth(aes(y = y.mistake),

39 | method = "lm",

40 | se = FALSE) +

41 | stat_function(fun = f.mistake,

42 | linetype = "dashed") +

43 | geom_vline(xintercept = 0.5) +

44 | ylab("Outcome") +

45 | ggtitle('C. Nonlinearity mistaken for discontinuity')

46 |

47 | p.rd.examples <- arrangeGrob(p.linear, p.nonlin, p.mistake, ncol = 1)

48 |

49 | ggsave(p.rd.examples, file = "Figure 6-1-1-R.pdf", width = 5, height = 9)

50 |

51 | # End of script

52 |

--------------------------------------------------------------------------------

/04 Instrumental Variables in Action/Table 4-1-2.r:

--------------------------------------------------------------------------------

1 | # R code for Table 4-1-2 #

2 | # Required packages #

3 | # - data.table: data management #

4 | # - systemfit: SUR #

5 | library(data.table)

6 | library(systemfit)

7 |

8 | # Download data and unzip the data

9 | download.file('http://economics.mit.edu/files/397', 'asciiqob.zip')

10 | unzip('asciiqob.zip')

11 |

12 | # Read the data into a data.table

13 | pums <- fread('asciiqob.txt',

14 | header = FALSE,

15 | stringsAsFactors = FALSE)

16 | names(pums) <- c('lwklywge', 'educ', 'yob', 'qob', 'pob')

17 |

18 | # Create binary variable

19 | pums$z <- (pums$qob == 3 | pums$qob == 4) * 1

20 |

21 | # Compare means (and differences)

22 | ttest.lwklywge <- t.test(lwklywge ~ z, pums)

23 | ttest.educ <- t.test(educ ~ z, pums)

24 |

25 | # Compute Wald estimate

26 | sur <- systemfit(list(first = educ ~ z,

27 | second = lwklywge ~ z),

28 | data = pums,

29 | method = "SUR")

30 | wald <- deltaMethod(sur, "second_z / first_z")

31 |

32 | wald.estimate <- (mean(pums$lwklywge[pums$z == 1]) - mean(pums$lwklywge[pums$z == 0])) /

33 | (mean(pums$educ[pums$z == 1]) - mean(pums$educ[pums$z == 0]))

34 | wald.se <- wald.estimate^2 * ()

35 |

36 | # OLS estimate

37 | ols <- lm(lwklywge ~ educ, pums)

38 |

39 | # Construct table

40 | lwklywge.row <- c(ttest.lwklywge$estimate[1],

41 | ttest.lwklywge$estimate[2],

42 | ttest.lwklywge$estimate[2] - ttest.lwklywge$estimate[1])

43 | educ.row <- c(ttest.educ$estimate[1],

44 | ttest.educ$estimate[2],

45 | ttest.educ$estimate[2] - ttest.educ$estimate[1])

46 | wald.row.est <- c(NA, NA, wald$Estimate)

47 | wald.row.se <- c(NA, NA, wald$SE)

48 |

49 | ols.row.est <- c(NA, NA, summary(ols)$coef['educ' , 'Estimate'])

50 | ols.row.se <- c(NA, NA, summary(ols)$coef['educ' , 'Std. Error'])

51 |

52 | table <- rbind(lwklywge.row, educ.row,

53 | wald.row.est, wald.row.se,

54 | ols.row.est, ols.row.se)

55 | colnames(table) <- c("Born in the 1st or 2nd quarter of year",

56 | "Born in the 3rd or 4th quarter of year",

57 | "Difference")

58 | rownames(table) <- c("ln(weekly wage)",

59 | "Years of education",

60 | "Wald estimate",

61 | "Wald std error",

62 | "OLS estimate",

63 | "OLS std error")

64 |

65 | # End of script

66 |

--------------------------------------------------------------------------------

/08 Nonstandard Standard Error Issues/08 Nonstanard Standard Error Issues.md:

--------------------------------------------------------------------------------

1 | # 08 Nonstandard Standard Error Issues

2 | ## 8.1 The Bias of Robust Standard Errors

3 |

4 | ### Table 8.1.1

5 | Completed in [Stata](Table%208-1-1.do), [R](Table%208-1-1.r), [Python](Table%208-1-1.py) and [Julia](Table%208-1-1.jl)

6 |

7 | _Panel A: Lots of Heteroskedasticity_

8 |

9 | |Estimate | Mean| Std| Normal| t|

10 | |:----------------------|------:|-----:|------:|-----:|

11 | |Beta_1 | -0.006| 0.581| NA| NA|

12 | |Conventional | 0.331| 0.052| 0.269| 0.249|

13 | |HC0 | 0.433| 0.210| 0.227| 0.212|

14 | |HC1 | 0.448| 0.218| 0.216| 0.201|

15 | |HC2 | 0.525| 0.260| 0.171| 0.159|

16 | |HC3 | 0.638| 0.321| 0.124| 0.114|

17 | |max(Conventional, HC0) | 0.461| 0.182| 0.174| 0.159|

18 | |max(Conventional, HC1) | 0.474| 0.191| 0.167| 0.152|

19 | |max(Conventional, HC2) | 0.543| 0.239| 0.136| 0.123|

20 | |max(Conventional, HC3) | 0.650| 0.305| 0.101| 0.091|

21 |

22 | _Panel B: Little Heteroskedasticity_

23 |

24 | |Estimate | Mean| Std| Normal| t|

25 | |:----------------------|------:|-----:|------:|-----:|

26 | |Beta_1 | -0.006| 0.595| NA| NA|

27 | |Conventional | 0.519| 0.070| 0.097| 0.084|

28 | |HC0 | 0.456| 0.200| 0.204| 0.188|

29 | |HC1 | 0.472| 0.207| 0.191| 0.175|

30 | |HC2 | 0.546| 0.251| 0.153| 0.140|

31 | |HC3 | 0.656| 0.312| 0.112| 0.102|

32 | |max(Conventional, HC0) | 0.569| 0.130| 0.081| 0.070|

33 | |max(Conventional, HC1) | 0.577| 0.139| 0.079| 0.067|

34 | |max(Conventional, HC2) | 0.625| 0.187| 0.068| 0.058|

35 | |max(Conventional, HC3) | 0.712| 0.260| 0.054| 0.045|

36 |

37 | _Panel C: No Heteroskedasticity_

38 |

39 | |Estimate | Mean| Std| Normal| t|

40 | |:----------------------|------:|-----:|------:|-----:|

41 | |Beta_1 | -0.006| 0.604| NA| NA|

42 | |Conventional | 0.603| 0.081| 0.059| 0.049|

43 | |HC0 | 0.469| 0.196| 0.193| 0.177|

44 | |HC1 | 0.485| 0.203| 0.180| 0.165|

45 | |HC2 | 0.557| 0.246| 0.145| 0.131|

46 | |HC3 | 0.667| 0.308| 0.106| 0.097|

47 | |max(Conventional, HC0) | 0.633| 0.116| 0.052| 0.043|

48 | |max(Conventional, HC1) | 0.639| 0.123| 0.051| 0.042|

49 | |max(Conventional, HC2) | 0.678| 0.166| 0.045| 0.036|

50 | |max(Conventional, HC3) | 0.752| 0.237| 0.036| 0.030|

51 |

--------------------------------------------------------------------------------

/04 Instrumental Variables in Action/Figure 4-1-1.r:

--------------------------------------------------------------------------------

1 | # R code for Figure 4-1-1 #

2 | # Required packages #

3 | # - dplyr: easy data manipulation #

4 | # - lubridate: data management #

5 | # - ggplot2: making pretty graphs #

6 | # - gridExtra: combine graphs #

7 | library(lubridate)

8 | library(dplyr)

9 | library(ggplot2)

10 | library(gridExtra)

11 |

12 | # Download data and unzip the data

13 | download.file('http://economics.mit.edu/files/397', 'asciiqob.zip')

14 | unzip('asciiqob.zip')

15 |

16 | # Read the data into a dataframe

17 | pums <- read.table('asciiqob.txt',

18 | header = FALSE,

19 | stringsAsFactors = FALSE)

20 | names(pums) <- c('lwklywge', 'educ', 'yob', 'qob', 'pob')

21 |

22 | # Collapse for means

23 | pums.qob.means <- pums %>% group_by(yob, qob) %>% summarise_each(funs(mean))

24 |

25 | # Add dates

26 | pums.qob.means$yqob <- ymd(paste0("19",

27 | pums.qob.means$yob,

28 | pums.qob.means$qob * 3),

29 | truncated = 2)

30 |

31 | # Function for plotting data

32 | plot.qob <- function(ggplot.obj, ggtitle, ylab) {

33 | gg.colours <- c("firebrick", rep("black", 3), "white")

34 | ggplot.obj + geom_line() +

35 | geom_point(aes(colour = factor(qob)),

36 | size = 5) +

37 | geom_text(aes(label = qob, colour = "white"),

38 | size = 3,

39 | hjust = 0.5, vjust = 0.5,

40 | show_guide = FALSE) +

41 | scale_colour_manual(values = gg.colours, guide = FALSE) +

42 | ggtitle(ggtitle) +

43 | xlab("Year of birth") +

44 | ylab(ylab) +

45 | theme_set(theme_gray(base_size = 10))

46 | }

47 |

48 | # Plot

49 | p.educ <- plot.qob(ggplot(pums.qob.means, aes(x = yqob, y = educ)),

50 | "A. Average education by quarter of birth (first stage)",

51 | "Years of education")

52 | p.lwklywge <- plot.qob(ggplot(pums.qob.means, aes(x = yqob, y = lwklywge)),

53 | "B. Average weekly wage by quarter of birth (reduced form)",

54 | "Log weekly earnings")

55 |

56 | p.ivgraph <- arrangeGrob(p.educ, p.lwklywge)

57 |

58 | ggsave(p.ivgraph, file = "Figure 4-1-1-R.png", height = 12, width = 8, dpi = 300)

59 |

60 | # End of script

61 |

--------------------------------------------------------------------------------

/06 Getting a Little Jumpy/Figure 6-2-1.r:

--------------------------------------------------------------------------------

1 | # R code for Figure 6-2-1 #

2 | # Required packages #

3 | # - haven: read Stata .dta files #

4 | # - ggplot2: making pretty graphs #

5 | # - gridExtra: combine graphs #

6 | library(haven)

7 | library(ggplot2)

8 | library(gridExtra)

9 |

10 | # Download the data

11 | download.file("http://economics.mit.edu/files/1359", "final4.dta")

12 | download.file("http://economics.mit.edu/files/1358", "final5.dta")

13 |

14 | # Load the data

15 | grade4 <- read_dta("final4.dta")

16 | grade5 <- read_dta("final5.dta")

17 |

18 | # Restrict sample

19 | grade4 <- grade4[which(grade4$classize & grade4$classize < 45 & grade4$c_size > 5), ]

20 | grade5 <- grade5[which(grade5$classize & grade5$classize < 45 & grade5$c_size > 5), ]

21 |

22 | # Find means class size by grade size

23 | grade4cmeans <- aggregate(grade4$classize,

24 | by = list(grade4$c_size),

25 | FUN = mean,

26 | na.rm = TRUE)

27 | grade5cmeans <- aggregate(grade5$classize,

28 | by = list(grade5$c_size),

29 | FUN = mean,

30 | na.rm = TRUE)

31 |

32 | # Rename aggregaed columns

33 | colnames(grade4cmeans) <- c("c_size", "classize.mean")

34 | colnames(grade5cmeans) <- c("c_size", "classize.mean")

35 |

36 | # Create function for Maimonides Rule

37 | maimonides.rule <- function(x) {x / (floor((x - 1)/40) + 1)}

38 |

39 | # Plot each grade

40 | g4 <- ggplot(data = grade4cmeans, aes(x = c_size))

41 | p4 <- g4 + geom_line(aes(y = classize.mean)) +

42 | stat_function(fun = maimonides.rule,

43 | linetype = "dashed") +

44 | expand_limits(y = 0) +

45 | scale_x_continuous(breaks = seq(0, 220, 20)) +

46 | ylab("Class size") +

47 | xlab("Enrollment count") +

48 | ggtitle("B. Fourth grade")

49 |

50 | g5 <- ggplot(data = grade5cmeans, aes(x = c_size))

51 | p5 <- g5 + geom_line(aes(y = classize.mean)) +

52 | stat_function(fun = maimonides.rule,

53 | linetype = "dashed") +

54 | expand_limits(y = 0) +

55 | scale_x_continuous(breaks = seq(0, 220, 20)) +

56 | ylab("Class size") +

57 | xlab("Enrollment count") +

58 | ggtitle("A. Fifth grade")

59 |

60 | first.stage <- arrangeGrob(p5, p4, ncol = 1)

61 | ggsave(first.stage, file = "Figure 6-2-1-R.png", height = 8, width = 5, dpi = 300)

62 |

63 | # End of script

64 |

--------------------------------------------------------------------------------

/07 Quantile Regression/Table 7-1-1.r:

--------------------------------------------------------------------------------

1 | # R code for Table 7.1.1 #

2 | # Required packages #

3 | # - haven: read in .dta files #

4 | # - quantreg: quantile regressions #

5 | # - knitr: markdown tables #

6 | library(haven)

7 | library(quantreg)

8 | library(knitr)

9 |

10 | # Download data and unzip the data

11 | download.file('http://economics.mit.edu/files/384', 'angcherfer06.zip')

12 | unzip('angcherfer06.zip')

13 |

14 | # Create a function to run the quantile/OLS regressions so we can use a loop

15 | quant.mincer <- function(tau, data) {

16 | r <- rq(logwk ~ educ + black + exper + exper2,

17 | weights = perwt,

18 | data = data,

19 | tau = tau)

20 | return(rbind(summary(r)$coefficients["educ", "Value"],

21 | summary(r)$coefficients["educ", "Std. Error"]))

22 | }

23 |

24 | # Create function for producing the results

25 | calculate.qr <- function(year) {

26 |

27 | # Create file path

28 | dta.path <- paste('Data/census', year, '.dta', sep = "")

29 |

30 | # Load year into the census

31 | df <- read_dta(dta.path)

32 |

33 | # Run quantile regressions

34 | taus <- c(0.1, 0.25, 0.5, 0.75, 0.9)

35 | qr <- sapply(taus, quant.mincer, data = df)

36 |

37 | # Run OLS regressions and get RMSE

38 | ols <- lm(logwk ~ educ + black + exper + exper2,

39 | weights = perwt,

40 | data = df)

41 | coef.se <- rbind(summary(ols)$coefficients["educ", "Estimate"],

42 | summary(ols)$coefficients["educ", "Std. Error"])

43 | rmse <- sqrt(sum(summary(ols)$residuals^2) / ols$df.residual)

44 |

45 | # Summary statistics

46 | obs <- length(na.omit(df$educ))

47 | mean <- mean(df$logwk, na.rm = TRUE)

48 | sd <- sd(df$logwk, na.rm = TRUE)

49 |

50 | return(cbind(rbind(obs, NA),

51 | rbind(mean, NA),

52 | rbind(sd, NA),

53 | qr,

54 | coef.se,

55 | rbind(rmse, NA)))

56 |

57 | }

58 |

59 | # Generate results

60 | results <- rbind(calculate.qr("80"),

61 | calculate.qr("90"),

62 | calculate.qr("00"))

63 |

64 | # Name rows and columns

65 | row.names(results) <- c("1980", "", "1990", "", "2000", "")

66 | colnames(results) <- c("Obs", "Mean", "Std Dev",

67 | "0.1", "0.25", "0.5", "0.75", "0.9",

68 | "OLS", "RMSE")

69 |

70 | # Format decimals

71 | results <- round(results, 3)

72 | results[ , c(2, 10)] <- round(results[ , c(2, 10)], 2)