├── nlprep

├── test

│ ├── __init__.py

│ ├── test_sentutil.py

│ ├── test_dataset.py

│ ├── test_main.py

│ ├── test_middleformat.py

│ └── test_pairutil.py

├── datasets

│ ├── __init__.py

│ ├── clas_csv

│ │ ├── __init__.py

│ │ └── dataset.py

│ ├── qa_zh

│ │ ├── __init__.py

│ │ └── dataset.py

│ ├── tag_cged

│ │ ├── __init__.py

│ │ └── dataset.py

│ ├── clas_cosmosqa

│ │ ├── __init__.py

│ │ └── dataset.py

│ ├── clas_lihkgcat

│ │ ├── __init__.py

│ │ └── dataset.py

│ ├── clas_mathqa

│ │ ├── __init__.py

│ │ └── dataset.py

│ ├── clas_snli

│ │ ├── __init__.py

│ │ └── dataset.py

│ ├── clas_udicstm

│ │ ├── __init__.py

│ │ └── dataset.py

│ ├── gen_dream

│ │ ├── __init__.py

│ │ └── dataset.py

│ ├── gen_lcccbase

│ │ ├── __init__.py

│ │ └── dataset.py

│ ├── gen_masklm

│ │ ├── __init__.py

│ │ └── dataset.py

│ ├── gen_pttchat

│ │ ├── __init__.py

│ │ └── dataset.py

│ ├── gen_squadqg

│ │ ├── __init__.py

│ │ └── dataset.py

│ ├── gen_storyend

│ │ ├── __init__.py

│ │ └── dataset.py

│ ├── gen_sumcnndm

│ │ ├── __init__.py

│ │ └── dataset.py

│ ├── gen_wmt17news

│ │ ├── __init__.py

│ │ └── dataset.py

│ ├── tag_clner

│ │ ├── __init__.py

│ │ └── dataset.py

│ ├── tag_cnername

│ │ ├── __init__.py

│ │ └── dataset.py

│ ├── tag_conllpp

│ │ ├── __init__.py

│ │ └── dataset.py

│ ├── tag_msraname

│ │ ├── __init__.py

│ │ └── dataset.py

│ └── tag_weiboner

│ │ ├── __init__.py

│ │ └── dataset.py

├── utils

│ ├── __init__.py

│ ├── sentlevel.py

│ └── pairslevel.py

├── __init__.py

├── main.py

├── middleformat.py

└── file_utils.py

├── template

└── dataset

│ └── task_datasetname

│ ├── __init__.py

│ └── dataset.py

├── docs

├── img

│ ├── nlprep.png

│ ├── nlprep-icon.png

│ └── example_report.png

├── utility.md

├── installation.md

├── datasets.md

├── usage.md

└── index.md

├── pyproject.toml

├── .pre-commit-config.yaml

├── requirements.txt

├── .github

└── workflows

│ └── python-package.yml

├── setup.py

├── CONTRIBUTING.md

├── mkdocs.yml

├── README.md

├── .gitignore

└── LICENSE

/nlprep/test/__init__.py:

--------------------------------------------------------------------------------

1 |

--------------------------------------------------------------------------------

/nlprep/datasets/__init__.py:

--------------------------------------------------------------------------------

1 |

--------------------------------------------------------------------------------

/template/dataset/task_datasetname/__init__.py:

--------------------------------------------------------------------------------

1 |

--------------------------------------------------------------------------------

/nlprep/datasets/clas_csv/__init__.py:

--------------------------------------------------------------------------------

1 | from .dataset import *

--------------------------------------------------------------------------------

/nlprep/datasets/qa_zh/__init__.py:

--------------------------------------------------------------------------------

1 | from .dataset import *

--------------------------------------------------------------------------------

/nlprep/datasets/tag_cged/__init__.py:

--------------------------------------------------------------------------------

1 | from .dataset import *

--------------------------------------------------------------------------------

/nlprep/datasets/clas_cosmosqa/__init__.py:

--------------------------------------------------------------------------------

1 | from .dataset import *

--------------------------------------------------------------------------------

/nlprep/datasets/clas_lihkgcat/__init__.py:

--------------------------------------------------------------------------------

1 | from .dataset import *

--------------------------------------------------------------------------------

/nlprep/datasets/clas_mathqa/__init__.py:

--------------------------------------------------------------------------------

1 | from .dataset import *

--------------------------------------------------------------------------------

/nlprep/datasets/clas_snli/__init__.py:

--------------------------------------------------------------------------------

1 | from .dataset import *

--------------------------------------------------------------------------------

/nlprep/datasets/clas_udicstm/__init__.py:

--------------------------------------------------------------------------------

1 | from .dataset import *

--------------------------------------------------------------------------------

/nlprep/datasets/gen_dream/__init__.py:

--------------------------------------------------------------------------------

1 | from .dataset import *

--------------------------------------------------------------------------------

/nlprep/datasets/gen_lcccbase/__init__.py:

--------------------------------------------------------------------------------

1 | from .dataset import *

--------------------------------------------------------------------------------

/nlprep/datasets/gen_masklm/__init__.py:

--------------------------------------------------------------------------------

1 | from .dataset import *

--------------------------------------------------------------------------------

/nlprep/datasets/gen_pttchat/__init__.py:

--------------------------------------------------------------------------------

1 | from .dataset import *

--------------------------------------------------------------------------------

/nlprep/datasets/gen_squadqg/__init__.py:

--------------------------------------------------------------------------------

1 | from .dataset import *

--------------------------------------------------------------------------------

/nlprep/datasets/gen_storyend/__init__.py:

--------------------------------------------------------------------------------

1 | from .dataset import *

--------------------------------------------------------------------------------

/nlprep/datasets/gen_sumcnndm/__init__.py:

--------------------------------------------------------------------------------

1 | from .dataset import *

--------------------------------------------------------------------------------

/nlprep/datasets/gen_wmt17news/__init__.py:

--------------------------------------------------------------------------------

1 | from .dataset import *

--------------------------------------------------------------------------------

/nlprep/datasets/tag_clner/__init__.py:

--------------------------------------------------------------------------------

1 | from .dataset import *

--------------------------------------------------------------------------------

/nlprep/datasets/tag_cnername/__init__.py:

--------------------------------------------------------------------------------

1 | from .dataset import *

--------------------------------------------------------------------------------

/nlprep/datasets/tag_conllpp/__init__.py:

--------------------------------------------------------------------------------

1 | from .dataset import *

--------------------------------------------------------------------------------

/nlprep/datasets/tag_msraname/__init__.py:

--------------------------------------------------------------------------------

1 | from .dataset import *

--------------------------------------------------------------------------------

/nlprep/datasets/tag_weiboner/__init__.py:

--------------------------------------------------------------------------------

1 | from .dataset import *

--------------------------------------------------------------------------------

/docs/img/nlprep.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/voidful/NLPrep/HEAD/docs/img/nlprep.png

--------------------------------------------------------------------------------

/nlprep/utils/__init__.py:

--------------------------------------------------------------------------------

1 | import nlprep.utils.sentlevel

2 | import nlprep.utils.pairslevel

3 |

--------------------------------------------------------------------------------

/docs/img/nlprep-icon.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/voidful/NLPrep/HEAD/docs/img/nlprep-icon.png

--------------------------------------------------------------------------------

/docs/img/example_report.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/voidful/NLPrep/HEAD/docs/img/example_report.png

--------------------------------------------------------------------------------

/pyproject.toml:

--------------------------------------------------------------------------------

1 | [tool.commitizen]

2 | name = "cz_conventional_commits"

3 | version = "0.1.1"

4 | tag_format = "v$version"

5 |

--------------------------------------------------------------------------------

/.pre-commit-config.yaml:

--------------------------------------------------------------------------------

1 | repos:

2 | - hooks:

3 | - id: commitizen

4 | stages:

5 | - commit-msg

6 | repo: https://github.com/commitizen-tools/commitizen

7 | rev: v2.1.0

8 |

--------------------------------------------------------------------------------

/nlprep/__init__.py:

--------------------------------------------------------------------------------

1 | __version__ = "2.4.1"

2 |

3 | import nlprep.file_utils

4 | from nlprep.main import load_utilities, load_dataset, list_all_utilities, list_all_datasets, convert_middleformat

5 |

--------------------------------------------------------------------------------

/requirements.txt:

--------------------------------------------------------------------------------

1 | boto3

2 | filelock

3 | requests

4 | inquirer

5 | BeautifulSoup4

6 | tqdm >= 4.27

7 | opencc-python-reimplemented

8 | pandas-profiling >= 2.8.0

9 | nlp2 >= 1.8.27

10 | phraseg >= 1.1.8

11 | transformers

12 | lxml

13 | datasets

--------------------------------------------------------------------------------

/docs/utility.md:

--------------------------------------------------------------------------------

1 | ::: nlprep.utils

2 |

3 | ## Add a new utility

4 | - sentence level: add function into utils/sentlevel.py, function name will be --util parameter

5 | - paris level - add function into utils/parislevel.py, function name will be --util parameter

6 |

--------------------------------------------------------------------------------

/nlprep/test/test_sentutil.py:

--------------------------------------------------------------------------------

1 | import importlib

2 | import os

3 | import unittest

4 | import nlprep

5 |

6 |

7 | class TestDataset(unittest.TestCase):

8 |

9 | def testS2T(self):

10 | sent_util = nlprep.utils.sentlevel

11 | self.assertTrue(sent_util.s2t("快乐") == "快樂")

12 |

13 | def testT2S(self):

14 | sent_util = nlprep.utils.sentlevel

15 | self.assertTrue(sent_util.t2s("快樂") == "快乐")

16 |

--------------------------------------------------------------------------------

/docs/installation.md:

--------------------------------------------------------------------------------

1 | ## Installation

2 | nlprep is tested on Python 3.6+, and PyTorch 1.1.0+.

3 |

4 | ### Installing via pip

5 | ```bash

6 | pip install nlprep

7 | ```

8 | ### Installing via source

9 | ```bash

10 | git clone https://github.com/voidful/nlprep.git

11 | python setup.py install

12 | ```

13 |

14 | ## Running nlprep

15 |

16 | Once you've installed nlprep, you can run with

17 |

18 | ### pip installed version:

19 | `nlprep`

20 |

21 | ### local version:

22 | `python -m nlprep.main`

--------------------------------------------------------------------------------

/nlprep/test/test_dataset.py:

--------------------------------------------------------------------------------

1 | import importlib

2 | import unittest

3 |

4 | import nlprep

5 |

6 |

7 | class TestDataset(unittest.TestCase):

8 |

9 | def testType(self):

10 | datasets = nlprep.list_all_datasets()

11 | for dataset in datasets:

12 | print(dataset)

13 | ds = importlib.import_module('.' + dataset, 'nlprep.datasets')

14 | self.assertTrue("DATASETINFO" in dir(ds))

15 | self.assertTrue("load" in dir(ds))

16 | self.assertTrue(ds.DATASETINFO['TASK'] in ['clas', 'tag', 'qa', 'gen'])

17 |

--------------------------------------------------------------------------------

/nlprep/datasets/clas_csv/dataset.py:

--------------------------------------------------------------------------------

1 | import csv

2 |

3 | from nlprep.middleformat import MiddleFormat

4 |

5 | DATASETINFO = {

6 | 'TASK': "clas"

7 | }

8 |

9 |

10 | def load(data):

11 | return data

12 |

13 |

14 | def toMiddleFormat(path):

15 | dataset = MiddleFormat(DATASETINFO)

16 | with open(path, encoding='utf8') as csvfile:

17 | spamreader = csv.reader(csvfile)

18 | for row in spamreader:

19 | if len(row[0].strip()) > 2 and len(row[1].strip()) > 2:

20 | dataset.add_data(row[0], row[1])

21 | return dataset

22 |

--------------------------------------------------------------------------------

/nlprep/utils/sentlevel.py:

--------------------------------------------------------------------------------

1 | import sys

2 | import inspect

3 |

4 | from opencc import OpenCC

5 |

6 | cc_t2s = OpenCC('t2s')

7 | cc_s2t = OpenCC('s2t')

8 |

9 |

10 | def s2t(convt):

11 | """simplify chines to traditional chines"""

12 | return cc_s2t.convert(convt)

13 |

14 |

15 | def t2s(convt):

16 | """traditional chines to simplify chines"""

17 | return cc_t2s.convert(convt)

18 |

19 |

20 | SentUtils = dict(inspect.getmembers(sys.modules[__name__],

21 | predicate=lambda f: inspect.isfunction(f) and f.__module__ == __name__))

22 |

--------------------------------------------------------------------------------

/template/dataset/task_datasetname/dataset.py:

--------------------------------------------------------------------------------

1 | from nlprep.middleformat import MiddleFormat

2 |

3 | DATASETINFO = {

4 | 'DATASET_FILE_MAP': {

5 | "dataset_name": "dataset path" # list for multiple detests in one tag

6 | },

7 | 'TASK': ["gen", "tag", "clas", "qa"],

8 | 'FULLNAME': "Dataset Full Name",

9 | 'REF': {"Some dataset reference": "useful link"},

10 | 'DESCRIPTION': 'Dataset description'

11 | }

12 |

13 |

14 | def load(data):

15 | return data

16 |

17 |

18 | def toMiddleFormat(path):

19 | dataset = MiddleFormat(DATASETINFO)

20 | # some file reading and processing

21 | dataset.add_data("input", "target")

22 | return dataset

23 |

--------------------------------------------------------------------------------

/nlprep/datasets/clas_lihkgcat/dataset.py:

--------------------------------------------------------------------------------

1 | import csv

2 |

3 | from nlprep.middleformat import MiddleFormat

4 |

5 | DATASETINFO = {

6 | 'DATASET_FILE_MAP': {

7 | "lihkgcat": ["https://media.githubusercontent.com/media/voidful/lihkg_dataset/master/lihkg_posts_title_cat.csv"]

8 | },

9 | 'TASK': "clas",

10 | 'FULLNAME': "LIHKG Post Title 分類資料",

11 | 'REF': {"Source": "https://github.com/ylchan87/LiHKG_Post_NLP"},

12 | 'DESCRIPTION': '根據title去分析屬於邊一個台'

13 | }

14 |

15 |

16 | def load(data):

17 | return data

18 |

19 |

20 | def toMiddleFormat(paths):

21 | dataset = MiddleFormat(DATASETINFO)

22 | for path in paths:

23 | with open(path, encoding='utf8') as csvfile:

24 | rows = csv.reader(csvfile)

25 | for row in rows:

26 | input = row[0]

27 | target = row[1]

28 | dataset.add_data(input.strip(), target.strip())

29 | return dataset

30 |

--------------------------------------------------------------------------------

/nlprep/datasets/clas_mathqa/dataset.py:

--------------------------------------------------------------------------------

1 | from nlprep.middleformat import MiddleFormat

2 | import datasets

3 |

4 | DATASETINFO = {

5 | 'DATASET_FILE_MAP': {

6 | "mathqa-train": 'train',

7 | "mathqa-validation": 'validation',

8 | "mathqa-test": 'test'

9 | },

10 | 'TASK': "clas",

11 | 'FULLNAME': "Math QA",

12 | 'REF': {"Source url": "https://math-qa.github.io/math-QA/data/MathQA.zip"},

13 | 'DESCRIPTION': 'Our dataset is gathered by using a new representation language to annotate over the AQuA-RAT dataset. AQuA-RAT has provided the questions, options, rationale, and the correct options.'

14 | }

15 |

16 |

17 | def load(data):

18 | return datasets.load_dataset('math_qa')[data]

19 |

20 |

21 | def toMiddleFormat(data):

22 | dataset = MiddleFormat(DATASETINFO)

23 | for d in data:

24 | input = d['Problem'] + " [SEP] " + d['options']

25 | target = d['correct']

26 | dataset.add_data(input, target)

27 | return dataset

28 |

--------------------------------------------------------------------------------

/nlprep/datasets/gen_pttchat/dataset.py:

--------------------------------------------------------------------------------

1 | import csv

2 |

3 | from nlprep.middleformat import MiddleFormat

4 |

5 | DATASETINFO = {

6 | 'DATASET_FILE_MAP': {

7 | "pttchat": [

8 | "https://raw.githubusercontent.com/zake7749/Gossiping-Chinese-Corpus/master/data/Gossiping-QA-Dataset-2_0.csv"]

9 | },

10 | 'TASK': "gen",

11 | 'FULLNAME': "PTT八卦版中文對話語料",

12 | 'REF': {"Source": "https://github.com/zake7749/Gossiping-Chinese-Corpus"},

13 | 'DESCRIPTION': """

14 | 嗨,這裡是 PTT 中文語料集,我透過某些假設與方法 將每篇文章化簡為問答配對,其中問題來自文章的標題,而回覆是該篇文章的推文。

15 | """

16 | }

17 |

18 |

19 | def load(data):

20 | return data

21 |

22 |

23 | def toMiddleFormat(paths):

24 | mf = MiddleFormat(DATASETINFO)

25 | for path in paths:

26 | with open(path, encoding='utf8') as csvfile:

27 | rows = csv.reader(csvfile)

28 | next(rows, None)

29 | for row in rows:

30 | input = row[0]

31 | target = row[1]

32 | mf.add_data(input, target)

33 | return mf

34 |

--------------------------------------------------------------------------------

/nlprep/test/test_main.py:

--------------------------------------------------------------------------------

1 | import unittest

2 |

3 | from nlprep.main import *

4 |

5 |

6 | class TestMain(unittest.TestCase):

7 |

8 | def testListAllDataset(self):

9 | self.assertTrue(isinstance(list_all_datasets(), list))

10 |

11 | def testListAllUtilities(self):

12 | self.assertTrue(isinstance(list_all_utilities(), list))

13 |

14 | def testLoadUtility(self):

15 | sent_utils, pairs_utils = load_utilities(list_all_utilities(), disable_input_panel=True)

16 | self.assertTrue(len(sent_utils + pairs_utils), len(list_all_utilities()))

17 | for func, parma in sent_utils:

18 | print(func, parma)

19 | self.assertTrue(isinstance(parma, dict))

20 | for func, parma in pairs_utils:

21 | print(func, parma)

22 | self.assertTrue(isinstance(parma, dict))

23 |

24 | def testConvertMiddleformat(self):

25 | mf_dict = convert_middleformat(load_dataset('clas_udicstm'))

26 | for mf_key, mf in mf_dict.items():

27 | self.assertTrue(isinstance(mf_key, str))

28 |

--------------------------------------------------------------------------------

/nlprep/datasets/gen_dream/dataset.py:

--------------------------------------------------------------------------------

1 | from nlprep.middleformat import MiddleFormat

2 | import json

3 | import nlp2

4 |

5 | DATASETINFO = {

6 | 'DATASET_FILE_MAP': {

7 | "dream": "https://raw.githubusercontent.com/voidful/dream_gen/master/data.csv"

8 | },

9 | 'TASK': "gen",

10 | 'FULLNAME': "周公解夢資料集",

11 | 'REF': {"Source": "https://github.com/saiwaiyanyu/tensorflow-bert-seq2seq-dream-decoder"},

12 | 'DESCRIPTION': '透過夢境解析徵兆'

13 | }

14 |

15 |

16 | def load(data):

17 | return data

18 |

19 |

20 | def toMiddleFormat(path):

21 | dataset = MiddleFormat(DATASETINFO)

22 | with open(path, encoding='utf8') as f:

23 | for _ in list(f.readlines()):

24 | data = json.loads(_)

25 | input = nlp2.split_sentence_to_array(data['dream'], True)

26 | target = nlp2.split_sentence_to_array(data["decode"], True)

27 | if len(input) + len(target) < 512:

28 | input = " ".join(input)

29 | target = " ".join(target)

30 | dataset.add_data(input, target)

31 | return dataset

32 |

--------------------------------------------------------------------------------

/nlprep/datasets/gen_squadqg/dataset.py:

--------------------------------------------------------------------------------

1 | import datasets

2 | import nlp2

3 | from nlprep.middleformat import MiddleFormat

4 |

5 | DATASETINFO = {

6 | 'DATASET_FILE_MAP': {

7 | "squad-qg-train": "train",

8 | "squad-qg-dev": "validation"

9 | },

10 | 'TASK': "gen",

11 | 'FULLNAME': "The Stanford Question Answering Dataset 2.0",

12 | 'REF': {"Source": "https://rajpurkar.github.io/SQuAD-explorer/"},

13 | 'DESCRIPTION': 'Question Generate For SQuAD 2.0'

14 | }

15 |

16 |

17 | def load(data):

18 | return datasets.load_dataset('squad')[data]

19 |

20 |

21 | def toMiddleFormat(data, context_max_len=450, answer_max_len=50):

22 | dataset = MiddleFormat(DATASETINFO)

23 | for d in data:

24 | context = nlp2.split_sentence_to_array(d['context'])

25 | answer = nlp2.split_sentence_to_array(d['answers']['text'][0])

26 | input_data = " ".join(context[:context_max_len]) + " [SEP] " + " ".join(answer[:answer_max_len])

27 | target_data = d['question']

28 | dataset.add_data(input_data, target_data)

29 |

30 | return dataset

31 |

--------------------------------------------------------------------------------

/nlprep/datasets/clas_snli/dataset.py:

--------------------------------------------------------------------------------

1 | from nlprep.middleformat import MiddleFormat

2 | import datasets

3 |

4 | DATASETINFO = {

5 | 'DATASET_FILE_MAP': {

6 | "snli-train": 'train',

7 | "snli-validation": 'validation',

8 | "snli-test": 'test'

9 | },

10 | 'TASK': "clas",

11 | 'FULLNAME': "Stanford Natural Language Inference (SNLI) Corpus",

12 | 'REF': {"Home page": "https://nlp.stanford.edu/projects/snli/"},

13 | 'DESCRIPTION': 'The SNLI corpus (version 1.0) is a collection of 570k human-written English sentence pairs manually labeled for balanced classification with the labels entailment, contradiction, and neutral, supporting the task of natural language inference (NLI), also known as recognizing textual entailment (RTE).'

14 | }

15 |

16 |

17 | def load(data):

18 | return datasets.load_dataset('snli')[data]

19 |

20 |

21 | def toMiddleFormat(data):

22 | dataset = MiddleFormat(DATASETINFO)

23 | for d in data:

24 | input = d['premise'] + " [SEP] " + d['hypothesis']

25 | target = d['label']

26 | dataset.add_data(input, target)

27 | return dataset

28 |

--------------------------------------------------------------------------------

/docs/datasets.md:

--------------------------------------------------------------------------------

1 | ## Browse All Available Dataset

2 | ### Online Explorer

3 | [https://voidful.github.io/NLPrep-Datasets/](https://voidful.github.io/NLPrep-Datasets/)

4 |

5 | ## Add a new dataset

6 | follow template from `template/dataset`

7 |

8 | 1. edit task_datasetname to your task. eg: /tag_clner

9 | 2. edit dataset.py in `template/dataset/task_datasetname`

10 | Edit DATASETINFO

11 | ```python

12 | DATASETINFO = {

13 | 'DATASET_FILE_MAP': {

14 | "dataset_name": "dataset path" # list for multiple detests in one tag

15 | },

16 | 'TASK': ["gen", "tag", "clas", "qa"],

17 | 'FULLNAME': "Dataset Full Name",

18 | 'REF': {"Some dataset reference": "useful link"},

19 | 'DESCRIPTION': 'Dataset description'

20 | }

21 | ```

22 | Implement `load` for pre-loading `'DATASET_FILE_MAP'`'s data

23 | ```python

24 | def load(data):

25 | return data

26 | ```

27 | Implement `toMiddleFormat` for converting file to input and target

28 | ```python

29 | def toMiddleFormat(path):

30 | dataset = MiddleFormat(DATASETINFO)

31 | # some file reading and processing

32 | dataset.add_data("input", "target")

33 | return dataset

34 | ```

35 | 3. move `task_datasetname` folder to `nlprep/datasets`

36 |

--------------------------------------------------------------------------------

/nlprep/datasets/tag_conllpp/dataset.py:

--------------------------------------------------------------------------------

1 | from nlprep.middleformat import MiddleFormat

2 | import datasets

3 |

4 | DATASETINFO = {

5 | 'DATASET_FILE_MAP': {

6 | "conllpp-train": 'train',

7 | "conllpp-validation": 'validation',

8 | "conllpp-test": 'test'

9 | },

10 | 'TASK': "tag",

11 | 'FULLNAME': "CoNLLpp is a corrected version of the CoNLL2003 NER dataset",

12 | 'REF': {"Home page": "https://huggingface.co/datasets/conllpp"},

13 | 'DESCRIPTION': 'CoNLLpp is a corrected version of the CoNLL2003 NER dataset where labels of 5.38% of the sentences in the test set have been manually corrected. The training set and development set from CoNLL2003 is included for completeness.'

14 | }

15 |

16 | ner_tag = {

17 | 0: "O",

18 | 1: "B-PER",

19 | 2: "I-PER",

20 | 3: "B-ORG",

21 | 4: "I-ORG",

22 | 5: "B-LOC",

23 | 6: "I-LOC",

24 | 7: "B-MISC",

25 | 8: "I-MISC"

26 | }

27 |

28 |

29 | def load(data):

30 | return datasets.load_dataset('conllpp')[data]

31 |

32 |

33 | def toMiddleFormat(data):

34 | dataset = MiddleFormat(DATASETINFO)

35 | for d in data:

36 | input = d['tokens']

37 | target = [ner_tag[i] for i in d['ner_tags']]

38 | dataset.add_data(input, target)

39 | return dataset

40 |

--------------------------------------------------------------------------------

/nlprep/datasets/clas_udicstm/dataset.py:

--------------------------------------------------------------------------------

1 | from nlprep.middleformat import MiddleFormat

2 |

3 | DATASETINFO = {

4 | 'DATASET_FILE_MAP': {

5 | "udicstm": [

6 | "https://raw.githubusercontent.com/UDICatNCHU/UdicOpenData/master/udicOpenData/Snownlp訓練資料/twpos.txt",

7 | "https://raw.githubusercontent.com/UDICatNCHU/UdicOpenData/master/udicOpenData/Snownlp訓練資料/twneg.txt"]

8 | },

9 | 'TASK': "clas",

10 | 'FULLNAME': "UDIC Sentiment Analysis Dataset",

11 | 'REF': {"Source": "https://github.com/UDICatNCHU/UdicOpenData"},

12 | 'DESCRIPTION': '正面情緒:約有309163筆,44M / 負面情緒:約有320456筆,15M'

13 | }

14 |

15 |

16 | def load(data):

17 | return data

18 |

19 |

20 | def toMiddleFormat(paths):

21 | dataset = MiddleFormat(DATASETINFO)

22 | for path in paths:

23 | added_data = []

24 | with open(path, encoding='utf8') as f:

25 | if "失望" in f.readline():

26 | sentiment = "negative"

27 | else:

28 | sentiment = "positive"

29 | for i in list(f.readlines()):

30 | input_data = i.strip().replace(" ", "")

31 | if input_data not in added_data:

32 | dataset.add_data(i.strip(), sentiment)

33 | added_data.append(input_data)

34 | return dataset

35 |

--------------------------------------------------------------------------------

/nlprep/datasets/clas_cosmosqa/dataset.py:

--------------------------------------------------------------------------------

1 | from nlprep.middleformat import MiddleFormat

2 | import datasets

3 |

4 | DATASETINFO = {

5 | 'DATASET_FILE_MAP': {

6 | "cosmosqa-train": 'train',

7 | "cosmosqa-validation": 'validation',

8 | "cosmosqa-test": 'test'

9 | },

10 | 'TASK': "clas",

11 | 'FULLNAME': "Cosmos QA",

12 | 'REF': {"HomePage": "https://wilburone.github.io/cosmos/",

13 | "Dataset": " https://github.com/huggingface/nlp/blob/master/datasets/cosmos_qa/cosmos_qa.py"},

14 | 'DESCRIPTION': "Cosmos QA is a large-scale dataset of 35.6K problems that require commonsense-based reading comprehension, formulated as multiple-choice questions. It focuses on reading between the lines over a diverse collection of people's everyday narratives, asking questions concerning on the likely causes or effects of events that require reasoning beyond the exact text spans in the context"

15 | }

16 |

17 |

18 | def load(data):

19 | return datasets.load_dataset('cosmos_qa')[data]

20 |

21 |

22 | def toMiddleFormat(data):

23 | dataset = MiddleFormat(DATASETINFO)

24 | for d in data:

25 | input = d['context'] + " [SEP] " + d['question'] + " [SEP] " + d['answer0'] + " [SEP] " + d[

26 | 'answer1'] + " [SEP] " + d['answer2'] + " [SEP] " + d['answer3']

27 | target = d['label']

28 | dataset.add_data(input, target)

29 | return dataset

30 |

--------------------------------------------------------------------------------

/nlprep/datasets/tag_cnername/dataset.py:

--------------------------------------------------------------------------------

1 | from nlprep.middleformat import MiddleFormat

2 |

3 | DATASETINFO = {

4 | 'DATASET_FILE_MAP': {

5 | "cnername-train": "https://raw.githubusercontent.com/zjy-ucas/ChineseNER/master/data/example.train",

6 | "cnername-test": "https://raw.githubusercontent.com/zjy-ucas/ChineseNER/master/data/example.test",

7 | "cnername-dev": "https://raw.githubusercontent.com/zjy-ucas/ChineseNER/master/data/example.dev",

8 | },

9 | 'TASK': "tag",

10 | 'FULLNAME': "ChineseNER with only name",

11 | 'REF': {"Source": "https://github.com/zjy-ucas/ChineseNER"},

12 | 'DESCRIPTION': 'From https://github.com/zjy-ucas/ChineseNER/tree/master/data, source unknown.'

13 | }

14 |

15 |

16 | def load(data):

17 | return data

18 |

19 |

20 | def toMiddleFormat(path):

21 | dataset = MiddleFormat(DATASETINFO)

22 | with open(path, encoding='utf8') as f:

23 | sent_input = []

24 | sent_target = []

25 | for i in list(f.readlines()):

26 | i = i.strip()

27 | if len(i) > 1:

28 | sent, tar = i.split(' ')

29 | sent_input.append(sent)

30 | if "PER" not in tar:

31 | tar = 'O'

32 | sent_target.append(tar)

33 | else:

34 | dataset.add_data(sent_input, sent_target)

35 | sent_input = []

36 | sent_target = []

37 | return dataset

38 |

--------------------------------------------------------------------------------

/nlprep/datasets/tag_weiboner/dataset.py:

--------------------------------------------------------------------------------

1 | from nlprep.middleformat import MiddleFormat

2 |

3 | DATASETINFO = {

4 | 'DATASET_FILE_MAP': {

5 | "weiboner-train": "https://raw.githubusercontent.com/hltcoe/golden-horse/master/data/weiboNER.conll.train",

6 | "weiboner-test": "https://raw.githubusercontent.com/hltcoe/golden-horse/master/data/weiboNER.conll.test",

7 | "weiboner-dev": "https://raw.githubusercontent.com/hltcoe/golden-horse/master/data/weiboNER.conll.dev",

8 | },

9 | 'TASK': "tag",

10 | 'FULLNAME': "Weibo NER dataset",

11 | 'REF': {"Source": "https://github.com/hltcoe/golden-horse"},

12 | 'DESCRIPTION': 'Entity Recognition (NER) for Chinese Social Media (Weibo). This dataset contains messages selected from Weibo and annotated according to the DEFT ERE annotation guidelines.'

13 | }

14 |

15 |

16 | def load(data):

17 | return data

18 |

19 |

20 | def toMiddleFormat(path):

21 | dataset = MiddleFormat(DATASETINFO)

22 | with open(path, encoding='utf8', errors='replace') as f:

23 | sent_input = []

24 | sent_target = []

25 | for i in list(f.readlines()):

26 | i = i.strip()

27 | if len(i) > 1:

28 | sent, tar = i.split(' ')

29 | sent_input.append(sent)

30 | sent_target.append(tar)

31 | else:

32 | dataset.add_data(sent_input, sent_target)

33 | sent_input = []

34 | sent_target = []

35 | return dataset

36 |

--------------------------------------------------------------------------------

/.github/workflows/python-package.yml:

--------------------------------------------------------------------------------

1 | # This workflow will install Python dependencies, run tests and lint with a variety of Python versions

2 | # For more information see: https://help.github.com/actions/language-and-framework-guides/using-python-with-github-actions

3 |

4 | name: Python package

5 |

6 | on:

7 | push:

8 | branches: [ master ]

9 | pull_request:

10 | branches: [ master ]

11 |

12 | jobs:

13 | build:

14 |

15 | runs-on: ubuntu-latest

16 | strategy:

17 | matrix:

18 | python-version: [3.6, 3.7, 3.8]

19 |

20 | steps:

21 | - uses: actions/checkout@v2

22 | - name: Set up Python ${{ matrix.python-version }}

23 | uses: actions/setup-python@v2

24 | with:

25 | python-version: ${{ matrix.python-version }}

26 | - name: Install dependencies

27 | run: |

28 | python -m pip install --upgrade pip

29 | pip install flake8 pytest

30 | pip install -r requirements.txt

31 | - name: Lint with flake8

32 | run: |

33 | # stop the build if there are Python syntax errors or undefined names

34 | flake8 . --count --select=E9,F63,F7,F82 --show-source --statistics

35 | - name: Test with pytest

36 | run: |

37 | pytest

38 | - name: Generate coverage report

39 | run: |

40 | pip install pytest-cov

41 | pytest --cov=./ --cov-report=xml

42 | - name: Upload coverage to Codecov

43 | uses: codecov/codecov-action@v1

44 | - name: Build

45 | run: |

46 | python setup.py install

47 |

--------------------------------------------------------------------------------

/setup.py:

--------------------------------------------------------------------------------

1 | from setuptools import setup, find_packages

2 |

3 | setup(

4 | name='nlprep',

5 | version='0.2.01',

6 | description='Download and pre-processing data for nlp tasks',

7 | url='https://github.com/voidful/nlprep',

8 | author='Voidful',

9 | author_email='voidful.stack@gmail.com',

10 | long_description=open("README.md", encoding="utf8").read(),

11 | long_description_content_type="text/markdown",

12 | keywords='nlp tfkit classification generation tagging deep learning machine reading',

13 | packages=find_packages(),

14 | install_requires=[

15 | # accessing files from S3 directly

16 | "boto3",

17 | # filesystem locks e.g. to prevent parallel downloads

18 | "filelock",

19 | # for downloading models over HTTPS

20 | "requests",

21 | # progress bars in model download and training scripts

22 | "tqdm >= 4.27",

23 | # Open Chinese convert (OpenCC) in pure Python.

24 | "opencc-python-reimplemented",

25 | # tool for handling textinquirer

26 | "nlp2 >= 1.8.27",

27 | # generate report

28 | "pandas-profiling >= 2.8.0",

29 | # dataset

30 | "datasets",

31 | # phrase segmentation

32 | "phraseg >= 1.1.8",

33 | # tokenizer support

34 | "transformers>=3.3.0",

35 | # input panel

36 | "inquirer",

37 | "BeautifulSoup4",

38 | "lxml"

39 | ],

40 | entry_points={

41 | 'console_scripts': ['nlprep=nlprep.main:main']

42 | },

43 | zip_safe=False,

44 | )

45 |

--------------------------------------------------------------------------------

/nlprep/test/test_middleformat.py:

--------------------------------------------------------------------------------

1 | import unittest

2 |

3 | from nlprep.middleformat import MiddleFormat

4 |

5 |

6 | class TestDataset(unittest.TestCase):

7 | DATASETINFO = {

8 | 'DATASET_FILE_MAP': {

9 | "dataset_name": "dataset path" # list for multiple detests in one tag

10 | },

11 | 'TASK': ["gen", "tag", "clas", "qa"],

12 | 'FULLNAME': "Dataset Full Name",

13 | 'REF': {"Some dataset reference": "useful link"},

14 | 'DESCRIPTION': 'Dataset description'

15 | }

16 |

17 | def testQA(self):

18 | mf = MiddleFormat(self.DATASETINFO)

19 | input, target = mf.convert_to_taskformat(input="okkkkkk", target=[0, 2], sentu_func=[])

20 | row = [input] + target if isinstance(target, list) else [input, target]

21 | print(row)

22 |

23 | def testNormalize(self):

24 | mf = MiddleFormat(self.DATASETINFO)

25 | norm_input, norm_target = mf._normalize_input_target("fas[SEP]df", "fasdf")

26 | self.assertTrue("[SEP]" in norm_input)

27 | norm_input, norm_target = mf._normalize_input_target("我[SEP]df", "fasdf")

28 | self.assertTrue(len(norm_input.split(" ")) == 3)

29 | norm_input, norm_target = mf._normalize_input_target("how [SEP] you", "fasdf")

30 | self.assertTrue(len(norm_input.split(" ")) == 3)

31 |

32 | def testConvertToTaskFormat(self):

33 | mf = MiddleFormat(self.DATASETINFO)

34 | mf.task = 'qa'

35 | _, norm_target = mf.convert_to_taskformat("how [SEP] you", [3, 4], sentu_func=[])

36 | self.assertTrue(isinstance(norm_target, list))

37 |

--------------------------------------------------------------------------------

/nlprep/datasets/tag_msraname/dataset.py:

--------------------------------------------------------------------------------

1 | from nlprep.middleformat import MiddleFormat

2 |

3 | DATASETINFO = {

4 | "DATASET_FILE_MAP": {

5 | "msraner": "https://raw.githubusercontent.com/InsaneLife/ChineseNLPCorpus/master/NER/MSRA/train1.txt",

6 | },

7 | "TASK": "tag",

8 | "FULLNAME": "MSRA simplified character corpora for WS and NER",

9 | "REF": {

10 | "Source": "https://github.com/InsaneLife/ChineseNLPCorpus",

11 | "Paper": "https://faculty.washington.edu/levow/papers/sighan06.pdf",

12 | },

13 | "DESCRIPTION": "50k+ of Chinese naming entities including Location, Organization, and Person",

14 | }

15 |

16 |

17 | def load(data):

18 | return data

19 |

20 |

21 | def toMiddleFormat(path):

22 | dataset = MiddleFormat(DATASETINFO)

23 | with open(path, encoding="utf8") as f:

24 | for sentence in list(f.readlines()):

25 | sent_input = []

26 | sent_target = []

27 | word_tags = sentence.split()

28 | for word_tag in word_tags:

29 | context, tag = word_tag.split("/")

30 | if tag == "nr" and len(context) > 1:

31 | sent_input.append(context[0])

32 | sent_target.append("B-PER")

33 | for char in context[1:]:

34 | sent_input.append(char)

35 | sent_target.append("I-PER")

36 | else:

37 | for char in context:

38 | sent_input.append(char)

39 | sent_target.append("O")

40 | dataset.add_data(sent_input, sent_target)

41 | return dataset

42 |

--------------------------------------------------------------------------------

/nlprep/datasets/gen_masklm/dataset.py:

--------------------------------------------------------------------------------

1 | import re

2 | import nlp2

3 | import random

4 |

5 | from tqdm import tqdm

6 |

7 | from nlprep.middleformat import MiddleFormat

8 |

9 | DATASETINFO = {

10 | 'TASK': "gen"

11 | }

12 |

13 |

14 | def load(data):

15 | return data

16 |

17 |

18 | def toMiddleFormat(path):

19 | from phraseg import Phraseg

20 | punctuations = r"[.﹑︰〈〉─《﹖﹣﹂﹁﹔!?。。"#$%&'()*+,﹐-/:;<=>@[\]^_`{|}~⦅⦆「」、、〃》「」『』【】〔〕〖〗〘〙〚〛〜〝〞〟〰〾〿–—‘’‛“”„‟…‧﹏..!\"#$%&()*+,\-.\:;<=>?@\[\]\\\/^_`{|}~]+"

21 | MASKTOKEN = "[MASK]"

22 | dataset = MiddleFormat(DATASETINFO, [MASKTOKEN])

23 | phraseg = Phraseg(path)

24 |

25 | for line in tqdm(nlp2.read_files_yield_lines(path)):

26 | line = nlp2.clean_all(line).strip()

27 |

28 | if len(nlp2.split_sentence_to_array(line)) > 1:

29 | phrases = list((phraseg.extract(sent=line, merge_overlap=False)).keys())

30 | reg = "[0-9]+|[a-zA-Z]+\'*[a-z]*|[\w]" + "|" + punctuations

31 | reg = "|".join(phrases) + "|" + reg

32 | input_sent = re.findall(reg, line, re.UNICODE)

33 | target_sent = re.findall(reg, line, re.UNICODE)

34 | for ind, word in enumerate(input_sent):

35 | prob = random.random()

36 | if prob <= 0.15 and len(word) > 0:

37 | input_sent[ind] = MASKTOKEN

38 | if len(input_sent) > 2 and len(target_sent) > 2 and len("".join(input_sent).strip()) > 2 and len(

39 | "".join(target_sent).strip()) > 2:

40 | dataset.add_data(nlp2.join_words_to_sentence(input_sent), nlp2.join_words_to_sentence(target_sent))

41 |

42 | return dataset

43 |

--------------------------------------------------------------------------------

/nlprep/datasets/tag_clner/dataset.py:

--------------------------------------------------------------------------------

1 | from nlprep.middleformat import MiddleFormat

2 |

3 | DATASETINFO = {

4 | 'DATASET_FILE_MAP': {

5 | "clner-train": "https://raw.githubusercontent.com/lancopku/Chinese-Literature-NER-RE-Dataset/master/ner/train.txt",

6 | "clner-test": "https://raw.githubusercontent.com/lancopku/Chinese-Literature-NER-RE-Dataset/master/ner/test.txt",

7 | "clner-validation": "https://raw.githubusercontent.com/lancopku/Chinese-Literature-NER-RE-Dataset/master/ner/validation.txt",

8 | },

9 | 'TASK': "tag",

10 | 'FULLNAME': "Chinese-Literature-NER-RE-Dataset",

11 | 'REF': {"Source": "https://github.com/lancopku/Chinese-Literature-NER-RE-Dataset",

12 | "Paper": "https://arxiv.org/pdf/1711.07010.pdf"},

13 | 'DESCRIPTION': 'We provide a new Chinese literature dataset for Named Entity Recognition (NER) and Relation Extraction (RE). We define 7 entity tags and 9 relation tags based on several available NER and RE datasets but with some additional categories specific to Chinese literature text. '

14 | }

15 |

16 |

17 | def load(data):

18 | return data

19 |

20 |

21 | def toMiddleFormat(path):

22 | dataset = MiddleFormat(DATASETINFO)

23 | with open(path, encoding='utf8') as f:

24 | sent_input = []

25 | sent_target = []

26 | for i in list(f.readlines()):

27 | i = i.strip()

28 | if len(i) > 1:

29 | sent, tar = i.split(' ')

30 | sent_input.append(sent)

31 | sent_target.append(tar)

32 | else:

33 | dataset.add_data(sent_input, sent_target)

34 | sent_input = []

35 | sent_target = []

36 | return dataset

37 |

--------------------------------------------------------------------------------

/nlprep/datasets/gen_storyend/dataset.py:

--------------------------------------------------------------------------------

1 | from nlprep.middleformat import MiddleFormat

2 |

3 | DATASETINFO = {

4 | 'DATASET_FILE_MAP': {

5 | "storyend-train": ["https://raw.githubusercontent.com/JianGuanTHU/StoryEndGen/master/data/train.post",

6 | "https://raw.githubusercontent.com/JianGuanTHU/StoryEndGen/master/data/train.response"],

7 | "storyend-test": ["https://raw.githubusercontent.com/JianGuanTHU/StoryEndGen/master/data/test.post",

8 | "https://raw.githubusercontent.com/JianGuanTHU/StoryEndGen/master/data/test.response"],

9 | "storyend-val": ["https://raw.githubusercontent.com/JianGuanTHU/StoryEndGen/master/data/val.post",

10 | "https://raw.githubusercontent.com/JianGuanTHU/StoryEndGen/master/data/val.response"]

11 | },

12 | 'TASK': "gen",

13 | 'FULLNAME': "Five-sentence stories from ROCStories corpus ",

14 | 'REF': {"Source": "https://github.com/JianGuanTHU/StoryEndGen",

15 | "ROCStories corpus": "http://cs.rochester.edu/nlp/rocstories/"},

16 | 'DESCRIPTION': 'This corpus is unique in two ways: (1) it captures a rich set of causal and temporal commonsense relations between daily events, and (2) it is a high quality collection of everyday life stories that can also be used for story generation.'

17 | }

18 |

19 |

20 | def load(data):

21 | return data

22 |

23 |

24 | def toMiddleFormat(paths):

25 | dataset = MiddleFormat(DATASETINFO)

26 | with open(paths[0], 'r', encoding='utf8', errors='ignore') as posts:

27 | with open(paths[1], 'r', encoding='utf8', errors='ignore') as resps:

28 | for p, r in zip(posts.readlines(), resps.readlines()):

29 | p = p.replace('\t', " [SEP] ").replace('\n', "")

30 | r = r.replace('\n', "")

31 | dataset.add_data(p, r)

32 | return dataset

33 |

--------------------------------------------------------------------------------

/nlprep/datasets/gen_lcccbase/dataset.py:

--------------------------------------------------------------------------------

1 | import json

2 | import os

3 | import re

4 |

5 | from nlprep.file_utils import cached_path

6 | from nlprep.middleformat import MiddleFormat

7 | import nlp2

8 |

9 | DATASETINFO = {

10 | 'DATASET_FILE_MAP': {

11 | "lccc-base": "https://coai-dataset.oss-cn-beijing.aliyuncs.com/LCCC-base.zip"

12 | },

13 | 'TASK': "gen",

14 | 'FULLNAME': "LCCC(Large-scale Cleaned Chinese Conversation) base",

15 | 'REF': {"paper": "https://arxiv.org/abs/2008.03946",

16 | "download source": "https://github.com/thu-coai/CDial-GPT#Dataset-zh"},

17 | 'DESCRIPTION': '我們所提供的數據集LCCC(Large-scale Cleaned Chinese Conversation)主要包含兩部分: LCCC-base 和 LCCC-large. 我們設計了一套嚴格的數據過濾流程來確保該數據集中對話數據的質量。這一數據過濾流程中包括一系列手工規則以及若干基於機器學習算法所構建的分類器。我們所過濾掉的噪聲包括:髒字臟詞、特殊字符、顏表情、語法不通的語句、上下文不相關的對話等。該數據集的統計信息如下表所示。其中,我們將僅包含兩個語句的對話稱為“單輪對話”,我們將包含兩個以上語句的對話稱為“多輪對話”。'

18 | }

19 |

20 |

21 | def load(data_path):

22 | import zipfile

23 | cache_path = cached_path(data_path)

24 | cache_dir = os.path.abspath(os.path.join(cache_path, os.pardir))

25 | data_folder = os.path.join(cache_dir, 'lccc_data')

26 | if nlp2.is_dir_exist(data_folder) is False:

27 | with zipfile.ZipFile(cache_path, 'r') as zip_ref:

28 | zip_ref.extractall(data_folder)

29 | path = [f for f in nlp2.get_files_from_dir(data_folder) if

30 | '.json' in f]

31 | return path

32 |

33 |

34 | def toMiddleFormat(path):

35 | dataset = MiddleFormat(DATASETINFO)

36 | with open(path[0], encoding='utf8') as f:

37 | pairs = json.load(f)

38 | for pair in pairs:

39 | input_s = []

40 | for p in pair[:-1]:

41 | input_s.append(nlp2.join_words_to_sentence(nlp2.split_sentence_to_array(p)))

42 | dataset.add_data(" [SEP] ".join(input_s),

43 | nlp2.join_words_to_sentence(nlp2.split_sentence_to_array(pair[-1])))

44 | return dataset

45 |

--------------------------------------------------------------------------------

/nlprep/datasets/gen_sumcnndm/dataset.py:

--------------------------------------------------------------------------------

1 | import os

2 | import re

3 |

4 | from nlprep.file_utils import cached_path

5 | from nlprep.middleformat import MiddleFormat

6 | import nlp2

7 |

8 | DATASETINFO = {

9 | 'DATASET_FILE_MAP': {

10 | "cnndm-train": "train.txt",

11 | "cnndm-test": "test.txt",

12 | "cnndm-val": "val.txt"

13 | },

14 | 'TASK': "gen",

15 | 'FULLNAME': "CNN/DM Abstractive Summary Dataset",

16 | 'REF': {"Source": "https://github.com/harvardnlp/sent-summary"},

17 | 'DESCRIPTION': 'Abstractive Text Summarization on CNN / Daily Mail'

18 | }

19 |

20 |

21 | def load(data):

22 | import tarfile

23 | cache_path = cached_path("https://s3.amazonaws.com/opennmt-models/Summary/cnndm.tar.gz")

24 | cache_dir = os.path.abspath(os.path.join(cache_path, os.pardir))

25 | data_folder = os.path.join(cache_dir, 'cnndm_data')

26 | if nlp2.is_dir_exist(data_folder) is False:

27 | tar = tarfile.open(cache_path, "r:gz")

28 | tar.extractall(data_folder)

29 | tar.close()

30 | return [os.path.join(data_folder, data + ".src"), os.path.join(data_folder, data + ".tgt.tagged")]

31 |

32 |

33 | REMAP = {"-lrb-": "(", "-rrb-": ")", "-lcb-": "{", "-rcb-": "}",

34 | "-lsb-": "[", "-rsb-": "]", "``": '"', "''": '"'}

35 |

36 |

37 | def clean_text(text):

38 | text = re.sub(

39 | r"-lrb-|-rrb-|-lcb-|-rcb-|-lsb-|-rsb-|``|''",

40 | lambda m: REMAP.get(m.group()), text)

41 | return nlp2.clean_all(text.strip().replace('``', '"').replace('\'\'', '"').replace('`', '\''))

42 |

43 |

44 | def toMiddleFormat(path):

45 | dataset = MiddleFormat(DATASETINFO)

46 | with open(path[0], 'r', encoding='utf8') as src:

47 | with open(path[1], 'r', encoding='utf8') as tgt:

48 | for ori, sum in zip(src, tgt):

49 | ori = clean_text(ori)

50 | sum = clean_text(sum)

51 | dataset.add_data(ori, sum)

52 | return dataset

53 |

--------------------------------------------------------------------------------

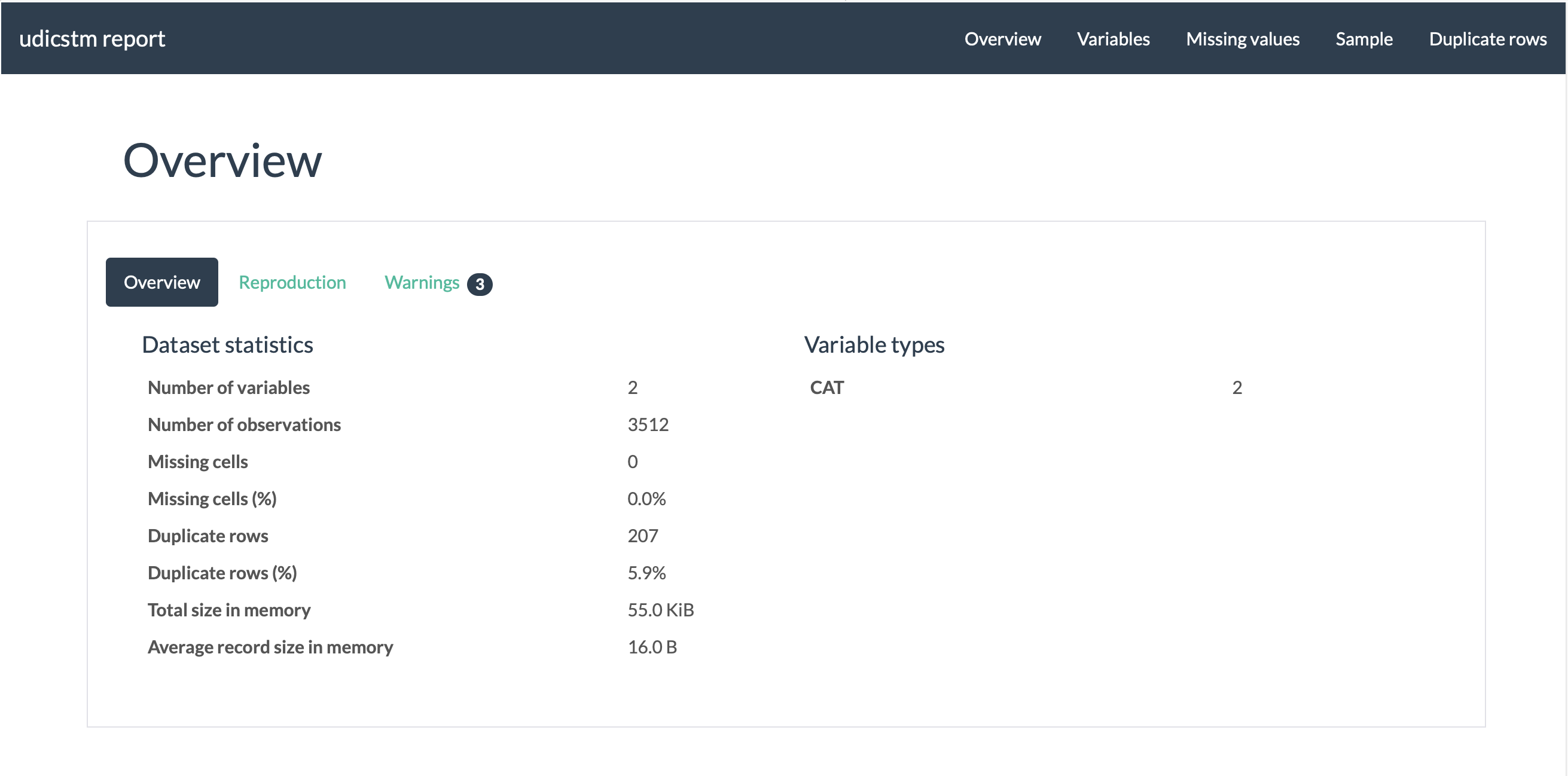

/docs/usage.md:

--------------------------------------------------------------------------------

1 | #Usage

2 | ## Overview

3 | ```

4 | $ nlprep

5 | arguments:

6 | --dataset which dataset to use

7 | --outdir processed result output directory

8 |

9 | optional arguments:

10 | -h, --help show this help message and exit

11 | --util data preprocessing utility, multiple utility are supported

12 | --cachedir dir for caching raw dataset

13 | --infile local dataset path

14 | --report generate a html statistics report

15 | ```

16 | ## Python

17 | ```python

18 | import os

19 | import nlprep

20 | datasets = nlprep.list_all_datasets()

21 | ds = nlprep.load_dataset(datasets[0])

22 | ds_info = ds.DATASETINFO

23 | for ds_name, mf in nlprep.convert_middleformat(ds).items():

24 | print(ds_name, ds_info, mf.dump_list()[:3])

25 | profile = mf.get_report(ds_name)

26 | profile.to_file(os.path.join('./', ds_name + "_report.html"))

27 | ```

28 |

29 | ## Example

30 | Download udicstm dataset that

31 | ```bash

32 | nlprep --dataset clas_udicstm --outdir sentiment --util splitData --report

33 | ```

34 | Show result file

35 | ```text

36 | !head -10 ./sentiment/udicstm_valid.csv

37 |

38 | 會生孩子不等於會當父母,這可能讓許多人無法接受,不少父母打着“愛孩子”的旗號做了許多阻礙孩子心智發展的事,甚至傷害了孩子卻還不知道,反而怪孩子。看了這本書我深受教育,我慶幸在寶寶才七個月就看到了這本書,而不是七歲或者十七歲,可能會讓我在教育孩子方面少走許多彎路。非常感謝尹建莉老師,希望她再寫出更好的書。也希望衆多的年輕父母好好看看這本書。我已向許多朋友推薦此書。,positive

39 | 第一,一插入無線上網卡(usb接口)就自動關機;第二,待機時間沒有宣稱的那麼長久;第三,比較容易沾手印。,negative

40 | "小巧實用,外觀好看;而且系統盤所在的區和其它區已經分開,儘管只有兩個區,不過已經足夠了",positive

41 | 特價房非常小 四步走到房間牆角 基本是用不隔音的板材隔出來的 隔壁的電視聲音 還有臨近房間夜晚男女做事的呻吟和同浴的聲音都能很清楚的聽見 簡直就是網友見面的炮房 房間裏空氣質量很差 且無法通過換氣排出 攜程價格與門市價相同 主要考慮辦事地點在附近 纔去住的,negative

42 | 在同等價位上來講配置不錯,品牌知名度高,品質也有保證。商務機型,外觀一般,按鍵手感很好,戴爾的電源適配器造型很好,也比較輕巧。,positive

43 | 一般的書。。。。。。。。。。。。。,negative

44 | "有點重,是個遺憾。能買這麼小的筆記本,就是希望可以方便攜帶。尺寸是OK了,要是再輕薄些就更完美了。沒有光驅的說,所以華碩有待改善。然後就是外殼雖然是烤漆的,很漂亮(請勿觸摸),因爲一觸摸就會留下指紋",negative

45 | 自帶了一個白色的包包,不用額外買了,positive

46 | "剛收到,發現鍵盤有些鬆,觸摸屏太難按了,最主要的是開機的時候打開和關上光驅導致系統藍屏,不知道是不是這個原因 , 其他的到目前爲止正常.",negative

47 | "酒店地理位置不錯,門口時高速和輕軌.",negative

48 | ```

49 | Report will be at `sentiment/udicstm_valid_report.html`

50 |

51 |

52 |

--------------------------------------------------------------------------------

/nlprep/datasets/tag_cged/dataset.py:

--------------------------------------------------------------------------------

1 | from bs4 import BeautifulSoup

2 |

3 | from nlprep.middleformat import MiddleFormat

4 |

5 | DATASETINFO = {

6 | 'DATASET_FILE_MAP': {

7 | "cged": [

8 | "https://raw.githubusercontent.com/voidful/ChineseErrorDataset/master/CGED/CGED16_HSK_TrainingSet.xml",

9 | "https://raw.githubusercontent.com/voidful/ChineseErrorDataset/master/CGED/CGED17_HSK_TrainingSet.xml",

10 | "https://raw.githubusercontent.com/voidful/ChineseErrorDataset/master/CGED/CGED18_HSK_TrainingSet.xml"]

11 | },

12 | 'TASK': "tag",

13 | 'FULLNAME': "中文語法錯誤診斷 - Chinese Grammatical Error Diagnosis",

14 | 'REF': {"Project Page": "http://nlp.ee.ncu.edu.tw/resource/cged.html"},

15 | 'DESCRIPTION': 'The grammatical errors are broadly categorized into 4 error types: word ordering, redundant, missing, and incorrect selection of linguistic components (also called PADS error types, denoting errors of Permutation, Addition, Deletion, and Selection, correspondingly).'

16 | }

17 |

18 |

19 | def load(data):

20 | return data

21 |

22 |

23 | def toMiddleFormat(paths):

24 | dataset = MiddleFormat(DATASETINFO)

25 | for path in paths:

26 | soup = BeautifulSoup(open(path, 'r', encoding='utf8'), features="lxml")

27 | temp = soup.root.find_all('doc')

28 |

29 | for i in temp:

30 | tag_s = i.find('text').string

31 | error_temp = i.find_all('error')

32 |

33 | tag_s = tag_s.strip(' ')

34 | tag_s = tag_s.strip('\n')

35 |

36 | if (len(tag_s)) >= 2:

37 | try:

38 | empty_tag = list()

39 |

40 | for i in range(len(tag_s)):

41 | empty_tag.append('O')

42 |

43 | for e in error_temp:

44 | for i in range(int(e['start_off']), int(e['end_off'])):

45 | empty_tag[i] = str(e['type'])

46 | except:

47 | pass

48 |

49 | if len(tag_s) == len(empty_tag):

50 | dataset.add_data(tag_s, empty_tag)

51 |

52 | return dataset

53 |

--------------------------------------------------------------------------------

/CONTRIBUTING.md:

--------------------------------------------------------------------------------

1 | # Contributing to nlprep

2 | We love your input! We want to make contributing to this project as easy and transparent as possible, whether it's:

3 |

4 | - Reporting a bug

5 | - Discussing the current state of the code

6 | - Submitting a fix

7 | - Proposing new features

8 | - Becoming a maintainer

9 |

10 | ## We Develop with Github

11 | We use github to host code, to track issues and feature requests, as well as accept pull requests.

12 |

13 | ## We Use [Github Flow](https://guides.github.com/introduction/flow/index.html), So All Code Changes Happen Through Pull Requests

14 | Pull requests are the best way to propose changes to the codebase (we use [Github Flow](https://guides.github.com/introduction/flow/index.html)). We actively welcome your pull requests:

15 |

16 | 1. Fork the repo and create your branch from `master`.

17 | 2. If you've added code that should be tested, add tests.

18 | 3. If you've changed APIs, update the documentation.

19 | 4. Ensure the test suite passes.

20 | 5. Make sure your code lints.

21 | 6. Issue that pull request!

22 |

23 | ## Any contributions you make will be under the Apache 2.0 Software License

24 | In short, when you submit code changes, your submissions are understood to be under the same [Apache 2.0 License](https://choosealicense.com/licenses/apache-2.0/) that covers the project. Feel free to contact the maintainers if that's a concern.

25 |

26 | ## Report bugs using Github's [issues](https://github.com/voidful/nlprep/issues)

27 | We use GitHub issues to track public bugs. Report a bug by [opening a new issue](); it's that easy!

28 |

29 | ## Write bug reports with detail, background, and sample code

30 | **Great Bug Reports** tend to have:

31 |

32 | - A quick summary and/or background

33 | - Steps to reproduce

34 | - Be specific!

35 | - Give sample code if you can.

36 | - What you expected would happen

37 | - What actually happens

38 | - Notes (possibly including why you think this might be happening, or stuff you tried that didn't work)

39 |

40 | People *love* thorough bug reports. I'm not even kidding.

41 |

42 | ## License

43 | By contributing, you agree that your contributions will be licensed under its Apache 2.0 License.

44 |

45 |

--------------------------------------------------------------------------------

/mkdocs.yml:

--------------------------------------------------------------------------------

1 | # Project information

2 | site_name: nlprep

3 | site_description: 🍳 dataset tool for many natural language processing task

4 | site_author: Voidful

5 | site_url: https://github.com/voidful/nlprep

6 | repo_name: nlprep

7 | repo_url: https://github.com/voidful/nlprep

8 | copyright: Copyright © Voidful

9 |

10 | nav:

11 | - Home: index.md

12 | - Installation: installation.md

13 | - Usage: usage.md

14 | - Datasets: datasets.md

15 | - Utilities: utility.md

16 |

17 | plugins:

18 | - search

19 | - mkdocstrings:

20 | default_handler: python

21 | handlers:

22 | python:

23 | setup_commands:

24 | - import sys

25 | - sys.path.append("docs")

26 | watch:

27 | - nlprep

28 |

29 | theme:

30 | name: material

31 | language: en

32 | palette:

33 | primary: blue grey

34 | accent: blue grey

35 | font:

36 | text: Roboto

37 | code: Roboto Mono

38 | logo: img/nlprep-icon.png

39 | favicon: img/nlprep-icon.png

40 |

41 | # Extras

42 | extra:

43 | social:

44 | - icon: fontawesome/brands/github-alt

45 | link: https://github.com/voidful/nlprep

46 | - icon: fontawesome/brands/twitter

47 | link: https://twitter.com/voidful_stack

48 | - icon: fontawesome/brands/linkedin

49 | link: https://www.linkedin.com/in/voidful/

50 |

51 | # Google Analytics

52 | google_analytics:

53 | - UA-127062540-4

54 | - auto

55 |

56 | # Extensions

57 | markdown_extensions:

58 | - markdown.extensions.admonition

59 | - markdown.extensions.attr_list

60 | - markdown.extensions.codehilite:

61 | guess_lang: false

62 | - markdown.extensions.def_list

63 | - markdown.extensions.footnotes

64 | - markdown.extensions.meta

65 | - markdown.extensions.toc:

66 | permalink: true

67 | - pymdownx.arithmatex

68 | - pymdownx.betterem:

69 | smart_enable: all

70 | - pymdownx.caret

71 | - pymdownx.critic

72 | - pymdownx.details

73 | - pymdownx.emoji:

74 | emoji_index: !!python/name:materialx.emoji.twemoji

75 | emoji_generator: !!python/name:materialx.emoji.to_svg

76 | # - pymdownx.highlight:

77 | # linenums_style: pymdownx-inline

78 | - pymdownx.inlinehilite

79 | - pymdownx.keys

80 | - pymdownx.magiclink:

81 | repo_url_shorthand: true

82 | user: squidfunk

83 | repo: mkdocs-material

84 | - pymdownx.mark

85 | - pymdownx.smartsymbols

86 | - pymdownx.snippets:

87 | check_paths: true

88 | - pymdownx.superfences

89 | - pymdownx.tabbed

90 | - pymdownx.tasklist:

91 | custom_checkbox: true

92 | - pymdownx.tilde

93 |

--------------------------------------------------------------------------------

/nlprep/test/test_pairutil.py:

--------------------------------------------------------------------------------

1 | import importlib

2 | import os

3 | import unittest

4 | import nlprep

5 |

6 |

7 | class TestDataset(unittest.TestCase):

8 |

9 | def testReverse(self):

10 | pair_util = nlprep.utils.pairslevel

11 | dummyPath = "path"

12 | dummyPair = [["a", "b"]]

13 | rev_pair = pair_util.reverse(dummyPath, dummyPair)[0][1]

14 | dummyPair.reverse()

15 | self.assertTrue(rev_pair == dummyPair)

16 |

17 | def testSplitData(self):

18 | pair_util = nlprep.utils.pairslevel

19 | dummyPath = "path"

20 | dummyPair = [[1, 1], [2, 2], [3, 3], [4, 4], [5, 5], [6, 6], [7, 7], [8, 8], [9, 9], [10, 10]]

21 |

22 | splited = pair_util.splitData(dummyPath, dummyPair, train_ratio=0.7, test_ratio=0.2, valid_ratio=0.1)

23 | print(splited)

24 | for s in splited:

25 | if "train" in s[0]:

26 | self.assertTrue(len(s[1]) == 7)

27 | elif "test" in s[0]:

28 | self.assertTrue(len(s[1]) == 2)

29 | elif "valid" in s[0]:

30 | self.assertTrue(len(s[1]) == 1)

31 |

32 | def testSetSepToken(self):

33 | pair_util = nlprep.utils.pairslevel

34 | dummyPath = "path"

35 | dummyPair = [["a [SEP] b", "c"]]

36 | processed = pair_util.setSepToken(dummyPath, dummyPair, sep_token="QAQ")

37 | print(processed[0][1][0])

38 | self.assertTrue("QAQ" in processed[0][1][0][0])

39 |

40 | def testSetMaxLen(self):

41 | pair_util = nlprep.utils.pairslevel

42 | dummyPath = "path"

43 | dummyPair = [["a" * 513, "c"]]

44 | processed = pair_util.setMaxLen(dummyPath, dummyPair, maxlen=512, tokenizer="char",

45 | with_target=False, handle_over='remove')

46 | self.assertTrue(0 == len(processed[0][1]))

47 | processed = pair_util.setMaxLen(dummyPath, dummyPair, maxlen=512, tokenizer="char",

48 | with_target=False, handle_over='slice')

49 | self.assertTrue(len(processed[0][1][0][0]) < 512)

50 | processed = pair_util.setMaxLen(dummyPath, dummyPair, maxlen=514, tokenizer="char",

51 | with_target=True, handle_over='remove')

52 | self.assertTrue(0 == len(processed[0][1]))

53 |

54 | def testsplitDataIntoPart(self):

55 | pair_util = nlprep.utils.pairslevel

56 | dummyPath = "path"

57 | dummyPair = [["a", "b"]] * 10

58 | processed = pair_util.splitDataIntoPart(dummyPath, dummyPair, part=4)

59 | print(processed)

60 |

--------------------------------------------------------------------------------

/docs/index.md:

--------------------------------------------------------------------------------

1 |

2 |

3 |  4 |

4 |

5 |

6 |

7 |

8 |  9 |

10 |

11 |

9 |

10 |

11 |  12 |

13 |

14 |

12 |

13 |

14 |  15 |

16 |

17 |

15 |

16 |

17 |  18 |

19 |

18 |

19 |

20 |

21 | ## Feature

22 |

23 | - handle over 100 dataset

24 | - generate statistic report about processed dataset

25 | - support many pre-processing ways

26 | - Provide a panel for entering your parameters at runtime

27 | - easy to adapt your own dataset and pre-processing utility

28 |

29 | ## Online Explorer

30 | [https://voidful.github.io/NLPrep-Datasets/](https://voidful.github.io/NLPrep-Datasets/)

31 |

32 | ## Quick Start

33 | ### Installing via pip

34 | ```bash

35 | pip install nlprep

36 | ```

37 | ### get one of the dataset

38 | ```bash

39 | nlprep --dataset clas_udicstm --outdir sentiment --util

40 | ```

41 |

42 | **You can also try nlprep in Google Colab: [](https://colab.research.google.com/drive/1EfVXa0O1gtTZ1xEAPDyvXMnyjcHxO7Jk?usp=sharing)**

43 |

44 | ## Overview

45 | ```

46 | $ nlprep

47 | arguments:

48 | --dataset which dataset to use

49 | --outdir processed result output directory

50 |

51 | optional arguments:

52 | -h, --help show this help message and exit

53 | --util data preprocessing utility, multiple utility are supported

54 | --cachedir dir for caching raw dataset

55 | --infile local dataset path

56 | --report generate a html statistics report

57 | ```

58 |

59 | ## Contributing

60 | Thanks for your interest.There are many ways to contribute to this project. Get started [here](https://github.com/voidful/nlprep/blob/master/CONTRIBUTING.md).

61 |

62 | ## License

63 |

64 | * [License](https://github.com/voidful/nlprep/blob/master/LICENSE)

65 |

66 | ## Icons reference

67 | Icons modify from Darius Dan from www.flaticon.com

68 | Icons modify from Freepik from www.flaticon.com

69 |

70 |

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 |

2 |

3 |  4 |

4 |

5 |

6 |

7 |

8 |  9 |

10 |

11 |

9 |

10 |

11 |  12 |

13 |

14 |

12 |

13 |

14 |  15 |

16 |

17 |

15 |

16 |

17 |  18 |

19 |

18 |

19 |

20 |

21 | ## Feature

22 | - handle over 100 dataset

23 | - generate statistic report about processed dataset

24 | - support many pre-processing ways

25 | - Provide a panel for entering your parameters at runtime

26 | - easy to adapt your own dataset and pre-processing utility

27 |

28 | # Online Explorer

29 | [https://voidful.github.io/NLPrep-Datasets/](https://voidful.github.io/NLPrep-Datasets/)

30 |

31 | # Documentation

32 | Learn more from the [docs](https://voidful.github.io/NLPrep/).

33 |

34 | ## Quick Start

35 | ### Installing via pip

36 | ```bash

37 | pip install nlprep

38 | ```

39 | ### get one of the dataset

40 | ```bash

41 | nlprep --dataset clas_udicstm --outdir sentiment

42 | ```

43 |

44 | **You can also try nlprep in Google Colab: [](https://colab.research.google.com/drive/1EfVXa0O1gtTZ1xEAPDyvXMnyjcHxO7Jk?usp=sharing)**

45 |

46 | ## Overview

47 | ```

48 | $ nlprep

49 | arguments:

50 | --dataset which dataset to use

51 | --outdir processed result output directory

52 |

53 | optional arguments:

54 | -h, --help show this help message and exit

55 | --util data preprocessing utility, multiple utility are supported

56 | --cachedir dir for caching raw dataset

57 | --infile local dataset path

58 | --report generate a html statistics report

59 | ```

60 |

61 | ## Contributing

62 | Thanks for your interest.There are many ways to contribute to this project. Get started [here](https://github.com/voidful/nlprep/blob/master/CONTRIBUTING.md).

63 |

64 | ## License

65 |

66 | * [License](https://github.com/voidful/nlprep/blob/master/LICENSE)

67 |

68 | ## Icons reference

69 | Icons modify from Darius Dan from www.flaticon.com

70 | Icons modify from Freepik from www.flaticon.com

71 |

--------------------------------------------------------------------------------

/nlprep/main.py:

--------------------------------------------------------------------------------

1 | import argparse

2 | import importlib

3 | import datasets

4 |

5 | from nlprep.file_utils import cached_path

6 | from nlprep.utils.sentlevel import *

7 | from nlprep.utils.pairslevel import *

8 |

9 | import os

10 |

11 | os.environ["PYTHONIOENCODING"] = "utf-8"

12 |

13 |

14 | def list_all_datasets(ignore_list=[]):

15 | dataset_dir = os.path.dirname(__file__) + '/datasets'

16 | return list(filter(

17 | lambda x: os.path.isdir(os.path.join(dataset_dir, x)) and '__pycache__' not in x and x not in ignore_list,

18 | os.listdir(dataset_dir)))

19 |

20 |

21 | def list_all_utilities():

22 | return list(SentUtils.keys()) + list(PairsUtils.keys())

23 |

24 |

25 | def load_dataset(dataset_name):

26 | return importlib.import_module('.' + dataset_name, 'nlprep.datasets')

27 |

28 |

29 | def load_utilities(util_name_list, disable_input_panel=False):

30 | sent_utils = [SentUtils[i] for i in util_name_list if i in SentUtils]

31 | pairs_utils = [PairsUtils[i] for i in util_name_list if i in PairsUtils]

32 | # handle utility argument input

33 | for util_list in [pairs_utils, sent_utils]:

34 | for ind, util in enumerate(util_list):

35 | util_arg = nlp2.function_argument_panel(util, disable_input_panel=disable_input_panel)

36 | util_list[ind] = [util, util_arg]

37 | return sent_utils, pairs_utils

38 |

39 |

40 | def convert_middleformat(dataset, input_file_map=None, cache_dir=None, dataset_arg={}):

41 | sets = {}

42 | dataset_map = input_file_map if input_file_map else dataset.DATASETINFO['DATASET_FILE_MAP']

43 | for map_name, map_dataset in dataset_map.items():

44 | loaded_dataset = dataset.load(map_dataset)

45 | if isinstance(loaded_dataset, list):

46 | for i, path in enumerate(loaded_dataset):

47 | loaded_dataset[i] = cached_path(path, cache_dir=cache_dir)

48 | dataset_path = loaded_dataset

49 | elif isinstance(loaded_dataset, datasets.arrow_dataset.Dataset):

50 | dataset_path = loaded_dataset

51 | else:

52 | dataset_path = cached_path(loaded_dataset, cache_dir=cache_dir)

53 | sets[map_name] = dataset.toMiddleFormat(dataset_path, **dataset_arg)

54 | return sets

55 |

56 |

57 | def main():

58 | parser = argparse.ArgumentParser()

59 | parser.add_argument("--dataset", type=str,

60 | choices=list_all_datasets(),

61 | required=True)

62 | parser.add_argument("--infile", type=str)

63 | parser.add_argument("--outdir", type=str, required=True)

64 | parser.add_argument("--cachedir", type=str)

65 | parser.add_argument("--report", action='store_true', help='dataset statistic report')

66 | parser.add_argument("--util", type=str, default=[], nargs='+',

67 | choices=list_all_utilities())

68 | global arg

69 | arg = parser.parse_args()

70 |

71 | # creat dir if not exist

72 | nlp2.get_dir_with_notexist_create(arg.outdir)

73 |

74 | # load dataset and utility

75 | dataset = load_dataset(arg.dataset)

76 | sent_utils, pairs_utils = load_utilities(arg.util)

77 |

78 | # handle local file1

79 | if arg.infile:

80 | fname = nlp2.get_filename_from_path(arg.infile)

81 | input_map = {

82 | fname: arg.infile

83 | }

84 | else:

85 | input_map = None

86 |

87 | print("Start processing data...")

88 | dataset_arg = nlp2.function_argument_panel(dataset.toMiddleFormat, ignore_empty=True)

89 | for k, middleformat in convert_middleformat(dataset, input_file_map=input_map, cache_dir=arg.cachedir,

90 | dataset_arg=dataset_arg).items():

91 | middleformat.dump_csvfile(os.path.join(arg.outdir, k), pairs_utils, sent_utils)

92 | if arg.report:

93 | profile = middleformat.get_report(k)

94 | profile.to_file(os.path.join(arg.outdir, k + "_report.html"))

95 |

96 |

97 | if __name__ == "__main__":

98 | main()

99 |

--------------------------------------------------------------------------------

/nlprep/datasets/qa_zh/dataset.py:

--------------------------------------------------------------------------------

1 | import json

2 |

3 | import nlp2

4 |

5 | from nlprep.middleformat import MiddleFormat

6 |

7 | DATASETINFO = {

8 | 'DATASET_FILE_MAP': {

9 | "drcd-train": "https://raw.githubusercontent.com/voidful/zh_mrc/master/drcd/DRCD_training.json",

10 | "drcd-test": "https://raw.githubusercontent.com/voidful/zh_mrc/master/drcd/DRCD_test.json",

11 | "drcd-dev": "https://raw.githubusercontent.com/voidful/zh_mrc/master/drcd/DRCD_dev.json",

12 | "cmrc-train": "https://raw.githubusercontent.com/voidful/zh_mrc/master/cmrc2018/train.json",

13 | "cmrc-test": "https://raw.githubusercontent.com/voidful/zh_mrc/master/cmrc2018/test.json",

14 | "cmrc-dev": "https://raw.githubusercontent.com/voidful/zh_mrc/master/cmrc2018/dev.json",

15 | "cail-train": "https://raw.githubusercontent.com/voidful/zh_mrc/master/cail/big_train_data.json",

16 | "cail-test": "https://raw.githubusercontent.com/voidful/zh_mrc/master/cail/test_ground_truth.json",

17 | "cail-dev": "https://raw.githubusercontent.com/voidful/zh_mrc/master/cail/dev_ground_truth.json",

18 | "combine-train": ["https://raw.githubusercontent.com/voidful/zh_mrc/master/drcd/DRCD_training.json",

19 | "https://raw.githubusercontent.com/voidful/zh_mrc/master/cmrc2018/train.json",

20 | "https://raw.githubusercontent.com/voidful/zh_mrc/master/cail/big_train_data.json"],

21 | "combine-test": ["https://raw.githubusercontent.com/voidful/zh_mrc/master/drcd/DRCD_test.json",

22 | "https://raw.githubusercontent.com/voidful/zh_mrc/master/drcd/DRCD_dev.json",

23 | "https://raw.githubusercontent.com/voidful/zh_mrc/master/cmrc2018/test.json",

24 | "https://raw.githubusercontent.com/voidful/zh_mrc/master/cmrc2018/dev.json",

25 | "https://raw.githubusercontent.com/voidful/zh_mrc/master/cail/test_ground_truth.json",

26 | "https://raw.githubusercontent.com/voidful/zh_mrc/master/cail/dev_ground_truth.json"

27 | ]

28 | },

29 | 'TASK': "qa",

30 | 'FULLNAME': "多個抽取式的中文閱讀理解資料集",

31 | 'REF': {"DRCD Source": "https://github.com/DRCKnowledgeTeam/DRCD",

32 | "CMRC2018 Source": "https://github.com/ymcui/cmrc2018",

33 | "CAIL2019 Source": "https://github.com/iFlytekJudiciary/CAIL2019_CJRC"},

34 | 'DESCRIPTION': '有DRCD/CMRC/CAIL三個資料集'

35 | }

36 |

37 |

38 | def load(data):

39 | return data

40 |

41 |

42 | def toMiddleFormat(paths):

43 | dataset = MiddleFormat(DATASETINFO)

44 | if not isinstance(paths, list):

45 | paths = [paths]

46 |

47 | for path in paths: