7 | detect stages in video automatically 8 |

9 | 10 | --- 11 | 12 | 13 | | Type | Status | 14 | |----------------------|----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------| 15 | | package version | [](https://badge.fury.io/py/stagesepx) | 16 | | python version |  | 17 | | auto test |  | 18 | | code maintainability | [](https://codeclimate.com/github/williamfzc/stagesepx/maintainability) | 19 | | code coverage | [](https://codecov.io/gh/williamfzc/stagesepx) | 20 | | code style | [](https://github.com/psf/black) | 21 | | stat | [](https://pepy.tech/project/stagesepx) [](https://pepy.tech/project/stagesepx) [](https://pepy.tech/project/stagesepx) | 22 | 23 | 24 | --- 25 | 26 | > 2022/08/13:在 0.18.0 之后,ffmpeg 以 [imageio_ffmpeg](https://github.com/imageio/imageio-ffmpeg) 方式内置(请自行评估其LICENSE影响)。此版本为解决opencv版本及M1芯片的限制,并不再需要额外安装ffmpeg。详见 [#178](https://github.com/williamfzc/stagesepx/issues/178)。 27 | > 28 | > 2022/03/30:官方不维护任何诸如微信群、QQ群的多人群组,所有信息请通过issue公开交流。不需要任何捐赠支持,如遇到涉及金钱相关的信息请不要相信。 29 | > 30 | > 2021/12/15:在 0.16.0 之后,stagesepx 将开始提供部分功能测试的支持,详见 [#158](https://github.com/williamfzc/stagesepx/issues/158) 31 | > 32 | > 2020/05/21:目前,该项目已经达到一个较为稳定的状态,并顺利在多家头部公司、团队落地,作为正式工具存在。Bug跟踪与建议请优先通过 [issue](https://github.com/williamfzc/stagesepx/issues) 联系我,感谢所有支持过这个项目的人。欢迎有心优化的同学、落地成功的团队共同建设:) 33 | 34 | --- 35 | 36 | > [English README here](./README_en.md) 37 | 38 | 这段视频展示了一个应用的完整启动过程: 39 | 40 |  41 | 42 | 将视频传递给 stagesepx,它将自动分析拆解,得到视频中所有的阶段。包括变化的过程及其耗时,以及在稳定的阶段停留的时长: 43 | 44 |  45 | 46 | 你可以据此得到每个阶段对应的精确耗时。 47 | 48 | ### 跨端运作 49 | 50 | 当然,它是天然跨端的,例如web端。甚至,任何端: 51 | 52 |  53 | 54 |  55 | 56 | ### 高准确度 57 | 58 | 与视频一致的高准确度。以秒表为例: 59 | 60 |  61 | 62 | 可以看到,与秒表的表现几乎没有差异。**请注意,这里的准确度指的是 stagesepx 能够精确还原视频本身的数据与表现。而对于现象(例如某某时间点出现什么状态)而言,准确度很大程度上取决于视频本身,如fps/分辨率等。** 63 | 64 | ### 彻底解耦 & 可编程 65 | 66 | 如果比起报告,更希望亲自处理原始数据,进而进行二次开发,你可以直接将 report 部分去除。如此做,你将得到一个 python 对象供你随意使用。它提供了大量的API,例如转换成字典: 67 | 68 | ```text 69 | { 70 | "data": [{ 71 | "data": null, 72 | "frame_id": 1, 73 | "stage": "0", 74 | "timestamp": 0.0, 75 | "video_path": "../demo.mp4" 76 | }, { 77 | "data": null, 78 | "frame_id": 2, 79 | "stage": "0", 80 | "timestamp": 0.04, 81 | "video_path": "../demo.mp4" 82 | }, { 83 | "data": null, 84 | "frame_id": 3, 85 | "stage": "0", 86 | "timestamp": 0.08, 87 | "video_path": "../demo.mp4" 88 | }, { 89 | 90 | ... 91 | ``` 92 | 93 | 从这个字典中我们可以知道,每一帧分别对应的: 94 | 95 | - 被分类到哪一个类别 96 | - 时间戳 97 | - 帧编号 98 | - ... 99 | 100 | 用户可以随意处理这些数据,无论是保存或是交给下一段代码。 101 | 102 | ### 完整自动化支持 & 规模化 103 | 104 | - 既然它是可编程的,那么它必然是朝着彻底替代人力的方向演进的。这也是它最强大的特性; 105 | - 它允许用户利用自己的训练集进行模型训练,利用神经网络进行规模化、全自动化的特定阶段耗时计算; 106 | - 此方案能够被广泛应用到各类业务迭代中,与持续集成配合,有效降低人力消耗; 107 | - 一些方向参考: 108 | - 为你的应用建立高频次的性能回归测试,形成benchmark 109 | - 对模型进行补足,为一系列同类应用(如小程序、小游戏,etc.)构建巡检能力 110 | - ... 111 | 112 | 具体可参见 [将 stagesepx 应用到实际业务中](https://github.com/williamfzc/work_with_stagesepx)。 113 | 114 | --- 115 | 116 | - 标准模式下无需前置训练与学习 117 | - 更少的代码需要 118 | - 高度可配置化,适应不同场景 119 | - 支持与其他框架结合,融入你的业务 120 | - 所有你需要的,只是一个视频 121 | 122 | ## 开始 123 | 124 | ### 正式使用 125 | 126 | > 在正式落地时,推荐使用 完整的python脚本 而不是命令行,以保证更高的可编程性。完整的落地例子另外单独开了一个 repo 存放,[传送门](https://github.com/williamfzc/work_with_stagesepx)。 127 | > 请一定配合 [这篇文章](https://blog.csdn.net/wsc106/article/details/107351675) 使用,基本能解决90%的问题。 128 | 129 | - 配置: 130 | - [用30行代码快速跑一个demo](example/mini.py) 131 | - [30行代码怎么没有注释](example/stable.py) 132 | - [还有更多配置吗](example/cut_and_classify.py) 133 | - 应用: 134 | - [我想结合真实场景理解这个项目的原理](https://github.com/150109514/stagesepx_with_keras) 135 | - [我想看看实际落地方案,最好有把饭喂嘴里的例子](https://github.com/williamfzc/work_with_stagesepx) 136 | - [我们的app很复杂,能搞定吗](https://testerhome.com/topics/22215) 137 | - [太麻烦了,有没有开箱即用、简单配置下可以落地的工具](https://github.com/williamfzc/sepmachine) 138 | - 其他: 139 | - [我有问题要问](https://github.com/williamfzc/stagesepx/issues/new) 140 | - [(使用时请参考上面的其他链接,此文档更新不及时)官方文档](https://williamfzc.github.io/stagesepx/) 141 | 142 | ### 命令行 143 | 144 | 你也可以直接通过命令行使用,而无需编写脚本: 145 | 146 | ```bash 147 | stagesepx analyse your_video.mp4 report.html 148 | ``` 149 | 150 | 基于此,你可以非常方便地利用 shell 建立工作流。以 android 为例: 151 | 152 | ```bash 153 | adb shell screenrecord --time-limit 10 /sdcard/demo.mp4 154 | adb pull /sdcard/demo.mp4 . 155 | stagesepx analyse demo.mp4 report.html 156 | ``` 157 | 158 | 关于结果不准确的问题请参考 [#46](https://github.com/williamfzc/stagesepx/issues/46)。 159 | 160 | ### 配置化运行(0.15.0) 161 | 162 | 当然,通常因为场景差异,我们需要对参数进行修改使其达到更好的效果。这使得用户需要投入一些精力在脚本编写上。在 0.15.0 之后,配置化运行的加入使用户能够在不需要编写脚本的情况下直接使用所有能力,大大降低了接入门槛。 163 | 164 | ```json 165 | { 166 | "output": ".", 167 | "video": { 168 | "path": "./PATH_TO_YOUR/VIDEO.mp4", 169 | "fps": 30 170 | } 171 | } 172 | ``` 173 | 174 | 命令行运行: 175 | 176 | ```bash 177 | stagesepx run YOUR_CONFIG.json 178 | ``` 179 | 180 | 即可达到与脚本相同的效果。其他的配置项可以参考:[work_with_stagesepx](https://github.com/williamfzc/work_with_stagesepx/tree/master/run_with_config) 181 | 182 | ## 安装 183 | 184 | 标准版(pypi) 185 | 186 | ```bash 187 | pip install stagesepx 188 | ``` 189 | 190 | 预览版(github): 191 | 192 | ```bash 193 | pip install --upgrade git+https://github.com/williamfzc/stagesepx.git 194 | ``` 195 | 196 | ## 常见问题 197 | 198 | 最终我还是决定通过 issue 面板维护所有的 Q&A ,毕竟问题的提出与回复是一个强交互过程。如果在查看下列链接之后你的问题依旧没有得到解答: 199 | 200 | - 请 [新建issue](https://github.com/williamfzc/stagesepx/issues/new) 201 | - 或在相关的 issue 下进行追问与补充 202 | - 你的提问将不止帮助到你一个人 :) 203 | 204 | 问题列表: 205 | 206 | - [安装过程遇到问题?](https://github.com/williamfzc/stagesepx/issues/80) 207 | - [如何根据图表分析得出app启动的时间?](https://github.com/williamfzc/stagesepx/issues/73) 208 | - [日志太多了,如何关闭或者导出成文件?](https://github.com/williamfzc/stagesepx/issues/58) 209 | - [我的视频有 轮播图 或 干扰分类 的区域](https://github.com/williamfzc/stagesepx/issues/55) 210 | - [分类结果如何定制?](https://github.com/williamfzc/stagesepx/issues/48) 211 | - [算出来的结果不准确 / 跟传统方式有差距](https://github.com/williamfzc/stagesepx/issues/46) 212 | - [出现 OutOfMemoryError](https://github.com/williamfzc/stagesepx/issues/86) 213 | - [工具没法满足我的业务需要](https://github.com/williamfzc/stagesepx/issues/93) 214 | - [为什么报告中的时间戳跟实际不一样?](https://github.com/williamfzc/stagesepx/issues/75) 215 | - [自定义模型的分类结果不准确,跟我提供的训练集对不上](https://github.com/williamfzc/stagesepx/issues/100) 216 | - ... 217 | 218 | 不仅是问题,如果有任何建议与交流想法,同样可以通过 issue 面板找到我。我们每天都会查看 issue 面板,无需担心跟进不足。 219 | 220 | ## 相关文章 221 | 222 | - [图像分类、AI 与全自动性能测试](https://testerhome.com/topics/19978) 223 | - [全自动化的抖音启动速度测试](https://testerhome.com/topics/22215) 224 | - [(MTSC2019) 基于图像分类的下一代速度类测试解决方案](https://testerhome.com/topics/21874) 225 | 226 | ## 架构 227 | 228 |  229 | 230 | ## 参与项目 231 | 232 | ### 规划 233 | 234 | 在 1.0版本 之前,我们接下来的工作主要分为下面几个部分: 235 | 236 | #### 标准化 237 | 238 | 随着越来越多的业务落地,我们开始思考它是否能够作为行业级别的方案。 239 | 240 | - [x] 基于实验室数据的准确度对比(未公开) 241 | - [x] [规范且适合落地的例子](https://github.com/williamfzc/work_with_stagesepx) 242 | - [ ] 边界情况下的确认 243 | - [x] 代码覆盖率 95%+ 244 | - [ ] API参数相关文档 245 | 246 | #### 新需求的收集与开发 247 | 248 | 该部分由 issue 面板管理。 249 | 250 | ### 贡献代码 251 | 252 | 欢迎感兴趣的同学为这个项目添砖加瓦,三个必备步骤: 253 | 254 | - 请在开始编码前留个 issue 告知你想完成的功能,因为可能这个功能已经在开发中或者已有; 255 | - commit规范我们严格遵守 [约定式提交](https://www.conventionalcommits.org/zh-hans/); 256 | - 该repo有较为完善的单测与CI以保障整个项目的质量,在过去的迭代中发挥了巨大的作用。所以请为你新增的代码同步新增单元测试(具体写法请参考 tests 中的已有用例)。 257 | 258 | ### 联系我们 259 | 260 | - 邮箱:`fengzc@vip.qq.com` 261 | - QQ:`178894043` 262 | 263 | ## Changelog / History 264 | 265 | see [CHANGELOG.md](CHANGELOG.md) 266 | 267 | ## Thanks 268 | 269 | Thank you [JetBrains](https://www.jetbrains.com/) for supporting the project with free product licenses. 270 | 271 | ## License 272 | 273 | [MIT](LICENSE) 274 | -------------------------------------------------------------------------------- /README_en.md: -------------------------------------------------------------------------------- 1 |7 | detect stages in video automatically 8 |

9 | 10 | --- 11 | 12 | | Type | Status | 13 | |----------------------|--------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------| 14 | | package version | [](https://badge.fury.io/py/stagesepx) | 15 | | python version |  | 16 | | auto test |  | 17 | | code maintainability | [](https://codeclimate.com/github/williamfzc/stagesepx/maintainability) | 18 | | code coverage | [](https://codecov.io/gh/williamfzc/stagesepx) | 19 | | docker build status |   | 20 | | code style | [](https://github.com/psf/black) | 21 | 22 | --- 23 | 24 | > For English users: 25 | > 26 | > Mainly we used Chinese in discussions and communications, so maybe the most of issues/document are wrote in Chinese currently. 27 | > 28 | > But don't worry: 29 | > - maybe google translate is a good helper :) 30 | > - read the code directly (all the code and comments are wrote in English) 31 | > - feel free to contact with us via building a new issue with your questions 32 | > 33 | > Thanks ! 34 | 35 | --- 36 | 37 | This video shows the complete startup process of an app: 38 | 39 |  40 | 41 | By sending this video to stagesepx, you would get a report like this automatically: 42 | 43 |  44 | 45 | You can get the exact time consumption for each stage easily. Of course it is cross-platform, which can be also used in Android/Web/PC or something like that. Even, any platforms: 46 | 47 |  48 | 49 |  50 | 51 | And precisely: 52 | 53 |  54 | 55 | As you can see, its result is very close to the timer. 56 | 57 | --- 58 | 59 | - Fully automatic, no pre-training required 60 | - Less code required 61 | - Configurable for different scenes 62 | - All you need is a video! 63 | 64 | ## Structure 65 | 66 |  67 | 68 | ## Quick Start 69 | 70 | > Translation is working in progress. But not ready. You can use something like google translate instead for now. Feel free to leave me a issue when you are confused. 71 | 72 | - [30 lines demo](example/mini.py) 73 | - [how to use it in production (in Chinese)](https://github.com/williamfzc/stagesepx/blob/master/README_en.md) 74 | - [demo with all the features (in Chinese)](example/cut_and_classify.py) 75 | - [i have some questions](https://github.com/williamfzc/stagesepx/issues/new) 76 | 77 | ## Installation 78 | 79 | ```bash 80 | pip install stagesepx 81 | ``` 82 | 83 | ## License 84 | 85 | [MIT](LICENSE) 86 | -------------------------------------------------------------------------------- /docs/.nojekyll: -------------------------------------------------------------------------------- https://raw.githubusercontent.com/williamfzc/stagesepx/e3d9538a1f94b77788544af129e7795d85026a54/docs/.nojekyll -------------------------------------------------------------------------------- /docs/README.md: -------------------------------------------------------------------------------- 1 |7 | detect stages in video automatically 8 |

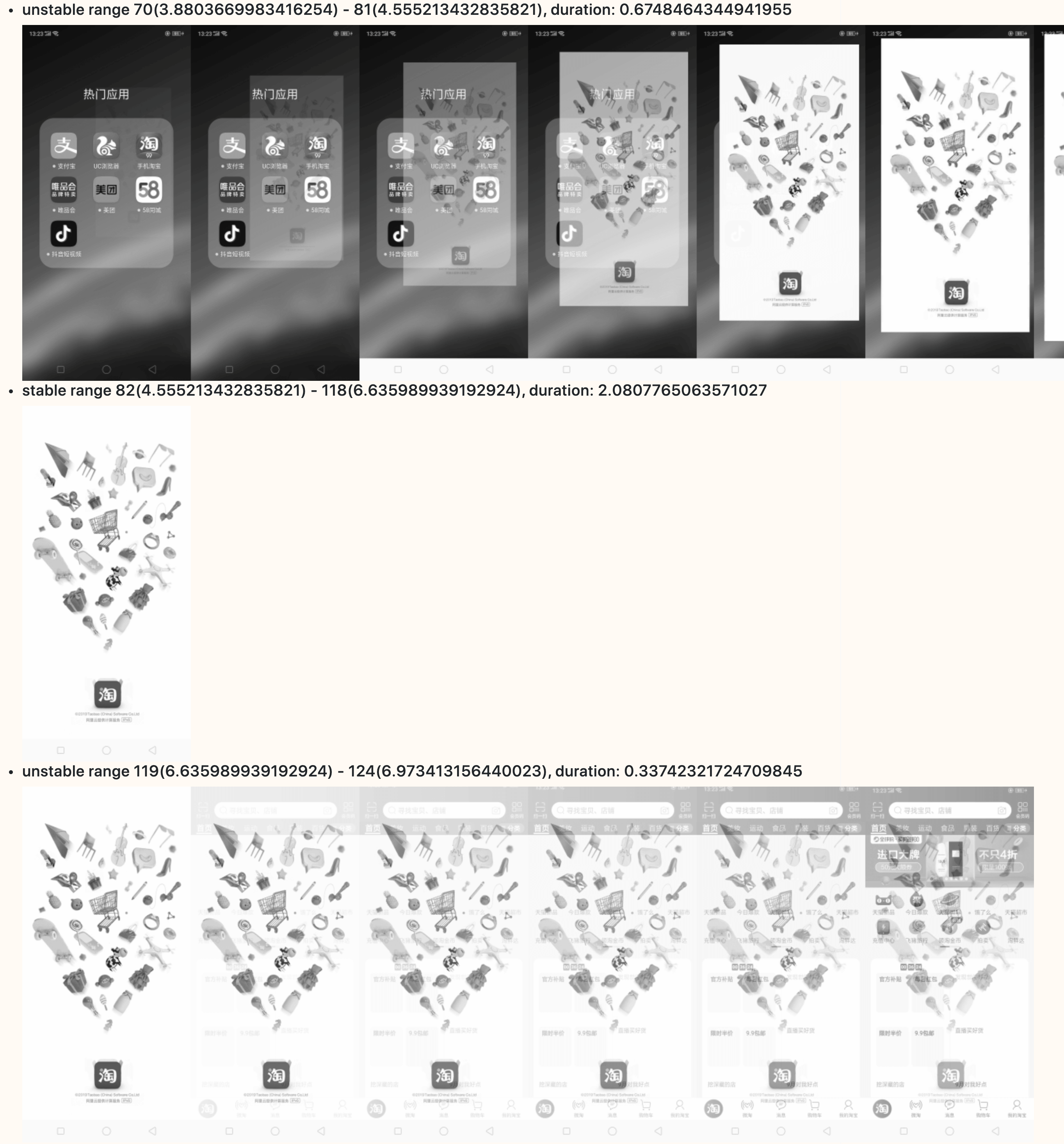

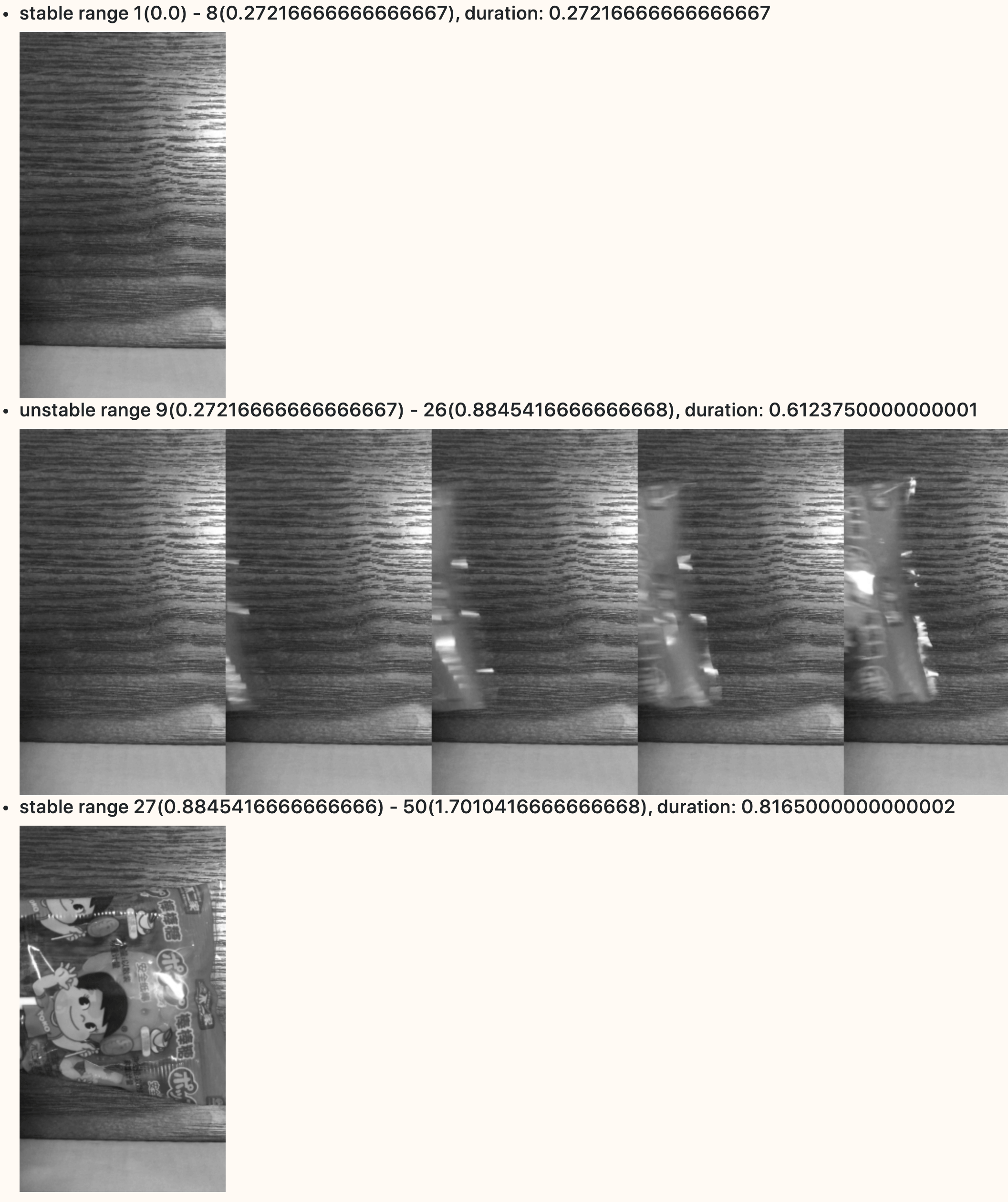

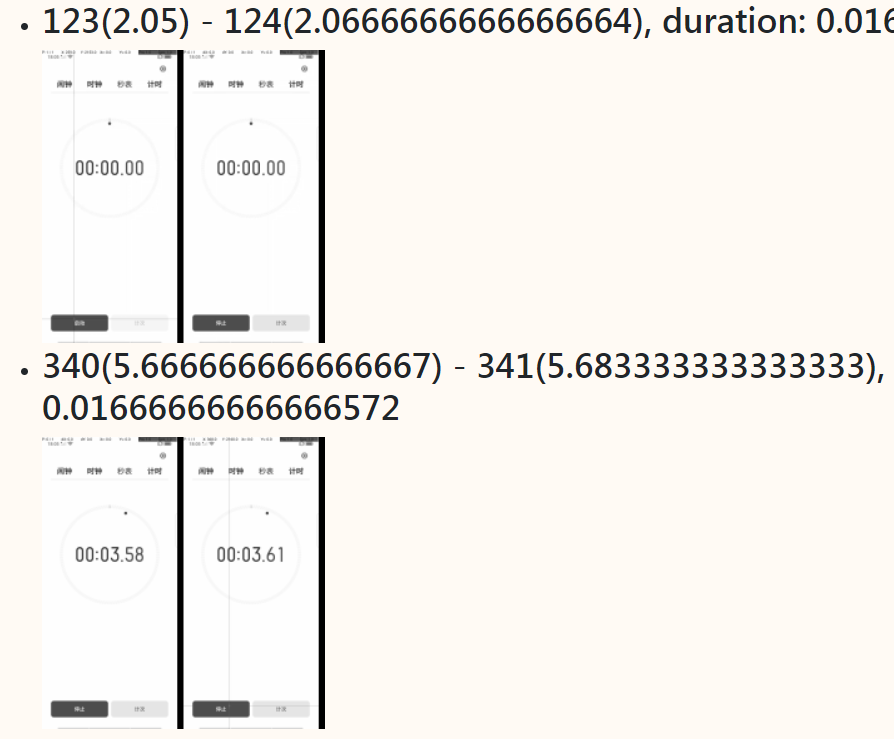

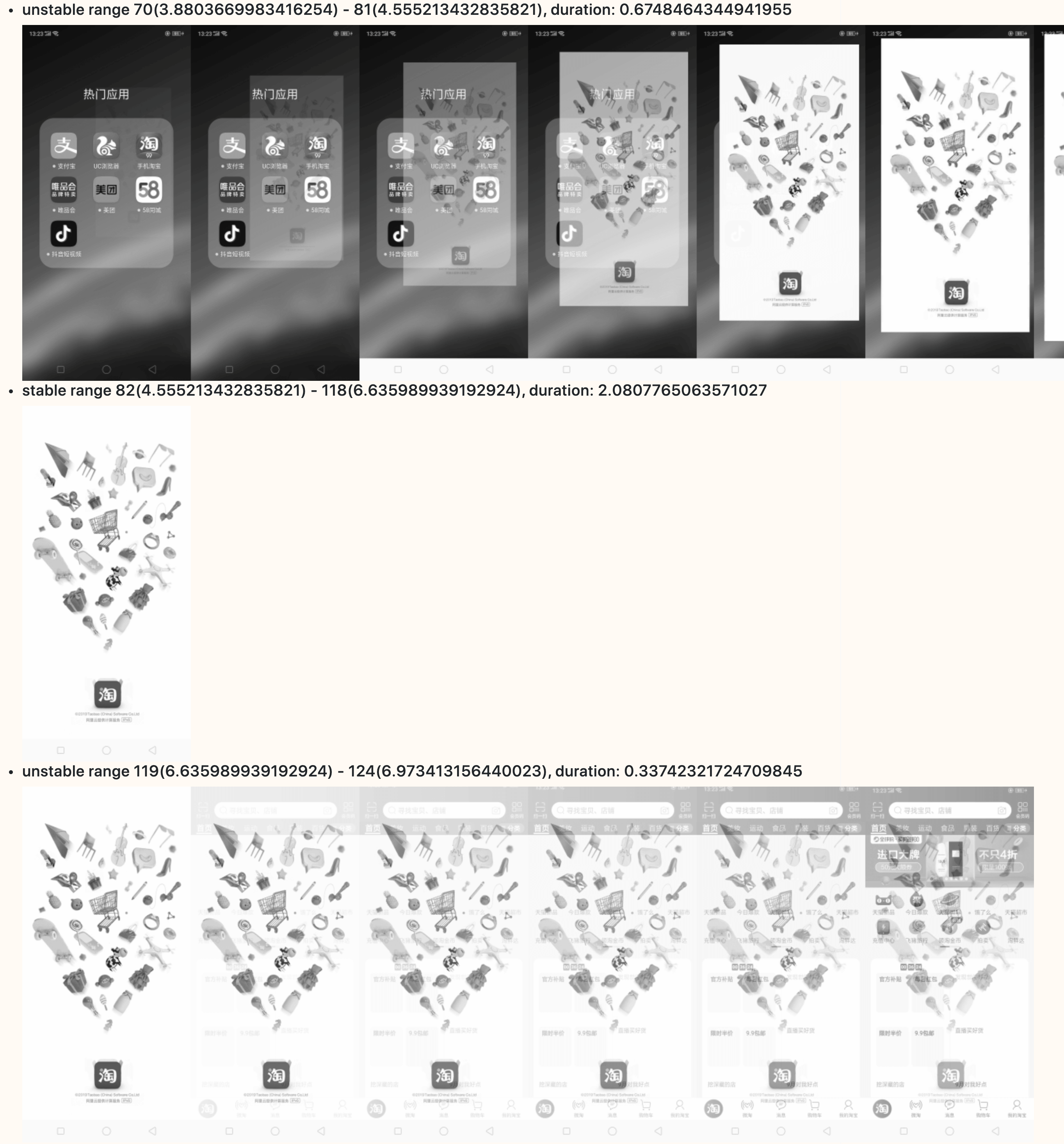

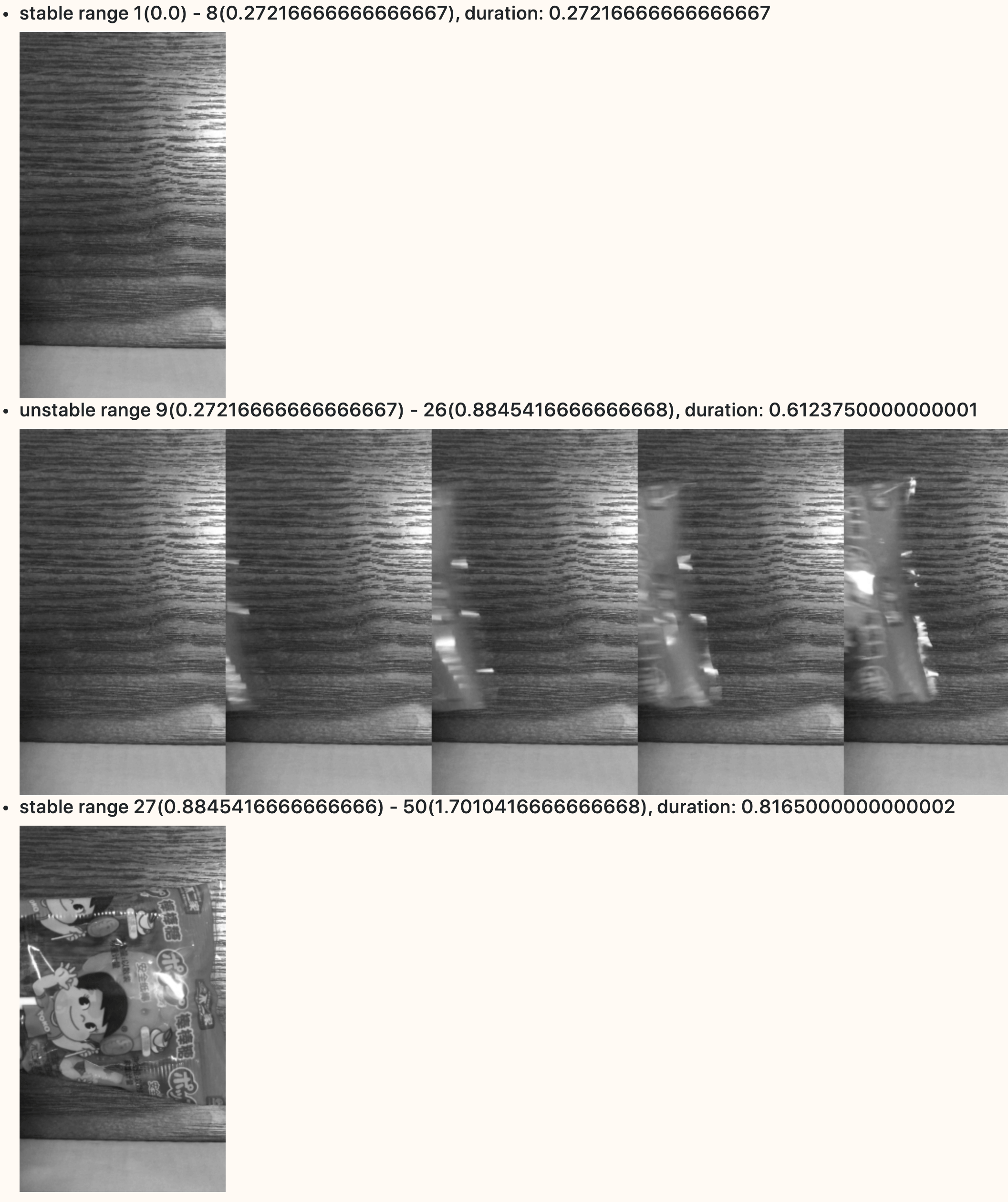

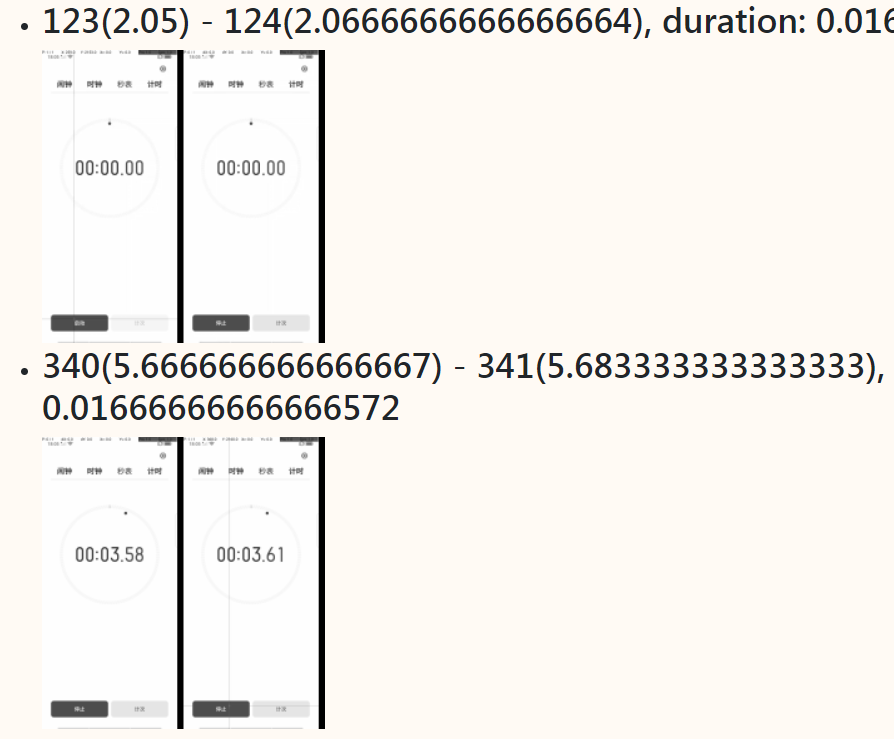

9 | 10 | --- 11 | 12 | | Type | Status | 13 | |----------------------|--------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------| 14 | | python version | [](https://badge.fury.io/py/stagesepx) | 15 | | auto test |  | 16 | | code maintainability | [](https://codeclimate.com/github/williamfzc/stagesepx/maintainability) | 17 | | code coverage | [](https://codecov.io/gh/williamfzc/stagesepx) | 18 | | docker build status |   | 19 | | code style | [](https://github.com/psf/black) | 20 | 21 | --- 22 | 23 | > welcome to stagesepx :P 24 | 25 | Please start from sidebar! 26 | -------------------------------------------------------------------------------- /docs/_coverpage.md: -------------------------------------------------------------------------------- 1 | # stagesep x 2 | 3 | > detect stages in video automatically 4 | 5 | [GitHub](https://github.com/williamfzc/stagesepx/) 6 | [Documentation](/pages/0_what_is_it) 7 | -------------------------------------------------------------------------------- /docs/_sidebar.md: -------------------------------------------------------------------------------- 1 | - [主页](/README) 2 | - [关于 stagesepx](/pages/0_what_is_it) 3 | - [应用场景](/pages/1_where_can_it_be_used) 4 | - [如何使用](/pages/2_how_to_use_it) 5 | - [运作原理](/pages/3_how_it_works) 6 | - [发展规划](/pages/4_roadmap) 7 | - [其他](/pages/5_others) 8 | -------------------------------------------------------------------------------- /docs/index.html: -------------------------------------------------------------------------------- 1 | 2 | 3 | 4 | 5 |