├── README.md

├── feat

└── README.md

├── input

└── README.md

├── output

├── README.md

├── m1

│ ├── catboost03

│ │ └── .gitkeep

│ ├── inferSent1

│ │ └── .gitkeep

│ └── nn02

│ │ └── .gitkeep

└── m3

│ ├── lgb_m3_32-50-0

│ └── .gitkeep

│ ├── lgb_m3_37-0

│ └── .gitkeep

│ └── lgb_m3_38-0

│ └── .gitkeep

├── src

├── ensemble

│ └── .gitkeep

├── feature

│ ├── .gitkeep

│ ├── .ipynb_checkpoints

│ │ └── gen_dict-checkpoint.ipynb

│ ├── data_preprocess.py

│ ├── feat30-50.py

│ ├── feat31-50.py

│ ├── feat32-50.py

│ ├── feat37-pairwise.py

│ ├── feat38-stk.py

│ ├── feat40.py

│ ├── gen_dict.ipynb

│ ├── gen_samples.py

│ └── tfidf_recall_30.py

├── rank

│ ├── m1

│ │ ├── catboost03.py

│ │ ├── glove

│ │ │ ├── .gitignore

│ │ │ ├── .travis.yml

│ │ │ ├── LICENSE

│ │ │ ├── Makefile

│ │ │ ├── README.md

│ │ │ ├── demo.sh

│ │ │ ├── eval

│ │ │ │ ├── matlab

│ │ │ │ │ ├── WordLookup.m

│ │ │ │ │ ├── evaluate_vectors.m

│ │ │ │ │ └── read_and_evaluate.m

│ │ │ │ ├── octave

│ │ │ │ │ ├── WordLookup_octave.m

│ │ │ │ │ ├── evaluate_vectors_octave.m

│ │ │ │ │ └── read_and_evaluate_octave.m

│ │ │ │ ├── python

│ │ │ │ │ ├── distance.py

│ │ │ │ │ ├── evaluate.py

│ │ │ │ │ └── word_analogy.py

│ │ │ │ └── question-data

│ │ │ │ │ ├── capital-common-countries.txt

│ │ │ │ │ ├── capital-world.txt

│ │ │ │ │ ├── city-in-state.txt

│ │ │ │ │ ├── currency.txt

│ │ │ │ │ ├── family.txt

│ │ │ │ │ ├── gram1-adjective-to-adverb.txt

│ │ │ │ │ ├── gram2-opposite.txt

│ │ │ │ │ ├── gram3-comparative.txt

│ │ │ │ │ ├── gram4-superlative.txt

│ │ │ │ │ ├── gram5-present-participle.txt

│ │ │ │ │ ├── gram6-nationality-adjective.txt

│ │ │ │ │ ├── gram7-past-tense.txt

│ │ │ │ │ ├── gram8-plural.txt

│ │ │ │ │ └── gram9-plural-verbs.txt

│ │ │ └── src

│ │ │ │ ├── README.md

│ │ │ │ ├── cooccur.c

│ │ │ │ ├── glove.c

│ │ │ │ ├── shuffle.c

│ │ │ │ └── vocab_count.c

│ │ ├── inferSent1-5-fold_predict.py

│ │ ├── inferSent1-5-fold_train.py

│ │ ├── nn02_predict.py

│ │ ├── nn02_train.py

│ │ ├── prepare_rank_train.py

│ │ ├── run.sh

│ │ └── w2v_training.py

│ ├── m2

│ │ ├── bert_5_fold_predict.py

│ │ ├── bert_5_fold_train.py

│ │ ├── bert_preprocessing.py

│ │ ├── change_formatting4stk.py

│ │ ├── final_blend.py

│ │ ├── fold_result_integration.py

│ │ ├── gen_w2v.sh

│ │ ├── mk_submission.py

│ │ ├── model.py

│ │ ├── nn_5_fold_predict.py

│ │ ├── nn_5_fold_train.py

│ │ ├── nn_preprocessing.py

│ │ ├── preprocessing.py

│ │ ├── run.sh

│ │ └── utils.py

│ └── m3

│ │ ├── convert.py

│ │ ├── eval.py

│ │ ├── flow.py

│ │ ├── kfold_merge.py

│ │ ├── lgb_train_32-50-0.py

│ │ ├── lgb_train_37-0.py

│ │ ├── lgb_train_38-0.py

│ │ ├── lgb_train_38-1.py

│ │ └── lgb_train_40-0.py

├── recall

│ └── tfidf_recall_30.py

└── utils

│ └── .gitkeep

├── stk_feat

└── README.md

└── tools

├── __pycache__

├── basic_learner.cpython-37.pyc

├── custom_bm25.cpython-37.pyc

├── custom_metrics.cpython-37.pyc

├── feat_utils.cpython-37.pyc

├── lgb_learner.cpython-37.pyc

├── loader.cpython-37.pyc

├── nlp_preprocess.cpython-37.pyc

└── pandas_util.cpython-37.pyc

├── basic_learner.py

├── basic_learner.pyc

├── custom_bm25.py

├── custom_bm25.pyc

├── custom_metrics.py

├── custom_metrics.pyc

├── feat_utils.py

├── lgb_learner.py

├── lgb_learner.pyc

├── loader.py

├── loader.pyc

├── nlp_preprocess.py

├── pandas_util.py

└── pandas_util.pyc

/README.md:

--------------------------------------------------------------------------------

1 | # WSDM2020-solution

2 | ## Team Name: funny

3 | Team Member: just4fun, greedisgood, slowdown, funny

4 | ## No Data Leak

5 | We achieve map@3 score 0.37458 at part 1 and 0.38020 at part 2 without using any data leak in the competition. During the recall process we search the related papers from the whole dataset without tricky data screening.

6 |

7 | ## Our Basic Solution

8 | data preprocess -> recall by text similarity-> single model (LGB + NN) -> model stacking -> linear ensemble -> final result

9 |

10 |

--------------------------------------------------------------------------------

/feat/README.md:

--------------------------------------------------------------------------------

1 | ## Dir of generated features

2 |

--------------------------------------------------------------------------------

/input/README.md:

--------------------------------------------------------------------------------

1 | ## Dir of input

2 |

--------------------------------------------------------------------------------

/output/README.md:

--------------------------------------------------------------------------------

1 | ## Dir of cv results and results.

2 |

--------------------------------------------------------------------------------

/output/m1/catboost03/.gitkeep:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/wsdm-Teamfunny/wsdm2020-solution/dae072da26ccf629d1a96185acedeaf4199b6ec4/output/m1/catboost03/.gitkeep

--------------------------------------------------------------------------------

/output/m1/inferSent1/.gitkeep:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/wsdm-Teamfunny/wsdm2020-solution/dae072da26ccf629d1a96185acedeaf4199b6ec4/output/m1/inferSent1/.gitkeep

--------------------------------------------------------------------------------

/output/m1/nn02/.gitkeep:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/wsdm-Teamfunny/wsdm2020-solution/dae072da26ccf629d1a96185acedeaf4199b6ec4/output/m1/nn02/.gitkeep

--------------------------------------------------------------------------------

/output/m3/lgb_m3_32-50-0/.gitkeep:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/wsdm-Teamfunny/wsdm2020-solution/dae072da26ccf629d1a96185acedeaf4199b6ec4/output/m3/lgb_m3_32-50-0/.gitkeep

--------------------------------------------------------------------------------

/output/m3/lgb_m3_37-0/.gitkeep:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/wsdm-Teamfunny/wsdm2020-solution/dae072da26ccf629d1a96185acedeaf4199b6ec4/output/m3/lgb_m3_37-0/.gitkeep

--------------------------------------------------------------------------------

/output/m3/lgb_m3_38-0/.gitkeep:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/wsdm-Teamfunny/wsdm2020-solution/dae072da26ccf629d1a96185acedeaf4199b6ec4/output/m3/lgb_m3_38-0/.gitkeep

--------------------------------------------------------------------------------

/src/ensemble/.gitkeep:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/wsdm-Teamfunny/wsdm2020-solution/dae072da26ccf629d1a96185acedeaf4199b6ec4/src/ensemble/.gitkeep

--------------------------------------------------------------------------------

/src/feature/.gitkeep:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/wsdm-Teamfunny/wsdm2020-solution/dae072da26ccf629d1a96185acedeaf4199b6ec4/src/feature/.gitkeep

--------------------------------------------------------------------------------

/src/feature/data_preprocess.py:

--------------------------------------------------------------------------------

1 | #!/usr/bin/env python

2 | #coding=utf-8

3 |

4 | # 基础模块

5 | import os

6 | import sys

7 | import time

8 | from tqdm import tqdm

9 | from datetime import datetime

10 |

11 | # 数据处理

12 | import re

13 | import pickle

14 | import numpy as np

15 | import pandas as pd

16 | from multiprocessing import Pool

17 |

18 | # 自定义工具包

19 | sys.path.append('../../tools/')

20 | import loader

21 | import pandas_util

22 | from nlp_preprocess import preprocess

23 |

24 | # 设置随机种子

25 | SEED = 2020

26 | PROCESS_NUM, PARTITION_NUM = 32, 32

27 |

28 | input_root_path = '../../input/'

29 | output_root_path = '../../input/'

30 |

31 | postfix = 'final_all'

32 | file_type = 'ftr'

33 |

34 | tr_out_path = output_root_path + 'tr_input_{}.{}'.format(postfix, file_type)

35 | te_out_path = output_root_path + 'te_input_{}.{}'.format(postfix, file_type)

36 | paper_out_path = output_root_path + 'paper_input_{}.{}'.format(postfix, file_type)

37 |

38 | # 获取关键句函数

39 | def digest(text):

40 | backup = text[:]

41 | text = text.replace('al.', '').split('. ')

42 | t=''

43 | pre_text=[]

44 | len_text=len(text)-1

45 | add=True

46 | pre=''

47 | while len_text>=0:

48 | index=text[len_text]

49 | index+=pre

50 | if len(index.split(' '))<=3 :

51 | add=False

52 | pre=index+pre

53 | else:

54 | add=True

55 | pre=''

56 | if add:

57 | pre_text.append(index)

58 | len_text-=1

59 | if len(pre_text)==0:

60 | pre_text=text

61 | pre_text.reverse()

62 | for index in pre_text:

63 | if index.find('[**##**]') != -1:

64 | index = re.sub(r'[\[|,]+\*\*\#\#\*\*[\]|,]+','',index)

65 | index+='. '

66 | t+=index

67 | return t

68 |

69 | def partition(df, num):

70 | df_partitions, step = [], int(np.ceil(df.shape[0]/num))

71 | for i in range(0, df.shape[0], step):

72 | df_partitions.append(df.iloc[i:i+step])

73 | return df_partitions

74 |

75 | def tr_single_process(params=None):

76 | (tr, i) = params

77 | print (i, 'start', datetime.now())

78 | tr['quer_key'] = tr['description_text'].fillna('').progress_apply(lambda s: preprocess(digest(s)))

79 | tr['quer_all'] = tr['description_text'].fillna('').progress_apply(lambda s: preprocess(s))

80 | print (i, 'completed', datetime.now())

81 | return tr

82 |

83 | def paper_single_process(params=None):

84 | (df, i) = params

85 | print (i, 'start', datetime.now())

86 | df['titl'] = df['title'].fillna('').progress_apply(lambda s: preprocess(s))

87 | df['abst'] = df['abstract'].fillna('').progress_apply(lambda s: preprocess(s))

88 | print (i, 'completed', datetime.now())

89 | return df

90 |

91 | def multi_text_process(df, task, process_num=30):

92 | pool = Pool(process_num)

93 | df_parts = partition(df, process_num)

94 | print ('{} processes init and partition to {} parts' \

95 | .format(process_num, process_num))

96 | param_list = [(df_parts[i], i) for i in range(process_num)]

97 | if task in ['tr', 'te']:

98 | dfs = pool.map(tr_single_process, param_list)

99 | elif task in ['paper']:

100 | dfs = pool.map(paper_single_process, param_list)

101 | df = pd.concat(dfs, axis=0)

102 | print (task, 'multi process completed')

103 | print (df.columns)

104 | return df

105 |

106 | if __name__ == "__main__":

107 |

108 | ts = time.time()

109 | tqdm.pandas()

110 | print('start time: %s' % datetime.now())

111 | # load data

112 | df = loader.load_df(input_root_path + 'candidate_paper_for_wsdm2020.ftr')

113 | tr = loader.load_df(input_root_path + 'train_release.csv')

114 | te = loader.load_df(input_root_path + 'test.csv')

115 | cv = loader.load_df(input_root_path + 'cv_ids_0109.csv')

116 |

117 | # 过滤重复数据 & 异常数据

118 | tr = tr[tr['description_id'].isin(cv['description_id'].tolist())]

119 | tr = tr[tr.description_id != '6.45E+04']

120 |

121 | df = df[~pd.isnull(df['paper_id'])]

122 | tr = tr[~pd.isnull(tr['description_id'])]

123 | print ('pre', te.shape)

124 | te = te[~pd.isnull(te['description_id'])]

125 | print ('post', te.shape)

126 |

127 | #df = df.head(1000)

128 | #tr = tr.head(1000)

129 | #te = te.head(1000)

130 |

131 | tr = multi_text_process(tr, task='tr')

132 | te = multi_text_process(te, task='te')

133 | df = multi_text_process(df, task='paper')

134 |

135 | tr.drop(['description_text'], axis=1, inplace=True)

136 | te.drop(['description_text'], axis=1, inplace=True)

137 | df.drop(['abstract', 'title'], axis=1, inplace=True)

138 | print ('text preprocess completed')

139 |

140 | loader.save_df(tr, tr_out_path)

141 | print (tr.columns)

142 | print (tr.head())

143 |

144 | loader.save_df(te, te_out_path)

145 | print (te.columns)

146 | print (te.head())

147 |

148 | loader.save_df(df, paper_out_path)

149 | print (df.columns)

150 | print (df.head())

151 |

152 | print('all completed: {}, cost {}s'.format(datetime.now(), np.round(time.time() - ts, 2)))

153 |

154 |

155 |

156 |

--------------------------------------------------------------------------------

/src/feature/feat31-50.py:

--------------------------------------------------------------------------------

1 | #!/usr/bin/env python

2 | #coding=utf-8

3 |

4 | # 生成词向量距离特征

5 |

6 | # 基础模块

7 | import os

8 | import gc

9 | import sys

10 | import time

11 | import pickle

12 | from datetime import datetime

13 | from tqdm import tqdm

14 |

15 | # 数据处理

16 | import numpy as np

17 | import pandas as pd

18 | from tqdm import tqdm

19 | from multiprocessing import Pool

20 |

21 | # 自定义工具包

22 | sys.path.append('../../tools/')

23 | import loader

24 | import pandas_util

25 | import custom_bm25 as bm25

26 | from feat_utils import try_divide, dump_feat_name

27 |

28 | # 开源工具包

29 | import nltk

30 | import gensim

31 | from gensim.models import Word2Vec

32 | from gensim.models.word2vec import LineSentence

33 | from gensim import corpora, models, similarities

34 | from gensim.similarities import SparseMatrixSimilarity

35 | from sklearn.metrics.pairwise import cosine_similarity as cos_sim

36 |

37 | # 设置随机种子

38 | SEED = 2020

39 |

40 | input_root_path = '../../input/'

41 | output_root_path = '../../feat/'

42 |

43 | postfix = '31-50'

44 | file_type = 'ftr'

45 |

46 | # 当前特征

47 | tr_fea_out_path = output_root_path + 'tr_fea_{}.{}'.format(postfix, file_type)

48 | te_fea_out_path = output_root_path + 'te_fea_{}.{}'.format(postfix, file_type)

49 |

50 | # 当前特征 + 之前特征 merge 之后的完整训练数据

51 | tr_out_path = output_root_path + 'tr_s0_{}.{}'.format(postfix, file_type)

52 | te_out_path = output_root_path + 'te_s0_{}.{}'.format(postfix, file_type)

53 |

54 | ID_NAMES = ['description_id', 'paper_id']

55 | PROCESS_NUM = 15

56 |

57 | # load data

58 | ts = time.time()

59 | dictionary = corpora.Dictionary.load('../../feat/corpus.dict')

60 | tfidf = models.TfidfModel.load('../../feat/tfidf.model')

61 |

62 | print ('load data completed, cost {}s'.format(np.round(time.time() - ts, 2)))

63 |

64 | def sum_score(x, y):

65 | return max(x, 0) + max(y, 0)

66 |

67 | def cos_dis(vec_x, vec_y, norm=False):

68 | if vec_x == None or vec_y == None:

69 | return -1

70 | dic_x = {v[0]: v[1] for v in vec_x}

71 | dic_y = {v[0]: v[1] for v in vec_y}

72 |

73 | dot_prod = 0

74 | for k, x in dic_x.items():

75 | y = dic_y.get(k, 0)

76 | dot_prod += x * y

77 | norm_x = np.linalg.norm([v[1] for v in vec_x])

78 | norm_y = np.linalg.norm([v[1] for v in vec_y])

79 |

80 | cos = dot_prod / (norm_x * norm_y)

81 | return 0.5 * cos + 0.5 if norm else cos # 归一化到[0, 1]区间内

82 |

83 | def eucl_dis(vec_x, vec_y):

84 | if vec_x == None or vec_y == None:

85 | return -1

86 | dic_x = {v[0]: v[1] for v in vec_x}

87 | dic_y = {v[0]: v[1] for v in vec_y}

88 | lis_i = list(set(list(dic_x.keys()) + list(dic_y.keys())))

89 | squa_sum = 0

90 | for i in lis_i:

91 | x, y = dic_x.get(i, 0), dic_y.get(i, 0)

92 | squa_sum += np.square(x - y)

93 | return np.sqrt(squa_sum)

94 |

95 | def manh_dis(vec_x, vec_y):

96 | if vec_x == None or vec_y == None:

97 | return -1

98 | dic_x = {v[0]: v[1] for v in vec_x}

99 | dic_y = {v[0]: v[1] for v in vec_y}

100 | lis_i = list(set(list(dic_x.keys()) + list(dic_y.keys())))

101 | abs_sum = 0

102 | for i in lis_i:

103 | x, y = dic_x.get(i, 0), dic_y.get(i, 0)

104 | abs_sum += np.abs(x - y)

105 | return abs_sum

106 |

107 | def get_bm25_corp(quer, paper_id):

108 | quer_vec = dictionary.doc2bow(quer.split(' '))

109 | corp_score = bm25_corp.get_score(quer_vec, paper_ids.index(paper_id))

110 | return corp_score

111 |

112 | def get_bm25_abst(quer, paper_id):

113 | quer_vec = dictionary.doc2bow(quer.split(' '))

114 | abst_score = bm25_abst.get_score(quer_vec, paper_ids.index(paper_id))

115 | return abst_score

116 |

117 | def get_bm25_titl(quer, paper_id):

118 | quer_vec = dictionary.doc2bow(quer.split(' '))

119 | titl_score = bm25_titl.get_score(quer_vec, paper_ids.index(paper_id))

120 | return titl_score

121 |

122 | def single_process_feat(params=None):

123 | ts = time.time()

124 | (df, i) = params

125 |

126 | ts = time.time()

127 | print (i, 'start', datetime.now())

128 | # tfidf vec dis

129 | df['quer_key_vec'] = df['quer_key'].progress_apply(lambda s: tfidf[dictionary.doc2bow(s.split(' '))])

130 | df['quer_all_vec'] = df['quer_all'].progress_apply(lambda s: tfidf[dictionary.doc2bow(s.split(' '))])

131 | df['titl_vec'] = df['titl'].progress_apply(lambda s: tfidf[dictionary.doc2bow(s.split(' '))])

132 | df['abst_vec'] = df['abst'].progress_apply(lambda s: tfidf[dictionary.doc2bow(s.split(' '))])

133 | df['corp_vec'] = df['corp'].progress_apply(lambda s: tfidf[dictionary.doc2bow(s.split(' '))])

134 | print (i, 'load vec completed, cost {}s'.format(np.round(time.time() - ts), 2))

135 |

136 | ts = time.time()

137 | vec_type = 'tfidf'

138 | for vec_x in ['quer_key', 'quer_all']:

139 | for vec_y in ['abst', 'titl', 'corp']:

140 | df['{}_{}_{}_cos_dis'.format(vec_x, vec_type, vec_y)] = df.progress_apply(lambda row: \

141 | cos_dis(row['{}_vec'.format(vec_x)], row['{}_vec'.format(vec_y)]), axis=1)

142 | df['{}_{}_{}_eucl_dis'.format(vec_x, vec_type, vec_y)] = df.progress_apply(lambda row: \

143 | eucl_dis(row['{}_vec'.format(vec_x)], row['{}_vec'.format(vec_y)]), axis=1)

144 | df['{}_{}_{}_manh_dis'.format(vec_x, vec_type, vec_y)] = df.progress_apply(lambda row: \

145 | manh_dis(row['{}_vec'.format(vec_x)], row['{}_vec'.format(vec_y)]), axis=1)

146 |

147 | print (i, vec_x, 'tfidf completed, cost {}s'.format(np.round(time.time() - ts), 2))

148 |

149 | del_cols = [col for col in df.columns if df[col].dtype == 'O' and col not in ID_NAMES]

150 | print ('del cols', del_cols)

151 | df.drop(del_cols, axis=1, inplace=True)

152 | return df

153 |

154 | def partition(df, num):

155 | df_partitions, step = [], int(np.ceil(df.shape[0]/num))

156 | for i in range(0, df.shape[0], step):

157 | df_partitions.append(df.iloc[i:i+step])

158 | return df_partitions

159 |

160 | def multi_process_feat(df):

161 | pool = Pool(PROCESS_NUM)

162 | df = df[ID_NAMES + ['quer_key', 'quer_all', 'abst', 'titl', 'corp']]

163 | df_parts = partition(df, PROCESS_NUM)

164 | print ('{} processes init and partition to {} parts' \

165 | .format(PROCESS_NUM, PROCESS_NUM))

166 | ts = time.time()

167 |

168 | param_list = [(df_parts[i], i) \

169 | for i in range(PROCESS_NUM)]

170 | dfs = pool.map(single_process_feat, param_list)

171 | df_out = pd.concat(dfs, axis=0)

172 | return df_out

173 |

174 | def gen_samples(paper, tr_desc_path, tr_recall_path, fea_out_path):

175 | tr_desc = loader.load_df(tr_desc_path)

176 | tr = loader.load_df(tr_recall_path)

177 | # tr = tr.head(1000)

178 |

179 | tr = tr.merge(paper, on=['paper_id'], how='left')

180 | tr = tr.merge(tr_desc[['description_id', 'quer_key', 'quer_all']], on=['description_id'], how='left')

181 |

182 | print (tr.columns)

183 | print (tr.head())

184 |

185 | tr_feat = multi_process_feat(tr)

186 | loader.save_df(tr_feat, fea_out_path)

187 |

188 | tr = tr.merge(tr_feat, on=ID_NAMES, how='left')

189 | del_cols = [col for col in tr.columns if tr[col].dtype == 'O' and col not in ID_NAMES]

190 | print ('tr del cols', del_cols)

191 | return tr.drop(del_cols, axis=1)

192 |

193 |

194 | # 增加 vec sim 特征

195 |

196 | if __name__ == "__main__":

197 |

198 | ts = time.time()

199 | tqdm.pandas()

200 | print('start time: %s' % datetime.now())

201 | paper = loader.load_df('../../input/paper_input_final.ftr')

202 | paper['abst'] = paper['abst'].apply(lambda s: s.replace('no_content', ''))

203 | paper['corp'] = paper['abst'] + ' ' + paper['titl'] + ' ' + paper['keywords'].fillna('').replace(';', ' ')

204 |

205 | tr_desc_path = '../../input/tr_input_final.ftr'

206 | te_desc_path = '../../input/te_input_final.ftr'

207 |

208 | tr_recall_path = '../../feat/tr_s0_30-50.ftr'

209 | te_recall_path = '../../feat/te_s0_30-50.ftr'

210 |

211 | tr = gen_samples(paper, tr_desc_path, tr_recall_path, tr_fea_out_path)

212 | print (tr.columns)

213 | print ([col for col in tr.columns if tr[col].dtype == 'O'])

214 | loader.save_df(tr, tr_out_path)

215 |

216 | te = gen_samples(paper, te_desc_path, te_recall_path, te_fea_out_path)

217 | print (te.columns)

218 | loader.save_df(te, te_out_path)

219 | print('all completed: {}, cost {}s'.format(datetime.now(), np.round(time.time() - ts, 2)))

220 |

221 |

222 |

223 |

224 |

--------------------------------------------------------------------------------

/src/feature/feat37-pairwise.py:

--------------------------------------------------------------------------------

1 | #!/usr/bin/env python

2 | #coding=utf-8

3 |

4 | # 生成词向量距离特征

5 |

6 | # 基础模块

7 | import os

8 | import gc

9 | import sys

10 | import time

11 | import pickle

12 | from datetime import datetime

13 | from tqdm import tqdm

14 |

15 | # 数据处理

16 | import numpy as np

17 | import pandas as pd

18 | from tqdm import tqdm

19 | from multiprocessing import Pool

20 |

21 | # 自定义工具包

22 | sys.path.append('../../tools/')

23 | import loader

24 | import pandas_util

25 | import custom_bm25 as bm25

26 | from feat_utils import try_divide, dump_feat_name

27 |

28 | # 开源工具包

29 | import nltk

30 | import gensim

31 | from gensim.models import Word2Vec

32 | from gensim.models.word2vec import LineSentence

33 | from gensim import corpora, models, similarities

34 | from gensim.similarities import SparseMatrixSimilarity

35 | from sklearn.metrics.pairwise import cosine_similarity as cos_sim

36 |

37 | # 设置随机种子

38 | SEED = 2020

39 |

40 | input_root_path = '../../input/'

41 | output_root_path = '../../feat/'

42 |

43 | FEA_NUM = '37'

44 | postfix = 's0_{}'.format(FEA_NUM)

45 | file_type = 'ftr'

46 |

47 | # 当前特征

48 | tr_fea_out_path = output_root_path + 'tr_fea_{}.{}'.format(postfix, file_type)

49 | te_fea_out_path = output_root_path + 'te_fea_{}.{}'.format(postfix, file_type)

50 |

51 | # 当前特征 + 之前特征 merge 之后的完整训练数据

52 | tr_out_path = output_root_path + 'tr_{}.{}'.format(postfix, file_type)

53 | te_out_path = output_root_path + 'te_{}.{}'.format(postfix, file_type)

54 |

55 | ID_NAMES = ['description_id', 'paper_id']

56 | PROCESS_NUM = 20

57 |

58 | # load data

59 | ts = time.time()

60 |

61 | def feat_extract(df, is_te=False):

62 | if is_te:

63 | df_pred = loader.load_df('../../output/m3/lgb_m3_32-50-0/lgb_m3_32-50-0.ftr')

64 | else:

65 | df_pred = loader.load_df('../../output/m3/lgb_m3_32-50-0/lgb_m3_32-50-0_cv.ftr')

66 | df_pred = df_pred[ID_NAMES + ['target']]

67 |

68 | df_pred = df_pred.sort_values(by=['target'], ascending=False)

69 | df_pred['pred_rank'] = df_pred.groupby(['description_id']).cumcount().values

70 | df_pred = df_pred.sort_values(by=['description_id', 'target'])

71 | print (df_pred.shape)

72 | print (df_pred.head(10))

73 |

74 | pred_top1 = df_pred[df_pred['pred_rank'] == 0] \

75 | .drop_duplicates(subset='description_id', keep='first')

76 | pred_top1 = pred_top1[['description_id', 'target']]

77 | pred_top1.columns = ['description_id', 'top1_pred']

78 |

79 | pred_top2 = df_pred[df_pred['pred_rank'] < 2]

80 | pred_top2['top2_pred_avg'] = pred_top2.groupby('description_id')['target'].transform('mean')

81 | pred_top2['top2_pred_std'] = pred_top2.groupby('description_id')['target'].transform('std')

82 | pred_top2 = pred_top2[['description_id', 'top2_pred_avg', \

83 | 'top2_pred_std']].drop_duplicates(subset=['description_id'])

84 |

85 | pred_top3 = df_pred[df_pred['pred_rank'] < 3]

86 | pred_top3['top3_pred_avg'] = pred_top3.groupby('description_id')['target'].transform('mean')

87 | pred_top3['top3_pred_std'] = pred_top3.groupby('description_id')['target'].transform('std')

88 | pred_top3 = pred_top3[['description_id', 'top3_pred_avg', \

89 | 'top3_pred_std']].drop_duplicates(subset=['description_id'])

90 |

91 | pred_top5 = df_pred[df_pred['pred_rank'] < 5]

92 | pred_top5['top5_pred_avg'] = pred_top5.groupby('description_id')['target'].transform('mean')

93 | pred_top5['top5_pred_std'] = pred_top5.groupby('description_id')['target'].transform('std')

94 | pred_top5 = pred_top5[['description_id', 'top5_pred_avg', \

95 | 'top5_pred_std']].drop_duplicates(subset=['description_id'])

96 |

97 | df_pred.rename(columns={'target': 'pred'}, inplace=True)

98 | df = df.merge(df_pred, on=ID_NAMES, how='left')

99 | df = df.merge(pred_top1, on=['description_id'], how='left')

100 | df = df.merge(pred_top2, on=['description_id'], how='left')

101 | df = df.merge(pred_top3, on=['description_id'], how='left')

102 | df = df.merge(pred_top5, on=['description_id'], how='left')

103 |

104 | df['pred_sub_top1'] = df['pred'] - df['top1_pred']

105 | df['pred_sub_top2_avg'] = df['pred'] - df['top2_pred_avg']

106 | df['pred_sub_top3_avg'] = df['pred'] - df['top3_pred_avg']

107 | df['pred_sub_top5_avg'] = df['pred'] - df['top5_pred_avg']

108 |

109 | del_cols = ['paper_id', 'pred', 'pred_rank']

110 | df.drop(del_cols, axis=1, inplace=True)

111 | df_feat = df.drop_duplicates(subset=['description_id'])

112 |

113 | print ('df_feat info')

114 | print (df_feat.shape)

115 | print (df_feat.head())

116 | print (df_feat.columns.tolist())

117 |

118 | return df_feat

119 |

120 | def output_fea(tr, te):

121 | print (tr.head())

122 | print (te.head())

123 |

124 | loader.save_df(tr, tr_fea_out_path)

125 | loader.save_df(te, te_fea_out_path)

126 |

127 | def gen_fea():

128 | tr = loader.load_df('../../feat/tr_s0_32-50.ftr')

129 | te = loader.load_df('../../feat/te_s0_32-50.ftr')

130 |

131 | tr_feat = feat_extract(tr[ID_NAMES])

132 | te_feat = feat_extract(te[ID_NAMES], is_te=True)

133 |

134 | tr = tr[ID_NAMES].merge(tr_feat, on=['description_id'], how='left')

135 | te = te[ID_NAMES].merge(te_feat, on=['description_id'], how='left')

136 |

137 | print (tr.shape, te.shape)

138 | print (tr.head())

139 | print (te.head())

140 | print (tr.columns)

141 |

142 | output_fea(tr, te)

143 |

144 | # merge 已有特征

145 | def merge_fea(tr_list, te_list):

146 | tr = loader.merge_fea(tr_list, primary_keys=ID_NAMES)

147 | te = loader.merge_fea(te_list, primary_keys=ID_NAMES)

148 |

149 | print (tr.head())

150 | print (te.head())

151 | print (tr.columns.tolist())

152 |

153 | loader.save_df(tr, tr_out_path)

154 | loader.save_df(te, te_out_path)

155 |

156 | if __name__ == "__main__":

157 |

158 | print('start time: %s' % datetime.now())

159 | root_path = '../../feat/'

160 | base_tr_path = root_path + 'tr_s0_32-50.ftr'

161 | base_te_path = root_path + 'te_s0_32-50.ftr'

162 |

163 | gen_fea()

164 |

165 | # merge fea

166 | prefix = 's0'

167 | fea_list = [FEA_NUM]

168 |

169 | tr_list = [base_tr_path] + \

170 | [root_path + 'tr_fea_{}_{}.ftr'.format(prefix, i) for i in fea_list]

171 | te_list = [base_te_path] + \

172 | [root_path + 'te_fea_{}_{}.ftr'.format(prefix, i) for i in fea_list]

173 |

174 | merge_fea(tr_list, te_list)

175 |

176 | print('all completed: %s' % datetime.now())

177 |

178 |

179 |

--------------------------------------------------------------------------------

/src/feature/feat38-stk.py:

--------------------------------------------------------------------------------

1 | #!/usr/bin/env python

2 | #coding=utf-8

3 |

4 | # 生成词向量距离特征

5 |

6 | # 基础模块

7 | import os

8 | import gc

9 | import sys

10 | import time

11 | import pickle

12 | from datetime import datetime

13 | from tqdm import tqdm

14 |

15 | # 数据处理

16 | import numpy as np

17 | import pandas as pd

18 | from tqdm import tqdm

19 | from multiprocessing import Pool

20 |

21 | # 自定义工具包

22 | sys.path.append('../../tools/')

23 | import loader

24 | import pandas_util

25 | import custom_bm25 as bm25

26 | from preprocess import preprocess

27 | from feat_utils import try_divide, dump_feat_name

28 |

29 | # 开源工具包

30 | import nltk

31 | import gensim

32 | from gensim.models import Word2Vec

33 | from gensim.models.word2vec import LineSentence

34 | from gensim import corpora, models, similarities

35 | from gensim.similarities import SparseMatrixSimilarity

36 | from sklearn.metrics.pairwise import cosine_similarity as cos_sim

37 |

38 | # 设置随机种子

39 | SEED = 2020

40 |

41 | input_root_path = '../../input/'

42 | output_root_path = '../../feat/'

43 |

44 | FEA_NUM = 38

45 |

46 | postfix = 's0_{}'.format(FEA_NUM)

47 | file_type = 'ftr'

48 |

49 | # 当前特征

50 | tr_fea_out_path = output_root_path + 'tr_fea_{}.{}'.format(postfix, file_type)

51 | te_fea_out_path = output_root_path + 'te_fea_{}.{}'.format(postfix, file_type)

52 |

53 | # 当前特征 + 之前特征 merge 之后的完整训练数据

54 | tr_out_path = output_root_path + 'tr_{}.{}'.format(postfix, file_type)

55 | te_out_path = output_root_path + 'te_{}.{}'.format(postfix, file_type)

56 |

57 | ID_NAMES = ['description_id', 'paper_id']

58 | PROCESS_NUM = 20

59 |

60 | # load data

61 | ts = time.time()

62 |

63 | def feat_extract(tr_path, te_path, prefix):

64 | tr_sample = loader.load_df('../../feat/tr_s0_37.ftr')

65 | te_sample = loader.load_df('../../feat/te_s0_37.ftr')

66 |

67 | tr = loader.load_df(tr_path)

68 | te = loader.load_df(te_path)

69 |

70 | del_cols = ['label']

71 | del_cols = [col for col in tr.columns if col in del_cols]

72 | tr.drop(del_cols, axis=1, inplace=True)

73 |

74 | tr = tr_sample[ID_NAMES].merge(tr, on=ID_NAMES, how='left')

75 | te = te_sample[ID_NAMES].merge(te, on=ID_NAMES, how='left')

76 |

77 | tr.columns = ID_NAMES + [prefix]

78 | te.columns = ID_NAMES + [prefix]

79 |

80 | print (prefix)

81 | print (tr.shape, te.shape)

82 | print (tr.head())

83 |

84 | tr = tr[prefix]

85 | te = te[prefix]

86 |

87 | return tr, te

88 |

89 | def output_fea(tr, te):

90 | print (tr.head())

91 | print (te.head())

92 |

93 | loader.save_df(tr, tr_fea_out_path)

94 | loader.save_df(te, te_fea_out_path)

95 |

96 | # 生成特征

97 | def gen_fea(base_tr_path=None, base_te_path=None):

98 |

99 | tr_sample = loader.load_df('../../feat/tr_s0_37.ftr')

100 | te_sample = loader.load_df('../../feat/te_s0_37.ftr')

101 |

102 | prefixs = ['m1_cat_03', 'm1_infesent_simple', 'm1_nn_02', \

103 | 'm2_ESIM_001', 'm2_ESIMplus_001', 'lgb_m3_37-0']

104 |

105 | tr_paths = ['{}_tr.ftr'.format(prefix) for prefix in prefixs]

106 | te_paths = ['final_{}_te.ftr'.format(prefix) for prefix in prefixs]

107 |

108 | tr_paths = ['../../stk_feat/{}'.format(p) for p in tr_paths]

109 | te_paths = ['../../stk_feat/{}'.format(p) for p in te_paths]

110 |

111 |

112 | trs, tes = [], []

113 | for i, prefix in enumerate(prefixs):

114 | tr, te = feat_extract(tr_paths[i], te_paths[i], prefix + '_prob')

115 | trs.append(tr)

116 | tes.append(te)

117 | tr = pd.concat([tr_sample[ID_NAMES]] + trs, axis=1)

118 | te = pd.concat([te_sample[ID_NAMES]] + tes, axis=1)

119 |

120 | float_cols = [c for c in tr.columns if tr[c].dtype == 'float']

121 | tr[float_cols] = tr[float_cols].astype('float32')

122 | te[float_cols] = te[float_cols].astype('float32')

123 |

124 | print (tr.shape, te.shape)

125 | print (tr.head())

126 | print (te.head())

127 | print (tr.columns)

128 |

129 | output_fea(tr, te)

130 |

131 | # merge 已有特征

132 | def merge_fea(tr_list, te_list):

133 | tr = loader.merge_fea(tr_list, primary_keys=ID_NAMES)

134 | te = loader.merge_fea(te_list, primary_keys=ID_NAMES)

135 |

136 | print (tr.head())

137 | print (te.head())

138 | print (tr.columns.tolist())

139 |

140 | loader.save_df(tr, tr_out_path)

141 | loader.save_df(te, te_out_path)

142 |

143 | if __name__ == "__main__":

144 |

145 | print('start time: %s' % datetime.now())

146 | root_path = '../../feat/'

147 | base_tr_path = root_path + 'tr_s0_37.ftr'

148 | base_te_path = root_path + 'te_s0_37.ftr'

149 |

150 | gen_fea()

151 |

152 | # merge fea

153 | prefix = 's0'

154 | fea_list = [FEA_NUM]

155 |

156 | tr_list = [base_tr_path] + \

157 | [root_path + 'tr_fea_{}_{}.ftr'.format(prefix, i) for i in fea_list]

158 | te_list = [base_te_path] + \

159 | [root_path + 'te_fea_{}_{}.ftr'.format(prefix, i) for i in fea_list]

160 |

161 | merge_fea(tr_list, te_list)

162 |

163 | print('all completed: %s' % datetime.now())

164 |

165 |

166 |

167 |

--------------------------------------------------------------------------------

/src/feature/gen_samples.py:

--------------------------------------------------------------------------------

1 | #!/usr/bin/env python

2 | #coding=utf-8

3 |

4 | import warnings

5 | warnings.filterwarnings('always')

6 | warnings.filterwarnings('ignore')

7 |

8 | # 基础模块

9 | import os

10 | import sys

11 | import time

12 | from datetime import datetime

13 | from tqdm import tqdm

14 |

15 | # 数据处理

16 | import numpy as np

17 | import pandas as pd

18 |

19 | # 自定义工具包

20 | sys.path.append('../../tools/')

21 | import loader

22 | import pandas_util

23 |

24 | # 开源工具包

25 | from gensim.models import Word2Vec

26 | from gensim.models.word2vec import LineSentence

27 | from gensim import corpora, models, similarities

28 | from gensim.similarities import SparseMatrixSimilarity

29 | from sklearn.metrics.pairwise import cosine_similarity as cos_sim

30 |

31 | # 设置随机种子

32 | SEED = 2020

33 |

34 | def topk_lines(df, k):

35 | df.loc[:, 'rank'] = df.groupby(['description_id']).cumcount().values

36 | df = df[df['rank'] < k]

37 | df.drop(['rank'], axis=1, inplace=True)

38 | return df

39 |

40 | def process(in_path, k):

41 | ID_NAMES = ['description_id', 'paper_id']

42 |

43 | df = loader.load_df(in_path)

44 | df = topk_lines(df, k)

45 | df['sim_score'] = df['sim_score'].astype('float')

46 | df.rename(columns={'sim_score': 'corp_sim_score'}, inplace=True)

47 | return df

48 |

49 |

50 | if __name__ == "__main__":

51 |

52 | ts = time.time()

53 | tr_path = '../../feat/tr_tfidf_30.ftr'

54 | te_path = '../../feat/te_tfidf_30.ftr'

55 |

56 | cv = loader.load_df('../../input/cv_ids_0109.csv')[['description_id', 'cv']]

57 |

58 | tr = process(tr_path, k=50)

59 | tr = tr.merge(cv, on=['description_id'], how='left')

60 |

61 | te = process(te_path, k=50)

62 | te['cv'] = 0

63 |

64 | loader.save_df(tr, '../../feat/tr_samples_30-50.ftr')

65 | loader.save_df(te, '../../feat/te_samples_30-50.ftr')

66 | print('all completed: {}, cost {}s'.format(datetime.now(), np.round(time.time() - ts, 2)))

67 |

68 |

69 |

70 |

71 |

72 |

--------------------------------------------------------------------------------

/src/feature/tfidf_recall_30.py:

--------------------------------------------------------------------------------

1 | #!/usr/bin/env python

2 | #coding=utf-8

3 |

4 | # bm25 recall

5 |

6 | # 基础模块

7 | import os

8 | import gc

9 | import sys

10 | import time

11 | import functools

12 | from tqdm import tqdm

13 | from six import iteritems

14 | from datetime import datetime

15 |

16 | # 数据处理

17 | import re

18 | import math

19 | import pickle

20 | import numpy as np

21 | import pandas as pd

22 | from multiprocessing import Pool

23 |

24 | # 自定义工具包

25 | sys.path.append('../../tools/')

26 | import loader

27 | import pandas_util

28 | import custom_bm25 as bm25

29 |

30 | # 开源工具包

31 | from gensim.models import Word2Vec

32 | from gensim.models.word2vec import LineSentence

33 | from gensim import corpora, models, similarities

34 | from gensim.similarities import SparseMatrixSimilarity

35 | from sklearn.metrics.pairwise import cosine_similarity as cos_sim

36 |

37 | # 设置随机种子

38 | SEED = 2020

39 | PROCESS_NUM, PARTITION_NUM = 18, 18

40 |

41 | input_root_path = '../../input/'

42 | output_root_path = '../../feat/'

43 |

44 | postfix = '30'

45 | file_type = 'ftr'

46 |

47 | train_out_path = output_root_path + 'tr_tfidf_{}.{}'.format(postfix, file_type)

48 | test_out_path = output_root_path + 'te_tfidf_{}.{}'.format(postfix, file_type)

49 |

50 | def topk_sim_samples(desc, desc_ids, paper_ids, bm25_model, k=10):

51 | desc_id2papers = {}

52 | for desc_i in tqdm(range(len(desc))):

53 | query_vec, query_desc_id = desc[desc_i], desc_ids[desc_i]

54 | sims = bm25_model.get_scores(query_vec)

55 | sort_sims = sorted(enumerate(sims), key=lambda item: -item[1])

56 | sim_papers = [paper_ids[val[0]] for val in sort_sims[:k]]

57 | sim_scores = [str(val[1]) for val in sort_sims[:k]]

58 | desc_id2papers[query_desc_id] = ['|'.join(sim_papers), '|'.join(sim_scores)]

59 | sim_df = pd.DataFrame.from_dict(desc_id2papers, orient='index', columns=['paper_id', 'sim_score'])

60 | sim_df = sim_df.reset_index().rename(columns={'index':'description_id'})

61 | return sim_df

62 |

63 | def partition(queries, num):

64 | queries_partitions, step = [], int(np.ceil(len(queries)/num))

65 | for i in range(0, len(queries), step):

66 | queries_partitions.append(queries[i:i+step])

67 | return queries_partitions

68 |

69 | def single_process_search(params=None):

70 | (query_vecs, desc_ids, paper_ids, bm25_model, k, i) = params

71 | print (i, 'start', datetime.now())

72 | gc.collect()

73 | sim_df = topk_sim_samples(query_vecs, desc_ids, paper_ids, bm25_model, k)

74 | print (i, 'completed', datetime.now())

75 | return sim_df

76 |

77 | def multi_process_search(query_vecs, desc_ids, paper_ids, bm25_model, k):

78 | pool = Pool(PROCESS_NUM)

79 | queries_parts = partition(query_vecs, PARTITION_NUM)

80 | desc_ids_parts = partition(desc_ids, PARTITION_NUM)

81 | print ('{} processes init and partition to {} parts' \

82 | .format(PROCESS_NUM, PARTITION_NUM))

83 |

84 | param_list = [(queries_parts[i], desc_ids_parts[i], \

85 | paper_ids, bm25_model, k, i) for i in range(PARTITION_NUM)]

86 | sim_dfs = pool.map(single_process_search, param_list)

87 | sim_df = pd.concat(sim_dfs, axis=0)

88 | return sim_df

89 |

90 | def gen_samples(df, desc, desc_ids, corpus_list, paper_ids_list, k):

91 | df_samples_list = []

92 | for i, corpus in enumerate(corpus_list):

93 | bm25_model = bm25.BM25(corpus[0])

94 | cur_df_sample = multi_process_search(desc, desc_ids, \

95 | paper_ids_list[i], bm25_model, k)

96 | cur_df_sample_out = pandas_util.explode(cur_df_sample, ['paper_id', 'sim_score'])

97 | cur_df_sample_out['type'] = corpus[1] # recall_name

98 | df_samples_list.append(cur_df_sample_out)

99 | df_samples = pd.concat(df_samples_list, axis=0)

100 | df_samples.drop_duplicates(subset=['description_id', 'paper_id'], inplace=True)

101 | df_samples['target'] = 0

102 | return df_samples

103 |

104 | if __name__ == "__main__":

105 |

106 | ts = time.time()

107 | tqdm.pandas()

108 | print('start time: %s' % datetime.now())

109 | # load data

110 | df = loader.load_df(input_root_path + 'paper_input_final.ftr')

111 | df = df[~pd.isnull(df['paper_id'])]

112 |

113 | # gen tfidf vecs

114 | dictionary = pickle.load(open('../../feat/corpus.dict', 'rb'))

115 | print ('dic len', len(dictionary))

116 |

117 | df['corp'] = df['abst'] + ' ' + df['titl'] + ' ' + df['keywords'].fillna('').replace(';', ' ')

118 | df_corp, corp_paper_ids = [dictionary.doc2bow(line.split(' ')) for line in df['corp'].tolist()], \

119 | df['paper_id'].tolist()

120 |

121 | # gen topk sim samples

122 | paper_ids_list = [corp_paper_ids]

123 | corpus_list = [(df_corp, 'corp_bm25')]

124 | out_cols = ['description_id', 'paper_id', 'sim_score', 'target', 'type']

125 |

126 | if sys.argv[1] in ['tr']:

127 | # for tr ins

128 | tr = loader.load_df(input_root_path + 'tr_input_final.ftr')

129 | tr = tr[~pd.isnull(tr['description_id'])]

130 |

131 | # tr = tr.head(1000)

132 | tr_desc, tr_desc_ids = [dictionary.doc2bow(line.split(' ')) for line in tr['quer_all'].tolist()], \

133 | tr['description_id'].tolist()

134 | print ('gen tf completed, cost {}s'.format(np.round(time.time() - ts, 2)))

135 |

136 | tr_samples = gen_samples(tr, tr_desc, tr_desc_ids, \

137 | corpus_list, paper_ids_list, k=50)

138 | tr_samples = tr.rename(columns={'paper_id': 'target_paper_id'}) \

139 | .merge(tr_samples, on='description_id', how='left')

140 | tr_samples.loc[tr_samples['target_paper_id'] == tr_samples['paper_id'], 'target'] = 1

141 | loader.save_df(tr_samples[out_cols], train_out_path)

142 | print ('recall succ {} from {}'.format(tr_samples['target'].sum(), tr.shape[0]))

143 | print (tr.shape, tr_samples.shape)

144 |

145 | if sys.argv[1] in ['te']:

146 | # for te ins

147 | te = loader.load_df(input_root_path + 'te_input_final.ftr')

148 | te = te[~pd.isnull(te['description_id'])]

149 |

150 | # te = te.head(1000)

151 | te_desc, te_desc_ids = [dictionary.doc2bow(line.split(' ')) for line in te['quer_all'].tolist()], \

152 | te['description_id'].tolist()

153 | print ('gen tf completed, cost {}s'.format(np.round(time.time() - ts, 2)))

154 |

155 | te_samples = gen_samples(te, te_desc, te_desc_ids, \

156 | corpus_list, paper_ids_list, k=50)

157 | te_samples = te.merge(te_samples, on='description_id', how='left')

158 | loader.save_df(te_samples[out_cols], test_out_path)

159 | print (te.shape, te_samples.shape)

160 |

161 | print('all completed: {}, cost {}s'.format(datetime.now(), np.round(time.time() - ts, 2)))

162 |

163 |

164 |

165 |

--------------------------------------------------------------------------------

/src/rank/m1/catboost03.py:

--------------------------------------------------------------------------------

1 |

2 | # coding: utf-8

3 |

4 | # In[1]:

5 |

6 |

7 | import numpy as np

8 | import pandas as pd

9 | import datetime

10 | from catboost import CatBoostClassifier

11 | from time import time

12 | from tqdm import tqdm_notebook as tqdm

13 |

14 |

15 | # In[2]:

16 |

17 |

18 | feat_dir = "../../../feat/"

19 | input_dir = "../../../input/"

20 | cv_id = pd.read_csv("../../../input/cv_ids_0109.csv")

21 |

22 |

23 | # In[3]:

24 |

25 |

26 | train = pd.read_feather(f'{feat_dir}/tr_s0_32-50.ftr')

27 | train.drop(columns=['cv'],axis=1,inplace=True)

28 | train = train.merge(cv_id,on=['description_id'],how='left')

29 | train = train.dropna(subset=['cv']).reset_index(drop=True)

30 | # test = pd.read_feather(f'{feat_dir}/te_s0_20-50.ftr')

31 | test = pd.read_feather(f'{feat_dir}/te_s0_32-50.ftr')

32 |

33 |

34 | # In[4]:

35 |

36 |

37 | ID_NAMES = ['description_id', 'paper_id']

38 | TARGET_NAME = 'target'

39 |

40 |

41 | # In[5]:

42 |

43 |

44 | def get_feas(data):

45 | cols = data.columns.tolist()

46 | del_cols = ID_NAMES + ['target', 'cv']

47 | #sub_cols = ['year', 'corp_cos', 'corp_eucl', 'corp_manh', 'quer_all']

48 | sub_cols = ['year', 'corp_sim_score']

49 | sub_cols = ['year', 'pos_of_corp', 'pos_of_abst', 'pos_of_titl']

50 | for col in data.columns:

51 | for sub_col in sub_cols:

52 | if sub_col in col:

53 | del_cols.append(col)

54 |

55 | cols = [val for val in cols if val not in del_cols]

56 | print ('del_cols', del_cols)

57 | return cols

58 |

59 |

60 | # In[6]:

61 |

62 |

63 | feas = get_feas(train)

64 |

65 |

66 | # In[7]:

67 |

68 |

69 | def make_classifier():

70 | clf = CatBoostClassifier(

71 | loss_function='Logloss',

72 | eval_metric="AUC",

73 | # task_type="CPU",

74 | learning_rate=0.1, ###0.01

75 | iterations=2500, ###2000

76 | od_type="Iter",

77 | # depth=8,

78 | thread_count=10,

79 | early_stopping_rounds=100, ###100

80 | # l2_leaf_reg=1,

81 | # border_count=96,

82 | random_seed=42

83 | )

84 |

85 | return clf

86 |

87 |

88 | # In[8]:

89 |

90 |

91 | # 开源工具包

92 | import ml_metrics as metrics

93 | def cal_map(pred_valid,cv,train_df,tr_data):

94 | df_pred = train_df[train_df['cv']==cv].copy()

95 | df_pred['pred'] = pred_valid

96 | df_pred = df_pred[['description_id','paper_id','pred']]

97 | sort_df_pred = df_pred.sort_values(['description_id', 'pred'], ascending=False)

98 | df_pred = df_pred[['description_id']].drop_duplicates() .merge(sort_df_pred, on=['description_id'], how='left')

99 | df_pred['rank'] = df_pred.groupby('description_id').cumcount().values

100 | df_pred = df_pred[df_pred['rank'] < 3]

101 | df_pred = df_pred.groupby(['description_id'])['paper_id'] .apply(lambda s : ','.join((s))).reset_index()

102 | df_pred = df_pred.merge(tr_data, on=['description_id'], how='left')

103 | df_pred.rename(columns={'paper_id': 'paper_ids'}, inplace=True)

104 | df_pred['paper_ids'] = df_pred['paper_ids'].apply(lambda s: s.split(','))

105 | df_pred['target_id'] = df_pred['target_id'].apply(lambda s: [s])

106 | return metrics.mapk(df_pred['target_id'].tolist(), df_pred['paper_ids'].tolist(), 3)

107 |

108 |

109 | # In[9]:

110 |

111 |

112 | import os

113 | model_dir = "./m1_model/catboost03"

114 | if not os.path.exists(model_dir):

115 | os.makedirs(model_dir)

116 |

117 |

118 | # In[10]:

119 |

120 |

121 | tr_data = pd.read_csv(f'{input_dir}/train_release.csv')

122 | tr_data = tr_data[['description_id', 'paper_id']].rename(columns={'paper_id': 'target_id'})

123 |

124 |

125 | # In[13]:

126 |

127 |

128 | for fea in feas:

129 | if fea not in test.columns:

130 | print(fea)

131 |

132 |

133 | # In[14]:

134 |

135 |

136 | CV_RESULT_OUT=True

137 |

138 |

139 | # In[15]:

140 |

141 |

142 | def train_one_fold(type_train_df,type_test_df,model_dir,cv,pi=False):

143 | print(" fold " + str(cv))

144 | train_data = type_train_df[(type_train_df['cv']!=cv)]

145 | valid_data = type_train_df[(type_train_df['cv']==cv)]

146 |

147 | des_id = valid_data['description_id']

148 | paper_id = valid_data['paper_id']

149 |

150 | idx_train = train_data.index

151 | idx_val = valid_data.index

152 | des_id = valid_data['description_id']

153 | paper_id = valid_data['paper_id']

154 | model_name = "fold_{}_cbt_best.model".format(str(cv))

155 | model_name_wrt = os.path.join(model_dir,model_name)

156 | clf = make_classifier()

157 | imp=pd.DataFrame()

158 | if not os.path.exists(model_name_wrt):

159 | clf.fit(train_data[feas], train_data[['target']], eval_set=(valid_data[feas],valid_data[['target']]),

160 | use_best_model=True, verbose=100)

161 | clf.save_model(model_name_wrt)

162 | fea_ = clf.feature_importances_

163 | fea_name = clf.feature_names_

164 | imp = pd.DataFrame({'name':fea_name,'imp':fea_})

165 | else:

166 | clf.load_model(model_name_wrt)

167 | cv_predict=clf.predict_proba(valid_data[feas])[:,1]

168 | # print(cv_predict.shape)

169 | cv_score_fold = cal_map(cv_predict,cv,type_train_df,tr_data)

170 | if CV_RESULT_OUT:

171 | cv_preds = cv_predict

172 | rdf = pd.DataFrame()

173 | rdf = rdf.reindex(columns=['description_id','paper_id','pred'])

174 | rdf['description_id'] = des_id

175 | rdf['paper_id'] = paper_id

176 | rdf['pred'] = cv_preds

177 | test_des_id = type_test_df['description_id']

178 | test_paper_id = type_test_df['paper_id']

179 | test_preds = clf.predict_proba(type_test_df[feas])[:,1]

180 | test_df = pd.DataFrame()

181 | test_df = test_df.reindex(columns=['description_id','paper_id','pred'])

182 | test_df['description_id'] = test_des_id

183 | test_df['paper_id'] = test_paper_id

184 | test_df['pred'] = test_preds

185 | return rdf,test_df,cv_score_fold,imp

186 |

187 |

188 | # In[16]:

189 |

190 |

191 | kfold = 5

192 | type_scores = []

193 | type_cv_results = []

194 | type_test_results = []

195 | model_name = '../../../output/m1/catboost03/'

196 | fold_scores = []

197 | fold_cv_results = []

198 | fold_test_results = []

199 | imps=[]

200 | # test_preds = np.zeros(len(test))

201 | for cv in range(1,kfold+1):#####这里是因为cv是1~5

202 | cv_df,test_df,cv_score,imp = train_one_fold(train,test,model_dir,cv)

203 | # fold_cv_results.append(cv_df)

204 | # fold_test_results.append(test_df)

205 | cv_df.to_csv(f"{model_name}_cv_{cv}.csv",index=False)

206 | test_df.to_csv(f"{model_name}_result_{cv}.csv",index=False)

207 | imp.to_csv(f"{model_name}_imp_{cv}.csv",index=False)

208 | print("fold {} finished".format(cv))

209 | print(cv_score)

210 | fold_scores.append(cv_score)

211 | imps.append(imp)

212 |

213 |

214 | # In[1]:

215 |

216 |

217 | np.mean(fold_scores)

218 |

219 | #0.35309347230573923

220 | #0.3522860689007414

221 | #0.3585175465159315

222 | #0.35720084429290466

223 | #0.34729405401751007

224 |

225 |

226 | # In[ ]:

227 |

228 |

229 | result = []

230 | for i in range(1,6):

231 | re_csv = f"{model_name}_result_{i}.csv"

232 | test_df = pd.read_csv(re_csv)

233 | result.append(test_df)

234 |

235 |

236 | # In[ ]:

237 |

238 |

239 | final_test = result[0].copy()

240 |

241 |

242 | # In[ ]:

243 |

244 |

245 | for i in range(1,5):

246 | final_test['pred']+=result[i]['pred']

247 |

248 |

249 | # In[ ]:

250 |

251 |

252 | final_test['pred'] = final_test['pred']/5

253 |

254 |

255 | # In[ ]:

256 |

257 |

258 | final_test.to_csv("../../../output/m1/nn02/te_catboost03newtest.csv",index=False)

259 |

260 |

--------------------------------------------------------------------------------

/src/rank/m1/glove/.gitignore:

--------------------------------------------------------------------------------

1 | # Object files

2 | *.o

3 | *.ko

4 | *.obj

5 | *.elf

6 |

7 | # Precompiled Headers

8 | *.gch

9 | *.pch

10 |

11 | # Libraries

12 | *.lib

13 | *.a

14 | *.la

15 | *.lo

16 |

17 | # Shared objects (inc. Windows DLLs)

18 | *.dll

19 | *.so

20 | *.so.*

21 | *.dylib

22 |

23 | # Executables

24 | *.exe

25 | *.out

26 | *.app

27 | *.i*86

28 | *.x86_64

29 | *.hex

30 |

31 | # Debug files

32 | *.dSYM/

33 |

34 |

35 | build/*

36 | *.swp

37 |

38 | # OS X stuff

39 | ._*

40 |

--------------------------------------------------------------------------------

/src/rank/m1/glove/.travis.yml:

--------------------------------------------------------------------------------

1 | language: c

2 | dist: trusty

3 | sudo: required

4 | before_install:

5 | - sudo apt-get install python2.7 python-numpy python-pip

6 | script: pip install numpy && ./demo.sh | tee results.txt && [[ `cat results.txt | egrep "Total accuracy. 2[23]" | wc -l` = "1" ]] && echo test-passed

7 |

--------------------------------------------------------------------------------

/src/rank/m1/glove/Makefile:

--------------------------------------------------------------------------------

1 | CC = gcc

2 | #For older gcc, use -O3 or -O2 instead of -Ofast

3 | # CFLAGS = -lm -pthread -Ofast -march=native -funroll-loops -Wno-unused-result

4 | CFLAGS = -lm -pthread -Ofast -march=native -funroll-loops -Wall -Wextra -Wpedantic

5 | BUILDDIR := build

6 | SRCDIR := src

7 |

8 | all: dir glove shuffle cooccur vocab_count

9 |

10 | dir :

11 | mkdir -p $(BUILDDIR)

12 | glove : $(SRCDIR)/glove.c

13 | $(CC) $(SRCDIR)/glove.c -o $(BUILDDIR)/glove $(CFLAGS)

14 | shuffle : $(SRCDIR)/shuffle.c

15 | $(CC) $(SRCDIR)/shuffle.c -o $(BUILDDIR)/shuffle $(CFLAGS)

16 | cooccur : $(SRCDIR)/cooccur.c

17 | $(CC) $(SRCDIR)/cooccur.c -o $(BUILDDIR)/cooccur $(CFLAGS)

18 | vocab_count : $(SRCDIR)/vocab_count.c

19 | $(CC) $(SRCDIR)/vocab_count.c -o $(BUILDDIR)/vocab_count $(CFLAGS)

20 |

21 | clean:

22 | rm -rf glove shuffle cooccur vocab_count build

23 |

--------------------------------------------------------------------------------

/src/rank/m1/glove/README.md:

--------------------------------------------------------------------------------

1 | ## GloVe: Global Vectors for Word Representation

2 |

3 |

4 | | nearest neighbors of

frog | Litoria | Leptodactylidae | Rana | Eleutherodactylus |

5 | | --- | ------------------------------- | ------------------- | ---------------- | ------------------- |

6 | | Pictures |  |

|  |

|  |

|  |

7 |

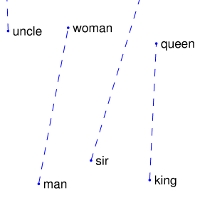

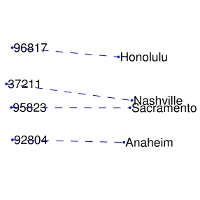

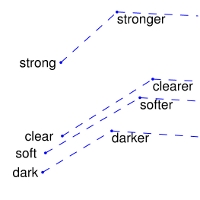

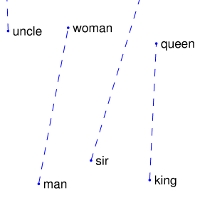

8 | | Comparisons | man -> woman | city -> zip | comparative -> superlative |

9 | | --- | ------------------------|-------------------------|-------------------------|

10 | | GloVe Geometry |

|

7 |

8 | | Comparisons | man -> woman | city -> zip | comparative -> superlative |

9 | | --- | ------------------------|-------------------------|-------------------------|

10 | | GloVe Geometry |  |

|  |

|  |

11 |

12 | We provide an implementation of the GloVe model for learning word representations, and describe how to download web-dataset vectors or train your own. See the [project page](http://nlp.stanford.edu/projects/glove/) or the [paper](http://nlp.stanford.edu/pubs/glove.pdf) for more information on glove vectors.

13 |

14 | ## Download pre-trained word vectors

15 | The links below contain word vectors obtained from the respective corpora. If you want word vectors trained on massive web datasets, you need only download one of these text files! Pre-trained word vectors are made available under the Public Domain Dedication and License.

16 |

|

11 |

12 | We provide an implementation of the GloVe model for learning word representations, and describe how to download web-dataset vectors or train your own. See the [project page](http://nlp.stanford.edu/projects/glove/) or the [paper](http://nlp.stanford.edu/pubs/glove.pdf) for more information on glove vectors.

13 |

14 | ## Download pre-trained word vectors

15 | The links below contain word vectors obtained from the respective corpora. If you want word vectors trained on massive web datasets, you need only download one of these text files! Pre-trained word vectors are made available under the Public Domain Dedication and License.

16 |

17 |

18 | - Common Crawl (42B tokens, 1.9M vocab, uncased, 300d vectors, 1.75 GB download): glove.42B.300d.zip

19 | - Common Crawl (840B tokens, 2.2M vocab, cased, 300d vectors, 2.03 GB download): glove.840B.300d.zip

20 | - Wikipedia 2014 + Gigaword 5 (6B tokens, 400K vocab, uncased, 300d vectors, 822 MB download): glove.6B.zip

21 | - Twitter (2B tweets, 27B tokens, 1.2M vocab, uncased, 200d vectors, 1.42 GB download): glove.twitter.27B.zip

22 |

23 |

28 |

29 | If the web datasets above don't match the semantics of your end use case, you can train word vectors on your own corpus.

30 |

31 | $ git clone http://github.com/stanfordnlp/glove

32 | $ cd glove && make

33 | $ ./demo.sh

34 |

35 | The demo.sh script downloads a small corpus, consisting of the first 100M characters of Wikipedia. It collects unigram counts, constructs and shuffles cooccurrence data, and trains a simple version of the GloVe model. It also runs a word analogy evaluation script in python to verify word vector quality. More details about training on your own corpus can be found by reading [demo.sh](https://github.com/stanfordnlp/GloVe/blob/master/demo.sh) or the [src/README.md](https://github.com/stanfordnlp/GloVe/tree/master/src)

36 |

37 | ### License

38 | All work contained in this package is licensed under the Apache License, Version 2.0. See the include LICENSE file.

39 |

--------------------------------------------------------------------------------

/src/rank/m1/glove/demo.sh:

--------------------------------------------------------------------------------

1 | #!/bin/bash

2 | set -e

3 |

4 | # Makes programs, downloads sample data, trains a GloVe model, and then evaluates it.

5 | # One optional argument can specify the language used for eval script: matlab, octave or [default] python

6 |

7 | make

8 | if [ ! -e text8 ]; then

9 | if hash wget 2>/dev/null; then

10 | wget http://mattmahoney.net/dc/text8.zip

11 | else

12 | curl -O http://mattmahoney.net/dc/text8.zip

13 | fi

14 | unzip text8.zip

15 | rm text8.zip

16 | fi

17 |

18 | CORPUS=../corpus.txt

19 | VOCAB_FILE=vocab.txt

20 | COOCCURRENCE_FILE=cooccurrence.bin

21 | COOCCURRENCE_SHUF_FILE=cooccurrence.shuf.bin

22 | BUILDDIR=build

23 | SAVE_FILE=vectors

24 | VERBOSE=2

25 | MEMORY=4.0

26 | VOCAB_MIN_COUNT=2

27 | VECTOR_SIZE=300

28 | MAX_ITER=15

29 | WINDOW_SIZE=15

30 | BINARY=2

31 | NUM_THREADS=8

32 | X_MAX=10

33 |

34 | echo

35 | echo "$ $BUILDDIR/vocab_count -min-count $VOCAB_MIN_COUNT -verbose $VERBOSE < $CORPUS > $VOCAB_FILE"

36 | $BUILDDIR/vocab_count -min-count $VOCAB_MIN_COUNT -verbose $VERBOSE < $CORPUS > $VOCAB_FILE

37 | echo "$ $BUILDDIR/cooccur -memory $MEMORY -vocab-file $VOCAB_FILE -verbose $VERBOSE -window-size $WINDOW_SIZE < $CORPUS > $COOCCURRENCE_FILE"

38 | $BUILDDIR/cooccur -memory $MEMORY -vocab-file $VOCAB_FILE -verbose $VERBOSE -window-size $WINDOW_SIZE < $CORPUS > $COOCCURRENCE_FILE

39 | echo "$ $BUILDDIR/shuffle -memory $MEMORY -verbose $VERBOSE < $COOCCURRENCE_FILE > $COOCCURRENCE_SHUF_FILE"

40 | $BUILDDIR/shuffle -memory $MEMORY -verbose $VERBOSE < $COOCCURRENCE_FILE > $COOCCURRENCE_SHUF_FILE

41 | echo "$ $BUILDDIR/glove -save-file $SAVE_FILE -threads $NUM_THREADS -input-file $COOCCURRENCE_SHUF_FILE -x-max $X_MAX -iter $MAX_ITER -vector-size $VECTOR_SIZE -binary $BINARY -vocab-file $VOCAB_FILE -verbose $VERBOSE"

42 | $BUILDDIR/glove -save-file $SAVE_FILE -threads $NUM_THREADS -input-file $COOCCURRENCE_SHUF_FILE -x-max $X_MAX -iter $MAX_ITER -vector-size $VECTOR_SIZE -binary $BINARY -vocab-file $VOCAB_FILE -verbose $VERBOSE

43 | if [ "$CORPUS" = 'text8' ]; then

44 | if [ "$1" = 'matlab' ]; then

45 | matlab -nodisplay -nodesktop -nojvm -nosplash < ./eval/matlab/read_and_evaluate.m 1>&2

46 | elif [ "$1" = 'octave' ]; then

47 | octave < ./eval/octave/read_and_evaluate_octave.m 1>&2

48 | else

49 | echo "$ python eval/python/evaluate.py"

50 | python eval/python/evaluate.py

51 | fi

52 | fi

53 |

--------------------------------------------------------------------------------

/src/rank/m1/glove/eval/matlab/WordLookup.m:

--------------------------------------------------------------------------------

1 | function index = WordLookup(InputString)

2 | global wordMap

3 | if wordMap.isKey(InputString)

4 | index = wordMap(InputString);

5 | elseif wordMap.isKey('')

6 | index = wordMap('');

7 | else

8 | index = 0;

9 | end

10 |

--------------------------------------------------------------------------------

/src/rank/m1/glove/eval/matlab/evaluate_vectors.m:

--------------------------------------------------------------------------------

1 | function [BB] = evaluate_vectors(W)

2 |

3 | global wordMap

4 |

5 | filenames = {'capital-common-countries' 'capital-world' 'currency' 'city-in-state' 'family' 'gram1-adjective-to-adverb' ...

6 | 'gram2-opposite' 'gram3-comparative' 'gram4-superlative' 'gram5-present-participle' 'gram6-nationality-adjective' ...

7 | 'gram7-past-tense' 'gram8-plural' 'gram9-plural-verbs'};

8 | path = './eval/question-data/';

9 |

10 | split_size = 100; %to avoid memory overflow, could be increased/decreased depending on system and vocab size

11 |

12 | correct_sem = 0; %count correct semantic questions

13 | correct_syn = 0; %count correct syntactic questions

14 | correct_tot = 0; %count correct questions

15 | count_sem = 0; %count all semantic questions

16 | count_syn = 0; %count all syntactic questions

17 | count_tot = 0; %count all questions

18 | full_count = 0; %count all questions, including those with unknown words

19 |

20 | if wordMap.isKey('')

21 | unkkey = wordMap('');

22 | else

23 | unkkey = 0;

24 | end

25 |

26 | for j=1:length(filenames);

27 |

28 | clear dist;

29 |

30 | fid=fopen([path filenames{j} '.txt']);

31 | temp=textscan(fid,'%s%s%s%s');

32 | fclose(fid);

33 | ind1 = cellfun(@WordLookup,temp{1}); %indices of first word in analogy

34 | ind2 = cellfun(@WordLookup,temp{2}); %indices of second word in analogy

35 | ind3 = cellfun(@WordLookup,temp{3}); %indices of third word in analogy

36 | ind4 = cellfun(@WordLookup,temp{4}); %indices of answer word in analogy

37 | full_count = full_count + length(ind1);

38 | ind = (ind1 ~= unkkey) & (ind2 ~= unkkey) & (ind3 ~= unkkey) & (ind4 ~= unkkey); %only look at those questions which have no unknown words

39 | ind1 = ind1(ind);

40 | ind2 = ind2(ind);

41 | ind3 = ind3(ind);

42 | ind4 = ind4(ind);

43 | disp([filenames{j} ':']);

44 | mx = zeros(1,length(ind1));

45 | num_iter = ceil(length(ind1)/split_size);

46 | for jj=1:num_iter

47 | range = (jj-1)*split_size+1:min(jj*split_size,length(ind1));

48 | dist = full(W * (W(ind2(range),:)' - W(ind1(range),:)' + W(ind3(range),:)')); %cosine similarity if input W has been normalized

49 | for i=1:length(range)

50 | dist(ind1(range(i)),i) = -Inf;

51 | dist(ind2(range(i)),i) = -Inf;

52 | dist(ind3(range(i)),i) = -Inf;

53 | end

54 | [~, mx(range)] = max(dist); %predicted word index

55 | end

56 |

57 | val = (ind4 == mx'); %correct predictions

58 | count_tot = count_tot + length(ind1);

59 | correct_tot = correct_tot + sum(val);

60 | disp(['ACCURACY TOP1: ' num2str(mean(val)*100,'%-2.2f') '% (' num2str(sum(val)) '/' num2str(length(val)) ')']);

61 | if j < 6

62 | count_sem = count_sem + length(ind1);

63 | correct_sem = correct_sem + sum(val);

64 | else

65 | count_syn = count_syn + length(ind1);

66 | correct_syn = correct_syn + sum(val);

67 | end

68 |

69 | disp(['Total accuracy: ' num2str(100*correct_tot/count_tot,'%-2.2f') '% Semantic accuracy: ' num2str(100*correct_sem/count_sem,'%-2.2f') '% Syntactic accuracy: ' num2str(100*correct_syn/count_syn,'%-2.2f') '%']);

70 |

71 | end

72 | disp('________________________________________________________________________________');

73 | disp(['Questions seen/total: ' num2str(100*count_tot/full_count,'%-2.2f') '% (' num2str(count_tot) '/' num2str(full_count) ')']);

74 | disp(['Semantic Accuracy: ' num2str(100*correct_sem/count_sem,'%-2.2f') '% (' num2str(correct_sem) '/' num2str(count_sem) ')']);

75 | disp(['Syntactic Accuracy: ' num2str(100*correct_syn/count_syn,'%-2.2f') '% (' num2str(correct_syn) '/' num2str(count_syn) ')']);

76 | disp(['Total Accuracy: ' num2str(100*correct_tot/count_tot,'%-2.2f') '% (' num2str(correct_tot) '/' num2str(count_tot) ')']);

77 | BB = [100*correct_sem/count_sem 100*correct_syn/count_syn 100*correct_tot/count_tot];

78 |

79 | end

80 |

--------------------------------------------------------------------------------

/src/rank/m1/glove/eval/matlab/read_and_evaluate.m:

--------------------------------------------------------------------------------

1 | addpath('./eval/matlab');

2 | if(~exist('vocab_file'))

3 | vocab_file = 'vocab.txt';

4 | end

5 | if(~exist('vectors_file'))

6 | vectors_file = 'vectors.bin';

7 | end

8 |

9 | fid = fopen(vocab_file, 'r');

10 | words = textscan(fid, '%s %f');

11 | fclose(fid);

12 | words = words{1};

13 | vocab_size = length(words);

14 | global wordMap

15 | wordMap = containers.Map(words(1:vocab_size),1:vocab_size);

16 |

17 | fid = fopen(vectors_file,'r');

18 | fseek(fid,0,'eof');

19 | vector_size = ftell(fid)/16/vocab_size - 1;

20 | frewind(fid);

21 | WW = fread(fid, [vector_size+1 2*vocab_size], 'double')';

22 | fclose(fid);

23 |

24 | W1 = WW(1:vocab_size, 1:vector_size); % word vectors

25 | W2 = WW(vocab_size+1:end, 1:vector_size); % context (tilde) word vectors

26 |

27 | W = W1 + W2; %Evaluate on sum of word vectors

28 | W = bsxfun(@rdivide,W,sqrt(sum(W.*W,2))); %normalize vectors before evaluation

29 | evaluate_vectors(W);

30 | exit

31 |

32 |

--------------------------------------------------------------------------------

/src/rank/m1/glove/eval/octave/WordLookup_octave.m:

--------------------------------------------------------------------------------

1 | function index = WordLookup_octave(InputString)

2 | global wordMap

3 |

4 | if isfield(wordMap, InputString)

5 | index = wordMap.(InputString);

6 | elseif isfield(wordMap, '')

7 | index = wordMap.('');

8 | else

9 | index = 0;

10 | end

11 |

--------------------------------------------------------------------------------

/src/rank/m1/glove/eval/octave/evaluate_vectors_octave.m:

--------------------------------------------------------------------------------

1 | function [BB] = evaluate_vectors_octave(W)

2 |

3 | global wordMap

4 |

5 | filenames = {'capital-common-countries' 'capital-world' 'currency' 'city-in-state' 'family' 'gram1-adjective-to-adverb' ...

6 | 'gram2-opposite' 'gram3-comparative' 'gram4-superlative' 'gram5-present-participle' 'gram6-nationality-adjective' ...

7 | 'gram7-past-tense' 'gram8-plural' 'gram9-plural-verbs'};

8 | path = './eval/question-data/';

9 |

10 | split_size = 100; %to avoid memory overflow, could be increased/decreased depending on system and vocab size

11 |

12 | correct_sem = 0; %count correct semantic questions

13 | correct_syn = 0; %count correct syntactic questions

14 | correct_tot = 0; %count correct questions

15 | count_sem = 0; %count all semantic questions

16 | count_syn = 0; %count all syntactic questions

17 | count_tot = 0; %count all questions

18 | full_count = 0; %count all questions, including those with unknown words

19 |

20 |

21 | if isfield(wordMap, '')

22 | unkkey = wordMap.('');

23 | else

24 | unkkey = 0;

25 | end

26 |

27 | for j=1:length(filenames);

28 |

29 | clear dist;

30 |

31 | fid=fopen([path filenames{j} '.txt']);

32 | temp=textscan(fid,'%s%s%s%s');

33 | fclose(fid);

34 | ind1 = cellfun(@WordLookup_octave,temp{1}); %indices of first word in analogy

35 | ind2 = cellfun(@WordLookup_octave,temp{2}); %indices of second word in analogy

36 | ind3 = cellfun(@WordLookup_octave,temp{3}); %indices of third word in analogy

37 | ind4 = cellfun(@WordLookup_octave,temp{4}); %indices of answer word in analogy

38 | full_count = full_count + length(ind1);

39 | ind = (ind1 ~= unkkey) & (ind2 ~= unkkey) & (ind3 ~= unkkey) & (ind4 ~= unkkey); %only look at those questions which have no unknown words

40 | ind1 = ind1(ind);

41 | ind2 = ind2(ind);

42 | ind3 = ind3(ind);

43 | ind4 = ind4(ind);

44 | disp([filenames{j} ':']);

45 | mx = zeros(1,length(ind1));

46 | num_iter = ceil(length(ind1)/split_size);

47 | for jj=1:num_iter

48 | range = (jj-1)*split_size+1:min(jj*split_size,length(ind1));

49 | dist = full(W * (W(ind2(range),:)' - W(ind1(range),:)' + W(ind3(range),:)')); %cosine similarity if input W has been normalized

50 | for i=1:length(range)

51 | dist(ind1(range(i)),i) = -Inf;

52 | dist(ind2(range(i)),i) = -Inf;

53 | dist(ind3(range(i)),i) = -Inf;

54 | end

55 | [~, mx(range)] = max(dist); %predicted word index

56 | end

57 |

58 | val = (ind4 == mx'); %correct predictions

59 | count_tot = count_tot + length(ind1);

60 | correct_tot = correct_tot + sum(val);

61 | disp(['ACCURACY TOP1: ' num2str(mean(val)*100,'%-2.2f') '% (' num2str(sum(val)) '/' num2str(length(val)) ')']);

62 | if j < 6

63 | count_sem = count_sem + length(ind1);

64 | correct_sem = correct_sem + sum(val);

65 | else

66 | count_syn = count_syn + length(ind1);

67 | correct_syn = correct_syn + sum(val);

68 | end

69 |

70 | disp(['Total accuracy: ' num2str(100*correct_tot/count_tot,'%-2.2f') '% Semantic accuracy: ' num2str(100*correct_sem/count_sem,'%-2.2f') '% Syntactic accuracy: ' num2str(100*correct_syn/count_syn,'%-2.2f') '%']);

71 |

72 | end

73 | disp('________________________________________________________________________________');

74 | disp(['Questions seen/total: ' num2str(100*count_tot/full_count,'%-2.2f') '% (' num2str(count_tot) '/' num2str(full_count) ')']);

75 | disp(['Semantic Accuracy: ' num2str(100*correct_sem/count_sem,'%-2.2f') '% (' num2str(correct_sem) '/' num2str(count_sem) ')']);

76 | disp(['Syntactic Accuracy: ' num2str(100*correct_syn/count_syn,'%-2.2f') '% (' num2str(correct_syn) '/' num2str(count_syn) ')']);

77 | disp(['Total Accuracy: ' num2str(100*correct_tot/count_tot,'%-2.2f') '% (' num2str(correct_tot) '/' num2str(count_tot) ')']);

78 | BB = [100*correct_sem/count_sem 100*correct_syn/count_syn 100*correct_tot/count_tot];

79 |

80 | end

81 |

--------------------------------------------------------------------------------

/src/rank/m1/glove/eval/octave/read_and_evaluate_octave.m:

--------------------------------------------------------------------------------

1 | addpath('./eval/octave');

2 | if(~exist('vocab_file'))

3 | vocab_file = 'vocab.txt';

4 | end

5 | if(~exist('vectors_file'))

6 | vectors_file = 'vectors.bin';

7 | end

8 |

9 | fid = fopen(vocab_file, 'r');

10 | words = textscan(fid, '%s %f');

11 | fclose(fid);

12 | words = words{1};

13 | vocab_size = length(words);

14 | global wordMap

15 |

16 | wordMap = struct();

17 | for i=1:numel(words)

18 | wordMap.(words{i}) = i;

19 | end

20 |

21 | fid = fopen(vectors_file,'r');

22 | fseek(fid,0,'eof');

23 | vector_size = ftell(fid)/16/vocab_size - 1;

24 | frewind(fid);

25 | WW = fread(fid, [vector_size+1 2*vocab_size], 'double')';

26 | fclose(fid);

27 |

28 | W1 = WW(1:vocab_size, 1:vector_size); % word vectors

29 | W2 = WW(vocab_size+1:end, 1:vector_size); % context (tilde) word vectors

30 |

31 | W = W1 + W2; %Evaluate on sum of word vectors

32 | W = bsxfun(@rdivide,W,sqrt(sum(W.*W,2))); %normalize vectors before evaluation

33 | evaluate_vectors_octave(W);

34 | exit

35 |

36 |

--------------------------------------------------------------------------------

/src/rank/m1/glove/eval/python/distance.py:

--------------------------------------------------------------------------------

1 | import argparse

2 | import numpy as np

3 | import sys

4 |

5 | def generate():

6 | parser = argparse.ArgumentParser()

7 | parser.add_argument('--vocab_file', default='vocab.txt', type=str)

8 | parser.add_argument('--vectors_file', default='vectors.txt', type=str)

9 | args = parser.parse_args()

10 |

11 | with open(args.vocab_file, 'r') as f: