├── .github
│   ├── ISSUE_TEMPLATE
│   │   ├── bug-report.yml
│   │   ├── config.yml
│   │   ├── feature-request.yml
│   │   └── question.yml
│   ├── dependabot.yml
│   ├── translate-readme.yml
│   └── workflows
│       ├── ci.yaml
│       ├── cla.yml
│       └── docker.yaml
├── .gitignore
├── .pre-commit-config.yaml
├── CITATION.cff
├── CONTRIBUTING.md
├── LICENSE
├── MANIFEST.in
├── README.md
├── README.zh-CN.md
├── docker
│   ├── Dockerfile
│   ├── Dockerfile-arm64
│   └── Dockerfile-cpu
├── docs
│   ├── CNAME
│   ├── README.md
│   ├── SECURITY.md
│   ├── app.md
│   ├── assets
│   │   └── favicon.ico
│   ├── callbacks.md
│   ├── cfg.md
│   ├── cli.md
│   ├── engine.md
│   ├── hub.md
│   ├── index.md
│   ├── predict.md
│   ├── python.md
│   ├── quickstart.md
│   ├── reference
│   │   ├── base_pred.md
│   │   ├── base_trainer.md
│   │   ├── base_val.md
│   │   ├── exporter.md
│   │   ├── model.md
│   │   ├── nn.md
│   │   ├── ops.md
│   │   └── results.md
│   ├── stylesheets
│   │   └── style.css
│   └── tasks
│       ├── classification.md
│       ├── detection.md
│       ├── segmentation.md
│       └── tracking.md
├── examples
│   ├── README.md
│   ├── YOLOv8-CPP-Inference
│   │   ├── CMakeLists.txt
│   │   ├── README.md
│   │   ├── inference.cpp
│   │   ├── inference.h
│   │   └── main.cpp
│   ├── YOLOv8-OpenCV-ONNX-Python
│   │   ├── README.md
│   │   └── main.py
│   └── tutorial.ipynb
├── mkdocs.yml
├── requirements.txt
├── setup.cfg
├── setup.py
├── tests
│   ├── test_cli.py
│   ├── test_engine.py
│   └── test_python.py
└── ultralytics
    ├── __init__.py
    ├── assets
    │   ├── bus.jpg
    │   └── zidane.jpg
    ├── datasets
    │   ├── Argoverse.yaml
    │   ├── GlobalWheat2020.yaml
    │   ├── ImageNet.yaml
    │   ├── Objects365.yaml
    │   ├── SKU-110K.yaml
    │   ├── VOC.yaml
    │   ├── VisDrone.yaml
    │   ├── coco.yaml
    │   ├── coco128-seg.yaml
    │   ├── coco128.yaml
    │   ├── coco8-seg.yaml
    │   ├── coco8.yaml
    │   └── xView.yaml
    ├── hub
    │   ├── __init__.py
    │   ├── auth.py
    │   ├── session.py
    │   └── utils.py
    ├── models
    │   ├── README.md
    │   ├── v3
    │   │   ├── yolov3-sppu.yaml
    │   │   ├── yolov3-tinyu.yaml
    │   │   └── yolov3u.yaml
    │   ├── v5
    │   │   ├── yolov5lu.yaml
    │   │   ├── yolov5mu.yaml
    │   │   ├── yolov5nu.yaml
    │   │   ├── yolov5su.yaml
    │   │   └── yolov5xu.yaml
    │   └── v8
    │       ├── cls
    │       │   ├── yolov8l-cls.yaml
    │       │   ├── yolov8m-cls.yaml
    │       │   ├── yolov8n-cls.yaml
    │       │   ├── yolov8s-cls.yaml
    │       │   └── yolov8x-cls.yaml
    │       ├── seg
    │       │   ├── yolov8l-seg.yaml
    │       │   ├── yolov8m-seg.yaml
    │       │   ├── yolov8n-seg.yaml
    │       │   ├── yolov8s-seg.yaml
    │       │   └── yolov8x-seg.yaml
    │       ├── yolov8l.yaml
    │       ├── yolov8m.yaml
    │       ├── yolov8n.yaml
    │       ├── yolov8s.yaml
    │       ├── yolov8x.yaml
    │       └── yolov8x6.yaml
    ├── nn
    │   ├── __init__.py
    │   ├── autobackend.py
    │   ├── autoshape.py
    │   ├── modules.py
    │   └── tasks.py
    ├── tracker
    │   ├── README.md
    │   ├── __init__.py
    │   ├── cfg
    │   │   ├── botsort.yaml
    │   │   └── bytetrack.yaml
    │   ├── track.py
    │   ├── trackers
    │   │   ├── __init__.py
    │   │   ├── basetrack.py
    │   │   ├── bot_sort.py
    │   │   └── byte_tracker.py
    │   └── utils
    │       ├── __init__.py
    │       ├── gmc.py
    │       ├── kalman_filter.py
    │       └── matching.py
    └── yolo
        ├── __init__.py
        ├── cfg
        │   ├── __init__.py
        │   └── default.yaml
        ├── data
        │   ├── __init__.py
        │   ├── augment.py
        │   ├── base.py
        │   ├── build.py
        │   ├── dataloaders
        │   │   ├── __init__.py
        │   │   ├── stream_loaders.py
        │   │   ├── v5augmentations.py
        │   │   └── v5loader.py
        │   ├── dataset.py
        │   ├── dataset_wrappers.py
        │   ├── scripts
        │   │   ├── download_weights.sh
        │   │   ├── get_coco.sh
        │   │   ├── get_coco128.sh
        │   │   └── get_imagenet.sh
        │   └── utils.py
        ├── engine
        │   ├── __init__.py
        │   ├── exporter.py
        │   ├── model.py
        │   ├── predictor.py
        │   ├── results.py
        │   ├── trainer.py
        │   └── validator.py
        ├── utils
        │   ├── __init__.py
        │   ├── autobatch.py
        │   ├── benchmarks.py
        │   ├── callbacks
        │   │   ├── __init__.py
        │   │   ├── base.py
        │   │   ├── clearml.py
        │   │   ├── comet.py
        │   │   ├── hub.py
        │   │   └── tensorboard.py
        │   ├── checks.py
        │   ├── dist.py
        │   ├── downloads.py
        │   ├── files.py
        │   ├── instance.py
        │   ├── loss.py
        │   ├── metrics.py
        │   ├── ops.py
        │   ├── plotting.py
        │   ├── tal.py
        │   └── torch_utils.py
        └── v8
            ├── __init__.py
            ├── classify
            │   ├── __init__.py
            │   ├── predict.py
            │   ├── train.py
            │   └── val.py
            ├── detect
            │   ├── __init__.py
            │   ├── predict.py
            │   ├── train.py
            │   └── val.py
            └── segment
                ├── __init__.py
                ├── predict.py
                ├── train.py
                └── val.py

/.github/ISSUE_TEMPLATE/bug-report.yml:

--------------------------------------------------------------------------------

1 | name: 🐛 Bug Report
2 | # title: " "
3 | description: Problems with YOLOv8
4 | labels: [bug, triage]
5 | body:
6 |   - type: markdown
7 |     attributes:
8 |       value: |
9 |         Thank you for submitting a YOLOv8 🐛 Bug Report!
10 |
11 |   - type: checkboxes
12 |     attributes:
13 |       label: Search before asking
14 |       description: >
15 |         Please search the [issues](https://github.com/ultralytics/ultralytics/issues) to see if a similar bug report already exists.
16 |       options:
17 |         - label: >
18 |             I have searched the YOLOv8 [issues](https://github.com/ultralytics/ultralytics/issues) and found no similar bug report.
19 |           required: true
20 |
21 |   - type: dropdown
22 |     attributes:
23 |       label: YOLOv8 Component
24 |       description: |
25 |         Please select the part of YOLOv8 where you found the bug.
26 |       multiple: true
27 |       options:
28 |         - "Training"
29 |         - "Validation"
30 |         - "Detection"
31 |         - "Export"
32 |         - "PyTorch Hub"
33 |         - "Multi-GPU"
34 |         - "Evolution"
35 |         - "Integrations"
36 |         - "Other"
37 |     validations:
38 |       required: false
39 |
40 |   - type: textarea
41 |     attributes:
42 |       label: Bug
43 |       description: Provide console output with error messages and/or screenshots of the bug.
44 |       placeholder: |
45 |         💡 ProTip! Include as much information as possible (screenshots, logs, tracebacks etc.) to receive the most helpful response.
46 |     validations:
47 |       required: true
48 |
49 |   - type: textarea
50 |     attributes:
51 |       label: Environment
52 |       description: Please specify the software and hardware you used to produce the bug.
53 |       placeholder: |
54 |         - YOLO: Ultralytics YOLOv8.0.21 🚀 Python-3.8.10 torch-1.13.1+cu117 CUDA:0 (A100-SXM-80GB, 81251MiB)
55 |         - OS: Ubuntu 20.04
56 |         - Python: 3.8.10
57 |     validations:
58 |       required: false
59 |
60 |   - type: textarea
61 |     attributes:
62 |       label: Minimal Reproducible Example
63 |       description: >
64 |         When asking a question, people will be better able to provide help if you provide code that they can easily understand and use to **reproduce** the problem.
65 |         This is referred to by community members as creating a [minimal reproducible example](https://stackoverflow.com/help/minimal-reproducible-example).
66 |       placeholder: |
67 |         ```
68 |         # Code to reproduce your issue here
69 |         ```
70 |     validations:
71 |       required: false
72 |
73 |   - type: textarea
74 |     attributes:
75 |       label: Additional
76 |       description: Anything else you would like to share?
77 |
78 |   - type: checkboxes
79 |     attributes:
80 |       label: Are you willing to submit a PR?
81 |       description: >
82 |         (Optional) We encourage you to submit a [Pull Request](https://github.com/ultralytics/ultralytics/pulls) (PR) to help improve YOLOv8 for everyone, especially if you have a good understanding of how to implement a fix or feature.
83 |         See the YOLOv8 [Contributing Guide](https://github.com/ultralytics/ultralytics/blob/main/CONTRIBUTING.md) to get started.
84 |       options:
85 |         - label: Yes I'd like to help by submitting a PR!
86 |

--------------------------------------------------------------------------------

/.github/ISSUE_TEMPLATE/config.yml:

--------------------------------------------------------------------------------

1 | blank_issues_enabled: true
2 | contact_links:
3 |   - name: 📄Docs
4 |     url: https://docs.ultralytics.com/
5 |     about: Full Ultralytics YOLOv8 Documentation
6 |   - name: 💬 Forum
7 |     url: https://community.ultralytics.com/
8 |     about: Ask on Ultralytics Community Forum
9 |   - name: Stack Overflow
10 |     url: https://stackoverflow.com/search?q=YOLOv8
11 |     about: Ask on Stack Overflow with 'YOLOv8' tag
12 |

--------------------------------------------------------------------------------

/.github/ISSUE_TEMPLATE/feature-request.yml:

--------------------------------------------------------------------------------

1 | name: 🚀 Feature Request
2 | description: Suggest a YOLOv8 idea
3 | # title: " "
4 | labels: [enhancement]
5 | body:
6 |   - type: markdown
7 |     attributes:
8 |       value: |
9 |         Thank you for submitting a YOLOv8 🚀 Feature Request!
10 |
11 |   - type: checkboxes
12 |     attributes:
13 |       label: Search before asking
14 |       description: >
15 |         Please search the [issues](https://github.com/ultralytics/ultralytics/issues) to see if a similar feature request already exists.
16 |       options:
17 |         - label: >
18 |             I have searched the YOLOv8 [issues](https://github.com/ultralytics/ultralytics/issues) and found no similar feature requests.
19 |           required: true
20 |
21 |   - type: textarea
22 |     attributes:
23 |       label: Description
24 |       description: A short description of your feature.
25 |       placeholder: |
26 |         What new feature would you like to see in YOLOv8?
27 |     validations:
28 |       required: true
29 |
30 |   - type: textarea
31 |     attributes:
32 |       label: Use case
33 |       description: |
34 |         Describe the use case of your feature request. It will help us understand and prioritize the feature request.
35 |       placeholder: |
36 |         How would this feature be used, and who would use it?
37 |
38 |   - type: textarea
39 |     attributes:
40 |       label: Additional
41 |       description: Anything else you would like to share?
42 |
43 |   - type: checkboxes
44 |     attributes:
45 |       label: Are you willing to submit a PR?
46 |       description: >
47 |         (Optional) We encourage you to submit a [Pull Request](https://github.com/ultralytics/ultralytics/pulls) (PR) to help improve YOLOv8 for everyone, especially if you have a good understanding of how to implement a fix or feature.
48 |         See the YOLOv8 [Contributing Guide](https://github.com/ultralytics/ultralytics/blob/main/CONTRIBUTING.md) to get started.
49 |       options:
50 |         - label: Yes I'd like to help by submitting a PR!
51 |

--------------------------------------------------------------------------------

/.github/ISSUE_TEMPLATE/question.yml:

--------------------------------------------------------------------------------

1 | name: ❓ Question
2 | description: Ask a YOLOv8 question
3 | # title: " "
4 | labels: [question]
5 | body:
6 |   - type: markdown
7 |     attributes:
8 |       value: |
9 |         Thank you for asking a YOLOv8 ❓ Question!
10 |
11 |   - type: checkboxes
12 |     attributes:
13 |       label: Search before asking
14 |       description: >
15 |         Please search the [issues](https://github.com/ultralytics/ultralytics/issues) and [discussions](https://github.com/ultralytics/ultralytics/discussions) to see if a similar question already exists.
16 |       options:
17 |         - label: >
18 |             I have searched the YOLOv8 [issues](https://github.com/ultralytics/ultralytics/issues) and [discussions](https://github.com/ultralytics/ultralytics/discussions) and found no similar questions.
19 |           required: true
20 |
21 |   - type: textarea
22 |     attributes:
23 |       label: Question
24 |       description: What is your question?
25 |       placeholder: |
26 |         💡 ProTip! Include as much information as possible (screenshots, logs, tracebacks etc.) to receive the most helpful response.
27 |     validations:
28 |       required: true
29 |
30 |   - type: textarea
31 |     attributes:
32 |       label: Additional
33 |       description: Anything else you would like to share?
34 |

--------------------------------------------------------------------------------

/.github/dependabot.yml:

--------------------------------------------------------------------------------

1 | # To get started with Dependabot version updates, you'll need to specify which
2 | # package ecosystems to update and where the package manifests are located.
3 | # Please see the documentation for all configuration options:
4 | # https://docs.github.com/github/administering-a-repository/configuration-options-for-dependency-updates
5 |
6 | version: 2
7 | updates:
8 |   - package-ecosystem: pip
9 |     directory: "/"
10 |     schedule:
11 |       interval: weekly
12 |       time: "04:00"
13 |     open-pull-requests-limit: 10
14 |     reviewers:
15 |       - glenn-jocher
16 |     labels:
17 |       - dependencies
18 |
19 |   - package-ecosystem: github-actions
20 |     directory: "/"
21 |     schedule:
22 |       interval: weekly
23 |       time: "04:00"
24 |     open-pull-requests-limit: 5
25 |     reviewers:
26 |       - glenn-jocher
27 |     labels:
28 |       - dependencies
29 |

--------------------------------------------------------------------------------

/.github/translate-readme.yml:

--------------------------------------------------------------------------------

1 | # Ultralytics YOLO 🚀, GPL-3.0 license
2 | # README translation action to translate README.md to Chinese as README.zh-CN.md on any change to README.md
3 |
4 | name: Translate README
5 |
6 | on:
7 |   push:
8 |     branches:
9 |       - translate_readme # replace with 'main' to enable action
10 |     paths:
11 |       - README.md
12 |
13 | jobs:
14 |   Translate:
15 |     runs-on: ubuntu-latest
16 |     steps:
17 |       - uses: actions/checkout@v3
18 |       - name: Setup Node.js
19 |         uses: actions/setup-node@v3
20 |         with:
21 |           node-version: 16
22 |       # ISO Language Codes: https://cloud.google.com/translate/docs/languages
23 |       - name: Adding README - Chinese Simplified
24 |         uses: dephraiim/translate-readme@main
25 |         with:
26 |           LANG: zh-CN
27 |

--------------------------------------------------------------------------------

/.github/workflows/cla.yml:

--------------------------------------------------------------------------------

1 | # Ultralytics YOLO 🚀, GPL-3.0 license
2 |
3 | name: "CLA Assistant"
4 | on:
5 |   issue_comment:
6 |     types:
7 |       - created
8 |   pull_request_target:
9 |     types:
10 |       - reopened
11 |       - opened
12 |       - synchronize
13 |
14 | jobs:
15 |   CLA:
16 |     if: github.repository == 'ultralytics/ultralytics'
17 |     runs-on: ubuntu-latest
18 |     steps:
19 |       - name: "CLA Assistant"
20 |         if: (github.event.comment.body == 'recheck' || github.event.comment.body == 'I have read the CLA Document and I sign the CLA') || github.event_name == 'pull_request_target'
21 |         uses: contributor-assistant/github-action@v2.3.0
22 |         env:
23 |           GITHUB_TOKEN: ${{ secrets.GITHUB_TOKEN }}
24 |           # must be repository secret token
25 |           PERSONAL_ACCESS_TOKEN: ${{ secrets.PERSONAL_ACCESS_TOKEN }}
26 |         with:
27 |           path-to-signatures: 'signatures/version1/cla.json'
28 |           path-to-document: 'https://github.com/ultralytics/assets/blob/main/documents/CLA.md' # CLA document
29 |           # branch should not be protected
30 |           branch: 'main'
31 |           allowlist: dependabot[bot],github-actions,[pre-commit*,pre-commit*,bot*
32 |
33 |           remote-organization-name: ultralytics
34 |           remote-repository-name: cla
35 |           custom-pr-sign-comment: 'I have read the CLA Document and I sign the CLA'
36 |           custom-allsigned-prcomment: All Contributors have signed the CLA. ✅
37 |           #custom-notsigned-prcomment: 'pull request comment with Introductory message to ask new contributors to sign'
38 |

--------------------------------------------------------------------------------
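
Note on the `if:` conditions in cla.yml above: the job-level guard and the step-level expression can be restated as one small predicate. The following is a minimal Python sketch and is not part of the repository. The function name `should_run_cla_step` and its parameters are hypothetical, and the sketch only mirrors the boolean logic of the two expressions; it does not reproduce how GitHub Actions evaluates contexts.

```python
from typing import Optional

# Phrase copied from the workflow's `custom-pr-sign-comment` setting.
SIGN_PHRASE = "I have read the CLA Document and I sign the CLA"


def _same(value: Optional[str], expected: str) -> bool:
    # GitHub Actions expressions ignore case when comparing strings,
    # so the sketch compares lowercased values. A missing value never matches.
    return value is not None and value.lower() == expected.lower()


def should_run_cla_step(
    repository: str,
    event_name: str,
    comment_body: Optional[str] = None,
) -> bool:
    """Hypothetical helper mirroring the job-level and step-level `if:` checks."""
    # Job-level guard: only run in the upstream repository, not in forks.
    if not _same(repository, "ultralytics/ultralytics"):
        return False
    # Step-level guard: a recheck/sign comment, or any pull_request_target event.
    commented = _same(comment_body, "recheck") or _same(comment_body, SIGN_PHRASE)
    return commented or _same(event_name, "pull_request_target")
```

Read this way, an unrelated issue comment in the upstream repository starts the job but skips the CLA step, while any `pull_request_target` event runs it.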

/.github/workflows/docker.yaml:

--------------------------------------------------------------------------------

1 | # Ultralytics YOLO 🚀, GPL-3.0 license
2 | # Builds ultralytics/ultralytics:latest images on DockerHub https://hub.docker.com/r/ultralytics
3 |
4 | name: Publish Docker Images
5 |
6 | on:
7 |   push:
8 |     branches: [main]
9 |
10 | jobs:
11 |   docker:
12 |     if: github.repository == 'ultralytics/ultralytics'
13 |     name: Push Docker image to Docker Hub
14 |     runs-on: ubuntu-latest
15 |     steps:
16 |       - name: Checkout repo
17 |         uses: actions/checkout@v3
18 |
19 |       - name: Set up QEMU
20 |         uses: docker/setup-qemu-action@v2
21 |
22 |       - name: Set up Docker Buildx
23 |         uses: docker/setup-buildx-action@v2
24 |
25 |       - name: Login to Docker Hub
26 |         uses: docker/login-action@v2
27 |         with:
28 |           username: ${{ secrets.DOCKERHUB_USERNAME }}
29 |           password: ${{ secrets.DOCKERHUB_TOKEN }}
30 |
31 |       - name: Build and push arm64 image
32 |         uses: docker/build-push-action@v4
33 |         continue-on-error: true
34 |         with:
35 |           context: .
36 |           platforms: linux/arm64
37 |           file: docker/Dockerfile-arm64
38 |           push: true
39 |           tags: ultralytics/ultralytics:latest-arm64
40 |
41 |       - name: Build and push CPU image
42 |         uses: docker/build-push-action@v4
43 |         continue-on-error: true
44 |         with:
45 |           context: .
46 |           file: docker/Dockerfile-cpu
47 |           push: true
48 |           tags: ultralytics/ultralytics:latest-cpu
49 |
50 |       - name: Build and push GPU image
51 |         uses: docker/build-push-action@v4
52 |         continue-on-error: true
53 |         with:
54 |           context: .
55 |           file: docker/Dockerfile
56 |           push: true
57 |           tags: ultralytics/ultralytics:latest
58 |

--------------------------------------------------------------------------------

/.gitignore:

--------------------------------------------------------------------------------

1 | # Byte-compiled / optimized / DLL files

2 | __pycache__/

3 | *.py[cod]

4 | *$py.class

5 |

6 | # C extensions

7 | *.so

8 |

9 | # Distribution / packaging

10 | .Python

11 | build/

12 | develop-eggs/

13 | dist/

14 | downloads/

15 | eggs/

16 | .eggs/

17 | lib/

18 | lib64/

19 | parts/

20 | sdist/

21 | var/

22 | wheels/

23 | pip-wheel-metadata/

24 | share/python-wheels/

25 | *.egg-info/

26 | .installed.cfg

27 | *.egg

28 | MANIFEST

29 |

30 | # PyInstaller

31 | # Usually these files are written by a python script from a template

32 | # before PyInstaller builds the exe, so as to inject date/other infos into it.

33 | *.manifest

34 | *.spec

35 |

36 | # Installer logs

37 | pip-log.txt

38 | pip-delete-this-directory.txt

39 |

40 | # Unit test / coverage reports

41 | htmlcov/

42 | .tox/

43 | .nox/

44 | .coverage

45 | .coverage.*

46 | .cache

47 | nosetests.xml

48 | coverage.xml

49 | *.cover

50 | *.py,cover

51 | .hypothesis/

52 | .pytest_cache/

53 |

54 | # Translations

55 | *.mo

56 | *.pot

57 |

58 | # Django stuff:

59 | *.log

60 | local_settings.py

61 | db.sqlite3

62 | db.sqlite3-journal

63 |

64 | # Flask stuff:

65 | instance/

66 | .webassets-cache

67 |

68 | # Scrapy stuff:

69 | .scrapy

70 |

71 | # Sphinx documentation

72 | docs/_build/

73 |

74 | # PyBuilder

75 | target/

76 |

77 | # Jupyter Notebook

78 | .ipynb_checkpoints

79 |

80 | # IPython

81 | profile_default/

82 | ipython_config.py

83 |

84 | # Profiling

85 | *.pclprof

86 |

87 | # pyenv

88 | .python-version

89 |

90 | # pipenv

91 | # According to pypa/pipenv#598, it is recommended to include Pipfile.lock in version control.

92 | # However, in case of collaboration, if having platform-specific dependencies or dependencies

93 | # having no cross-platform support, pipenv may install dependencies that don't work, or not

94 | # install all needed dependencies.

95 | #Pipfile.lock

96 |

97 | # PEP 582; used by e.g. github.com/David-OConnor/pyflow

98 | __pypackages__/

99 |

100 | # Celery stuff

101 | celerybeat-schedule

102 | celerybeat.pid

103 |

104 | # SageMath parsed files

105 | *.sage.py

106 |

107 | # Environments

108 | .env

109 | .venv

110 | env/

111 | venv/

112 | ENV/

113 | env.bak/

114 | venv.bak/

115 |

116 | # Spyder project settings

117 | .spyderproject

118 | .spyproject

119 |

120 | # Rope project settings

121 | .ropeproject

122 |

123 | # mkdocs documentation

124 | /site

125 |

126 | # mypy

127 | .mypy_cache/

128 | .dmypy.json

129 | dmypy.json

130 |

131 | # Pyre type checker

132 | .pyre/

133 |

134 | # datasets and projects

135 | datasets/

136 | runs/

137 | wandb/

138 |

139 | .DS_Store

140 |

141 | # Neural Network weights -----------------------------------------------------------------------------------------------

142 | weights/

143 | *.weights

144 | *.pt

145 | *.pb

146 | *.onnx

147 | *.engine

148 | *.mlmodel

149 | *.torchscript

150 | *.tflite

151 | *.h5

152 | *_saved_model/

153 | *_web_model/

154 | *_openvino_model/

155 | *_paddle_model/

156 |

--------------------------------------------------------------------------------
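
Note on the weight-file patterns in .gitignore above: simple basename globs such as `*.py[cod]` or `*.pt` behave much like shell globs. The sketch below approximates them with Python's `fnmatch` module to show which file names would be excluded. It is an illustration under stated assumptions, not Git's matcher: `is_ignored` and `PATTERNS` are hypothetical names, only a handful of patterns are copied from the file, and directory patterns, negation, and path anchoring are deliberately not modeled.

```python
from fnmatch import fnmatchcase

# A few basename patterns copied from the .gitignore above (not the full list).
PATTERNS = ["*.py[cod]", "*.so", "*.pt", "*.onnx", "*.tflite"]


def is_ignored(filename: str) -> bool:
    """Return True if a bare file name matches one of the sample patterns.

    Only bare file names are supported; Git's real rules for slashes,
    trailing '/' and '!' negation are out of scope for this sketch.
    """
    return any(fnmatchcase(filename, pattern) for pattern in PATTERNS)
```

For example, `is_ignored("yolov8n.pt")` matches `*.pt`, whereas `is_ignored("train.py")` matches nothing, because `*.py[cod]` requires one more character after `.py`.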

/.pre-commit-config.yaml:

--------------------------------------------------------------------------------

1 | # Ultralytics YOLO 🚀, GPL-3.0 license
2 | # Pre-commit hooks. For more information see https://github.com/pre-commit/pre-commit-hooks/blob/main/README.md
3 |
4 | exclude: 'docs/'
5 | # Define bot property if installed via https://github.com/marketplace/pre-commit-ci
6 | ci:
7 |   autofix_prs: true
8 |   autoupdate_commit_msg: '[pre-commit.ci] pre-commit suggestions'
9 |   autoupdate_schedule: monthly
10 |   # submodules: true
11 |
12 | repos:
13 |   - repo: https://github.com/pre-commit/pre-commit-hooks
14 |     rev: v4.4.0
15 |     hooks:
16 |       - id: end-of-file-fixer
17 |       - id: trailing-whitespace
18 |       - id: check-case-conflict
19 |       - id: check-yaml
20 |       - id: check-docstring-first
21 |       - id: double-quote-string-fixer
22 |       - id: detect-private-key
23 |
24 |   - repo: https://github.com/asottile/pyupgrade
25 |     rev: v3.3.1
26 |     hooks:
27 |       - id: pyupgrade
28 |         name: Upgrade code
29 |         args: [--py37-plus]
30 |
31 |   - repo: https://github.com/PyCQA/isort
32 |     rev: 5.12.0
33 |     hooks:
34 |       - id: isort
35 |         name: Sort imports
36 |
37 |   - repo: https://github.com/google/yapf
38 |     rev: v0.32.0
39 |     hooks:
40 |       - id: yapf
41 |         name: YAPF formatting
42 |
43 |   - repo: https://github.com/executablebooks/mdformat
44 |     rev: 0.7.16
45 |     hooks:
46 |       - id: mdformat
47 |         name: MD formatting
48 |         additional_dependencies:
49 |           - mdformat-gfm
50 |           - mdformat-black
51 |         # exclude: "README.md|README.zh-CN.md|CONTRIBUTING.md"
52 |
53 |   - repo: https://github.com/PyCQA/flake8
54 |     rev: 6.0.0
55 |     hooks:
56 |       - id: flake8
57 |         name: PEP8
58 |
59 |   - repo: https://github.com/codespell-project/codespell
60 |     rev: v2.2.2
61 |     hooks:
62 |       - id: codespell
63 |         args:
64 |           - --ignore-words-list=crate,nd,strack,dota
65 |
66 |   #- repo: https://github.com/asottile/yesqa
67 |   #  rev: v1.4.0
68 |   #  hooks:
69 |   #    - id: yesqa
70 |

--------------------------------------------------------------------------------

/CITATION.cff:

--------------------------------------------------------------------------------

1 | cff-version: 1.2.0
2 | preferred-citation:
3 |   type: software
4 |   message: If you use this software, please cite it as below.
5 |   authors:
6 |     - family-names: Jocher
7 |       given-names: Glenn
8 |       orcid: "https://orcid.org/0000-0001-5950-6979"
9 |     - family-names: Chaurasia
10 |       given-names: Ayush
11 |       orcid: "https://orcid.org/0000-0002-7603-6750"
12 |     - family-names: Qiu
13 |       given-names: Jing
14 |       orcid: "https://orcid.org/0000-0003-3783-7069"
15 |   title: "YOLO by Ultralytics"
16 |   version: 8.0.0
17 |   # doi: 10.5281/zenodo.3908559 # TODO
18 |   date-released: 2023-01-10
19 |   license: GPL-3.0
20 |   url: "https://github.com/ultralytics/ultralytics"
21 |

--------------------------------------------------------------------------------

/MANIFEST.in:

--------------------------------------------------------------------------------

1 | include *.md

2 | include requirements.txt

3 | include LICENSE

4 | include setup.py

5 | recursive-include ultralytics *.yaml

6 | recursive-exclude __pycache__ *

7 |

--------------------------------------------------------------------------------

/docker/Dockerfile:

--------------------------------------------------------------------------------

1 | # Ultralytics YOLO 🚀, GPL-3.0 license

2 | # Builds ultralytics/ultralytics:latest image on DockerHub https://hub.docker.com/r/ultralytics/ultralytics

3 | # Image is CUDA-optimized for YOLOv8 single/multi-GPU training and inference

4 |

5 | # Start FROM NVIDIA PyTorch image https://ngc.nvidia.com/catalog/containers/nvidia:pytorch

6 | # FROM docker.io/pytorch/pytorch:latest

7 | FROM pytorch/pytorch:latest

8 |

9 | # Downloads to user config dir

10 | ADD https://ultralytics.com/assets/Arial.ttf https://ultralytics.com/assets/Arial.Unicode.ttf /root/.config/Ultralytics/

11 |

12 | # Install linux packages

13 | ENV DEBIAN_FRONTEND noninteractive

14 | RUN apt update

15 | RUN TZ=Etc/UTC apt install -y tzdata

16 | RUN apt install --no-install-recommends -y gcc git zip curl htop libgl1-mesa-glx libglib2.0-0 libpython3-dev gnupg g++

17 | # RUN alias python=python3

18 |

19 | # Security updates

20 | # https://security.snyk.io/vuln/SNYK-UBUNTU1804-OPENSSL-3314796

21 | RUN apt upgrade --no-install-recommends -y openssl tar

22 |

23 | # Create working directory

24 | RUN mkdir -p /usr/src/ultralytics

25 | WORKDIR /usr/src/ultralytics

26 |

27 | # Copy contents

28 | # COPY . /usr/src/app (issues as not a .git directory)

29 | RUN git clone https://github.com/ultralytics/ultralytics /usr/src/ultralytics

30 |

31 | # Install pip packages

32 | RUN python3 -m pip install --upgrade pip wheel

33 | RUN pip install --no-cache '.[export]' albumentations comet gsutil notebook

34 |

35 | # Set environment variables

36 | ENV OMP_NUM_THREADS=1

37 |

38 | # Cleanup

39 | ENV DEBIAN_FRONTEND teletype

40 |

41 |

42 | # Usage Examples -------------------------------------------------------------------------------------------------------

43 |

44 | # Build and Push

45 | # t=ultralytics/ultralytics:latest && sudo docker build -f docker/Dockerfile -t $t . && sudo docker push $t

46 |

47 | # Pull and Run

48 | # t=ultralytics/ultralytics:latest && sudo docker pull $t && sudo docker run -it --ipc=host --gpus all $t

49 |

50 | # Pull and Run with local directory access

51 | # t=ultralytics/ultralytics:latest && sudo docker pull $t && sudo docker run -it --ipc=host --gpus all -v "$(pwd)"/datasets:/usr/src/datasets $t

52 |

53 | # Kill all

54 | # sudo docker kill $(sudo docker ps -q)

55 |

56 | # Kill all image-based

57 | # sudo docker kill $(sudo docker ps -qa --filter ancestor=ultralytics/ultralytics:latest)

58 |

59 | # DockerHub tag update

60 | # t=ultralytics/ultralytics:latest tnew=ultralytics/ultralytics:v6.2 && sudo docker pull $t && sudo docker tag $t $tnew && sudo docker push $tnew

61 |

62 | # Clean up

63 | # sudo docker system prune -a --volumes

64 |

65 | # Update Ubuntu drivers

66 | # https://www.maketecheasier.com/install-nvidia-drivers-ubuntu/

67 |

68 | # DDP test

69 | # python -m torch.distributed.run --nproc_per_node 2 --master_port 1 train.py --epochs 3

70 |

71 | # GCP VM from Image

72 | # docker.io/ultralytics/ultralytics:latest

73 |

--------------------------------------------------------------------------------

/docker/Dockerfile-arm64:

--------------------------------------------------------------------------------

1 | # Ultralytics YOLO 🚀, GPL-3.0 license

2 | # Builds ultralytics/ultralytics:latest-arm64 image on DockerHub https://hub.docker.com/r/ultralytics/ultralytics

3 | # Image is aarch64-compatible for Apple M1 and other ARM architectures i.e. Jetson Nano and Raspberry Pi

4 |

5 | # Start FROM Ubuntu image https://hub.docker.com/_/ubuntu

6 | FROM arm64v8/ubuntu:rolling

7 |

8 | # Downloads to user config dir

9 | ADD https://ultralytics.com/assets/Arial.ttf https://ultralytics.com/assets/Arial.Unicode.ttf /root/.config/Ultralytics/

10 |

11 | # Install linux packages

12 | ENV DEBIAN_FRONTEND noninteractive

13 | RUN apt update

14 | RUN TZ=Etc/UTC apt install -y tzdata

15 | RUN apt install --no-install-recommends -y python3-pip git zip curl htop gcc libgl1-mesa-glx libglib2.0-0 libpython3-dev

16 | # RUN alias python=python3

17 |

18 | # Create working directory

19 | RUN mkdir -p /usr/src/ultralytics

20 | WORKDIR /usr/src/ultralytics

21 |

22 | # Copy contents

23 | # COPY . /usr/src/app (issues as not a .git directory)

24 | RUN git clone https://github.com/ultralytics/ultralytics /usr/src/ultralytics

25 |

26 | # Install pip packages

27 | RUN python3 -m pip install --upgrade pip wheel

28 | RUN pip install --no-cache . albumentations gsutil notebook

29 |

30 | # Cleanup

31 | ENV DEBIAN_FRONTEND teletype

32 |

33 |

34 | # Usage Examples -------------------------------------------------------------------------------------------------------

35 |

36 | # Build and Push

37 | # t=ultralytics/ultralytics:latest-arm64 && sudo docker build --platform linux/arm64 -f docker/Dockerfile-arm64 -t $t . && sudo docker push $t

38 |

39 | # Pull and Run

40 | # t=ultralytics/ultralytics:latest-arm64 && sudo docker pull $t && sudo docker run -it --ipc=host -v "$(pwd)"/datasets:/usr/src/datasets $t

41 |

--------------------------------------------------------------------------------

/docker/Dockerfile-cpu:

--------------------------------------------------------------------------------

1 | # Ultralytics YOLO 🚀, GPL-3.0 license

2 | # Builds ultralytics/ultralytics:latest-cpu image on DockerHub https://hub.docker.com/r/ultralytics/ultralytics

3 | # Image is CPU-optimized for ONNX, OpenVINO and PyTorch YOLOv8 deployments

4 |

5 | # Start FROM Ubuntu image https://hub.docker.com/_/ubuntu

6 | FROM ubuntu:rolling

7 |

8 | # Downloads to user config dir

9 | ADD https://ultralytics.com/assets/Arial.ttf https://ultralytics.com/assets/Arial.Unicode.ttf /root/.config/Ultralytics/

10 |

11 | # Install linux packages

12 | ENV DEBIAN_FRONTEND noninteractive

13 | RUN apt update

14 | RUN TZ=Etc/UTC apt install -y tzdata

15 | RUN apt install --no-install-recommends -y python3-pip git zip curl htop libgl1-mesa-glx libglib2.0-0 libpython3-dev gnupg g++

16 | # RUN alias python=python3

17 |

18 | # Create working directory

19 | RUN mkdir -p /usr/src/ultralytics

20 | WORKDIR /usr/src/ultralytics

21 |

22 | # Copy contents

23 | # COPY . /usr/src/app (issues as not a .git directory)

24 | RUN git clone https://github.com/ultralytics/ultralytics /usr/src/ultralytics

25 |

26 | # Install pip packages

27 | RUN python3 -m pip install --upgrade pip wheel

28 | RUN pip install --no-cache '.[export]' albumentations gsutil notebook \

29 | --extra-index-url https://download.pytorch.org/whl/cpu

30 |

31 | # Cleanup

32 | ENV DEBIAN_FRONTEND teletype

33 |

34 |

35 | # Usage Examples -------------------------------------------------------------------------------------------------------

36 |

37 | # Build and Push

38 | # t=ultralytics/ultralytics:latest-cpu && sudo docker build -f docker/Dockerfile-cpu -t $t . && sudo docker push $t

39 |

40 | # Pull and Run

41 | # t=ultralytics/ultralytics:latest-cpu && sudo docker pull $t && sudo docker run -it --ipc=host -v "$(pwd)"/datasets:/usr/src/datasets $t

42 |

--------------------------------------------------------------------------------

/docs/CNAME:

--------------------------------------------------------------------------------

1 | docs.ultralytics.com

--------------------------------------------------------------------------------

/docs/README.md:

--------------------------------------------------------------------------------

1 | # Ultralytics Docs

2 |

3 | Ultralytics Docs are deployed to [https://docs.ultralytics.com](https://docs.ultralytics.com).

4 |

5 | ### Install Ultralytics package

6 |

7 | To install the ultralytics package in developer mode, you will need to have Git and Python 3 installed on your system.

8 | Then, follow these steps:

9 |

10 | 1. Clone the ultralytics repository to your local machine using Git:

11 |

12 | ```bash

13 | git clone https://github.com/ultralytics/ultralytics.git

14 | ```

15 |

16 | 2. Navigate to the root directory of the repository:

17 |

18 | ```bash

19 | cd ultralytics

20 | ```

21 |

22 | 3. Install the package in developer mode using pip:

23 |

24 | ```bash

25 | pip install -e '.[dev]'

26 | ```

27 |

28 | This will install the ultralytics package and its dependencies in developer mode, allowing you to make changes to the

29 | package code and have them reflected immediately in your Python environment.

30 |

31 | Note that you may need to use the pip3 command instead of pip if you have multiple versions of Python installed on your

32 | system.
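
One way to confirm that the developer-mode install points at your clone is to ask Python where it would import the package from. The snippet below is a minimal sketch, not part of the Ultralytics package; `locate_package` is a hypothetical helper name, and it relies only on the standard-library `importlib.util.find_spec`.

```python
import importlib.util
from typing import Optional


def locate_package(name: str) -> Optional[str]:
    """Return the file a top-level package would be imported from, or None.

    `find_spec` looks the package up without importing it. For an editable
    install, the returned path is expected to sit inside the cloned
    repository rather than under site-packages.
    """
    spec = importlib.util.find_spec(name)
    if spec is None:
        return None  # package is not installed in this environment
    return spec.origin  # may be None for namespace packages
```

Calling `locate_package("ultralytics")` after step 3 should return a path inside your cloned `ultralytics` directory; `None` means the package is not visible to the interpreter you are running.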

33 |

34 | ### Building and Serving Locally

35 |

36 | The `mkdocs serve` command is used to build and serve a local version of the MkDocs documentation site. It is typically

37 | used during the development and testing phase of a documentation project.

38 |

39 | ```bash

40 | mkdocs serve

41 | ```

42 |

43 | Here is a breakdown of what this command does:

44 |

45 | - `mkdocs`: This is the command-line interface (CLI) for the MkDocs static site generator. It is used to build and serve

46 | MkDocs sites.

47 | - `serve`: This is a subcommand of the `mkdocs` CLI that tells it to build and serve the documentation site locally.

48 | - `-a`: This flag specifies the hostname and port number to bind the server to. The default value is `localhost:8000`.

49 | - `-t`: This flag specifies the theme to use for the documentation site. The default value is `mkdocs`.

50 | - `-s`: This flag enables strict mode, which makes the `serve` command abort the build when a warning is

51 | raised.

52 | When you run the `mkdocs serve` command, it will build the documentation site using the files in the `docs/` directory

53 | and serve it at the specified hostname and port number. You can then view the site by going to the URL in your web

54 | browser.

55 |

56 | While the site is being served, you can make changes to the documentation files and see them reflected in the live site

57 | immediately. This is useful for testing and debugging your documentation before deploying it to a live server.

58 |

59 | To stop the serve command and terminate the local server, you can use the `CTRL+C` keyboard shortcut.

60 |

61 | ### Deploying Your Documentation Site

62 |

63 | To deploy your MkDocs documentation site, you will need to choose a hosting provider and a deployment method. Some

64 | popular options include GitHub Pages, GitLab Pages, and Amazon S3.

65 |

66 | Before you can deploy your site, you will need to configure your `mkdocs.yml` file to specify the remote host and any

67 | other necessary deployment settings.

68 |

69 | Once you have configured your `mkdocs.yml` file, you can use the `mkdocs build` command to build your site.

70 | This command builds the documentation site from the files in the `docs/` directory using the specified configuration

71 | file and theme. You can then upload the generated static files to your hosting provider.

72 |

73 | For example, to deploy your site to GitHub Pages using the built-in `gh-deploy` command, you can run:

74 |

75 | ```bash

76 | mkdocs gh-deploy

77 | ```

78 |

79 | If you are using GitHub Pages, you can set a custom domain for your documentation site by going to the "Settings" page

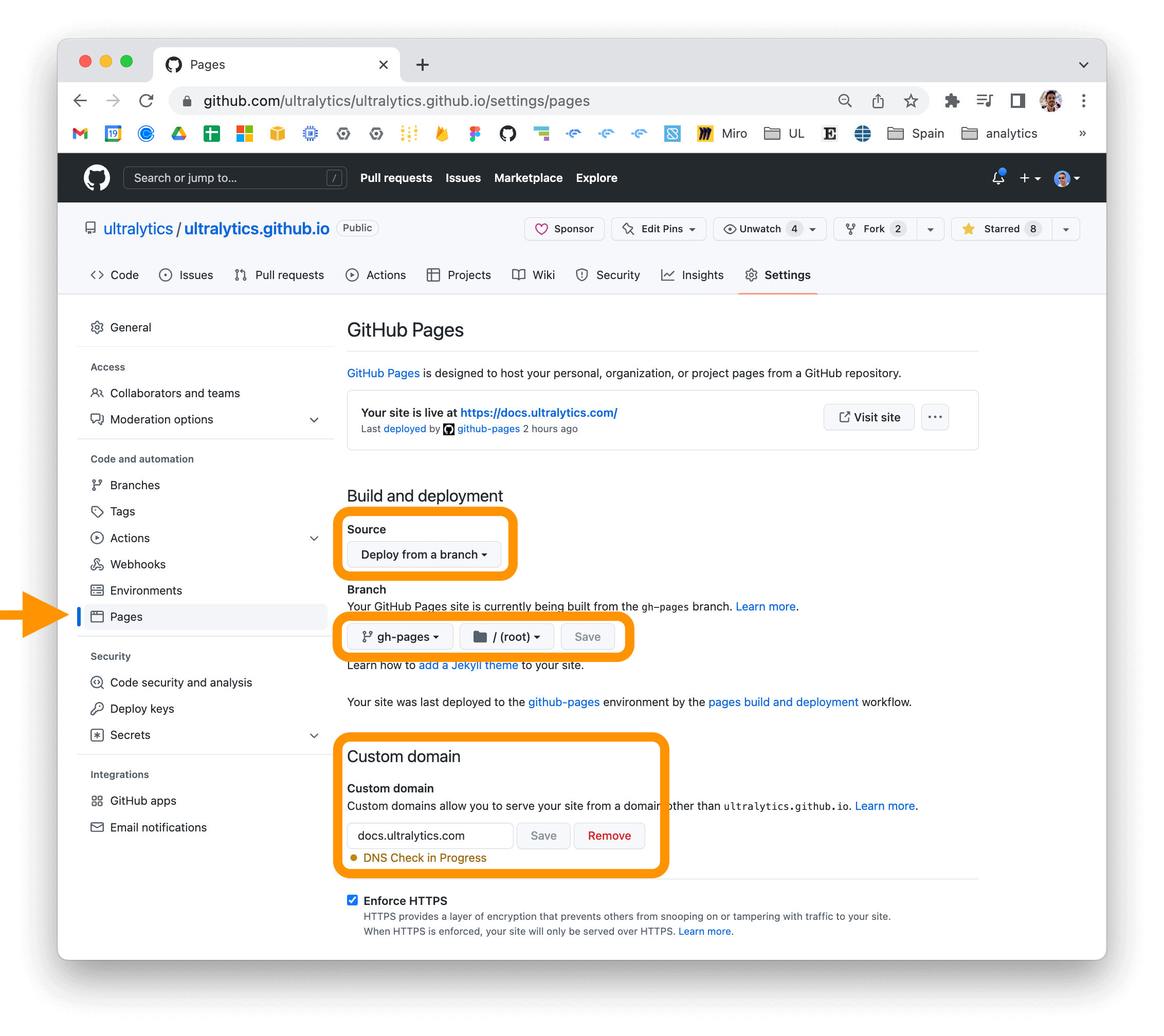

80 | for your repository and updating the "Custom domain" field in the "GitHub Pages" section.

81 |

82 |

83 |

84 | For more information on deploying your MkDocs documentation site, see

85 | the [MkDocs documentation](https://www.mkdocs.org/user-guide/deploying-your-docs/).

86 |

--------------------------------------------------------------------------------

/docs/SECURITY.md:

--------------------------------------------------------------------------------

1 | At [Ultralytics](https://ultralytics.com), the security of our users' data and systems is of utmost importance. To

2 | ensure the safety and security of our [open-source projects](https://github.com/ultralytics), we have implemented

3 | several measures to detect and prevent security vulnerabilities.

4 |

5 |

6 |

7 | ## Snyk Scanning

8 |

9 | We use [Snyk](https://snyk.io/advisor/python/ultralytics) to regularly scan the YOLOv8 repository for vulnerabilities

10 | and security issues. Our goal is to identify and remediate any potential threats as soon as possible, to minimize any

11 | risks to our users.

12 |

13 | ## GitHub CodeQL Scanning

14 |

15 | In addition to our Snyk scans, we also use

16 | GitHub's [CodeQL](https://docs.github.com/en/code-security/code-scanning/automatically-scanning-your-code-for-vulnerabilities-and-errors/about-code-scanning-with-codeql)

17 | scans to proactively identify and address security vulnerabilities.

18 |

19 | ## Reporting Security Issues

20 |

21 | If you suspect or discover a security vulnerability in the YOLOv8 repository, please let us know immediately. You can

22 | reach out to us directly via our [contact form](https://ultralytics.com/contact) or

23 | via [security@ultralytics.com](mailto:security@ultralytics.com). Our security team will investigate and respond as soon

24 | as possible.

25 |

26 | We appreciate your help in keeping the YOLOv8 repository secure and safe for everyone.

27 |

--------------------------------------------------------------------------------

/docs/app.md:

--------------------------------------------------------------------------------

1 | # Ultralytics HUB App for YOLOv8

2 |

3 |

4 |

5 |

6 |

7 |

35 |

36 |

37 | Welcome to the Ultralytics HUB app for demonstrating YOLOv5 and YOLOv8 models! In this app, available on the [Apple App

38 | Store](https://apps.apple.com/xk/app/ultralytics/id1583935240) and the

39 | [Google Play Store](https://play.google.com/store/apps/details?id=com.ultralytics.ultralytics_app), you will be able

40 | to see the power and capabilities of YOLOv5, a state-of-the-art object detection model developed by Ultralytics.

41 |

42 | **To install simply scan the QR code above**. The App currently features YOLOv5 models, with YOLOv8 models coming soon.

43 |

44 | With YOLOv5, you can detect and classify objects in images and videos with high accuracy and speed. The model has been

45 | trained on a large dataset and is able to detect a wide range of objects, including cars, pedestrians, and traffic

46 | signs.

47 |

48 | In this app, you will be able to try out YOLOv5 on your own images and videos, and see the model in action. You can also

49 | learn more about how YOLOv5 works and how it can be used in real-world applications.

50 |

51 | We hope you enjoy using YOLOv5 and seeing its capabilities firsthand. Thank you for choosing Ultralytics for your object

52 | detection needs!

--------------------------------------------------------------------------------

/docs/assets/favicon.ico:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/wudashuo/yolov8/3861e6c82aaa1bbb214f020ece3a4bd4712eacbe/docs/assets/favicon.ico

--------------------------------------------------------------------------------

/docs/callbacks.md:

--------------------------------------------------------------------------------

1 | ## Callbacks

2 |

3 | The Ultralytics framework supports callbacks as entry points at strategic stages of the train, val, export, and predict modes.

4 | Each callback accepts a `Trainer`, `Validator`, or `Predictor` object depending on the operation type. All properties of

5 | these objects can be found in the Reference section of the docs.
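
To picture the mechanism described above, here is a minimal sketch of a callback registry. `MiniEngine`, `run_callbacks`, `announce_start`, and `announce_end` are hypothetical names used only for illustration; they are assumptions and not the actual Ultralytics classes or internals.

```python
from collections import defaultdict


class MiniEngine:
    """Hypothetical stand-in for a Trainer/Validator/Predictor; not the Ultralytics class."""

    def __init__(self):
        # Map each event name to the list of functions registered for it.
        self.callbacks = defaultdict(list)
        self.log = []

    def add_callback(self, event, func):
        """Append a function to the list of callbacks for an event."""
        self.callbacks[event].append(func)

    def run_callbacks(self, event):
        """Call every function registered for the event, passing the engine itself."""
        for func in self.callbacks[event]:
            func(self)

    def predict(self):
        # Entry points fire before and after the main work.
        self.run_callbacks("on_predict_start")
        self.log.append("predicting")
        self.run_callbacks("on_predict_end")


def announce_start(engine):
    engine.log.append("start hook")


def announce_end(engine):
    engine.log.append("end hook")


engine = MiniEngine()
engine.add_callback("on_predict_start", announce_start)
engine.add_callback("on_predict_end", announce_end)
engine.predict()
print(engine.log)
```

Each callback receives the engine object itself, which is why a callback can read or modify its attributes.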

6 |

7 | ## Examples

8 |

9 | ### Returning additional information with Prediction

10 |

11 | In this example, we want to return the original frame with each result object. Here's how we can do that:

12 |

13 | ```python

14 | def on_predict_batch_end(predictor):

15 |     # pair each result with its original frame; results -> List[batch_size]

16 |     _, _, im0s, _, _ = predictor.batch

17 |     im0s = im0s if isinstance(im0s, list) else [im0s]

18 |     predictor.results = zip(predictor.results, im0s)

19 |

20 | model = YOLO("yolov8n.pt")  # assumes `from ultralytics import YOLO`

21 | model.add_callback("on_predict_batch_end", on_predict_batch_end)

22 | for (result, frame) in model.predict():  # or model.track()

23 |     pass

24 | ```
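
The pairing step in the callback above can be tried without loading a model. The sketch below uses a hypothetical `mock_predictor` built from `SimpleNamespace`, with placeholder strings standing in for real results and frames; the five-element batch layout is copied from the example and is otherwise an assumption.

```python
from types import SimpleNamespace


def on_predict_batch_end(predictor):
    # The third element of the batch is assumed to hold the original frame(s).
    _, _, im0s, _, _ = predictor.batch
    im0s = im0s if isinstance(im0s, list) else [im0s]
    predictor.results = zip(predictor.results, im0s)


# Hypothetical predictor: placeholder strings stand in for results and frames.
mock_predictor = SimpleNamespace(
    batch=("path", "im", ["frame0", "frame1"], None, ""),
    results=["result0", "result1"],
)
on_predict_batch_end(mock_predictor)
pairs = list(mock_predictor.results)
print(pairs)
```

Because `zip` returns a lazy iterator, the paired results can only be consumed once.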

25 |

26 | ## All callbacks

27 |

28 | Here are all supported callbacks.

29 |

30 | ### Trainer

31 |

32 | `on_pretrain_routine_start`

33 |

34 | `on_pretrain_routine_end`

35 |

36 | `on_train_start`

37 |

38 | `on_train_epoch_start`

39 |

40 | `on_train_batch_start`

41 |

42 | `optimizer_step`

43 |

44 | `on_before_zero_grad`

45 |

46 | `on_train_batch_end`

47 |

48 | `on_train_epoch_end`

49 |

50 | `on_fit_epoch_end`

51 |

52 | `on_model_save`

53 |

54 | `on_train_end`

55 |

56 | `on_params_update`

57 |

58 | `teardown`

59 |

60 | ### Validator

61 |

62 | `on_val_start`

63 |

64 | `on_val_batch_start`

65 |

66 | `on_val_batch_end`

67 |

68 | `on_val_end`

69 |

70 | ### Predictor

71 |

72 | `on_predict_start`

73 |

74 | `on_predict_batch_start`

75 |

76 | `on_predict_postprocess_end`

77 |

78 | `on_predict_batch_end`

79 |

80 | `on_predict_end`

81 |

82 | ### Exporter

83 |

84 | `on_export_start`

85 |

86 | `on_export_end`

87 |

--------------------------------------------------------------------------------

/docs/engine.md:

--------------------------------------------------------------------------------

1 | Both the Ultralytics YOLO command-line and Python interfaces are simply high-level abstractions over the base engine

2 | executors. Let's take a look at the Trainer engine.

3 |

4 | ## BaseTrainer

5 |

6 | BaseTrainer contains the generic boilerplate training routine. It can be customized for any task by overriding

7 | the required functions or operations, as long as the correct formats are followed. For example, you can support your own

8 | custom model and dataloader by just overriding these functions:

9 |

10 | * `get_model(cfg, weights)` - The function that builds the model to be trained

11 | * `get_dataloader()` - The function that builds the dataloader

12 | More details and source code can be found in the [`BaseTrainer` Reference](reference/base_trainer.md).
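
The override pattern described above can be sketched in plain Python. `MiniTrainer` and `SumTrainer` are hypothetical classes that only mirror the idea of a generic routine calling overridable hooks; they are assumptions for illustration and not the real `BaseTrainer`.

```python
class MiniTrainer:
    """Hypothetical base class; only mirrors the override pattern, not BaseTrainer itself."""

    def get_model(self, cfg=None, weights=None):
        raise NotImplementedError("subclasses build the model here")

    def get_dataloader(self):
        raise NotImplementedError("subclasses build the dataloader here")

    def train(self):
        # The generic routine only relies on the two hooks above.
        model = self.get_model()
        total = 0.0
        for batch in self.get_dataloader():
            total += model(batch)
        return total


class SumTrainer(MiniTrainer):
    def get_model(self, cfg=None, weights=None):
        # A toy "model": any callable works for this sketch.
        return sum

    def get_dataloader(self):
        # A toy "dataloader": any iterable of batches works.
        return [[1, 2], [3, 4]]


total = SumTrainer().train()
print(total)
```

The base routine never changes; only the two hooks differ between tasks.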

13 |

14 | ## DetectionTrainer

15 |

16 | Here's how you can use the YOLOv8 `DetectionTrainer` and customize it.

17 |

18 | ```python

19 | from ultralytics.yolo.v8.detect import DetectionTrainer

20 |

21 | trainer = DetectionTrainer(overrides={...})

22 | trainer.train()

23 | trained_model = trainer.best # get best model

24 | ```

25 |

26 | ### Customizing the DetectionTrainer

27 |

28 | Let's customize the trainer **to train a custom detection model** that is not supported directly. You can do this by

29 | simply overriding the existing `get_model` functionality:

30 |

31 | ```python

32 | from ultralytics.yolo.v8.detect import DetectionTrainer

33 |

34 |

35 | class CustomTrainer(DetectionTrainer):

36 |     def get_model(self, cfg, weights):

37 |         ...

38 |

39 |

40 | trainer = CustomTrainer(overrides={...})

41 | trainer.train()

42 | ```

43 |

44 | You now realize that you need to customize the trainer further to:

45 |

46 | * Customize the `loss function`.

47 | * Add a `callback` that uploads the model to your Google Drive after every 10 `epochs`.

48 | Here's how you can do it:

49 |

50 | ```python

51 | from ultralytics.yolo.v8.detect import DetectionTrainer

52 |

53 |

54 | class CustomTrainer(DetectionTrainer):

55 |     def get_model(self, cfg, weights):

56 |         ...

57 |

58 |     def criterion(self, preds, batch):

59 |         # get ground truth

60 |         imgs = batch["imgs"]

61 |         bboxes = batch["bboxes"]

62 |         ...

63 |         return loss, loss_items  # see Reference-> Trainer for details on the expected format

64 |

65 |

66 | # callback to upload model weights

67 | def log_model(trainer):

68 |     last_weight_path = trainer.last

69 |     ...

70 |

71 |

72 | trainer = CustomTrainer(overrides={...})

73 | trainer.add_callback("on_train_epoch_end", log_model) # Adds to existing callback

74 | trainer.train()

75 | ```
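
The "every 10 epochs" condition is left elided in the callback above. One possible way to express it is sketched below with a stand-in trainer object. The `upload` helper, the `mock_trainer` object, and the assumption that `trainer.epoch` is a zero-based epoch counter are all hypothetical and should be checked against the Trainer reference.

```python
from types import SimpleNamespace

uploaded = []


def upload(path):
    # Hypothetical placeholder for a real upload (e.g. to Google Drive).
    uploaded.append(path)


def log_model(trainer):
    # Assumes `trainer.epoch` is a zero-based epoch counter.
    if (trainer.epoch + 1) % 10 == 0:
        upload(f"{trainer.last}@epoch{trainer.epoch + 1}")


# Simulate 25 epochs with a stand-in trainer object.
mock_trainer = SimpleNamespace(epoch=0, last="weights/last.pt")
for epoch in range(25):
    mock_trainer.epoch = epoch
    log_model(mock_trainer)

print(uploaded)
```

Registered on `on_train_epoch_end`, a callback like this would run every epoch but only act on every tenth one.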

76 |

77 | To learn more about callback triggering events and entry points, check out our [Callbacks guide](callbacks.md).

78 |

79 | ## Other engine components

80 |

81 | Other components, such as `Validators` and `Predictors`, can be customized in a similar way.

82 | See the Reference section for more information on these.

83 |

84 |

--------------------------------------------------------------------------------

/docs/index.md:

--------------------------------------------------------------------------------
