├── .nojekyll

├── 循环神经网络

└── README.md

├── 行业应用

├── 聊天对话

│ └── README.md

└── 机器人问答

│ └── README.md

├── INTRODUCTION.md

├── LLM

└── README.link

├── 经典自然语言

├── 词嵌入

│ ├── 99~参考资料

│ │ └── 2023~Embeddings: What they are and why they matter.md

│ ├── 概述.md

│ └── 词向量

│ │ └── 基于 Gensim 的 Word2Vec 实践.md

├── 语法语义分析

│ └── 命名实体识别.md

├── 统计语言模型

│ ├── 词表示.md

│ ├── 统计语言模型.md

│ ├── 基础文本处理.md

│ └── Word2Vec.md

└── 主题模型

│ └── LDA.md

├── 99~参考资料

├── 2023~吴恩达~《Building Systems with the ChatGPT API》

│ ├── 11.conclusion.md

│ ├── 1.Introduction.md

│ ├── readme.md

│ ├── 7.Check Outputs.ipynb

│ ├── products.json

│ ├── 3.Classification.ipynb

│ └── 5.Chain of Thought Reasoning.ipynb

├── 2023~吴恩达~《LangChain for LLM Application Development》

│ ├── readme.md

│ ├── 8.课程总结.md

│ ├── 1.开篇介绍.md

│ └── Data.csv

├── 2023~吴恩达~《ChatGPT Prompt Engineering for Developers》

│ ├── 09. 总结.md

│ ├── 00.README.md

│ ├── 01. 简介.md

│ └── 07. 文本扩展 Expanding.ipynb

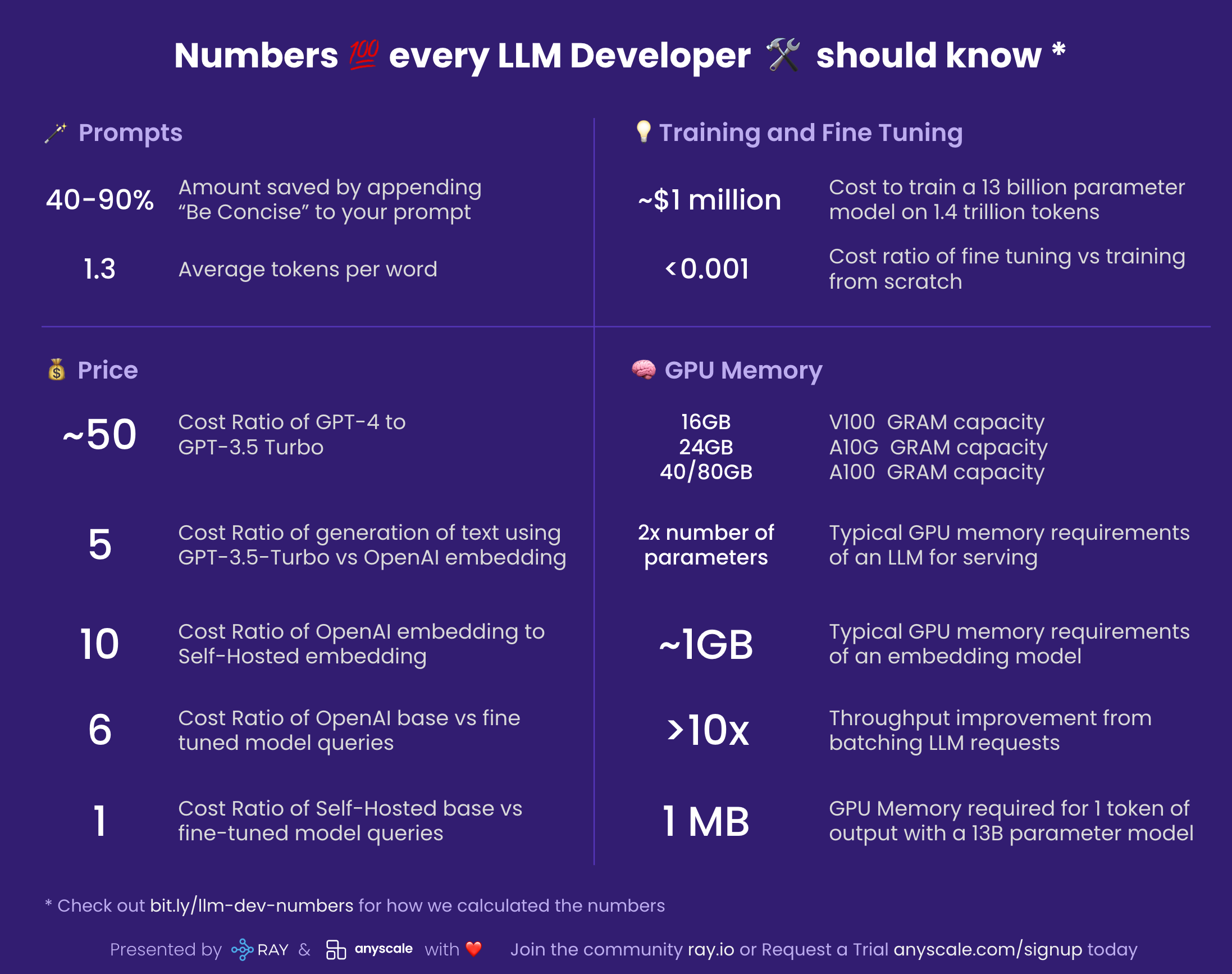

└── 2023~Numbers every LLM Developer should know.md

├── 00~导论

└── README.md

├── .gitignore

├── README.md

├── _sidebar.md

├── index.html

├── header.svg

└── LICENSE

/.nojekyll:

--------------------------------------------------------------------------------

1 |

--------------------------------------------------------------------------------

/循环神经网络/README.md:

--------------------------------------------------------------------------------

1 |

--------------------------------------------------------------------------------

/行业应用/聊天对话/README.md:

--------------------------------------------------------------------------------

1 |

--------------------------------------------------------------------------------

/INTRODUCTION.md:

--------------------------------------------------------------------------------

1 | # 本篇导读

2 |

--------------------------------------------------------------------------------

/LLM/README.link:

--------------------------------------------------------------------------------

1 | https://github.com/wx-chevalier/LLM-Notes

--------------------------------------------------------------------------------

/经典自然语言/词嵌入/99~参考资料/2023~Embeddings: What they are and why they matter.md:

--------------------------------------------------------------------------------

1 | > [原文地址](https://simonwillison.net/2023/Oct/23/embeddings/)

2 |

3 | # Embeddings: What they are and why they matter

4 |

--------------------------------------------------------------------------------

/99~参考资料/2023~吴恩达~《Building Systems with the ChatGPT API》/11.conclusion.md:

--------------------------------------------------------------------------------

1 | ## 吴恩达 用ChatGPT API构建系统 总结篇

2 |

3 | ## Building Systems with the ChatGPT API

4 |

5 | 本次简短课程涵盖了一系列 ChatGPT 的应用实践,包括处理处理输入、审查输出以及评估等,实现了一个搭建系统的完整流程。

6 |

7 | ### 📚 课程回顾

8 |

9 | 本课程详细介绍了LLM工作原理,包括分词器(tokenizer)等微妙之处、评估用户输入的质量和安全性的方法、使用思维链作为提示词、通过链提示分割任务以及返回用户前检查输出等。

10 |

11 | 本课程还介绍了评估系统长期性能以监控和改进表现的方法。

12 |

13 | 此外,课程也涉及到构建负责任的系统以保证模型提供合理相关的反馈。

14 |

15 | ### 💪🏻 出发~去探索新世界吧~

16 |

17 | 实践是掌握真知的必经之路。开始构建令人激动的应用吧~

18 |

--------------------------------------------------------------------------------

/99~参考资料/2023~吴恩达~《LangChain for LLM Application Development》/readme.md:

--------------------------------------------------------------------------------

1 | # 使用 LangChain 开发基于 LLM 的应用程序

2 |

3 | 吴恩达老师发布的大模型开发新课程,指导开发者如何结合框架LangChain 使用 ChatGPT API 来搭建基于 LLM 的应用程序,帮助开发者学习使用 LangChain 的一些技巧,包括:模型、提示和解析器,应用程序所需要用到的存储,搭建模型链,基于文档的问答系统,评估与代理等。

4 |

5 | ### 目录

6 | 1. 简介 Introduction @Sarai

7 | 2. 模型,提示和解析器 Models, Prompts and Output Parsers @Joye

8 | 3. 存储 Memory @徐虎

9 | 4. 模型链 Chains @徐虎

10 | 5. 基于文档的问答 Question and Answer @苟晓攀

11 | 6. 评估 Evaluation @苟晓攀

12 | 7. 代理 Agent @Joye

13 | 8. 总结 Conclusion @Sarai

14 |

--------------------------------------------------------------------------------

/99~参考资料/2023~吴恩达~《Building Systems with the ChatGPT API》/1.Introduction.md:

--------------------------------------------------------------------------------

1 | # 使用 ChatGPT API 搭建系统

2 |

3 | ## 简介

4 |

5 | 欢迎来到课程《使用 ChatGPT API 搭建系统》👏🏻👏🏻

6 |

7 | 本课程由吴恩达老师联合 OpenAI 开发,旨在指导开发者如何基于 ChatGPT 搭建完整的智能问答系统。

8 |

9 | ### 📚 课程基本内容

10 |

11 | 使用ChatGPT不仅仅是一个单一的提示或单一的模型调用,本课程将分享使用LLM构建复杂应用的最佳实践。

12 |

13 | 以构建客服助手为例,使用不同的指令链式调用语言模型,具体取决于上一个调用的输出,有时甚至需要从外部来源查找信息。

14 |

15 | 本课程将围绕该主题,逐步了解应用程序内部的构建步骤,以及长期视角下系统评估和持续改进的最佳实践。

16 |

17 |

18 | ### 🌹致谢课程重要贡献者

19 |

20 | 感谢来自OpenAI团队的Andrew Kondrick、Joe Palermo、Boris Power和Ted Sanders,

21 | 以及来自DeepLearning.ai团队的Geoff Ladwig、Eddie Shyu和Tommy Nelson。

--------------------------------------------------------------------------------

/00~导论/README.md:

--------------------------------------------------------------------------------

1 | # NLP 通用技术

2 |

3 | ## 文本生成

4 |

5 | 文本生成是使用计算机模拟人来生成文本的技术,可以分为 text-to-text,image-to-text,以及 data-to-text 等。文本生成的应用领域包括机器翻译、QA、文本摘要、文字改写、新闻报道(体育、气象、财经、医疗等)、报告的自动生成等。

6 |

7 | 随着深度学习等技术在文本生成领域的应用,近年来文本生成技术发展比较快,特别是源于机器翻译的 seq2seq 结构,广泛应用到了文本生成的各个领域。但是应用中还是存在很多诸如创新度不够、不流畅、语句之间相关性不强等问题。文本生成的难度在于,由于人类的语言表达是多种多样的,因此文本生成的结果的质量没有确定的标准,难以评估模型效果,同时对于结果质量和多样性的的平衡也很难把握。

8 |

9 | ## 情感分析

10 |

11 | 文本情感分析(Sentiment Analysis),又称意见挖掘(Opinion Mining),是自然语言处理领域的一个重要研究方向,在工业界和学术界都有广泛的研究和应用,在每年的国际顶会中(例如:ACL、EMNLP、IJCAI、AAAI、WWW 等)都有大量的论文。

12 |

13 | 简单而言,它是对带有情感色彩的主观性文本进行分析、处理、归纳和推理的过程。相对于客观文本,主观文本包含了用户个人的想法或态度,是用户群体对某产品或事件,从不同角度、不同需求和自身体验去分析评价的结果,这些评价具有主观能动性和多样性,具有情感分析的意义和价值。

14 |

--------------------------------------------------------------------------------

/99~参考资料/2023~吴恩达~《LangChain for LLM Application Development》/8.课程总结.md:

--------------------------------------------------------------------------------

1 | ## 吴恩达 LangChain大模型应用开发 总结篇

2 |

3 | ## LangChain for LLM Application Development

4 |

5 | 本次简短课程涵盖了一系列LangChain的应用实践,包括处理顾客评论和基于文档回答问题,以及通过LLM判断何时求助外部工具 (如网站) 来回答复杂问题。

6 |

7 | ### 👍🏻 LangChain如此强大

8 |

9 | 构建这类应用曾经需要耗费数周时间,而现在只需要非常少的代码,就可以通过LangChain高效构建所需的应用程序。LangChain已成为开发大模型应用的有力范式,希望大家拥抱这个强大工具,积极探索更多更广泛的应用场景。

10 |

11 | ### 🌈 不同组合->更多可能性

12 |

13 | LangChain还可以协助我们做什么呢:基于CSV文件回答问题、查询sql数据库、与api交互,有很多例子通过Chain以及不同的提示(Prompts)和输出解析器(output parsers)组合得以实现。

14 |

15 | ### 💪🏻 出发~去探索新世界吧~

16 |

17 | 因此非常感谢社区中做出贡献的每一个人,无论是协助文档的改进,还是让其他人更容易上手,还是构建新的Chain打开一个全新的世界。

18 |

19 | 如果你还没有这样做,快去打开电脑,运行 pip install LangChain,然后去使用LangChain、搭建惊艳的应用吧~

20 |

21 |

--------------------------------------------------------------------------------

/99~参考资料/2023~吴恩达~《Building Systems with the ChatGPT API》/readme.md:

--------------------------------------------------------------------------------

1 | # 使用 ChatGPT API 搭建系统

2 |

3 | 吴恩达老师发布的大模型开发新课程,在《Prompt Engineering for Developers》课程的基础上,指导开发者如何基于 ChatGPT 提供的 API 开发一个完整的、全面的智能问答系统,包括使用大语言模型的基本规范,通过分类与监督评估输入,通过思维链推理及链式提示处理输入,检查并评估系统输出等,介绍了基于大模型开发的新范式,值得每一个有志于使用大模型开发应用程序的开发者学习。

4 |

5 | ### 目录

6 |

7 | 1. 简介 Introduction @Sarai

8 | 2. 模型,范式和 token Language Models, the Chat Format and Tokens @仲泰

9 | 3. 检查输入-分类 Classification @诸世纪

10 | 4. 检查输入-监督 Moderation @诸世纪

11 | 5. 思维链推理 Chain of Thought Reasoning @万礼行

12 | 6. 提示链 Chaining Prompts @万礼行

13 | 7. 检查输入 Check Outputs @仲泰

14 | 8. 评估(端到端系统)Evaluation @邹雨衡

15 | 9. 评估(简单问答)Evaluation-part1 @陈志宏

16 | 10. 评估(复杂问答)Evaluation-part2 @邹雨衡

17 | 11. 总结 Conclusion @Sarai

18 |

--------------------------------------------------------------------------------

/99~参考资料/2023~吴恩达~《ChatGPT Prompt Engineering for Developers》/09. 总结.md:

--------------------------------------------------------------------------------

1 | 恭喜你完成了这门短期课程。

2 |

3 | 总的来说,在这门课程中,我们学习了关于prompt的两个关键原则:

4 |

5 | - 编写清晰具体的指令;

6 | - 如果适当的话,给模型一些思考时间。

7 |

8 | 你还学习了迭代式prompt开发的方法,并了解了如何找到适合你应用程序的prompt的过程是非常关键的。

9 |

10 | 我们还介绍了许多大型语言模型的功能,包括摘要、推断、转换和扩展。你还学会了如何构建自定义聊天机器人。在这门短期课程中,你学到了很多,希望你喜欢这些学习材料。

11 |

12 | 我们希望你能想出一些应用程序的想法,并尝试自己构建它们。请尝试一下并让我们知道你的想法。你可以从一个非常小的项目开始,也许它具有一定的实用价值,也可能完全没有实用价值,只是一些有趣好玩儿的东西。请利用你第一个项目的学习经验来构建更好的第二个项目,甚至更好的第三个项目等。或者,如果你已经有一个更大的项目想法,那就去做吧。

13 |

14 | 大型语言模型非常强大,作为提醒,我们希望大家负责任地使用它们,请仅构建对他人有积极影响的东西。在这个时代,构建人工智能系统的人可以对他人产生巨大的影响。因此必须负责任地使用这些工具。

15 |

16 | 现在,基于大型语言模型构建应用程序是一个非常令人兴奋和不断发展的领域。现在你已经完成了这门课程,我们认为你现在拥有了丰富的知识,可以帮助你构建其他人今天不知道如何构建的东西。因此,我希望你也能帮助我们传播并鼓励其他人也参加这门课程。

17 |

18 | 最后,希望你在完成这门课程时感到愉快,感谢你完成了这门课程。我们期待听到你构建的惊人之作。

--------------------------------------------------------------------------------

/经典自然语言/词嵌入/概述.md:

--------------------------------------------------------------------------------

1 | 预训练的词向量已经引领自然语言处理很长时间。Word2vec[4] 在 2013 年被作为一个近似的语言建模模型而提出。当时,硬件速度比现在要慢很多,并且深度学习模型也还没有得到广泛的支持,Word2vec 凭借着自身的效率和易用性被采用。从那时起,实施 NLP 项目的标准方法基本上就没变过:通过 Word2vec 和 GloVe[5] 等算法在大量未标注的数据上进行预训练获得词嵌入向量 (word embedding),然后把词嵌入向量用于初始化神经网络的第一层,而网络的其它部分则是根据特定的任务,利用其余的数据进行训练。在大多数训练数据有限的任务中,这种做法能够使准确率提升 2 到 3 个百分点 [6]。不过,尽管这些预训练的词嵌入向量具有极大的影响力,但是它们存在一个主要的局限:它们只将先前的知识纳入模型的第一层,而网络的其余部分仍然需要从头开始训练。

2 |

3 |

4 |

5 | 由 word2vec 捕捉到的关系(来源:TensorFlow 教程)

6 | Word2vec 以及相关的其它方法属于浅层方法,这是一种以效率换表达力的做法。使用词嵌入向量就像使用仅对图像边缘进行编码的预训练表征来初始化计算机视觉模型,尽管这种做法对许多任务都是有帮助的,但是却无法捕捉到那些也许更有用的高层次信息。采用词嵌入向量初始化的模型需要从头开始学习,模型不仅要学会消除单词歧义,还要理解单词序列的意义。这是语言理解的核心内容,它需要对复杂的语言现象建模,例如语义合成性(compositionality)、多义性(polysemy)、指代(anaphora)、长期依赖(long-term dependencies)、一致性(agreement)和否定(negation)等。因此,使用这些浅层表征初始化的自然语言处理模型仍然需要大量的训练样本,才能获得良好的性能。

7 |

--------------------------------------------------------------------------------

/经典自然语言/语法语义分析/命名实体识别.md:

--------------------------------------------------------------------------------

1 | # 命名实体识别

2 |

3 | 命名实体识别(Named Entity Recognition, NER),又称作“专名识别”,主要任务是识别出文本中的人名、地名等专有名称和有意义的时间、日期等数量短语并加以归类。对很多文本挖掘任务来说,命名实体识别系统是重要的组成部分:一方面,命名实体识别可以帮助识别未登录词,而根据 SIGHAN Bakeoff 的数据评测结果,未登录词造成的分词精度损失远大于歧义;另一方面,对关键词提取等任务来说,命名实体的类别是非常有用的文本特征。

4 |

5 | 命名实体是命名实体识别的研究主体,一般包括3大类(实体类、时间类和数字类)和7小类(人名、地名、机构名、时间、日期、货币和百分比)命名实体。当然对于某些特定的应用场景,也可以把产品名、电影电视剧名、编程类库名等作为命名实体的类别。时间、日期、货币等实体识别通常可以采用模式匹配的方式获得较好的识别效果,而人名、地名、机构名的识别方法则比较复杂。

6 |

7 | 命名实体识别的过程通常分两步:识别实体边界、确定实体类别。英语中的命名实体具有比较明显的形态标志,如人名、地名等实体中的每个词的第一个字母要大写等,所以实体边界识别相对来说比较容易。中文内在的特殊性决定了在文本处理时首先必须进行词法分析,中文命名实体识别的难度要比英文的难度大。

8 |

9 | 一个完善的命名实体识别系统应该是词典、规则、统计学习的方法相结合。

10 |

11 | 1. 可以对原始文本进行细粒度的分词,多个连续的单字可以作为命名实体的候选结果;识别文本中的“”以及《》等配对的标点符号,当中的文本也可以作为候选结果。

12 | 2. 挖掘各个领域的专名词典,对候选结果进行前向最大匹配,匹配到的很有可能是各个类别的命名实体。

13 | 3. 利用隐马尔科夫链(HMM)、最大熵(ME)、条件随机场(CRF)等统计模型进行识别,[命名实体识别调研](www.nilday.com/命名实体识别调研/) 有各个模型的效果总结。

14 |

--------------------------------------------------------------------------------

/99~参考资料/2023~吴恩达~《LangChain for LLM Application Development》/1.开篇介绍.md:

--------------------------------------------------------------------------------

1 | ## 吴恩达 LangChain大模型应用开发 开端篇

2 |

3 | ## LangChain for LLM Application Development

4 |

5 | 欢迎来到LangChain大模型应用开发短期课程👏🏻👏🏻

6 |

7 | 本课程由哈里森·蔡斯 (Harrison Chase,LangChain作者)与Deeplearning.ai合作开发,旨在教大家使用这个神奇工具。

8 |

9 | ### 🚀 LangChain的诞生和发展

10 |

11 | 通过提示LLM或大型语言模型,现在可以比以往更快地开发AI应用程序,但是一个应用程序可能需要提示和多次并暂停作为输出。

12 |

13 | 在此过程有很多胶水代码需要编写,因此哈里森·蔡斯 (Harrison Chase) 创建了LangChain,整合了常见的抽象功能,使开发过程变得更加丝滑。

14 |

15 | LangChain开源社区快速发展,贡献者已达数百人,正以惊人的速度更新代码和功能。

16 |

17 |

18 |

19 | ### 📚 课程基本内容

20 |

21 | LangChain是用于构建大模型应用程序的开源框架,有Python和JavaScript两个不同版本的包。LangChain基于模块化组合,有许多单独的组件,可以一起使用或单独使用。此外LangChain还拥有很多应用案例,帮助我们了解如何将这些模块化组件组合成链式方式,以形成更多端到端的应用程序 。

22 |

23 | 在本课程中,我们将介绍LandChain的常见组件,并讨论模型、提示(使模型执行操作的方式)、索引(处理数据的方式),然后将讨论链式(端到端用例)以及令人激动的代理(使用模型作为推理引擎的端到端应用)。

24 |

25 |

26 |

27 | ### 🌹致谢课程重要贡献者

28 |

29 | 最后特别感谢Ankush Gholar(LandChain的联合作者)、Geoff Ladwig,、Eddy Shyu 以及 Diala Ezzedine,他们也为课程内容投入了很多思考~

--------------------------------------------------------------------------------

/99~参考资料/2023~吴恩达~《ChatGPT Prompt Engineering for Developers》/00.README.md:

--------------------------------------------------------------------------------

1 | # Prompt Engineering for Developers

2 |

3 | LLM 正在逐步改变人们的生活,而对于开发者,如何基于 LLM 提供的 API 快速、便捷地开发一些具备更强能力、集成LLM 的应用,来便捷地实现一些更新颖、更实用的能力,是一个急需学习的重要能力。由吴恩达老师与 OpenAI 合作推出的 《ChatGPT Prompt Engineering for Developers》教程面向入门 LLM 的开发者,深入浅出地介绍了对于开发者,如何构造 Prompt 并基于 OpenAI 提供的 API 实现包括总结、推断、转换等多种常用功能,是入门 LLM 开发的经典教程。在可预见的未来,该教程会成为 LLM 的重要入门教程,但是目前还只支持英文版且国内访问受限,打造中文版且国内流畅访问的教程具有重要意义。因此,我们将该课程翻译为中文,并复现其范例代码,支持国内中文学习者直接使用,以帮助中文学习者更好地学习 LLM 开发。

4 |

5 | 本教程为吴恩达《ChatGPT Prompt Engineering for Developers》课程中文版,主要内容为指导开发者如何构建 Prompt 并基于 OpenAI API 构建新的、基于 LLM 的应用,包括:

6 | > 书写 Prompt 的原则

7 | > 文本总结(如总结用户评论);

8 | > 文本推断(如情感分类、主题提取);

9 | > 文本转换(如翻译、自动纠错);

10 | > 扩展(如书写邮件)

11 |

12 | **目录:**

13 | 1. 简介 Introduction @邹雨衡

14 | 2. Prompt 的构建原则 Guidelines @邹雨衡

15 | 3. 如何迭代优化 Prompt Itrative @邹雨衡

16 | 4. 文本总结 Summarizing @玉琳

17 | 5. 文本推断 @长琴

18 | 6. 文本转换 Transforming @玉琳

19 | 7. 文本扩展 Expand @邹雨衡

20 | 8. 聊天机器人 @长琴

21 | 9. 总结 @长琴

22 |

--------------------------------------------------------------------------------

/.gitignore:

--------------------------------------------------------------------------------

1 | # Ignore all

2 | *

3 |

4 | # Unignore all with extensions

5 | !*.*

6 |

7 | # Unignore all dirs

8 | !*/

9 |

10 | .DS_Store

11 |

12 | # Logs

13 | logs

14 | *.log

15 | npm-debug.log*

16 | yarn-debug.log*

17 | yarn-error.log*

18 |

19 | # Runtime data

20 | pids

21 | *.pid

22 | *.seed

23 | *.pid.lock

24 |

25 | # Directory for instrumented libs generated by jscoverage/JSCover

26 | lib-cov

27 |

28 | # Coverage directory used by tools like istanbul

29 | coverage

30 |

31 | # nyc test coverage

32 | .nyc_output

33 |

34 | # Grunt intermediate storage (http://gruntjs.com/creating-plugins#storing-task-files)

35 | .grunt

36 |

37 | # Bower dependency directory (https://bower.io/)

38 | bower_components

39 |

40 | # node-waf configuration

41 | .lock-wscript

42 |

43 | # Compiled binary addons (https://nodejs.org/api/addons.html)

44 | build/Release

45 |

46 | # Dependency directories

47 | node_modules/

48 | jspm_packages/

49 |

50 | # TypeScript v1 declaration files

51 | typings/

52 |

53 | # Optional npm cache directory

54 | .npm

55 |

56 | # Optional eslint cache

57 | .eslintcache

58 |

59 | # Optional REPL history

60 | .node_repl_history

61 |

62 | # Output of 'npm pack'

63 | *.tgz

64 |

65 | # Yarn Integrity file

66 | .yarn-integrity

67 |

68 | # dotenv environment variables file

69 | .env

70 |

71 | # next.js build output

72 | .next

73 |

--------------------------------------------------------------------------------

/99~参考资料/2023~吴恩达~《ChatGPT Prompt Engineering for Developers》/01. 简介.md:

--------------------------------------------------------------------------------

1 | # 简介

2 |

3 | **作者 吴恩达教授**

4 |

5 | 欢迎来到本课程,我们将为开发人员介绍 ChatGPT 提示工程。本课程由 Isa Fulford 教授和我一起授课。Isa Fulford 是 OpenAI 的技术团队成员,曾开发过受欢迎的 ChatGPT 检索插件,并且在教授人们如何在产品中使用 LLM 或 LLM 技术方面做出了很大贡献。她还参与编写了教授人们使用 Prompt 的 OpenAI cookbook。

6 |

7 | 互联网上有很多有关提示的材料,例如《30 prompts everyone has to know》之类的文章。这些文章主要集中在 ChatGPT Web 用户界面上,许多人在使用它执行特定的、通常是一次性的任务。但是,我认为 LLM 或大型语言模型作为开发人员的更强大功能是使用 API 调用到 LLM,以快速构建软件应用程序。我认为这方面还没有得到充分的重视。实际上,我们在 DeepLearning.AI 的姊妹公司 AI Fund 的团队一直在与许多初创公司合作,将这些技术应用于许多不同的应用程序上。看到 LLM API 能够让开发人员非常快速地构建应用程序,这真是令人兴奋。

8 |

9 | 在本课程中,我们将与您分享一些可能性以及如何实现它们的最佳实践。

10 |

11 | 随着大型语言模型(LLM)的发展,LLM 大致可以分为两种类型,即基础LLM和指令微调LLM。基础LLM是基于文本训练数据,训练出预测下一个单词能力的模型,其通常是在互联网和其他来源的大量数据上训练的。例如,如果你以“从前有一只独角兽”作为提示,基础LLM可能会继续预测“生活在一个与所有独角兽朋友的神奇森林中”。但是,如果你以“法国的首都是什么”为提示,则基础LLM可能会根据互联网上的文章,将答案预测为“法国最大的城市是什么?法国的人口是多少?”,因为互联网上的文章很可能是有关法国国家的问答题目列表。

12 |

13 | 许多 LLMs 的研究和实践的动力正在指令调整的 LLMs 上。指令调整的 LLMs 已经被训练来遵循指令。因此,如果你问它,“法国的首都是什么?”,它更有可能输出“法国的首都是巴黎”。指令调整的 LLMs 的训练通常是从已经训练好的基本 LLMs 开始,该模型已经在大量文本数据上进行了训练。然后,使用输入是指令、输出是其应该返回的结果的数据集来对其进行微调,要求它遵循这些指令。然后通常使用一种称为 RLHF(reinforcement learning from human feedback,人类反馈强化学习)的技术进行进一步改进,使系统更能够有帮助地遵循指令。

14 |

15 | 因为指令调整的 LLMs 已经被训练成有益、诚实和无害的,所以与基础LLMs相比,它们更不可能输出有问题的文本,如有害输出。许多实际使用场景已经转向指令调整的LLMs。您在互联网上找到的一些最佳实践可能更适用于基础LLMs,但对于今天的大多数实际应用,我们建议将注意力集中在指令调整的LLMs上,这些LLMs更容易使用,而且由于OpenAI和其他LLM公司的工作,它们变得更加安全和更加协调。

16 |

17 | 因此,本课程将重点介绍针对指令调整 LLM 的最佳实践,这是我们建议您用于大多数应用程序的。在继续之前,我想感谢 OpenAI 和 DeepLearning.ai 团队为 Izzy 和我所提供的材料作出的贡献。我非常感激 OpenAI 的 Andrew Main、Joe Palermo、Boris Power、Ted Sanders 和 Lillian Weng,他们参与了我们的头脑风暴材料的制定和审核,为这个短期课程编制了课程大纲。我也感激 Deep Learning 方面的 Geoff Ladwig、Eddy Shyu 和 Tommy Nelson 的工作。

18 |

19 | 当您使用指令调整 LLM 时,请类似于考虑向另一个人提供指令,假设它是一个聪明但不知道您任务的具体细节的人。当 LLM 无法正常工作时,有时是因为指令不够清晰。例如,如果您说“请为我写一些关于阿兰·图灵的东西”,清楚表明您希望文本专注于他的科学工作、个人生活、历史角色或其他方面可能会更有帮助。更多的,您还可以指定文本采取像专业记者写作的语调,或者更像是您向朋友写的随笔。

20 |

21 | 当然,如果你想象一下让一位新毕业的大学生为你完成这个任务,你甚至可以提前指定他们应该阅读哪些文本片段来写关于 Alan Turing的文本,那么这能够帮助这位新毕业的大学生更好地成功完成这项任务。下一章你会看到如何让提示清晰明确,创建提示的一个重要原则,你还会从提示的第二个原则中学到给LLM时间去思考。

--------------------------------------------------------------------------------

/99~参考资料/2023~吴恩达~《LangChain for LLM Application Development》/Data.csv:

--------------------------------------------------------------------------------

1 | Product,Review

2 | Queen Size Sheet Set,"I ordered a king size set. My only criticism would be that I wish seller would offer the king size set with 4 pillowcases. I separately ordered a two pack of pillowcases so I could have a total of four. When I saw the two packages, it looked like the color did not exactly match. Customer service was excellent about sending me two more pillowcases so I would have four that matched. Excellent! For the cost of these sheets, I am satisfied with the characteristics and coolness of the sheets."

3 | Waterproof Phone Pouch,"I loved the waterproof sac, although the opening was made of a hard plastic. I don’t know if that would break easily. But I couldn’t turn my phone on, once it was in the pouch."

4 | Luxury Air Mattress,"This mattress had a small hole in the top of it (took forever to find where it was), and the patches that they provide did not work, maybe because it's the top of the mattress where it's kind of like fabric and a patch won't stick. Maybe I got unlucky with a defective mattress, but where's quality assurance for this company? That flat out should not happen. Emphasis on flat. Cause that's what the mattress was. Seriously horrible experience, ruined my friend's stay with me. Then they make you ship it back instead of just providing a refund, which is also super annoying to pack up an air mattress and take it to the UPS store. This company is the worst, and this mattress is the worst."

5 | Pillows Insert,"This is the best throw pillow fillers on Amazon. I’ve tried several others, and they’re all cheap and flat no matter how much fluffing you do. Once you toss these in the dryer after you remove them from the vacuum sealed shipping material, they fluff up great"

6 | "Milk Frother Handheld

7 | "," I loved this product. But they only seem to last a few months. The company was great replacing the first one (the frother falls out of the handle and can't be fixed). The after 4 months my second one did the same. I only use the frother for coffee once a day. It's not overuse or abuse. I'm very disappointed and will look for another. As I understand they will only replace once. Anyway, if you have one good luck."

8 | "L'Or Espresso Café

9 | ","Je trouve le goût médiocre. La mousse ne tient pas, c'est bizarre. J'achète les mêmes dans le commerce et le goût est bien meilleur...

10 | Vieux lot ou contrefaçon !?"

11 | Hervidor de Agua Eléctrico,"Está lu bonita calienta muy rápido, es muy funcional, solo falta ver cuánto dura, solo llevo 3 días en funcionamiento."

--------------------------------------------------------------------------------

/行业应用/机器人问答/README.md:

--------------------------------------------------------------------------------

1 | # 机器人问答

2 |

3 | 当用户询问了机器人,机器人会根据聊天上下文,到知识库里寻找最合适的解决方案,并将答案返回给用户。如果机器人解决不了,则会由人工进行处理。机器人问答常用的组织形式有 FAQ(非结构化),KB(结构化)两类。我们这里讲的定位是 FAQ 形式。

4 |

5 | 知识库首先由很多的 FAQ 构成,比如构建一个客服领域的知识库,就需要将客服整个垂直场景涉及的问题都罗列出来,并配置相应的答案。比如,“我要怎么开淘宝店?”,“我要怎么退款”,“我的密码要怎么重置”。成千上万的 FAQ 对,构成了整个知识库的基础。机器人在回答时,就是从知识库里找到和用户问题非常接近的标准问题(称为“知识”),用它的答案进行回复。

6 |

7 |

8 |

9 | # 知识库的组织

10 |

11 | 知识库的逻辑构成如下,3 层结构:标准问题,相似问题,标准答案。一个标准问题对应一个标准答案,一个标准问题下有多个相似问题。机器人定位时,使用标准问题和相似问题进行定位。

12 |

13 |

14 |

15 | # 算法架构

16 |

17 | 一般的机器人问答链路中我们主要会从不同的链路去生成结果:

18 |

19 | - 检索链路:BERT, HCNN, OpenSearch

20 | - 生成链路:Transformer

21 | - 规则链路:Tire 树,基于依存句法的生成

22 | - 辅助算法:敏感词过滤,语言模型,关键词聚类

23 |

24 |

25 |

26 | ## 检索链路

27 |

28 | ### 模糊搜索

29 |

30 | 检索链路首先是从 OpenSearch 中进行搜索,我们的检索库是定期更新数据,数据通过 ODPS 进行处理,从 ODPS 导入 OpenSearch,没有实时增量,所以 OpenSearch 的基础能力满足我们的需求。公开语料库譬如百度知道,全量 4.5 亿调。语料都通过 ODPS 的 UDF,先进行语料清洗,再进行语料去重。

31 |

32 | 索引构建阶段,使用 alinlp 电商分词的结果进行索引构建,对名词和动词做了单独处理。使用 alinlp 电商分词后,构建搜索表达式,增加名词和动词的权重,通过 OpenSearch 进行搜索。

33 |

34 | ### HCNN 精排

35 |

36 | 精排过程就是比较两个句子的相似度,比较方式一般有两种,Sentence Interaction 和 Sentence Embedding。SE 就是将两个句子变成同一空间里的独立向量,然后计算这两个向量之间的余弦相似度。典型代表如:DSSM,ABCNN。SI 就是将两个句子进行交叉,比如使用向量构造矩阵,通过对矩阵的理解,得到句子之间的相似度。典型代表如:Pyramid。

37 |

38 | HCNN 是 Hybrid CNN 的缩写,它包括了 SE 和 SI,分别构造左右两个子网络,一个是 SI,一个是 SE,把两种方式进行了结合。

39 |

40 |

41 |

42 | ### BERT 精排

43 |

44 | BERT 的信息抽取器是 Transformer,Transformer 在翻译的任务里就表现出了极强的信息抽取能力,再经过大量数据进行训练,我们相信 BERT 能够比 HCNN 有更好的效果。

45 |

46 | ## 生成链路

47 |

48 | 针对生成问题,由于是有无到有,而且整个模型是端到端生成,人工可以干预的地方并不多,对整个模型的控制几种在模型设计、超参配置和训练过程中。

49 |

50 | 在开发阶段,我们尝试了 Seq2Seq、ConSeq2Seq,以上模型经常生成 save answer 和不通顺的语句,场景建模能力较弱,非该场景的生成语句偏多。在尝试了 Transformer + Beam Search 的架构后,Transformer 本身的并行化特性,以及网络结构里,Multi-head Attention 能够获取到更丰富语义,生成效率和生成句子的多样性以及句子质量都得到了答复提高。

51 |

52 |

53 |

54 | ## 规则链路

55 |

56 | ### Trie 树

57 |

58 | 使用 Trie 树替换原始句子里的同义词。原始 Trie 树包含了模糊匹配、语义节点、集合词、同义词等,目的是为了扩大覆盖,语义归一。但是我们的需求是替换原始句子的同义词,所以很多功能用不上。考虑到修改原始 Trie 树的成本比较大,于是写了 MiniTrie 树,只做同义词替换。

59 |

60 | MiniTrie 先读取 Trie 树的同义词文件,在内存里建立替换关系图,然后对输入的 Query 可以进行替换,输出结果。MiniTrie 在同义词替换时,支持最小匹配、最大匹配、全匹配三种匹配方式,通过参数进行配置。

61 |

62 | ### 基于依存句法的生成

63 |

64 |

65 |

66 | 将 root 出发的子树进行合并,调整/删除子树,构造新的句子。整个流程包括两部分,训练和预测。训练是依赖于相似的句对,构造可转换的规则库。

67 |

68 | 1)确保相似句对来自同一个领域

69 | 2)使用 Alinlp 对句对分别进行依存句法分析,获得 chunk 序列,包含依存关系及相应的 label

70 | 3)使用 chunk 合并器,对生成的 chunk 序列进行合并

71 | 4)取 chunk 序列的 label 作为句子表示,将句对的 label 序列作为一条规则加入到规则库

72 |

73 | 预测阶段通过规则库,对转换后的句子进行筛选:

74 |

75 | 1)使用 Alinlp 对输入语句进行依存句法分析,获得 chunk 序列,包含依存关系及相应的 label

76 | 2)使用 chunk 合并器,对生成的 chunk 序列进行合并

77 | 3)对 chunk 序列进行位置置换,或者删除 chunk,生成候选集

78 | 4)对候选集中的 chunk 序列,取 label 作为表示,用规则库判断转换是否合理,不合理的则丢弃

79 |

80 |

81 |

82 | ## 辅助算法

83 |

84 | ### 语言模型

85 |

86 | 多层双向 LSTM,使用淘系语料进行训练

87 |

88 | ### 聚类

89 |

90 | 基于 TextRank 的关键词聚类

91 |

92 | ### 敏感词过滤

93 |

94 | 基于 KFC 的 AC 自动机和双数组 trie 树的关键词过滤

95 |

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 | [![Contributors][contributors-shield]][contributors-url]

2 | [![Forks][forks-shield]][forks-url]

3 | [![Stargazers][stars-shield]][stars-url]

4 | [![Issues][issues-shield]][issues-url]

5 | [][license-url]

6 |

7 |

8 |

9 |

10 |

11 |  12 |

13 |

14 |

12 |

13 |

14 |

15 | 在线阅读 >>

16 |

17 |

18 | 代码案例

19 | ·

20 | 参考资料

21 |

22 |

23 |

24 |

25 |

26 |

27 |

28 |

29 | # 深入浅出 Python 机器学习与自然语言处理

30 |

31 | 20 年来,NLP 的技术也经历了从基于语法语义规则系统(1970s-1990s)迁移到基于统计机器学习的框架(2000s-2014)并进一步发展为基于大数据和深度学习的 NLP 技术范式(2014 至今)。

32 |

33 |

34 |

35 |

36 |

37 | # Nav | 关联导航

38 |

39 | # About | 关于

40 |

41 |

42 |

43 | ## Contributing

44 |

45 | Contributions are what make the open source community such an amazing place to be learn, inspire, and create. Any contributions you make are **greatly appreciated**.

46 |

47 | 1. Fork the Project

48 | 2. Create your Feature Branch (`git checkout -b feature/AmazingFeature`)

49 | 3. Commit your Changes (`git commit -m 'Add some AmazingFeature'`)

50 | 4. Push to the Branch (`git push origin feature/AmazingFeature`)

51 | 5. Open a Pull Request

52 |

53 |

54 |

55 | ## Acknowledgements

56 |

57 | - [Awesome-Lists](https://github.com/wx-chevalier/Awesome-Lists): 📚 Guide to Galaxy, curated, worthy and up-to-date links/reading list for ITCS-Coding/Algorithm/SoftwareArchitecture/AI. 💫 ITCS-编程/算法/软件架构/人工智能等领域的文章/书籍/资料/项目链接精选。

58 |

59 | - [Awesome-CS-Books](https://github.com/wx-chevalier/Awesome-CS-Books): :books: Awesome CS Books/Series(.pdf by git lfs) Warehouse for Geeks, ProgrammingLanguage, SoftwareEngineering, Web, AI, ServerSideApplication, Infrastructure, FE etc. :dizzy: 优秀计算机科学与技术领域相关的书籍归档。

60 |

61 | ## Copyright & More | 延伸阅读

62 |

63 | 笔者所有文章遵循[知识共享 署名 - 非商业性使用 - 禁止演绎 4.0 国际许可协议](https://creativecommons.org/licenses/by-nc-nd/4.0/deed.zh),欢迎转载,尊重版权。您还可以前往 [NGTE Books](https://ng-tech.icu/books-gallery/) 主页浏览包含知识体系、编程语言、软件工程、模式与架构、Web 与大前端、服务端开发实践与工程架构、分布式基础架构、人工智能与深度学习、产品运营与创业等多类目的书籍列表:

64 |

65 | [](https://ng-tech.icu/books-gallery/)

66 |

67 |

68 |

69 |

70 | [contributors-shield]: https://img.shields.io/github/contributors/wx-chevalier/NLP-Notes.svg?style=flat-square

71 | [contributors-url]: https://github.com/wx-chevalier/NLP-Notes/graphs/contributors

72 | [forks-shield]: https://img.shields.io/github/forks/wx-chevalier/NLP-Notes.svg?style=flat-square

73 | [forks-url]: https://github.com/wx-chevalier/NLP-Notes/network/members

74 | [stars-shield]: https://img.shields.io/github/stars/wx-chevalier/NLP-Notes.svg?style=flat-square

75 | [stars-url]: https://github.com/wx-chevalier/NLP-Notes/stargazers

76 | [issues-shield]: https://img.shields.io/github/issues/wx-chevalier/NLP-Notes.svg?style=flat-square

77 | [issues-url]: https://github.com/wx-chevalier/NLP-Notes/issues

78 | [license-shield]: https://img.shields.io/github/license/wx-chevalier/NLP-Notes.svg?style=flat-square

79 | [license-url]: https://github.com/wx-chevalier/NLP-Notes/blob/master/LICENSE.txt

80 |

--------------------------------------------------------------------------------

/经典自然语言/统计语言模型/词表示.md:

--------------------------------------------------------------------------------

1 | # Natural Language Processing

2 |

3 | ———这里是正式回答的分割线————

4 |

5 | 自然语言处理(简称 NLP),是研究计算机处理人类语言的一门技术,包括:

6 |

7 | 1.句法语义分析:对于给定的句子,进行分词、词性标记、命名实体识别和链接、句法分析、语义角色识别和多义词消歧。

8 |

9 | 2.信息抽取:从给定文本中抽取重要的信息,比如,时间、地点、人物、事件、原因、结果、数字、日期、货币、专有名词等等。通俗说来,就是要了解谁在什么时候、什么原因、对谁、做了什么事、有什么结果。涉及到实体识别、时间抽取、因果关系抽取等关键技术。

10 |

11 | 3.文本挖掘(或者文本数据挖掘):包括文本聚类、分类、信息抽取、摘要、情感分析以及对挖掘的信息和知识的可视化、交互式的表达界面。目前主流的技术都是基于统计机器学习的。

12 |

13 | 4.机器翻译:把输入的源语言文本通过自动翻译获得另外一种语言的文本。根据输入媒介不同,可以细分为文本翻译、语音翻译、手语翻译、图形翻译等。机器翻译从最早的基于规则的方法到二十年前的基于统计的方法,再到今天的基于神经网络(编码-解码)的方法,逐渐形成了一套比较严谨的方法体系。

14 |

15 | 5.信息检索:对大规模的文档进行索引。可简单对文档中的词汇,赋之以不同的权重来建立索引,也可利用 1,2,3 的技术来建立更加深层的索引。在查询的时候,对输入的查询表达式比如一个检索词或者一个句子进行分析,然后在索引里面查找匹配的候选文档,再根据一个排序机制把候选文档排序,最后输出排序得分最高的文档。

16 |

17 | 6.问答系统: 对一个自然语言表达的问题,由问答系统给出一个精准的答案。需要对自然语言查询语句进行某种程度的语义分析,包括实体链接、关系识别,形成逻辑表达式,然后到知识库中查找可能的候选答案并通过一个排序机制找出最佳的答案。

18 |

19 | 7.对话系统:系统通过一系列的对话,跟用户进行聊天、回答、完成某一项任务。涉及到用户意图理解、通用聊天引擎、问答引擎、对话管理等技术。此外,为了体现上下文相关,要具备多轮对话能力。同时,为了体现个性化,要开发用户画像以及基于用户画像的个性化回复。自然语言处理经历了从规则的方法到基于统计的方法。基于统计的自然语言处理方法,在数学模型上和通信就是相同的,甚至相同的。但是科学家们也是用了几十年才认识到这个问题。统计语言模型的初衷是为了解决语音识别问题,在语音识别中,计算机需要知道一个文字序列能否构成一个有意义的句子。

20 |

21 | **简单**

22 |

23 | - 拼写检查

24 | - 关键字搜索

25 | - 查找同义词

26 |

27 | **中等难度**

28 |

29 | - 从网络或文档中提取信息

30 |

31 | **难**

32 |

33 | - 机器翻译(号称自然语言领域的圣杯)

34 | - 语义分析(一句话是什么意思)

35 | - 交叉引用(一句话中,他,这个等代词所对应的主体是哪个)

36 | - 问答系统(Siri, Google Now, 小娜等)

37 |

38 | # Word Representation:词表示

39 |

40 | 自然语言理解的问题要转化为机器学习的问题,第一步肯定是要找一种方法把这些符号数学化。

41 |

42 | ## 词典

43 |

44 | 现实生活中,我们通过查词典来知道一个词的意思,这实际上是用另外的词或短语来表达一个词。这一方法在计算机领域也有,比如 [WordNet](http://wordnet.princeton.edu/) 实际上就是个电子化的英语词典。

45 |

46 | 然而,这一方式有以下几个问题:

47 |

48 | - 有大量的同义词,不利于计算

49 | - 更新缓慢,没有办法自动地添加新词

50 | - 一个词释义含有比较明显的主观色彩

51 | - 需要人工来创建和维护

52 | - 很难计算词的相似性

53 | - 很难进行计算,因为计算机本质上只认识 0 和 1

54 |

55 | ## One-hot Representation:基于统计的词语向量表达

56 |

57 | NLP 中最直观,也是到目前为止最常用的词表示方法是 One-hot Representation,这种方法把每个词表示为一个很长的向量。这个向量的维度是词表大小,其中绝大多数元素为 0,只有一个维度的值为 1,这个维度就代表了当前的词。

58 | 比如,在一个精灵国里,他们的语言非常简单,总共只有三句话:

59 |

60 | 1. I like NLP.

61 | 2. I like deep learning.

62 | 3. I enjoy flying.

63 |

64 | 这样,我们可以看到这个精灵国的词典是 [I, like, NLP, deep, learning, enjoy, flying, .]。没错,我们把标点也认为是一个词。用向量来表达词时,我们创建一个向量,向量的维度与词典的个数相同,然后让向量的某个位置为 1,其他位置全为 0。这样就创建了一个向量词 (one-hot)。

65 |

66 | 比如,在我们的精灵国里,I 这个词的向量是:[1 0 0 0 0 0 0 0], deep 这个词的向量表达是 [0 0 0 1 0 0 0 0]。

67 |

68 | 看起来挺好,我们终于把词转换为 0 和 1 这种计算机能理解的格式了。然而,这种表达也有个问题,很多同义词没办法表达出来,因为他们是不同的向量。怎么解决这个问题呢?我们可以通过词的上下文来表达一个词。通过上下文表达一个词的另外一个好处是,一个词往往有多个意思,具体在某个句子里是什么意思往往由它的上下文决定。

69 |

70 | 这种 One-hot Representation 如果采用稀疏方式存储,会是非常的简洁:也就是给每个词分配一个数字

71 |

72 | ID。比如刚才的例子中,话筒记为 3,麦克记为 8(假设从 0 开始记)。如果要编程实现的话,用 Hash

73 |

74 | 表给每个词分配一个编号就可以了。这么简洁的表示方法配合上最大熵、SVM、CRF 等等算法已经很好地完成了 NLP 领域的各种主流任务。

75 |

76 | 当然这种表示方法也存在一个重要的问题就是“词汇鸿沟”现象:任意两个词之间都是孤立的。光从这两个向量中看不出两个词是否有关系,哪怕是话筒和麦克这样的同义词也不能幸免于难。

77 |

78 | ## 基于上下文的表达

79 |

80 | > You shall know a word by the company it keeps. --- (J. R. Firth 1957: 11)

81 |

82 | 词向量(Distributed Representation)

83 |

84 | 而是用 **Distributed Representation**(不知道这个应该怎么翻译,因为还存在一种叫“Distributional Representation”的表示方法,又是另一个不同的概念)表示的一种低维实数向量。这种向量一般长成这个样子:[0.792, −0.177,−0.107, 0.109, −0.542, …]。维度以 50 维和 100 维比较常见。这种向量的表示不是唯一的,后文会提到目前计算出这种向量的主流方法。(个人认为)Distributed representation

85 |

86 | 最大的贡献就是让相关或者相似的词,在距离上更接近了。向量的距离可以用最传统的欧氏距离来衡量,也可以用 cos 夹角来衡量。用这种方式表示的向量,“麦克”和“话筒”的距离会远远小于“麦克”和“天气”。可能理想情况下“麦克”和“话筒”的表示应该是完全一样的,但是由于有些人会把英文名“迈克”也写成“麦克”,导致“麦克”一词带上了一些人名的语义,因此不会和“话筒”完全一致。

87 |

88 | # Document Representation(文档表示)

89 |

90 | ## Bag-of-Words

91 |

92 | BOW (bag of words) 模型简介 Bag of words 模型最初被用在文本分类中,将文档表示成特征矢量。它的基本思想是假定对于一个文本,忽略其词序和语法、句法,仅仅将其看做是一些词汇的集合,而文本中的每个词汇都是独立的。简单说就是讲每篇文档都看成一个袋子(因为里面装的都是词汇,所以称为词袋,Bag of words 即因此而来),然后看这个袋子里装的都是些什么词汇,将其分类。如果文档中猪、马、牛、羊、山谷、土地、拖拉机这样的词汇多些,而银行、大厦、汽车、公园这样的词汇少些,我们就倾向于判断它是一篇描绘乡村的文档,而不是描述城镇的。举个例子,有如下两个文档:

93 |

94 | 文档一:Bob likes to play basketball, Jim likes too.

95 |

96 | 文档二:Bob also likes to play football games.

97 |

98 | BOW 不仅是直观的感受,其数学原理还依托于离散数学中的[多重集](https://zh.m.wikipedia.org/wiki/%E5%A4%9A%E9%87%8D%E9%9B%86)这个概念,多重集或多重集合是数学中的一个概念,是集合概念的推广。在一个集合中,相同的元素只能出现一次,因此只能显示出有或无的属性。在多重集之中,同一个元素可以出现多次。正式的多重集的概念大约出现在 1970 年代。多重集的势的计算和一般集合的计算方法一样,出现多次的元素则需要按出现的次数计算,不能只算一次。一个元素在多重集里出现的次数称为这个元素在多重集里面的重数(或重次、重复度)。举例来说,{1,2,3} 是一个集合,而 {\displaystyle \left\{1,1,1,2,2,3\right\}} 不是一个集合,而是一个多重集。其中元素 1 的重数是 3,2 的重数是 2,3 的重数是 1。{\displaystyle \left\{1,1,1,2,2,3\right\}} 的元素个数是 6。有时为了和一般的集合相区别,多重集合会用方括号而不是花括号标记,比如 {\displaystyle \left\{1,1,1,2,2,3\right\}} 会被记为 {\displaystyle \left[1,1,1,2,2,3\right]}。和多元组或数组的概念不同,多重集中的元素是没有顺序分别的,也就是说 {\displaystyle \left[1,1,1,2,2,3\right]} 和 {\displaystyle \left[1,1,2,1,2,3\right]} 是同一个多重集。

99 |

100 | 基于这两个文本文档,构造一个词典:

101 |

102 | Dictionary = {1:”Bob”, 2. “like”, 3. “to”, 4. “play”, 5. “basketball”, 6. “also”, 7. “football”,8. “games”, 9. “Jim”, 10. “too”}。

103 |

104 | 这个词典一共包含 10 个不同的单词,利用词典的索引号,上面两个文档每一个都可以用一个 10 维向量表示(用整数数字 0~n(n 为正整数)表示某个单词在文档中出现的次数):

105 |

106 | 1:[1, 2, 1, 1, 1, 0, 0, 0, 1, 1]

107 |

108 | 2:[1, 1, 1, 1 ,0, 1, 1, 1, 0, 0]

109 |

110 | 向量中每个元素表示词典中相关元素在文档中出现的次数(下文中,将用单词的直方图表示)。不过,在构造文档向量的过程中可以看到,我们并没有表达单词在原来句子中出现的次序(这是本 Bag-of-words 模型的缺点之一,不过瑕不掩瑜甚至在此处无关紧要)。

111 |

112 | # Distributed Representation:分布式表示

113 |

114 | 分布式表示的

115 |

116 | 某个词的含义可以由其所处上下文中的其他词推导而来,譬如在 `saying that Europe needs unified banking regulation to replace the hodgepodge` 与 `government debt problems turning into banking cries as has happened in`

117 |

--------------------------------------------------------------------------------

/_sidebar.md:

--------------------------------------------------------------------------------

1 | - [1 00~导论](/00~导论/README.md)

2 |

3 | - 2 99~参考资料 [5]

4 | - [2.1 Numbers every LLM Developer should know](/99~参考资料/2023-Numbers%20every%20LLM%20Developer%20should%20know.md)

5 | - 2.2 吴恩达 《Building Systems with the ChatGPT API》 [3]

6 | - [2.2.1 1.Introduction](/99~参考资料/2023-吴恩达-《Building%20Systems%20with%20the%20ChatGPT%20API》/1.Introduction.md)

7 | - [2.2.2 11.conclusion](/99~参考资料/2023-吴恩达-《Building%20Systems%20with%20the%20ChatGPT%20API》/11.conclusion.md)

8 | - [2.2.3 readme](/99~参考资料/2023-吴恩达-《Building%20Systems%20with%20the%20ChatGPT%20API》/readme.md)

9 | - 2.3 吴恩达 《ChatGPT Prompt Engineering for Developers》 [2]

10 | - [2.3.1 00.README](/99~参考资料/2023-吴恩达-《ChatGPT%20Prompt%20Engineering%20for%20Developers》/00.README.md)

11 | - [2.3.2 01. 简介](/99~参考资料/2023-吴恩达-《ChatGPT%20Prompt%20Engineering%20for%20Developers》/01.%20简介.md)

12 | - [2.3.3 09. 总结](/99~参考资料/2023-吴恩达-《ChatGPT%20Prompt%20Engineering%20for%20Developers》/09.%20总结.md)

13 | - 2.4 吴恩达 《LangChain for LLM Application Development》 [3]

14 | - [2.4.1 1.开篇介绍](/99~参考资料/2023-吴恩达-《LangChain%20for%20LLM%20Application%20Development》/1.开篇介绍.md)

15 | - [2.4.2 8.课程总结](/99~参考资料/2023-吴恩达-《LangChain%20for%20LLM%20Application%20Development》/8.课程总结.md)

16 | - [2.4.3 readme](/99~参考资料/2023-吴恩达-《LangChain%20for%20LLM%20Application%20Development》/readme.md)

17 | - [2.5 陆奇 我的大模型世界观](/99~参考资料/2023-陆奇-我的大模型世界观.md)

18 | - [3 INTRODUCTION](/INTRODUCTION.md)

19 | - [4 LLM [7]](/LLM/README.md)

20 | - 4.1 99~参考资料 [3]

21 | - [4.1.1 2023~Ben Clarkson~Building an LLM from scratch](/LLM/99~参考资料/2023~Ben%20Clarkson~Building%20an%20LLM%20from%20scratch/README.md)

22 |

23 | - [4.1.2 2023~赵鑫~大语言模型综述 [2]](/LLM/99~参考资料/2023~赵鑫~大语言模型综述/README.md)

24 | - [4.1.2.1 01~引言](/LLM/99~参考资料/2023~赵鑫~大语言模型综述/01~引言.md)

25 | - [4.1.2.2 09~参考](/LLM/99~参考资料/2023~赵鑫~大语言模型综述/09~参考.md)

26 | - [4.1.3 cohere~LLM University [1]](/LLM/99~参考资料/cohere~LLM%20University/README.md)

27 | - [4.1.3.1 01~What are Large Language Models? [1]](/LLM/99~参考资料/cohere~LLM%20University/01~What%20are%20Large%20Language%20Models?/README.md)

28 | - [4.1.3.1.1 01.Text Embeddings](/LLM/99~参考资料/cohere~LLM%20University/01~What%20are%20Large%20Language%20Models?/01.Text%20Embeddings.md)

29 | - 4.2 Agent [1]

30 | - 4.2.1 99~参考资料 [1]

31 | - [4.2.1.1 2023~LLM Agent Survey](/LLM/Agent/99~参考资料/2023~LLM%20Agent%20Survey.md)

32 | - 4.3 GPT [1]

33 | - 4.3.1 ChatGPT [1]

34 | - 4.3.1.1 99~参考资料 [1]

35 | - [4.3.1.1.1 GPT 4 大模型硬核解读](/LLM/GPT/ChatGPT/99~参考资料/2023-GPT-4%20大模型硬核解读.md)

36 | - 4.4 LangChain [1]

37 | - 4.4.1 99~参考资料 [2]

38 | - [4.4.1.1 Hacking LangChain For Fun and Profit](/LLM/LangChain/99~参考资料/2023-Hacking%20LangChain%20For%20Fun%20and%20Profit.md)

39 | - [4.4.1.2 LangChain 中文入门教程](/LLM/LangChain/99~参考资料/2023-LangChain%20中文入门教程.md)

40 | - 4.5 代码生成 [1]

41 | - 4.5.1 99~参考资料 [2]

42 | - [4.5.1.1 An example of LLM prompting for programming](/LLM/代码生成/99~参考资料/2023-An%20example%20of%20LLM%20prompting%20for%20programming.md)

43 | - [4.5.1.2 花了大半个月,我终于逆向分析了 Github Copilot](/LLM/代码生成/99~参考资料/2023-花了大半个月,我终于逆向分析了%20Github%20Copilot.md)

44 | - 4.6 语言模型微调 [2]

45 | - 4.6.1 99~参考资料 [2]

46 | - [4.6.1.1 Finetuning Large Language Models](/LLM/语言模型微调/99~参考资料/2023-Finetuning%20Large%20Language%20Models.md)

47 | - [4.6.1.2 Prompt Tuning:深度解读一种新的微调范式](/LLM/语言模型微调/99~参考资料/2023-Prompt-Tuning:深度解读一种新的微调范式.md)

48 | - 4.6.2 LoRA [1]

49 | - 4.6.2.1 99~参考资料 [1]

50 | - [4.6.2.1.1 2023~LoRA From Scratch – Implement Low Rank Adaptation for LLMs in PyTorch](/LLM/语言模型微调/LoRA/99~参考资料/2023~LoRA%20From%20Scratch%20–%20Implement%20Low-Rank%20Adaptation%20for%20LLMs%20in%20PyTorch.md)

51 | - 4.7 预训练语言模型 [2]

52 | - [4.7.1 BERT [2]](/LLM/预训练语言模型/BERT/README.md)

53 | - [4.7.1.1 目标函数](/LLM/预训练语言模型/BERT/目标函数.md)

54 | - [4.7.1.2 输入表示](/LLM/预训练语言模型/BERT/输入表示.md)

55 | - [4.7.2 Transformer [1]](/LLM/预训练语言模型/Transformer/README.md)

56 | - 4.7.2.1 99~参考资料 [7]

57 | - [4.7.2.1.1 NLP 中的 RNN、Seq2Seq 与 Attention 注意力机制](/LLM/预训练语言模型/Transformer/99~参考资料/2019-NLP%20中的%20RNN、Seq2Seq%20与%20Attention%20注意力机制.md)

58 | - [4.7.2.1.2 举个例子讲下 Transformer 的输入输出细节及其他](/LLM/预训练语言模型/Transformer/99~参考资料/2020-举个例子讲下%20Transformer%20的输入输出细节及其他.md)

59 | - [4.7.2.1.3 完全解析 RNN, Seq2Seq, Attention 注意力机制](/LLM/预训练语言模型/Transformer/99~参考资料/2020-完全解析%20RNN,%20Seq2Seq,%20Attention%20注意力机制.md)

60 | - [4.7.2.1.4 Transformer 模型详解(图解最完整版)](/LLM/预训练语言模型/Transformer/99~参考资料/2021-Transformer%20模型详解(图解最完整版).md)

61 | - [4.7.2.1.5 王嘉宁 【预训练语言模型】Attention Is All You Need(Transformer)](/LLM/预训练语言模型/Transformer/99~参考资料/2021-王嘉宁-【预训练语言模型】Attention%20Is%20All%20You%20Need(Transformer).md)

62 | - [4.7.2.1.6 超详细图解 Self Attention](/LLM/预训练语言模型/Transformer/99~参考资料/2021-超详细图解%20Self-Attention.md)

63 | - [4.7.2.1.7 Transformers from Scratch](/LLM/预训练语言模型/Transformer/99~参考资料/2023-Transformers%20from%20Scratch.md)

64 | - [5 循环神经网络](/循环神经网络/README.md)

65 |

66 | - 6 经典自然语言 [4]

67 | - 6.1 主题模型 [1]

68 | - [6.1.1 LDA](/经典自然语言/主题模型/LDA.md)

69 | - 6.2 统计语言模型 [4]

70 | - [6.2.1 Word2Vec](/经典自然语言/统计语言模型/Word2Vec.md)

71 | - [6.2.2 基础文本处理](/经典自然语言/统计语言模型/基础文本处理.md)

72 | - [6.2.3 统计语言模型](/经典自然语言/统计语言模型/统计语言模型.md)

73 | - [6.2.4 词表示](/经典自然语言/统计语言模型/词表示.md)

74 | - 6.3 词嵌入 [3]

75 | - 6.3.1 99~参考资料 [1]

76 | - [6.3.1.1 2023~Embeddings: What they are and why they matter](/经典自然语言/词嵌入/99~参考资料/2023~Embeddings:%20What%20they%20are%20and%20why%20they%20matter.md)

77 | - [6.3.2 概述](/经典自然语言/词嵌入/概述.md)

78 | - 6.3.3 词向量 [1]

79 | - [6.3.3.1 基于 Gensim 的 Word2Vec 实践](/经典自然语言/词嵌入/词向量/基于%20Gensim%20的%20Word2Vec%20实践.md)

80 | - 6.4 语法语义分析 [1]

81 | - [6.4.1 命名实体识别](/经典自然语言/语法语义分析/命名实体识别.md)

82 | - 7 行业应用 [2]

83 | - [7.1 机器人问答](/行业应用/机器人问答/README.md)

84 |

85 | - [7.2 聊天对话](/行业应用/聊天对话/README.md)

86 |

--------------------------------------------------------------------------------

/index.html:

--------------------------------------------------------------------------------

1 |

2 |

3 |

4 |

5 |

6 | AIDL Series

7 |

8 |

9 |

11 |

12 |

13 |

14 |

15 |

16 |

17 |

34 |

38 |  40 |

45 |

46 |

47 |

64 |

97 |

116 |

117 |

118 |

119 |

120 |

121 |

122 |

123 |

124 |

125 |

126 |

127 |

128 |

129 |

130 |

131 |

132 |

143 |

144 |

145 |

146 |

155 |

156 |

157 |

--------------------------------------------------------------------------------

/经典自然语言/词嵌入/词向量/基于 Gensim 的 Word2Vec 实践.md:

--------------------------------------------------------------------------------

1 | # Word2Vec

2 |

3 | - [基于 Gensim 的 Word2Vec 实践](https://zhuanlan.zhihu.com/p/24961011),从属于笔者的[程序猿的数据科学与机器学习实战手册](https://github.com/wx-chevalier/DataScience-And-MachineLearning-Handbook-For-Coders),代码参考[gensim.ipynb](https://github.com/wx-chevalier/DataScience-And-MachineLearning-Handbook-For-Coders/blob/master/code/python/nlp/genism/gensim.ipynb)。推荐前置阅读[Python 语法速览与机器学习开发环境搭建](https://zhuanlan.zhihu.com/p/24536868),[Scikit-Learn 备忘录](https://zhuanlan.zhihu.com/p/24770526)。

4 |

5 |

6 |

7 | > - [Word2Vec Tutorial](https://rare-technologies.com/word2vec-tutorial/)

8 | > - [Getting Started with Word2Vec and GloVe in Python](http://textminingonline.com/getting-started-with-word2vec-and-glove-in-python)

9 |

10 | ## 模型创建

11 |

12 | [Gensim](http://radimrehurek.com/gensim/models/word2vec.html)中 Word2Vec 模型的期望输入是进过分词的句子列表,即是某个二维数组。这里我们暂时使用 Python 内置的数组,不过其在输入数据集较大的情况下会占用大量的 RAM。Gensim 本身只是要求能够迭代的有序句子列表,因此在工程实践中我们可以使用自定义的生成器,只在内存中保存单条语句。

13 |

14 | ```

15 | # 引入 word2vec

16 | from gensim.models import word2vec

17 |

18 | # 引入日志配置

19 | import logging

20 |

21 | logging.basicConfig(format='%(asctime)s : %(levelname)s : %(message)s', level=logging.INFO)

22 |

23 | # 引入数据集

24 | raw_sentences = ["the quick brown fox jumps over the lazy dogs","yoyoyo you go home now to sleep"]

25 |

26 | # 切分词汇

27 | sentences= [s.encode('utf-8').split() for s in sentences]

28 |

29 | # 构建模型

30 | model = word2vec.Word2Vec(sentences, min_count=1)

31 |

32 | # 进行相关性比较

33 | model.similarity('dogs','you')

34 | ```

35 |

36 | 这里我们调用`Word2Vec`创建模型实际上会对数据执行两次迭代操作,第一轮操作会统计词频来构建内部的词典数结构,第二轮操作会进行神经网络训练,而这两个步骤是可以分步进行的,这样对于某些不可重复的流(譬如 Kafka 等流式数据中)可以手动控制:

37 |

38 | ```

39 | model = gensim.models.Word2Vec(iter=1) # an empty model, no training yet

40 | model.build_vocab(some_sentences) # can be a non-repeatable, 1-pass generator

41 | model.train(other_sentences) # can be a non-repeatable, 1-pass generator

42 | ```

43 |

44 | ### Word2Vec 参数

45 |

46 | - min_count

47 |

48 | ```

49 | model = Word2Vec(sentences, min_count=10) # default value is 5

50 | ```

51 |

52 | 在不同大小的语料集中,我们对于基准词频的需求也是不一样的。譬如在较大的语料集中,我们希望忽略那些只出现过一两次的单词,这里我们就可以通过设置`min_count`参数进行控制。一般而言,合理的参数值会设置在 0~100 之间。

53 |

54 | - size

55 |

56 | `size`参数主要是用来设置神经网络的层数,Word2Vec 中的默认值是设置为 100 层。更大的层次设置意味着更多的输入数据,不过也能提升整体的准确度,合理的设置范围为 10~数百。

57 |

58 | ```

59 | model = Word2Vec(sentences, size=200) # default value is 100

60 | ```

61 |

62 | - workers

63 |

64 | `workers`参数用于设置并发训练时候的线程数,不过仅当`Cython`安装的情况下才会起作用:

65 |

66 | ```

67 | model = Word2Vec(sentences, workers=4) # default = 1 worker = no parallelization

68 | ```

69 |

70 | ## 外部语料集

71 |

72 | 在真实的训练场景中我们往往会使用较大的语料集进行训练,譬如这里以 Word2Vec 官方的[text8](http://mattmahoney.net/dc/text8.zip)为例,只要改变模型中的语料集开源即可:

73 |

74 | ```

75 | sentences = word2vec.Text8Corpus('text8')

76 | model = word2vec.Word2Vec(sentences, size=200)

77 | ```

78 |

79 | 这里语料集中的语句是经过分词的,因此可以直接使用。笔者在第一次使用该类时报错了,因此把 Gensim 中的源代码贴一下,也方便以后自定义处理其他语料集:

80 |

81 | ```

82 | class Text8Corpus(object):

83 | """Iterate over sentences from the "text8" corpus, unzipped from http://mattmahoney.net/dc/text8.zip ."""

84 | def __init__(self, fname, max_sentence_length=MAX_WORDS_IN_BATCH):

85 | self.fname = fname

86 | self.max_sentence_length = max_sentence_length

87 |

88 | def __iter__(self):

89 | # the entire corpus is one gigantic line -- there are no sentence marks at all

90 | # so just split the sequence of tokens arbitrarily: 1 sentence = 1000 tokens

91 | sentence, rest = [], b''

92 | with utils.smart_open(self.fname) as fin:

93 | while True:

94 | text = rest + fin.read(8192) # avoid loading the entire file (=1 line) into RAM

95 | if text == rest: # EOF

96 | words = utils.to_unicode(text).split()

97 | sentence.extend(words) # return the last chunk of words, too (may be shorter/longer)

98 | if sentence:

99 | yield sentence

100 | break

101 | last_token = text.rfind(b' ') # last token may have been split in two... keep for next iteration

102 | words, rest = (utils.to_unicode(text[:last_token]).split(),

103 | text[last_token:].strip()) if last_token >= 0 else ([], text)

104 | sentence.extend(words)

105 | while len(sentence) >= self.max_sentence_length:

106 | yield sentence[:self.max_sentence_length]

107 | sentence = sentence[self.max_sentence_length:]

108 | ```

109 |

110 | 我们在上文中也提及,如果是对于大量的输入语料集或者需要整合磁盘上多个文件夹下的数据,我们可以以迭代器的方式而不是一次性将全部内容读取到内存中来节省 RAM 空间:

111 |

112 | ```

113 | class MySentences(object):

114 | def __init__(self, dirname):

115 | self.dirname = dirname

116 |

117 | def __iter__(self):

118 | for fname in os.listdir(self.dirname):

119 | for line in open(os.path.join(self.dirname, fname)):

120 | yield line.split()

121 |

122 | sentences = MySentences('/some/directory') # a memory-friendly iterator

123 | model = gensim.models.Word2Vec(sentences)

124 | ```

125 |

126 | ## 模型保存与读取

127 |

128 | ```

129 | model.save('text8.model')

130 | 2015-02-24 11:19:26,059 : INFO : saving Word2Vec object under text8.model, separately None

131 | 2015-02-24 11:19:26,060 : INFO : not storing attribute syn0norm

132 | 2015-02-24 11:19:26,060 : INFO : storing numpy array 'syn0' to text8.model.syn0.npy

133 | 2015-02-24 11:19:26,742 : INFO : storing numpy array 'syn1' to text8.model.syn1.npy

134 |

135 | model1 = Word2Vec.load('text8.model')

136 |

137 | model.save_word2vec_format('text.model.bin', binary=True)

138 | 2015-02-24 11:19:52,341 : INFO : storing 71290x200 projection weights into text.model.bin

139 |

140 | model1 = word2vec.Word2Vec.load_word2vec_format('text.model.bin', binary=True)

141 | 2015-02-24 11:22:08,185 : INFO : loading projection weights from text.model.bin

142 | 2015-02-24 11:22:10,322 : INFO : loaded (71290, 200) matrix from text.model.bin

143 | 2015-02-24 11:22:10,322 : INFO : precomputing L2-norms of word weight vectors

144 | ```

145 |

146 | ## 模型预测

147 |

148 | Word2Vec 最著名的效果即是以语义化的方式推断出相似词汇:

149 |

150 | ```

151 | model.most_similar(positive=['woman', 'king'], negative=['man'], topn=1)

152 | [('queen', 0.50882536)]

153 | model.doesnt_match("breakfast cereal dinner lunch";.split())

154 | 'cereal'

155 | model.similarity('woman', 'man')

156 | 0.73723527

157 | model.most_similar(['man'])

158 | [(u'woman', 0.5686948895454407),

159 | (u'girl', 0.4957364797592163),

160 | (u'young', 0.4457539916038513),

161 | (u'luckiest', 0.4420626759529114),

162 | (u'serpent', 0.42716869711875916),

163 | (u'girls', 0.42680859565734863),

164 | (u'smokes', 0.4265017509460449),

165 | (u'creature', 0.4227582812309265),

166 | (u'robot', 0.417464017868042),

167 | (u'mortal', 0.41728296875953674)]

168 | ```

169 |

170 | 如果我们希望直接获取某个单词的向量表示,直接以下标方式访问即可:

171 |

172 | ```

173 | model['computer'] # raw NumPy vector of a word

174 | array([-0.00449447, -0.00310097, 0.02421786, ...], dtype=float32)

175 | ```

176 |

177 | ### 模型评估

178 |

179 | Word2Vec 的训练属于无监督模型,并没有太多的类似于监督学习里面的客观评判方式,更多的依赖于端应用。Google 之前公开了 20000 条左右的语法与语义化训练样本,每一条遵循`A is to B as C is to D`这个格式,地址在[这里](https://word2vec.googlecode.com/svn/trunk/questions-words.txt):

180 |

181 | ```

182 | model.accuracy('/tmp/questions-words.txt')

183 | 2014-02-01 22:14:28,387 : INFO : family: 88.9% (304/342)

184 | 2014-02-01 22:29:24,006 : INFO : gram1-adjective-to-adverb: 32.4% (263/812)

185 | 2014-02-01 22:36:26,528 : INFO : gram2-opposite: 50.3% (191/380)

186 | 2014-02-01 23:00:52,406 : INFO : gram3-comparative: 91.7% (1222/1332)

187 | 2014-02-01 23:13:48,243 : INFO : gram4-superlative: 87.9% (617/702)

188 | 2014-02-01 23:29:52,268 : INFO : gram5-present-participle: 79.4% (691/870)

189 | 2014-02-01 23:57:04,965 : INFO : gram7-past-tense: 67.1% (995/1482)

190 | 2014-02-02 00:15:18,525 : INFO : gram8-plural: 89.6% (889/992)

191 | 2014-02-02 00:28:18,140 : INFO : gram9-plural-verbs: 68.7% (482/702)

192 | 2014-02-02 00:28:18,140 : INFO : total: 74.3% (5654/7614)

193 | ```

194 |

195 | 还是需要强调下,训练集上表现的好也不意味着 Word2Vec 在真实应用中就会表现的很好,还是需要因地制宜。

196 |

197 | # 模型训练

198 |

199 | ## 简单语料集

200 |

201 | ## 外部语料集

202 |

203 | ## 中文语料集

204 |

205 | 约三十余万篇文章

206 |

207 | # 模型应用

208 |

209 | ## 可视化预览

210 |

211 | ```

212 | from sklearn.decomposition import PCA

213 | import matplotlib.pyplot as plt

214 |

215 |

216 | def wv_visualizer(model, word = ["man"]):

217 |

218 | # 寻找出最相似的十个词

219 | words = [wp[0] for wp in model.most_similar(word,20)]

220 |

221 | # 提取出词对应的词向量

222 | wordsInVector = [model[word] for word in words]

223 |

224 | # 进行 PCA 降维

225 | pca = PCA(n_components=2)

226 | pca.fit(wordsInVector)

227 | X = pca.transform(wordsInVector)

228 |

229 | # 绘制图形

230 | xs = X[:, 0]

231 | ys = X[:, 1]

232 |

233 | # draw

234 | plt.figure(figsize=(12,8))

235 | plt.scatter(xs, ys, marker = 'o')

236 | for i, w in enumerate(words):

237 | plt.annotate(

238 | w,

239 | xy = (xs[i], ys[i]), xytext = (6, 6),

240 | textcoords = 'offset points', ha = 'left', va = 'top',

241 | **dict(fontsize=10)

242 | )

243 |

244 | plt.show()

245 |

246 | # 调用时传入目标词组即可

247 | wv_visualizer(model,["China","Airline"])

248 | ```

249 |

--------------------------------------------------------------------------------

/99~参考资料/2023~吴恩达~《Building Systems with the ChatGPT API》/7.Check Outputs.ipynb:

--------------------------------------------------------------------------------

1 | {

2 | "cells": [

3 | {

4 | "cell_type": "markdown",

5 | "id": "f99b8a44",

6 | "metadata": {},

7 | "source": [

8 | "# L6: 检查结果\n",

9 | "比较简单轻松的一节"

10 | ]

11 | },

12 | {

13 | "cell_type": "code",

14 | "execution_count": 1,

15 | "id": "5daec1c7",

16 | "metadata": {},

17 | "outputs": [],

18 | "source": [

19 | "import os\n",

20 | "import openai\n",

21 | "\n",

22 | "# from dotenv import load_dotenv, find_dotenv\n",

23 | "# _ = load_dotenv(find_dotenv()) # 读取本地的.env环境文件\n",

24 | "\n",

25 | "openai.api_key = 'sk-xxxxxxxxxxxx' #更换成你自己的key"

26 | ]

27 | },

28 | {

29 | "cell_type": "code",

30 | "execution_count": 2,

31 | "id": "9c40b32d",

32 | "metadata": {},

33 | "outputs": [],

34 | "source": [

35 | "def get_completion_from_messages(messages, model=\"gpt-3.5-turbo\", temperature=0, max_tokens=500):\n",

36 | " response = openai.ChatCompletion.create(\n",

37 | " model=model,\n",

38 | " messages=messages,\n",

39 | " temperature=temperature, \n",

40 | " max_tokens=max_tokens, \n",

41 | " )\n",

42 | " return response.choices[0].message[\"content\"]"

43 | ]

44 | },

45 | {

46 | "cell_type": "markdown",

47 | "id": "59f69c2e",

48 | "metadata": {},

49 | "source": [

50 | "### 检查输出是否有潜在的有害内容\n",

51 | "重要的就是一个moderation"

52 | ]

53 | },

54 | {

55 | "cell_type": "code",

56 | "execution_count": 4,

57 | "id": "943f5396",

58 | "metadata": {},

59 | "outputs": [

60 | {

61 | "name": "stdout",

62 | "output_type": "stream",

63 | "text": [

64 | "{\n",

65 | " \"categories\": {\n",

66 | " \"hate\": false,\n",

67 | " \"hate/threatening\": false,\n",

68 | " \"self-harm\": false,\n",

69 | " \"sexual\": false,\n",

70 | " \"sexual/minors\": false,\n",

71 | " \"violence\": false,\n",

72 | " \"violence/graphic\": false\n",

73 | " },\n",

74 | " \"category_scores\": {\n",

75 | " \"hate\": 2.6680607e-06,\n",

76 | " \"hate/threatening\": 1.2194433e-08,\n",

77 | " \"self-harm\": 8.294434e-07,\n",

78 | " \"sexual\": 3.41087e-05,\n",

79 | " \"sexual/minors\": 1.5462567e-07,\n",

80 | " \"violence\": 6.3285606e-06,\n",

81 | " \"violence/graphic\": 2.9102332e-06\n",

82 | " },\n",

83 | " \"flagged\": false\n",

84 | "}\n"

85 | ]

86 | }

87 | ],

88 | "source": [

89 | "final_response_to_customer = f\"\"\"\n",

90 | "SmartX ProPhone有一个6.1英寸的显示屏,128GB存储、1200万像素的双摄像头,以及5G。FotoSnap单反相机有一个2420万像素的传感器,1080p视频,3英寸LCD和 \n",

91 | "可更换的镜头。我们有各种电视,包括CineView 4K电视,55英寸显示屏,4K分辨率、HDR,以及智能电视功能。我们也有SoundMax家庭影院系统,具有5.1声道,1000W输出,无线 \n",

92 | "重低音扬声器和蓝牙。关于这些产品或我们提供的任何其他产品您是否有任何具体问题?\n",

93 | "\"\"\"\n",

94 | "# Moderation是OpenAI的内容审核函数,用于检测这段内容的危害含量\n",

95 | "\n",

96 | "response = openai.Moderation.create(\n",

97 | " input=final_response_to_customer\n",

98 | ")\n",

99 | "moderation_output = response[\"results\"][0]\n",

100 | "print(moderation_output)"

101 | ]

102 | },

103 | {

104 | "cell_type": "markdown",

105 | "id": "f57f8dad",

106 | "metadata": {},

107 | "source": [

108 | "### 检查输出结果是否与提供的产品信息相符合"

109 | ]

110 | },

111 | {

112 | "cell_type": "code",

113 | "execution_count": 7,

114 | "id": "552e3d8c",

115 | "metadata": {},

116 | "outputs": [

117 | {

118 | "name": "stdout",

119 | "output_type": "stream",

120 | "text": [

121 | "Y\n"

122 | ]

123 | }

124 | ],

125 | "source": [

126 | "# 这是一段电子产品相关的信息\n",

127 | "system_message = f\"\"\"\n",

128 | "You are an assistant that evaluates whether \\\n",

129 | "customer service agent responses sufficiently \\\n",

130 | "answer customer questions, and also validates that \\\n",

131 | "all the facts the assistant cites from the product \\\n",

132 | "information are correct.\n",

133 | "The product information and user and customer \\\n",

134 | "service agent messages will be delimited by \\\n",

135 | "3 backticks, i.e. ```.\n",

136 | "Respond with a Y or N character, with no punctuation:\n",

137 | "Y - if the output sufficiently answers the question \\\n",

138 | "AND the response correctly uses product information\n",

139 | "N - otherwise\n",

140 | "\n",

141 | "Output a single letter only.\n",

142 | "\"\"\"\n",

143 | "\n",

144 | "#这是顾客的提问\n",

145 | "customer_message = f\"\"\"\n",

146 | "tell me about the smartx pro phone and \\\n",

147 | "the fotosnap camera, the dslr one. \\\n",

148 | "Also tell me about your tvs\"\"\"\n",

149 | "product_information = \"\"\"{ \"name\": \"SmartX ProPhone\", \"category\": \"Smartphones and Accessories\", \"brand\": \"SmartX\", \"model_number\": \"SX-PP10\", \"warranty\": \"1 year\", \"rating\": 4.6, \"features\": [ \"6.1-inch display\", \"128GB storage\", \"12MP dual camera\", \"5G\" ], \"description\": \"A powerful smartphone with advanced camera features.\", \"price\": 899.99 } { \"name\": \"FotoSnap DSLR Camera\", \"category\": \"Cameras and Camcorders\", \"brand\": \"FotoSnap\", \"model_number\": \"FS-DSLR200\", \"warranty\": \"1 year\", \"rating\": 4.7, \"features\": [ \"24.2MP sensor\", \"1080p video\", \"3-inch LCD\", \"Interchangeable lenses\" ], \"description\": \"Capture stunning photos and videos with this versatile DSLR camera.\", \"price\": 599.99 } { \"name\": \"CineView 4K TV\", \"category\": \"Televisions and Home Theater Systems\", \"brand\": \"CineView\", \"model_number\": \"CV-4K55\", \"warranty\": \"2 years\", \"rating\": 4.8, \"features\": [ \"55-inch display\", \"4K resolution\", \"HDR\", \"Smart TV\" ], \"description\": \"A stunning 4K TV with vibrant colors and smart features.\", \"price\": 599.99 } { \"name\": \"SoundMax Home Theater\", \"category\": \"Televisions and Home Theater Systems\", \"brand\": \"SoundMax\", \"model_number\": \"SM-HT100\", \"warranty\": \"1 year\", \"rating\": 4.4, \"features\": [ \"5.1 channel\", \"1000W output\", \"Wireless subwoofer\", \"Bluetooth\" ], \"description\": \"A powerful home theater system for an immersive audio experience.\", \"price\": 399.99 } { \"name\": \"CineView 8K TV\", \"category\": \"Televisions and Home Theater Systems\", \"brand\": \"CineView\", \"model_number\": \"CV-8K65\", \"warranty\": \"2 years\", \"rating\": 4.9, \"features\": [ \"65-inch display\", \"8K resolution\", \"HDR\", \"Smart TV\" ], \"description\": \"Experience the future of television with this stunning 8K TV.\", \"price\": 2999.99 } { \"name\": \"SoundMax Soundbar\", \"category\": \"Televisions and Home Theater Systems\", \"brand\": \"SoundMax\", \"model_number\": \"SM-SB50\", \"warranty\": \"1 year\", \"rating\": 4.3, \"features\": [ \"2.1 channel\", \"300W output\", \"Wireless subwoofer\", \"Bluetooth\" ], \"description\": \"Upgrade your TV's audio with this sleek and powerful soundbar.\", \"price\": 199.99 } { \"name\": \"CineView OLED TV\", \"category\": \"Televisions and Home Theater Systems\", \"brand\": \"CineView\", \"model_number\": \"CV-OLED55\", \"warranty\": \"2 years\", \"rating\": 4.7, \"features\": [ \"55-inch display\", \"4K resolution\", \"HDR\", \"Smart TV\" ], \"description\": \"Experience true blacks and vibrant colors with this OLED TV.\", \"price\": 1499.99 }\"\"\"\n",

150 | "\n",

151 | "q_a_pair = f\"\"\"\n",

152 | "Customer message: ```{customer_message}```\n",

153 | "Product information: ```{product_information}```\n",

154 | "Agent response: ```{final_response_to_customer}```\n",

155 | "\n",

156 | "Does the response use the retrieved information correctly?\n",

157 | "Does the response sufficiently answer the question?\n",

158 | "\n",

159 | "Output Y or N\n",

160 | "\"\"\"\n",

161 | "#判断相关性\n",

162 | "messages = [\n",

163 | " {'role': 'system', 'content': system_message},\n",

164 | " {'role': 'user', 'content': q_a_pair}\n",

165 | "]\n",

166 | "\n",

167 | "response = get_completion_from_messages(messages, max_tokens=1)\n",

168 | "print(response)"

169 | ]

170 | },

171 | {

172 | "cell_type": "code",

173 | "execution_count": 6,

174 | "id": "afb1b82f",

175 | "metadata": {},

176 | "outputs": [

177 | {

178 | "name": "stdout",

179 | "output_type": "stream",

180 | "text": [

181 | "N\n"

182 | ]

183 | }

184 | ],

185 | "source": [

186 | "another_response = \"life is like a box of chocolates\"\n",

187 | "q_a_pair = f\"\"\"\n",

188 | "Customer message: ```{customer_message}```\n",

189 | "Product information: ```{product_information}```\n",

190 | "Agent response: ```{another_response}```\n",

191 | "\n",

192 | "Does the response use the retrieved information correctly?\n",

193 | "Does the response sufficiently answer the question?\n",

194 | "\n",

195 | "Output Y or N\n",

196 | "\"\"\"\n",

197 | "messages = [\n",

198 | " {'role': 'system', 'content': system_message},\n",

199 | " {'role': 'user', 'content': q_a_pair}\n",

200 | "]\n",

201 | "\n",

202 | "response = get_completion_from_messages(messages)\n",

203 | "print(response)"

204 | ]

205 | }

206 | ],

207 | "metadata": {

208 | "kernelspec": {

209 | "display_name": "Python 3 (ipykernel)",

210 | "language": "python",

211 | "name": "python3"

212 | },

213 | "language_info": {

214 | "codemirror_mode": {

215 | "name": "ipython",

216 | "version": 3

217 | },

218 | "file_extension": ".py",

219 | "mimetype": "text/x-python",

220 | "name": "python",

221 | "nbconvert_exporter": "python",

222 | "pygments_lexer": "ipython3",

223 | "version": "3.10.9"

224 | }

225 | },

226 | "nbformat": 4,

227 | "nbformat_minor": 5

228 | }

229 |

--------------------------------------------------------------------------------

/header.svg:

--------------------------------------------------------------------------------

1 |

--------------------------------------------------------------------------------

/99~参考资料/2023~吴恩达~《Building Systems with the ChatGPT API》/products.json:

--------------------------------------------------------------------------------

1 | {"TechPro Ultrabook": {"name": "TechPro Ultrabook", "category": "Computers and Laptops", "brand": "TechPro", "model_number": "TP-UB100", "warranty": "1 year", "rating": 4.5, "features": ["13.3-inch display", "8GB RAM", "256GB SSD", "Intel Core i5 processor"], "description": "A sleek and lightweight ultrabook for everyday use.", "price": 799.99}, "BlueWave Gaming Laptop": {"name": "BlueWave Gaming Laptop", "category": "Computers and Laptops", "brand": "BlueWave", "model_number": "BW-GL200", "warranty": "2 years", "rating": 4.7, "features": ["15.6-inch display", "16GB RAM", "512GB SSD", "NVIDIA GeForce RTX 3060"], "description": "A high-performance gaming laptop for an immersive experience.", "price": 1199.99}, "PowerLite Convertible": {"name": "PowerLite Convertible", "category": "Computers and Laptops", "brand": "PowerLite", "model_number": "PL-CV300", "warranty": "1 year", "rating": 4.3, "features": ["14-inch touchscreen", "8GB RAM", "256GB SSD", "360-degree hinge"], "description": "A versatile convertible laptop with a responsive touchscreen.", "price": 699.99}, "TechPro Desktop": {"name": "TechPro Desktop", "category": "Computers and Laptops", "brand": "TechPro", "model_number": "TP-DT500", "warranty": "1 year", "rating": 4.4, "features": ["Intel Core i7 processor", "16GB RAM", "1TB HDD", "NVIDIA GeForce GTX 1660"], "description": "A powerful desktop computer for work and play.", "price": 999.99}, "BlueWave Chromebook": {"name": "BlueWave Chromebook", "category": "Computers and Laptops", "brand": "BlueWave", "model_number": "BW-CB100", "warranty": "1 year", "rating": 4.1, "features": ["11.6-inch display", "4GB RAM", "32GB eMMC", "Chrome OS"], "description": "A compact and affordable Chromebook for everyday tasks.", "price": 249.99}, "SmartX ProPhone": {"name": "SmartX ProPhone", "category": "Smartphones and Accessories", "brand": "SmartX", "model_number": "SX-PP10", "warranty": "1 year", "rating": 4.6, "features": ["6.1-inch display", "128GB storage", "12MP dual camera", "5G"], "description": "A powerful smartphone with advanced camera features.", "price": 899.99}, "MobiTech PowerCase": {"name": "MobiTech PowerCase", "category": "Smartphones and Accessories", "brand": "MobiTech", "model_number": "MT-PC20", "warranty": "1 year", "rating": 4.3, "features": ["5000mAh battery", "Wireless charging", "Compatible with SmartX ProPhone"], "description": "A protective case with built-in battery for extended usage.", "price": 59.99}, "SmartX MiniPhone": {"name": "SmartX MiniPhone", "category": "Smartphones and Accessories", "brand": "SmartX", "model_number": "SX-MP5", "warranty": "1 year", "rating": 4.2, "features": ["4.7-inch display", "64GB storage", "8MP camera", "4G"], "description": "A compact and affordable smartphone for basic tasks.", "price": 399.99}, "MobiTech Wireless Charger": {"name": "MobiTech Wireless Charger", "category": "Smartphones and Accessories", "brand": "MobiTech", "model_number": "MT-WC10", "warranty": "1 year", "rating": 4.5, "features": ["10W fast charging", "Qi-compatible", "LED indicator", "Compact design"], "description": "A convenient wireless charger for a clutter-free workspace.", "price": 29.99}, "SmartX EarBuds": {"name": "SmartX EarBuds", "category": "Smartphones and Accessories", "brand": "SmartX", "model_number": "SX-EB20", "warranty": "1 year", "rating": 4.4, "features": ["True wireless", "Bluetooth 5.0", "Touch controls", "24-hour battery life"], "description": "Experience true wireless freedom with these comfortable earbuds.", "price": 99.99}, "CineView 4K TV": {"name": "CineView 4K TV", "category": "Televisions and Home Theater Systems", "brand": "CineView", "model_number": "CV-4K55", "warranty": "2 years", "rating": 4.8, "features": ["55-inch display", "4K resolution", "HDR", "Smart TV"], "description": "A stunning 4K TV with vibrant colors and smart features.", "price": 599.99}, "SoundMax Home Theater": {"name": "SoundMax Home Theater", "category": "Televisions and Home Theater Systems", "brand": "SoundMax", "model_number": "SM-HT100", "warranty": "1 year", "rating": 4.4, "features": ["5.1 channel", "1000W output", "Wireless subwoofer", "Bluetooth"], "description": "A powerful home theater system for an immersive audio experience.", "price": 399.99}, "CineView 8K TV": {"name": "CineView 8K TV", "category": "Televisions and Home Theater Systems", "brand": "CineView", "model_number": "CV-8K65", "warranty": "2 years", "rating": 4.9, "features": ["65-inch display", "8K resolution", "HDR", "Smart TV"], "description": "Experience the future of television with this stunning 8K TV.", "price": 2999.99}, "SoundMax Soundbar": {"name": "SoundMax Soundbar", "category": "Televisions and Home Theater Systems", "brand": "SoundMax", "model_number": "SM-SB50", "warranty": "1 year", "rating": 4.3, "features": ["2.1 channel", "300W output", "Wireless subwoofer", "Bluetooth"], "description": "Upgrade your TV's audio with this sleek and powerful soundbar.", "price": 199.99}, "CineView OLED TV": {"name": "CineView OLED TV", "category": "Televisions and Home Theater Systems", "brand": "CineView", "model_number": "CV-OLED55", "warranty": "2 years", "rating": 4.7, "features": ["55-inch display", "4K resolution", "HDR", "Smart TV"], "description": "Experience true blacks and vibrant colors with this OLED TV.", "price": 1499.99}, "GameSphere X": {"name": "GameSphere X", "category": "Gaming Consoles and Accessories", "brand": "GameSphere", "model_number": "GS-X", "warranty": "1 year", "rating": 4.9, "features": ["4K gaming", "1TB storage", "Backward compatibility", "Online multiplayer"], "description": "A next-generation gaming console for the ultimate gaming experience.", "price": 499.99}, "ProGamer Controller": {"name": "ProGamer Controller", "category": "Gaming Consoles and Accessories", "brand": "ProGamer", "model_number": "PG-C100", "warranty": "1 year", "rating": 4.2, "features": ["Ergonomic design", "Customizable buttons", "Wireless", "Rechargeable battery"], "description": "A high-quality gaming controller for precision and comfort.", "price": 59.99}, "GameSphere Y": {"name": "GameSphere Y", "category": "Gaming Consoles and Accessories", "brand": "GameSphere", "model_number": "GS-Y", "warranty": "1 year", "rating": 4.8, "features": ["4K gaming", "500GB storage", "Backward compatibility", "Online multiplayer"], "description": "A compact gaming console with powerful performance.", "price": 399.99}, "ProGamer Racing Wheel": {"name": "ProGamer Racing Wheel", "category": "Gaming Consoles and Accessories", "brand": "ProGamer", "model_number": "PG-RW200", "warranty": "1 year", "rating": 4.5, "features": ["Force feedback", "Adjustable pedals", "Paddle shifters", "Compatible with GameSphere X"], "description": "Enhance your racing games with this realistic racing wheel.", "price": 249.99}, "GameSphere VR Headset": {"name": "GameSphere VR Headset", "category": "Gaming Consoles and Accessories", "brand": "GameSphere", "model_number": "GS-VR", "warranty": "1 year", "rating": 4.6, "features": ["Immersive VR experience", "Built-in headphones", "Adjustable headband", "Compatible with GameSphere X"], "description": "Step into the world of virtual reality with this comfortable VR headset.", "price": 299.99}, "AudioPhonic Noise-Canceling Headphones": {"name": "AudioPhonic Noise-Canceling Headphones", "category": "Audio Equipment", "brand": "AudioPhonic", "model_number": "AP-NC100", "warranty": "1 year", "rating": 4.6, "features": ["Active noise-canceling", "Bluetooth", "20-hour battery life", "Comfortable fit"], "description": "Experience immersive sound with these noise-canceling headphones.", "price": 199.99}, "WaveSound Bluetooth Speaker": {"name": "WaveSound Bluetooth Speaker", "category": "Audio Equipment", "brand": "WaveSound", "model_number": "WS-BS50", "warranty": "1 year", "rating": 4.5, "features": ["Portable", "10-hour battery life", "Water-resistant", "Built-in microphone"], "description": "A compact and versatile Bluetooth speaker for music on the go.", "price": 49.99}, "AudioPhonic True Wireless Earbuds": {"name": "AudioPhonic True Wireless Earbuds", "category": "Audio Equipment", "brand": "AudioPhonic", "model_number": "AP-TW20", "warranty": "1 year", "rating": 4.4, "features": ["True wireless", "Bluetooth 5.0", "Touch controls", "18-hour battery life"], "description": "Enjoy music without wires with these comfortable true wireless earbuds.", "price": 79.99}, "WaveSound Soundbar": {"name": "WaveSound Soundbar", "category": "Audio Equipment", "brand": "WaveSound", "model_number": "WS-SB40", "warranty": "1 year", "rating": 4.3, "features": ["2.0 channel", "80W output", "Bluetooth", "Wall-mountable"], "description": "Upgrade your TV's audio with this slim and powerful soundbar.", "price": 99.99}, "AudioPhonic Turntable": {"name": "AudioPhonic Turntable", "category": "Audio Equipment", "brand": "AudioPhonic", "model_number": "AP-TT10", "warranty": "1 year", "rating": 4.2, "features": ["3-speed", "Built-in speakers", "Bluetooth", "USB recording"], "description": "Rediscover your vinyl collection with this modern turntable.", "price": 149.99}, "FotoSnap DSLR Camera": {"name": "FotoSnap DSLR Camera", "category": "Cameras and Camcorders", "brand": "FotoSnap", "model_number": "FS-DSLR200", "warranty": "1 year", "rating": 4.7, "features": ["24.2MP sensor", "1080p video", "3-inch LCD", "Interchangeable lenses"], "description": "Capture stunning photos and videos with this versatile DSLR camera.", "price": 599.99}, "ActionCam 4K": {"name": "ActionCam 4K", "category": "Cameras and Camcorders", "brand": "ActionCam", "model_number": "AC-4K", "warranty": "1 year", "rating": 4.4, "features": ["4K video", "Waterproof", "Image stabilization", "Wi-Fi"], "description": "Record your adventures with this rugged and compact 4K action camera.", "price": 299.99}, "FotoSnap Mirrorless Camera": {"name": "FotoSnap Mirrorless Camera", "category": "Cameras and Camcorders", "brand": "FotoSnap", "model_number": "FS-ML100", "warranty": "1 year", "rating": 4.6, "features": ["20.1MP sensor", "4K video", "3-inch touchscreen", "Interchangeable lenses"], "description": "A compact and lightweight mirrorless camera with advanced features.", "price": 799.99}, "ZoomMaster Camcorder": {"name": "ZoomMaster Camcorder", "category": "Cameras and Camcorders", "brand": "ZoomMaster", "model_number": "ZM-CM50", "warranty": "1 year", "rating": 4.3, "features": ["1080p video", "30x optical zoom", "3-inch LCD", "Image stabilization"], "description": "Capture life's moments with this easy-to-use camcorder.", "price": 249.99}, "FotoSnap Instant Camera": {"name": "FotoSnap Instant Camera", "category": "Cameras and Camcorders", "brand": "FotoSnap", "model_number": "FS-IC10", "warranty": "1 year", "rating": 4.1, "features": ["Instant prints", "Built-in flash", "Selfie mirror", "Battery-powered"], "description": "Create instant memories with this fun and portable instant camera.", "price": 69.99}}

--------------------------------------------------------------------------------

/99~参考资料/2023~Numbers every LLM Developer should know.md:

--------------------------------------------------------------------------------

1 |

21 |

22 | # Numbers every LLM Developer should know

23 |

24 | When I was at Google, there was a document put together by [Jeff Dean](https://en.wikipedia.org/wiki/Jeff_Dean), the legendary engineer, called [Numbers every Engineer should know](http://brenocon.com/dean_perf.html). It’s really useful to have a similar set of numbers for LLM developers to know that are useful for back-of-the envelope calculations. Here we share particular numbers we at Anyscale use, why the number is important and how to use it to your advantage.

25 |

26 | ## Notes on the Github version

27 |

28 | Last updates: 2023-05-17

29 |

30 | If you feel there's an issue with the accuracy of the numbers, please file an issue. Think there are more numbers that should be in this doc? Let us know or file a PR.

31 |

32 | We are thinking the next thing we should add here is some stats on tokens per second of different models.

33 |

34 | ## Prompts

35 |

36 | ### 40-90%[^1]: Amount saved by appending “Be Concise” to your prompt

37 |

38 | It’s important to remember that you pay by the token for responses. This means that asking an LLM to be concise can save you a lot of money. This can be broadened beyond simply appending “be concise” to your prompt: if you are using GPT-4 to come up with 10 alternatives, maybe ask it for 5 and keep the other half of the money.

39 |

40 | ### 1.3: Average tokens per word

41 |

42 | LLMs operate on tokens. Tokens are words or sub-parts of words, so “eating” might be broken into two tokens “eat” and “ing”. A 750 word document in English will be about 1000 tokens. For languages other than English, the tokens per word increases depending on their commonality in the LLM's embedding corpus.

43 |

44 | Knowing this ratio is important because most billing is done in tokens, and the LLM’s context window size is also defined in tokens.

45 |

46 | ## Prices[^2]

47 |

48 | Prices are of course subject to change, but given how expensive LLMs are to operate, the numbers in this section are critical. We use OpenAI for the numbers here, but prices from other providers you should check out ([Anthropic](https://cdn2.assets-servd.host/anthropic-website/production/images/model_pricing_may2023.pdf), [Cohere](https://cohere.com/pricing)) are in the same ballpark.

49 |

50 | ### ~50: Cost Ratio of GPT-4 to GPT-3.5 Turbo[^3]

51 |

52 | What this means is that for many practical applications, it’s much better to use GPT-4 for things like generation and then use that data to fine tune a smaller model. It is roughly 50 times cheaper to use GPT-3.5-Turbo than GPT-4 (the “roughly” is because GPT-4 charges differently for the prompt and the generated output) – so you really need to check on how far you can get with GPT-3.5-Turbo. GPT-3.5-Turbo is more than enough for tasks like summarization for example.

53 |

54 | ### 5: Cost Ratio of generation of text using GPT-3.5-Turbo vs OpenAI embedding

55 |

56 | This means it is way cheaper to look something up in a vector store than to ask an LLM to generate it. E.g. “What is the capital of Delaware?” when looked up in an neural information retrieval system costs about 5x[^4] less than if you asked GPT-3.5-Turbo. The cost difference compared to GPT-4 is a whopping 250x!

57 |

58 | ### 10: Cost Ratio of OpenAI embedding to Self-Hosted embedding

59 |

60 | > Note: this number is sensitive to load and embedding batch size, so please consider this approximate.

61 |

62 | In our blog post, we noted that using a g4dn.4xlarge (on-demand price: $1.20/hr) we were able to embed at about 9000 tokens per second using HuggingFace’s SentenceTransformers (which are pretty much as good as OpenAI’s embeddings). Doing some basic math of that rate and that node type indicates it is considerably cheaper (factor of 10 cheaper) to self-host embeddings (and that is before you start to think about things like ingress and egress fees).

63 |

64 | ### 6: Cost Ratio of OpenAI base vs fine tuned model queries

65 |

66 | It costs you 6 times as much to serve a fine tuned model as it does the base model on OpenAI. This is pretty exorbitant, but might make sense because of the possible multi-tenancy of base models. It also means it is far more cost effective to tweak the prompt for a base model than to fine tune a customized model.

67 |

68 | ### 1: Cost Ratio of Self-Hosted base vs fine-tuned model queries

69 |

70 | If you’re self hosting a model, then it more or less costs the same amount to serve a fine tuned model as it does to serve a base one: the models have the same number of parameters.

71 |