├── GenMM.py
├── LICENSE
├── README.md
├── __init__.py
├── configs
│   ├── default.yaml
│   └── ganimator.yaml
├── dataset
│   ├── blender_motion.py
│   ├── bvh
│   │   ├── Quaternions.py
│   │   ├── __pycache__
│   │   │   ├── Quaternions.cpython-37.pyc
│   │   │   ├── bvh_io.cpython-37.pyc
│   │   │   ├── bvh_parser.cpython-37.pyc
│   │   │   └── bvh_writer.cpython-37.pyc
│   │   ├── bvh_io.py
│   │   ├── bvh_parser.py
│   │   └── bvh_writer.py
│   ├── bvh_motion.py
│   ├── motion.py
│   └── tracks_motion.py
├── demo.blend
├── docker
│   ├── Dockerfile
│   ├── README.md
│   ├── apt-sources.list
│   ├── requirements.txt
│   └── requirements_blender.txt
├── fix_contact.py
├── nearest_neighbor
│   ├── losses.py
│   └── utils.py
├── run_random_generation.py
├── run_web_server.py
└── utils
    ├── base.py
    ├── contact.py
    ├── kinematics.py
    ├── skeleton.py
    └── transforms.py

/GenMM.py:

--------------------------------------------------------------------------------

1 | import os
2 | import os.path as osp
3 | import numpy as np
4 | import torch
5 | import torch.nn.functional as F
6 |
7 | from utils.base import logger
8 |
9 | class GenMM:
10 |     def __init__(self, mode = 'random_synthesis', noise_sigma = 1.0, coarse_ratio = 0.2, coarse_ratio_factor = 6, pyr_factor = 0.75, num_stages_limit = -1, device = 'cuda:0', silent = False):
11 |         '''
12 |         GenMM main constructor. Generation settings are passed to run(); the other keyword arguments are currently unused.
13 |         Args:
14 |             device : str = 'cuda:0', default device.
15 |             silent : bool = False, whether to mute the output.
16 |         '''
17 |         self.device = torch.device(device)
18 |         self.silent = silent
19 |
20 |     def _get_pyramid_lengths(self, final_len, coarse_ratio, pyr_factor):
21 |         '''
22 |         Get a list of pyramid lengths using given target length and ratio
23 |         '''
24 |         lengths = [int(np.round(final_len * coarse_ratio))]
25 |         while lengths[-1] < final_len:
26 |             lengths.append(int(np.round(lengths[-1] / pyr_factor)))
27 |             if lengths[-1] == lengths[-2]:
28 |                 lengths[-1] += 1
29 |         lengths[-1] = final_len
30 |
31 |         return lengths
32 |
33 |     def _get_target_pyramid(self, target, coarse_ratio, pyr_factor, num_stages_limit=-1):
34 |         '''
35 |         Reads the target motion(s) and creates a pyramid from them, ordered by increasing size
36 |         '''
37 |         self.num_target = len(target)
38 |         lengths = []
39 |         min_len = 10000
40 |         for i in range(len(target)):
41 |             new_length = self._get_pyramid_lengths(len(target[i].motion_data), coarse_ratio, pyr_factor)
42 |             min_len = min(min_len, len(new_length))
43 |             if num_stages_limit != -1:
44 |                 new_length = new_length[:num_stages_limit]
45 |             lengths.append(new_length)
46 |         for i in range(len(target)):
47 |             lengths[i] = lengths[i][-min_len:]
48 |         self.pyraimd_lengths = lengths
49 |
50 |         target_pyramid = [[] for _ in range(len(lengths[0]))]
51 |         for step in range(len(lengths[0])):
52 |             for i in range(len(target)):
53 |                 length = lengths[i][step]
54 |                 target_pyramid[step].append(target[i].sample(size=length).to(self.device))
55 |
56 |         if not self.silent:
57 |             print('Levels:', lengths)
58 |             for i in range(len(target_pyramid)):
59 |                 print(f'Number of clips in target pyramid {i} is {len(target_pyramid[i])}, ranging {[[tgt.min(), tgt.max()] for tgt in target_pyramid[i]]}')
60 |
61 |         return target_pyramid
62 |
63 |     def _get_initial_motion(self, init_length, noise_sigma):
64 |         '''
65 |         Prepare the initial motion for optimization
66 |         '''
67 |         initial_motion = F.interpolate(torch.cat([self.target_pyramid[0][i] for i in range(self.num_target)], dim=-1),
68 |                                        size=init_length, mode='linear', align_corners=True)
69 |         if noise_sigma > 0:
70 |             initial_motion_w_noise = initial_motion + torch.randn_like(initial_motion) * noise_sigma
71 |             initial_motion_w_noise = torch.fmod(initial_motion_w_noise, 1.0)
72 |         else:
73 |             initial_motion_w_noise = initial_motion
74 |
75 |         if not self.silent:
76 |             print('Initial motion:', initial_motion.min(), initial_motion.max())
77 |             print('Initial motion with noise:', initial_motion_w_noise.min(), initial_motion_w_noise.max())
78 |
79 |         return initial_motion_w_noise
80 |
81 |     def run(self, target, criteria, num_frames, num_steps, noise_sigma, patch_size, coarse_ratio, pyr_factor, ext=None, debug_dir=None):
82 |         '''
83 |         Coarse-to-fine generation; returns the synthesized motion (detached).
84 |         Args:
85 |             target : list of target motions; criteria : objective minimized by match_and_blend.
86 |             num_frames : str, '<k>x_nframes' (k times the summed target length) or a digit string.
87 |             num_steps : int, match-and-blend steps per level; noise_sigma : float, initial noise scale.
88 |             coarse_ratio : str, '<k>x_patchsize' or '<k>x_nframes'; patch_size : int, temporal patch size.
89 |             pyr_factor : float, ratio between consecutive pyramid lengths, e.g. 0.75.
90 |             ext : optional extra constraints; debug_dir : optional tensorboardX log directory.
91 |         '''
92 |         if debug_dir is not None:
93 |             from tensorboardX import SummaryWriter
94 |             writer = SummaryWriter(log_dir=debug_dir)
95 |
96 |         # build target pyramid
97 |         if 'patchsize' in coarse_ratio:
98 |             coarse_ratio = patch_size * float(coarse_ratio.split('x_')[0]) / max([len(t.motion_data) for t in target])
99 |         elif 'nframes' in coarse_ratio:
100 |             coarse_ratio = float(coarse_ratio.split('x_')[0])
101 |         else:
102 |             raise ValueError(f'Unsupported coarse ratio {coarse_ratio}')
103 |         self.target_pyramid = self._get_target_pyramid(target, coarse_ratio, pyr_factor)
104 |
105 |         # get the initial motion data
106 |         if 'nframes' in num_frames:
107 |             syn_length = int(sum([i[-1] for i in self.pyraimd_lengths]) * float(num_frames.split('x_')[0]))
108 |         elif num_frames.isdigit():
109 |             syn_length = int(num_frames)
110 |         else:
111 |             raise ValueError(f'Unsupported num_frames {num_frames}')
112 |         self.synthesized_lengths = self._get_pyramid_lengths(syn_length, coarse_ratio, pyr_factor)
113 |         if not self.silent:
114 |             print('Synthesized lengths:', self.synthesized_lengths)
115 |         self.synthesized = self._get_initial_motion(self.synthesized_lengths[0], noise_sigma)
116 |
117 |         # perform the optimization
118 |         self.synthesized.requires_grad_(False)
119 |         self.pbar = logger(num_steps, len(self.target_pyramid))
120 |         for lvl, lvl_target in enumerate(self.target_pyramid):
121 |             self.pbar.new_lvl()
122 |             if lvl > 0:
123 |                 with torch.no_grad():
124 |                     self.synthesized = F.interpolate(self.synthesized.detach(), size=self.synthesized_lengths[lvl], mode='linear')
125 |
126 |             self.synthesized, losses = GenMM.match_and_blend(self.synthesized, lvl_target, criteria, num_steps, self.pbar, ext=ext)
127 |
128 |             criteria.clean_cache()
129 |             if debug_dir is not None:
130 |                 for itr in range(len(losses)):
131 |                     writer.add_scalar(f'optimize/losses_lvl{lvl}', losses[itr], itr)
132 |         self.pbar.pbar.close()
133 |         if debug_dir is not None: writer.close()  # flush pending tensorboard events
134 |         return self.synthesized.detach()
135 |
136 |
137 |     @staticmethod
138 |     @torch.no_grad()
139 |     def match_and_blend(synthesized, targets, criteria, n_steps, pbar, ext=None):
140 |         '''
141 |         Minimizes criteria between synthesized and target
142 |         Args:
143 |             synthesized : torch.Tensor, optimized motion data
144 |             targets : list of torch.Tensor, target motion data (one per clip)
145 |             criteria : objective function to minimize
146 |             n_steps : int, number of steps to optimize
147 |             pbar : logger
148 |             ext : extra configurations or constraints (optional)
149 |         '''
150 |         losses = []
151 |         for _i in range(n_steps):
152 |             synthesized, loss = criteria(synthesized, targets, ext=ext, return_blended_results=True)
153 |
154 |             # Update status
155 |             losses.append(loss.item())
156 |             pbar.step()
157 |             pbar.print()
158 |
159 |         return synthesized, losses
160 |

161 |
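The level schedule produced by `_get_pyramid_lengths` is easy to inspect in isolation. The sketch below restates that method as a free function (`pyramid_lengths` is a hypothetical name, not part of the repository) so you can check which levels a given `coarse_ratio` and `pyr_factor` produce before running a full generation.

```python
import numpy as np


def pyramid_lengths(final_len, coarse_ratio, pyr_factor):
    """Standalone restatement of GenMM._get_pyramid_lengths (coarse to fine)."""
    # Coarsest level: a fraction of the final length.
    lengths = [int(np.round(final_len * coarse_ratio))]
    while lengths[-1] < final_len:
        # Each level is roughly 1 / pyr_factor times longer (1 / 0.75 ~= 1.33x).
        lengths.append(int(np.round(lengths[-1] / pyr_factor)))
        # Guarantee progress when rounding would repeat the previous length.
        if lengths[-1] == lengths[-2]:
            lengths[-1] += 1
    # The last level may overshoot; clamp it to the exact target length.
    lengths[-1] = final_len
    return lengths


print(pyramid_lengths(100, 0.2, 0.75))  # [20, 27, 36, 48, 64, 85, 100]
```

Because the last level is clamped to `final_len`, the final step can be smaller than the others (85 to 100 here, rather than 85 to 113).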

--------------------------------------------------------------------------------
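`GenMM.run()` accepts `coarse_ratio` and `num_frames` as short spec strings rather than plain numbers. The helpers below restate that parsing as standalone functions, which makes it easy to check what a config value resolves to. The names `parse_coarse_ratio` and `parse_num_frames` are hypothetical and not part of the repository. `target_lengths` stands in for the full-resolution frame counts that `run()` reads from the target pyramid.

```python
def parse_coarse_ratio(spec, patch_size, max_target_len):
    """Resolve '<k>x_patchsize' or '<k>x_nframes' to a float ratio, as run() does."""
    if 'patchsize' in spec:
        # Coarsest level of the longest target is about k patches long.
        return patch_size * float(spec.split('x_')[0]) / max_target_len
    if 'nframes' in spec:
        # Coarsest level is k times the target length.
        return float(spec.split('x_')[0])
    raise ValueError(f'Unsupported coarse ratio {spec}')


def parse_num_frames(spec, target_lengths):
    """Resolve '<k>x_nframes' or a digit string to an output length, as run() does."""
    if 'nframes' in spec:
        # k times the summed length of all target clips.
        return int(sum(target_lengths) * float(spec.split('x_')[0]))
    if spec.isdigit():
        return int(spec)
    raise ValueError(f'Unsupported num_frames {spec}')


print(parse_num_frames('2x_nframes', [120, 80]))  # 400
```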

/LICENSE:

--------------------------------------------------------------------------------

1 | Apache License

2 | Version 2.0, January 2004

3 | http://www.apache.org/licenses/

4 |

5 | TERMS AND CONDITIONS FOR USE, REPRODUCTION, AND DISTRIBUTION

6 |

7 | 1. Definitions.

8 |

9 | "License" shall mean the terms and conditions for use, reproduction,

10 | and distribution as defined by Sections 1 through 9 of this document.

11 |

12 | "Licensor" shall mean the copyright owner or entity authorized by

13 | the copyright owner that is granting the License.

14 |

15 | "Legal Entity" shall mean the union of the acting entity and all

16 | other entities that control, are controlled by, or are under common

17 | control with that entity. For the purposes of this definition,

18 | "control" means (i) the power, direct or indirect, to cause the

19 | direction or management of such entity, whether by contract or

20 | otherwise, or (ii) ownership of fifty percent (50%) or more of the

21 | outstanding shares, or (iii) beneficial ownership of such entity.

22 |

23 | "You" (or "Your") shall mean an individual or Legal Entity

24 | exercising permissions granted by this License.

25 |

26 | "Source" form shall mean the preferred form for making modifications,

27 | including but not limited to software source code, documentation

28 | source, and configuration files.

29 |

30 | "Object" form shall mean any form resulting from mechanical

31 | transformation or translation of a Source form, including but

32 | not limited to compiled object code, generated documentation,

33 | and conversions to other media types.

34 |

35 | "Work" shall mean the work of authorship, whether in Source or

36 | Object form, made available under the License, as indicated by a

37 | copyright notice that is included in or attached to the work

38 | (an example is provided in the Appendix below).

39 |

40 | "Derivative Works" shall mean any work, whether in Source or Object

41 | form, that is based on (or derived from) the Work and for which the

42 | editorial revisions, annotations, elaborations, or other modifications

43 | represent, as a whole, an original work of authorship. For the purposes

44 | of this License, Derivative Works shall not include works that remain

45 | separable from, or merely link (or bind by name) to the interfaces of,

46 | the Work and Derivative Works thereof.

47 |

48 | "Contribution" shall mean any work of authorship, including

49 | the original version of the Work and any modifications or additions

50 | to that Work or Derivative Works thereof, that is intentionally

51 | submitted to Licensor for inclusion in the Work by the copyright owner

52 | or by an individual or Legal Entity authorized to submit on behalf of

53 | the copyright owner. For the purposes of this definition, "submitted"

54 | means any form of electronic, verbal, or written communication sent

55 | to the Licensor or its representatives, including but not limited to

56 | communication on electronic mailing lists, source code control systems,

57 | and issue tracking systems that are managed by, or on behalf of, the

58 | Licensor for the purpose of discussing and improving the Work, but

59 | excluding communication that is conspicuously marked or otherwise

60 | designated in writing by the copyright owner as "Not a Contribution."

61 |

62 | "Contributor" shall mean Licensor and any individual or Legal Entity

63 | on behalf of whom a Contribution has been received by Licensor and

64 | subsequently incorporated within the Work.

65 |

66 | 2. Grant of Copyright License. Subject to the terms and conditions of

67 | this License, each Contributor hereby grants to You a perpetual,

68 | worldwide, non-exclusive, no-charge, royalty-free, irrevocable

69 | copyright license to reproduce, prepare Derivative Works of,

70 | publicly display, publicly perform, sublicense, and distribute the

71 | Work and such Derivative Works in Source or Object form.

72 |

73 | 3. Grant of Patent License. Subject to the terms and conditions of

74 | this License, each Contributor hereby grants to You a perpetual,

75 | worldwide, non-exclusive, no-charge, royalty-free, irrevocable

76 | (except as stated in this section) patent license to make, have made,

77 | use, offer to sell, sell, import, and otherwise transfer the Work,

78 | where such license applies only to those patent claims licensable

79 | by such Contributor that are necessarily infringed by their

80 | Contribution(s) alone or by combination of their Contribution(s)

81 | with the Work to which such Contribution(s) was submitted. If You

82 | institute patent litigation against any entity (including a

83 | cross-claim or counterclaim in a lawsuit) alleging that the Work

84 | or a Contribution incorporated within the Work constitutes direct

85 | or contributory patent infringement, then any patent licenses

86 | granted to You under this License for that Work shall terminate

87 | as of the date such litigation is filed.

88 |

89 | 4. Redistribution. You may reproduce and distribute copies of the

90 | Work or Derivative Works thereof in any medium, with or without

91 | modifications, and in Source or Object form, provided that You

92 | meet the following conditions:

93 |

94 | (a) You must give any other recipients of the Work or

95 | Derivative Works a copy of this License; and

96 |

97 | (b) You must cause any modified files to carry prominent notices

98 | stating that You changed the files; and

99 |

100 | (c) You must retain, in the Source form of any Derivative Works

101 | that You distribute, all copyright, patent, trademark, and

102 | attribution notices from the Source form of the Work,

103 | excluding those notices that do not pertain to any part of

104 | the Derivative Works; and

105 |

106 | (d) If the Work includes a "NOTICE" text file as part of its

107 | distribution, then any Derivative Works that You distribute must

108 | include a readable copy of the attribution notices contained

109 | within such NOTICE file, excluding those notices that do not

110 | pertain to any part of the Derivative Works, in at least one

111 | of the following places: within a NOTICE text file distributed

112 | as part of the Derivative Works; within the Source form or

113 | documentation, if provided along with the Derivative Works; or,

114 | within a display generated by the Derivative Works, if and

115 | wherever such third-party notices normally appear. The contents

116 | of the NOTICE file are for informational purposes only and

117 | do not modify the License. You may add Your own attribution

118 | notices within Derivative Works that You distribute, alongside

119 | or as an addendum to the NOTICE text from the Work, provided

120 | that such additional attribution notices cannot be construed

121 | as modifying the License.

122 |

123 | You may add Your own copyright statement to Your modifications and

124 | may provide additional or different license terms and conditions

125 | for use, reproduction, or distribution of Your modifications, or

126 | for any such Derivative Works as a whole, provided Your use,

127 | reproduction, and distribution of the Work otherwise complies with

128 | the conditions stated in this License.

129 |

130 | 5. Submission of Contributions. Unless You explicitly state otherwise,

131 | any Contribution intentionally submitted for inclusion in the Work

132 | by You to the Licensor shall be under the terms and conditions of

133 | this License, without any additional terms or conditions.

134 | Notwithstanding the above, nothing herein shall supersede or modify

135 | the terms of any separate license agreement you may have executed

136 | with Licensor regarding such Contributions.

137 |

138 | 6. Trademarks. This License does not grant permission to use the trade

139 | names, trademarks, service marks, or product names of the Licensor,

140 | except as required for reasonable and customary use in describing the

141 | origin of the Work and reproducing the content of the NOTICE file.

142 |

143 | 7. Disclaimer of Warranty. Unless required by applicable law or

144 | agreed to in writing, Licensor provides the Work (and each

145 | Contributor provides its Contributions) on an "AS IS" BASIS,

146 | WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or

147 | implied, including, without limitation, any warranties or conditions

148 | of TITLE, NON-INFRINGEMENT, MERCHANTABILITY, or FITNESS FOR A

149 | PARTICULAR PURPOSE. You are solely responsible for determining the

150 | appropriateness of using or redistributing the Work and assume any

151 | risks associated with Your exercise of permissions under this License.

152 |

153 | 8. Limitation of Liability. In no event and under no legal theory,

154 | whether in tort (including negligence), contract, or otherwise,

155 | unless required by applicable law (such as deliberate and grossly

156 | negligent acts) or agreed to in writing, shall any Contributor be

157 | liable to You for damages, including any direct, indirect, special,

158 | incidental, or consequential damages of any character arising as a

159 | result of this License or out of the use or inability to use the

160 | Work (including but not limited to damages for loss of goodwill,

161 | work stoppage, computer failure or malfunction, or any and all

162 | other commercial damages or losses), even if such Contributor

163 | has been advised of the possibility of such damages.

164 |

165 | 9. Accepting Warranty or Additional Liability. While redistributing

166 | the Work or Derivative Works thereof, You may choose to offer,

167 | and charge a fee for, acceptance of support, warranty, indemnity,

168 | or other liability obligations and/or rights consistent with this

169 | License. However, in accepting such obligations, You may act only

170 | on Your own behalf and on Your sole responsibility, not on behalf

171 | of any other Contributor, and only if You agree to indemnify,

172 | defend, and hold each Contributor harmless for any liability

173 | incurred by, or claims asserted against, such Contributor by reason

174 | of your accepting any such warranty or additional liability.

175 |

176 | END OF TERMS AND CONDITIONS

177 |

178 | APPENDIX: How to apply the Apache License to your work.

179 |

180 | To apply the Apache License to your work, attach the following

181 | boilerplate notice, with the fields enclosed by brackets "[]"

182 | replaced with your own identifying information. (Don't include

183 | the brackets!) The text should be enclosed in the appropriate

184 | comment syntax for the file format. We also recommend that a

185 | file or class name and description of purpose be included on the

186 | same "printed page" as the copyright notice for easier

187 | identification within third-party archives.

188 |

189 | Copyright [yyyy] [name of copyright owner]

190 |

191 | Licensed under the Apache License, Version 2.0 (the "License");

192 | you may not use this file except in compliance with the License.

193 | You may obtain a copy of the License at

194 |

195 | http://www.apache.org/licenses/LICENSE-2.0

196 |

197 | Unless required by applicable law or agreed to in writing, software

198 | distributed under the License is distributed on an "AS IS" BASIS,

199 | WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

200 | See the License for the specific language governing permissions and

201 | limitations under the License.

202 |

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

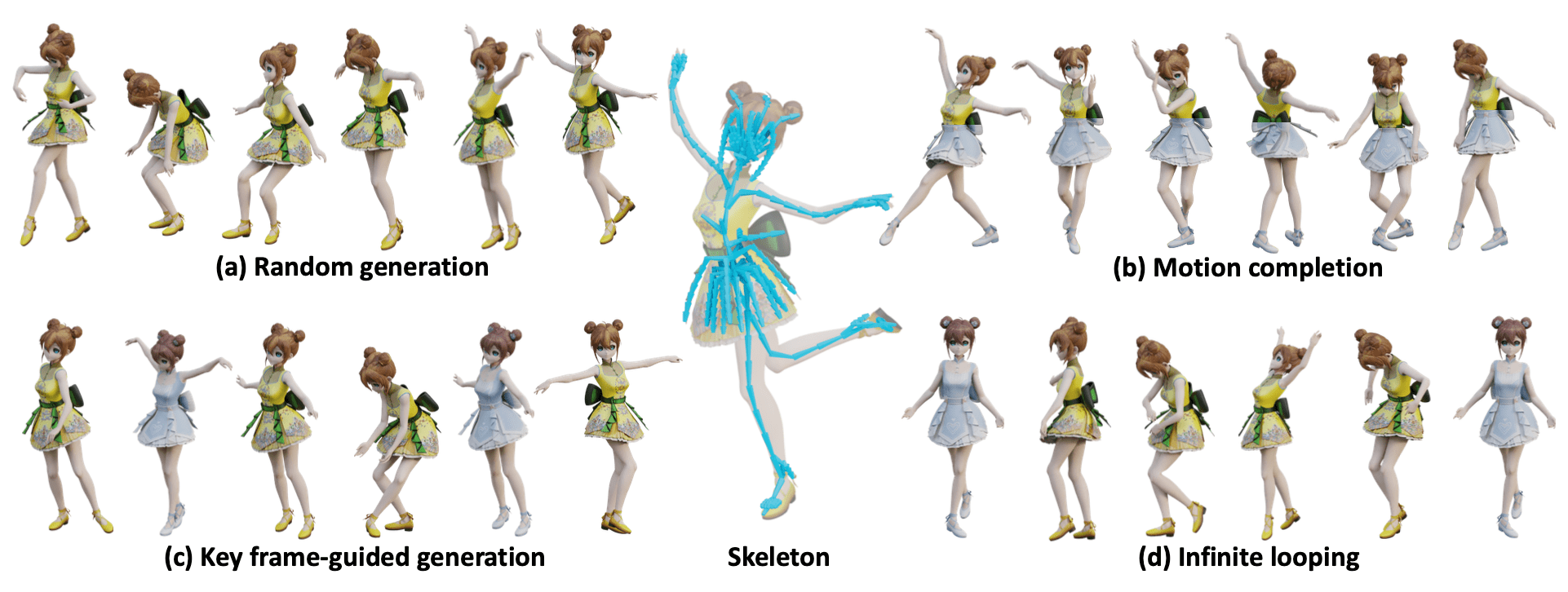

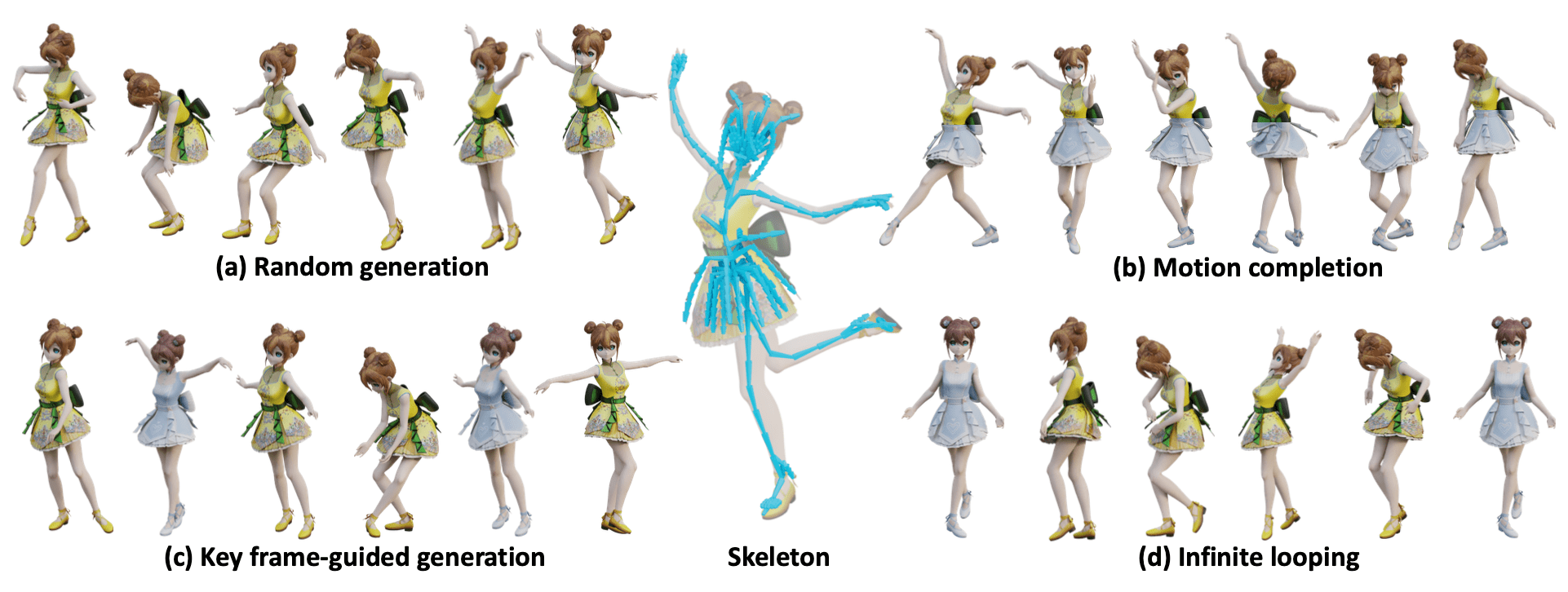

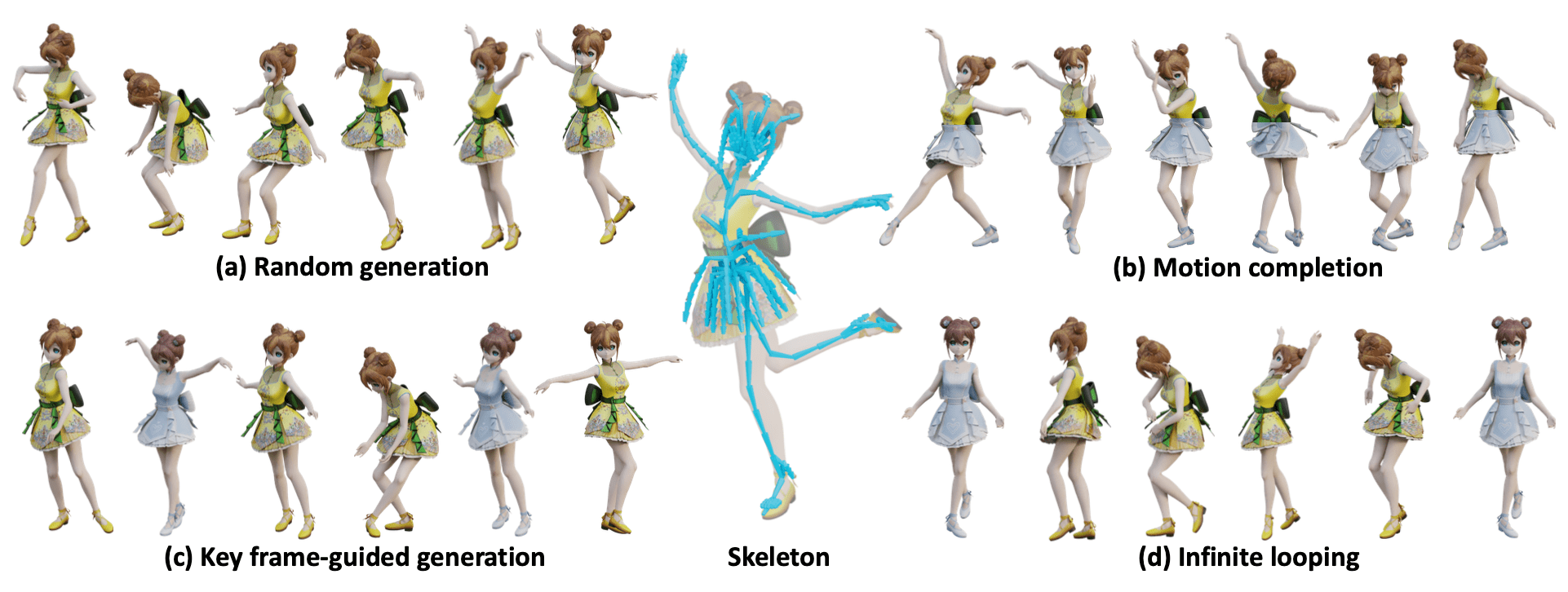

1 | # Example-based Motion Synthesis via Generative Motion Matching, ACM Transactions on Graphics (Proceedings of SIGGRAPH 2023)

2 |

3 | ##### [Weiyu Li*](https://wyysf-98.github.io/), [Xuelin Chen*†](https://xuelin-chen.github.io/), [Peizhuo Li](https://peizhuoli.github.io/), [Olga Sorkine-Hornung](https://igl.ethz.ch/people/sorkine/), [Baoquan Chen](https://cfcs.pku.edu.cn/baoquan/)

4 |

5 | #### [Project Page](https://wyysf-98.github.io/GenMM) | [ArXiv](https://arxiv.org/abs/2306.00378) | [Paper](https://wyysf-98.github.io/GenMM/paper/Paper_high_res.pdf) | [Video](https://youtu.be/lehnxcade4I)

6 |

7 |

8 |

9 |

10 |

11 | All code and demos will be released this week (still ongoing...) 🏗️ 🚧 🔨

12 |

13 | - [x] Release main code

14 | - [x] Release blender addon

15 | - [x] Detailed README and installation guide

16 | - [ ] Release the skeleton-aware component (WIP, as the joints need to be split into groups manually)

17 | - [ ] Release evaluation code

18 |

19 | ## Prerequisite

20 |

21 | Set up the environment:

22 |

23 | :smiley: We also provide a Dockerfile for easy installation, see [Setup using Docker](./docker/README.md).

24 |

25 | - Python 3.8

26 | - PyTorch 1.12.1

27 | - [unfoldNd](https://github.com/f-dangel/unfoldNd)

28 |

29 | Clone this repository.

30 |

31 | ```sh

32 | git clone git@github.com:wyysf-98/GenMM.git && cd GenMM

33 | ```

34 |

35 | Install the required packages.

36 |

37 | ```sh

38 | conda create -n GenMM python=3.8

39 | conda activate GenMM

40 | conda install -c pytorch pytorch=1.12.1 torchvision=0.13.1 cudatoolkit=11.3 && \

41 | pip install -r docker/requirements.txt

42 | pip install torch-scatter==2.1.1

43 | ```

44 |

45 |

46 |

47 | ## Quick inference demo

48 | For a quick local inference demo on a .bvh file, run

49 |

50 | ```sh

51 | python run_random_generation.py -i './data/Malcolm/Gangnam-Style.bvh'

52 | ```

53 | More configuration options can be found in `run_random_generation.py`.

54 | Generating each motion takes about 0.2 s on an Apple M1 and about 0.05 s on an NVIDIA Tesla V100 with 32 GB RAM, as reported in our paper.

55 |

56 | ## Blender add-on

57 | The Blender add-on is easy to install: our method is efficient enough that you do not need to install the CUDA Toolkit.

58 | We tested our code with Blender 3.2 and will support Blender 2.8 in the future.

59 |

60 | Step 1: Find your Blender Python path. Common paths are:

61 | ```sh

62 | (Windows) 'C:\Program Files\Blender Foundation\Blender 3.2\3.2\python\bin'

63 | (Linux) '/path/to/blender/blender-path/3.2/python/bin'

64 | (macOS) '/Applications/Blender.app/Contents/Resources/3.2/python/bin'

65 | ```

66 |

67 | Step 2: Install the required packages. Open your shell (Linux/macOS) or PowerShell (Windows) and run

68 | ```sh

69 | cd {your python path} && pip3 install -r {your GenMM path}/docker/requirements.txt && pip3 install torch-scatter==2.1.0 -f https://data.pyg.org/whl/torch-1.12.0+${CUDA}.html

70 | ```

71 | where ${CUDA} should be replaced by cpu, cu102, cu113, or cu116, depending on your PyTorch installation.

72 | For example, on macOS with an Apple M1 CPU:

73 |

74 | ```sh

75 | cd /Applications/Blender.app/Contents/Resources/3.2/python/bin && pip3 install -r {your GenMM path}/docker/requirements_blender.txt && pip3 install torch-scatter==2.1.0 -f https://data.pyg.org/whl/torch-1.12.0+cpu.html

76 | ```

77 |

78 | Step 3: Install the add-on in Blender ([Blender Add-ons Official Tutorial](https://docs.blender.org/manual/en/latest/editors/preferences/addons.html)): `Edit -> Preferences -> Add-ons -> Install`, then select the downloaded .zip file.

79 |

80 | Step 4: Have fun! Select the armature and you will find a `GenMM` tab.

81 |

82 | (GPU support) If you have a GPU and the CUDA Toolkit installed, the running device is detected automatically.

83 |

84 | Feel free to submit an issue if you run into any problems during installation :)

85 |

86 | ## Acknowledgement

87 |

88 | We thank [@stefanonuvoli](https://github.com/stefanonuvoli/skinmixer) for discussions on implementing the `Motion Reassembly` part (we eventually merged the meshes of different characters manually), and [@Radamés Ajna](https://github.com/radames) for help with a better Hugging Face demo.

89 |

90 |

91 | ## Citation

92 |

93 | If you find our work useful for your research, please consider citing using the following BibTeX entry.

94 |

95 | ```BibTeX

96 | @article{10.1145/weiyu23GenMM,

97 | author = {Li, Weiyu and Chen, Xuelin and Li, Peizhuo and Sorkine-Hornung, Olga and Chen, Baoquan},

98 | title = {Example-Based Motion Synthesis via Generative Motion Matching},

99 | journal = {ACM Transactions on Graphics (TOG)},

100 | volume = {42},

101 | number = {4},

102 | year = {2023},

103 | articleno = {94},

104 | doi = {10.1145/3592395},

105 | publisher = {Association for Computing Machinery},

106 | }

107 | ```

108 |
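The `${CUDA}` placeholder in the add-on installation step has to match the CUDA build of the installed PyTorch. Below is a minimal sketch that derives it. It rests on two assumptions. First, as the README's own command does, it assumes the PyG wheel index is keyed by `<major>.<minor>.0`. Second, it assumes `torch.version.cuda` is `None` on CPU-only builds. `wheel_index_url` is a hypothetical helper, not part of GenMM.

```python
from typing import Optional


def wheel_index_url(torch_version: str, cuda_version: Optional[str]) -> str:
    """Build the data.pyg.org wheel index URL for torch-scatter (hypothetical helper)."""
    # Drop any local build suffix, e.g. '1.12.1+cu113' -> '1.12.1'.
    base = torch_version.split('+')[0]
    # Assumed index layout: major.minor with patch 0, e.g. 'torch-1.12.0'.
    major, minor = base.split('.')[:2]
    # CPU-only builds report no CUDA version; '11.3' -> 'cu113'.
    tag = 'cpu' if cuda_version is None else 'cu' + cuda_version.replace('.', '')
    return f'https://data.pyg.org/whl/torch-{major}.{minor}.0+{tag}.html'
```

Once PyTorch is installed, run `import torch` inside Blender's Python. Then `wheel_index_url(torch.__version__, torch.version.cuda)` gives the URL to pass to `pip3 install torch-scatter==2.1.0 -f`.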

--------------------------------------------------------------------------------
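The README's GPU note says the running device is detected automatically, but the add-on's own detection code is not part of this section. A common PyTorch pattern uses the first GPU when CUDA is available and falls back to the CPU otherwise. The hedged sketch below shows that pattern. `pick_device` is a hypothetical helper. Its result would be passed to the `device` argument of the `GenMM` constructor, which defaults to `'cuda:0'`.

```python
def pick_device(cuda_available: bool, index: int = 0) -> str:
    """Return a torch device string: the given GPU if CUDA works, else the CPU."""
    # GenMM's constructor defaults to 'cuda:0'; fall back to 'cpu' when CUDA
    # (or the CUDA Toolkit) is missing, e.g. inside Blender on an Apple M1.
    return f'cuda:{index}' if cuda_available else 'cpu'
```

In practice this would be called as `GenMM(device=pick_device(torch.cuda.is_available()))`. Taking the availability flag as an argument keeps the decision testable without a GPU.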

/__init__.py:

--------------------------------------------------------------------------------

1 | # This program is free software; you can redistribute it and/or modify
2 | # it under the terms of the GNU General Public License as published by
3 | # the Free Software Foundation; either version 3 of the License, or
4 | # (at your option) any later version.
5 | #
6 | # This program is distributed in the hope that it will be useful, but
7 | # WITHOUT ANY WARRANTY; without even the implied warranty of
8 | # MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the GNU
9 | # General Public License for more details.
10 | #
11 | # You should have received a copy of the GNU General Public License
12 | # along with this program. If not, see <http://www.gnu.org/licenses/>.
13 | import os
14 | import sys
15 | import bpy
16 | import torch
17 | import mathutils
18 | import numpy as np
19 | from math import degrees, radians, ceil
20 | from mathutils import Vector, Matrix, Euler
21 | from typing import List, Iterable, Tuple, Any, Dict
22 |
23 | abs_path = os.path.abspath(__file__)
24 | sys.path.append(os.path.dirname(abs_path))
25 | from GenMM import GenMM
26 | from nearest_neighbor.losses import PatchCoherentLoss
27 | from dataset.blender_motion import BlenderMotion
28 |
29 | bl_info = {
30 |     "name" : "GenMM",
31 |     "author" : "Weiyu Li",
32 |     "description" : "Synthesize novel motions from a few exemplars (Blender addon for the SIGGRAPH paper 'Example-Based Motion Synthesis via Generative Motion Matching')",
33 |     "blender" : (3, 2, 0),
34 |     "version" : (0, 0, 1),
35 |     "location": "3D View",
36 |     "support": "TESTING",
37 |     "warning" : "",
38 |     "category" : "Generic"
39 | }
40 |
41 |
42 | # This function is modified from
43 | # https://github.com/bwrsandman/blender-addons/blob/master/io_anim_bvh
44 | def get_bvh_data(context,
45 |                  frame_end,
46 |                  frame_start,
47 |                  global_scale=1.0,
48 |                  rotate_mode='NATIVE',
49 |                  root_transform_only=False,
50 |                  ):
51 |
52 |     def ensure_rot_order(rot_order_str):
53 |         if set(rot_order_str) != {'X', 'Y', 'Z'}:
54 |             rot_order_str = "XYZ"
55 |         return rot_order_str
56 |
57 |     file_str = []
58 |
59 |     obj = context.object
60 |     arm = obj.data
61 |
62 |     # Build a dictionary of children.
63 |     # None for parentless
64 |     children = {None: []}
65 |
66 |     # initialize with blank lists
67 |     for bone in arm.bones:
68 |         children[bone.name] = []
69 |
70 |     # keep bone order from armature, no sorting, not essential but means
71 |     # we can maintain order from import -> export which secondlife incorrectly expects.
72 |     for bone in arm.bones:
73 |         children[getattr(bone.parent, "name", None)].append(bone.name)
74 |
75 |     # bone name list in the order that the bones are written
76 |     serialized_names = []
77 |
78 |     node_locations = {}
79 |
80 |     file_str.append("HIERARCHY\n")
81 |
82 |     def write_recursive_nodes(bone_name, indent):
83 |         my_children = children[bone_name]
84 |
85 |         indent_str = "\t" * indent
86 |
87 |         bone = arm.bones[bone_name]
88 |         pose_bone = obj.pose.bones[bone_name]
89 |         loc = bone.head_local
90 |         node_locations[bone_name] = loc
91 |
92 |         if rotate_mode == "NATIVE":
93 |             rot_order_str = ensure_rot_order(pose_bone.rotation_mode)
94 |         else:
95 |             rot_order_str = rotate_mode
96 |
97 |         # make relative if we can
98 |         if bone.parent:
99 |             loc = loc - node_locations[bone.parent.name]
100 |
101 |         if indent:
102 |             file_str.append("%sJOINT %s\n" % (indent_str, bone_name))
103 |         else:
104 |             file_str.append("%sROOT %s\n" % (indent_str, bone_name))
105 |
106 |         file_str.append("%s{\n" % indent_str)
107 |         file_str.append("%s\tOFFSET %.6f %.6f %.6f\n" % (indent_str, loc.x * global_scale, loc.y * global_scale, loc.z * global_scale))
108 |         if (bone.use_connect or root_transform_only) and bone.parent:
109 |             file_str.append("%s\tCHANNELS 3 %srotation %srotation %srotation\n" % (indent_str, rot_order_str[0], rot_order_str[1], rot_order_str[2]))
110 |         else:
111 |             file_str.append("%s\tCHANNELS 6 Xposition Yposition Zposition %srotation %srotation %srotation\n" % (indent_str, rot_order_str[0], rot_order_str[1], rot_order_str[2]))
112 |
113 |         if my_children:
114 |             # store the location for the children
115 |             # to get their relative offset
116 |
117 |             # Write children
118 |             for child_bone in my_children:
119 |                 serialized_names.append(child_bone)
120 |                 write_recursive_nodes(child_bone, indent + 1)
121 |
122 |         else:
123 |             # Write the bone end.
124 |             file_str.append("%s\tEnd Site\n" % indent_str)
125 |             file_str.append("%s\t{\n" % indent_str)
126 |             loc = bone.tail_local - node_locations[bone_name]
127 |             file_str.append("%s\t\tOFFSET %.6f %.6f %.6f\n" % (indent_str, loc.x * global_scale, loc.y * global_scale, loc.z * global_scale))
128 |             file_str.append("%s\t}\n" % indent_str)
129 |
130 |         file_str.append("%s}\n" % indent_str)
131 |
132 |     if len(children[None]) == 1:
133 |         key = children[None][0]
134 |         serialized_names.append(key)
135 |         indent = 0
136 |
137 |         write_recursive_nodes(key, indent)
138 |
139 |     else:
140 |         # Write a dummy parent node, with a dummy key name
141 |         # Just be sure it's not used by another bone!
142 |         i = 0
143 |         key = "__%d" % i
144 |         while key in children:
145 |             i += 1
146 |             key = "__%d" % i
147 |         file_str.append("ROOT %s\n" % key)
148 |         file_str.append("{\n")
149 |         file_str.append("\tOFFSET 0.0 0.0 0.0\n")
150 |         file_str.append("\tCHANNELS 0\n")  # Xposition Yposition Zposition Xrotation Yrotation Zrotation
151 |         indent = 1
152 |
153 |         # Write children
154 |         for child_bone in children[None]:
155 |             serialized_names.append(child_bone)
156 |             write_recursive_nodes(child_bone, indent)
157 |
158 |         file_str.append("}\n")
159 |     file_str = ''.join(file_str)
160 |     # redefine bones as sorted by serialized_names
161 |     # so we can write motion
162 |
163 |     class DecoratedBone:
164 |         __slots__ = (
165 |             # Bone name, used as key in many places.
166 |             "name",
167 |             "parent",  # decorated bone parent, set in a later loop
168 |             # Blender armature bone.
169 |             "rest_bone",
170 |             # Blender pose bone.
171 |             "pose_bone",
172 |             # Blender pose matrix.
173 |             "pose_mat",
174 |             # Blender rest matrix (armature space).
175 |             "rest_arm_mat",
176 |             # Blender rest matrix (local space).
177 |             "rest_local_mat",
178 |             # Pose_mat inverted.
179 |             "pose_imat",
180 |             # Rest_arm_mat inverted.
181 |             "rest_arm_imat",
182 |             # Rest_local_mat inverted.
183 |             "rest_local_imat",
184 |             # Last used euler to preserve euler compatibility in between keyframes.
185 |             "prev_euler",
186 |             # Is the bone disconnected from the parent bone?
187 |             "skip_position",
188 |             "rot_order",
189 |             "rot_order_str",
190 |             # Needed for the euler order when converting from a matrix.
191 |             "rot_order_str_reverse",
192 |         )
193 |
194 |         _eul_order_lookup = {
195 |             'XYZ': (0, 1, 2),
196 |             'XZY': (0, 2, 1),
197 |             'YXZ': (1, 0, 2),
198 |             'YZX': (1, 2, 0),
199 |             'ZXY': (2, 0, 1),
200 |             'ZYX': (2, 1, 0),
201 |         }
202 |
203 |         def __init__(self, bone_name):
204 |             self.name = bone_name
205 |             self.rest_bone = arm.bones[bone_name]
206 |             self.pose_bone = obj.pose.bones[bone_name]
207 |
208 |             if rotate_mode == "NATIVE":
209 |                 self.rot_order_str = ensure_rot_order(self.pose_bone.rotation_mode)
210 |             else:
211 |                 self.rot_order_str = rotate_mode
212 |             self.rot_order_str_reverse = self.rot_order_str[::-1]
213 |
214 |             self.rot_order = DecoratedBone._eul_order_lookup[self.rot_order_str]
215 |
216 |             self.pose_mat = self.pose_bone.matrix
217 |
218 |             # mat = self.rest_bone.matrix  # UNUSED
219 |             self.rest_arm_mat = self.rest_bone.matrix_local
220 |             self.rest_local_mat = self.rest_bone.matrix
221 |
222 |             # inverted mats
223 |             self.pose_imat = self.pose_mat.inverted()
224 |             self.rest_arm_imat = self.rest_arm_mat.inverted()
225 |             self.rest_local_imat = self.rest_local_mat.inverted()
226 |
227 |             self.parent = None
228 |             self.prev_euler = Euler((0.0, 0.0, 0.0), self.rot_order_str_reverse)
229 |             self.skip_position = ((self.rest_bone.use_connect or root_transform_only) and self.rest_bone.parent)
230 |
231 |         def update_posedata(self):
232 |             self.pose_mat = self.pose_bone.matrix
233 |             self.pose_imat = self.pose_mat.inverted()
234 |
235 |         def __repr__(self):
236 |             if self.parent:
237 |                 return "[\"%s\" child on \"%s\"]\n" % (self.name, self.parent.name)
238 |             else:
239 |                 return "[\"%s\" root bone]\n" % (self.name)
240 |
241 |     bones_decorated = [DecoratedBone(bone_name) for bone_name in serialized_names]
242 |
243 |     # Assign parents
244 |     bones_decorated_dict = {dbone.name: dbone for dbone in bones_decorated}
245 |     for dbone in bones_decorated:
246 |         parent = dbone.rest_bone.parent
247 |         if parent:
248 |             dbone.parent = bones_decorated_dict[parent.name]
249 |     del bones_decorated_dict
250 |     # finish assigning parents
251 |
252 |     scene = context.scene
253 |     frame_current = scene.frame_current
254 |
255 |     file_str += "MOTION\n"
256 |     file_str += "Frames: %d\n" % (frame_end - frame_start + 1)
257 |     file_str += "Frame Time: %.6f\n" % (1.0 / (scene.render.fps / scene.render.fps_base))
258 |
259 |     for frame in range(frame_start, frame_end + 1):
260 |         scene.frame_set(frame)
261 |
262 |         for dbone in bones_decorated:
263 |             dbone.update_posedata()
264 |
265 |         for dbone in bones_decorated:
266 |             trans = Matrix.Translation(dbone.rest_bone.head_local)
267 |             itrans = Matrix.Translation(-dbone.rest_bone.head_local)
268 |
269 |             if dbone.parent:
270 |                 mat_final = dbone.parent.rest_arm_mat @ dbone.parent.pose_imat @ dbone.pose_mat @ dbone.rest_arm_imat
271 |                 mat_final = itrans @ mat_final @ trans
272 |                 loc = mat_final.to_translation() + (dbone.rest_bone.head_local - dbone.parent.rest_bone.head_local)

275 | else:

276 | mat_final = dbone.pose_mat @ dbone.rest_arm_imat

277 | mat_final = itrans @ mat_final @ trans

278 | loc = mat_final.to_translation() + dbone.rest_bone.head

279 |

280 | # keep eulers compatible, no jumping on interpolation.

281 | rot = mat_final.to_euler(dbone.rot_order_str_reverse, dbone.prev_euler)

282 |

283 | if not dbone.skip_position:

284 | file_str += "%.6f %.6f %.6f " % (loc * global_scale)[:]

285 |

286 | file_str += "%.6f %.6f %.6f " % (degrees(rot[dbone.rot_order[0]]), degrees(rot[dbone.rot_order[1]]), degrees(rot[dbone.rot_order[2]]))

287 |

288 | dbone.prev_euler = rot

289 |

290 | file_str += "\n"

291 |

292 | scene.frame_set(frame_current)

293 |

294 | return file_str

295 |

296 |
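The exporter converts matrices to Euler angles using a Blender Euler order that is the reverse of the BVH channel order (`rot_order_str_reverse`). The importer does the same with `rot_order_str[::-1]`. The NumPy sketch below shows why. It assumes two conventions: BVH multiplies the per-axis rotations in the order the channels are listed, and a Blender Euler applies its first axis first. The helpers `axis_rotation`, `bvh_channels_matrix` and `blender_euler_matrix` are hypothetical and are not part of this module or of `mathutils`.

```python
import numpy as np


def axis_rotation(axis: str, angle_rad: float) -> np.ndarray:
    """3x3 right-handed rotation about a single coordinate axis."""
    c, s = np.cos(angle_rad), np.sin(angle_rad)
    if axis == "X":
        return np.array([[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]])
    if axis == "Y":
        return np.array([[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]])
    if axis == "Z":
        return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])
    raise ValueError("axis must be 'X', 'Y' or 'Z', got %r" % axis)


def bvh_channels_matrix(order: str, angles_deg: dict) -> np.ndarray:
    """Assumed BVH convention: multiply per-axis rotations left to right in channel order."""
    m = np.eye(3)
    for axis in order:
        m = m @ axis_rotation(axis, np.radians(angles_deg[axis]))
    return m


def blender_euler_matrix(order: str, angles_deg: dict) -> np.ndarray:
    """Assumed Blender Euler convention: the first axis in `order` is applied first."""
    m = np.eye(3)
    for axis in order:
        m = axis_rotation(axis, np.radians(angles_deg[axis])) @ m
    return m


angles = {"X": 10.0, "Y": -35.0, "Z": 80.0}
bvh_order = "ZXY"  # e.g. CHANNELS ... Zrotation Xrotation Yrotation
same = np.allclose(
    bvh_channels_matrix(bvh_order, angles),
    blender_euler_matrix(bvh_order[::-1], angles),  # Blender order 'YXZ'
)
print(same)  # True
```

Both functions build Rz·Rx·Ry here. Using the channel string unreversed would give Ry·Rx·Rz, which is a different rotation in general.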

297 | class BVH_Node:

298 | __slots__ = (

299 | # Bvh joint name.

300 | 'name',

301 | # BVH_Node type or None for no parent.

302 | 'parent',

303 | # A list of child BVH_Node instances.

304 | 'children',

305 | # Worldspace rest location for the head of this node.

306 | 'rest_head_world',

307 | # Localspace rest location for the head of this node.

308 | 'rest_head_local',

309 | # Worldspace rest location for the tail of this node.

310 | 'rest_tail_world',

311 | # Localspace rest location for the tail of this node.

312 | 'rest_tail_local',

313 | # List of 6 ints, -1 for an unused channel,

314 | # otherwise an index for the BVH motion data lines,

315 | # loc triple then rot triple.

316 | 'channels',

317 | # A triple of indices as to the order rotation is applied.

318 | # [0,1,2] is x/y/z; [None, None, None] if there is no rotation.

319 | 'rot_order',

320 | # Same as above, but as a string in 'XYZ' format.

321 | 'rot_order_str',

322 | # A list of tuples, one per frame: (locx, locy, locz, rotx, roty, rotz);

323 | # the euler rotation is ALWAYS stored in xyz order, even when native order is used.

324 | 'anim_data',

325 | # Convenience attribute, bool, same as: (channels[0] != -1 or channels[1] != -1 or channels[2] != -1).

326 | 'has_loc',

327 | # Convenience attribute, bool, same as: (channels[3] != -1 or channels[4] != -1 or channels[5] != -1).

328 | 'has_rot',

329 | # Index from the file, not strictly needed but nice to maintain order.

330 | 'index',

331 | # Use this for whatever you want.

332 | 'temp',

333 | )

334 |

335 | _eul_order_lookup = {

336 | (None, None, None): 'XYZ', # XXX Dummy one, no rotation anyway!

337 | (0, 1, 2): 'XYZ',

338 | (0, 2, 1): 'XZY',

339 | (1, 0, 2): 'YXZ',

340 | (1, 2, 0): 'YZX',

341 | (2, 0, 1): 'ZXY',

342 | (2, 1, 0): 'ZYX',

343 | }

344 |

345 | def __init__(self, name, rest_head_world, rest_head_local, parent, channels, rot_order, index):

346 | self.name = name

347 | self.rest_head_world = rest_head_world

348 | self.rest_head_local = rest_head_local

349 | self.rest_tail_world = None

350 | self.rest_tail_local = None

351 | self.parent = parent

352 | self.channels = channels

353 | self.rot_order = tuple(rot_order)

354 | self.rot_order_str = BVH_Node._eul_order_lookup[self.rot_order]

355 | self.index = index

356 |

357 | # convenience functions

358 | self.has_loc = channels[0] != -1 or channels[1] != -1 or channels[2] != -1

359 | self.has_rot = channels[3] != -1 or channels[4] != -1 or channels[5] != -1

360 |

361 | self.children = []

362 |

363 | # List of 6 length tuples: (lx, ly, lz, rx, ry, rz)

364 | # even if the channels aren't used they will just be zero.

365 | self.anim_data = [(0, 0, 0, 0, 0, 0)]

366 |

367 | def __repr__(self):

368 | return (

369 | "BVH name: '%s', rest_loc:(%.3f,%.3f,%.3f), rest_tail:(%.3f,%.3f,%.3f)" % (

370 | self.name,

371 | *self.rest_head_world,

372 | *(self.rest_tail_world if self.rest_tail_world is not None else (float("nan"),) * 3),

373 | )

374 | )

375 |

376 |

377 | def sorted_nodes(bvh_nodes):

378 | bvh_nodes_list = list(bvh_nodes.values())

379 | bvh_nodes_list.sort(key=lambda bvh_node: bvh_node.index)

380 | return bvh_nodes_list

381 |

382 |

383 | def read_bvh(context, bvh_str, rotate_mode='XYZ', global_scale=1.0):

384 | # Separate into a list of lists, each line a list of words.

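385 | file_lines = bvh_str.splitlines() if isinstance(bvh_str, str) else list(bvh_str) # accept a whole-file string or a list of lines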

386 | # Non standard carriage returns?

387 | if len(file_lines) == 1:

388 | file_lines = file_lines[0].split('\r')

389 |

390 | # Split by whitespace.

391 | file_lines = [ll for ll in [l.split() for l in file_lines] if ll]

392 |

393 | # Create hierarchy as empties

394 | if file_lines[0][0].lower() == 'hierarchy':

395 | # print 'Importing the BVH Hierarchy for:', file_path

396 | pass

397 | else:

398 | raise Exception("This is not a BVH file")

399 |

400 | bvh_nodes = {None: None}

401 | bvh_nodes_serial = [None]

402 | bvh_frame_count = None

403 | bvh_frame_time = None

404 |

405 | channelIndex = -1

406 |

407 | lineIdx = 0 # An index for the file.

408 | while lineIdx < len(file_lines) - 1:

409 | if file_lines[lineIdx][0].lower() in {'root', 'joint'}:

410 |

411 | # Join spaces into 1 word with underscores joining it.

412 | if len(file_lines[lineIdx]) > 2:

413 | file_lines[lineIdx][1] = '_'.join(file_lines[lineIdx][1:])

414 | file_lines[lineIdx] = file_lines[lineIdx][:2]

415 |

416 | # MAY NEED TO SUPPORT MULTIPLE ROOTS HERE! Still unsure whether multiple roots are possible?

417 |

418 | # Make sure the names are unique - Object names will match joint names exactly and both will be unique.

419 | name = file_lines[lineIdx][1]

420 |

421 | # print '%snode: %s, parent: %s' % (len(bvh_nodes_serial) * ' ', name, bvh_nodes_serial[-1])

422 |

423 | lineIdx += 2 # Increment to the next line (Offset)

424 | rest_head_local = global_scale * Vector((

425 | float(file_lines[lineIdx][1]),

426 | float(file_lines[lineIdx][2]),

427 | float(file_lines[lineIdx][3]),

428 | ))

429 | lineIdx += 1 # Increment to the next line (Channels)

430 |

431 | # my_channel holds, for [Xposition, Yposition, Zposition, Xrotation, Yrotation, Zrotation],

432 | # the column index of each channel in a MOTION data line.

433 | # A value of -1 means this joint does not have that channel.

434 | # my_rot_order records the rotation axes (0 = X, 1 = Y, 2 = Z) in the order they are listed.

435 | my_channel = [-1, -1, -1, -1, -1, -1]

436 | my_rot_order = [None, None, None]

437 | rot_count = 0

438 | for channel in file_lines[lineIdx][2:]:

439 | channel = channel.lower()

440 | channelIndex += 1 # So the index points to the right channel

441 | if channel == 'xposition':

442 | my_channel[0] = channelIndex

443 | elif channel == 'yposition':

444 | my_channel[1] = channelIndex

445 | elif channel == 'zposition':

446 | my_channel[2] = channelIndex

447 |

448 | elif channel == 'xrotation':

449 | my_channel[3] = channelIndex

450 | my_rot_order[rot_count] = 0

451 | rot_count += 1

452 | elif channel == 'yrotation':

453 | my_channel[4] = channelIndex

454 | my_rot_order[rot_count] = 1

455 | rot_count += 1

456 | elif channel == 'zrotation':

457 | my_channel[5] = channelIndex

458 | my_rot_order[rot_count] = 2

459 | rot_count += 1

460 |

461 | channels = file_lines[lineIdx][2:]

462 |

463 | my_parent = bvh_nodes_serial[-1] # account for none

464 |

465 | # Apply the parents offset accumulatively

466 | if my_parent is None:

467 | rest_head_world = Vector(rest_head_local)

468 | else:

469 | rest_head_world = my_parent.rest_head_world + rest_head_local

470 |

471 | bvh_node = bvh_nodes[name] = BVH_Node(

472 | name,

473 | rest_head_world,

474 | rest_head_local,

475 | my_parent,

476 | my_channel,

477 | my_rot_order,

478 | len(bvh_nodes) - 1,

479 | )

480 |

481 | # Push this node; joints nested inside its braces will take it as their parent.

482 | bvh_nodes_serial.append(bvh_node)

483 |

484 | # Account for an end node.

485 | # There is sometimes a name after 'End Site' but we will ignore it.

486 | if file_lines[lineIdx][0].lower() == 'end' and file_lines[lineIdx][1].lower() == 'site':

487 | # Increment to the next line (Offset)

488 | lineIdx += 2

489 | rest_tail = global_scale * Vector((

490 | float(file_lines[lineIdx][1]),

491 | float(file_lines[lineIdx][2]),

492 | float(file_lines[lineIdx][3]),

493 | ))

494 |

495 | bvh_nodes_serial[-1].rest_tail_world = bvh_nodes_serial[-1].rest_head_world + rest_tail

496 | bvh_nodes_serial[-1].rest_tail_local = bvh_nodes_serial[-1].rest_head_local + rest_tail

497 |

498 | # 'End Site' has its own closing brace, so push a placeholder

499 | # for that '}' to pop instead of the joint itself.

500 | bvh_nodes_serial.append(None)

501 |

502 | if len(file_lines[lineIdx]) == 1 and file_lines[lineIdx][0] == '}': # == ['}']

503 | bvh_nodes_serial.pop() # Remove the last item

504 |

505 | # End of the hierarchy. Begin the animation section of the file with

506 | # the following header.

507 | # MOTION

508 | # Frames: n

509 | # Frame Time: dt

510 | if len(file_lines[lineIdx]) == 1 and file_lines[lineIdx][0].lower() == 'motion':

511 | lineIdx += 1 # Read frame count.

512 | if (

513 | len(file_lines[lineIdx]) == 2 and

514 | file_lines[lineIdx][0].lower() == 'frames:'

515 | ):

516 | bvh_frame_count = int(file_lines[lineIdx][1])

517 |

518 | lineIdx += 1 # Read frame rate.

519 | if (

520 | len(file_lines[lineIdx]) == 3 and

521 | file_lines[lineIdx][0].lower() == 'frame' and

522 | file_lines[lineIdx][1].lower() == 'time:'

523 | ):

524 | bvh_frame_time = float(file_lines[lineIdx][2])

525 |

526 | lineIdx += 1 # Set the cursor to the first frame

527 |

528 | break

529 |

530 | lineIdx += 1

531 |

532 | # Remove the None value used for easy parent reference

533 | del bvh_nodes[None]

534 | # Don't use anymore

535 | del bvh_nodes_serial

536 |

537 | # Nodes could be processed in any order, but keep the file order:

538 | # Second Life expects it, even though the spec does not require it.

539 | bvh_nodes_list = sorted_nodes(bvh_nodes)

540 |

541 | while lineIdx < len(file_lines):

542 | line = file_lines[lineIdx]

543 | for bvh_node in bvh_nodes_list:

544 | # for bvh_node in bvh_nodes_serial:

545 | lx = ly = lz = rx = ry = rz = 0.0

546 | channels = bvh_node.channels

547 | anim_data = bvh_node.anim_data

548 | if channels[0] != -1:

549 | lx = global_scale * float(line[channels[0]])

550 |

551 | if channels[1] != -1:

552 | ly = global_scale * float(line[channels[1]])

553 |

554 | if channels[2] != -1:

555 | lz = global_scale * float(line[channels[2]])

556 |

557 | if channels[3] != -1:

558 | rx = radians(float(line[channels[3]]))

559 | if channels[4] != -1:

560 | ry = radians(float(line[channels[4]]))

561 | if channels[5] != -1:

562 | rz = radians(float(line[channels[5]]))

563 | # Done importing motion data #

564 | anim_data.append((lx, ly, lz, rx, ry, rz))

565 | lineIdx += 1

566 |

567 | # Assign children

568 | for bvh_node in bvh_nodes_list:

569 | bvh_node_parent = bvh_node.parent

570 | if bvh_node_parent:

571 | bvh_node_parent.children.append(bvh_node)

572 |

573 | # Now set the tip of each bvh_node

574 | for bvh_node in bvh_nodes_list:

575 |

576 | if not bvh_node.rest_tail_world:

577 | if len(bvh_node.children) == 0:

578 | # could just fail here, but rare BVH files have childless nodes

579 | bvh_node.rest_tail_world = Vector(bvh_node.rest_head_world)

580 | bvh_node.rest_tail_local = Vector(bvh_node.rest_head_local)

581 | elif len(bvh_node.children) == 1:

582 | bvh_node.rest_tail_world = Vector(bvh_node.children[0].rest_head_world)

583 | bvh_node.rest_tail_local = bvh_node.rest_head_local + bvh_node.children[0].rest_head_local

584 | else:

585 | # allow this, see above

586 | # if not bvh_node.children:

587 | # raise Exception("bvh node has no end and no children. bad file")

588 |

589 | # Removed temp for now

590 | rest_tail_world = Vector((0.0, 0.0, 0.0))

591 | rest_tail_local = Vector((0.0, 0.0, 0.0))

592 | for bvh_node_child in bvh_node.children:

593 | rest_tail_world += bvh_node_child.rest_head_world

594 | rest_tail_local += bvh_node_child.rest_head_local

595 |

596 | bvh_node.rest_tail_world = rest_tail_world * (1.0 / len(bvh_node.children))

597 | bvh_node.rest_tail_local = rest_tail_local * (1.0 / len(bvh_node.children))

598 |

599 | # Make sure tail isn't the same location as the head.

600 | if (bvh_node.rest_tail_local - bvh_node.rest_head_local).length <= 0.001 * global_scale:

601 | print("\tzero length node found:", bvh_node.name)

602 | bvh_node.rest_tail_local.y = bvh_node.rest_tail_local.y + global_scale / 10

603 | bvh_node.rest_tail_world.y = bvh_node.rest_tail_world.y + global_scale / 10

604 |

605 | return bvh_nodes, bvh_frame_time, bvh_frame_count

606 |
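`read_bvh()` assigns MOTION columns to joints in the order their `CHANNELS` lines appear, and it uses -1 for channels a joint lacks. Below is a pure-Python sketch of that bookkeeping, runnable without `bpy`. The names `CHANNEL_SLOTS`, `parse_channels` and `read_frame` are hypothetical. The sketch also shows why an absent channel must never be used as an index: in Python, `line[-1]` silently reads the last column of the line.

```python
from math import radians

# Slot of each channel name in my_channel, matching read_bvh().
CHANNEL_SLOTS = {
    "xposition": 0, "yposition": 1, "zposition": 2,
    "xrotation": 3, "yrotation": 4, "zrotation": 5,
}


def parse_channels(tokens, channel_index):
    """Map one 'CHANNELS n name...' line to (my_channel, my_rot_order, channel_index).

    channel_index is the last MOTION column already claimed by earlier joints
    (read_bvh() starts it at -1); the updated value is returned.
    """
    my_channel = [-1] * 6
    my_rot_order = [None, None, None]
    rot_count = 0
    for name in tokens[2:]:
        channel_index += 1  # every listed channel consumes one column
        slot = CHANNEL_SLOTS.get(name.lower())
        if slot is None:
            continue  # unknown channel name: column consumed but ignored
        my_channel[slot] = channel_index
        if slot >= 3:
            my_rot_order[rot_count] = slot - 3  # 0 = X, 1 = Y, 2 = Z
            rot_count += 1
    return my_channel, my_rot_order, channel_index


def read_frame(line, my_channel, global_scale=1.0):
    """Return (lx, ly, lz, rx, ry, rz) for one joint; absent channels stay 0.0."""
    values = [0.0] * 6
    for slot, column in enumerate(my_channel):
        if column == -1:
            continue  # never index with -1: it would read the line's last column
        value = float(line[column])
        values[slot] = global_scale * value if slot < 3 else radians(value)
    return tuple(values)


root_channel, root_rot, last = parse_channels(
    "CHANNELS 6 Xposition Yposition Zposition Zrotation Xrotation Yrotation".split(), -1)
knee_channel, knee_rot, last = parse_channels("CHANNELS 1 Xrotation".split(), last)
print(root_channel, root_rot)  # [0, 1, 2, 4, 5, 3] [2, 0, 1]
print(knee_channel, knee_rot)  # [-1, -1, -1, 6, -1, -1] [0, None, None]

motion_line = "1.0 2.0 3.0 90.0 0.0 0.0 45.0".split()
print(read_frame(motion_line, knee_channel))  # only rx is non-zero (45 degrees, in radians)
```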

607 |

608 | def bvh_node_dict2objects(context, bvh_name, bvh_nodes, rotate_mode='NATIVE', frame_start=1, IMPORT_LOOP=False):

609 |

610 | if frame_start < 1:

611 | frame_start = 1

612 |

613 | scene = context.scene

614 | for obj in scene.objects:

615 | obj.select_set(False)

616 |

617 | objects = []

618 |

619 | def add_ob(name):

620 | obj = bpy.data.objects.new(name, None)

621 | context.collection.objects.link(obj)

622 | objects.append(obj)

623 | obj.select_set(True)

624 |

625 | # nicer drawing.

626 | obj.empty_display_type = 'CUBE'

627 | obj.empty_display_size = 0.1

628 |

629 | return obj

630 |

631 | # Add objects

632 | for name, bvh_node in bvh_nodes.items():

633 | bvh_node.temp = add_ob(name)

634 | bvh_node.temp.rotation_mode = bvh_node.rot_order_str[::-1]

635 |

636 | # Parent the objects

637 | for bvh_node in bvh_nodes.values():

638 | for bvh_node_child in bvh_node.children:

639 | bvh_node_child.temp.parent = bvh_node.temp

640 |

641 | # Offset

642 | for bvh_node in bvh_nodes.values():

643 | # Make relative to parents offset

644 | bvh_node.temp.location = bvh_node.rest_head_local

645 |

646 | # Add tail objects

647 | for name, bvh_node in bvh_nodes.items():

648 | if not bvh_node.children:

649 | ob_end = add_ob(name + '_end')

650 | ob_end.parent = bvh_node.temp

651 | ob_end.location = bvh_node.rest_tail_world - bvh_node.rest_head_world

652 |

653 | for name, bvh_node in bvh_nodes.items():

654 | obj = bvh_node.temp

655 |

656 | for frame_current in range(len(bvh_node.anim_data)):

657 |

658 | lx, ly, lz, rx, ry, rz = bvh_node.anim_data[frame_current]

659 |

660 | if bvh_node.has_loc:

661 | obj.delta_location = Vector((lx, ly, lz)) - bvh_node.rest_head_world

662 | obj.keyframe_insert("delta_location", index=-1, frame=frame_start + frame_current)

663 |

664 | if bvh_node.has_rot:

665 | obj.delta_rotation_euler = rx, ry, rz

666 | obj.keyframe_insert("delta_rotation_euler", index=-1, frame=frame_start + frame_current)

667 |

668 | return objects

669 |

670 |

671 | def bvh_node_dict2armature(

672 | context,

673 | bvh_name,

674 | bvh_nodes,

675 | bvh_frame_time,

676 | rotate_mode='XYZ',

677 | frame_start=1,

678 | IMPORT_LOOP=False,

679 | global_matrix=None,

680 | use_fps_scale=False,

681 | ):

682 |

683 | if frame_start < 1:

684 | frame_start = 1

685 |

686 | # Add the new armature,

687 | scene = context.scene

688 | for obj in scene.objects:

689 | obj.select_set(False)

690 |

691 | arm_data = bpy.data.armatures.new(bvh_name)

692 | arm_ob = bpy.data.objects.new(bvh_name, arm_data)

693 |

694 | context.collection.objects.link(arm_ob)

695 |

696 | arm_ob.select_set(True)

697 | context.view_layer.objects.active = arm_ob

698 |

699 | bpy.ops.object.mode_set(mode='OBJECT', toggle=False)

700 | bpy.ops.object.mode_set(mode='EDIT', toggle=False)

701 |

702 | bvh_nodes_list = sorted_nodes(bvh_nodes)

703 |

704 | # Get the average bone length for zero length bones, we may not use this.

705 | average_bone_length = 0.0

706 | nonzero_count = 0

707 | for bvh_node in bvh_nodes_list:

708 | l = (bvh_node.rest_head_local - bvh_node.rest_tail_local).length

709 | if l:

710 | average_bone_length += l

711 | nonzero_count += 1

712 |

713 | # Very rare cases all bones could be zero length???

714 | if not average_bone_length:

715 | average_bone_length = 0.1

716 | else:

717 | # Normal operation

718 | average_bone_length = average_bone_length / nonzero_count

719 |

720 | # A new armature should have no bones, but remove any that exist.

721 | while arm_data.edit_bones:

722 | arm_data.edit_bones.remove(arm_data.edit_bones[-1])

723 |

724 | ZERO_AREA_BONES = []

725 | for bvh_node in bvh_nodes_list:

726 |

727 | # New editbone

728 | bone = bvh_node.temp = arm_data.edit_bones.new(bvh_node.name)

729 |

730 | bone.head = bvh_node.rest_head_world

731 | bone.tail = bvh_node.rest_tail_world

732 |

733 | # Zero Length Bones! (an exceptional case)

734 | if (bone.head - bone.tail).length < 0.001:

735 | print("\tzero length bone found:", bone.name)

736 | if bvh_node.parent:

737 | ofs = bvh_node.parent.rest_head_local - bvh_node.parent.rest_tail_local

738 | if ofs.length: # is our parent zero length also?? unlikely

739 | bone.tail = bone.tail - ofs

740 | else:

741 | bone.tail.y = bone.tail.y + average_bone_length

742 | else:

743 | bone.tail.y = bone.tail.y + average_bone_length

744 |

745 | ZERO_AREA_BONES.append(bone.name)

746 |

747 | for bvh_node in bvh_nodes_list:

748 | if bvh_node.parent:

749 | # bvh_node.temp is the Editbone

750 |

751 | # Set the bone parent

752 | bvh_node.temp.parent = bvh_node.parent.temp

753 |

754 | # Set the connection state

755 | if(

756 | (not bvh_node.has_loc) and

757 | (bvh_node.parent.temp.name not in ZERO_AREA_BONES) and

758 | (bvh_node.parent.rest_tail_local == bvh_node.rest_head_local)

759 | ):

760 | bvh_node.temp.use_connect = True

761 |

762 | # Replace the editbone with the editbone name,

763 | # to avoid memory errors accessing the editbone outside editmode

764 | for bvh_node in bvh_nodes_list:

765 | bvh_node.temp = bvh_node.temp.name

766 |

767 | # Now Apply the animation to the armature

768 |

769 | # Get armature animation data

770 | bpy.ops.object.mode_set(mode='OBJECT', toggle=False)

771 |

772 | pose = arm_ob.pose

773 | pose_bones = pose.bones

774 |

775 | if rotate_mode == 'NATIVE':

776 | for bvh_node in bvh_nodes_list:

777 | bone_name = bvh_node.temp # may not be the same name as the bvh_node, could have been shortened.

778 | pose_bone = pose_bones[bone_name]

779 | pose_bone.rotation_mode = bvh_node.rot_order_str

780 |

781 | elif rotate_mode != 'QUATERNION':

782 | for pose_bone in pose_bones:

783 | pose_bone.rotation_mode = rotate_mode

784 | else:

785 | # Quats default

786 | pass

787 |

788 | context.view_layer.update()

789 |

790 | arm_ob.animation_data_create()

791 | action = bpy.data.actions.new(name=bvh_name)

792 | arm_ob.animation_data.action = action

793 |

794 | # Replace bvh_node.temp (currently the bone name)

795 | # with a tuple (pose_bone, armature_bone, bone_rest_matrix, bone_rest_matrix_inv).

796 | num_frame = 0

797 | for bvh_node in bvh_nodes_list:

798 | bone_name = bvh_node.temp # may not be the same name as the bvh_node, could have been shortened.

799 | pose_bone = pose_bones[bone_name]

800 | rest_bone = arm_data.bones[bone_name]

801 | bone_rest_matrix = rest_bone.matrix_local.to_3x3()

802 |

803 | bone_rest_matrix_inv = Matrix(bone_rest_matrix)

804 | bone_rest_matrix_inv.invert()

805 |

806 | bone_rest_matrix_inv.resize_4x4()

807 | bone_rest_matrix.resize_4x4()

808 | bvh_node.temp = (pose_bone, rest_bone, bone_rest_matrix, bone_rest_matrix_inv)

809 |

810 | if 0 == num_frame:

811 | num_frame = len(bvh_node.anim_data)

812 |

813 | # Choose to skip some frames at the beginning. Frame 0 is the rest pose

814 | # used internally by this importer. Frame 1, by convention, is also often

815 | # the rest pose of the skeleton exported by the motion capture system.

816 | skip_frame = 1

817 | if num_frame > skip_frame:

818 | num_frame = num_frame - skip_frame

819 |

820 | # Create a shared time axis for all animation curves.

821 | time = [float(frame_start)] * num_frame

822 | if use_fps_scale:

823 | dt = scene.render.fps / scene.render.fps_base * bvh_frame_time # effective scene rate, as in _update_scene_duration()

824 | for frame_i in range(1, num_frame):

825 | time[frame_i] += float(frame_i) * dt

826 | else:

827 | for frame_i in range(1, num_frame):

828 | time[frame_i] += float(frame_i)

829 |

830 | # print("bvh_frame_time = %f, dt = %f, num_frame = %d"

831 | # % (bvh_frame_time, dt, num_frame))

832 |

833 | for i, bvh_node in enumerate(bvh_nodes_list):

834 | pose_bone, bone, bone_rest_matrix, bone_rest_matrix_inv = bvh_node.temp

835 |

836 | if bvh_node.has_loc:

837 | # Not sure if there is a way to query this or access it in the

838 | # PoseBone structure.

839 | data_path = 'pose.bones["%s"].location' % pose_bone.name

840 |

841 | location = [(0.0, 0.0, 0.0)] * num_frame

842 | for frame_i in range(num_frame):

843 | bvh_loc = bvh_node.anim_data[frame_i + skip_frame][:3]

844 |

845 | bone_translate_matrix = Matrix.Translation(

846 | Vector(bvh_loc) - bvh_node.rest_head_local)

847 | location[frame_i] = (bone_rest_matrix_inv @

848 | bone_translate_matrix).to_translation()

849 |

850 | # For each location x, y, z.

851 | for axis_i in range(3):

852 | curve = action.fcurves.new(data_path=data_path, index=axis_i, action_group=bvh_node.name)

853 | keyframe_points = curve.keyframe_points

854 | keyframe_points.add(num_frame)

855 |

856 | for frame_i in range(num_frame):

857 | keyframe_points[frame_i].co = (

858 | time[frame_i],

859 | location[frame_i][axis_i],

860 | )

861 |

862 | if bvh_node.has_rot:

863 | data_path = None

864 | rotate = None

865 |

866 | if 'QUATERNION' == rotate_mode:

867 | rotate = [(1.0, 0.0, 0.0, 0.0)] * num_frame

868 | data_path = ('pose.bones["%s"].rotation_quaternion'

869 | % pose_bone.name)

870 | else:

871 | rotate = [(0.0, 0.0, 0.0)] * num_frame

872 | data_path = ('pose.bones["%s"].rotation_euler' %

873 | pose_bone.name)

874 |

875 | prev_euler = Euler((0.0, 0.0, 0.0))

876 | for frame_i in range(num_frame):

877 | bvh_rot = bvh_node.anim_data[frame_i + skip_frame][3:]

878 |

879 | # apply rotation order and convert to XYZ

880 | # note that the rot_order_str is reversed.

881 | euler = Euler(bvh_rot, bvh_node.rot_order_str[::-1])

882 | bone_rotation_matrix = euler.to_matrix().to_4x4()

883 | bone_rotation_matrix = (

884 | bone_rest_matrix_inv @

885 | bone_rotation_matrix @

886 | bone_rest_matrix

887 | )

888 |

889 | if len(rotate[frame_i]) == 4:

890 | rotate[frame_i] = bone_rotation_matrix.to_quaternion()

891 | else:

892 | rotate[frame_i] = bone_rotation_matrix.to_euler(

893 | pose_bone.rotation_mode, prev_euler)

894 | prev_euler = rotate[frame_i]

895 |

896 | # For each euler angle x, y, z (or quaternion w, x, y, z).

897 | for axis_i in range(len(rotate[0])):

898 | curve = action.fcurves.new(data_path=data_path, index=axis_i, action_group=bvh_node.name)

899 | keyframe_points = curve.keyframe_points

900 | keyframe_points.add(num_frame)

901 |

902 | for frame_i in range(num_frame):

903 | keyframe_points[frame_i].co = (

904 | time[frame_i],

905 | rotate[frame_i][axis_i],

906 | )

907 |

908 | for cu in action.fcurves:

909 | if IMPORT_LOOP:

910 | pass # TODO: cyclic playback (use_cyclic) is not implemented.

911 |

912 | for bez in cu.keyframe_points:

913 | bez.interpolation = 'LINEAR'

914 |

915 | # finally apply matrix

916 | # global_matrix defaults to None, which means no extra transform.

917 | if global_matrix is not None:

918 | arm_ob.matrix_world = global_matrix

919 |

920 | bpy.ops.object.transform_apply(location=False, rotation=True, scale=False)

921 |

922 | return arm_ob

923 |
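`bvh_node_dict2armature()` puts all keyframes on a shared time axis. Frames are spaced one Blender frame apart, or, with `use_fps_scale`, `scene_fps * bvh_frame_time` frames apart. Below is a minimal standalone sketch of that arithmetic. `keyframe_times` is a hypothetical helper, and `scene_fps` is assumed to be the effective rate `scene.render.fps / scene.render.fps_base`, the rate `_update_scene_duration()` uses.

```python
def keyframe_times(num_frame, frame_start, bvh_frame_time, scene_fps, use_fps_scale):
    """Blender frame number of each imported BVH frame.

    With use_fps_scale, one BVH frame lasts bvh_frame_time seconds, which is
    scene_fps * bvh_frame_time Blender frames; otherwise frames map 1:1.
    """
    step = scene_fps * bvh_frame_time if use_fps_scale else 1.0
    return [float(frame_start) + i * step for i in range(num_frame)]


# A 120 Hz capture (Frame Time: 0.008333...) placed in a 30 fps scene.
fps, fps_base = 30, 1.0
print(keyframe_times(4, 1, 1.0 / 120.0, fps / fps_base, use_fps_scale=True))
# approximately [1.0, 1.25, 1.5, 1.75]
print(keyframe_times(4, 1, 1.0 / 120.0, fps / fps_base, use_fps_scale=False))
# [1.0, 2.0, 3.0, 4.0]
```

With scaling, four capture frames occupy less than one second of scene time, as they should. Without it, the motion plays four times slower at 30 fps.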

924 |

925 | def load(

926 | context,

927 | bvh_str,

928 | *,

929 | target='ARMATURE',

930 | rotate_mode='NATIVE',

931 | global_scale=1.0,

932 | use_cyclic=False,

933 | frame_start=1,

934 | global_matrix=None,

935 | use_fps_scale=False,

936 | update_scene_fps=False,

937 | update_scene_duration=False,

938 | report=print,

939 | ):

940 | import time

941 | t1 = time.time()

942 |

943 | bvh_nodes, bvh_frame_time, bvh_frame_count = read_bvh(

944 | context, bvh_str,

945 | rotate_mode=rotate_mode,

946 | global_scale=global_scale,

947 | )

948 |

949 | print("%.4f" % (time.time() - t1))

950 |

951 | scene = context.scene

952 | frame_orig = scene.frame_current

953 |

954 | # Broken BVH handling: guess frame rate when it is not contained in the file.

955 | if bvh_frame_time is None:

956 | report(

957 | {'WARNING'},

958 | "The BVH file does not contain frame duration in its MOTION "

959 | "section, assuming the BVH and Blender scene have the same "

960 | "frame rate"

961 | )

962 | bvh_frame_time = scene.render.fps_base / scene.render.fps

963 | # No need to scale the frame rate, as they're equal now anyway.

964 | use_fps_scale = False

965 |

966 | if update_scene_fps:

967 | _update_scene_fps(context, report, bvh_frame_time)

968 |

969 | # Now that we have a 1-to-1 mapping of Blender frames and BVH frames, there is no need

970 | # to scale the FPS any more. It's even better not to, to prevent roundoff errors.

971 | use_fps_scale = False

972 |

973 | if update_scene_duration:

974 | _update_scene_duration(context, report, bvh_frame_count, bvh_frame_time, frame_start, use_fps_scale)

975 |

976 | t1 = time.time()

977 | print("\timporting to blender...", end="")

978 |

979 | bvh_name = bpy.path.display_name_from_filepath('synsized')

980 |

981 | if target == 'ARMATURE':

982 | bvh_node_dict2armature(

983 | context, bvh_name, bvh_nodes, bvh_frame_time,

984 | rotate_mode=rotate_mode,

985 | frame_start=frame_start,

986 | IMPORT_LOOP=use_cyclic,

987 | global_matrix=global_matrix,

988 | use_fps_scale=use_fps_scale,

989 | )

990 |

991 | elif target == 'OBJECT':

992 | bvh_node_dict2objects(

993 | context, bvh_name, bvh_nodes,

994 | rotate_mode=rotate_mode,

995 | frame_start=frame_start,

996 | IMPORT_LOOP=use_cyclic,

997 | # global_matrix=global_matrix, # TODO

998 | )

999 |

1000 | else:

1001 | report({'ERROR'}, "Invalid target %r (must be 'ARMATURE' or 'OBJECT')" % target)

1002 | return {'CANCELLED'}

1003 |

1004 | print('Done in %.4f\n' % (time.time() - t1))

1005 |

1006 | context.scene.frame_set(frame_orig)

1007 |

1008 | return {'FINISHED'}

1009 |

1010 |

1011 | def _update_scene_fps(context, report, bvh_frame_time):

1012 | """Update the scene's FPS settings from the BVH, but only if the BVH contains enough info."""

1013 |

1014 | # Broken BVH handling: prevent division by zero.

1015 | if bvh_frame_time == 0.0:

1016 | report(

1017 | {'WARNING'},

1018 | "Unable to update scene frame rate, as the BVH file "

1019 | "contains a zero frame duration in its MOTION section",

1020 | )

1021 | return

1022 |

1023 | scene = context.scene

1024 | scene_fps = scene.render.fps / scene.render.fps_base

1025 | new_fps = 1.0 / bvh_frame_time

1026 |

1027 | if scene.render.fps != new_fps or scene.render.fps_base != 1.0:

1028 | print("\tupdating scene FPS (was %f) to BVH FPS (%f)" % (scene_fps, new_fps))

1029 | scene.render.fps = int(round(new_fps))

1030 | scene.render.fps_base = scene.render.fps / new_fps

1031 |
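`_update_scene_fps()` splits the BVH rate `1 / bvh_frame_time` into Blender's integer `fps` and a float `fps_base` divisor. Below is a standalone sketch of the same arithmetic with a worked value. `fps_settings_from_frame_time` is a hypothetical helper. Two checks are additions not present in the original, and both are marked in comments: rejecting negative frame times, and clamping `fps` to at least 1.

```python
def fps_settings_from_frame_time(bvh_frame_time):
    """Return (fps, fps_base) with fps / fps_base == 1 / bvh_frame_time.

    Mirrors the arithmetic of _update_scene_fps(): Blender stores the rate as an
    integer fps plus a float fps_base divisor that absorbs the fractional part.
    """
    # The original only guards against zero; rejecting negatives is an addition.
    if bvh_frame_time <= 0.0:
        raise ValueError("BVH frame time must be positive")
    new_fps = 1.0 / bvh_frame_time
    # max(1, ...) is an added guard (not in _update_scene_fps) for rates below 0.5 fps.
    fps = max(1, int(round(new_fps)))
    fps_base = fps / new_fps
    return fps, fps_base


# Many BVH files round 1/30 s to "Frame Time: 0.0333333".
fps, fps_base = fps_settings_from_frame_time(0.0333333)
print(fps, fps_base)  # 30 and an fps_base just below 1.0
print(fps / fps_base)  # effective rate, about 30.00003
```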

1032 |

1033 | def _update_scene_duration(

1034 | context, report, bvh_frame_count, bvh_frame_time, frame_start,

1035 | use_fps_scale):

1036 | """Extend the scene's duration so that the BVH file fits in its entirety."""

1037 |

1038 | if bvh_frame_count is None:

1039 | report(

1040 | {'WARNING'},

1041 | "Unable to extend the scene duration, as the BVH file does not "

1042 | "contain the number of frames in its MOTION section",

1043 | )

1044 | return

1045 |

1046 | # Not likely, but it can happen when a BVH is just used to store an armature.

1047 | if bvh_frame_count == 0:

1048 | return

1049 |

1050 | if use_fps_scale:

1051 | scene_fps = context.scene.render.fps / context.scene.render.fps_base

1052 | scaled_frame_count = int(ceil(bvh_frame_count * bvh_frame_time * scene_fps))

1053 | bvh_last_frame = frame_start + scaled_frame_count

1054 | else:

1055 | bvh_last_frame = frame_start + bvh_frame_count

1056 |

1057 | # Only extend the scene, never shorten it.

1058 | if context.scene.frame_end < bvh_last_frame:

1059 | context.scene.frame_end = bvh_last_frame

1060 |

1061 |

1062 | # This function is from

1063 | # https://github.com/yuki-koyama/blender-cli-rendering

1064 | def set_smooth_shading(mesh: bpy.types.Mesh) -> None:

1065 | for polygon in mesh.polygons:

1066 | polygon.use_smooth = True

1067 |

1068 |

1069 | # This function is from

1070 | # https://github.com/yuki-koyama/blender-cli-rendering

1071 | def create_mesh_from_pydata(scene: bpy.types.Scene,

1072 | vertices: Iterable[Iterable[float]],

1073 | faces: Iterable[Iterable[int]],

1074 | mesh_name: str,

1075 | object_name: str,

1076 | use_smooth: bool = True) -> bpy.types.Object:

1077 | # Add a new mesh and set vertices and faces

1078 | # Note: In this case, edges do not need to be set.

1079 | # Note: After manipulating mesh data, update() needs to be called.

1080 | new_mesh: bpy.types.Mesh = bpy.data.meshes.new(mesh_name)

1081 | new_mesh.from_pydata(vertices, [], faces)

1082 | new_mesh.update()

1083 | if use_smooth:

1084 | set_smooth_shading(new_mesh)

1085 |

1086 | new_object: bpy.types.Object = bpy.data.objects.new(object_name, new_mesh)

1087 | scene.collection.objects.link(new_object)

1088 |

1089 | return new_object

1090 |

1091 |

1092 | # This function is from

1093 | # https://github.com/yuki-koyama/blender-cli-rendering

1094 | def add_subdivision_surface_modifier(mesh_object: bpy.types.Object, level: int, is_simple: bool = False) -> None:

1095 | '''

1096 | https://docs.blender.org/api/current/bpy.types.SubsurfModifier.html

1097 | '''

1098 |

1099 | modifier: bpy.types.SubsurfModifier = mesh_object.modifiers.new(name="Subsurf", type='SUBSURF')

1100 |

1101 | modifier.levels = level

1102 | modifier.render_levels = level

1103 | modifier.subdivision_type = 'SIMPLE' if is_simple else 'CATMULL_CLARK'

1104 |

1105 |

1106 | # This function is from

1107 | # https://github.com/yuki-koyama/blender-cli-rendering

1108 | def create_armature_mesh(scene: bpy.types.Scene, armature_object: bpy.types.Object, mesh_name: str) -> bpy.types.Object:

1109 | assert armature_object.type == 'ARMATURE', 'armature_object must be an armature object'

1110 | assert len(armature_object.data.bones) != 0, 'the armature has no bones'

1111 |

1112 | def add_rigid_vertex_group(target_object: bpy.types.Object, name: str, vertex_indices: Iterable[int]) -> None:

1113 | new_vertex_group = target_object.vertex_groups.new(name=name)

1114 | for vertex_index in vertex_indices:

1115 | new_vertex_group.add([vertex_index], 1.0, 'REPLACE')

1116 |

1117 | def generate_bone_mesh_pydata(radius: float, length: float) -> Tuple[List[mathutils.Vector], List[List[int]]]:

1118 | base_radius = radius

1119 | top_radius = 0.5 * radius

1120 |

1121 | vertices = [

1122 | # Cross section of the base part

1123 | mathutils.Vector((-base_radius, 0.0, +base_radius)),

1124 | mathutils.Vector((+base_radius, 0.0, +base_radius)),

1125 | mathutils.Vector((+base_radius, 0.0, -base_radius)),

1126 | mathutils.Vector((-base_radius, 0.0, -base_radius)),

1127 |

1128 | # Cross section of the top part

1129 | mathutils.Vector((-top_radius, length, +top_radius)),

1130 | mathutils.Vector((+top_radius, length, +top_radius)),

1131 | mathutils.Vector((+top_radius, length, -top_radius)),

1132 | mathutils.Vector((-top_radius, length, -top_radius)),

1133 |

1134 | # End points

1135 | mathutils.Vector((0.0, -base_radius, 0.0)),

1136 | mathutils.Vector((0.0, length + top_radius, 0.0))

1137 | ]

1138 |

1139 | faces = [

1140 | # End point for the base part

1141 | [8, 1, 0],

1142 | [8, 2, 1],

1143 | [8, 3, 2],

1144 | [8, 0, 3],

1145 |

1146 | # End point for the top part

1147 | [9, 4, 5],

1148 | [9, 5, 6],

1149 | [9, 6, 7],

1150 | [9, 7, 4],

1151 |

1152 | # Side faces

1153 | [0, 1, 5, 4],

1154 | [1, 2, 6, 5],

1155 | [2, 3, 7, 6],

1156 | [3, 0, 4, 7],

1157 | ]

1158 |

1159 | return vertices, faces

1160 |

1161 | armature_data: bpy.types.Armature = armature_object.data

1162 |

1163 | vertices: List[mathutils.Vector] = []

1164 | faces: List[List[int]] = []

1165 | vertex_groups: List[Dict[str, Any]] = []

1166 |

1167 | for bone in armature_data.bones:

1168 | radius = 0.10 * (0.10 + bone.length)

1169 | temp_vertices, temp_faces = generate_bone_mesh_pydata(radius, bone.length)

1170 |

1171 | vertex_index_offset = len(vertices)

1172 |

1173 | temp_vertex_group = {'name': bone.name, 'vertex_indices': []}

1174 | for local_index, vertex in enumerate(temp_vertices):

1175 | vertices.append(bone.matrix_local @ vertex)

1176 | temp_vertex_group['vertex_indices'].append(local_index + vertex_index_offset)

1177 | vertex_groups.append(temp_vertex_group)

1178 |

1179 | for face in temp_faces:

1180 | if len(face) == 3:

1181 | faces.append([

1182 | face[0] + vertex_index_offset,

1183 | face[1] + vertex_index_offset,

1184 | face[2] + vertex_index_offset,

1185 | ])

1186 | else:

1187 | faces.append([

1188 | face[0] + vertex_index_offset,

1189 | face[1] + vertex_index_offset,

1190 | face[2] + vertex_index_offset,

1191 | face[3] + vertex_index_offset,

1192 | ])

1193 |

1194 | new_object = create_mesh_from_pydata(scene, vertices, faces, mesh_name, mesh_name)

1195 | new_object.matrix_world = armature_object.matrix_world

1196 |

1197 | for vertex_group in vertex_groups:

1198 | add_rigid_vertex_group(new_object, vertex_group['name'], vertex_group['vertex_indices'])

1199 |

1200 | armature_modifier = new_object.modifiers.new('Armature', 'ARMATURE')

1201 | armature_modifier.object = armature_object

1202 | armature_modifier.use_vertex_groups = True

1203 |

1204 | add_subdivision_surface_modifier(new_object, 1, is_simple=True)

1205 | add_subdivision_surface_modifier(new_object, 2, is_simple=False)

1206 |

1207 | # Set the armature as the parent of the new object

1208 | bpy.ops.object.select_all(action='DESELECT')

1209 | new_object.select_set(True)

1210 | armature_object.select_set(True)

1211 | bpy.context.view_layer.objects.active = armature_object

1212 | bpy.ops.object.parent_set(type='OBJECT')

1213 |

1214 | return new_object
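

# Illustrative sketch (hypothetical helper, not part of the original add-on):
# create_armature_mesh() builds one small mesh per bone and appends it to a
# shared vertex list. Each bone's local face indices therefore have to be
# shifted by the number of vertices already collected (vertex_index_offset).
# Below is the same idea in plain Python, without Blender types and for
# polygons of any size. It also returns the global vertex indices owned by
# each part, which is the information the rigid per-bone vertex groups use.
from typing import List, Sequence, Tuple


def _merge_pydata(parts: Sequence[Tuple[Sequence[Sequence[float]], Sequence[Sequence[int]]]]
                  ) -> Tuple[List[Sequence[float]], List[List[int]], List[List[int]]]:
    vertices: List[Sequence[float]] = []
    faces: List[List[int]] = []
    groups: List[List[int]] = []
    for part_vertices, part_faces in parts:
        offset = len(vertices)  # first global index of this part
        vertices.extend(part_vertices)
        groups.append(list(range(offset, offset + len(part_vertices))))
        # Shift every corner index of every face by the same offset.
        faces.extend([index + offset for index in face] for face in part_faces)
    return vertices, faces, groups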



class OP_AddMesh(bpy.types.Operator):

    bl_idname = "genmm.add_mesh"
    bl_label = "Add mesh"
    bl_description = ""
    bl_options = {"REGISTER", "UNDO"}

    def __init__(self) -> None:
        super().__init__()

    def execute(self, context: bpy.types.Context):
        # Build a bone-shaped proxy mesh for the active armature object
        name = context.object.name + "_proxy"
        create_armature_mesh(context.scene, context.object, name)

        return {'FINISHED'}
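

# Illustrative sketch (hypothetical helpers, not part of the original add-on):
# OP_RunSynthesis first resolves the frame range. A setting of -1 is taken to
# mean "keep the action's own start/end frame", as the operator's
# conditionals imply. It then splits the exported BVH text at its "MOTION"
# line into three parts: the hierarchy header, the "Frames:" and
# "Frame Time:" lines, and one row of channel values per frame. Both steps
# are reproduced here in plain Python so they can be checked outside
# Blender. The function names are illustrative only.
from typing import List, Tuple


def _resolve_frame_range(action_range: Tuple[float, float],
                         start_override: int = -1,
                         end_override: int = -1) -> Tuple[int, int]:
    start, end = map(int, action_range)
    # -1 keeps the action's value; any other value replaces it.
    start = start if start_override == -1 else start_override
    end = end if end_override == -1 else end_override
    return start, end


def _split_bvh_motion(bvh_str: str) -> Tuple[str, str, str, List[List[float]]]:
    header, motion_section = bvh_str.split('MOTION\n', 1)
    # Drop empty lines such as the one left by a trailing newline.
    lines = [line for line in motion_section.split('\n') if line.strip()]
    frames_line, frame_time_line = lines[0], lines[1]
    # str.split() with no argument tolerates repeated spaces between values.
    rows = [[float(value) for value in line.split()] for line in lines[2:]]
    return header, frames_line, frame_time_line, rows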



class OP_RunSynthesis(bpy.types.Operator):

    bl_idname = "genmm.run_synthesis"
    bl_label = "Run synthesis"
    bl_description = ""
    bl_options = {"REGISTER", "UNDO"}

    def __init__(self) -> None:
        super().__init__()

    def execute(self, context: bpy.types.Context):
        setting = context.scene.setting

        # Use the action's frame range unless an explicit start/end frame is set (-1 means "not set")
        anim = context.object.animation_data.action
        start_frame, end_frame = map(int, anim.frame_range)
        start_frame = start_frame if setting.start_frame == -1 else setting.start_frame
        end_frame = end_frame if setting.end_frame == -1 else setting.end_frame

        # Export the selected frames as BVH text and parse the per-frame rows of the MOTION section
        bvh_str = get_bvh_data(context, frame_start=start_frame, frame_end=end_frame)
        frames_str, frame_time_str = bvh_str.split('MOTION\n')[1].split('\n')[:2]
        motion_data_str = bvh_str.split('MOTION\n')[1].split('\n')[2:-1]
        motion_data = np.array([item.strip().split() for item in motion_data_str], dtype=np.float32)

        motion = [BlenderMotion(motion_data, repr='repr6d', use_velo=True, keep_up_pos=True, up_axis=setting.up_axis, padding_last=False)]
        model = GenMM(device='cuda' if torch.cuda.is_available() else 'cpu', silent=True)
        criteria = PatchCoherentLoss(patch_size=setting.patch_size,
                                     alpha=setting.alpha,
                                     loop=setting.loop, cache=True)

        syn = model.run(motion, criteria,
                        num_frames=str(setting.num_syn_frames),
                        num_steps=setting.num_steps,
                        noise_sigma=setting.noise,
                        patch_size=setting.patch_size,
                        coarse_ratio=f'{setting.coarse_ratio}x_nframes',
                        pyr_factor=setting.pyr_factor)
        motion_data_str = [' '.join(str(x) for x in item) for item in motion[0].parse(syn)]

        # Reassemble the BVH lines (original header followed by the synthesized rows) and pass them to load()
        load(context, bvh_str.split('MOTION\n')[0].split('\n')+['MOTION']+[frames_str]+[frame_time_str]+motion_data_str)
        # name = bpy.context.object.name + "_proxy"