├── .gitignore

├── LICENSE

├── README.md

├── adaptive-aggregation-networks

├── README.md

├── main.py

├── models

│ ├── modified_linear.py

│ ├── modified_resnet.py

│ ├── modified_resnet_cifar.py

│ ├── modified_resnetmtl.py

│ ├── modified_resnetmtl_cifar.py

│ └── resnet_cifar.py

├── trainer

│ ├── __init__.py

│ ├── base_trainer.py

│ ├── incremental_icarl.py

│ ├── incremental_lucir.py

│ ├── trainer.py

│ └── zeroth_phase.py

└── utils

│ ├── __init__.py

│ ├── gpu_tools.py

│ ├── imagenet

│ ├── __init__.py

│ ├── train_and_eval.py

│ ├── utils_dataset.py

│ └── utils_train.py

│ ├── incremental

│ ├── __init__.py

│ ├── compute_accuracy.py

│ ├── compute_features.py

│ └── conv2d_mtl.py

│ ├── misc.py

│ └── process_fp.py

└── mnemonics-training

├── 1_train

├── main.py

├── models

│ ├── __init__.py

│ ├── modified_linear.py

│ ├── modified_resnet_cifar.py

│ └── modified_resnetmtl_cifar.py

├── trainer

│ ├── __init__.py

│ ├── baseline.py

│ ├── incremental.py

│ └── mnemonics.py

└── utils

│ ├── __init__.py

│ ├── compute_accuracy.py

│ ├── compute_features.py

│ ├── conv2d_mtl.py

│ ├── gpu_tools.py

│ ├── misc.py

│ ├── process_fp.py

│ └── process_mnemonics.py

├── 2_eval

├── README.md

├── main.py

├── models

│ ├── modified_linear.py

│ ├── modified_resnet.py

│ ├── modified_resnet_cifar.py

│ ├── modified_resnetmtl.py

│ ├── modified_resnetmtl_cifar.py

│ └── resnet_cifar.py

├── process_imagenet

│ ├── generate_imagenet.py

│ └── generate_imagenet_subset.py

├── run_eval.sh

├── script

│ └── download_ckpt.sh

├── trainer

│ ├── __init__.py

│ └── train.py

└── utils

│ ├── __init__.py

│ ├── gpu_tools.py

│ ├── imagenet

│ ├── __init__.py

│ ├── train_and_eval.py

│ ├── utils_dataset.py

│ └── utils_train.py

│ ├── incremental

│ ├── __init__.py

│ ├── compute_accuracy.py

│ ├── compute_confusion_matrix.py

│ ├── compute_features.py

│ └── conv2d_mtl.py

│ └── misc.py

└── README.md

/.gitignore:

--------------------------------------------------------------------------------

1 | # File types

2 | *.pyc

3 | *.npy

4 | *.tar.gz

5 | *.sh

6 | *.out

7 |

8 | # Folders

9 | data

10 | logs

11 | runs

12 | __pycache__

13 |

14 | # File

15 | .DS_Store

16 | bashrc

17 |

--------------------------------------------------------------------------------

/LICENSE:

--------------------------------------------------------------------------------

1 | MIT License

2 |

3 | Copyright (c) 2020-2021 Yaoyao Liu

4 |

5 | Permission is hereby granted, free of charge, to any person obtaining a copy

6 | of this software and associated documentation files (the "Software"), to deal

7 | in the Software without restriction, including without limitation the rights

8 | to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

9 | copies of the Software, and to permit persons to whom the Software is

10 | furnished to do so, subject to the following conditions:

11 |

12 | The above copyright notice and this permission notice shall be included in all

13 | copies or substantial portions of the Software.

14 |

15 | THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

16 | IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

17 | FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

18 | AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

19 | LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

20 | OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

21 | SOFTWARE.

22 |

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 | # Class-Incremental Learning

2 |

3 | [](https://github.com/yaoyao-liu/class-incremental-learning/blob/master/LICENSE)

4 | [](https://www.python.org/)

5 | [](https://pytorch.org/)

6 |

7 | ### Papers

8 |

9 | - Adaptive Aggregation Networks for Class-Incremental Learning,

10 | CVPR 2021. \[[PDF](https://openaccess.thecvf.com/content/CVPR2021/papers/Liu_Adaptive_Aggregation_Networks_for_Class-Incremental_Learning_CVPR_2021_paper.pdf)\] \[[Project Page](https://class-il.mpi-inf.mpg.de/)\]

11 |

12 | - Mnemonics Training: Multi-Class Incremental Learning without Forgetting,

13 | CVPR 2020. \[[PDF](https://arxiv.org/pdf/2002.10211.pdf)\] \[[Project Page](https://class-il.mpi-inf.mpg.de/mnemonics-training/)\]

14 |

15 | ### Citations

16 |

17 | Please cite our papers if they are helpful to your work:

18 |

19 | ```bibtex

20 | @inproceedings{Liu2020AANets,

21 | author = {Liu, Yaoyao and Schiele, Bernt and Sun, Qianru},

22 | title = {Adaptive Aggregation Networks for Class-Incremental Learning},

23 | booktitle = {The IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},

24 | pages = {2544-2553},

25 | year = {2021}

26 | }

27 | ```

28 |

29 | ```bibtex

30 | @inproceedings{liu2020mnemonics,

31 | author = {Liu, Yaoyao and Su, Yuting and Liu, An{-}An and Schiele, Bernt and Sun, Qianru},

32 | title = {Mnemonics Training: Multi-Class Incremental Learning without Forgetting},

33 | booktitle = {The IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},

34 | pages = {12245--12254},

35 | year = {2020}

36 | }

37 | ```

38 |

39 | ### Acknowledgements

40 |

41 | Our implementation uses the source code from the following repositories:

42 |

43 | * [Learning a Unified Classifier Incrementally via Rebalancing](https://github.com/hshustc/CVPR19_Incremental_Learning)

44 |

45 | * [iCaRL: Incremental Classifier and Representation Learning](https://github.com/srebuffi/iCaRL)

46 |

47 | * [Dataset Distillation](https://github.com/SsnL/dataset-distillation)

48 |

49 | * [Generative Teaching Networks](https://github.com/uber-research/GTN)

50 |

--------------------------------------------------------------------------------

/adaptive-aggregation-networks/README.md:

--------------------------------------------------------------------------------

1 | ## Adaptive Aggregation Networks for Class-Incremental Learning

2 |

3 | [](https://github.com/yaoyao-liu/class-incremental-learning/blob/master/LICENSE)

4 | [](https://www.python.org/)

5 | [](https://pytorch.org/)

6 |

9 |

10 | \[[PDF](https://openaccess.thecvf.com/content/CVPR2021/papers/Liu_Adaptive_Aggregation_Networks_for_Class-Incremental_Learning_CVPR_2021_paper.pdf)\] \[[Project Page](https://class-il.mpi-inf.mpg.de/)\] \[[GitLab@MPI](https://gitlab.mpi-klsb.mpg.de/yaoyaoliu/adaptive-aggregation-networks)\]

11 |

12 | #### Summary

13 |

14 | * [Introduction](#introduction)

15 | * [Getting Started](#getting-started)

16 | * [Download the Datasets](#download-the-datasets)

17 | * [Running Experiments](#running-experiments)

18 | * [Citation](#citation)

19 | * [Acknowledgements](#acknowledgements)

20 |

21 | ### Introduction

22 |

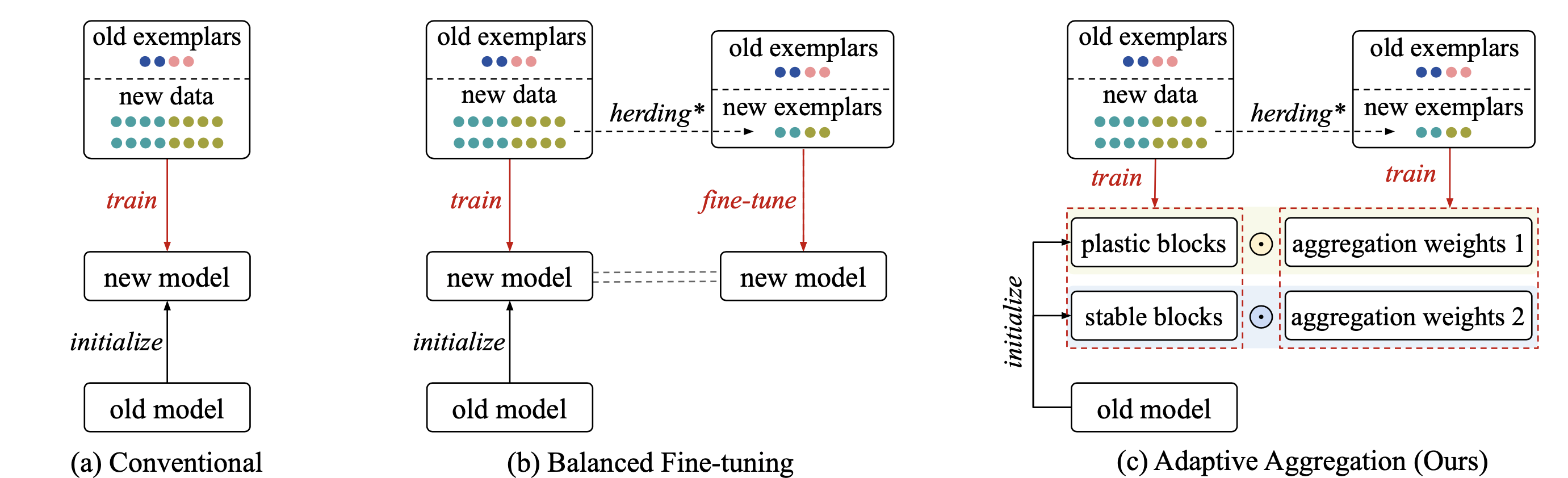

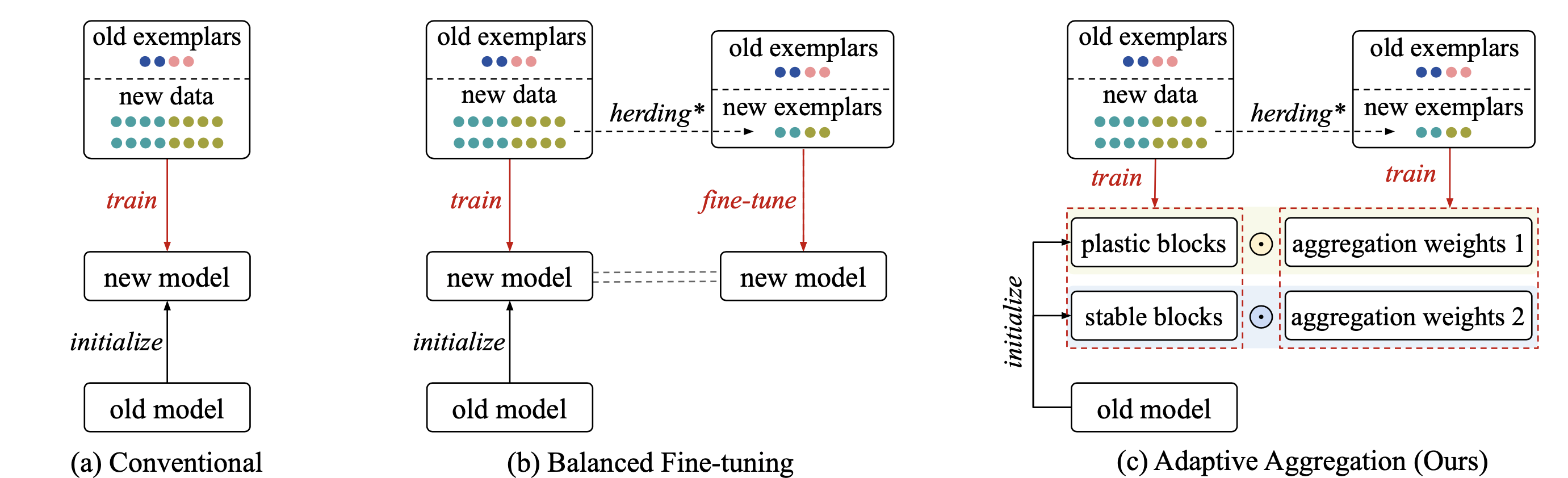

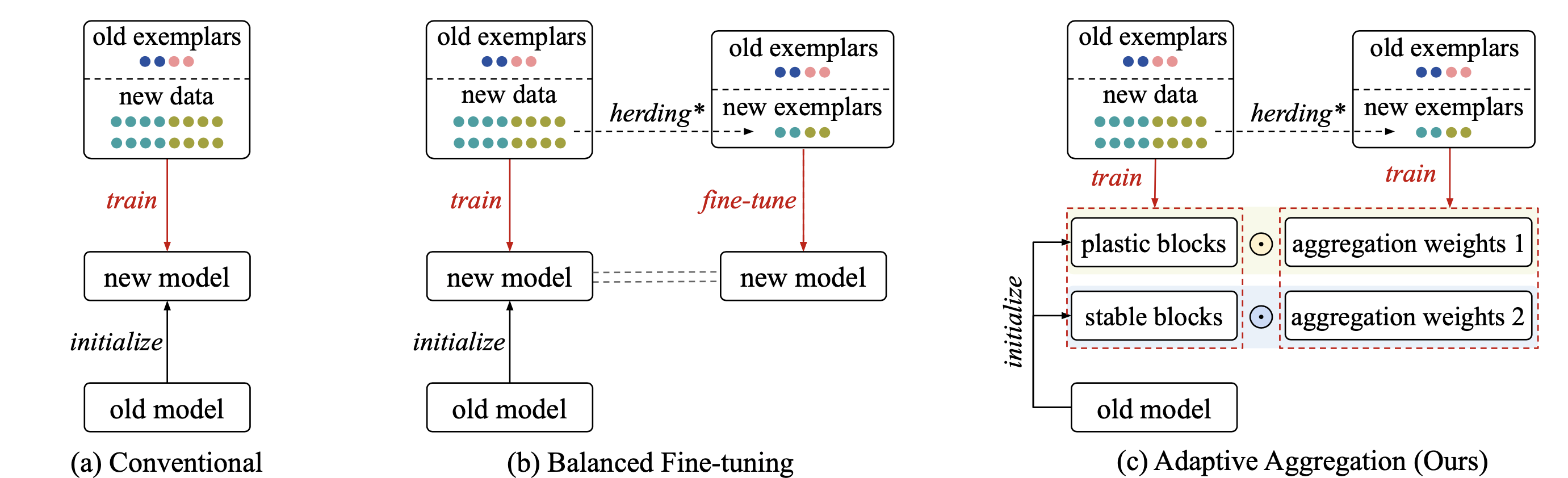

23 | Class-Incremental Learning (CIL) aims to learn a classification model with the number of classes increasing phase-by-phase. The inherent problem in CIL is the stability-plasticity dilemma between the learning of old and new classes, i.e., high-plasticity models easily forget old classes but high-stability models are weak to learn new classes. We alleviate this issue by proposing a novel network architecture called Adaptive Aggregation Networks (AANets) in which we explicitly build two residual blocks at each residual level (taking ResNet as the baseline architecture): a stable block and a plastic block. We aggregate the output feature maps from these two blocks and then feed the results to the next-level blocks. We meta-learn the aggregating weights in order to dynamically optimize and balance between two types of blocks, i.e., between stability and plasticity. We conduct extensive experiments on three CIL benchmarks: CIFAR-100, ImageNet-Subset, and ImageNet, and show that many existing CIL methods can be straightforwardly incorporated on the architecture of AANets to boost their performance.

24 |

25 |

26 |  27 |

27 |

28 |

29 | > Figure: Conceptual illustrations of different CIL methods. (a) Conventional methods use all available data (imbalanced classes) to train the model (Rebuffi et al., 2017; Hou et al., 2019) (b) Castro et al. (2018), Hou et al. (2019) and Douillard et al. (2020) follow the convention but add a fine-tuning step using the balanced set of exemplars. (c) Our AANets approach uses all available data to update the plastic and stable blocks, and use the balanced set of exemplars to meta-learn the aggregating weights. We continuously update these weights such as to dynamically balance between plastic and stable blocks, i.e., between plasticity and stability

30 |

31 | ### Getting Started

32 |

33 | In order to run this repository, we advise you to install python 3.6 and PyTorch 1.2.0 with Anaconda.

34 |

35 | You may download Anaconda and read the installation instruction on their official website:

36 |

37 |

38 | Create a new environment and install PyTorch and torchvision on it:

39 |

40 | ```bash

41 | conda create --name AANets-PyTorch python=3.6

42 | conda activate AANets-PyTorch

43 | conda install pytorch=1.2.0

44 | conda install torchvision -c pytorch

45 | ```

46 |

47 | Install other requirements:

48 | ```bash

49 | pip install tqdm scipy sklearn tensorboardX Pillow==6.2.2

50 | ```

51 |

52 | Clone this repository and enter the folder `adaptive-aggregation-networks`:

53 | ```bash

54 | git clone https://github.com/yaoyao-liu/class-incremental-learning.git

55 | cd class-incremental-learning/adaptive-aggregation-networks

56 |

57 | ```

58 |

59 | ### Download the Datasets

60 | #### CIFAR-100

61 | It will be downloaded automatically by `torchvision` when running the experiments.

62 |

63 | #### ImageNet-Subset

64 | We create the ImageNet-Subset following [LUCIR](https://github.com/hshustc/CVPR19_Incremental_Learning).

65 | You may download the dataset using the following links:

66 | - [Download from Google Drive](https://drive.google.com/file/d/1n5Xg7Iye_wkzVKc0MTBao5adhYSUlMCL/view?usp=sharing)

67 | - [Download from 百度网盘](https://pan.baidu.com/s/1MnhITYKUI1i7aRBzsPrCSw) (提取码: 6uj5)

68 |

69 | File information:

70 | ```

71 | File name: ImageNet-Subset.tar

72 | Size: 15.37 GB

73 | MD5: ab2190e9dac15042a141561b9ba5d6e9

74 | ```

75 | You need to untar the downloaded file, and put the folder `seed_1993_subset_100_imagenet` in `class-incremental-learning/adaptive-aggregation-networks/data`.

76 |

77 | Please note that the ImageNet-Subset is created from ImageNet. ImageNet is only allowed to be downloaded by researchers for non-commercial research and educational purposes. See the terms of ImageNet [here](https://image-net.org/download.php).

78 |

79 | ### Running Experiments

80 | #### Running Experiments w/ AANets on CIFAR-100

81 |

82 | [LUCIR](https://github.com/hshustc/CVPR19_Incremental_Learning) w/ AANets

83 | ```bash

84 | python main.py --nb_cl_fg=50 --nb_cl=10 --gpu=0 --random_seed=1993 --baseline=lucir --branch_mode=dual --branch_1=ss --branch_2=free --dataset=cifar100

85 | python main.py --nb_cl_fg=50 --nb_cl=5 --gpu=0 --random_seed=1993 --baseline=lucir --branch_mode=dual --branch_1=ss --branch_2=free --dataset=cifar100

86 | python main.py --nb_cl_fg=50 --nb_cl=2 --gpu=0 --random_seed=1993 --baseline=lucir --branch_mode=dual --branch_1=ss --branch_2=free --dataset=cifar100

87 | ```

88 |

89 | [iCaRL](https://github.com/hshustc/CVPR19_Incremental_Learning) w/ AANets

90 | ```bash

91 | python main.py --nb_cl_fg=50 --nb_cl=10 --gpu=0 --random_seed=1993 --baseline=icarl --branch_mode=dual --branch_1=ss --branch_2=free --dataset=cifar100

92 | python main.py --nb_cl_fg=50 --nb_cl=5 --gpu=0 --random_seed=1993 --baseline=icarl --branch_mode=dual --branch_1=ss --branch_2=free --dataset=cifar100

93 | python main.py --nb_cl_fg=50 --nb_cl=2 --gpu=0 --random_seed=1993 --baseline=icarl --branch_mode=dual --branch_1=ss --branch_2=free --dataset=cifar100

94 | ```

95 |

96 | #### Running Baseline Experiments on CIFAR-100

97 |

98 | [LUCIR](https://github.com/hshustc/CVPR19_Incremental_Learning) w/o AANets, dual branch

99 | ```bash

100 | python main.py --nb_cl_fg=50 --nb_cl=10 --gpu=0 --random_seed=1993 --baseline=lucir --branch_mode=dual --branch_1=free --branch_2=free --fusion_lr=0.0 --dataset=cifar100

101 | python main.py --nb_cl_fg=50 --nb_cl=5 --gpu=0 --random_seed=1993 --baseline=lucir --branch_mode=dual --branch_1=free --branch_2=free ---fusion_lr=0.0 -dataset=cifar100

102 | python main.py --nb_cl_fg=50 --nb_cl=2 --gpu=0 --random_seed=1993 --baseline=lucir --branch_mode=dual --branch_1=free --branch_2=free --fusion_lr=0.0 --dataset=cifar100

103 | ```

104 |

105 | [iCaRL](https://github.com/hshustc/CVPR19_Incremental_Learning) w/o AANets, dual branch

106 | ```bash

107 | python main.py --nb_cl_fg=50 --nb_cl=10 --gpu=0 --random_seed=1993 --baseline=icarl --branch_mode=dual --branch_1=free --branch_2=free --fusion_lr=0.0 --dataset=cifar100

108 | python main.py --nb_cl_fg=50 --nb_cl=5 --gpu=0 --random_seed=1993 --baseline=icarl --branch_mode=dual --branch_1=free --branch_2=free --fusion_lr=0.0 --dataset=cifar100

109 | python main.py --nb_cl_fg=50 --nb_cl=2 --gpu=0 --random_seed=1993 --baseline=icarl --branch_mode=dual --branch_1=free --branch_2=free --fusion_lr=0.0 --dataset=cifar100

110 | ```

111 |

112 | [LUCIR](https://github.com/hshustc/CVPR19_Incremental_Learning) w/o AANets, single branch

113 | ```bash

114 | python main.py --nb_cl_fg=50 --nb_cl=10 --gpu=0 --random_seed=1993 --baseline=lucir --branch_mode=single --branch_1=free --dataset=cifar100

115 | python main.py --nb_cl_fg=50 --nb_cl=5 --gpu=0 --random_seed=1993 --baseline=lucir --branch_mode=single --branch_1=free -dataset=cifar100

116 | python main.py --nb_cl_fg=50 --nb_cl=2 --gpu=0 --random_seed=1993 --baseline=lucir --branch_mode=single --branch_1=free --dataset=cifar100

117 | ```

118 |

119 | [iCaRL](https://github.com/hshustc/CVPR19_Incremental_Learning) w/o AANets, single branch

120 | ```bash

121 | python main.py --nb_cl_fg=50 --nb_cl=10 --gpu=0 --random_seed=1993 --baseline=icarl --branch_mode=single --branch_1=free --dataset=cifar100

122 | python main.py --nb_cl_fg=50 --nb_cl=5 --gpu=0 --random_seed=1993 --baseline=icarl --branch_mode=single --branch_1=free --dataset=cifar100

123 | python main.py --nb_cl_fg=50 --nb_cl=2 --gpu=0 --random_seed=1993 --baseline=icarl --branch_mode=single --branch_1=free --dataset=cifar100

124 | ```

125 |

126 | #### Running Experiments on ImageNet-Subset

127 | [LUCIR](https://github.com/hshustc/CVPR19_Incremental_Learning) w/ AANets

128 | ```bash

129 | python main.py --nb_cl_fg=50 --nb_cl=10 --gpu=0 --random_seed=1993 --baseline=lucir --branch_mode=dual --branch_1=ss --branch_2=free --dataset=imagenet_sub --test_batch_size=50 --epochs=90 --num_workers=1 --custom_weight_decay=0.0005 --the_lambda=10 --K=2 --dist=0.5 --lw_mr=1 --base_lr1=0.05 --base_lr2=0.05 --dynamic_budget

130 | python main.py --nb_cl_fg=50 --nb_cl=5 --gpu=0 --random_seed=1993 --baseline=lucir --branch_mode=dual --branch_1=ss --branch_2=free --dataset=imagenet_sub --test_batch_size=50 --epochs=90 --num_workers=1 --custom_weight_decay=0.0005 --the_lambda=10 --K=2 --dist=0.5 --lw_mr=1 --base_lr1=0.05 --base_lr2=0.05 --dynamic_budget

131 | python main.py --nb_cl_fg=50 --nb_cl=2 --gpu=0 --random_seed=1993 --baseline=lucir --branch_mode=dual --branch_1=ss --branch_2=free --dataset=imagenet_sub --test_batch_size=50 --epochs=90 --num_workers=1 --custom_weight_decay=0.0005 --the_lambda=10 --K=2 --dist=0.5 --lw_mr=1 --base_lr1=0.05 --base_lr2=0.05 --dynamic_budget

132 | ```

133 |

134 | ### Code for [PODNet](https://github.com/arthurdouillard/incremental_learning.pytorch) w/ AANets

135 |

136 | We are still cleaning up the code for [PODNet](https://github.com/arthurdouillard/incremental_learning.pytorch) w/ AANets. So we will add it to the GitHub repository later.

137 |

138 | If you need to use it now, here is a preliminary version:

139 |

140 | Please note that you need to install the same environment as [PODNet](https://github.com/arthurdouillard/incremental_learning.pytorch) to run this code.

141 |

142 | ### Accuracy for Each Phase

143 |

144 | We provide the accuracy for each phase on CIFAR-100, ImageNet-Subset, and ImageNet-Full in different settings (*N=5, 10, 25*).

145 |

146 | You may view the results using the following link:

147 | [\[Google Sheet Link\]](https://docs.google.com/spreadsheets/d/1rSA0IH7OilDgfx2cvl86ixjVno4I15bmrDWkS4cUtBA/edit?usp=sharing)

148 |

149 | Please note that we re-run some experiments, so some results are slightly different from the paper table.

150 |

151 |

152 | ### Citation

153 |

154 | Please cite our paper if it is helpful to your work:

155 |

156 | ```bibtex

157 | @inproceedings{Liu2020AANets,

158 | author = {Liu, Yaoyao and Schiele, Bernt and Sun, Qianru},

159 | title = {Adaptive Aggregation Networks for Class-Incremental Learning},

160 | booktitle = {The IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},

161 | pages = {2544-2553},

162 | year = {2021}

163 | }

164 | ```

165 |

166 | ### Acknowledgements

167 |

168 | Our implementation uses the source code from the following repositories:

169 |

170 | * [Learning a Unified Classifier Incrementally via Rebalancing](https://github.com/hshustc/CVPR19_Incremental_Learning)

171 |

172 | * [iCaRL: Incremental Classifier and Representation Learning](https://github.com/srebuffi/iCaRL)

173 |

174 | * [PODNet: Pooled Outputs Distillation for Small-Tasks Incremental Learning](https://github.com/arthurdouillard/incremental_learning.pytorch)

175 |

--------------------------------------------------------------------------------

/adaptive-aggregation-networks/main.py:

--------------------------------------------------------------------------------

1 | ##+++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++

2 | ## Created by: Yaoyao Liu

3 | ## Max Planck Institute for Informatics

4 | ## yaoyao.liu@mpi-inf.mpg.de

5 | ## Copyright (c) 2021

6 | ##

7 | ## This source code is licensed under the MIT-style license found in the

8 | ## LICENSE file in the root directory of this source tree

9 | ##+++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++

10 | """ Main function for this project. """

11 | import os

12 | import argparse

13 | import numpy as np

14 | from trainer.trainer import Trainer

15 | from utils.gpu_tools import occupy_memory

16 |

17 | if __name__ == '__main__':

18 | parser = argparse.ArgumentParser()

19 |

20 | ### Basic parameters

21 | parser.add_argument('--gpu', default='0', help='the index of GPU')

22 | parser.add_argument('--dataset', default='cifar100', type=str, choices=['cifar100', 'imagenet_sub', 'imagenet'])

23 | parser.add_argument('--data_dir', default='data/seed_1993_subset_100_imagenet/data', type=str)

24 | parser.add_argument('--baseline', default='lucir', type=str, choices=['lucir', 'icarl'], help='baseline method')

25 | parser.add_argument('--ckpt_label', type=str, default='exp01', help='the label for the checkpoints')

26 | parser.add_argument('--ckpt_dir_fg', type=str, default='-', help='the checkpoint file for the 0-th phase')

27 | parser.add_argument('--resume_fg', action='store_true', help='resume 0-th phase model from the checkpoint')

28 | parser.add_argument('--resume', action='store_true', help='resume from the checkpoints')

29 | parser.add_argument('--num_workers', default=1, type=int, help='the number of workers for loading data')

30 | parser.add_argument('--random_seed', default=1993, type=int, help='random seed')

31 | parser.add_argument('--train_batch_size', default=128, type=int, help='the batch size for train loader')

32 | parser.add_argument('--test_batch_size', default=100, type=int, help='the batch size for test loader')

33 | parser.add_argument('--eval_batch_size', default=128, type=int, help='the batch size for validation loader')

34 | parser.add_argument('--disable_gpu_occupancy', action='store_false', help='disable GPU occupancy')

35 |

36 | ### Network architecture parameters

37 | parser.add_argument('--branch_mode', default='dual', type=str, choices=['dual', 'single'], help='the branch mode for AANets')

38 | parser.add_argument('--branch_1', default='ss', type=str, choices=['ss', 'fixed', 'free'], help='the network type for the first branch')

39 | parser.add_argument('--branch_2', default='free', type=str, choices=['ss', 'fixed', 'free'], help='the network type for the second branch')

40 | parser.add_argument('--imgnet_backbone', default='resnet18', type=str, choices=['resnet18', 'resnet34'], help='network backbone for ImageNet')

41 |

42 | ### Incremental learning parameters

43 | parser.add_argument('--num_classes', default=100, type=int, help='the total number of classes')

44 | parser.add_argument('--nb_cl_fg', default=50, type=int, help='the number of classes in the 0-th phase')

45 | parser.add_argument('--nb_cl', default=10, type=int, help='the number of classes for each phase')

46 | parser.add_argument('--nb_protos', default=20, type=int, help='the number of exemplars for each class')

47 | parser.add_argument('--epochs', default=160, type=int, help='the number of epochs')

48 | parser.add_argument('--dynamic_budget', action='store_true', help='using dynamic budget setting')

49 | parser.add_argument('--fusion_lr', default=1e-8, type=float, help='the learning rate for the aggregation weights')

50 |

51 | ### General learning parameters

52 | parser.add_argument('--lr_factor', default=0.1, type=float, help='learning rate decay factor')

53 | parser.add_argument('--custom_weight_decay', default=5e-4, type=float, help='weight decay parameter for the optimizer')

54 | parser.add_argument('--custom_momentum', default=0.9, type=float, help='momentum parameter for the optimizer')

55 | parser.add_argument('--base_lr1', default=0.1, type=float, help='learning rate for the 0-th phase')

56 | parser.add_argument('--base_lr2', default=0.1, type=float, help='learning rate for the following phases')

57 |

58 | ### LUCIR parameters

59 | parser.add_argument('--the_lambda', default=5, type=float, help='lamda for LF')

60 | parser.add_argument('--dist', default=0.5, type=float, help='dist for margin ranking losses')

61 | parser.add_argument('--K', default=2, type=int, help='K for margin ranking losses')

62 | parser.add_argument('--lw_mr', default=1, type=float, help='loss weight for margin ranking losses')

63 |

64 | ### iCaRL parameters

65 | parser.add_argument('--icarl_beta', default=0.25, type=float, help='beta for iCaRL')

66 | parser.add_argument('--icarl_T', default=2, type=int, help='T for iCaRL')

67 |

68 | the_args = parser.parse_args()

69 |

70 | # Checke the number of classes, ensure they are reasonable

71 | assert(the_args.nb_cl_fg % the_args.nb_cl == 0)

72 | assert(the_args.nb_cl_fg >= the_args.nb_cl)

73 |

74 | # Print the parameters

75 | print(the_args)

76 |

77 | # Set GPU index

78 | os.environ['CUDA_VISIBLE_DEVICES'] = the_args.gpu

79 | print('Using gpu:', the_args.gpu)

80 |

81 | # Occupy GPU memory in advance

82 | if the_args.disable_gpu_occupancy:

83 | occupy_memory(the_args.gpu)

84 | print('Occupy GPU memory in advance.')

85 |

86 | # Set the trainer and start training

87 | trainer = Trainer(the_args)

88 | trainer.train()

89 |

--------------------------------------------------------------------------------

/adaptive-aggregation-networks/models/modified_linear.py:

--------------------------------------------------------------------------------

1 | ##+++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++

2 | ## Created by: Yaoyao Liu

3 | ## Modified from: https://github.com/hshustc/CVPR19_Incremental_Learning

4 | ## Max Planck Institute for Informatics

5 | ## yaoyao.liu@mpi-inf.mpg.de

6 | ## Copyright (c) 2021

7 | ##

8 | ## This source code is licensed under the MIT-style license found in the

9 | ## LICENSE file in the root directory of this source tree

10 | ##+++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++

11 | import math

12 | import torch

13 | from torch.nn.parameter import Parameter

14 | from torch.nn import functional as F

15 | from torch.nn import Module

16 |

17 | class CosineLinear(Module):

18 | def __init__(self, in_features, out_features, sigma=True):

19 | super(CosineLinear, self).__init__()

20 | self.in_features = in_features

21 | self.out_features = out_features

22 | self.weight = Parameter(torch.Tensor(out_features, in_features))

23 | if sigma:

24 | self.sigma = Parameter(torch.Tensor(1))

25 | else:

26 | self.register_parameter('sigma', None)

27 | self.reset_parameters()

28 |

29 | def reset_parameters(self):

30 | stdv = 1. / math.sqrt(self.weight.size(1))

31 | self.weight.data.uniform_(-stdv, stdv)

32 | if self.sigma is not None:

33 | self.sigma.data.fill_(1)

34 |

35 | def forward(self, input):

36 | out = F.linear(F.normalize(input, p=2,dim=1), \

37 | F.normalize(self.weight, p=2, dim=1))

38 | if self.sigma is not None:

39 | out = self.sigma * out

40 | return out

41 |

42 | class SplitCosineLinear(Module):

43 | def __init__(self, in_features, out_features1, out_features2, sigma=True):

44 | super(SplitCosineLinear, self).__init__()

45 | self.in_features = in_features

46 | self.out_features = out_features1 + out_features2

47 | self.fc1 = CosineLinear(in_features, out_features1, False)

48 | self.fc2 = CosineLinear(in_features, out_features2, False)

49 | if sigma:

50 | self.sigma = Parameter(torch.Tensor(1))

51 | self.sigma.data.fill_(1)

52 | else:

53 | self.register_parameter('sigma', None)

54 |

55 | def forward(self, x):

56 | out1 = self.fc1(x)

57 | out2 = self.fc2(x)

58 | out = torch.cat((out1, out2), dim=1)

59 | if self.sigma is not None:

60 | out = self.sigma * out

61 | return out

62 |

--------------------------------------------------------------------------------

/adaptive-aggregation-networks/models/modified_resnet.py:

--------------------------------------------------------------------------------

1 | ##+++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++

2 | ## Created by: Yaoyao Liu

3 | ## Modified from: https://github.com/hshustc/CVPR19_Incremental_Learning

4 | ## Max Planck Institute for Informatics

5 | ## yaoyao.liu@mpi-inf.mpg.de

6 | ## Copyright (c) 2021

7 | ##

8 | ## This source code is licensed under the MIT-style license found in the

9 | ## LICENSE file in the root directory of this source tree

10 | ##+++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++

11 | import torch.nn as nn

12 | import math

13 | import torch.utils.model_zoo as model_zoo

14 | import models.modified_linear as modified_linear

15 |

16 | def conv3x3(in_planes, out_planes, stride=1):

17 | return nn.Conv2d(in_planes, out_planes, kernel_size=3, stride=stride,

18 | padding=1, bias=False)

19 |

20 | class BasicBlock(nn.Module):

21 | expansion = 1

22 |

23 | def __init__(self, inplanes, planes, stride=1, downsample=None, last=False):

24 | super(BasicBlock, self).__init__()

25 | self.conv1 = conv3x3(inplanes, planes, stride)

26 | self.bn1 = nn.BatchNorm2d(planes)

27 | self.relu = nn.ReLU(inplace=True)

28 | self.conv2 = conv3x3(planes, planes)

29 | self.bn2 = nn.BatchNorm2d(planes)

30 | self.downsample = downsample

31 | self.stride = stride

32 | self.last = last

33 |

34 | def forward(self, x):

35 | residual = x

36 |

37 | out = self.conv1(x)

38 | out = self.bn1(out)

39 | out = self.relu(out)

40 |

41 | out = self.conv2(out)

42 | out = self.bn2(out)

43 |

44 | if self.downsample is not None:

45 | residual = self.downsample(x)

46 |

47 | out += residual

48 | if not self.last:

49 | out = self.relu(out)

50 |

51 | return out

52 |

53 | class ResNet(nn.Module):

54 |

55 | def __init__(self, block, layers, num_classes=1000):

56 | self.inplanes = 64

57 | super(ResNet, self).__init__()

58 | self.conv1 = nn.Conv2d(3, 64, kernel_size=7, stride=2, padding=3,

59 | bias=False)

60 | self.bn1 = nn.BatchNorm2d(64)

61 | self.relu = nn.ReLU(inplace=True)

62 | self.maxpool = nn.MaxPool2d(kernel_size=3, stride=2, padding=1)

63 | self.layer1 = self._make_layer(block, 64, layers[0])

64 | self.layer2 = self._make_layer(block, 128, layers[1], stride=2)

65 | self.layer3 = self._make_layer(block, 256, layers[2], stride=2)

66 | self.layer4 = self._make_layer(block, 512, layers[3], stride=2, last_phase=True)

67 | self.avgpool = nn.AvgPool2d(7, stride=1)

68 | self.fc = modified_linear.CosineLinear(512 * block.expansion, num_classes)

69 |

70 | for m in self.modules():

71 | if isinstance(m, nn.Conv2d):

72 | nn.init.kaiming_normal_(m.weight, mode='fan_out', nonlinearity='relu')

73 | elif isinstance(m, nn.BatchNorm2d):

74 | nn.init.constant_(m.weight, 1)

75 | nn.init.constant_(m.bias, 0)

76 |

77 | def _make_layer(self, block, planes, blocks, stride=1, last_phase=False):

78 | downsample = None

79 | if stride != 1 or self.inplanes != planes * block.expansion:

80 | downsample = nn.Sequential(

81 | nn.Conv2d(self.inplanes, planes * block.expansion,

82 | kernel_size=1, stride=stride, bias=False),

83 | nn.BatchNorm2d(planes * block.expansion),

84 | )

85 |

86 | layers = []

87 | layers.append(block(self.inplanes, planes, stride, downsample))

88 | self.inplanes = planes * block.expansion

89 | if last_phase:

90 | for i in range(1, blocks-1):

91 | layers.append(block(self.inplanes, planes))

92 | layers.append(block(self.inplanes, planes, last=True))

93 | else:

94 | for i in range(1, blocks):

95 | layers.append(block(self.inplanes, planes))

96 |

97 | return nn.Sequential(*layers)

98 |

99 | def forward(self, x):

100 | x = self.conv1(x)

101 | x = self.bn1(x)

102 | x = self.relu(x)

103 | x = self.maxpool(x)

104 |

105 | x = self.layer1(x)

106 | x = self.layer2(x)

107 | x = self.layer3(x)

108 | x = self.layer4(x)

109 |

110 | x = self.avgpool(x)

111 | x = x.view(x.size(0), -1)

112 | x = self.fc(x)

113 |

114 | return x

115 |

116 | def resnet18(pretrained=False, **kwargs):

117 | model = ResNet(BasicBlock, [2, 2, 2, 2], **kwargs)

118 | return model

119 |

120 | def resnet34(pretrained=False, **kwargs):

121 | model = ResNet(BasicBlock, [3, 4, 6, 3], **kwargs)

122 | return model

123 |

--------------------------------------------------------------------------------

/adaptive-aggregation-networks/models/modified_resnet_cifar.py:

--------------------------------------------------------------------------------

1 | ##+++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++

2 | ## Created by: Yaoyao Liu

3 | ## Modified from: https://github.com/hshustc/CVPR19_Incremental_Learning

4 | ## Max Planck Institute for Informatics

5 | ## yaoyao.liu@mpi-inf.mpg.de

6 | ## Copyright (c) 2021

7 | ##

8 | ## This source code is licensed under the MIT-style license found in the

9 | ## LICENSE file in the root directory of this source tree

10 | ##+++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++

11 | import torch.nn as nn

12 | import math

13 | import torch.utils.model_zoo as model_zoo

14 | import models.modified_linear as modified_linear

15 |

16 | def conv3x3(in_planes, out_planes, stride=1):

17 | """3x3 convolution with padding"""

18 | return nn.Conv2d(in_planes, out_planes, kernel_size=3, stride=stride,

19 | padding=1, bias=False)

20 |

21 | class BasicBlock(nn.Module):

22 | expansion = 1

23 |

24 | def __init__(self, inplanes, planes, stride=1, downsample=None, last=False):

25 | super(BasicBlock, self).__init__()

26 | self.conv1 = conv3x3(inplanes, planes, stride)

27 | self.bn1 = nn.BatchNorm2d(planes)

28 | self.relu = nn.ReLU(inplace=True)

29 | self.conv2 = conv3x3(planes, planes)

30 | self.bn2 = nn.BatchNorm2d(planes)

31 | self.downsample = downsample

32 | self.stride = stride

33 | self.last = last

34 |

35 | def forward(self, x):

36 | residual = x

37 |

38 | out = self.conv1(x)

39 | out = self.bn1(out)

40 | out = self.relu(out)

41 |

42 | out = self.conv2(out)

43 | out = self.bn2(out)

44 |

45 | if self.downsample is not None:

46 | residual = self.downsample(x)

47 |

48 | out += residual

49 | if not self.last:

50 | out = self.relu(out)

51 |

52 | return out

53 |

54 | class ResNet(nn.Module):

55 |

56 | def __init__(self, block, layers, num_classes=10):

57 | self.inplanes = 16

58 | super(ResNet, self).__init__()

59 | self.conv1 = nn.Conv2d(3, 16, kernel_size=3, stride=1, padding=1,

60 | bias=False)

61 | self.bn1 = nn.BatchNorm2d(16)

62 | self.relu = nn.ReLU(inplace=True)

63 | self.layer1 = self._make_layer(block, 16, layers[0])

64 | self.layer2 = self._make_layer(block, 32, layers[1], stride=2)

65 | self.layer3 = self._make_layer(block, 64, layers[2], stride=2, last_phase=True)

66 | self.avgpool = nn.AvgPool2d(8, stride=1)

67 | self.fc = modified_linear.CosineLinear(64 * block.expansion, num_classes)

68 |

69 | for m in self.modules():

70 | if isinstance(m, nn.Conv2d):

71 | nn.init.kaiming_normal_(m.weight, mode='fan_out', nonlinearity='relu')

72 | elif isinstance(m, nn.BatchNorm2d):

73 | nn.init.constant_(m.weight, 1)

74 | nn.init.constant_(m.bias, 0)

75 |

76 | def _make_layer(self, block, planes, blocks, stride=1, last_phase=False):

77 | downsample = None

78 | if stride != 1 or self.inplanes != planes * block.expansion:

79 | downsample = nn.Sequential(

80 | nn.Conv2d(self.inplanes, planes * block.expansion,

81 | kernel_size=1, stride=stride, bias=False),

82 | nn.BatchNorm2d(planes * block.expansion),

83 | )

84 |

85 | layers = []

86 | layers.append(block(self.inplanes, planes, stride, downsample))

87 | self.inplanes = planes * block.expansion

88 | if last_phase:

89 | for i in range(1, blocks-1):

90 | layers.append(block(self.inplanes, planes))

91 | layers.append(block(self.inplanes, planes, last=True))

92 | else:

93 | for i in range(1, blocks):

94 | layers.append(block(self.inplanes, planes))

95 |

96 | return nn.Sequential(*layers)

97 |

98 | def forward(self, x):

99 | x = self.conv1(x)

100 | x = self.bn1(x)

101 | x = self.relu(x)

102 |

103 | x = self.layer1(x)

104 | x = self.layer2(x)

105 | x = self.layer3(x)

106 |

107 | x = self.avgpool(x)

108 | x = x.view(x.size(0), -1)

109 | x = self.fc(x)

110 |

111 | return x

112 |

113 | def resnet20(pretrained=False, **kwargs):

114 | n = 3

115 | model = ResNet(BasicBlock, [n, n, n], **kwargs)

116 | return model

117 |

118 | def resnet32(pretrained=False, **kwargs):

119 | n = 5

120 | model = ResNet(BasicBlock, [n, n, n], **kwargs)

121 | return model

122 |

--------------------------------------------------------------------------------

/adaptive-aggregation-networks/models/modified_resnetmtl.py:

--------------------------------------------------------------------------------

1 | ##+++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++

2 | ## Created by: Yaoyao Liu

3 | ## Modified from: https://github.com/hshustc/CVPR19_Incremental_Learning

4 | ## Max Planck Institute for Informatics

5 | ## yaoyao.liu@mpi-inf.mpg.de

6 | ## Copyright (c) 2021

7 | ##

8 | ## This source code is licensed under the MIT-style license found in the

9 | ## LICENSE file in the root directory of this source tree

10 | ##+++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++

11 | import torch.nn as nn

12 | import math

13 | import torch.utils.model_zoo as model_zoo

14 | import models.modified_linear as modified_linear

15 | from utils.incremental.conv2d_mtl import Conv2dMtl

16 |

17 | def conv3x3mtl(in_planes, out_planes, stride=1):

18 | """3x3 convolution with padding"""

19 | return Conv2dMtl(in_planes, out_planes, kernel_size=3, stride=stride,

20 | padding=1, bias=False)

21 |

22 |

23 | class BasicBlockMtl(nn.Module):

24 | expansion = 1

25 |

26 | def __init__(self, inplanes, planes, stride=1, downsample=None, last=False):

27 | super(BasicBlockMtl, self).__init__()

28 | self.conv1 = conv3x3mtl(inplanes, planes, stride)

29 | self.bn1 = nn.BatchNorm2d(planes)

30 | self.relu = nn.ReLU(inplace=True)

31 | self.conv2 = conv3x3mtl(planes, planes)

32 | self.bn2 = nn.BatchNorm2d(planes)

33 | self.downsample = downsample

34 | self.stride = stride

35 | self.last = last

36 |

37 | def forward(self, x):

38 | residual = x

39 |

40 | out = self.conv1(x)

41 | out = self.bn1(out)

42 | out = self.relu(out)

43 |

44 | out = self.conv2(out)

45 | out = self.bn2(out)

46 |

47 | if self.downsample is not None:

48 | residual = self.downsample(x)

49 |

50 | out += residual

51 | if not self.last:

52 | out = self.relu(out)

53 |

54 | return out

55 |

56 | class ResNetMtl(nn.Module):

57 |

58 | def __init__(self, block, layers, num_classes=1000):

59 | self.inplanes = 64

60 | super(ResNetMtl, self).__init__()

61 | self.conv1 = Conv2dMtl(3, 64, kernel_size=7, stride=2, padding=3,

62 | bias=False)

63 | self.bn1 = nn.BatchNorm2d(64)

64 | self.relu = nn.ReLU(inplace=True)

65 | self.maxpool = nn.MaxPool2d(kernel_size=3, stride=2, padding=1)

66 | self.layer1 = self._make_layer(block, 64, layers[0])

67 | self.layer2 = self._make_layer(block, 128, layers[1], stride=2)

68 | self.layer3 = self._make_layer(block, 256, layers[2], stride=2)

69 | self.layer4 = self._make_layer(block, 512, layers[3], stride=2, last_phase=True)

70 | self.avgpool = nn.AvgPool2d(7, stride=1)

71 | self.fc = modified_linear.CosineLinear(512 * block.expansion, num_classes)

72 |

73 | for m in self.modules():

74 | if isinstance(m, Conv2dMtl):

75 | nn.init.kaiming_normal_(m.weight, mode='fan_out', nonlinearity='relu')

76 | elif isinstance(m, nn.BatchNorm2d):

77 | nn.init.constant_(m.weight, 1)

78 | nn.init.constant_(m.bias, 0)

79 |

80 | def _make_layer(self, block, planes, blocks, stride=1, last_phase=False):

81 | downsample = None

82 | if stride != 1 or self.inplanes != planes * block.expansion:

83 | downsample = nn.Sequential(

84 | Conv2dMtl(self.inplanes, planes * block.expansion,

85 | kernel_size=1, stride=stride, bias=False),

86 | nn.BatchNorm2d(planes * block.expansion),

87 | )

88 |

89 | layers = []

90 | layers.append(block(self.inplanes, planes, stride, downsample))

91 | self.inplanes = planes * block.expansion

92 | if last_phase:

93 | for i in range(1, blocks-1):

94 | layers.append(block(self.inplanes, planes))

95 | layers.append(block(self.inplanes, planes, last=True))

96 | else:

97 | for i in range(1, blocks):

98 | layers.append(block(self.inplanes, planes))

99 |

100 | return nn.Sequential(*layers)

101 |

102 | def forward(self, x):

103 | x = self.conv1(x)

104 | x = self.bn1(x)

105 | x = self.relu(x)

106 | x = self.maxpool(x)

107 |

108 | x = self.layer1(x)

109 | x = self.layer2(x)

110 | x = self.layer3(x)

111 | x = self.layer4(x)

112 |

113 | x = self.avgpool(x)

114 | x = x.view(x.size(0), -1)

115 | x = self.fc(x)

116 |

117 | return x

118 |

119 | def resnetmtl18(pretrained=False, **kwargs):

120 | model = ResNetMtl(BasicBlockMtl, [2, 2, 2, 2], **kwargs)

121 | return model

122 |

123 | def resnetmtl34(pretrained=False, **kwargs):

124 | model = ResNetMtl(BasicBlockMtl, [3, 4, 6, 3], **kwargs)

125 | return model

126 |

--------------------------------------------------------------------------------

/adaptive-aggregation-networks/models/modified_resnetmtl_cifar.py:

--------------------------------------------------------------------------------

1 | ##+++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++

2 | ## Created by: Yaoyao Liu

3 | ## Modified from: https://github.com/hshustc/CVPR19_Incremental_Learning

4 | ## Max Planck Institute for Informatics

5 | ## yaoyao.liu@mpi-inf.mpg.de

6 | ## Copyright (c) 2021

7 | ##

8 | ## This source code is licensed under the MIT-style license found in the

9 | ## LICENSE file in the root directory of this source tree

10 | ##+++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++

11 | import torch.nn as nn

12 | import math

13 | import torch.utils.model_zoo as model_zoo

14 | import models.modified_linear as modified_linear

15 | from utils.incremental.conv2d_mtl import Conv2dMtl

16 |

17 | def conv3x3mtl(in_planes, out_planes, stride=1):

18 | return Conv2dMtl(in_planes, out_planes, kernel_size=3, stride=stride,

19 | padding=1, bias=False)

20 |

21 | class BasicBlockMtl(nn.Module):

22 | expansion = 1

23 |

24 | def __init__(self, inplanes, planes, stride=1, downsample=None, last=False):

25 | super(BasicBlockMtl, self).__init__()

26 | self.conv1 = conv3x3mtl(inplanes, planes, stride)

27 | self.bn1 = nn.BatchNorm2d(planes)

28 | self.relu = nn.ReLU(inplace=True)

29 | self.conv2 = conv3x3mtl(planes, planes)

30 | self.bn2 = nn.BatchNorm2d(planes)

31 | self.downsample = downsample

32 | self.stride = stride

33 | self.last = last

34 |

35 | def forward(self, x):

36 | residual = x

37 |

38 | out = self.conv1(x)

39 | out = self.bn1(out)

40 | out = self.relu(out)

41 |

42 | out = self.conv2(out)

43 | out = self.bn2(out)

44 |

45 | if self.downsample is not None:

46 | residual = self.downsample(x)

47 |

48 | out += residual

49 | if not self.last:

50 | out = self.relu(out)

51 |

52 | return out

53 |

54 | class ResNetMtl(nn.Module):

55 |

56 | def __init__(self, block, layers, num_classes=10):

57 | self.inplanes = 16

58 | super(ResNetMtl, self).__init__()

59 | self.conv1 = Conv2dMtl(3, 16, kernel_size=3, stride=1, padding=1,

60 | bias=False)

61 | self.bn1 = nn.BatchNorm2d(16)

62 | self.relu = nn.ReLU(inplace=True)

63 | self.layer1 = self._make_layer(block, 16, layers[0])

64 | self.layer2 = self._make_layer(block, 32, layers[1], stride=2)

65 | self.layer3 = self._make_layer(block, 64, layers[2], stride=2, last_phase=True)

66 | self.avgpool = nn.AvgPool2d(8, stride=1)

67 | self.fc = modified_linear.CosineLinear(64 * block.expansion, num_classes)

68 |

69 | for m in self.modules():

70 | if isinstance(m, Conv2dMtl):

71 | nn.init.kaiming_normal_(m.weight, mode='fan_out', nonlinearity='relu')

72 | elif isinstance(m, nn.BatchNorm2d):

73 | nn.init.constant_(m.weight, 1)

74 | nn.init.constant_(m.bias, 0)

75 |

76 | def _make_layer(self, block, planes, blocks, stride=1, last_phase=False):

77 | downsample = None

78 | if stride != 1 or self.inplanes != planes * block.expansion:

79 | downsample = nn.Sequential(

80 | Conv2dMtl(self.inplanes, planes * block.expansion,

81 | kernel_size=1, stride=stride, bias=False),

82 | nn.BatchNorm2d(planes * block.expansion),

83 | )

84 |

85 | layers = []

86 | layers.append(block(self.inplanes, planes, stride, downsample))

87 | self.inplanes = planes * block.expansion

88 | if last_phase:

89 | for i in range(1, blocks-1):

90 | layers.append(block(self.inplanes, planes))

91 | layers.append(block(self.inplanes, planes, last=True))

92 | else:

93 | for i in range(1, blocks):

94 | layers.append(block(self.inplanes, planes))

95 |

96 | return nn.Sequential(*layers)

97 |

98 | def forward(self, x):

99 | x = self.conv1(x)

100 | x = self.bn1(x)

101 | x = self.relu(x)

102 |

103 | x = self.layer1(x)

104 | x = self.layer2(x)

105 | x = self.layer3(x)

106 |

107 | x = self.avgpool(x)

108 | x = x.view(x.size(0), -1)

109 | x = self.fc(x)

110 |

111 | return x

112 |

113 | def resnetmtl20(pretrained=False, **kwargs):

114 | n = 3

115 | model = ResNetMtl(BasicBlockMtl, [n, n, n], **kwargs)

116 | return model

117 |

118 | def resnetmtl32(pretrained=False, **kwargs):

119 | n = 5

120 | model = ResNetMtl(BasicBlockMtl, [n, n, n], **kwargs)

121 | return model

122 |

--------------------------------------------------------------------------------

/adaptive-aggregation-networks/models/resnet_cifar.py:

--------------------------------------------------------------------------------

1 | ##+++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++

2 | ## Created by: Yaoyao Liu

3 | ## Modified from: https://github.com/hshustc/CVPR19_Incremental_Learning

4 | ## Max Planck Institute for Informatics

5 | ## yaoyao.liu@mpi-inf.mpg.de

6 | ## Copyright (c) 2021

7 | ##

8 | ## This source code is licensed under the MIT-style license found in the

9 | ## LICENSE file in the root directory of this source tree

10 | ##+++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++

11 | import torch.nn as nn

12 | import math

13 | import torch.utils.model_zoo as model_zoo

14 | from utils.incremental.conv2d_mtl import Conv2d

15 |

16 | def conv3x3(in_planes, out_planes, stride=1):

17 | """3x3 convolution with padding"""

18 | return Conv2d(in_planes, out_planes, kernel_size=3, stride=stride,

19 | padding=1, bias=False)

20 |

21 | class BasicBlock(nn.Module):

22 | expansion = 1

23 |

24 | def __init__(self, inplanes, planes, stride=1, downsample=None):

25 | super(BasicBlock, self).__init__()

26 | self.conv1 = conv3x3(inplanes, planes, stride)

27 | self.bn1 = nn.BatchNorm2d(planes)

28 | self.relu = nn.ReLU(inplace=True)

29 | self.conv2 = conv3x3(planes, planes)

30 | self.bn2 = nn.BatchNorm2d(planes)

31 | self.downsample = downsample

32 | self.stride = stride

33 |

34 | def forward(self, x):

35 | residual = x

36 | import pdb

37 | pdb.set_trace()

38 | out = self.conv1(x)

39 | out = self.bn1(out)

40 | out = self.relu(out)

41 |

42 | out = self.conv2(out)

43 | out = self.bn2(out)

44 |

45 | if self.downsample is not None:

46 | residual = self.downsample(x)

47 |

48 | out += residual

49 | out = self.relu(out)

50 |

51 | return out

52 |

53 |

54 | class Bottleneck(nn.Module):

55 | expansion = 4

56 |

57 | def __init__(self, inplanes, planes, stride=1, downsample=None):

58 | super(Bottleneck, self).__init__()

59 | self.conv1 = nn.Conv2d(inplanes, planes, kernel_size=1, bias=False)

60 | self.bn1 = nn.BatchNorm2d(planes)

61 | self.conv2 = nn.Conv2d(planes, planes, kernel_size=3, stride=stride,

62 | padding=1, bias=False)

63 | self.bn2 = nn.BatchNorm2d(planes)

64 | self.conv3 = nn.Conv2d(planes, planes * self.expansion, kernel_size=1, bias=False)

65 | self.bn3 = nn.BatchNorm2d(planes * self.expansion)

66 | self.relu = nn.ReLU(inplace=True)

67 | self.downsample = downsample

68 | self.stride = stride

69 |

70 | def forward(self, x):

71 | residual = x

72 |

73 | out = self.conv1(x)

74 | out = self.bn1(out)

75 | out = self.relu(out)

76 |

77 | out = self.conv2(out)

78 | out = self.bn2(out)

79 | out = self.relu(out)

80 |

81 | out = self.conv3(out)

82 | out = self.bn3(out)

83 |

84 | if self.downsample is not None:

85 | residual = self.downsample(x)

86 |

87 | out += residual

88 | out = self.relu(out)

89 |

90 | return out

91 |

92 |

93 | class ResNet(nn.Module):

94 |

95 | def __init__(self, block, layers, num_classes=10):

96 | self.inplanes = 16

97 | super(ResNet, self).__init__()

98 | self.conv1 = nn.Conv2d(3, 16, kernel_size=3, stride=1, padding=1,

99 | bias=False)

100 | self.bn1 = nn.BatchNorm2d(16)

101 | self.relu = nn.ReLU(inplace=True)

102 | self.layer1 = self._make_layer(block, 16, layers[0])

103 | self.layer2 = self._make_layer(block, 32, layers[1], stride=2)

104 | self.layer3 = self._make_layer(block, 64, layers[2], stride=2)

105 | self.avgpool = nn.AvgPool2d(8, stride=1)

106 | self.fc = nn.Linear(64 * block.expansion, num_classes)

107 |

108 | for m in self.modules():

109 | if isinstance(m, nn.Conv2d):

110 | nn.init.kaiming_normal_(m.weight, mode='fan_out', nonlinearity='relu')

111 | elif isinstance(m, nn.BatchNorm2d):

112 | nn.init.constant_(m.weight, 1)

113 | nn.init.constant_(m.bias, 0)

114 |

115 | def _make_layer(self, block, planes, blocks, stride=1):

116 | downsample = None

117 | if stride != 1 or self.inplanes != planes * block.expansion:

118 | downsample = nn.Sequential(

119 | nn.Conv2d(self.inplanes, planes * block.expansion,

120 | kernel_size=1, stride=stride, bias=False),

121 | nn.BatchNorm2d(planes * block.expansion),

122 | )

123 |

124 | layers = []

125 | layers.append(block(self.inplanes, planes, stride, downsample))

126 | self.inplanes = planes * block.expansion

127 | for i in range(1, blocks):

128 | layers.append(block(self.inplanes, planes))

129 |

130 | return nn.Sequential(*layers)

131 |

132 | def forward(self, x):

133 | x = self.conv1(x)

134 | x = self.bn1(x)

135 | x = self.relu(x)

136 |

137 | x = self.layer1(x)

138 | x = self.layer2(x)

139 | x = self.layer3(x)

140 |

141 | x = self.avgpool(x)

142 | x = x.view(x.size(0), -1)

143 | x = self.fc(x)

144 |

145 | return x

146 |

147 | def resnet20(pretrained=False, **kwargs):

148 | n = 3

149 | model = ResNet(BasicBlock, [n, n, n], **kwargs)

150 | return model

151 |

152 | def resnet32(pretrained=False, **kwargs):

153 | n = 5

154 | model = ResNet(BasicBlock, [n, n, n], **kwargs)

155 | return model

156 |

157 | def resnet56(pretrained=False, **kwargs):

158 | n = 9

159 | model = ResNet(Bottleneck, [n, n, n], **kwargs)

160 | return model

161 |

--------------------------------------------------------------------------------

/adaptive-aggregation-networks/trainer/__init__.py:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/yaoyao-liu/class-incremental-learning/701af9f819f559c6ab3d3ee73bb3d7c21e924572/adaptive-aggregation-networks/trainer/__init__.py

--------------------------------------------------------------------------------

/adaptive-aggregation-networks/trainer/incremental_icarl.py:

--------------------------------------------------------------------------------

1 | ##+++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++

2 | ## Created by: Yaoyao Liu

3 | ## Modified from: https://github.com/hshustc/CVPR19_Incremental_Learning

4 | ## Max Planck Institute for Informatics

5 | ## yaoyao.liu@mpi-inf.mpg.de

6 | ## Copyright (c) 2019

7 | ##

8 | ## This source code is licensed under the MIT-style license found in the

9 | ## LICENSE file in the root directory of this source tree

10 | ##+++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++

11 | """ Training code for iCaRL """

12 | import torch

13 | import tqdm

14 | import numpy as np

15 | import torch.nn as nn

16 | import torchvision

17 | from torch.optim import lr_scheduler

18 | from torchvision import datasets, models, transforms

19 | from utils.misc import *

20 | from utils.process_fp import process_inputs_fp

21 | import torch.nn.functional as F

22 |

23 | def incremental_train_and_eval(the_args, epochs, fusion_vars, ref_fusion_vars, b1_model, ref_model, b2_model, ref_b2_model, tg_optimizer, tg_lr_scheduler, fusion_optimizer, fusion_lr_scheduler, trainloader, testloader, iteration, start_iteration, X_protoset_cumuls, Y_protoset_cumuls, order_list,lamda, dist, K, lw_mr, balancedloader, T=None, beta=None, fix_bn=False, weight_per_class=None, device=None):

24 |

25 | # Setting up the CUDA device

26 | if device is None:

27 | device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")

28 | # Set the 1st branch reference model to the evaluation mode

29 | ref_model.eval()

30 |

31 | # Get the number of old classes

32 | num_old_classes = ref_model.fc.out_features

33 |

34 | # If the 2nd branch reference is not None, set it to the evaluation mode

35 | if iteration > start_iteration+1:

36 | ref_b2_model.eval()

37 |

38 | for epoch in range(epochs):

39 | # Start training for the current phase, set the two branch models to the training mode

40 | b1_model.train()

41 | b2_model.train()

42 |

43 | # Fix the batch norm parameters according to the config

44 | if fix_bn:

45 | for m in b1_model.modules():

46 | if isinstance(m, nn.BatchNorm2d):

47 | m.eval()

48 |

49 | # Set all the losses to zeros

50 | train_loss = 0

51 | train_loss1 = 0

52 | train_loss2 = 0

53 | # Set the counters to zeros

54 | correct = 0

55 | total = 0

56 |

57 | # Learning rate decay

58 | tg_lr_scheduler.step()

59 | fusion_lr_scheduler.step()

60 |

61 | # Print the information

62 | print('\nEpoch: %d, learning rate: ' % epoch, end='')

63 | print(tg_lr_scheduler.get_lr()[0])

64 |

65 | for batch_idx, (inputs, targets) in enumerate(trainloader):

66 |

67 | # Get a batch of training samples, transfer them to the device

68 | inputs, targets = inputs.to(device), targets.to(device)

69 |

70 | # Clear the gradient of the paramaters for the tg_optimizer

71 | tg_optimizer.zero_grad()

72 |

73 | # Forward the samples in the deep networks

74 | outputs, _ = process_inputs_fp(the_args, fusion_vars, b1_model, b2_model, inputs)

75 |

76 | if iteration == start_iteration+1:

77 | ref_outputs = ref_model(inputs)

78 | else:

79 | ref_outputs, ref_features_new = process_inputs_fp(the_args, ref_fusion_vars, ref_model, ref_b2_model, inputs)

80 | # Loss 1: logits-level distillation loss

81 | loss1 = nn.KLDivLoss()(F.log_softmax(outputs[:,:num_old_classes]/T, dim=1), \

82 | F.softmax(ref_outputs.detach()/T, dim=1)) * T * T * beta * num_old_classes

83 | # Loss 2: classification loss

84 | loss2 = nn.CrossEntropyLoss(weight_per_class)(outputs, targets)

85 | # Sum up all looses

86 | loss = loss1 + loss2

87 |

88 | # Backward and update the parameters

89 | loss.backward()

90 | tg_optimizer.step()

91 |

92 | # Record the losses and the number of samples to compute the accuracy

93 | train_loss += loss.item()

94 | train_loss1 += loss1.item()

95 | train_loss2 += loss2.item()

96 | _, predicted = outputs.max(1)

97 | total += targets.size(0)

98 | correct += predicted.eq(targets).sum().item()

99 |

100 | # Print the training losses and accuracies

101 | print('Train set: {}, train loss1: {:.4f}, train loss2: {:.4f}, train loss: {:.4f} accuracy: {:.4f}'.format(len(trainloader), train_loss1/(batch_idx+1), train_loss2/(batch_idx+1), train_loss/(batch_idx+1), 100.*correct/total))

102 |

103 | # Update the aggregation weights

104 | b1_model.eval()

105 | b2_model.eval()

106 |

107 | for batch_idx, (inputs, targets) in enumerate(balancedloader):

108 | fusion_optimizer.zero_grad()

109 | inputs, targets = inputs.to(device), targets.to(device)

110 | outputs, _ = process_inputs_fp(the_args, fusion_vars, b1_model, b2_model, inputs)

111 | loss = nn.CrossEntropyLoss(weight_per_class)(outputs, targets)

112 | loss.backward()

113 | fusion_optimizer.step()

114 |

115 | # Running the test for this epoch

116 | b1_model.eval()

117 | b2_model.eval()

118 | test_loss = 0

119 | correct = 0

120 | total = 0

121 | with torch.no_grad():

122 | for batch_idx, (inputs, targets) in enumerate(testloader):

123 | inputs, targets = inputs.to(device), targets.to(device)

124 | outputs, _ = process_inputs_fp(the_args, fusion_vars, b1_model, b2_model, inputs)

125 | loss = nn.CrossEntropyLoss(weight_per_class)(outputs, targets)

126 | test_loss += loss.item()

127 | _, predicted = outputs.max(1)

128 | total += targets.size(0)

129 | correct += predicted.eq(targets).sum().item()

130 | print('Test set: {} test loss: {:.4f} accuracy: {:.4f}'.format(len(testloader), test_loss/(batch_idx+1), 100.*correct/total))

131 |

132 | print("Removing register forward hook")

133 | return b1_model, b2_model

134 |

--------------------------------------------------------------------------------

/adaptive-aggregation-networks/trainer/incremental_lucir.py:

--------------------------------------------------------------------------------

1 | ##+++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++

2 | ## Created by: Yaoyao Liu

3 | ## Modified from: https://github.com/hshustc/CVPR19_Incremental_Learning

4 | ## Max Planck Institute for Informatics

5 | ## yaoyao.liu@mpi-inf.mpg.de

6 | ## Copyright (c) 2021

7 | ##

8 | ## This source code is licensed under the MIT-style license found in the

9 | ## LICENSE file in the root directory of this source tree

10 | ##+++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++

11 | """ Training code for LUCIR """

12 | import torch

13 | import tqdm

14 | import numpy as np

15 | import torch.nn as nn

16 | import torchvision

17 | from torch.optim import lr_scheduler

18 | from torchvision import datasets, models, transforms

19 | from utils.misc import *

20 | from utils.process_fp import process_inputs_fp

21 |

22 | cur_features = []

23 | ref_features = []

24 | old_scores = []

25 | new_scores = []

26 |

27 | def get_ref_features(self, inputs, outputs):

28 | global ref_features

29 | ref_features = inputs[0]

30 |

31 | def get_cur_features(self, inputs, outputs):

32 | global cur_features

33 | cur_features = inputs[0]

34 |

35 | def get_old_scores_before_scale(self, inputs, outputs):

36 | global old_scores

37 | old_scores = outputs

38 |

39 | def get_new_scores_before_scale(self, inputs, outputs):

40 | global new_scores

41 | new_scores = outputs

42 |

43 | def map_labels(order_list, Y_set):

44 | map_Y = []

45 | for idx in Y_set:

46 | map_Y.append(order_list.index(idx))

47 | map_Y = np.array(map_Y)

48 | return map_Y

49 |

50 |

51 | def incremental_train_and_eval(the_args, epochs, fusion_vars, ref_fusion_vars, b1_model, ref_model, b2_model, ref_b2_model, \

52 | tg_optimizer, tg_lr_scheduler, fusion_optimizer, fusion_lr_scheduler, trainloader, testloader, iteration, \

53 | start_iteration, X_protoset_cumuls, Y_protoset_cumuls, order_list, the_lambda, dist, \

54 | K, lw_mr, balancedloader, fix_bn=False, weight_per_class=None, device=None):

55 |

56 | # Setting up the CUDA device

57 | if device is None:

58 | device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")

59 | # Set the 1st branch reference model to the evaluation mode

60 | ref_model.eval()

61 |

62 | # Get the number of old classes

63 | num_old_classes = ref_model.fc.out_features

64 |

65 | # Get the features from the current and the reference model

66 | handle_ref_features = ref_model.fc.register_forward_hook(get_ref_features)

67 | handle_cur_features = b1_model.fc.register_forward_hook(get_cur_features)

68 | handle_old_scores_bs = b1_model.fc.fc1.register_forward_hook(get_old_scores_before_scale)

69 | handle_new_scores_bs = b1_model.fc.fc2.register_forward_hook(get_new_scores_before_scale)

70 |

71 | # If the 2nd branch reference is not None, set it to the evaluation mode

72 | if iteration > start_iteration+1:

73 | ref_b2_model.eval()

74 |

75 | for epoch in range(epochs):

76 | # Start training for the current phase, set the two branch models to the training mode

77 | b1_model.train()

78 | b2_model.train()

79 |

80 | # Fix the batch norm parameters according to the config

81 | if fix_bn:

82 | for m in b1_model.modules():

83 | if isinstance(m, nn.BatchNorm2d):

84 | m.eval()

85 |

86 | # Set all the losses to zeros

87 | train_loss = 0

88 | train_loss1 = 0

89 | train_loss2 = 0

90 | train_loss3 = 0

91 | # Set the counters to zeros

92 | correct = 0

93 | total = 0

94 |

95 | # Learning rate decay

96 | tg_lr_scheduler.step()

97 | fusion_lr_scheduler.step()

98 |

99 | # Print the information

100 | print('\nEpoch: %d, learning rate: ' % epoch, end='')

101 | print(tg_lr_scheduler.get_lr()[0])

102 |

103 | for batch_idx, (inputs, targets) in enumerate(trainloader):

104 |

105 | # Get a batch of training samples, transfer them to the device

106 | inputs, targets = inputs.to(device), targets.to(device)

107 |

108 | # Clear the gradient of the paramaters for the tg_optimizer

109 | tg_optimizer.zero_grad()

110 |

111 | # Forward the samples in the deep networks

112 | outputs, _ = process_inputs_fp(the_args, fusion_vars, b1_model, b2_model, inputs)

113 |

114 | # Loss 1: feature-level distillation loss

115 | if iteration == start_iteration+1:

116 | ref_outputs = ref_model(inputs)

117 | loss1 = nn.CosineEmbeddingLoss()(cur_features, ref_features.detach(), torch.ones(inputs.shape[0]).to(device)) * the_lambda

118 | else:

119 | ref_outputs, ref_features_new = process_inputs_fp(the_args, ref_fusion_vars, ref_model, ref_b2_model, inputs)

120 | loss1 = nn.CosineEmbeddingLoss()(cur_features, ref_features_new.detach(), torch.ones(inputs.shape[0]).to(device)) * the_lambda

121 |

122 | # Loss 2: classification loss

123 | loss2 = nn.CrossEntropyLoss(weight_per_class)(outputs, targets)

124 |

125 | # Loss 3: margin ranking loss

126 | outputs_bs = torch.cat((old_scores, new_scores), dim=1)

127 | assert(outputs_bs.size()==outputs.size())

128 | gt_index = torch.zeros(outputs_bs.size()).to(device)

129 | gt_index = gt_index.scatter(1, targets.view(-1,1), 1).ge(0.5)

130 | gt_scores = outputs_bs.masked_select(gt_index)

131 | max_novel_scores = outputs_bs[:, num_old_classes:].topk(K, dim=1)[0]

132 | hard_index = targets.lt(num_old_classes)

133 | hard_num = torch.nonzero(hard_index).size(0)

134 | if hard_num > 0:

135 | gt_scores = gt_scores[hard_index].view(-1, 1).repeat(1, K)

136 | max_novel_scores = max_novel_scores[hard_index]

137 | assert(gt_scores.size() == max_novel_scores.size())

138 | assert(gt_scores.size(0) == hard_num)

139 | loss3 = nn.MarginRankingLoss(margin=dist)(gt_scores.view(-1, 1), max_novel_scores.view(-1, 1), torch.ones(hard_num*K).to(device)) * lw_mr

140 | else:

141 | loss3 = torch.zeros(1).to(device)

142 |

143 | # Sum up all looses

144 | loss = loss1 + loss2 + loss3

145 |

146 | # Backward and update the parameters

147 | loss.backward()

148 | tg_optimizer.step()

149 |

150 | # Record the losses and the number of samples to compute the accuracy

151 | train_loss += loss.item()

152 | train_loss1 += loss1.item()

153 | train_loss2 += loss2.item()

154 | train_loss3 += loss3.item()

155 | _, predicted = outputs.max(1)

156 | total += targets.size(0)

157 | correct += predicted.eq(targets).sum().item()

158 |

159 | # Print the training losses and accuracies

160 | print('Train set: {}, train loss1: {:.4f}, train loss2: {:.4f}, train loss3: {:.4f}, train loss: {:.4f} accuracy: {:.4f}'.format(len(trainloader), train_loss1/(batch_idx+1), train_loss2/(batch_idx+1), train_loss3/(batch_idx+1), train_loss/(batch_idx+1), 100.*correct/total))

161 |

162 | # Update the aggregation weights

163 | b1_model.eval()

164 | b2_model.eval()

165 |

166 | for batch_idx, (inputs, targets) in enumerate(balancedloader):

167 | if batch_idx <= 500:

168 | inputs, targets = inputs.to(device), targets.to(device)

169 | outputs, _ = process_inputs_fp(the_args, fusion_vars, b1_model, b2_model, inputs)

170 | loss = nn.CrossEntropyLoss(weight_per_class)(outputs, targets)

171 | loss.backward()

172 | fusion_optimizer.step()

173 |

174 | # Running the test for this epoch

175 | b1_model.eval()

176 | b2_model.eval()

177 | test_loss = 0

178 | correct = 0

179 | total = 0

180 | with torch.no_grad():

181 | for batch_idx, (inputs, targets) in enumerate(testloader):

182 | inputs, targets = inputs.to(device), targets.to(device)

183 | outputs, _ = process_inputs_fp(the_args, fusion_vars, b1_model, b2_model, inputs)

184 | loss = nn.CrossEntropyLoss(weight_per_class)(outputs, targets)

185 | test_loss += loss.item()

186 | _, predicted = outputs.max(1)

187 | total += targets.size(0)

188 | correct += predicted.eq(targets).sum().item()

189 | print('Test set: {} test loss: {:.4f} accuracy: {:.4f}'.format(len(testloader), test_loss/(batch_idx+1), 100.*correct/total))

190 |

191 | print("Removing register forward hook")

192 | handle_ref_features.remove()

193 | handle_cur_features.remove()

194 | handle_old_scores_bs.remove()

195 | handle_new_scores_bs.remove()

196 | return b1_model, b2_model

197 |

--------------------------------------------------------------------------------

/adaptive-aggregation-networks/trainer/trainer.py:

--------------------------------------------------------------------------------

1 | ##+++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++

2 | ## Created by: Yaoyao Liu

3 | ## Modified from: https://github.com/hshustc/CVPR19_Incremental_Learning

4 | ## Max Planck Institute for Informatics

5 | ## yaoyao.liu@mpi-inf.mpg.de

6 | ## Copyright (c) 2021

7 | ##

8 | ## This source code is licensed under the MIT-style license found in the

9 | ## LICENSE file in the root directory of this source tree

10 | ##+++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++

11 | """ Class-incremental learning trainer. """

12 | import torch

13 | import torch.nn as nn

14 | import torch.nn.functional as F

15 | import torch.optim as optim

16 | from torch.optim import lr_scheduler

17 | import torchvision

18 | from torchvision import datasets, models, transforms

19 | from torch.autograd import Variable

20 | from tensorboardX import SummaryWriter

21 | import numpy as np

22 | import time

23 | import os

24 | import os.path as osp

25 | import sys

26 | import copy

27 | import argparse

28 | from PIL import Image

29 | try:

30 | import cPickle as pickle

31 | except:

32 | import pickle

33 | import math

34 | import utils.misc

35 | import models.modified_resnet_cifar as modified_resnet_cifar

36 | import models.modified_resnetmtl_cifar as modified_resnetmtl_cifar

37 | import models.modified_resnet as modified_resnet

38 | import models.modified_resnetmtl as modified_resnetmtl

39 | import models.modified_linear as modified_linear

40 | from utils.imagenet.utils_dataset import split_images_labels

41 | from utils.imagenet.utils_dataset import merge_images_labels

42 | from utils.incremental.compute_accuracy import compute_accuracy

43 | from trainer.incremental_lucir import incremental_train_and_eval as incremental_train_and_eval_lucir

44 | from trainer.incremental_icarl import incremental_train_and_eval as incremental_train_and_eval_icarl

45 | from trainer.zeroth_phase import incremental_train_and_eval_zeroth_phase as incremental_train_and_eval_zeroth_phase

46 | from utils.misc import process_mnemonics

47 | from trainer.base_trainer import BaseTrainer

48 | import warnings

49 | warnings.filterwarnings('ignore')

50 |

51 | class Trainer(BaseTrainer):

52 | def train(self):

53 | """The class that contains the code for the class-incremental system.

54 | This trianer is based on the base_trainer.py in the same folder.

55 | If you hope to find the source code of the functions used in this trainer, you may find them in base_trainer.py.

56 | """

57 |

58 | # Set tensorboard recorder

59 | self.train_writer = SummaryWriter(comment=self.save_path)

60 |

61 | # Initial the array to store the accuracies for each phase

62 | top1_acc_list_cumul = np.zeros((int(self.args.num_classes/self.args.nb_cl), 3, 1))

63 | top1_acc_list_ori = np.zeros((int(self.args.num_classes/self.args.nb_cl), 3, 1))

64 |

65 | # Load the training and test samples from the dataset

66 | X_train_total, Y_train_total, X_valid_total, Y_valid_total = self.set_dataset()

67 |

68 | # Initialize the aggregation weights

69 | self.init_fusion_vars()

70 |

71 | # Initialize the class order

72 | order, order_list = self.init_class_order()

73 | np.random.seed(None)

74 |

75 | # Set empty lists for the data

76 | X_valid_cumuls = []

77 | X_protoset_cumuls = []

78 | X_train_cumuls = []

79 | Y_valid_cumuls = []

80 | Y_protoset_cumuls = []

81 | Y_train_cumuls = []

82 |

83 | # Initialize the prototypes

84 | alpha_dr_herding, prototypes = self.init_prototypes(self.dictionary_size, order, X_train_total, Y_train_total)

85 |

86 | # Set the starting iteration

87 | # We start training the class-incremental learning system from e.g., 50 classes to provide a good initial encoder

88 | start_iter = int(self.args.nb_cl_fg/self.args.nb_cl)-1

89 |

90 | # Set the models and some parameter to None

91 | # These models and parameters will be assigned in the following phases

92 | b1_model = None

93 | ref_model = None

94 | b2_model = None

95 | ref_b2_model = None

96 | the_lambda_mult = None

97 |

98 | for iteration in range(start_iter, int(self.args.num_classes/self.args.nb_cl)):

99 | ### Initialize models for the current phase

100 | b1_model, b2_model, ref_model, ref_b2_model, lambda_mult, cur_lambda, last_iter = self.init_current_phase_model(iteration, start_iter, b1_model, b2_model)

101 |

102 | ### Initialize datasets for the current phase

103 | if iteration == start_iter:

104 | indices_train_10, X_valid_cumul, X_train_cumul, Y_valid_cumul, Y_train_cumul, \

105 | X_train_cumuls, Y_valid_cumuls, X_protoset_cumuls, Y_protoset_cumuls, X_valid_cumuls, Y_valid_cumuls, \

106 | X_train, map_Y_train, map_Y_valid_cumul, X_valid_ori, Y_valid_ori = \

107 | self.init_current_phase_dataset(iteration, \

108 | start_iter, last_iter, order, order_list, X_train_total, Y_train_total, X_valid_total, Y_valid_total, \

109 | X_train_cumuls, Y_train_cumuls, X_valid_cumuls, Y_valid_cumuls, X_protoset_cumuls, Y_protoset_cumuls)

110 | else:

111 | indices_train_10, X_valid_cumul, X_train_cumul, Y_valid_cumul, Y_train_cumul, \

112 | X_train_cumuls, Y_valid_cumuls, X_protoset_cumuls, Y_protoset_cumuls, X_valid_cumuls, Y_valid_cumuls, \

113 | X_train, map_Y_train, map_Y_valid_cumul, X_protoset, Y_protoset = \

114 | self.init_current_phase_dataset(iteration, \

115 | start_iter, last_iter, order, order_list, X_train_total, Y_train_total, X_valid_total, Y_valid_total, \

116 | X_train_cumuls, Y_train_cumuls, X_valid_cumuls, Y_valid_cumuls, X_protoset_cumuls, Y_protoset_cumuls)

117 |

118 | is_start_iteration = (iteration == start_iter)

119 |

120 | # Imprint weights

121 | if iteration > start_iter:

122 | b1_model = self.imprint_weights(b1_model, b2_model, iteration, is_start_iteration, X_train, map_Y_train, self.dictionary_size)

123 |

124 | # Update training and test dataloader

125 | trainloader, testloader = self.update_train_and_valid_loader(X_train, map_Y_train, X_valid_cumul, map_Y_valid_cumul, \

126 | iteration, start_iter)

127 |

128 | # Set the names for the checkpoints

129 | ckp_name = osp.join(self.save_path, 'iter_{}_b1.pth'.format(iteration))

130 | ckp_name_b2 = osp.join(self.save_path, 'iter_{}_b2.pth'.format(iteration))

131 | print('Check point name: ', ckp_name)

132 |

133 | if iteration==start_iter and self.args.resume_fg:

134 | # Resume the 0-th phase model according to the config

135 | b1_model = torch.load(self.args.ckpt_dir_fg)

136 | elif self.args.resume and os.path.exists(ckp_name):

137 | # Resume other models according to the config

138 | b1_model = torch.load(ckp_name)

139 | b2_model = torch.load(ckp_name_b2)

140 | else:

141 | # Start training (if we don't resume the models from the checkppoints)

142 |

143 | # Set the optimizer

144 | tg_optimizer, tg_lr_scheduler, fusion_optimizer, fusion_lr_scheduler = self.set_optimizer(iteration, \

145 | start_iter, b1_model, ref_model, b2_model, ref_b2_model)