├── .gitignore

├── LICENSE

├── README.md

├── base

├── __init__.py

├── base_dataloader.py

├── base_dataset.py

├── base_model.py

└── base_trainer.py

├── configs

└── config.json

├── dataloaders

├── __init__.py

├── voc.py

└── voc_splits

│ ├── 1000_train_supervised.txt

│ ├── 1000_train_unsupervised.txt

│ ├── 100_train_supervised.txt

│ ├── 100_train_unsupervised.txt

│ ├── 1464_train_supervised.txt

│ ├── 1464_train_unsupervised.txt

│ ├── 200_train_supervised.txt

│ ├── 200_train_unsupervised.txt

│ ├── 300_train_supervised.txt

│ ├── 300_train_unsupervised.txt

│ ├── 500_train_supervised.txt

│ ├── 500_train_unsupervised.txt

│ ├── 60_train_supervised.txt

│ ├── 60_train_unsupervised.txt

│ ├── 800_train_supervised.txt

│ ├── 800_train_unsupervised.txt

│ ├── boxes.json

│ ├── classes.json

│ └── val.txt

├── inference.py

├── models

├── __init__.py

├── backbones

│ ├── __init__.py

│ ├── get_pretrained_model.sh

│ ├── module_helper.py

│ ├── resnet_backbone.py

│ └── resnet_models.py

├── decoders.py

├── encoder.py

└── model.py

├── pseudo_labels

├── README.md

├── cam_to_pseudo_labels.py

├── make_cam.py

├── misc

│ ├── imutils.py

│ ├── pyutils.py

│ └── torchutils.py

├── net

│ ├── resnet50.py

│ └── resnet50_cam.py

├── run.py

├── train_cam.py

└── voc12

│ ├── cls_labels.npy

│ ├── dataloader.py

│ ├── make_cls_labels.py

│ ├── test.txt

│ ├── train.txt

│ ├── train_aug.txt

│ └── val.txt

├── requirements.txt

├── train.py

├── trainer.py

└── utils

├── __init__.py

├── helpers.py

├── htmlwriter.py

├── logger.py

├── losses.py

├── lr_scheduler.py

├── metrics.py

├── pallete.py

└── ramps.py

/.gitignore:

--------------------------------------------------------------------------------

1 | # Byte-compiled / optimized / DLL files

2 | __pycache__/

3 | *.py[cod]

4 | *$py.class

5 |

6 | # C extensions

7 | *.so

8 | pretrained/

9 | tb_history/

10 | logs/

11 | cls_runs/

12 | slurm_logs/

13 |

14 | #experiments/

15 | experiments/

16 | experiments_da/

17 | config[0-9]*

18 | *.png

19 | *.pth

20 |

21 | # Distribution / packaging

22 | .Python

23 | env/

24 | build/

25 | develop-eggs/

26 | dist/

27 | downloads/

28 | eggs/

29 | .eggs/

30 | lib/

31 | lib64/

32 | parts/

33 | sdist/

34 | var/

35 | wheels/

36 | *.egg-info/

37 | .installed.cfg

38 | *.egg

39 |

40 | # PyInstaller

41 | # Usually these files are written by a python script from a template

42 | # before PyInstaller builds the exe, so as to inject date/other infos into it.

43 | *.manifest

44 | *.spec

45 |

46 | # Installer logs

47 | pip-log.txt

48 | pip-delete-this-directory.txt

49 |

50 | # Unit test / coverage reports

51 | htmlcov/

52 | .tox/

53 | .coverage

54 | .coverage.*

55 | .cache

56 | nosetests.xml

57 | coverage.xml

58 | *.cover

59 | .hypothesis/

60 |

61 | # Translations

62 | *.mo

63 | *.pot

64 |

65 | # Django stuff:

66 | *.log

67 | local_settings.py

68 |

69 | # Flask stuff:

70 | instance/

71 | .webassets-cache

72 |

73 | # Scrapy stuff:

74 | .scrapy

75 |

76 | # Sphinx documentation

77 | docs/_build/

78 |

79 | # PyBuilder

80 | target/

81 |

82 | # Jupyter Notebook

83 | .ipynb_checkpoints

84 |

85 | # pyenv

86 | .python-version

87 |

88 | # celery beat schedule file

89 | celerybeat-schedule

90 |

91 | # SageMath parsed files

92 | *.sage.py

93 |

94 | # dotenv

95 | .env

96 |

97 | # virtualenv

98 | .venv

99 | venv/

100 | ENV/

101 |

102 | # Spyder project settings

103 | .spyderproject

104 | .spyproject

105 |

106 | # Rope project settings

107 | .ropeproject

108 |

109 | # mkdocs documentation

110 | /site

111 |

112 | # mypy

113 | .mypy_cache/

114 |

115 | # input data, saved log, checkpoints

116 | data/

117 | input/

118 | saved/

119 | outputs/

120 | datasets/

121 |

122 | # editor, os cache directory

123 | .vscode/

124 | .idea/

125 | __MACOSX/

126 |

127 |

--------------------------------------------------------------------------------

/LICENSE:

--------------------------------------------------------------------------------

1 | MIT License

2 |

3 | Copyright (c) 2020 Yassine Ouali

4 |

5 | Permission is hereby granted, free of charge, to any person obtaining a copy

6 | of this software and associated documentation files (the "Software"), to deal

7 | in the Software without restriction, including without limitation the rights

8 | to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

9 | copies of the Software, and to permit persons to whom the Software is

10 | furnished to do so, subject to the following conditions:

11 |

12 | The above copyright notice and this permission notice shall be included in all

13 | copies or substantial portions of the Software.

14 |

15 | THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

16 | IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

17 | FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

18 | AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

19 | LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

20 | OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

21 | SOFTWARE.

22 |

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 |

2 |

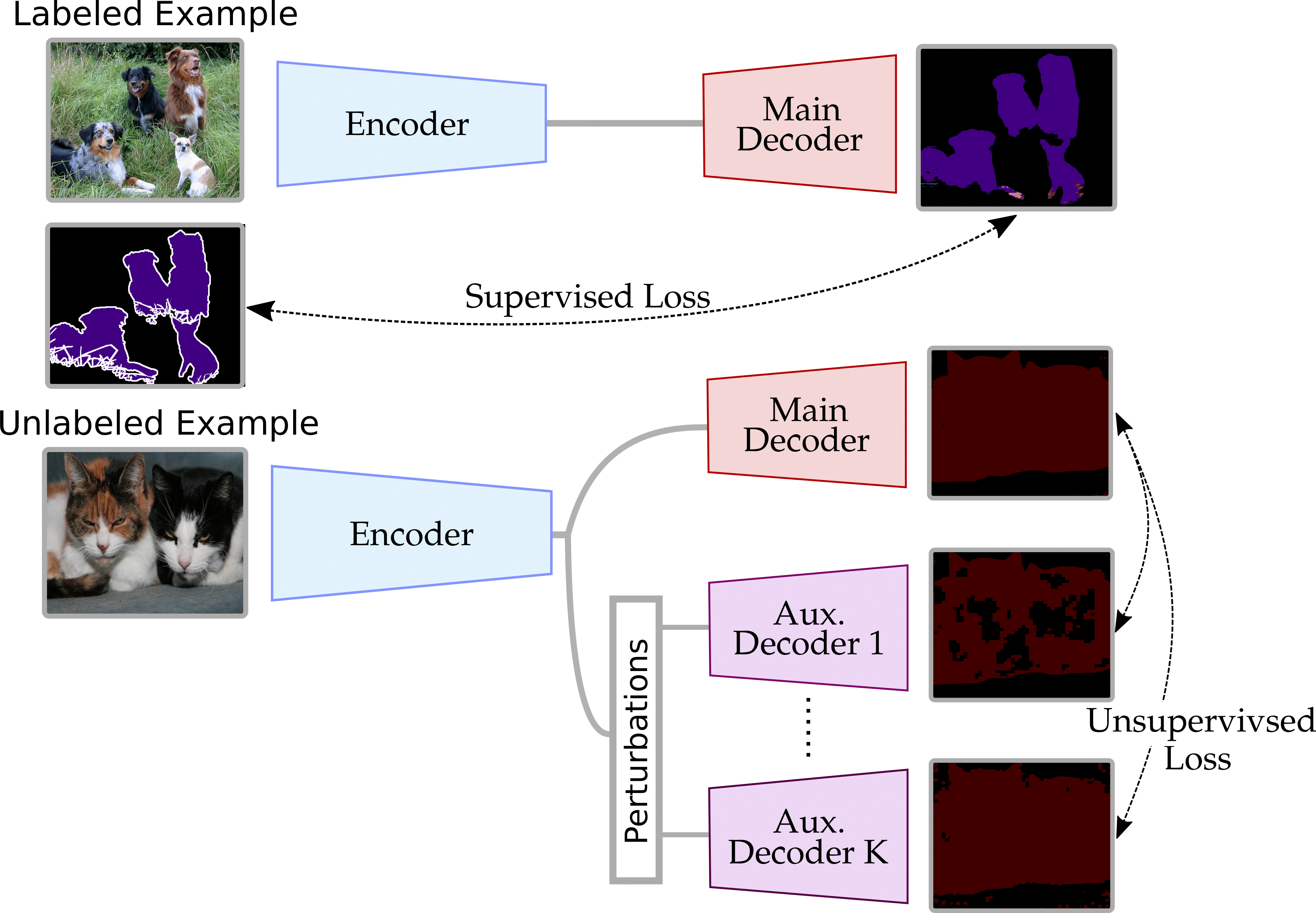

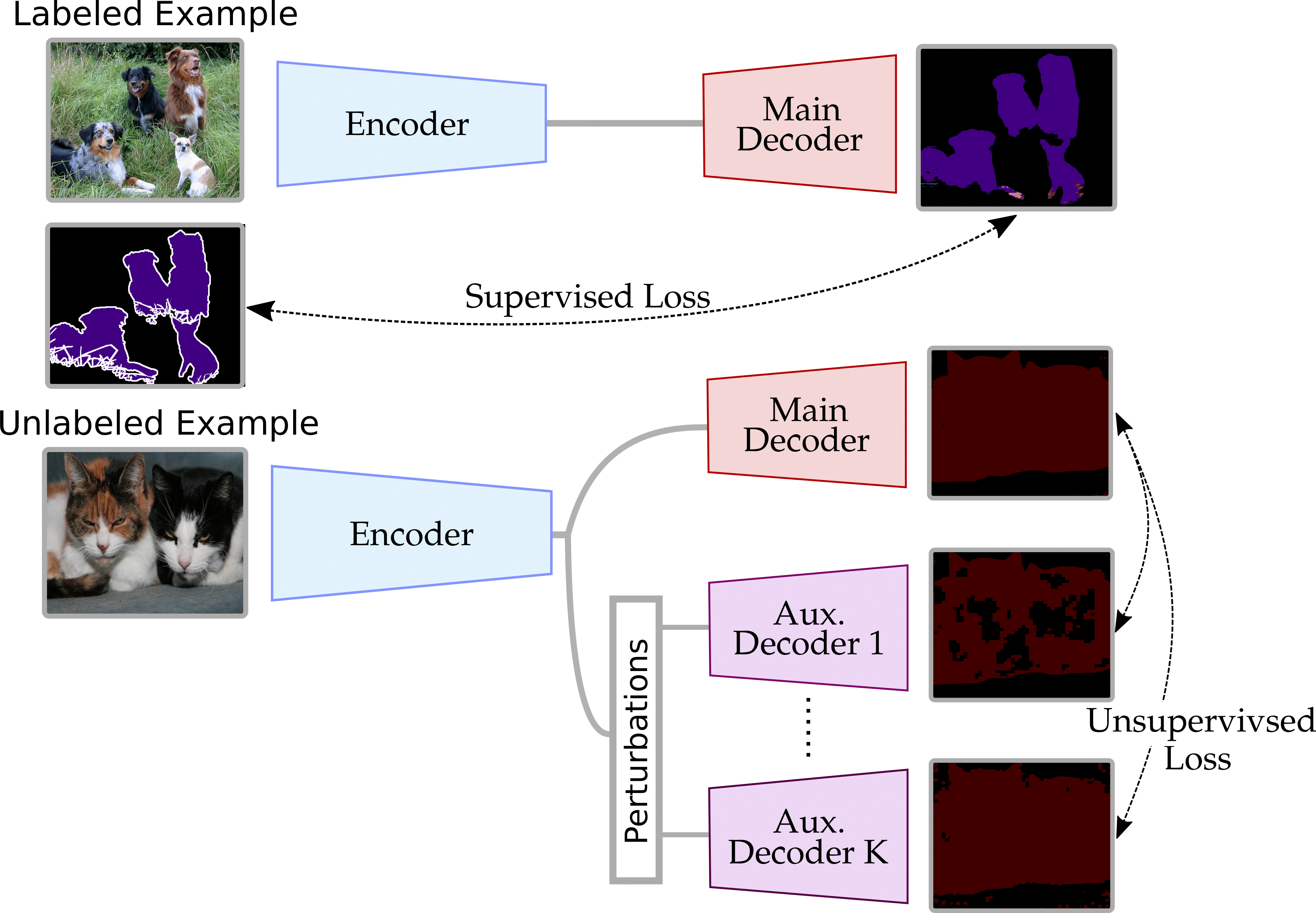

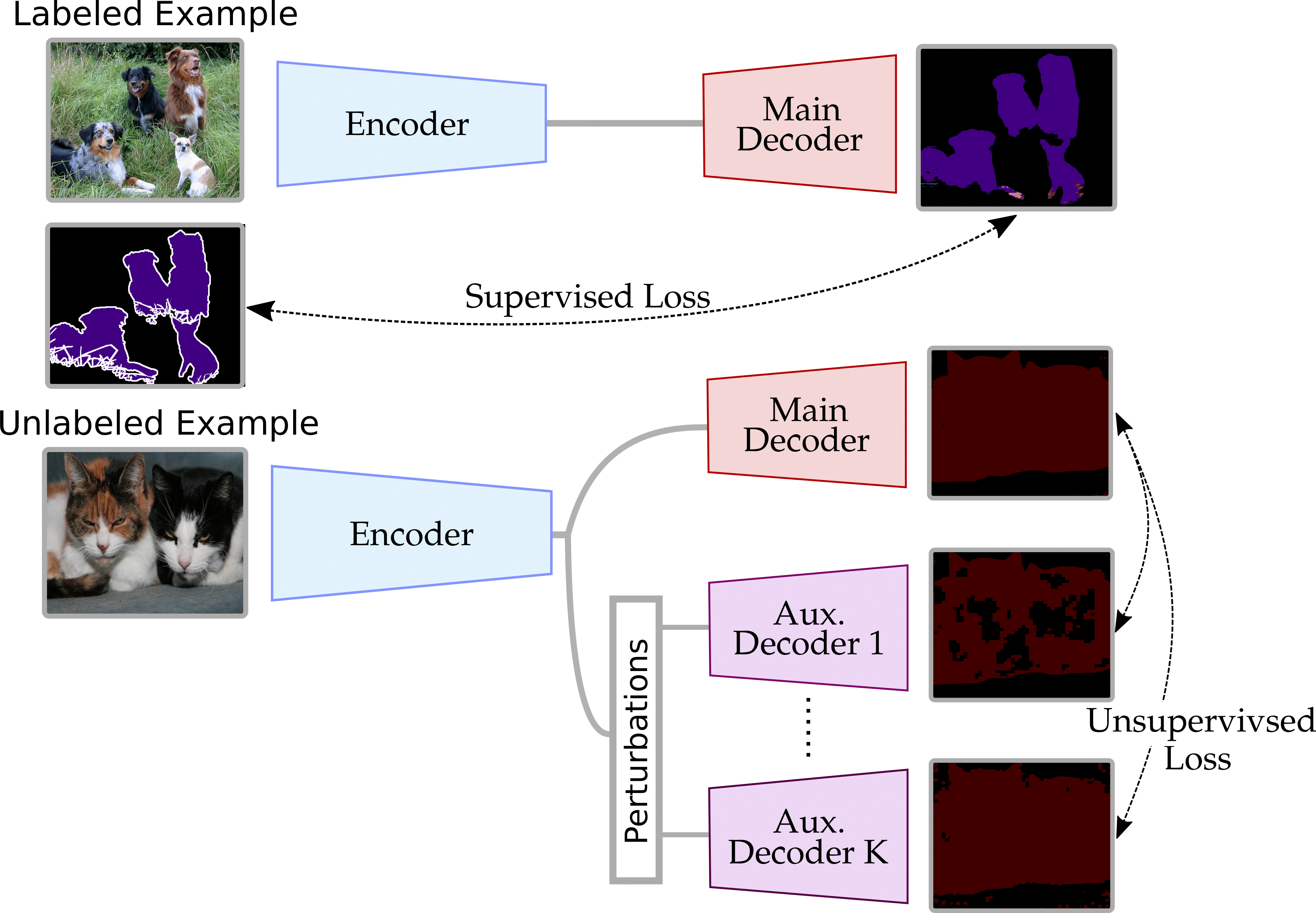

3 | ## Semi-Supervised Semantic Segmentation with Cross-Consistency Training (CCT)

4 |

5 | #### [Paper](https://arxiv.org/abs/2003.09005), [Project Page](https://yassouali.github.io/cct_page/)

6 |

7 | This repo contains the official implementation of CVPR 2020 paper: Semi-Supervised Semantic Segmentation with Cross-Consistency Training, which

8 | adapts the traditional consistency training framework of semi-supervised learning for semantic segmentation, with an extension to weak-supervised

9 | learning and learning on multiple domains.

10 |

11 |

12 |

13 | ### Highlights

14 |

15 | **(1) Consistency Training for semantic segmentation.** \

16 | We observe that for semantic segmentation, due to the dense nature of the task,

17 | the cluster assumption is more easily enforced over the hidden representations rather than the inputs.

18 |

19 | **(2) Cross-Consistency Training.** \

20 | We propose CCT (Cross-Consistency Training) for semi-supervised semantic segmentation, where we define

21 | a number of novel perturbations, and show the effectiveness of enforcing consistency over the encoder's outputs

22 | rather than the inputs.

23 |

24 | **(3) Using weak-labels and pixel-level labels from multiple domains.** \

25 | The proposed method is quite simple and flexible, and can easily be extended to use image-level labels and

26 | pixel-level labels from multiple-domains.

27 |

28 |

29 |

30 | ### Requirements

31 |

32 | This repo was tested with Ubuntu 18.04.3 LTS, Python 3.7, PyTorch 1.1.0, and CUDA 10.0. But it should be runnable with recent PyTorch versions >=1.1.0.

33 |

34 | The required packages are `pytorch` and `torchvision`, together with `PIL` and `opencv` for data-preprocessing and `tqdm` for showing the training progress.

35 | With some additional modules like `dominate` to save the results in the form of HTML files. To setup the necessary modules, simply run:

36 |

37 | ```bash

38 | pip install -r requirements.txt

39 | ```

40 |

41 | ### Dataset

42 |

43 | In this repo, we use **Pascal VOC**, to obtain it, first download the [original dataset](http://host.robots.ox.ac.uk/pascal/VOC/voc2012/VOCtrainval_11-May-2012.tar), after extracting the files we'll end up with `VOCtrainval_11-May-2012/VOCdevkit/VOC2012` containing the image sets, the XML annotation for both object detection and segmentation, and JPEG images.\

44 | The second step is to augment the dataset using the additionnal annotations provided by [Semantic Contours from Inverse Detectors](http://home.bharathh.info/pubs/pdfs/BharathICCV2011.pdf). Download the rest of the annotations [SegmentationClassAug](https://www.dropbox.com/s/oeu149j8qtbs1x0/SegmentationClassAug.zip?dl=0) and add them to the path `VOCtrainval_11-May-2012/VOCdevkit/VOC2012`, now we're set, for training use the path to `VOCtrainval_11-May-2012`.

45 |

46 |

47 | ### Training

48 |

49 | To train a model, first download PASCAL VOC as detailed above, then set `data_dir` to the dataset path in the config file in `configs/config.json` and set the rest of the parameters, like the number of GPUs, cope size, data augmentation ... etc ,you can also change CCT hyperparameters if you wish, more details below. Then simply run:

50 |

51 | ```bash

52 | python train.py --config configs/config.json

53 | ```

54 |

55 | The log files and the `.pth` checkpoints will be saved in `saved\EXP_NAME`, to monitor the training using tensorboard, please run:

56 |

57 | ```bash

58 | tensorboard --logdir saved

59 | ```

60 |

61 | To resume training using a saved `.pth` model:

62 |

63 | ```bash

64 | python train.py --config configs/config.json --resume saved/CCT/checkpoint.pth

65 | ```

66 |

67 | **Results**: The results will be saved in `saved` as an html file, containing the validation results,

68 | and the name it will take is `experim_name` specified in `configs/config.json`.

69 |

70 | ### Pseudo-labels

71 |

72 | If you want to use image level labels to train the auxiliary labels as explained in section 3.3 of the paper. First generate the pseudo-labels

73 | using the code in `pseudo_labels`:

74 |

75 |

76 | ```bash

77 | cd pseudo_labels

78 | python run.py --voc12_root DATA_PATH

79 | ```

80 |

81 | `DATA_PATH` must point to the folder containing `JPEGImages` in Pascal Voc dataset. The results will be

82 | saved in `pseudo_labels/result/pseudo_labels` as PNG files, the flag `use_weak_labels` needs to be set to True in the config file, and

83 | then we can train the model as detailed above.

84 |

85 |

86 | ### Inference

87 |

88 | For inference, we need a pretrained model, the jpg images we'd like to segment and the config used in training (to load the correct model and other parameters),

89 |

90 | ```bash

91 | python inference.py --config config.json --model best_model.pth --images images_folder

92 | ```

93 |

94 | The predictions will be saved as `.png` images in `outputs\` is used, for Pacal VOC the default palette is:

95 |

96 |

97 |

98 | Here are the flags available for inference:

99 |

100 | ```

101 | --images Folder containing the jpg images to segment.

102 | --model Path to the trained pth model.

103 | --config The config file used for training the model.

104 | ```

105 |

106 | ### Pre-trained models

107 |

108 | Pre-trained models can be downloaded [here](https://github.com/yassouali/CCT/releases).

109 |

110 | ### Citation ✏️ 📄

111 |

112 | If you find this repo useful for your research, please consider citing the paper as follows:

113 |

114 | ```

115 | @InProceedings{Ouali_2020_CVPR,

116 | author = {Ouali, Yassine and Hudelot, Celine and Tami, Myriam},

117 | title = {Semi-Supervised Semantic Segmentation With Cross-Consistency Training},

118 | booktitle = {The IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},

119 | month = {June},

120 | year = {2020}

121 | }

122 | ```

123 |

124 | For any questions, please contact Yassine Ouali.

125 |

126 | #### Config file details ⚙️

127 |

128 | Bellow we detail the CCT parameters that can be controlled in the config file `configs/config.json`, the rest of the parameters

129 | are self-explanatory.

130 |

131 | ```javascript

132 | {

133 | "name": "CCT",

134 | "experim_name": "CCT", // The name the results will take (html and the folder in /saved)

135 | "n_gpu": 1, // Number of GPUs

136 | "n_labeled_examples": 1000, // Number of labeled examples (choices are 60, 100, 200,

137 | // 300, 500, 800, 1000, 1464, and the splits are in dataloaders/voc_splits)

138 | "diff_lrs": true,

139 | "ramp_up": 0.1, // The unsupervised loss will be slowly scaled up in the first 10% of Training time

140 | "unsupervised_w": 30, // Weighting of the unsupervised loss

141 | "ignore_index": 255,

142 | "lr_scheduler": "Poly",

143 | "use_weak_labels": false, // If the pseudo-labels were generated, we can use them to train the aux. decoders

144 | "weakly_loss_w": 0.4, // Weighting of the weakly-supervised loss

145 | "pretrained": true,

146 |

147 | "model":{

148 | "supervised": true, // Supervised setting (training only on the labeled examples)

149 | "semi": false, // Semi-supervised setting

150 | "supervised_w": 1, // Weighting of the supervised loss

151 |

152 | "sup_loss": "CE", // supervised loss, choices are CE and ab-CE = ["CE", "ABCE"]

153 | "un_loss": "MSE", // unsupervised loss, choices are CE and KL-divergence = ["MSE", "KL"]

154 |

155 | "softmax_temp": 1,

156 | "aux_constraint": false, // Pair-wise loss (sup. mat.)

157 | "aux_constraint_w": 1,

158 | "confidence_masking": false, // Confidence masking (sup. mat.)

159 | "confidence_th": 0.5,

160 |

161 | "drop": 6, // Number of DropOut decoders

162 | "drop_rate": 0.5, // Dropout probability

163 | "spatial": true,

164 |

165 | "cutout": 6, // Number of G-Cutout decoders

166 | "erase": 0.4, // We drop 40% of the area

167 |

168 | "vat": 2, // Number of I-VAT decoders

169 | "xi": 1e-6, // VAT parameters

170 | "eps": 2.0,

171 |

172 | "context_masking": 2, // Number of Con-Msk decoders

173 | "object_masking": 2, // Number of Obj-Msk decoders

174 | "feature_drop": 6, // Number of F-Drop decoders

175 |

176 | "feature_noise": 6, // Number of F-Noise decoders

177 | "uniform_range": 0.3 // The range of the noise

178 | },

179 | ```

180 |

181 | #### Acknowledgements

182 |

183 | - Pseudo-labels generation is based on Jiwoon Ahn's implementation [irn](https://github.com/jiwoon-ahn/irn).

184 | - Code structure was based on [Pytorch-Template](https://github.com/victoresque/pytorch-template/blob/master/README.m)

185 | - ResNet backbone was downloaded from [torchcv](https://github.com/donnyyou/torchcv)

186 |

--------------------------------------------------------------------------------

/base/__init__.py:

--------------------------------------------------------------------------------

1 | from .base_dataloader import *

2 | from .base_dataset import *

3 | from .base_model import *

4 | from .base_trainer import *

5 |

6 |

7 |

--------------------------------------------------------------------------------

/base/base_dataloader.py:

--------------------------------------------------------------------------------

1 | import numpy as np

2 | from copy import deepcopy

3 | import torch

4 | from torch.utils.data import DataLoader

5 | from torch.utils.data.sampler import SubsetRandomSampler

6 |

7 | class BaseDataLoader(DataLoader):

8 | def __init__(self, dataset, batch_size, shuffle, num_workers, val_split = 0.0):

9 | self.shuffle = shuffle

10 | self.dataset = dataset

11 | self.nbr_examples = len(dataset)

12 | if val_split:

13 | self.train_sampler, self.val_sampler = self._split_sampler(val_split)

14 | else:

15 | self.train_sampler, self.val_sampler = None, None

16 |

17 | self.init_kwargs = {

18 | 'dataset': self.dataset,

19 | 'batch_size': batch_size,

20 | 'shuffle': self.shuffle,

21 | 'num_workers': num_workers,

22 | 'pin_memory': True

23 | }

24 | super(BaseDataLoader, self).__init__(sampler=self.train_sampler, **self.init_kwargs)

25 |

26 | def _split_sampler(self, split):

27 | if split == 0.0:

28 | return None, None

29 |

30 | self.shuffle = False

31 |

32 | split_indx = int(self.nbr_examples * split)

33 | np.random.seed(0)

34 |

35 | indxs = np.arange(self.nbr_examples)

36 | np.random.shuffle(indxs)

37 | train_indxs = indxs[split_indx:]

38 | val_indxs = indxs[:split_indx]

39 | self.nbr_examples = len(train_indxs)

40 |

41 | train_sampler = SubsetRandomSampler(train_indxs)

42 | val_sampler = SubsetRandomSampler(val_indxs)

43 | return train_sampler, val_sampler

44 |

45 | def get_val_loader(self):

46 | if self.val_sampler is None:

47 | return None

48 | return DataLoader(sampler=self.val_sampler, **self.init_kwargs)

49 |

--------------------------------------------------------------------------------

/base/base_dataset.py:

--------------------------------------------------------------------------------

1 | import random, math

2 | import numpy as np

3 | import cv2

4 | import torch

5 | import torch.nn.functional as F

6 | from torch.utils.data import Dataset

7 | from PIL import Image

8 | from torchvision import transforms

9 | from scipy import ndimage

10 | from math import ceil

11 |

12 | class BaseDataSet(Dataset):

13 | def __init__(self, data_dir, split, mean, std, ignore_index, base_size=None, augment=True, val=False,

14 | jitter=False, use_weak_lables=False, weak_labels_output=None, crop_size=None, scale=False, flip=False, rotate=False,

15 | blur=False, return_id=False, n_labeled_examples=None):

16 |

17 | self.root = data_dir

18 | self.split = split

19 | self.mean = mean

20 | self.std = std

21 | self.augment = augment

22 | self.crop_size = crop_size

23 | self.jitter = jitter

24 | self.image_padding = (np.array(mean)*255.).tolist()

25 | self.ignore_index = ignore_index

26 | self.return_id = return_id

27 | self.n_labeled_examples = n_labeled_examples

28 | self.val = val

29 |

30 | self.use_weak_lables = use_weak_lables

31 | self.weak_labels_output = weak_labels_output

32 |

33 | if self.augment:

34 | self.base_size = base_size

35 | self.scale = scale

36 | self.flip = flip

37 | self.rotate = rotate

38 | self.blur = blur

39 |

40 | self.jitter_tf = transforms.ColorJitter(brightness=0.1, contrast=0.1, saturation=0.1, hue=0.1)

41 | self.to_tensor = transforms.ToTensor()

42 | self.normalize = transforms.Normalize(mean, std)

43 |

44 | self.files = []

45 | self._set_files()

46 |

47 | cv2.setNumThreads(0)

48 |

49 | def _set_files(self):

50 | raise NotImplementedError

51 |

52 | def _load_data(self, index):

53 | raise NotImplementedError

54 |

55 | def _rotate(self, image, label):

56 | # Rotate the image with an angle between -10 and 10

57 | h, w, _ = image.shape

58 | angle = random.randint(-10, 10)

59 | center = (w / 2, h / 2)

60 | rot_matrix = cv2.getRotationMatrix2D(center, angle, 1.0)

61 | image = cv2.warpAffine(image, rot_matrix, (w, h), flags=cv2.INTER_CUBIC)#, borderMode=cv2.BORDER_REFLECT)

62 | label = cv2.warpAffine(label, rot_matrix, (w, h), flags=cv2.INTER_NEAREST)#, borderMode=cv2.BORDER_REFLECT)

63 | return image, label

64 |

65 | def _crop(self, image, label):

66 | # Padding to return the correct crop size

67 | if (isinstance(self.crop_size, list) or isinstance(self.crop_size, tuple)) and len(self.crop_size) == 2:

68 | crop_h, crop_w = self.crop_size

69 | elif isinstance(self.crop_size, int):

70 | crop_h, crop_w = self.crop_size, self.crop_size

71 | else:

72 | raise ValueError

73 |

74 | h, w, _ = image.shape

75 | pad_h = max(crop_h - h, 0)

76 | pad_w = max(crop_w - w, 0)

77 | pad_kwargs = {

78 | "top": 0,

79 | "bottom": pad_h,

80 | "left": 0,

81 | "right": pad_w,

82 | "borderType": cv2.BORDER_CONSTANT,}

83 | if pad_h > 0 or pad_w > 0:

84 | image = cv2.copyMakeBorder(image, value=self.image_padding, **pad_kwargs)

85 | label = cv2.copyMakeBorder(label, value=self.ignore_index, **pad_kwargs)

86 |

87 | # Cropping

88 | h, w, _ = image.shape

89 | start_h = random.randint(0, h - crop_h)

90 | start_w = random.randint(0, w - crop_w)

91 | end_h = start_h + crop_h

92 | end_w = start_w + crop_w

93 | image = image[start_h:end_h, start_w:end_w]

94 | label = label[start_h:end_h, start_w:end_w]

95 | return image, label

96 |

97 | def _blur(self, image, label):

98 | # Gaussian Blud (sigma between 0 and 1.5)

99 | sigma = random.random() * 1.5

100 | ksize = int(3.3 * sigma)

101 | ksize = ksize + 1 if ksize % 2 == 0 else ksize

102 | image = cv2.GaussianBlur(image, (ksize, ksize), sigmaX=sigma, sigmaY=sigma, borderType=cv2.BORDER_REFLECT_101)

103 | return image, label

104 |

105 | def _flip(self, image, label):

106 | # Random H flip

107 | if random.random() > 0.5:

108 | image = np.fliplr(image).copy()

109 | label = np.fliplr(label).copy()

110 | return image, label

111 |

112 | def _resize(self, image, label, bigger_side_to_base_size=True):

113 | if isinstance(self.base_size, int):

114 | h, w, _ = image.shape

115 | if self.scale:

116 | longside = random.randint(int(self.base_size*0.5), int(self.base_size*2.0))

117 | #longside = random.randint(int(self.base_size*0.5), int(self.base_size*1))

118 | else:

119 | longside = self.base_size

120 |

121 | if bigger_side_to_base_size:

122 | h, w = (longside, int(1.0 * longside * w / h + 0.5)) if h > w else (int(1.0 * longside * h / w + 0.5), longside)

123 | else:

124 | h, w = (longside, int(1.0 * longside * w / h + 0.5)) if h < w else (int(1.0 * longside * h / w + 0.5), longside)

125 | image = np.asarray(Image.fromarray(np.uint8(image)).resize((w, h), Image.BICUBIC))

126 | label = cv2.resize(label, (w, h), interpolation=cv2.INTER_NEAREST)

127 | return image, label

128 |

129 | elif (isinstance(self.base_size, list) or isinstance(self.base_size, tuple)) and len(self.base_size) == 2:

130 | h, w, _ = image.shape

131 | if self.scale:

132 | scale = random.random() * 1.5 + 0.5 # Scaling between [0.5, 2]

133 | h, w = int(self.base_size[0] * scale), int(self.base_size[1] * scale)

134 | else:

135 | h, w = self.base_size

136 | image = np.asarray(Image.fromarray(np.uint8(image)).resize((w, h), Image.BICUBIC))

137 | label = cv2.resize(label, (w, h), interpolation=cv2.INTER_NEAREST)

138 | return image, label

139 |

140 | else:

141 | raise ValueError

142 |

143 | def _val_augmentation(self, image, label):

144 | if self.base_size is not None:

145 | image, label = self._resize(image, label)

146 | image = self.normalize(self.to_tensor(Image.fromarray(np.uint8(image))))

147 | return image, label

148 |

149 | image = self.normalize(self.to_tensor(Image.fromarray(np.uint8(image))))

150 | return image, label

151 |

152 | def _augmentation(self, image, label):

153 | h, w, _ = image.shape

154 |

155 | if self.base_size is not None:

156 | image, label = self._resize(image, label)

157 |

158 | if self.crop_size is not None:

159 | image, label = self._crop(image, label)

160 |

161 | if self.flip:

162 | image, label = self._flip(image, label)

163 |

164 | image = Image.fromarray(np.uint8(image))

165 | image = self.jitter_tf(image) if self.jitter else image

166 |

167 | return self.normalize(self.to_tensor(image)), label

168 |

169 | def __len__(self):

170 | return len(self.files)

171 |

172 | def __getitem__(self, index):

173 | image, label, image_id = self._load_data(index)

174 | if self.val:

175 | image, label = self._val_augmentation(image, label)

176 | elif self.augment:

177 | image, label = self._augmentation(image, label)

178 |

179 | label = torch.from_numpy(np.array(label, dtype=np.int32)).long()

180 | return image, label

181 |

182 | def __repr__(self):

183 | fmt_str = "Dataset: " + self.__class__.__name__ + "\n"

184 | fmt_str += " # data: {}\n".format(self.__len__())

185 | fmt_str += " Split: {}\n".format(self.split)

186 | fmt_str += " Root: {}".format(self.root)

187 | return fmt_str

188 |

189 |

--------------------------------------------------------------------------------

/base/base_model.py:

--------------------------------------------------------------------------------

1 | import logging

2 | import torch.nn as nn

3 | import numpy as np

4 |

5 | class BaseModel(nn.Module):

6 | def __init__(self):

7 | super(BaseModel, self).__init__()

8 | self.logger = logging.getLogger(self.__class__.__name__)

9 |

10 | def forward(self):

11 | raise NotImplementedError

12 |

13 | def summary(self):

14 | model_parameters = filter(lambda p: p.requires_grad, self.parameters())

15 | nbr_params = sum([np.prod(p.size()) for p in model_parameters])

16 | self.logger.info(f'Nbr of trainable parameters: {nbr_params}')

17 |

18 | def __str__(self):

19 | model_parameters = filter(lambda p: p.requires_grad, self.parameters())

20 | nbr_params = int(sum([np.prod(p.size()) for p in model_parameters]))

21 | return f'\nNbr of trainable parameters: {nbr_params}'

22 | #return super(BaseModel, self).__str__() + f'\nNbr of trainable parameters: {nbr_params}'

23 |

--------------------------------------------------------------------------------

/base/base_trainer.py:

--------------------------------------------------------------------------------

1 | import os, json, math, logging, sys, datetime

2 | import torch

3 | from torch.utils import tensorboard

4 | from utils import helpers

5 | from utils import logger

6 | import utils.lr_scheduler

7 | from utils.htmlwriter import HTML

8 |

9 | def get_instance(module, name, config, *args):

10 | return getattr(module, config[name]['type'])(*args, **config[name]['args'])

11 |

12 | class BaseTrainer:

13 | def __init__(self, model, resume, config, iters_per_epoch, train_logger=None):

14 | self.model = model

15 | self.config = config

16 |

17 | self.train_logger = train_logger

18 | self.logger = logging.getLogger(self.__class__.__name__)

19 | self.do_validation = self.config['trainer']['val']

20 | self.start_epoch = 1

21 | self.improved = False

22 |

23 | # SETTING THE DEVICE

24 | self.device, availble_gpus = self._get_available_devices(self.config['n_gpu'])

25 | self.model = torch.nn.DataParallel(self.model, device_ids=availble_gpus)

26 | self.model.to(self.device)

27 |

28 | # CONFIGS

29 | cfg_trainer = self.config['trainer']

30 | self.epochs = cfg_trainer['epochs']

31 | self.save_period = cfg_trainer['save_period']

32 |

33 | # OPTIMIZER

34 | trainable_params = [{'params': filter(lambda p:p.requires_grad, self.model.module.get_other_params())},

35 | {'params': filter(lambda p:p.requires_grad, self.model.module.get_backbone_params()),

36 | 'lr': config['optimizer']['args']['lr'] / 10}]

37 |

38 | self.optimizer = get_instance(torch.optim, 'optimizer', config, trainable_params)

39 | model_params = sum([i.shape.numel() for i in list(model.parameters())])

40 | opt_params = sum([i.shape.numel() for j in self.optimizer.param_groups for i in j['params']])

41 | assert opt_params == model_params, 'some params are missing in the opt'

42 |

43 | self.lr_scheduler = getattr(utils.lr_scheduler, config['lr_scheduler'])(optimizer=self.optimizer, num_epochs=self.epochs,

44 | iters_per_epoch=iters_per_epoch)

45 |

46 | # MONITORING

47 | self.monitor = cfg_trainer.get('monitor', 'off')

48 | if self.monitor == 'off':

49 | self.mnt_mode = 'off'

50 | self.mnt_best = 0

51 | else:

52 | self.mnt_mode, self.mnt_metric = self.monitor.split()

53 | assert self.mnt_mode in ['min', 'max']

54 | self.mnt_best = -math.inf if self.mnt_mode == 'max' else math.inf

55 | self.early_stoping = cfg_trainer.get('early_stop', math.inf)

56 |

57 | # CHECKPOINTS & TENSOBOARD

58 | date_time = datetime.datetime.now().strftime('%m-%d_%H-%M')

59 | run_name = config['experim_name']

60 | self.checkpoint_dir = os.path.join(cfg_trainer['save_dir'], run_name)

61 | helpers.dir_exists(self.checkpoint_dir)

62 | config_save_path = os.path.join(self.checkpoint_dir, 'config.json')

63 | with open(config_save_path, 'w') as handle:

64 | json.dump(self.config, handle, indent=4, sort_keys=True)

65 |

66 | writer_dir = os.path.join(cfg_trainer['log_dir'], run_name)

67 | self.writer = tensorboard.SummaryWriter(writer_dir)

68 | self.html_results = HTML(web_dir=config['trainer']['save_dir'], exp_name=config['experim_name'],

69 | save_name=config['experim_name'], config=config, resume=resume)

70 |

71 | if resume: self._resume_checkpoint(resume)

72 |

73 | def _get_available_devices(self, n_gpu):

74 | sys_gpu = torch.cuda.device_count()

75 | if sys_gpu == 0:

76 | self.logger.warning('No GPUs detected, using the CPU')

77 | n_gpu = 0

78 | elif n_gpu > sys_gpu:

79 | self.logger.warning(f'Nbr of GPU requested is {n_gpu} but only {sys_gpu} are available')

80 | n_gpu = sys_gpu

81 |

82 | device = torch.device('cuda:0' if n_gpu > 0 else 'cpu')

83 | self.logger.info(f'Detected GPUs: {sys_gpu} Requested: {n_gpu}')

84 | available_gpus = list(range(n_gpu))

85 | return device, available_gpus

86 |

87 |

88 |

89 | def train(self):

90 | for epoch in range(self.start_epoch, self.epochs+1):

91 | results = self._train_epoch(epoch)

92 | if self.do_validation and epoch % self.config['trainer']['val_per_epochs'] == 0:

93 | results = self._valid_epoch(epoch)

94 | self.logger.info('\n\n')

95 | for k, v in results.items():

96 | self.logger.info(f' {str(k):15s}: {v}')

97 |

98 | if self.train_logger is not None:

99 | log = {'epoch' : epoch, **results}

100 | self.train_logger.add_entry(log)

101 |

102 | # CHECKING IF THIS IS THE BEST MODEL (ONLY FOR VAL)

103 | if self.mnt_mode != 'off' and epoch % self.config['trainer']['val_per_epochs'] == 0:

104 | try:

105 | if self.mnt_mode == 'min': self.improved = (log[self.mnt_metric] < self.mnt_best)

106 | else: self.improved = (log[self.mnt_metric] > self.mnt_best)

107 | except KeyError:

108 | self.logger.warning(f'The metrics being tracked ({self.mnt_metric}) has not been calculated. Training stops.')

109 | break

110 |

111 | if self.improved:

112 | self.mnt_best = log[self.mnt_metric]

113 | self.not_improved_count = 0

114 | else:

115 | self.not_improved_count += 1

116 |

117 | if self.not_improved_count > self.early_stoping:

118 | self.logger.info(f'\nPerformance didn\'t improve for {self.early_stoping} epochs')

119 | self.logger.warning('Training Stoped')

120 | break

121 |

122 | # SAVE CHECKPOINT

123 | if epoch % self.save_period == 0:

124 | self._save_checkpoint(epoch, save_best=self.improved)

125 | self.html_results.save()

126 |

127 |

128 | def _save_checkpoint(self, epoch, save_best=False):

129 | state = {

130 | 'arch': type(self.model).__name__,

131 | 'epoch': epoch,

132 | 'state_dict': self.model.state_dict(),

133 | 'monitor_best': self.mnt_best,

134 | 'config': self.config

135 | }

136 |

137 | filename = os.path.join(self.checkpoint_dir, f'checkpoint.pth')

138 | self.logger.info(f'\nSaving a checkpoint: {filename} ...')

139 | torch.save(state, filename)

140 |

141 | if save_best:

142 | filename = os.path.join(self.checkpoint_dir, f'best_model.pth')

143 | torch.save(state, filename)

144 | self.logger.info("Saving current best: best_model.pth")

145 |

146 | def _resume_checkpoint(self, resume_path):

147 | self.logger.info(f'Loading checkpoint : {resume_path}')

148 | checkpoint = torch.load(resume_path)

149 | self.start_epoch = checkpoint['epoch'] + 1

150 | self.mnt_best = checkpoint['monitor_best']

151 | self.not_improved_count = 0

152 |

153 | try:

154 | self.model.load_state_dict(checkpoint['state_dict'])

155 | except Exception as e:

156 | print(f'Error when loading: {e}')

157 | self.model.load_state_dict(checkpoint['state_dict'], strict=False)

158 |

159 | if "logger" in checkpoint.keys():

160 | self.train_logger = checkpoint['logger']

161 | self.logger.info(f'Checkpoint <{resume_path}> (epoch {self.start_epoch}) was loaded')

162 |

163 | def _train_epoch(self, epoch):

164 | raise NotImplementedError

165 |

166 | def _valid_epoch(self, epoch):

167 | raise NotImplementedError

168 |

169 | def _eval_metrics(self, output, target):

170 | raise NotImplementedError

171 |

--------------------------------------------------------------------------------

/configs/config.json:

--------------------------------------------------------------------------------

1 | {

2 | "name": "CCT",

3 | "experim_name": "CCT",

4 | "n_gpu": 1,

5 | "n_labeled_examples": 1464,

6 | "diff_lrs": true,

7 | "ramp_up": 0.1,

8 | "unsupervised_w": 30,

9 | "ignore_index": 255,

10 | "lr_scheduler": "Poly",

11 | "use_weak_lables":false,

12 | "weakly_loss_w": 0.4,

13 | "pretrained": true,

14 |

15 | "model":{

16 | "supervised": false,

17 | "semi": true,

18 | "supervised_w": 1,

19 |

20 | "sup_loss": "CE",

21 | "un_loss": "MSE",

22 |

23 | "softmax_temp": 1,

24 | "aux_constraint": false,

25 | "aux_constraint_w": 1,

26 | "confidence_masking": false,

27 | "confidence_th": 0.5,

28 |

29 | "drop": 6,

30 | "drop_rate": 0.5,

31 | "spatial": true,

32 |

33 | "cutout": 6,

34 | "erase": 0.4,

35 |

36 | "vat": 2,

37 | "xi": 1e-6,

38 | "eps": 2.0,

39 |

40 | "context_masking": 2,

41 | "object_masking": 2,

42 | "feature_drop": 6,

43 |

44 | "feature_noise": 6,

45 | "uniform_range": 0.3

46 | },

47 |

48 |

49 | "optimizer": {

50 | "type": "SGD",

51 | "args":{

52 | "lr": 1e-2,

53 | "weight_decay": 1e-4,

54 | "momentum": 0.9

55 | }

56 | },

57 |

58 |

59 | "train_supervised": {

60 | "data_dir": "VOCtrainval_11-May-2012",

61 | "batch_size": 10,

62 | "crop_size": 320,

63 | "shuffle": true,

64 | "base_size": 400,

65 | "scale": true,

66 | "augment": true,

67 | "flip": true,

68 | "rotate": false,

69 | "blur": false,

70 | "split": "train_supervised",

71 | "num_workers": 8

72 | },

73 |

74 | "train_unsupervised": {

75 | "data_dir": "VOCtrainval_11-May-2012",

76 | "weak_labels_output": "pseudo_labels/result/pseudo_labels",

77 | "batch_size": 10,

78 | "crop_size": 320,

79 | "shuffle": true,

80 | "base_size": 400,

81 | "scale": true,

82 | "augment": true,

83 | "flip": true,

84 | "rotate": false,

85 | "blur": false,

86 | "split": "train_unsupervised",

87 | "num_workers": 8

88 | },

89 |

90 | "val_loader": {

91 | "data_dir": "VOCtrainval_11-May-2012",

92 | "batch_size": 1,

93 | "val": true,

94 | "split": "val",

95 | "shuffle": false,

96 | "num_workers": 4

97 | },

98 |

99 | "trainer": {

100 | "epochs": 80,

101 | "save_dir": "saved/",

102 | "save_period": 5,

103 |

104 | "monitor": "max Mean_IoU",

105 | "early_stop": 10,

106 |

107 | "tensorboardX": true,

108 | "log_dir": "saved/",

109 | "log_per_iter": 20,

110 |

111 | "val": true,

112 | "val_per_epochs": 5

113 | }

114 | }

115 |

--------------------------------------------------------------------------------

/dataloaders/__init__.py:

--------------------------------------------------------------------------------

1 | from .voc import VOC

--------------------------------------------------------------------------------

/dataloaders/voc.py:

--------------------------------------------------------------------------------

1 | from base import BaseDataSet, BaseDataLoader

2 | from utils import pallete

3 | import numpy as np

4 | import os

5 | import scipy

6 | import torch

7 | from PIL import Image

8 | import cv2

9 | from torch.utils.data import Dataset

10 | from torchvision import transforms

11 | import json

12 |

13 | class VOCDataset(BaseDataSet):

14 | def __init__(self, **kwargs):

15 | self.num_classes = 21

16 |

17 | self.palette = pallete.get_voc_pallete(self.num_classes)

18 | super(VOCDataset, self).__init__(**kwargs)

19 |

20 | def _set_files(self):

21 | self.root = os.path.join(self.root, 'VOCdevkit/VOC2012')

22 | if self.split == "val":

23 | file_list = os.path.join("dataloaders/voc_splits", f"{self.split}" + ".txt")

24 | elif self.split in ["train_supervised", "train_unsupervised"]:

25 | file_list = os.path.join("dataloaders/voc_splits", f"{self.n_labeled_examples}_{self.split}" + ".txt")

26 | else:

27 | raise ValueError(f"Invalid split name {self.split}")

28 |

29 | file_list = [line.rstrip().split(' ') for line in tuple(open(file_list, "r"))]

30 | self.files, self.labels = list(zip(*file_list))

31 |

32 | def _load_data(self, index):

33 | image_path = os.path.join(self.root, self.files[index][1:])

34 | image = np.asarray(Image.open(image_path), dtype=np.float32)

35 | image_id = self.files[index].split("/")[-1].split(".")[0]

36 | if self.use_weak_lables:

37 | label_path = os.path.join(self.weak_labels_output, image_id+".png")

38 | else:

39 | label_path = os.path.join(self.root, self.labels[index][1:])

40 | label = np.asarray(Image.open(label_path), dtype=np.int32)

41 | return image, label, image_id

42 |

43 | class VOC(BaseDataLoader):

44 | def __init__(self, kwargs):

45 |

46 | self.MEAN = [0.485, 0.456, 0.406]

47 | self.STD = [0.229, 0.224, 0.225]

48 | self.batch_size = kwargs.pop('batch_size')

49 | kwargs['mean'] = self.MEAN

50 | kwargs['std'] = self.STD

51 | kwargs['ignore_index'] = 255

52 | try:

53 | shuffle = kwargs.pop('shuffle')

54 | except:

55 | shuffle = False

56 | num_workers = kwargs.pop('num_workers')

57 |

58 | self.dataset = VOCDataset(**kwargs)

59 |

60 | super(VOC, self).__init__(self.dataset, self.batch_size, shuffle, num_workers, val_split=None)

61 |

--------------------------------------------------------------------------------

/dataloaders/voc_splits/100_train_supervised.txt:

--------------------------------------------------------------------------------

1 | /JPEGImages/2007_000032.jpg /SegmentationClassAug/2007_000032.png

2 | /JPEGImages/2007_000039.jpg /SegmentationClassAug/2007_000039.png

3 | /JPEGImages/2007_000063.jpg /SegmentationClassAug/2007_000063.png

4 | /JPEGImages/2007_000068.jpg /SegmentationClassAug/2007_000068.png

5 | /JPEGImages/2007_000121.jpg /SegmentationClassAug/2007_000121.png

6 | /JPEGImages/2007_000170.jpg /SegmentationClassAug/2007_000170.png

7 | /JPEGImages/2007_000241.jpg /SegmentationClassAug/2007_000241.png

8 | /JPEGImages/2007_000243.jpg /SegmentationClassAug/2007_000243.png

9 | /JPEGImages/2007_000250.jpg /SegmentationClassAug/2007_000250.png

10 | /JPEGImages/2007_000256.jpg /SegmentationClassAug/2007_000256.png

11 | /JPEGImages/2007_000333.jpg /SegmentationClassAug/2007_000333.png

12 | /JPEGImages/2007_000363.jpg /SegmentationClassAug/2007_000363.png

13 | /JPEGImages/2007_000364.jpg /SegmentationClassAug/2007_000364.png

14 | /JPEGImages/2007_000392.jpg /SegmentationClassAug/2007_000392.png

15 | /JPEGImages/2007_000480.jpg /SegmentationClassAug/2007_000480.png

16 | /JPEGImages/2007_000504.jpg /SegmentationClassAug/2007_000504.png

17 | /JPEGImages/2007_000515.jpg /SegmentationClassAug/2007_000515.png

18 | /JPEGImages/2007_000528.jpg /SegmentationClassAug/2007_000528.png

19 | /JPEGImages/2007_000549.jpg /SegmentationClassAug/2007_000549.png

20 | /JPEGImages/2007_000584.jpg /SegmentationClassAug/2007_000584.png

21 | /JPEGImages/2007_000645.jpg /SegmentationClassAug/2007_000645.png

22 | /JPEGImages/2007_000648.jpg /SegmentationClassAug/2007_000648.png

23 | /JPEGImages/2007_000713.jpg /SegmentationClassAug/2007_000713.png

24 | /JPEGImages/2007_000720.jpg /SegmentationClassAug/2007_000720.png

25 | /JPEGImages/2007_000733.jpg /SegmentationClassAug/2007_000733.png

26 | /JPEGImages/2007_000738.jpg /SegmentationClassAug/2007_000738.png

27 | /JPEGImages/2007_000768.jpg /SegmentationClassAug/2007_000768.png

28 | /JPEGImages/2007_000793.jpg /SegmentationClassAug/2007_000793.png

29 | /JPEGImages/2007_000822.jpg /SegmentationClassAug/2007_000822.png

30 | /JPEGImages/2007_000836.jpg /SegmentationClassAug/2007_000836.png

31 | /JPEGImages/2007_000876.jpg /SegmentationClassAug/2007_000876.png

32 | /JPEGImages/2007_000904.jpg /SegmentationClassAug/2007_000904.png

33 | /JPEGImages/2007_001027.jpg /SegmentationClassAug/2007_001027.png

34 | /JPEGImages/2007_001073.jpg /SegmentationClassAug/2007_001073.png

35 | /JPEGImages/2007_001149.jpg /SegmentationClassAug/2007_001149.png

36 | /JPEGImages/2007_001185.jpg /SegmentationClassAug/2007_001185.png

37 | /JPEGImages/2007_001225.jpg /SegmentationClassAug/2007_001225.png

38 | /JPEGImages/2007_001397.jpg /SegmentationClassAug/2007_001397.png

39 | /JPEGImages/2007_001416.jpg /SegmentationClassAug/2007_001416.png

40 | /JPEGImages/2007_001420.jpg /SegmentationClassAug/2007_001420.png

41 | /JPEGImages/2007_001439.jpg /SegmentationClassAug/2007_001439.png

42 | /JPEGImages/2007_001487.jpg /SegmentationClassAug/2007_001487.png

43 | /JPEGImages/2007_001595.jpg /SegmentationClassAug/2007_001595.png

44 | /JPEGImages/2007_001602.jpg /SegmentationClassAug/2007_001602.png

45 | /JPEGImages/2007_001609.jpg /SegmentationClassAug/2007_001609.png

46 | /JPEGImages/2007_001698.jpg /SegmentationClassAug/2007_001698.png

47 | /JPEGImages/2007_001704.jpg /SegmentationClassAug/2007_001704.png

48 | /JPEGImages/2007_001709.jpg /SegmentationClassAug/2007_001709.png

49 | /JPEGImages/2007_001724.jpg /SegmentationClassAug/2007_001724.png

50 | /JPEGImages/2007_001764.jpg /SegmentationClassAug/2007_001764.png

51 | /JPEGImages/2007_001825.jpg /SegmentationClassAug/2007_001825.png

52 | /JPEGImages/2007_001834.jpg /SegmentationClassAug/2007_001834.png

53 | /JPEGImages/2007_001857.jpg /SegmentationClassAug/2007_001857.png

54 | /JPEGImages/2007_001872.jpg /SegmentationClassAug/2007_001872.png

55 | /JPEGImages/2007_001901.jpg /SegmentationClassAug/2007_001901.png

56 | /JPEGImages/2007_001917.jpg /SegmentationClassAug/2007_001917.png

57 | /JPEGImages/2007_001960.jpg /SegmentationClassAug/2007_001960.png

58 | /JPEGImages/2007_002024.jpg /SegmentationClassAug/2007_002024.png

59 | /JPEGImages/2007_002055.jpg /SegmentationClassAug/2007_002055.png

60 | /JPEGImages/2007_002088.jpg /SegmentationClassAug/2007_002088.png

61 | /JPEGImages/2007_002099.jpg /SegmentationClassAug/2007_002099.png

62 | /JPEGImages/2007_002105.jpg /SegmentationClassAug/2007_002105.png

63 | /JPEGImages/2007_002212.jpg /SegmentationClassAug/2007_002212.png

64 | /JPEGImages/2007_002216.jpg /SegmentationClassAug/2007_002216.png

65 | /JPEGImages/2007_002227.jpg /SegmentationClassAug/2007_002227.png

66 | /JPEGImages/2007_002234.jpg /SegmentationClassAug/2007_002234.png

67 | /JPEGImages/2007_002281.jpg /SegmentationClassAug/2007_002281.png

68 | /JPEGImages/2007_002361.jpg /SegmentationClassAug/2007_002361.png

69 | /JPEGImages/2007_002368.jpg /SegmentationClassAug/2007_002368.png

70 | /JPEGImages/2007_002370.jpg /SegmentationClassAug/2007_002370.png

71 | /JPEGImages/2007_002462.jpg /SegmentationClassAug/2007_002462.png

72 | /JPEGImages/2007_002760.jpg /SegmentationClassAug/2007_002760.png

73 | /JPEGImages/2007_002845.jpg /SegmentationClassAug/2007_002845.png

74 | /JPEGImages/2007_002896.jpg /SegmentationClassAug/2007_002896.png

75 | /JPEGImages/2007_002953.jpg /SegmentationClassAug/2007_002953.png

76 | /JPEGImages/2007_002967.jpg /SegmentationClassAug/2007_002967.png

77 | /JPEGImages/2007_003178.jpg /SegmentationClassAug/2007_003178.png

78 | /JPEGImages/2007_003189.jpg /SegmentationClassAug/2007_003189.png

79 | /JPEGImages/2007_003190.jpg /SegmentationClassAug/2007_003190.png

80 | /JPEGImages/2007_003207.jpg /SegmentationClassAug/2007_003207.png

81 | /JPEGImages/2007_003251.jpg /SegmentationClassAug/2007_003251.png

82 | /JPEGImages/2007_003286.jpg /SegmentationClassAug/2007_003286.png

83 | /JPEGImages/2007_003525.jpg /SegmentationClassAug/2007_003525.png

84 | /JPEGImages/2007_003593.jpg /SegmentationClassAug/2007_003593.png

85 | /JPEGImages/2007_003604.jpg /SegmentationClassAug/2007_003604.png

86 | /JPEGImages/2007_003788.jpg /SegmentationClassAug/2007_003788.png

87 | /JPEGImages/2007_003815.jpg /SegmentationClassAug/2007_003815.png

88 | /JPEGImages/2007_004081.jpg /SegmentationClassAug/2007_004081.png

89 | /JPEGImages/2007_004627.jpg /SegmentationClassAug/2007_004627.png

90 | /JPEGImages/2007_004707.jpg /SegmentationClassAug/2007_004707.png

91 | /JPEGImages/2007_005210.jpg /SegmentationClassAug/2007_005210.png

92 | /JPEGImages/2007_005273.jpg /SegmentationClassAug/2007_005273.png

93 | /JPEGImages/2007_005902.jpg /SegmentationClassAug/2007_005902.png

94 | /JPEGImages/2007_006530.jpg /SegmentationClassAug/2007_006530.png

95 | /JPEGImages/2007_006581.jpg /SegmentationClassAug/2007_006581.png

96 | /JPEGImages/2007_006605.jpg /SegmentationClassAug/2007_006605.png

97 | /JPEGImages/2007_007432.jpg /SegmentationClassAug/2007_007432.png

98 | /JPEGImages/2007_009709.jpg /SegmentationClassAug/2007_009709.png

99 | /JPEGImages/2007_009788.jpg /SegmentationClassAug/2007_009788.png

100 | /JPEGImages/2008_000015.jpg /SegmentationClassAug/2008_000015.png

101 |

--------------------------------------------------------------------------------

/dataloaders/voc_splits/200_train_supervised.txt:

--------------------------------------------------------------------------------

1 | /JPEGImages/2007_000032.jpg /SegmentationClassAug/2007_000032.png

2 | /JPEGImages/2007_000039.jpg /SegmentationClassAug/2007_000039.png

3 | /JPEGImages/2007_000063.jpg /SegmentationClassAug/2007_000063.png

4 | /JPEGImages/2007_000068.jpg /SegmentationClassAug/2007_000068.png

5 | /JPEGImages/2007_000121.jpg /SegmentationClassAug/2007_000121.png

6 | /JPEGImages/2007_000170.jpg /SegmentationClassAug/2007_000170.png

7 | /JPEGImages/2007_000241.jpg /SegmentationClassAug/2007_000241.png

8 | /JPEGImages/2007_000243.jpg /SegmentationClassAug/2007_000243.png

9 | /JPEGImages/2007_000250.jpg /SegmentationClassAug/2007_000250.png

10 | /JPEGImages/2007_000256.jpg /SegmentationClassAug/2007_000256.png

11 | /JPEGImages/2007_000333.jpg /SegmentationClassAug/2007_000333.png

12 | /JPEGImages/2007_000363.jpg /SegmentationClassAug/2007_000363.png

13 | /JPEGImages/2007_000364.jpg /SegmentationClassAug/2007_000364.png

14 | /JPEGImages/2007_000392.jpg /SegmentationClassAug/2007_000392.png

15 | /JPEGImages/2007_000480.jpg /SegmentationClassAug/2007_000480.png

16 | /JPEGImages/2007_000504.jpg /SegmentationClassAug/2007_000504.png

17 | /JPEGImages/2007_000515.jpg /SegmentationClassAug/2007_000515.png

18 | /JPEGImages/2007_000528.jpg /SegmentationClassAug/2007_000528.png

19 | /JPEGImages/2007_000549.jpg /SegmentationClassAug/2007_000549.png

20 | /JPEGImages/2007_000584.jpg /SegmentationClassAug/2007_000584.png

21 | /JPEGImages/2007_000645.jpg /SegmentationClassAug/2007_000645.png

22 | /JPEGImages/2007_000648.jpg /SegmentationClassAug/2007_000648.png

23 | /JPEGImages/2007_000713.jpg /SegmentationClassAug/2007_000713.png

24 | /JPEGImages/2007_000720.jpg /SegmentationClassAug/2007_000720.png

25 | /JPEGImages/2007_000733.jpg /SegmentationClassAug/2007_000733.png

26 | /JPEGImages/2007_000738.jpg /SegmentationClassAug/2007_000738.png

27 | /JPEGImages/2007_000768.jpg /SegmentationClassAug/2007_000768.png

28 | /JPEGImages/2007_000793.jpg /SegmentationClassAug/2007_000793.png

29 | /JPEGImages/2007_000822.jpg /SegmentationClassAug/2007_000822.png

30 | /JPEGImages/2007_000836.jpg /SegmentationClassAug/2007_000836.png

31 | /JPEGImages/2007_000876.jpg /SegmentationClassAug/2007_000876.png

32 | /JPEGImages/2007_000904.jpg /SegmentationClassAug/2007_000904.png

33 | /JPEGImages/2007_001027.jpg /SegmentationClassAug/2007_001027.png

34 | /JPEGImages/2007_001073.jpg /SegmentationClassAug/2007_001073.png

35 | /JPEGImages/2007_001149.jpg /SegmentationClassAug/2007_001149.png

36 | /JPEGImages/2007_001185.jpg /SegmentationClassAug/2007_001185.png

37 | /JPEGImages/2007_001225.jpg /SegmentationClassAug/2007_001225.png

38 | /JPEGImages/2007_001340.jpg /SegmentationClassAug/2007_001340.png

39 | /JPEGImages/2007_001397.jpg /SegmentationClassAug/2007_001397.png

40 | /JPEGImages/2007_001416.jpg /SegmentationClassAug/2007_001416.png

41 | /JPEGImages/2007_001420.jpg /SegmentationClassAug/2007_001420.png

42 | /JPEGImages/2007_001439.jpg /SegmentationClassAug/2007_001439.png

43 | /JPEGImages/2007_001487.jpg /SegmentationClassAug/2007_001487.png

44 | /JPEGImages/2007_001595.jpg /SegmentationClassAug/2007_001595.png

45 | /JPEGImages/2007_001602.jpg /SegmentationClassAug/2007_001602.png

46 | /JPEGImages/2007_001609.jpg /SegmentationClassAug/2007_001609.png

47 | /JPEGImages/2007_001698.jpg /SegmentationClassAug/2007_001698.png

48 | /JPEGImages/2007_001704.jpg /SegmentationClassAug/2007_001704.png

49 | /JPEGImages/2007_001709.jpg /SegmentationClassAug/2007_001709.png

50 | /JPEGImages/2007_001724.jpg /SegmentationClassAug/2007_001724.png

51 | /JPEGImages/2007_001764.jpg /SegmentationClassAug/2007_001764.png

52 | /JPEGImages/2007_001825.jpg /SegmentationClassAug/2007_001825.png

53 | /JPEGImages/2007_001834.jpg /SegmentationClassAug/2007_001834.png

54 | /JPEGImages/2007_001857.jpg /SegmentationClassAug/2007_001857.png

55 | /JPEGImages/2007_001872.jpg /SegmentationClassAug/2007_001872.png

56 | /JPEGImages/2007_001901.jpg /SegmentationClassAug/2007_001901.png

57 | /JPEGImages/2007_001917.jpg /SegmentationClassAug/2007_001917.png

58 | /JPEGImages/2007_001960.jpg /SegmentationClassAug/2007_001960.png

59 | /JPEGImages/2007_002024.jpg /SegmentationClassAug/2007_002024.png

60 | /JPEGImages/2007_002055.jpg /SegmentationClassAug/2007_002055.png

61 | /JPEGImages/2007_002088.jpg /SegmentationClassAug/2007_002088.png

62 | /JPEGImages/2007_002099.jpg /SegmentationClassAug/2007_002099.png

63 | /JPEGImages/2007_002105.jpg /SegmentationClassAug/2007_002105.png

64 | /JPEGImages/2007_002107.jpg /SegmentationClassAug/2007_002107.png

65 | /JPEGImages/2007_002120.jpg /SegmentationClassAug/2007_002120.png

66 | /JPEGImages/2007_002142.jpg /SegmentationClassAug/2007_002142.png

67 | /JPEGImages/2007_002198.jpg /SegmentationClassAug/2007_002198.png

68 | /JPEGImages/2007_002212.jpg /SegmentationClassAug/2007_002212.png

69 | /JPEGImages/2007_002216.jpg /SegmentationClassAug/2007_002216.png

70 | /JPEGImages/2007_002227.jpg /SegmentationClassAug/2007_002227.png

71 | /JPEGImages/2007_002234.jpg /SegmentationClassAug/2007_002234.png

72 | /JPEGImages/2007_002273.jpg /SegmentationClassAug/2007_002273.png

73 | /JPEGImages/2007_002281.jpg /SegmentationClassAug/2007_002281.png

74 | /JPEGImages/2007_002293.jpg /SegmentationClassAug/2007_002293.png

75 | /JPEGImages/2007_002361.jpg /SegmentationClassAug/2007_002361.png

76 | /JPEGImages/2007_002368.jpg /SegmentationClassAug/2007_002368.png

77 | /JPEGImages/2007_002370.jpg /SegmentationClassAug/2007_002370.png

78 | /JPEGImages/2007_002403.jpg /SegmentationClassAug/2007_002403.png

79 | /JPEGImages/2007_002462.jpg /SegmentationClassAug/2007_002462.png

80 | /JPEGImages/2007_002488.jpg /SegmentationClassAug/2007_002488.png

81 | /JPEGImages/2007_002545.jpg /SegmentationClassAug/2007_002545.png

82 | /JPEGImages/2007_002611.jpg /SegmentationClassAug/2007_002611.png

83 | /JPEGImages/2007_002669.jpg /SegmentationClassAug/2007_002669.png

84 | /JPEGImages/2007_002760.jpg /SegmentationClassAug/2007_002760.png

85 | /JPEGImages/2007_002789.jpg /SegmentationClassAug/2007_002789.png

86 | /JPEGImages/2007_002845.jpg /SegmentationClassAug/2007_002845.png

87 | /JPEGImages/2007_002896.jpg /SegmentationClassAug/2007_002896.png

88 | /JPEGImages/2007_002953.jpg /SegmentationClassAug/2007_002953.png

89 | /JPEGImages/2007_002967.jpg /SegmentationClassAug/2007_002967.png

90 | /JPEGImages/2007_003000.jpg /SegmentationClassAug/2007_003000.png

91 | /JPEGImages/2007_003178.jpg /SegmentationClassAug/2007_003178.png

92 | /JPEGImages/2007_003189.jpg /SegmentationClassAug/2007_003189.png

93 | /JPEGImages/2007_003190.jpg /SegmentationClassAug/2007_003190.png

94 | /JPEGImages/2007_003207.jpg /SegmentationClassAug/2007_003207.png

95 | /JPEGImages/2007_003251.jpg /SegmentationClassAug/2007_003251.png

96 | /JPEGImages/2007_003267.jpg /SegmentationClassAug/2007_003267.png

97 | /JPEGImages/2007_003286.jpg /SegmentationClassAug/2007_003286.png

98 | /JPEGImages/2007_003330.jpg /SegmentationClassAug/2007_003330.png

99 | /JPEGImages/2007_003451.jpg /SegmentationClassAug/2007_003451.png

100 | /JPEGImages/2007_003525.jpg /SegmentationClassAug/2007_003525.png

101 | /JPEGImages/2007_003565.jpg /SegmentationClassAug/2007_003565.png

102 | /JPEGImages/2007_003593.jpg /SegmentationClassAug/2007_003593.png

103 | /JPEGImages/2007_003604.jpg /SegmentationClassAug/2007_003604.png

104 | /JPEGImages/2007_003668.jpg /SegmentationClassAug/2007_003668.png

105 | /JPEGImages/2007_003715.jpg /SegmentationClassAug/2007_003715.png

106 | /JPEGImages/2007_003778.jpg /SegmentationClassAug/2007_003778.png

107 | /JPEGImages/2007_003788.jpg /SegmentationClassAug/2007_003788.png

108 | /JPEGImages/2007_003815.jpg /SegmentationClassAug/2007_003815.png

109 | /JPEGImages/2007_003876.jpg /SegmentationClassAug/2007_003876.png

110 | /JPEGImages/2007_003889.jpg /SegmentationClassAug/2007_003889.png

111 | /JPEGImages/2007_003910.jpg /SegmentationClassAug/2007_003910.png

112 | /JPEGImages/2007_004003.jpg /SegmentationClassAug/2007_004003.png

113 | /JPEGImages/2007_004009.jpg /SegmentationClassAug/2007_004009.png

114 | /JPEGImages/2007_004065.jpg /SegmentationClassAug/2007_004065.png

115 | /JPEGImages/2007_004081.jpg /SegmentationClassAug/2007_004081.png

116 | /JPEGImages/2007_004166.jpg /SegmentationClassAug/2007_004166.png

117 | /JPEGImages/2007_004423.jpg /SegmentationClassAug/2007_004423.png

118 | /JPEGImages/2007_004481.jpg /SegmentationClassAug/2007_004481.png

119 | /JPEGImages/2007_004500.jpg /SegmentationClassAug/2007_004500.png

120 | /JPEGImages/2007_004537.jpg /SegmentationClassAug/2007_004537.png

121 | /JPEGImages/2007_004627.jpg /SegmentationClassAug/2007_004627.png

122 | /JPEGImages/2007_004663.jpg /SegmentationClassAug/2007_004663.png

123 | /JPEGImages/2007_004705.jpg /SegmentationClassAug/2007_004705.png

124 | /JPEGImages/2007_004707.jpg /SegmentationClassAug/2007_004707.png

125 | /JPEGImages/2007_004768.jpg /SegmentationClassAug/2007_004768.png

126 | /JPEGImages/2007_004810.jpg /SegmentationClassAug/2007_004810.png

127 | /JPEGImages/2007_004830.jpg /SegmentationClassAug/2007_004830.png

128 | /JPEGImages/2007_004948.jpg /SegmentationClassAug/2007_004948.png

129 | /JPEGImages/2007_004951.jpg /SegmentationClassAug/2007_004951.png

130 | /JPEGImages/2007_004998.jpg /SegmentationClassAug/2007_004998.png

131 | /JPEGImages/2007_005124.jpg /SegmentationClassAug/2007_005124.png

132 | /JPEGImages/2007_005130.jpg /SegmentationClassAug/2007_005130.png

133 | /JPEGImages/2007_005210.jpg /SegmentationClassAug/2007_005210.png

134 | /JPEGImages/2007_005212.jpg /SegmentationClassAug/2007_005212.png

135 | /JPEGImages/2007_005248.jpg /SegmentationClassAug/2007_005248.png

136 | /JPEGImages/2007_005262.jpg /SegmentationClassAug/2007_005262.png

137 | /JPEGImages/2007_005264.jpg /SegmentationClassAug/2007_005264.png

138 | /JPEGImages/2007_005266.jpg /SegmentationClassAug/2007_005266.png

139 | /JPEGImages/2007_005273.jpg /SegmentationClassAug/2007_005273.png

140 | /JPEGImages/2007_005314.jpg /SegmentationClassAug/2007_005314.png

141 | /JPEGImages/2007_005360.jpg /SegmentationClassAug/2007_005360.png

142 | /JPEGImages/2007_005647.jpg /SegmentationClassAug/2007_005647.png

143 | /JPEGImages/2007_005688.jpg /SegmentationClassAug/2007_005688.png

144 | /JPEGImages/2007_005878.jpg /SegmentationClassAug/2007_005878.png

145 | /JPEGImages/2007_005902.jpg /SegmentationClassAug/2007_005902.png

146 | /JPEGImages/2007_005951.jpg /SegmentationClassAug/2007_005951.png

147 | /JPEGImages/2007_006066.jpg /SegmentationClassAug/2007_006066.png

148 | /JPEGImages/2007_006134.jpg /SegmentationClassAug/2007_006134.png

149 | /JPEGImages/2007_006136.jpg /SegmentationClassAug/2007_006136.png

150 | /JPEGImages/2007_006151.jpg /SegmentationClassAug/2007_006151.png

151 | /JPEGImages/2007_006254.jpg /SegmentationClassAug/2007_006254.png

152 | /JPEGImages/2007_006281.jpg /SegmentationClassAug/2007_006281.png

153 | /JPEGImages/2007_006303.jpg /SegmentationClassAug/2007_006303.png

154 | /JPEGImages/2007_006317.jpg /SegmentationClassAug/2007_006317.png

155 | /JPEGImages/2007_006400.jpg /SegmentationClassAug/2007_006400.png

156 | /JPEGImages/2007_006409.jpg /SegmentationClassAug/2007_006409.png

157 | /JPEGImages/2007_006490.jpg /SegmentationClassAug/2007_006490.png

158 | /JPEGImages/2007_006530.jpg /SegmentationClassAug/2007_006530.png

159 | /JPEGImages/2007_006581.jpg /SegmentationClassAug/2007_006581.png

160 | /JPEGImages/2007_006605.jpg /SegmentationClassAug/2007_006605.png

161 | /JPEGImages/2007_006641.jpg /SegmentationClassAug/2007_006641.png

162 | /JPEGImages/2007_006660.jpg /SegmentationClassAug/2007_006660.png

163 | /JPEGImages/2007_006699.jpg /SegmentationClassAug/2007_006699.png

164 | /JPEGImages/2007_006704.jpg /SegmentationClassAug/2007_006704.png

165 | /JPEGImages/2007_006832.jpg /SegmentationClassAug/2007_006832.png

166 | /JPEGImages/2007_006899.jpg /SegmentationClassAug/2007_006899.png

167 | /JPEGImages/2007_006900.jpg /SegmentationClassAug/2007_006900.png

168 | /JPEGImages/2007_007098.jpg /SegmentationClassAug/2007_007098.png

169 | /JPEGImages/2007_007250.jpg /SegmentationClassAug/2007_007250.png

170 | /JPEGImages/2007_007398.jpg /SegmentationClassAug/2007_007398.png

171 | /JPEGImages/2007_007432.jpg /SegmentationClassAug/2007_007432.png

172 | /JPEGImages/2007_007530.jpg /SegmentationClassAug/2007_007530.png

173 | /JPEGImages/2007_007585.jpg /SegmentationClassAug/2007_007585.png

174 | /JPEGImages/2007_007890.jpg /SegmentationClassAug/2007_007890.png

175 | /JPEGImages/2007_007930.jpg /SegmentationClassAug/2007_007930.png

176 | /JPEGImages/2007_008140.jpg /SegmentationClassAug/2007_008140.png

177 | /JPEGImages/2007_008203.jpg /SegmentationClassAug/2007_008203.png

178 | /JPEGImages/2007_008468.jpg /SegmentationClassAug/2007_008468.png

179 | /JPEGImages/2007_008948.jpg /SegmentationClassAug/2007_008948.png

180 | /JPEGImages/2007_009216.jpg /SegmentationClassAug/2007_009216.png

181 | /JPEGImages/2007_009550.jpg /SegmentationClassAug/2007_009550.png

182 | /JPEGImages/2007_009605.jpg /SegmentationClassAug/2007_009605.png

183 | /JPEGImages/2007_009709.jpg /SegmentationClassAug/2007_009709.png

184 | /JPEGImages/2007_009788.jpg /SegmentationClassAug/2007_009788.png

185 | /JPEGImages/2007_009889.jpg /SegmentationClassAug/2007_009889.png

186 | /JPEGImages/2007_009899.jpg /SegmentationClassAug/2007_009899.png

187 | /JPEGImages/2008_000015.jpg /SegmentationClassAug/2008_000015.png

188 | /JPEGImages/2008_000043.jpg /SegmentationClassAug/2008_000043.png

189 | /JPEGImages/2008_000067.jpg /SegmentationClassAug/2008_000067.png

190 | /JPEGImages/2008_000133.jpg /SegmentationClassAug/2008_000133.png

191 | /JPEGImages/2008_000154.jpg /SegmentationClassAug/2008_000154.png

192 | /JPEGImages/2008_000188.jpg /SegmentationClassAug/2008_000188.png

193 | /JPEGImages/2008_000191.jpg /SegmentationClassAug/2008_000191.png

194 | /JPEGImages/2008_000194.jpg /SegmentationClassAug/2008_000194.png

195 | /JPEGImages/2008_000196.jpg /SegmentationClassAug/2008_000196.png

196 | /JPEGImages/2008_000272.jpg /SegmentationClassAug/2008_000272.png

197 | /JPEGImages/2008_000703.jpg /SegmentationClassAug/2008_000703.png

198 | /JPEGImages/2008_001225.jpg /SegmentationClassAug/2008_001225.png

199 | /JPEGImages/2008_001405.jpg /SegmentationClassAug/2008_001405.png

200 | /JPEGImages/2008_001744.jpg /SegmentationClassAug/2008_001744.png

201 |

--------------------------------------------------------------------------------

/dataloaders/voc_splits/300_train_supervised.txt:

--------------------------------------------------------------------------------

1 | /JPEGImages/2007_000032.jpg /SegmentationClassAug/2007_000032.png

2 | /JPEGImages/2007_000039.jpg /SegmentationClassAug/2007_000039.png

3 | /JPEGImages/2007_000063.jpg /SegmentationClassAug/2007_000063.png

4 | /JPEGImages/2007_000068.jpg /SegmentationClassAug/2007_000068.png

5 | /JPEGImages/2007_000121.jpg /SegmentationClassAug/2007_000121.png

6 | /JPEGImages/2007_000170.jpg /SegmentationClassAug/2007_000170.png

7 | /JPEGImages/2007_000241.jpg /SegmentationClassAug/2007_000241.png

8 | /JPEGImages/2007_000243.jpg /SegmentationClassAug/2007_000243.png

9 | /JPEGImages/2007_000250.jpg /SegmentationClassAug/2007_000250.png

10 | /JPEGImages/2007_000256.jpg /SegmentationClassAug/2007_000256.png

11 | /JPEGImages/2007_000333.jpg /SegmentationClassAug/2007_000333.png

12 | /JPEGImages/2007_000363.jpg /SegmentationClassAug/2007_000363.png

13 | /JPEGImages/2007_000364.jpg /SegmentationClassAug/2007_000364.png

14 | /JPEGImages/2007_000392.jpg /SegmentationClassAug/2007_000392.png

15 | /JPEGImages/2007_000480.jpg /SegmentationClassAug/2007_000480.png

16 | /JPEGImages/2007_000504.jpg /SegmentationClassAug/2007_000504.png

17 | /JPEGImages/2007_000515.jpg /SegmentationClassAug/2007_000515.png

18 | /JPEGImages/2007_000528.jpg /SegmentationClassAug/2007_000528.png

19 | /JPEGImages/2007_000549.jpg /SegmentationClassAug/2007_000549.png

20 | /JPEGImages/2007_000584.jpg /SegmentationClassAug/2007_000584.png

21 | /JPEGImages/2007_000645.jpg /SegmentationClassAug/2007_000645.png

22 | /JPEGImages/2007_000648.jpg /SegmentationClassAug/2007_000648.png

23 | /JPEGImages/2007_000713.jpg /SegmentationClassAug/2007_000713.png

24 | /JPEGImages/2007_000720.jpg /SegmentationClassAug/2007_000720.png

25 | /JPEGImages/2007_000733.jpg /SegmentationClassAug/2007_000733.png

26 | /JPEGImages/2007_000738.jpg /SegmentationClassAug/2007_000738.png

27 | /JPEGImages/2007_000768.jpg /SegmentationClassAug/2007_000768.png

28 | /JPEGImages/2007_000793.jpg /SegmentationClassAug/2007_000793.png

29 | /JPEGImages/2007_000822.jpg /SegmentationClassAug/2007_000822.png

30 | /JPEGImages/2007_000836.jpg /SegmentationClassAug/2007_000836.png

31 | /JPEGImages/2007_000876.jpg /SegmentationClassAug/2007_000876.png

32 | /JPEGImages/2007_000904.jpg /SegmentationClassAug/2007_000904.png

33 | /JPEGImages/2007_001027.jpg /SegmentationClassAug/2007_001027.png

34 | /JPEGImages/2007_001073.jpg /SegmentationClassAug/2007_001073.png

35 | /JPEGImages/2007_001149.jpg /SegmentationClassAug/2007_001149.png

36 | /JPEGImages/2007_001185.jpg /SegmentationClassAug/2007_001185.png

37 | /JPEGImages/2007_001225.jpg /SegmentationClassAug/2007_001225.png

38 | /JPEGImages/2007_001340.jpg /SegmentationClassAug/2007_001340.png

39 | /JPEGImages/2007_001397.jpg /SegmentationClassAug/2007_001397.png

40 | /JPEGImages/2007_001416.jpg /SegmentationClassAug/2007_001416.png

41 | /JPEGImages/2007_001420.jpg /SegmentationClassAug/2007_001420.png

42 | /JPEGImages/2007_001439.jpg /SegmentationClassAug/2007_001439.png

43 | /JPEGImages/2007_001487.jpg /SegmentationClassAug/2007_001487.png

44 | /JPEGImages/2007_001595.jpg /SegmentationClassAug/2007_001595.png

45 | /JPEGImages/2007_001602.jpg /SegmentationClassAug/2007_001602.png

46 | /JPEGImages/2007_001609.jpg /SegmentationClassAug/2007_001609.png

47 | /JPEGImages/2007_001698.jpg /SegmentationClassAug/2007_001698.png

48 | /JPEGImages/2007_001704.jpg /SegmentationClassAug/2007_001704.png

49 | /JPEGImages/2007_001709.jpg /SegmentationClassAug/2007_001709.png

50 | /JPEGImages/2007_001724.jpg /SegmentationClassAug/2007_001724.png

51 | /JPEGImages/2007_001764.jpg /SegmentationClassAug/2007_001764.png

52 | /JPEGImages/2007_001825.jpg /SegmentationClassAug/2007_001825.png

53 | /JPEGImages/2007_001834.jpg /SegmentationClassAug/2007_001834.png

54 | /JPEGImages/2007_001857.jpg /SegmentationClassAug/2007_001857.png

55 | /JPEGImages/2007_001872.jpg /SegmentationClassAug/2007_001872.png

56 | /JPEGImages/2007_001901.jpg /SegmentationClassAug/2007_001901.png

57 | /JPEGImages/2007_001917.jpg /SegmentationClassAug/2007_001917.png

58 | /JPEGImages/2007_001960.jpg /SegmentationClassAug/2007_001960.png

59 | /JPEGImages/2007_002024.jpg /SegmentationClassAug/2007_002024.png

60 | /JPEGImages/2007_002055.jpg /SegmentationClassAug/2007_002055.png

61 | /JPEGImages/2007_002088.jpg /SegmentationClassAug/2007_002088.png

62 | /JPEGImages/2007_002099.jpg /SegmentationClassAug/2007_002099.png

63 | /JPEGImages/2007_002105.jpg /SegmentationClassAug/2007_002105.png

64 | /JPEGImages/2007_002107.jpg /SegmentationClassAug/2007_002107.png

65 | /JPEGImages/2007_002120.jpg /SegmentationClassAug/2007_002120.png

66 | /JPEGImages/2007_002142.jpg /SegmentationClassAug/2007_002142.png

67 | /JPEGImages/2007_002198.jpg /SegmentationClassAug/2007_002198.png

68 | /JPEGImages/2007_002212.jpg /SegmentationClassAug/2007_002212.png

69 | /JPEGImages/2007_002216.jpg /SegmentationClassAug/2007_002216.png

70 | /JPEGImages/2007_002227.jpg /SegmentationClassAug/2007_002227.png

71 | /JPEGImages/2007_002234.jpg /SegmentationClassAug/2007_002234.png

72 | /JPEGImages/2007_002273.jpg /SegmentationClassAug/2007_002273.png

73 | /JPEGImages/2007_002281.jpg /SegmentationClassAug/2007_002281.png

74 | /JPEGImages/2007_002293.jpg /SegmentationClassAug/2007_002293.png

75 | /JPEGImages/2007_002361.jpg /SegmentationClassAug/2007_002361.png

76 | /JPEGImages/2007_002368.jpg /SegmentationClassAug/2007_002368.png

77 | /JPEGImages/2007_002370.jpg /SegmentationClassAug/2007_002370.png

78 | /JPEGImages/2007_002403.jpg /SegmentationClassAug/2007_002403.png

79 | /JPEGImages/2007_002462.jpg /SegmentationClassAug/2007_002462.png

80 | /JPEGImages/2007_002488.jpg /SegmentationClassAug/2007_002488.png

81 | /JPEGImages/2007_002545.jpg /SegmentationClassAug/2007_002545.png

82 | /JPEGImages/2007_002611.jpg /SegmentationClassAug/2007_002611.png

83 | /JPEGImages/2007_002639.jpg /SegmentationClassAug/2007_002639.png

84 | /JPEGImages/2007_002668.jpg /SegmentationClassAug/2007_002668.png

85 | /JPEGImages/2007_002669.jpg /SegmentationClassAug/2007_002669.png

86 | /JPEGImages/2007_002760.jpg /SegmentationClassAug/2007_002760.png

87 | /JPEGImages/2007_002789.jpg /SegmentationClassAug/2007_002789.png

88 | /JPEGImages/2007_002845.jpg /SegmentationClassAug/2007_002845.png

89 | /JPEGImages/2007_002895.jpg /SegmentationClassAug/2007_002895.png

90 | /JPEGImages/2007_002896.jpg /SegmentationClassAug/2007_002896.png

91 | /JPEGImages/2007_002914.jpg /SegmentationClassAug/2007_002914.png

92 | /JPEGImages/2007_002953.jpg /SegmentationClassAug/2007_002953.png

93 | /JPEGImages/2007_002954.jpg /SegmentationClassAug/2007_002954.png

94 | /JPEGImages/2007_002967.jpg /SegmentationClassAug/2007_002967.png

95 | /JPEGImages/2007_003000.jpg /SegmentationClassAug/2007_003000.png

96 | /JPEGImages/2007_003178.jpg /SegmentationClassAug/2007_003178.png

97 | /JPEGImages/2007_003189.jpg /SegmentationClassAug/2007_003189.png

98 | /JPEGImages/2007_003190.jpg /SegmentationClassAug/2007_003190.png

99 | /JPEGImages/2007_003207.jpg /SegmentationClassAug/2007_003207.png

100 | /JPEGImages/2007_003251.jpg /SegmentationClassAug/2007_003251.png

101 | /JPEGImages/2007_003267.jpg /SegmentationClassAug/2007_003267.png

102 | /JPEGImages/2007_003286.jpg /SegmentationClassAug/2007_003286.png

103 | /JPEGImages/2007_003330.jpg /SegmentationClassAug/2007_003330.png

104 | /JPEGImages/2007_003451.jpg /SegmentationClassAug/2007_003451.png

105 | /JPEGImages/2007_003525.jpg /SegmentationClassAug/2007_003525.png

106 | /JPEGImages/2007_003565.jpg /SegmentationClassAug/2007_003565.png

107 | /JPEGImages/2007_003593.jpg /SegmentationClassAug/2007_003593.png

108 | /JPEGImages/2007_003604.jpg /SegmentationClassAug/2007_003604.png

109 | /JPEGImages/2007_003668.jpg /SegmentationClassAug/2007_003668.png

110 | /JPEGImages/2007_003715.jpg /SegmentationClassAug/2007_003715.png

111 | /JPEGImages/2007_003778.jpg /SegmentationClassAug/2007_003778.png

112 | /JPEGImages/2007_003788.jpg /SegmentationClassAug/2007_003788.png

113 | /JPEGImages/2007_003815.jpg /SegmentationClassAug/2007_003815.png

114 | /JPEGImages/2007_003876.jpg /SegmentationClassAug/2007_003876.png

115 | /JPEGImages/2007_003889.jpg /SegmentationClassAug/2007_003889.png

116 | /JPEGImages/2007_003910.jpg /SegmentationClassAug/2007_003910.png

117 | /JPEGImages/2007_004003.jpg /SegmentationClassAug/2007_004003.png

118 | /JPEGImages/2007_004009.jpg /SegmentationClassAug/2007_004009.png

119 | /JPEGImages/2007_004065.jpg /SegmentationClassAug/2007_004065.png

120 | /JPEGImages/2007_004081.jpg /SegmentationClassAug/2007_004081.png

121 | /JPEGImages/2007_004166.jpg /SegmentationClassAug/2007_004166.png

122 | /JPEGImages/2007_004423.jpg /SegmentationClassAug/2007_004423.png

123 | /JPEGImages/2007_004459.jpg /SegmentationClassAug/2007_004459.png

124 | /JPEGImages/2007_004481.jpg /SegmentationClassAug/2007_004481.png

125 | /JPEGImages/2007_004500.jpg /SegmentationClassAug/2007_004500.png

126 | /JPEGImages/2007_004537.jpg /SegmentationClassAug/2007_004537.png

127 | /JPEGImages/2007_004627.jpg /SegmentationClassAug/2007_004627.png

128 | /JPEGImages/2007_004663.jpg /SegmentationClassAug/2007_004663.png

129 | /JPEGImages/2007_004705.jpg /SegmentationClassAug/2007_004705.png

130 | /JPEGImages/2007_004707.jpg /SegmentationClassAug/2007_004707.png

131 | /JPEGImages/2007_004768.jpg /SegmentationClassAug/2007_004768.png

132 | /JPEGImages/2007_004810.jpg /SegmentationClassAug/2007_004810.png

133 | /JPEGImages/2007_004830.jpg /SegmentationClassAug/2007_004830.png

134 | /JPEGImages/2007_004841.jpg /SegmentationClassAug/2007_004841.png

135 | /JPEGImages/2007_004948.jpg /SegmentationClassAug/2007_004948.png

136 | /JPEGImages/2007_004951.jpg /SegmentationClassAug/2007_004951.png

137 | /JPEGImages/2007_004988.jpg /SegmentationClassAug/2007_004988.png

138 | /JPEGImages/2007_004998.jpg /SegmentationClassAug/2007_004998.png

139 | /JPEGImages/2007_005043.jpg /SegmentationClassAug/2007_005043.png

140 | /JPEGImages/2007_005124.jpg /SegmentationClassAug/2007_005124.png

141 | /JPEGImages/2007_005130.jpg /SegmentationClassAug/2007_005130.png

142 | /JPEGImages/2007_005210.jpg /SegmentationClassAug/2007_005210.png

143 | /JPEGImages/2007_005212.jpg /SegmentationClassAug/2007_005212.png

144 | /JPEGImages/2007_005248.jpg /SegmentationClassAug/2007_005248.png

145 | /JPEGImages/2007_005262.jpg /SegmentationClassAug/2007_005262.png

146 | /JPEGImages/2007_005264.jpg /SegmentationClassAug/2007_005264.png

147 | /JPEGImages/2007_005266.jpg /SegmentationClassAug/2007_005266.png

148 | /JPEGImages/2007_005273.jpg /SegmentationClassAug/2007_005273.png

149 | /JPEGImages/2007_005314.jpg /SegmentationClassAug/2007_005314.png

150 | /JPEGImages/2007_005360.jpg /SegmentationClassAug/2007_005360.png

151 | /JPEGImages/2007_005647.jpg /SegmentationClassAug/2007_005647.png

152 | /JPEGImages/2007_005688.jpg /SegmentationClassAug/2007_005688.png

153 | /JPEGImages/2007_005797.jpg /SegmentationClassAug/2007_005797.png

154 | /JPEGImages/2007_005878.jpg /SegmentationClassAug/2007_005878.png

155 | /JPEGImages/2007_005902.jpg /SegmentationClassAug/2007_005902.png

156 | /JPEGImages/2007_005951.jpg /SegmentationClassAug/2007_005951.png

157 | /JPEGImages/2007_005989.jpg /SegmentationClassAug/2007_005989.png

158 | /JPEGImages/2007_006066.jpg /SegmentationClassAug/2007_006066.png

159 | /JPEGImages/2007_006134.jpg /SegmentationClassAug/2007_006134.png

160 | /JPEGImages/2007_006136.jpg /SegmentationClassAug/2007_006136.png

161 | /JPEGImages/2007_006151.jpg /SegmentationClassAug/2007_006151.png

162 | /JPEGImages/2007_006212.jpg /SegmentationClassAug/2007_006212.png

163 | /JPEGImages/2007_006254.jpg /SegmentationClassAug/2007_006254.png

164 | /JPEGImages/2007_006281.jpg /SegmentationClassAug/2007_006281.png

165 | /JPEGImages/2007_006303.jpg /SegmentationClassAug/2007_006303.png

166 | /JPEGImages/2007_006317.jpg /SegmentationClassAug/2007_006317.png

167 | /JPEGImages/2007_006400.jpg /SegmentationClassAug/2007_006400.png

168 | /JPEGImages/2007_006409.jpg /SegmentationClassAug/2007_006409.png

169 | /JPEGImages/2007_006445.jpg /SegmentationClassAug/2007_006445.png

170 | /JPEGImages/2007_006490.jpg /SegmentationClassAug/2007_006490.png

171 | /JPEGImages/2007_006530.jpg /SegmentationClassAug/2007_006530.png

172 | /JPEGImages/2007_006581.jpg /SegmentationClassAug/2007_006581.png

173 | /JPEGImages/2007_006585.jpg /SegmentationClassAug/2007_006585.png

174 | /JPEGImages/2007_006605.jpg /SegmentationClassAug/2007_006605.png