├── .gitignore

├── .ipynb_checkpoints

├── Tutorial_10 - [transfer learning] Add new variables to graph and save the new model-checkpoint.ipynb

├── Tutorial_8 - A very simple example for tensorboard-checkpoint.ipynb

└── Tutorial_9 - How to save the model-checkpoint.ipynb

├── README.md

├── ckpt

├── bi-lstm.ckpt-12.data-00000-of-00001

├── bi-lstm.ckpt-12.index

├── bi-lstm.ckpt-12.meta

└── checkpoint

├── data

└── msr_train.txt

├── doc

└── problem-solution.md

├── example-notebook

├── .ipynb_checkpoints

│ ├── Tutorial_01 Basic Usage-checkpoint.ipynb

│ ├── Tutorial_02 A simple feedforward network for MNIST-checkpoint.ipynb

│ ├── Tutorial_03_1 The usage of name_scope and variable_scope-checkpoint.ipynb

│ ├── Tutorial_03_2 The usage of Collection-checkpoint.ipynb

│ ├── Tutorial_04_1 Convolutional network for MNIST(1)-checkpoint.ipynb

│ ├── Tutorial_04_2 Convolutional network for MNIST(2)-checkpoint.ipynb

│ ├── Tutorial_04_3 Convolutional network for MNIST(3)-checkpoint.ipynb

│ ├── Tutorial_04_3 Convolutional network for MNIST(4)-BN-checkpoint.ipynb

│ ├── Tutorial_05_1 An understandable example to implement Multi-LSTM for MNIST(1)-Copy1-checkpoint.ipynb

│ ├── Tutorial_05_1 An understandable example to implement Multi-LSTM for MNIST-checkpoint.ipynb

│ ├── Tutorial_05_2 An understandable example to implement Multi-GRU for MNIST-checkpoint.ipynb

│ ├── Tutorial_05_3 Bi-GRU for MNIST-checkpoint.ipynb

│ ├── Tutorial_06 A very simple example for tensorboard-checkpoint.ipynb

│ ├── Tutorial_07 How to save the model-checkpoint.ipynb

│ ├── Tutorial_08 [transfer learning] Add new variables to graph and save the new model-checkpoint.ipynb

│ ├── Tutorial_09 [tfrecord] use tfrecord to store sequences of different length-checkpoint.ipynb

│ ├── Tutorial_10 [Dataset] numpy data-checkpoint.ipynb

│ ├── Tutorial_10_3 [Dataset] sequence data-checkpoint.ipynb

│ ├── Tutorial_11 [Dataset] image data-checkpoint.ipynb

│ ├── Tutorial_12 [tf.layer]high layer API-checkpoint.ipynb

│ └── Untitled-checkpoint.ipynb

├── MNIST_data

│ ├── t10k-images-idx3-ubyte.gz

│ ├── t10k-labels-idx1-ubyte.gz

│ ├── train-images-idx3-ubyte.gz

│ └── train-labels-idx1-ubyte.gz

├── Tutorial_01 Basic Usage.ipynb

├── Tutorial_02 A simple feedforward network for MNIST.ipynb

├── Tutorial_03_1 The usage of name_scope and variable_scope.ipynb

├── Tutorial_03_2 The usage of Collection.ipynb

├── Tutorial_04_1 Convolutional network for MNIST(1).ipynb

├── Tutorial_04_2 Convolutional network for MNIST(2).ipynb

├── Tutorial_04_3 Convolutional network for MNIST(3).ipynb

├── Tutorial_05_1 An understandable example to implement Multi-LSTM for MNIST.ipynb

├── Tutorial_05_2 An understandable example to implement Multi-GRU for MNIST.ipynb

├── Tutorial_05_3 Bi-GRU for MNIST.ipynb

├── Tutorial_06 A very simple example for tensorboard.ipynb

├── Tutorial_07 How to save the model.ipynb

├── Tutorial_08 [transfer learning] Add new variables to graph and save the new model.ipynb

├── Tutorial_09 [tfrecord] use tfrecord to store sequences of different length.ipynb

├── Tutorial_10 [Dataset] numpy data.ipynb

├── Tutorial_11 [Dataset] image data.ipynb

└── Tutorial_12 [tf.layer]high layer API.ipynb

├── example-python

├── .ipynb_checkpoints

│ └── tfrecord_sequence_example-checkpoint.ipynb

├── Tutorial-graph.py

├── Tutorial_10_1_numpy_data_1.py

├── Tutorial_10_1_numpy_data_2.py

├── Tutorial_10_2_image_data_1.py

└── Tutorial_10_2_image_data_2.py

├── figs

├── conv_mnist.png

├── graph2.png

├── lstm_8.png

└── lstm_mnist.png

├── models

├── README.md

├── Tutorial_13 - Basic Seq2seq example.ipynb

├── Tutorial_6 - Bi-directional LSTM for sequence labeling (Chinese segmentation).ipynb

├── m01_batch_normalization

│ ├── README.md

│ ├── __init__.py

│ ├── mnist_cnn.py

│ ├── predict.py

│ └── train.py

├── m02_dcgan

│ ├── README.md

│ ├── __init__.py

│ ├── dcgan 生成二次元头像.ipynb

│ └── dcgan.py

├── m03_wgan

│ ├── README.md

│ ├── wgan.py

│ └── wgan_gp.py

└── m04_pix2pix

│ ├── README.md

│ ├── __init__.py

│ ├── model.py

│ ├── pix2pix.py

│ ├── test.py

│ ├── test.sh

│ ├── train.py

│ ├── train.sh

│ └── utils.py

└── utils

├── u01_logging

├── logging1.py

└── training.log

├── u02_tfrecord

├── README.md

├── __init__.py

├── reader_without_shuffle.ipynb

├── sequence_example_lib.py

├── tfrecord_1_numpy_reader.py

├── tfrecord_1_numpy_reader_without_shuffle.py

├── tfrecord_1_numpy_writer.py

├── tfrecord_2_seqence_reader.py

├── tfrecord_2_seqence_writer.py

├── tfrecord_3_image_reader.py

└── tfrecord_3_image_writer.py

└── u03_flags.py

/.gitignore:

--------------------------------------------------------------------------------

1 | ckpt/*

2 | summary/*

3 | data/*

4 | *.pyc

5 | **/.ipynb_checkpoints/

6 | mnist_png/*

7 | *.tfrecord

8 | **/generated_img/

9 | **/generated_img2/

10 | **/generated_img_gp/

11 | **/MNIST_data/

12 | **/ckpt/

13 | **/ckpt*/

14 | **/summary/

15 | **/data/

16 | events*

17 | **/facades_train/

18 | **/facades_test/

19 |

20 |

21 |

--------------------------------------------------------------------------------

/.ipynb_checkpoints/Tutorial_10 - [transfer learning] Add new variables to graph and save the new model-checkpoint.ipynb:

--------------------------------------------------------------------------------

1 | {

2 | "cells": [

3 | {

4 | "cell_type": "markdown",

5 | "metadata": {},

6 | "source": [

7 | "## 【迁移学习】往一个已经保存好的 模型添加新的变量\n",

8 | "在迁移学习中,通常我们已经训练好一个模型,现在需要修改模型的部分结构,用于我们的新任务。\n",

9 | "\n",

10 | "比如:\n",

11 | "\n",

12 | "在一个图片分类任务中,我们使用别人训练好的网络来提取特征,但是我们的分类数目和原模型不同,这样我们只能取到 fc 层,后面的分类层需要重新写。这样我们就需要添加新的变量。那么这些新加入的变量必须得初始化才能使用。可是我们又不能使用 'tf.global_variables_initializer()' 来初始化,否则原本训练好的模型就没用了。\n",

13 | "\n",

14 | "关于怎么样单独初始化新增的变量,可以参考下面两个链接:\n",

15 | "- [1]https://stackoverflow.com/questions/35164529/in-tensorflow-is-there-any-way-to-just-initialize-uninitialised-variables\n",

16 | "- [2]https://stackoverflow.com/questions/35013080/tensorflow-how-to-get-all-variables-from-rnn-cell-basiclstm-rnn-cell-multirnn\n",

17 | "\n",

18 | "简单的例子可以直接看下面我的实现方式。"

19 | ]

20 | },

21 | {

22 | "cell_type": "markdown",

23 | "metadata": {},

24 | "source": [

25 | "### 初始模型定义与保存\n",

26 | "首先定义一个模型,里边有 v1, v2 两个变量,我们把这个模型保存起来。"

27 | ]

28 | },

29 | {

30 | "cell_type": "code",

31 | "execution_count": 1,

32 | "metadata": {

33 | "collapsed": false

34 | },

35 | "outputs": [

36 | {

37 | "name": "stdout",

38 | "output_type": "stream",

39 | "text": [

40 | "Model saved in file: ./ckpt/test-model.ckpt-1\n"

41 | ]

42 | }

43 | ],

44 | "source": [

45 | "import tensorflow as tf\n",

46 | "config = tf.ConfigProto()\n",

47 | "config.gpu_options.allow_growth = True\n",

48 | "sess = tf.Session(config=config)\n",

49 | "\n",

50 | "# Create some variables.\n",

51 | "v1 = tf.Variable([1.0, 2.3], name=\"v1\")\n",

52 | "v2 = tf.Variable(55.5, name=\"v2\")\n",

53 | "\n",

54 | "\n",

55 | "init_op = tf.global_variables_initializer()\n",

56 | "saver = tf.train.Saver()\n",

57 | "ckpt_path = './ckpt/test-model.ckpt'\n",

58 | "sess.run(init_op)\n",

59 | "save_path = saver.save(sess, ckpt_path, global_step=1)\n",

60 | "print(\"Model saved in file: %s\" % save_path)"

61 | ]

62 | },

63 | {

64 | "cell_type": "markdown",

65 | "metadata": {},

66 | "source": [

67 | "**restart(重启kernel然后执行下面cell的代码)**\n",

68 | "### 导入已经保存好的模型,并添加新的变量\n",

69 | "现在把之前保存好的模型,我只需要其中的 v1 变量。同时我还要添加新的变量 v3。"

70 | ]

71 | },

72 | {

73 | "cell_type": "code",

74 | "execution_count": 1,

75 | "metadata": {

76 | "collapsed": false,

77 | "scrolled": false

78 | },

79 | "outputs": [

80 | {

81 | "name": "stdout",

82 | "output_type": "stream",

83 | "text": [

84 | "INFO:tensorflow:Restoring parameters from ./ckpt/test-model.ckpt-1\n",

85 | "[ 1. 2.29999995]\n",

86 | "[ 1. 2.29999995]\n",

87 | "666\n"

88 | ]

89 | },

90 | {

91 | "data": {

92 | "text/plain": [

93 | "'./ckpt/test-model.ckpt-2'"

94 | ]

95 | },

96 | "execution_count": 1,

97 | "metadata": {},

98 | "output_type": "execute_result"

99 | }

100 | ],

101 | "source": [

102 | "import tensorflow as tf\n",

103 | "config = tf.ConfigProto()\n",

104 | "config.gpu_options.allow_growth = True\n",

105 | "sess = tf.Session(config=config)\n",

106 | "\n",

107 | "# Create some variables.\n",

108 | "v1 = tf.Variable([11.0, 16.3], name=\"v1\")\n",

109 | "\n",

110 | "# ** 导入训练好的模型\n",

111 | "saver = tf.train.Saver()\n",

112 | "ckpt_path = './ckpt/test-model.ckpt'\n",

113 | "saver.restore(sess, ckpt_path + '-'+ str(1))\n",

114 | "print(sess.run(v1))\n",

115 | "\n",

116 | "# ** 定义新的变量并单独初始化新定义的变量\n",

117 | "v3 = tf.Variable(666, name='v3', dtype=tf.int32)\n",

118 | "init_new = tf.variables_initializer([v3])\n",

119 | "sess.run(init_new)\n",

120 | "# 。。。这里就可以进行 fine-tune 了\n",

121 | "print(sess.run(v1))\n",

122 | "print(sess.run(v3))\n",

123 | "\n",

124 | "# ** 保存新的模型。 \n",

125 | "# 注意!注意!注意! 一定一定一定要重新定义 saver, 这样才能把 v3 添加到 checkpoint 中\n",

126 | "saver = tf.train.Saver()\n",

127 | "saver.save(sess, ckpt_path, global_step=2)"

128 | ]

129 | },

130 | {

131 | "cell_type": "markdown",

132 | "metadata": {},

133 | "source": [

134 | "**restart(重启kernel然后执行下面cell的代码)**\n",

135 | "### 这样就完成了 fine-tune, 得到了新的模型"

136 | ]

137 | },

138 | {

139 | "cell_type": "code",

140 | "execution_count": 1,

141 | "metadata": {

142 | "collapsed": false

143 | },

144 | "outputs": [

145 | {

146 | "name": "stdout",

147 | "output_type": "stream",

148 | "text": [

149 | "INFO:tensorflow:Restoring parameters from ./ckpt/test-model.ckpt-2\n",

150 | "Model restored.\n",

151 | "[ 1. 2.29999995]\n",

152 | "666\n"

153 | ]

154 | }

155 | ],

156 | "source": [

157 | "import tensorflow as tf\n",

158 | "config = tf.ConfigProto()\n",

159 | "config.gpu_options.allow_growth = True\n",

160 | "sess = tf.Session(config=config)\n",

161 | "\n",

162 | "# Create some variables.\n",

163 | "v1 = tf.Variable([11.0, 16.3], name=\"v1\")\n",

164 | "v3 = tf.Variable(666, name='v3', dtype=tf.int32)\n",

165 | "# Add ops to save and restore all the variables.\n",

166 | "saver = tf.train.Saver()\n",

167 | "\n",

168 | "# Later, launch the model, use the saver to restore variables from disk, and\n",

169 | "# do some work with the model.\n",

170 | "# Restore variables from disk.\n",

171 | "ckpt_path = './ckpt/test-model.ckpt'\n",

172 | "saver.restore(sess, ckpt_path + '-'+ str(2))\n",

173 | "print(\"Model restored.\")\n",

174 | "\n",

175 | "print(sess.run(v1))\n",

176 | "print(sess.run(v3))"

177 | ]

178 | },

179 | {

180 | "cell_type": "code",

181 | "execution_count": null,

182 | "metadata": {

183 | "collapsed": true

184 | },

185 | "outputs": [],

186 | "source": []

187 | }

188 | ],

189 | "metadata": {

190 | "anaconda-cloud": {},

191 | "kernelspec": {

192 | "display_name": "Python [conda root]",

193 | "language": "python",

194 | "name": "conda-root-py"

195 | },

196 | "language_info": {

197 | "codemirror_mode": {

198 | "name": "ipython",

199 | "version": 2

200 | },

201 | "file_extension": ".py",

202 | "mimetype": "text/x-python",

203 | "name": "python",

204 | "nbconvert_exporter": "python",

205 | "pygments_lexer": "ipython2",

206 | "version": "2.7.12"

207 | }

208 | },

209 | "nbformat": 4,

210 | "nbformat_minor": 1

211 | }

212 |

--------------------------------------------------------------------------------

/.ipynb_checkpoints/Tutorial_9 - How to save the model-checkpoint.ipynb:

--------------------------------------------------------------------------------

1 | {

2 | "cells": [

3 | {

4 | "cell_type": "markdown",

5 | "metadata": {},

6 | "source": [

7 | "## 如何使用 tf.train.Saver() 来保存模型\n",

8 | "之前一直出错,主要是因为坑爹的编码问题。所以要注意文件的路径绝对不不要出现什么中文呀。"

9 | ]

10 | },

11 | {

12 | "cell_type": "code",

13 | "execution_count": 1,

14 | "metadata": {

15 | "collapsed": false

16 | },

17 | "outputs": [

18 | {

19 | "name": "stdout",

20 | "output_type": "stream",

21 | "text": [

22 | "Model saved in file: ./ckpt/test-model.ckpt-1\n"

23 | ]

24 | }

25 | ],

26 | "source": [

27 | "import tensorflow as tf\n",

28 | "config = tf.ConfigProto()\n",

29 | "config.gpu_options.allow_growth = True\n",

30 | "sess = tf.Session(config=config)\n",

31 | "\n",

32 | "# Create some variables.\n",

33 | "v1 = tf.Variable([1.0, 2.3], name=\"v1\")\n",

34 | "v2 = tf.Variable(55.5, name=\"v2\")\n",

35 | "\n",

36 | "# Add an op to initialize the variables.\n",

37 | "init_op = tf.global_variables_initializer()\n",

38 | "\n",

39 | "# Add ops to save and restore all the variables.\n",

40 | "saver = tf.train.Saver()\n",

41 | "\n",

42 | "ckpt_path = './ckpt/test-model.ckpt'\n",

43 | "# Later, launch the model, initialize the variables, do some work, save the\n",

44 | "# variables to disk.\n",

45 | "sess.run(init_op)\n",

46 | "save_path = saver.save(sess, ckpt_path, global_step=1)\n",

47 | "print(\"Model saved in file: %s\" % save_path)"

48 | ]

49 | },

50 | {

51 | "cell_type": "markdown",

52 | "metadata": {},

53 | "source": [

54 | "注意,在上面保存完了模型之后。**Restart kernel** 之后才能使用下面的模型导入。否则会因为两次命名 \"v1\" 而导致名字错误。"

55 | ]

56 | },

57 | {

58 | "cell_type": "code",

59 | "execution_count": 1,

60 | "metadata": {

61 | "collapsed": false

62 | },

63 | "outputs": [

64 | {

65 | "name": "stdout",

66 | "output_type": "stream",

67 | "text": [

68 | "INFO:tensorflow:Restoring parameters from ./ckpt/test-model.ckpt-1\n",

69 | "Model restored.\n",

70 | "[ 1. 2.29999995]\n",

71 | "55.5\n"

72 | ]

73 | }

74 | ],

75 | "source": [

76 | "import tensorflow as tf\n",

77 | "config = tf.ConfigProto()\n",

78 | "config.gpu_options.allow_growth = True\n",

79 | "sess = tf.Session(config=config)\n",

80 | "\n",

81 | "# Create some variables.\n",

82 | "v1 = tf.Variable([11.0, 16.3], name=\"v1\")\n",

83 | "v2 = tf.Variable(33.5, name=\"v2\")\n",

84 | "\n",

85 | "# Add ops to save and restore all the variables.\n",

86 | "saver = tf.train.Saver()\n",

87 | "\n",

88 | "# Later, launch the model, use the saver to restore variables from disk, and\n",

89 | "# do some work with the model.\n",

90 | "# Restore variables from disk.\n",

91 | "ckpt_path = './ckpt/test-model.ckpt'\n",

92 | "saver.restore(sess, ckpt_path + '-'+ str(1))\n",

93 | "print(\"Model restored.\")\n",

94 | "\n",

95 | "print sess.run(v1)\n",

96 | "print sess.run(v2)"

97 | ]

98 | },

99 | {

100 | "cell_type": "markdown",

101 | "metadata": {},

102 | "source": [

103 | "导入模型之前,必须重新再定义一遍变量。\n",

104 | "\n",

105 | "但是并不需要全部变量都重新进行定义,只定义我们需要的变量就行了。\n",

106 | "\n",

107 | "也就是说,**你所定义的变量一定要在 checkpoint 中存在;但不是所有在checkpoint中的变量,你都要重新定义。**"

108 | ]

109 | },

110 | {

111 | "cell_type": "code",

112 | "execution_count": 1,

113 | "metadata": {

114 | "collapsed": false

115 | },

116 | "outputs": [

117 | {

118 | "name": "stdout",

119 | "output_type": "stream",

120 | "text": [

121 | "INFO:tensorflow:Restoring parameters from ./ckpt/test-model.ckpt-1\n",

122 | "Model restored.\n",

123 | "[ 1. 2.29999995]\n"

124 | ]

125 | }

126 | ],

127 | "source": [

128 | "import tensorflow as tf\n",

129 | "config = tf.ConfigProto()\n",

130 | "config.gpu_options.allow_growth = True\n",

131 | "sess = tf.Session(config=config)\n",

132 | "\n",

133 | "# Create some variables.\n",

134 | "v1 = tf.Variable([11.0, 16.3], name=\"v1\")\n",

135 | "\n",

136 | "# Add ops to save and restore all the variables.\n",

137 | "saver = tf.train.Saver()\n",

138 | "\n",

139 | "# Later, launch the model, use the saver to restore variables from disk, and\n",

140 | "# do some work with the model.\n",

141 | "# Restore variables from disk.\n",

142 | "ckpt_path = './ckpt/test-model.ckpt'\n",

143 | "saver.restore(sess, ckpt_path + '-'+ str(1))\n",

144 | "print(\"Model restored.\")\n",

145 | "\n",

146 | "print sess.run(v1)"

147 | ]

148 | },

149 | {

150 | "cell_type": "markdown",

151 | "metadata": {},

152 | "source": [

153 | "## 关于模型保存的一点心得\n",

154 | "\n",

155 | "```\n",

156 | "saver = tf.train.Saver(max_to_keep=3)\n",

157 | "```\n",

158 | "在定义 saver 的时候一般会定义最多保存模型的数量,一般来说,我都不会保存太多模型,模型多了浪费空间,很多时候其实保存最好的模型就够了。方法就是每次迭代到一定步数就在验证集上计算一次 accuracy 或者 f1 值,如果本次结果比上次好才保存新的模型,否则没必要保存。\n",

159 | "\n",

160 | "如果你想用不同 epoch 保存下来的模型进行融合的话,3到5 个模型已经足够了,假设这各融合的模型成为 M,而最好的一个单模型称为 m_best, 这样融合的话对于M 确实可以比 m_best 更好。但是如果拿这个模型和其他结构的模型再做融合的话,M 的效果并没有 m_best 好,因为M 相当于做了平均操作,减少了该模型的“特性”。"

161 | ]

162 | }

163 | ],

164 | "metadata": {

165 | "anaconda-cloud": {},

166 | "kernelspec": {

167 | "display_name": "Python [conda root]",

168 | "language": "python",

169 | "name": "conda-root-py"

170 | },

171 | "language_info": {

172 | "codemirror_mode": {

173 | "name": "ipython",

174 | "version": 2

175 | },

176 | "file_extension": ".py",

177 | "mimetype": "text/x-python",

178 | "name": "python",

179 | "nbconvert_exporter": "python",

180 | "pygments_lexer": "ipython2",

181 | "version": "2.7.12"

182 | }

183 | },

184 | "nbformat": 4,

185 | "nbformat_minor": 1

186 | }

187 |

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 | # Tensorflow-Tutorial

2 |

3 | 2018-04 更新说明

4 |

5 | 时间过去一年,TensorFlow 已经从 1.0 版本更新到了 1.8 版本,而且最近更新的非常频繁。最烦的就是每次更新很多 API 都改了,一些老版本的代码就跑不通了。因为本项目关注的人越来越多了,所以自己也感觉到非常有必要更新并更正一些之前的错误,否则误人子弟就不好了。这里不少内容可以直接在官方的教程中找到,官方文档也在不断完善中,我也是把里边的例子跑一下,加深理解而已,更多的还是要自己在具体任务中去搭模型,训模型才能很好地掌握。

6 |

7 | 这一次更新主要内容如下:

8 |

9 | - 使用较新版本的 tfmaster

10 | - 所有的代码改成 python3.5

11 | - 重新整理了基础用例

12 | - 添加实战例子

13 |

14 | 因为工作和学习比较忙,所以这些内容也没办法一下子完成。和之前的版本不同,之前我是作为一个入门菜鸟一遍学一边做笔记。虽然现在依然还是理解得不够,但是比之前掌握的知识应该多了不少,希望能够整理成一个更好的教程。

15 |

16 | 之前的代码我放在了另外一个分支上: https://github.com/yongyehuang/Tensorflow-Tutorial/tree/1.2.1

17 |

18 | 如果有什么问题或者建议,欢迎开issue或者邮件与我联系:yongye@bupt.edu.cn

19 |

20 |

21 | ## 运行环境

22 | - python 3.5

23 | - tensorflow master (gpu version)

24 |

25 |

26 | ## 文件结构

27 | ```

28 | |- Tensorflow-Tutorial

29 | | |- example-notebook # 入门教程 notebook 版

30 | | |- example-python # 入门教程 .py 版

31 | | |- utils # 一些工具函数(logging, tf.flags)

32 | | |- models # 一些实战的例子(BN, GAN, 序列标注,seq2seq 等,持续更新)

33 | | |- data # 数据

34 | | |- doc # 相关文档

35 | ```

36 |

37 | ## 1.入门例子

38 | #### T_01.TensorFlow 的基本用法

39 | - [notebook](https://github.com/yongyehuang/Tensorflow-Tutorial/blob/master/example-notebook/Tutorial_01%20Basic%20Usage.ipynb)

40 |

41 | 介绍 TensorFlow 的变量、常量和基本操作,最后介绍了一个非常简单的回归拟合例子。

42 |

43 |

44 |

45 | #### T_02.实现一个两层的全连接网络对 MNIST 进行分类

46 | - [notebook](https://github.com/yongyehuang/Tensorflow-Tutorial/blob/master/example-notebook/Tutorial_02%20A%20simple%20feedforward%20network%20for%20MNIST.ipynb)

47 |

48 |

49 |

50 | #### T_03.TensorFlow 变量命名管理机制

51 | - [notebook1](https://github.com/yongyehuang/Tensorflow-Tutorial/blob/master/example-notebook/Tutorial_03_1%20The%20usage%20of%20%20name_scope%20and%20variable_scope.ipynb)

52 | 介绍 tf.Variable() 和 tf.get_variable() 创建变量的区别;介绍如何使用 tf.name_scope() 和 tf.variable_scope() 管理命名空间。

53 |

54 | - [notebook2](https://github.com/yongyehuang/Tensorflow-Tutorial/blob/master/example-notebook/Tutorial_03_2%20The%20usage%20of%20%20Collection.ipynb)

55 | 除了使用变量命名来管理变量之外,还经常用到 collection 的方式来聚合一些变量或者操作。

56 |

57 |

58 |

59 | #### T_04.实现一个两层的卷积神经网络(CNN)对 MNIST 进行分类

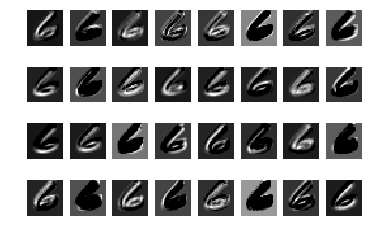

60 | - [notebook1-使用原生API构建CNN](https://github.com/yongyehuang/Tensorflow-Tutorial/blob/master/example-notebook/Tutorial_04_1%20Convolutional%20network%20for%20MNIST(1).ipynb)

61 |

62 | 构建一个非常简单的 CNN 网络,同时输出中间各个核的可视化来理解 CNN 的原理。

63 |  64 | 第一层卷积核可视化

65 |

66 | - [notebook2-自定义函数构建CNN](https://github.com/yongyehuang/Tensorflow-Tutorial/blob/master/example-notebook/Tutorial_04_2%20Convolutional%20network%20for%20MNIST(2).ipynb)

67 |

68 | - [notebook3-使用tf.layers高级API构建CNN](https://github.com/yongyehuang/Tensorflow-Tutorial/blob/master/example-notebook/Tutorial_04_3%20Convolutional%20network%20for%20MNIST(3).ipynb)

69 |

70 | - [code-加入 BN 层的 CNN](https://github.com/yongyehuang/Tensorflow-Tutorial/tree/master/utils_and_models/m01_batch_normalization)

71 |

72 | 在上一个例子的基础上,加入 BN 层。在 CNN 中,使用 BN 层可以加速收敛速度,同时也能够减小初始化方式的影响。在使用 BN 层的时候要注意训练时用的是 mini-batch 的均值方差,测试时用的是指数平均的均值方差。所以在训练的过程中,一定要记得更新并保存均值方差。

73 |

74 | 在这个小网络中:迭代 10000 步,batch_size=100,大概耗时 45s;添加了 BN 层之后,迭代同样的次数,大概耗时 90s.

75 |

76 |

77 | #### T_05.实现多层的 LSTM 和 GRU 网络对 MNIST 进行分类

78 | - [LSTM-notebook](https://github.com/yongyehuang/Tensorflow-Tutorial/blob/master/example-notebook/Tutorial_05_1%20An%20understandable%20example%20to%20implement%20Multi-LSTM%20for%20MNIST.ipynb)

79 | - [GRU-notebook](https://github.com/yongyehuang/Tensorflow-Tutorial/blob/master/example-notebook/Tutorial_05_2%20An%20understandable%20example%20to%20implement%20Multi-GRU%20for%20MNIST.ipynb)

80 | - [Bi-GRU-notebook](https://github.com/yongyehuang/Tensorflow-Tutorial/blob/master/example-notebook/Tutorial_05_3%20Bi-GRU%20for%20MNIST.ipynb)

81 |

82 |

64 | 第一层卷积核可视化

65 |

66 | - [notebook2-自定义函数构建CNN](https://github.com/yongyehuang/Tensorflow-Tutorial/blob/master/example-notebook/Tutorial_04_2%20Convolutional%20network%20for%20MNIST(2).ipynb)

67 |

68 | - [notebook3-使用tf.layers高级API构建CNN](https://github.com/yongyehuang/Tensorflow-Tutorial/blob/master/example-notebook/Tutorial_04_3%20Convolutional%20network%20for%20MNIST(3).ipynb)

69 |

70 | - [code-加入 BN 层的 CNN](https://github.com/yongyehuang/Tensorflow-Tutorial/tree/master/utils_and_models/m01_batch_normalization)

71 |

72 | 在上一个例子的基础上,加入 BN 层。在 CNN 中,使用 BN 层可以加速收敛速度,同时也能够减小初始化方式的影响。在使用 BN 层的时候要注意训练时用的是 mini-batch 的均值方差,测试时用的是指数平均的均值方差。所以在训练的过程中,一定要记得更新并保存均值方差。

73 |

74 | 在这个小网络中:迭代 10000 步,batch_size=100,大概耗时 45s;添加了 BN 层之后,迭代同样的次数,大概耗时 90s.

75 |

76 |

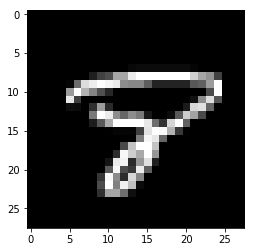

77 | #### T_05.实现多层的 LSTM 和 GRU 网络对 MNIST 进行分类

78 | - [LSTM-notebook](https://github.com/yongyehuang/Tensorflow-Tutorial/blob/master/example-notebook/Tutorial_05_1%20An%20understandable%20example%20to%20implement%20Multi-LSTM%20for%20MNIST.ipynb)

79 | - [GRU-notebook](https://github.com/yongyehuang/Tensorflow-Tutorial/blob/master/example-notebook/Tutorial_05_2%20An%20understandable%20example%20to%20implement%20Multi-GRU%20for%20MNIST.ipynb)

80 | - [Bi-GRU-notebook](https://github.com/yongyehuang/Tensorflow-Tutorial/blob/master/example-notebook/Tutorial_05_3%20Bi-GRU%20for%20MNIST.ipynb)

81 |

82 |  83 | 字符 8

84 |

85 |

83 | 字符 8

84 |

85 |  86 | lstm 对字符 8 的识别过程

87 |

88 |

89 |

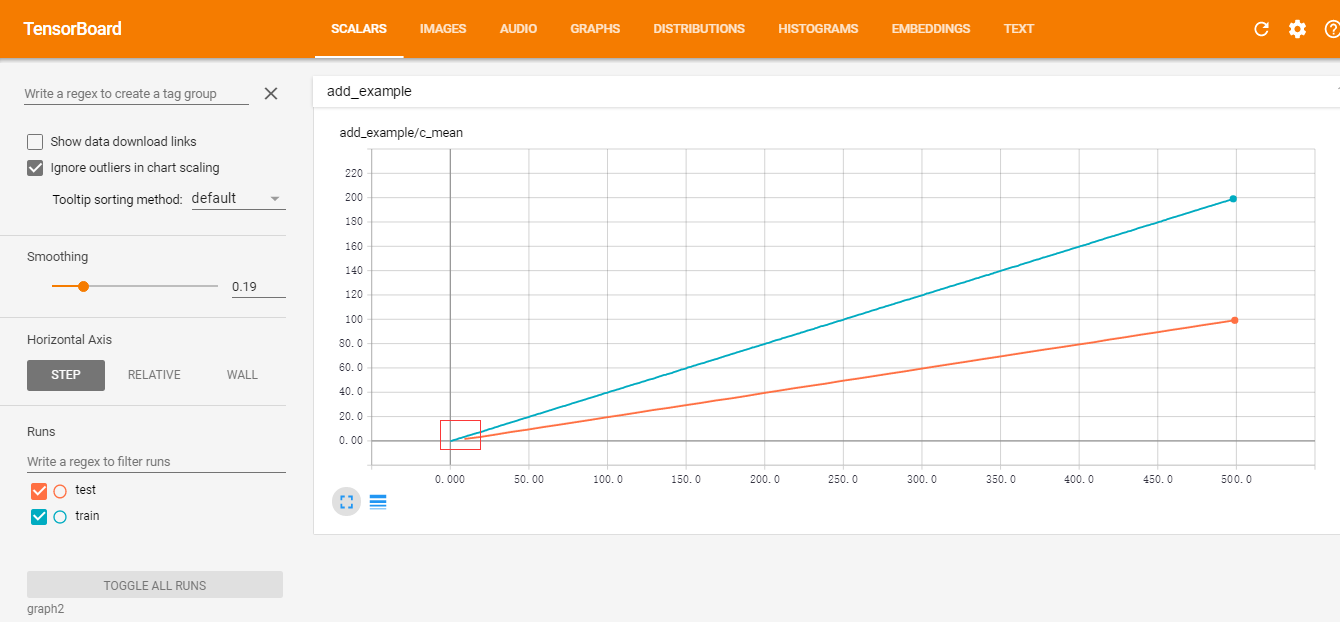

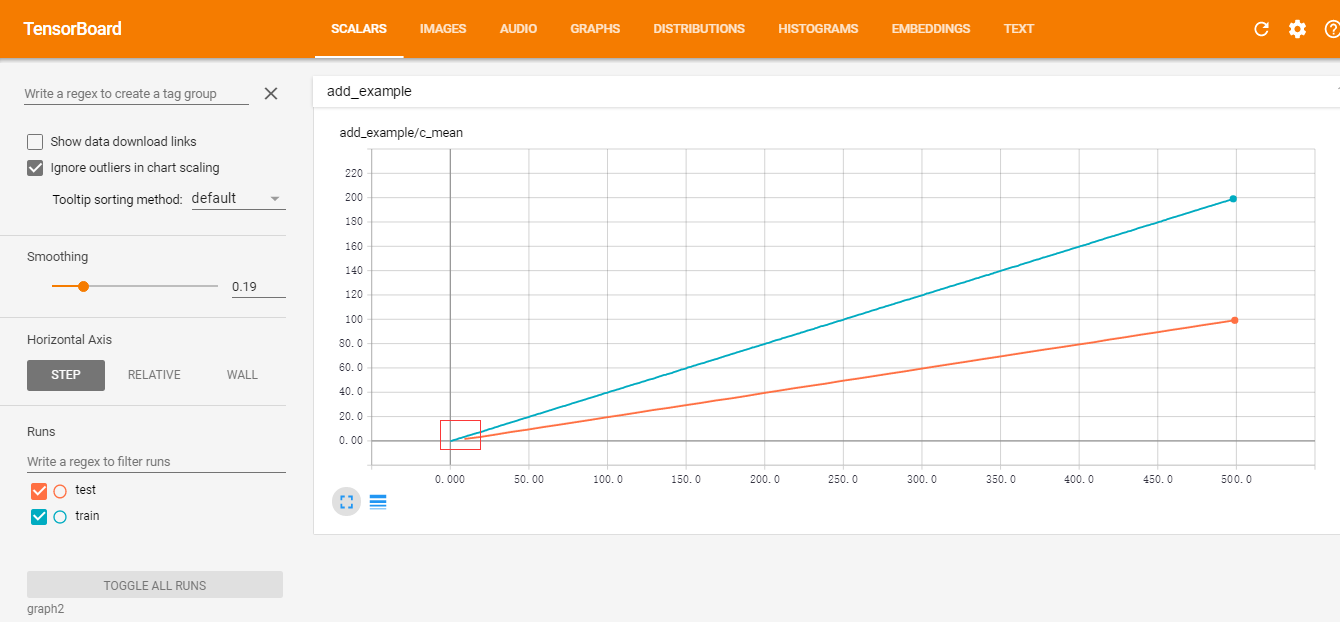

90 | #### T_06.tensorboard 的简单用法

91 | - [notebook](https://github.com/yongyehuang/Tensorflow-Tutorial/blob/master/example-notebook/Tutorial_06%20A%20very%20simple%20example%20for%20tensorboard.ipynb)

92 |

93 |

86 | lstm 对字符 8 的识别过程

87 |

88 |

89 |

90 | #### T_06.tensorboard 的简单用法

91 | - [notebook](https://github.com/yongyehuang/Tensorflow-Tutorial/blob/master/example-notebook/Tutorial_06%20A%20very%20simple%20example%20for%20tensorboard.ipynb)

92 |

93 |  94 | 简单的 tensorboard 可视化

95 |

96 |

97 |

98 | #### T_07.使用 tf.train.Saver() 来保存模型

99 | - [notebook](https://github.com/yongyehuang/Tensorflow-Tutorial/blob/master/example-notebook/Tutorial_07%20How%20to%20save%20the%20model.ipynb)

100 |

101 |

102 |

103 | #### T_08.【迁移学习】往一个已经保存好的 模型添加新的变量

104 | - [notebook](https://github.com/yongyehuang/Tensorflow-Tutorial/blob/master/example-notebook/Tutorial_08%20%20%5Btransfer%20learning%5D%20Add%20new%20variables%20to%20graph%20and%20save%20the%20new%20model.ipynb)

105 |

106 |

107 |

108 | #### T_09.使用 tfrecord 打包不定长的序列数据

109 | - [notebook](https://github.com/yongyehuang/Tensorflow-Tutorial/blob/master/example-notebook/Tutorial_09%20%5Btfrecord%5D%20use%20tfrecord%20to%20store%20sequences%20of%20different%20length.ipynb)

110 | - [reader-code](https://github.com/yongyehuang/Tensorflow-Tutorial/blob/master/utils_and_models/u02_tfrecord/tfrecord_2_seqence_reader.py)

111 | - [writer-code](https://github.com/yongyehuang/Tensorflow-Tutorial/blob/master/utils_and_models/u02_tfrecord/tfrecord_2_seqence_writer.py)

112 |

113 |

114 |

115 | #### T_10.使用 tf.data.Dataset 和 tfrecord 给 numpy 数据构建数据集

116 | - [dataset-notebook](https://github.com/yongyehuang/Tensorflow-Tutorial/blob/master/example-notebook/Tutorial_10%20%5BDataset%5D%20numpy%20data.ipynb)

117 | - [tfrecord-reader-code](https://github.com/yongyehuang/Tensorflow-Tutorial/blob/master/utils_and_models/u02_tfrecord/tfrecord_1_numpy_reader.py)

118 | - [tfrecord-writer-code](https://github.com/yongyehuang/Tensorflow-Tutorial/blob/master/utils_and_models/u02_tfrecord/tfrecord_1_numpy_writer.py)

119 |

120 |

121 | 下面是对 MNIST 数据训练集 55000 个样本 读取的一个速度比较,统一 `batch_size=128`,主要比较 `one-shot` 和 `initializable` 两种迭代方式:

122 |

123 | |iter_mode|buffer_size|100 batch(s)|

124 | |:----:|:---:|:---:|

125 | |one-shot|2000|125|

126 | |one-shot|5000|149|

127 | |initializable|2000|0.7|

128 | |initializable|5000|0.7|

129 |

130 | 可以看到,使用 `initializable` 方式的速度明显要快很多。因为使用 `one-shot` 方式会把整个矩阵放在图中,计算非常非常慢。

131 |

132 |

133 |

134 | #### T_11.使用 tf.data.Dataset 和 tfrecord 给 图片数据 构建数据集

135 | - [dataset-notebook](https://github.com/yongyehuang/Tensorflow-Tutorial/blob/master/example-notebook/Tutorial_11%20%5BDataset%5D%20image%20data.ipynb)

136 | - [tfrecord-writer-code](https://github.com/yongyehuang/Tensorflow-Tutorial/blob/master/utils_and_models/u02_tfrecord/tfrecord_3_image_writer.py)

137 | - [tfrecord-reader-code](https://github.com/yongyehuang/Tensorflow-Tutorial/blob/master/utils_and_models/u02_tfrecord/tfrecord_3_image_reader.py)

138 |

139 | 对于 png 数据的读取,我尝试了 3 组不同的方式: one-shot 方式, tf 的队列方式(queue), tfrecord 方式. 同样是在机械硬盘上操作, 结果是 tfrecord 方式明显要快一些。(batch_size=128,图片大小为256*256,机械硬盘)

140 |

141 | |iter_mode|buffer_size|100 batch(s)|

142 | |:----:|:---:|:---:|

143 | |one-shot|2000|75|

144 | |one-shot|5000|86|

145 | |tf.queue|2000|11|

146 | |tf.queue|5000|11|

147 | |tfrecord|2000|5.3|

148 | |tfrecord|5000|5.3|

149 |

150 | 如果是在 SSD 上面的话,tf 的队列方式应该也是比较快的.打包成 tfrecord 格式只是减少了小文件的读取,其实现也是使用队列的。

151 |

152 |

153 | #### T_12.TensorFlow 高级API tf.layers 的使用

154 | - [notebook](https://github.com/yongyehuang/Tensorflow-Tutorial/blob/master/example-notebook/Tutorial_12%20%5Btf.layer%5Dhigh%20layer%20API.ipynb)

155 |

156 | 使用 TensorFlow 原生的 API 能够帮助自己很好的理解网络的细节,但是往往比较低效。 tf.layers 和 tf.keras 一样,是一个封装得比较好的一个高级库,接口用着挺方便的。所以在开发的时候,可以使用高级的接口能够有效的提高工作效率。

157 |

158 |

159 | # 2.TensorFlow 实战(持续更新)

160 | 下面的每个例子都是相互独立的,每个文件夹下面的代码都是可以单独运行的,不依赖于其他文件夹。

161 |

162 | ## [m01_batch_normalization: Batch Normalization 的使用](https://github.com/yongyehuang/Tensorflow-Tutorial/tree/master/models/m01_batch_normalization)

163 | 参考:[tensorflow中batch normalization的用法](https://www.cnblogs.com/hrlnw/p/7227447.html)

164 |

165 | ## [m02_dcgan: 使用 DCGAN 生成二次元头像](https://github.com/yongyehuang/Tensorflow-Tutorial/tree/master/models/m02_dcgan)

166 | 参考:

167 | - [原论文:Unsupervised Representation Learning with Deep Convolutional Generative Adversarial Networks](https://link.zhihu.com/?target=https%3A//arxiv.org/abs/1511.06434)

168 | - [GAN学习指南:从原理入门到制作生成Demo](https://zhuanlan.zhihu.com/p/24767059)

169 | - [代码:carpedm20/DCGAN-tensorflow](https://github.com/carpedm20/DCGAN-tensorflow)

170 | - [代码:aymericdamien/TensorFlow-Examples](https://github.com/aymericdamien/TensorFlow-Examples/blob/master/examples/3_NeuralNetworks/dcgan.py)

171 |

172 | 这里的 notebook 和 .py 文件的内容是一样的。本例子和下面的 GAN 模型用的数据集也是用了[GAN学习指南:从原理入门到制作生成Demo](https://zhuanlan.zhihu.com/p/24767059) 的二次元头像,感觉这里例子比较有意思。如果想使用其他数据集的话,只需要把数据集换一下就行了。

173 |

174 | 下载链接: https://pan.baidu.com/s/1HBJpfkIFaGh0s2nfNXJsrA 密码: x39r

175 |

176 | 下载后把所有的图片解压到一个文件夹中,比如本例中是: `data_path = '../../data/anime/'`

177 |

178 | 运行: `python dcgan.py `

179 |

180 | ## [m03_wgan: 使用 WGAN 生成二次元头像](https://github.com/yongyehuang/Tensorflow-Tutorial/tree/master/models/m03_wgan)

181 | 这里的生成器和判别器我只实现了 DCGAN,没有实现 MLP. 如果想实现的话可以参考下面的两个例子。

182 | 参考:

183 | - [原论文:Wasserstein GAN](https://arxiv.org/pdf/1701.07875.pdf)

184 | - [代码:jiamings/wgan](https://github.com/jiamings/wgan)

185 | - [代码:Zardinality/WGAN-tensorflow](https://github.com/Zardinality/WGAN-tensorflow)

186 |

187 | 原版的 wgan: `python wgan.py `

188 |

189 | 改进的 wgan-gp: `python wgan_gp.py`

190 |

191 |

192 | ## [m04_pix2pix: image-to-image](https://github.com/yongyehuang/Tensorflow-Tutorial/tree/master/models/m04_pix2pix)

193 | 代码来自:[affinelayer/pix2pix-tensorflow](https://github.com/affinelayer/pix2pix-tensorflow)

194 |

195 |

--------------------------------------------------------------------------------

/ckpt/bi-lstm.ckpt-12.data-00000-of-00001:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/yongyehuang/Tensorflow-Tutorial/88b5cdbdf402a8ae7f65a540e5f81f3da8f45e7d/ckpt/bi-lstm.ckpt-12.data-00000-of-00001

--------------------------------------------------------------------------------

/ckpt/bi-lstm.ckpt-12.index:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/yongyehuang/Tensorflow-Tutorial/88b5cdbdf402a8ae7f65a540e5f81f3da8f45e7d/ckpt/bi-lstm.ckpt-12.index

--------------------------------------------------------------------------------

/ckpt/bi-lstm.ckpt-12.meta:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/yongyehuang/Tensorflow-Tutorial/88b5cdbdf402a8ae7f65a540e5f81f3da8f45e7d/ckpt/bi-lstm.ckpt-12.meta

--------------------------------------------------------------------------------

/ckpt/checkpoint:

--------------------------------------------------------------------------------

1 | model_checkpoint_path: "test-model.ckpt-2"

2 | all_model_checkpoint_paths: "test-model.ckpt-2"

3 |

--------------------------------------------------------------------------------

/data/msr_train.txt:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/yongyehuang/Tensorflow-Tutorial/88b5cdbdf402a8ae7f65a540e5f81f3da8f45e7d/data/msr_train.txt

--------------------------------------------------------------------------------

/doc/problem-solution.md:

--------------------------------------------------------------------------------

1 | ## 1.为什么 relu 激活函数会有失活现象?

2 | > [一个非常大的梯度流过一个 ReLU 神经元,更新过参数之后,这个神经元再也不会对任何数据有激活现象了。](http://blog.csdn.net/cyh_24/article/details/50593400)

3 | > [Must Know Tips/Tricks in Deep Neural Networks (by Xiu-Shen Wei)](http://lamda.nju.edu.cn/weixs/project/CNNTricks/CNNTricks.html)

4 | > [深度学习系列(8):激活函数](https://plushunter.github.io/2017/05/12/%E6%B7%B1%E5%BA%A6%E5%AD%A6%E4%B9%A0%E7%B3%BB%E5%88%97%EF%BC%888%EF%BC%89%EF%BC%9A%E6%BF%80%E6%B4%BB%E5%87%BD%E6%95%B0/)

5 |

6 | A: 在某次反向传播中神经元 C 传过一个很大的梯度,和 C 相连的所有权重系数 w 都变成了很大的负数。假设前一层输出也是 relu 激活函数,那么前一层的输出(C 的输入)一定是非负数。那么神经元 C 一定会处于0梯度状态,没有任何数据(输入)能够拯救他。也就是失活了。

7 |

8 | 那么,**我觉得,在初始化的时候,如果本层(L)使用 relu 激活函数,那么 biases 最好使用较小的正数初始化,如0.01,0.005等。这样可以在一定程度上使得输出偏向于正数,从而避免梯度消失。** 但是也有一点好处,由于部分神经元失活了,这和做了 dropout 一样,能够有助于防止过拟合。

9 |

10 | **除了上面的失活现象以外,relu 激活函数还有偏移现象:输出均值恒大于零。** 失活现象和偏移现象会共同影响网络的收敛性。#8.数据偏移怎么影响网络的收敛?#

11 |

12 |

13 |

14 | ## 2.[code]AlexNet(等 CNN model) 调参过程中要注意什么?

15 | > 在 github 上面有很多不同版本的 AlexNet,网络结构基本都是一样的,在官方的 tensorflow slim 框架中也提供了 alexnet-v2 版本。在我的任务中,发现 slim 版本的 alexnet 效果要比其他版本的好很多(2~4个百分点)。明明结构一样,为什么差别这么大。

16 |

17 | A:虽然我觉得 slim 框架很不友好,但是里边的参数应该都是经过测试得到的较好的结果。很多小的细节都会影响最后的结果,具体要注意的有下面这些点:

18 | - 初始化方式。在我的任务中,初始化方式影响很大。slim 中的每一层的初始化方式和 caffe 的版本中基本一致。包括 conv 层, fc 层的 weights, biases。我就是最后一个 fc 层改了一下初始化,loss 嗖嗖地就降了。

19 | - 优化器。在原版中用的是 RMSProp 优化器,但坑的是用的不是默认参数,最好设置成跟 slim 一致。

20 | - 数据预处理。我用 caffe 算出了整个数据集的均值,然后把原始图片[0.~255.]减去均值,效果还不错。

21 | - lrn。在 slim 的 alexnet-v2 版本中去掉了局部响应层。结果没有变差,速度快了大概一倍吧。

22 |

23 |

24 |

25 | ## 3.[code]tensorflow 训练模型,报无法分配 memory,但是程序继续运行,这时运行的结果有问题吗?

26 | >**ran out of memory trying to allocate 3.27GiB. The caller indicates that this is not a failure, but may mean that there could be performance gains if more memory is available.** 这时候,使用 watch nvidia-smi 看显卡使用情况,就会发现使用率非常低。

27 |

28 | A:这种情况下就应该把batch_size减小了!!!虽然程序还在运行,但是你会发现,结果根本不对,loss 根本就没降。这不是因为你的模型不好,学习率不对。把 batch_size 调小看看结果再下定论。

29 |

30 |

31 |

32 | ## 4.什么情况下用 sigmoid 会比 relu 好呢?

33 | > sigmoid 函数有陷入饱和区(造成梯度消失),非零中心和计算效率低的缺点。那么有什么情况下用 sigmoid 会好呢?

34 |

35 | A: 如果只是作为一个普通隐含层的激活函数的话,还是用 relu 就好了。但是如果你后继需要对某层特征进行量化处理的话,sigmoid 就起作用了。relu 层的输出范围太大,不适合做量化。这时候就体现出 0 和 1 的优越性了。同样,使用 tanh 层也是可以的。

36 |

37 |

38 |

39 | ## 5.sigmoid 非 zero-center 会有什么影响,relu 有这样的影响吗?怎么理解因此导致梯度下降权重更新时出现 z 字型的下降?

40 | > [Sigmoid函数的输出不是零中心的。这个性质并不是我们想要的,因为在神经网络后面层中神经元得到的数据不是零中心的。这一情况将影响梯度下降的运作,因为如果输入神经元的数据总是正数(比如在 f=wx+b 中每个元素都是 x>0),那么关于 w 的梯度在反向传播中,将会要么全部是正数,或者全部是负数(比如f=-wx+b).这将会导致梯度下降权重更新时出现z字型的下降(如下图所示)。](https://plushunter.github.io/2017/05/12/%E6%B7%B1%E5%BA%A6%E5%AD%A6%E4%B9%A0%E7%B3%BB%E5%88%97%EF%BC%888%EF%BC%89%EF%BC%9A%E6%BF%80%E6%B4%BB%E5%87%BD%E6%95%B0/)

41 |

42 |

43 |

44 |

45 | ## 6.在 relu 层前面的 BN 层中,为什么不用对 scale 做处理

46 | > [mnist_4.1_batchnorm_five_layers_relu.py](https://github.com/martin-gorner/tensorflow-mnist-tutorial/blob/master/mnist_4.1_batchnorm_five_layers_relu.py)

47 |

48 | A:没想明白.

49 |

50 |

51 |

52 | ## 7.在问题 1 中,relu 激活函数的偏移现象就是数据的输入分布(zero-center)和输出分布变了(no-zero-center).在 BN 中也提到过,这种数据分布的变化为什么会影响网络的收敛性?

53 | > [对于神经网络的各层输出,由于它们经过了层内操作作用,其分布显然与各层对应的输入信号分布不同,而且差异会随着网络深度增大而增大,可是它们所能“指示”的样本标记(label)仍然是不变的,这便符合了covariate shift的定义。](https://www.zhihu.com/question/38102762) 这样源空间 -> 目标空间 的变化怎么影响网络的收敛?

54 |

55 |

56 |

57 | ## 8.在BN中,为什么通过将activation规范为均值和方差一致的手段使得原本会减小的activation的scale变大,防止梯度消失?

58 | [在BN中,是通过将activation规范为均值和方差一致的手段使得原本会减小的activation的scale变大。可以说是一种更有效的local response normalization方法(见4.2.1节)。](https://www.zhihu.com/question/38102762) 在 BN 中,通过mini-batch来规范化某些层/所有层的输入,从而可以固定每层输入信号的均值与方差。对于 mini-batch,首先减去均值,方差归一;接着进行 scale and shift 操作,也就是用学习到的全局均值和方差替换掉了 mini-batch 的均值和方差。 然后送入激活函数如(sigmoid)中,**这时候并没有把sigmoid的输入约束到 0 附近呀,为什么就能够避免梯度消失?**

59 |

60 | A:首先,对于 sigmoid 和 tanh 来说,当输入的绝对值很大时就会陷入饱和区。这个激活函数的输入一般指的是 z(=wx)。

61 | z 很大 -> 陷入饱和区

62 | z 很大 -> wx 这两个向量的点积很大 -> 如果 w 或者 x 的元素值很大,较容易使得 wx 很大? -> w,x 的值很大

63 | BN 避免了梯度消失 -> BN 处理使得 x 向 0 靠近了。但是没有呀??

64 |

65 | [BN使得输入分布大致稳定,从而缩短了参数适应分布的过程,进而缩短训练时间](https://zhuanlan.zhihu.com/p/26532249)

66 |

67 |

68 |

69 | ## 9.center loss 训练有什么需要注意的?center loss 会使类间的相对距离增大吗?

70 | > center loss 最小化类内距离,但是并没有显式地去最大化类间距离。在我的实验中,对加了center loss 的高层特征进行统计分析,发现类内特征的方差明显要比原来更小了,但是特征均值也比原来更小了。这样类间的绝对距离也会比原来小,假设类间中心距离为 L-inter,类内的距离为 L-intra, 类间的相对距离为 L-relative = (L-inter / L-intra)。那么,加上 center loss 后, L-relative 怎么变化?

71 |

72 | A:1.在模型训练的时候记得把每个类的中心特征向量留出接口,以便后继分析。

73 |

74 |

75 |

76 |

--------------------------------------------------------------------------------

/example-notebook/.ipynb_checkpoints/Tutorial_02 A simple feedforward network for MNIST-checkpoint.ipynb:

--------------------------------------------------------------------------------

1 | {

2 | "cells": [

3 | {

4 | "cell_type": "markdown",

5 | "metadata": {},

6 | "source": [

7 | "# 两层FC层做分类:MNIST\n",

8 | "\n",

9 | "在本教程中,我们来实现一个非常简单的两层全连接层来完成MNIST数据的分类问题。\n",

10 | "\n",

11 | "输入[-1,28*28], FC1 有 1024 个neurons, FC2 有 10 个neurons。这么简单的一个全连接网络,结果测试准确率达到了 0.98。还是非常棒的!!!"

12 | ]

13 | },

14 | {

15 | "cell_type": "code",

16 | "execution_count": 1,

17 | "metadata": {},

18 | "outputs": [],

19 | "source": [

20 | "from __future__ import print_function\n",

21 | "from __future__ import division\n",

22 | "from __future__ import absolute_import\n",

23 | "\n",

24 | "import warnings\n",

25 | "warnings.filterwarnings('ignore') # 不打印 warning \n",

26 | "\n",

27 | "import numpy as np\n",

28 | "import tensorflow as tf\n",

29 | "\n",

30 | "# 设置按需使用GPU\n",

31 | "config = tf.ConfigProto()\n",

32 | "config.gpu_options.allow_growth = True\n",

33 | "sess = tf.Session(config=config)"

34 | ]

35 | },

36 | {

37 | "cell_type": "markdown",

38 | "metadata": {},

39 | "source": [

40 | "### 1.导入数据\n",

41 | "下面导入数据这部分的警告可以不用管先,TensorFlow 非常恶心的地方就是不停地改 API,改到发麻。"

42 | ]

43 | },

44 | {

45 | "cell_type": "code",

46 | "execution_count": 2,

47 | "metadata": {},

48 | "outputs": [

49 | {

50 | "name": "stdout",

51 | "output_type": "stream",

52 | "text": [

53 | "WARNING:tensorflow:From /usr/local/lib/python3.5/dist-packages/tensorflow/contrib/learn/python/learn/datasets/base.py:198: retry (from tensorflow.contrib.learn.python.learn.datasets.base) is deprecated and will be removed in a future version.\n",

54 | "Instructions for updating:\n",

55 | "Use the retry module or similar alternatives.\n",

56 | "WARNING:tensorflow:From :3: read_data_sets (from tensorflow.contrib.learn.python.learn.datasets.mnist) is deprecated and will be removed in a future version.\n",

57 | "Instructions for updating:\n",

58 | "Please use alternatives such as official/mnist/dataset.py from tensorflow/models.\n",

59 | "WARNING:tensorflow:From /usr/local/lib/python3.5/dist-packages/tensorflow/contrib/learn/python/learn/datasets/mnist.py:260: maybe_download (from tensorflow.contrib.learn.python.learn.datasets.base) is deprecated and will be removed in a future version.\n",

60 | "Instructions for updating:\n",

61 | "Please write your own downloading logic.\n",

62 | "WARNING:tensorflow:From /usr/local/lib/python3.5/dist-packages/tensorflow/contrib/learn/python/learn/datasets/mnist.py:262: extract_images (from tensorflow.contrib.learn.python.learn.datasets.mnist) is deprecated and will be removed in a future version.\n",

63 | "Instructions for updating:\n",

64 | "Please use tf.data to implement this functionality.\n",

65 | "Extracting ../data/MNIST_data/train-images-idx3-ubyte.gz\n",

66 | "WARNING:tensorflow:From /usr/local/lib/python3.5/dist-packages/tensorflow/contrib/learn/python/learn/datasets/mnist.py:267: extract_labels (from tensorflow.contrib.learn.python.learn.datasets.mnist) is deprecated and will be removed in a future version.\n",

67 | "Instructions for updating:\n",

68 | "Please use tf.data to implement this functionality.\n",

69 | "Extracting ../data/MNIST_data/train-labels-idx1-ubyte.gz\n",

70 | "Extracting ../data/MNIST_data/t10k-images-idx3-ubyte.gz\n",

71 | "Extracting ../data/MNIST_data/t10k-labels-idx1-ubyte.gz\n",

72 | "WARNING:tensorflow:From /usr/local/lib/python3.5/dist-packages/tensorflow/contrib/learn/python/learn/datasets/mnist.py:290: DataSet.__init__ (from tensorflow.contrib.learn.python.learn.datasets.mnist) is deprecated and will be removed in a future version.\n",

73 | "Instructions for updating:\n",

74 | "Please use alternatives such as official/mnist/dataset.py from tensorflow/models.\n"

75 | ]

76 | }

77 | ],

78 | "source": [

79 | "# 用tensorflow 导入数据\n",

80 | "from tensorflow.examples.tutorials.mnist import input_data\n",

81 | "mnist = input_data.read_data_sets('../data/MNIST_data', one_hot=False, source_url='http://yann.lecun.com/exdb/mnist/')"

82 | ]

83 | },

84 | {

85 | "cell_type": "code",

86 | "execution_count": 3,

87 | "metadata": {},

88 | "outputs": [

89 | {

90 | "name": "stdout",

91 | "output_type": "stream",

92 | "text": [

93 | "training data shape (55000, 784)\n",

94 | "training label shape (55000,)\n"

95 | ]

96 | }

97 | ],

98 | "source": [

99 | "print('training data shape ', mnist.train.images.shape)\n",

100 | "print('training label shape ', mnist.train.labels.shape)"

101 | ]

102 | },

103 | {

104 | "cell_type": "markdown",

105 | "metadata": {},

106 | "source": [

107 | "### 2. 构建网络"

108 | ]

109 | },

110 | {

111 | "cell_type": "code",

112 | "execution_count": 4,

113 | "metadata": {},

114 | "outputs": [

115 | {

116 | "name": "stdout",

117 | "output_type": "stream",

118 | "text": [

119 | "Tensor(\"add_1:0\", shape=(?, 10), dtype=float32)\n"

120 | ]

121 | }

122 | ],

123 | "source": [

124 | "# 权值初始化\n",

125 | "def weight_variable(shape):\n",

126 | " # 用正态分布来初始化权值\n",

127 | " initial = tf.truncated_normal(shape, stddev=0.1)\n",

128 | " return tf.Variable(initial)\n",

129 | "\n",

130 | "def bias_variable(shape):\n",

131 | " # 本例中用relu激活函数,所以用一个很小的正偏置较好\n",

132 | " initial = tf.constant(0.1, shape=shape)\n",

133 | " return tf.Variable(initial)\n",

134 | "\n",

135 | "\n",

136 | "# input_layer\n",

137 | "X_input = tf.placeholder(tf.float32, [None, 784])\n",

138 | "y_input = tf.placeholder(tf.int64, [None]) # 不使用 one-hot \n",

139 | "\n",

140 | "# FC1\n",

141 | "W_fc1 = weight_variable([784, 1024])\n",

142 | "b_fc1 = bias_variable([1024])\n",

143 | "h_fc1 = tf.nn.relu(tf.matmul(X_input, W_fc1) + b_fc1)\n",

144 | "\n",

145 | "# FC2\n",

146 | "W_fc2 = weight_variable([1024, 10])\n",

147 | "b_fc2 = bias_variable([10])\n",

148 | "# y_pre = tf.nn.softmax(tf.matmul(h_fc1, W_fc2) + b_fc2)\n",

149 | "logits = tf.matmul(h_fc1, W_fc2) + b_fc2\n",

150 | "\n",

151 | "print(logits)"

152 | ]

153 | },

154 | {

155 | "cell_type": "markdown",

156 | "metadata": {},

157 | "source": [

158 | "### 3.训练和评估\n",

159 | "下面我们将输入 mnist 的数据来进行训练。在下面有个 mnist.train.next_batch(batch_size=100) 的操作,每次从数据集中取出100个样本。如果想了解怎么实现的话建议看一下源码,其实挺简单的,很多时候需要我们自己去实现读取数据的这个函数。"

160 | ]

161 | },

162 | {

163 | "cell_type": "code",

164 | "execution_count": 5,

165 | "metadata": {},

166 | "outputs": [

167 | {

168 | "name": "stdout",

169 | "output_type": "stream",

170 | "text": [

171 | "step 500, train cost=0.153510, acc=0.950000; test cost=0.123346, acc=0.962500\n",

172 | "step 1000, train cost=0.087353, acc=0.960000; test cost=0.104876, acc=0.967400\n",

173 | "step 1500, train cost=0.033138, acc=0.990000; test cost=0.080937, acc=0.975200\n",

174 | "step 2000, train cost=0.028831, acc=0.990000; test cost=0.077214, acc=0.977800\n",

175 | "step 2500, train cost=0.004817, acc=1.000000; test cost=0.066441, acc=0.980000\n",

176 | "step 3000, train cost=0.015692, acc=1.000000; test cost=0.067741, acc=0.979400\n",

177 | "step 3500, train cost=0.011569, acc=1.000000; test cost=0.075138, acc=0.979400\n",

178 | "step 4000, train cost=0.006310, acc=1.000000; test cost=0.074501, acc=0.978300\n",

179 | "step 4500, train cost=0.008795, acc=1.000000; test cost=0.076483, acc=0.979100\n",

180 | "step 5000, train cost=0.027700, acc=0.980000; test cost=0.073973, acc=0.980000\n"

181 | ]

182 | }

183 | ],

184 | "source": [

185 | "# 1.损失函数:cross_entropy\n",

186 | "# 如果label是 one-hot 的话要换一下损失函数,参考:https://blog.csdn.net/tz_zs/article/details/76086457\n",

187 | "cross_entropy = tf.reduce_mean(tf.nn.sparse_softmax_cross_entropy_with_logits(labels=y_input, logits=logits)) \n",

188 | "# 2.优化函数:AdamOptimizer, 优化速度要比 GradientOptimizer 快很多\n",

189 | "train_step = tf.train.AdamOptimizer(0.001).minimize(cross_entropy)\n",

190 | "\n",

191 | "# 3.预测结果评估\n",

192 | "# 预测值中最大值(1)即分类结果,是否等于原始标签中的(1)的位置。argmax()取最大值所在的下标\n",

193 | "correct_prediction = tf.equal(tf.argmax(logits, 1), y_input) \n",

194 | "accuracy = tf.reduce_mean(tf.cast(correct_prediction, tf.float32))\n",

195 | "\n",

196 | "# 开始运行\n",

197 | "sess.run(tf.global_variables_initializer())\n",

198 | "# 这大概迭代了不到 10 个 epoch, 训练准确率已经达到了0.98\n",

199 | "for i in range(5000):\n",

200 | " X_batch, y_batch = mnist.train.next_batch(batch_size=100)\n",

201 | " cost, acc, _ = sess.run([cross_entropy, accuracy, train_step], feed_dict={X_input: X_batch, y_input: y_batch})\n",

202 | " if (i+1) % 500 == 0:\n",

203 | " test_cost, test_acc = sess.run([cross_entropy, accuracy], feed_dict={X_input: mnist.test.images, y_input: mnist.test.labels})\n",

204 | " print(\"step {}, train cost={:.6f}, acc={:.6f}; test cost={:.6f}, acc={:.6f}\".format(i+1, cost, acc, test_cost, test_acc))\n",

205 | " "

206 | ]

207 | }

208 | ],

209 | "metadata": {

210 | "anaconda-cloud": {},

211 | "kernelspec": {

212 | "display_name": "Python 3",

213 | "language": "python",

214 | "name": "python3"

215 | },

216 | "language_info": {

217 | "codemirror_mode": {

218 | "name": "ipython",

219 | "version": 3

220 | },

221 | "file_extension": ".py",

222 | "mimetype": "text/x-python",

223 | "name": "python",

224 | "nbconvert_exporter": "python",

225 | "pygments_lexer": "ipython3",

226 | "version": "3.5.2"

227 | }

228 | },

229 | "nbformat": 4,

230 | "nbformat_minor": 1

231 | }

232 |

--------------------------------------------------------------------------------

/example-notebook/.ipynb_checkpoints/Tutorial_03_2 The usage of Collection-checkpoint.ipynb:

--------------------------------------------------------------------------------

1 | {

2 | "cells": [

3 | {

4 | "cell_type": "markdown",

5 | "metadata": {},

6 | "source": [

7 | "# TensorFlow 变量命名管理机制(二)\n",

8 | "\n",

9 | "\n",

10 | "### 1. 用 collection 来聚合变量\n",

11 | "前面介绍了 tensorflow 的变量命名机制,这里要补充一下 `tf.add_to_collection` 和 `tf.get_collection`的用法。\n",

12 | "\n",

13 | "因为神经网络中的参数非常多,有时候我们 只想对某些参数进行操作。除了前面通过变量名称的方法来获取参数之外, TensorFlow 中还有 collection 这么一种操作。\n",

14 | "\n",

15 | "collection 可以聚合多个**变量**或者**操作**。"

16 | ]

17 | },

18 | {

19 | "cell_type": "code",

20 | "execution_count": 1,

21 | "metadata": {},

22 | "outputs": [],

23 | "source": [

24 | "import warnings\n",

25 | "warnings.filterwarnings('ignore') # 不打印 warning \n",

26 | "\n",

27 | "import tensorflow as tf\n",

28 | "\n",

29 | "# 设置GPU按需增长\n",

30 | "config = tf.ConfigProto()\n",

31 | "config.gpu_options.allow_growth = True\n",

32 | "sess = tf.Session(config=config)"

33 | ]

34 | },

35 | {

36 | "cell_type": "markdown",

37 | "metadata": {},

38 | "source": [

39 | "参考:[如何利用tf.add_to_collection、tf.get_collection以及tf.add_n来简化正则项的计算](https://blog.csdn.net/weixin_39980291/article/details/78352125)"

40 | ]

41 | },

42 | {

43 | "cell_type": "code",

44 | "execution_count": 2,

45 | "metadata": {},

46 | "outputs": [

47 | {

48 | "name": "stdout",

49 | "output_type": "stream",

50 | "text": [

51 | "vars in col1: [, ]\n"

52 | ]

53 | }

54 | ],

55 | "source": [

56 | "# 把变量添加到一个 collection 中\n",

57 | "v1 = tf.Variable([1,2,3], name='v1')\n",

58 | "v2 = tf.Variable([2], name='v2')\n",

59 | "v3 = tf.get_variable(name='v3', shape=(2,3))\n",

60 | "\n",

61 | "tf.add_to_collection('col1', v1) # 把 v1 添加到 col1 中\n",

62 | "tf.add_to_collection('col1', v3)\n",

63 | "\n",

64 | "col1s = tf.get_collection(key='col1') # 获取 col1 的变量\n",

65 | "print('vars in col1:', col1s)"

66 | ]

67 | },

68 | {

69 | "cell_type": "markdown",

70 | "metadata": {},

71 | "source": [

72 | "除了把变量添加到集合中,还可以把操作添加到集合中。"

73 | ]

74 | },

75 | {

76 | "cell_type": "code",

77 | "execution_count": 3,

78 | "metadata": {},

79 | "outputs": [

80 | {

81 | "name": "stdout",

82 | "output_type": "stream",

83 | "text": [

84 | "vars in col1: [, , ]\n"

85 | ]

86 | }

87 | ],

88 | "source": [

89 | "op1 = tf.add(v1, 2, name='add_op')\n",

90 | "tf.add_to_collection('col1', op1)\n",

91 | "col1s = tf.get_collection(key='col1') # 获取 col1 的变量\n",

92 | "print('vars in col1:', col1s)"

93 | ]

94 | },

95 | {

96 | "cell_type": "markdown",

97 | "metadata": {},

98 | "source": [

99 | "此外,还可以加上 scope 的约束。"

100 | ]

101 | },

102 | {

103 | "cell_type": "code",

104 | "execution_count": 3,

105 | "metadata": {},

106 | "outputs": [

107 | {

108 | "name": "stdout",

109 | "output_type": "stream",

110 | "text": [

111 | "vars in col1 with scope=model: []\n"

112 | ]

113 | }

114 | ],

115 | "source": [

116 | "with tf.variable_scope('model'):\n",

117 | " v4 = tf.get_variable('v4', shape=[3,4])\n",

118 | " v5 = tf.Variable([1,2,3], name='v5')\n",

119 | "\n",

120 | "tf.add_to_collection('col1', v5)\n",

121 | "col1_vars = tf.get_collection(key='col1', scope='model') # 获取 col1 的变量\n",

122 | "print('vars in col1 with scope=model: ', col1_vars)"

123 | ]

124 | },

125 | {

126 | "cell_type": "markdown",

127 | "metadata": {},

128 | "source": [

129 | "### 2. tf.GraphKeys \n",

130 | "\n",

131 | "参考:[tf.GraphKeys 函数](https://www.w3cschool.cn/tensorflow_python/tensorflow_python-ne7t2ezd.html)\n",

132 | "\n",

133 | "用于图形集合的标准名称。\n",

134 | "\n",

135 | "\n",

136 | "标准库使用各种已知的名称来收集和检索与图形相关联的值。例如,如果没有指定,则 tf.Optimizer 子类默认优化收集的变量tf.GraphKeys.TRAINABLE_VARIABLES,但也可以传递显式的变量列表。\n",

137 | "\n",

138 | "定义了以下标准键:\n",

139 | "\n",

140 | "- GLOBAL_VARIABLES:默认的 Variable 对象集合,在分布式环境共享(模型变量是其中的子集)。参考:tf.global_variables。通常,所有TRAINABLE_VARIABLES 变量都将在 MODEL_VARIABLES,所有 MODEL_VARIABLES 变量都将在 GLOBAL_VARIABLES。\n",

141 | "- LOCAL_VARIABLES:每台计算机的局部变量对象的子集。通常用于临时变量,如计数器。注意:使用 tf.contrib.framework.local_variable 添加到此集合。\n",

142 | "- MODEL_VARIABLES:在模型中用于推理(前馈)的变量对象的子集。注意:使用 tf.contrib.framework.model_variable 添加到此集合。\n",

143 | "- TRAINABLE_VARIABLES:将由优化器训练的变量对象的子集。\n",

144 | "- SUMMARIES:在关系图中创建的汇总张量对象。\n",

145 | "- QUEUE_RUNNERS:用于为计算生成输入的 QueueRunner 对象。\n",

146 | "- MOVING_AVERAGE_VARIABLES:变量对象的子集,它也将保持移动平均值。\n",

147 | "- REGULARIZATION_LOSSES:在图形构造期间收集的正规化损失。\n",

148 | "\n",

149 | "这个知道就好了,要用的时候知道是怎么回事就行了。比如在 BN 层中就有这东西。"

150 | ]

151 | },

152 | {

153 | "cell_type": "code",

154 | "execution_count": null,

155 | "metadata": {},

156 | "outputs": [],

157 | "source": []

158 | },

159 | {

160 | "cell_type": "code",

161 | "execution_count": null,

162 | "metadata": {},

163 | "outputs": [],

164 | "source": []

165 | }

166 | ],

167 | "metadata": {

168 | "anaconda-cloud": {},

169 | "kernelspec": {

170 | "display_name": "Python 3",

171 | "language": "python",

172 | "name": "python3"

173 | },

174 | "language_info": {

175 | "codemirror_mode": {

176 | "name": "ipython",

177 | "version": 3

178 | },

179 | "file_extension": ".py",

180 | "mimetype": "text/x-python",

181 | "name": "python",

182 | "nbconvert_exporter": "python",

183 | "pygments_lexer": "ipython3",

184 | "version": "3.5.2"

185 | }

186 | },

187 | "nbformat": 4,

188 | "nbformat_minor": 1

189 | }

190 |

--------------------------------------------------------------------------------

/example-notebook/.ipynb_checkpoints/Tutorial_04_3 Convolutional network for MNIST(3)-checkpoint.ipynb:

--------------------------------------------------------------------------------

1 | {

2 | "cells": [

3 | {

4 | "cell_type": "markdown",

5 | "metadata": {},

6 | "source": [

7 | "## CNN做MNIST分类\n",

8 | "\n",

9 | "使用 tf.layers 高级 API 构建 CNN "

10 | ]

11 | },

12 | {

13 | "cell_type": "code",

14 | "execution_count": 1,

15 | "metadata": {},

16 | "outputs": [

17 | {

18 | "name": "stderr",

19 | "output_type": "stream",

20 | "text": [

21 | "/usr/local/lib/python3.5/dist-packages/h5py/__init__.py:36: FutureWarning: Conversion of the second argument of issubdtype from `float` to `np.floating` is deprecated. In future, it will be treated as `np.float64 == np.dtype(float).type`.\n",

22 | " from ._conv import register_converters as _register_converters\n"

23 | ]

24 | }

25 | ],

26 | "source": [

27 | "import numpy as np\n",

28 | "import tensorflow as tf\n",

29 | "\n",

30 | "# 设置按需使用GPU\n",

31 | "config = tf.ConfigProto()\n",

32 | "config.gpu_options.allow_growth = True\n",

33 | "sess = tf.InteractiveSession(config=config)\n",

34 | "\n",

35 | "import time"

36 | ]

37 | },

38 | {

39 | "cell_type": "markdown",

40 | "metadata": {},

41 | "source": [

42 | "## 1.导入数据,用 tensorflow 导入"

43 | ]

44 | },

45 | {

46 | "cell_type": "code",

47 | "execution_count": 2,

48 | "metadata": {},

49 | "outputs": [

50 | {

51 | "name": "stdout",

52 | "output_type": "stream",

53 | "text": [

54 | "WARNING:tensorflow:From /usr/local/lib/python3.5/dist-packages/tensorflow/contrib/learn/python/learn/datasets/base.py:198: retry (from tensorflow.contrib.learn.python.learn.datasets.base) is deprecated and will be removed in a future version.\n",

55 | "Instructions for updating:\n",

56 | "Use the retry module or similar alternatives.\n",

57 | "WARNING:tensorflow:From :3: read_data_sets (from tensorflow.contrib.learn.python.learn.datasets.mnist) is deprecated and will be removed in a future version.\n",

58 | "Instructions for updating:\n",

59 | "Please use alternatives such as official/mnist/dataset.py from tensorflow/models.\n",

60 | "WARNING:tensorflow:From /usr/local/lib/python3.5/dist-packages/tensorflow/contrib/learn/python/learn/datasets/mnist.py:260: maybe_download (from tensorflow.contrib.learn.python.learn.datasets.base) is deprecated and will be removed in a future version.\n",

61 | "Instructions for updating:\n",

62 | "Please write your own downloading logic.\n",

63 | "WARNING:tensorflow:From /usr/local/lib/python3.5/dist-packages/tensorflow/contrib/learn/python/learn/datasets/mnist.py:262: extract_images (from tensorflow.contrib.learn.python.learn.datasets.mnist) is deprecated and will be removed in a future version.\n",

64 | "Instructions for updating:\n",

65 | "Please use tf.data to implement this functionality.\n",

66 | "Extracting MNIST_data/train-images-idx3-ubyte.gz\n",

67 | "WARNING:tensorflow:From /usr/local/lib/python3.5/dist-packages/tensorflow/contrib/learn/python/learn/datasets/mnist.py:267: extract_labels (from tensorflow.contrib.learn.python.learn.datasets.mnist) is deprecated and will be removed in a future version.\n",

68 | "Instructions for updating:\n",

69 | "Please use tf.data to implement this functionality.\n",

70 | "Extracting MNIST_data/train-labels-idx1-ubyte.gz\n",

71 | "Extracting MNIST_data/t10k-images-idx3-ubyte.gz\n",

72 | "Extracting MNIST_data/t10k-labels-idx1-ubyte.gz\n",

73 | "WARNING:tensorflow:From /usr/local/lib/python3.5/dist-packages/tensorflow/contrib/learn/python/learn/datasets/mnist.py:290: DataSet.__init__ (from tensorflow.contrib.learn.python.learn.datasets.mnist) is deprecated and will be removed in a future version.\n",

74 | "Instructions for updating:\n",

75 | "Please use alternatives such as official/mnist/dataset.py from tensorflow/models.\n",

76 | "(10000,)\n",

77 | "(55000,)\n"

78 | ]

79 | }

80 | ],

81 | "source": [

82 | "# 用tensorflow 导入数据\n",

83 | "from tensorflow.examples.tutorials.mnist import input_data\n",

84 | "mnist = input_data.read_data_sets('MNIST_data', one_hot=False)\n",

85 | "# 看看咱们样本的数量\n",

86 | "print(mnist.test.labels.shape)\n",

87 | "print(mnist.train.labels.shape)"

88 | ]

89 | },

90 | {

91 | "cell_type": "markdown",

92 | "metadata": {},

93 | "source": [

94 | "## 2. 构建网络"

95 | ]

96 | },

97 | {

98 | "cell_type": "code",

99 | "execution_count": 3,

100 | "metadata": {},

101 | "outputs": [

102 | {

103 | "name": "stdout",

104 | "output_type": "stream",

105 | "text": [

106 | "Finished building network.\n"

107 | ]

108 | }

109 | ],

110 | "source": [

111 | "with tf.name_scope('inputs'):\n",

112 | " X_ = tf.placeholder(tf.float32, [None, 784])\n",

113 | " y_ = tf.placeholder(tf.int64, [None])\n",

114 | "\n",

115 | "# 把X转为卷积所需要的形式\n",

116 | "X = tf.reshape(X_, [-1, 28, 28, 1])\n",

117 | "h_conv1 = tf.layers.conv2d(X, filters=32, kernel_size=5, strides=1, padding='same', activation=tf.nn.relu, name='conv1')\n",

118 | "h_pool1 = tf.layers.max_pooling2d(h_conv1, pool_size=2, strides=2, padding='same', name='pool1')\n",

119 | "\n",

120 | "h_conv2 = tf.layers.conv2d(h_pool1, filters=64, kernel_size=5, strides=1, padding='same',activation=tf.nn.relu, name='conv2')\n",

121 | "h_pool2 = tf.layers.max_pooling2d(h_conv2, pool_size=2, strides=2, padding='same', name='pool2')\n",

122 | "\n",

123 | "# flatten\n",

124 | "h_pool2_flat = tf.reshape(h_pool2, [-1, 7*7*64])\n",

125 | "h_fc1 = tf.layers.dense(h_pool2_flat, 1024, name='fc1', activation=tf.nn.relu)\n",

126 | "\n",

127 | "# dropout: 输出的维度和h_fc1一样,只是随机部分值被值为零\n",

128 | "keep_prob = tf.placeholder(tf.float32)\n",

129 | "h_fc1_drop = tf.nn.dropout(h_fc1, 0.5) # 实际测试的时候这里不应该使用 0.5,这里为了方便演示都这样写而已\n",

130 | "h_fc2 = tf.layers.dense(h_fc1_drop, units=10, name='fc2')\n",

131 | "# y_conv = tf.nn.softmax(h_fc2)\n",

132 | "y_conv = h_fc2\n",

133 | "print('Finished building network.')"

134 | ]

135 | },

136 | {

137 | "cell_type": "code",

138 | "execution_count": 4,

139 | "metadata": {},

140 | "outputs": [

141 | {

142 | "name": "stdout",

143 | "output_type": "stream",

144 | "text": [

145 | "Tensor(\"conv1/Relu:0\", shape=(?, 28, 28, 32), dtype=float32)\n",

146 | "Tensor(\"pool1/MaxPool:0\", shape=(?, 14, 14, 32), dtype=float32)\n",

147 | "Tensor(\"conv2/Relu:0\", shape=(?, 14, 14, 64), dtype=float32)\n",

148 | "Tensor(\"pool2/MaxPool:0\", shape=(?, 7, 7, 64), dtype=float32)\n",

149 | "Tensor(\"Reshape_1:0\", shape=(?, 3136), dtype=float32)\n",

150 | "Tensor(\"fc1/Relu:0\", shape=(?, 1024), dtype=float32)\n",

151 | "Tensor(\"fc2/BiasAdd:0\", shape=(?, 10), dtype=float32)\n"

152 | ]

153 | }

154 | ],

155 | "source": [

156 | "print(h_conv1)\n",

157 | "print(h_pool1)\n",

158 | "print(h_conv2)\n",

159 | "print(h_pool2)\n",

160 | "\n",

161 | "print(h_pool2_flat)\n",

162 | "print(h_fc1)\n",

163 | "print(h_fc2)"

164 | ]

165 | },

166 | {

167 | "cell_type": "markdown",

168 | "metadata": {},

169 | "source": [

170 | "## 3.训练和评估"

171 | ]

172 | },

173 | {

174 | "cell_type": "markdown",

175 | "metadata": {},

176 | "source": [

177 | " 在测试的时候不使用 mini_batch, 那么测试的时候会占用较多的GPU(4497M),这在 notebook 交互式编程中是不推荐的。"

178 | ]

179 | },

180 | {

181 | "cell_type": "code",

182 | "execution_count": null,

183 | "metadata": {

184 | "scrolled": false

185 | },

186 | "outputs": [

187 | {

188 | "name": "stdout",

189 | "output_type": "stream",

190 | "text": [

191 | "step 0, training accuracy = 0.1100, pass 1.18s \n",

192 | "step 1000, training accuracy = 0.9800, pass 6.01s \n",

193 | "step 2000, training accuracy = 0.9800, pass 10.82s \n"

194 | ]

195 | }

196 | ],

197 | "source": [

198 | "# cross_entropy = -tf.reduce_sum(y_*tf.log(y_conv))\n",

199 | "cross_entropy = tf.reduce_mean(tf.nn.sparse_softmax_cross_entropy_with_logits(\n",

200 | " labels=tf.cast(y_, dtype=tf.int32), logits=y_conv))\n",

201 | "\n",

202 | "train_step = tf.train.AdamOptimizer(1e-4).minimize(cross_entropy)\n",

203 | "\n",

204 | "correct_prediction = tf.equal(tf.argmax(y_conv,1), y_)\n",

205 | "accuracy = tf.reduce_mean(tf.cast(correct_prediction, \"float\"))\n",

206 | "sess.run(tf.global_variables_initializer())\n",

207 | "\n",

208 | "tic = time.time()\n",

209 | "for i in range(10000):\n",

210 | " batch = mnist.train.next_batch(100)\n",

211 | " if i%1000 == 0:\n",

212 | " train_accuracy = accuracy.eval(feed_dict={\n",

213 | " X_:batch[0], y_: batch[1], keep_prob: 1.0})\n",

214 | " print(\"step {}, training accuracy = {:.4f}, pass {:.2f}s \".format(i, train_accuracy, time.time() - tic))\n",

215 | " train_step.run(feed_dict={X_: batch[0], y_: batch[1]})\n",

216 | "\n",

217 | "print(\"test accuracy %g\"%accuracy.eval(feed_dict={\n",

218 | " X_: mnist.test.images, y_: mnist.test.labels}))"

219 | ]

220 | }

221 | ],

222 | "metadata": {

223 | "anaconda-cloud": {},

224 | "kernelspec": {

225 | "display_name": "Python 3",

226 | "language": "python",

227 | "name": "python3"

228 | },

229 | "language_info": {

230 | "codemirror_mode": {

231 | "name": "ipython",

232 | "version": 3

233 | },

234 | "file_extension": ".py",

235 | "mimetype": "text/x-python",

236 | "name": "python",

237 | "nbconvert_exporter": "python",

238 | "pygments_lexer": "ipython3",

239 | "version": "3.5.2"

240 | }

241 | },

242 | "nbformat": 4,

243 | "nbformat_minor": 1

244 | }

245 |

--------------------------------------------------------------------------------

/example-notebook/.ipynb_checkpoints/Tutorial_04_3 Convolutional network for MNIST(4)-BN-checkpoint.ipynb:

--------------------------------------------------------------------------------

1 | {

2 | "cells": [

3 | {

4 | "cell_type": "markdown",

5 | "metadata": {},

6 | "source": [

7 | "## CNN做MNIST分类(四)\n",

8 | "\n",

9 | "使用 tf.layers 高级 API 构建 CNN,添加 BN 层。"

10 | ]

11 | },

12 | {

13 | "cell_type": "code",

14 | "execution_count": 1,

15 | "metadata": {},

16 | "outputs": [

17 | {

18 | "name": "stderr",

19 | "output_type": "stream",

20 | "text": [

21 | "/usr/local/lib/python3.5/dist-packages/h5py/__init__.py:36: FutureWarning: Conversion of the second argument of issubdtype from `float` to `np.floating` is deprecated. In future, it will be treated as `np.float64 == np.dtype(float).type`.\n",

22 | " from ._conv import register_converters as _register_converters\n"

23 | ]

24 | }

25 | ],

26 | "source": [

27 | "import numpy as np\n",

28 | "import tensorflow as tf\n",

29 | "\n",

30 | "# 设置按需使用GPU\n",

31 | "config = tf.ConfigProto()\n",

32 | "config.gpu_options.allow_growth = True\n",

33 | "sess = tf.InteractiveSession(config=config)\n",

34 | "\n",

35 | "import time"

36 | ]

37 | },

38 | {

39 | "cell_type": "markdown",

40 | "metadata": {},

41 | "source": [

42 | "## 1.导入数据,用 tensorflow 导入"

43 | ]

44 | },

45 | {

46 | "cell_type": "code",

47 | "execution_count": 2,

48 | "metadata": {},

49 | "outputs": [

50 | {

51 | "name": "stdout",

52 | "output_type": "stream",

53 | "text": [

54 | "WARNING:tensorflow:From /usr/local/lib/python3.5/dist-packages/tensorflow/contrib/learn/python/learn/datasets/base.py:198: retry (from tensorflow.contrib.learn.python.learn.datasets.base) is deprecated and will be removed in a future version.\n",

55 | "Instructions for updating:\n",

56 | "Use the retry module or similar alternatives.\n",

57 | "WARNING:tensorflow:From :3: read_data_sets (from tensorflow.contrib.learn.python.learn.datasets.mnist) is deprecated and will be removed in a future version.\n",

58 | "Instructions for updating:\n",

59 | "Please use alternatives such as official/mnist/dataset.py from tensorflow/models.\n",

60 | "WARNING:tensorflow:From /usr/local/lib/python3.5/dist-packages/tensorflow/contrib/learn/python/learn/datasets/mnist.py:260: maybe_download (from tensorflow.contrib.learn.python.learn.datasets.base) is deprecated and will be removed in a future version.\n",

61 | "Instructions for updating:\n",

62 | "Please write your own downloading logic.\n",

63 | "WARNING:tensorflow:From /usr/local/lib/python3.5/dist-packages/tensorflow/contrib/learn/python/learn/datasets/base.py:219: retry..wrap..wrapped_fn (from tensorflow.contrib.learn.python.learn.datasets.base) is deprecated and will be removed in a future version.\n",

64 | "Instructions for updating:\n",

65 | "Please use urllib or similar directly.\n",

66 | "Successfully downloaded train-images-idx3-ubyte.gz 9912422 bytes.\n",

67 | "WARNING:tensorflow:From /usr/local/lib/python3.5/dist-packages/tensorflow/contrib/learn/python/learn/datasets/mnist.py:262: extract_images (from tensorflow.contrib.learn.python.learn.datasets.mnist) is deprecated and will be removed in a future version.\n",

68 | "Instructions for updating:\n",

69 | "Please use tf.data to implement this functionality.\n",

70 | "Extracting MNIST_data/train-images-idx3-ubyte.gz\n",

71 | "Successfully downloaded train-labels-idx1-ubyte.gz 28881 bytes.\n",

72 | "WARNING:tensorflow:From /usr/local/lib/python3.5/dist-packages/tensorflow/contrib/learn/python/learn/datasets/mnist.py:267: extract_labels (from tensorflow.contrib.learn.python.learn.datasets.mnist) is deprecated and will be removed in a future version.\n",

73 | "Instructions for updating:\n",

74 | "Please use tf.data to implement this functionality.\n",

75 | "Extracting MNIST_data/train-labels-idx1-ubyte.gz\n",

76 | "Successfully downloaded t10k-images-idx3-ubyte.gz 1648877 bytes.\n",

77 | "Extracting MNIST_data/t10k-images-idx3-ubyte.gz\n",

78 | "Successfully downloaded t10k-labels-idx1-ubyte.gz 4542 bytes.\n",

79 | "Extracting MNIST_data/t10k-labels-idx1-ubyte.gz\n",

80 | "WARNING:tensorflow:From /usr/local/lib/python3.5/dist-packages/tensorflow/contrib/learn/python/learn/datasets/mnist.py:290: DataSet.__init__ (from tensorflow.contrib.learn.python.learn.datasets.mnist) is deprecated and will be removed in a future version.\n",

81 | "Instructions for updating:\n",

82 | "Please use alternatives such as official/mnist/dataset.py from tensorflow/models.\n",

83 | "(10000,)\n",

84 | "(55000,)\n"

85 | ]

86 | }

87 | ],

88 | "source": [

89 | "# 用tensorflow 导入数据\n",

90 | "from tensorflow.examples.tutorials.mnist import input_data\n",

91 | "mnist = input_data.read_data_sets('MNIST_data', one_hot=False)\n",

92 | "# 看看咱们样本的数量\n",

93 | "print(mnist.test.labels.shape)\n",

94 | "print(mnist.train.labels.shape)"

95 | ]

96 | },

97 | {

98 | "cell_type": "markdown",

99 | "metadata": {},

100 | "source": [

101 | "## 2. 构建网络"

102 | ]

103 | },

104 | {

105 | "cell_type": "code",

106 | "execution_count": 3,

107 | "metadata": {},

108 | "outputs": [

109 | {

110 | "name": "stdout",

111 | "output_type": "stream",

112 | "text": [

113 | "Finished building network.\n"

114 | ]

115 | }

116 | ],

117 | "source": [

118 | "with tf.name_scope('inputs'):\n",

119 | " X_ = tf.placeholder(tf.float32, [None, 784])\n",

120 | " y_ = tf.placeholder(tf.int64, [None])\n",

121 | "\n",

122 | "# 把X转为卷积所需要的形式\n",

123 | "X = tf.reshape(X_, [-1, 28, 28, 1])\n",

124 | "h_conv1 = tf.layers.conv2d(X, filters=32, kernel_size=5, strides=1, padding='same', name='conv1')\n",

125 | "h_bn1 = tf.layers.batch_normalization(h_conv1, )\n",

126 | "h_pool1 = tf.layers.max_pooling2d(h_conv1, pool_size=2, strides=2, padding='same', name='pool1')\n",

127 | "\n",

128 | "h_conv2 = tf.layers.conv2d(h_pool1, filters=64, kernel_size=5, strides=1, padding='same',activation=tf.nn.relu, name='conv2')\n",

129 | "h_pool2 = tf.layers.max_pooling2d(h_conv2, pool_size=2, strides=2, padding='same', name='pool2')\n",

130 | "\n",

131 | "# flatten\n",

132 | "h_pool2_flat = tf.reshape(h_pool2, [-1, 7*7*64])\n",

133 | "h_fc1 = tf.layers.dense(h_pool2_flat, 1024, name='fc1', activation=tf.nn.relu)\n",

134 | "\n",

135 | "# dropout: 输出的维度和h_fc1一样,只是随机部分值被值为零\n",

136 | "keep_prob = tf.placeholder(tf.float32)\n",

137 | "h_fc1_drop = tf.nn.dropout(h_fc1, 0.5) # 实际测试的时候这里不应该使用 0.5,这里为了方便演示都这样写而已\n",

138 | "h_fc2 = tf.layers.dense(h_fc1_drop, units=10, name='fc2')\n",

139 | "# y_conv = tf.nn.softmax(h_fc2)\n",

140 | "y_conv = h_fc2\n",

141 | "print('Finished building network.')"

142 | ]

143 | },

144 | {

145 | "cell_type": "code",

146 | "execution_count": 4,

147 | "metadata": {},

148 | "outputs": [

149 | {

150 | "name": "stdout",

151 | "output_type": "stream",

152 | "text": [

153 | "Tensor(\"conv1/Relu:0\", shape=(?, 28, 28, 32), dtype=float32)\n",

154 | "Tensor(\"pool1/MaxPool:0\", shape=(?, 14, 14, 32), dtype=float32)\n",

155 | "Tensor(\"conv2/Relu:0\", shape=(?, 14, 14, 64), dtype=float32)\n",

156 | "Tensor(\"pool2/MaxPool:0\", shape=(?, 7, 7, 64), dtype=float32)\n",

157 | "Tensor(\"Reshape_1:0\", shape=(?, 3136), dtype=float32)\n",

158 | "Tensor(\"fc1/Relu:0\", shape=(?, 1024), dtype=float32)\n",

159 | "Tensor(\"fc2/BiasAdd:0\", shape=(?, 10), dtype=float32)\n"

160 | ]

161 | }

162 | ],

163 | "source": [

164 | "print(h_conv1)\n",

165 | "print(h_pool1)\n",

166 | "print(h_conv2)\n",

167 | "print(h_pool2)\n",

168 | "\n",

169 | "print(h_pool2_flat)\n",

170 | "print(h_fc1)\n",

171 | "print(h_fc2)"

172 | ]

173 | },

174 | {

175 | "cell_type": "markdown",

176 | "metadata": {},

177 | "source": [

178 | "## 3.训练和评估"

179 | ]

180 | },

181 | {

182 | "cell_type": "markdown",

183 | "metadata": {},

184 | "source": [

185 | " 在测试的时候不使用 mini_batch, 那么测试的时候会占用较多的GPU(4497M),这在 notebook 交互式编程中是不推荐的。"

186 | ]

187 | },

188 | {

189 | "cell_type": "code",

190 | "execution_count": 5,

191 | "metadata": {

192 | "scrolled": false

193 | },

194 | "outputs": [

195 | {

196 | "name": "stdout",

197 | "output_type": "stream",

198 | "text": [

199 | "step 0, training accuracy = 0.1100, pass 1.18s \n",

200 | "step 1000, training accuracy = 0.9800, pass 6.01s \n",

201 | "step 2000, training accuracy = 0.9800, pass 10.82s \n",

202 | "step 3000, training accuracy = 0.9700, pass 15.65s \n",

203 | "step 4000, training accuracy = 1.0000, pass 20.40s \n",

204 | "step 5000, training accuracy = 0.9800, pass 25.26s \n",

205 | "step 6000, training accuracy = 1.0000, pass 30.00s \n",

206 | "step 7000, training accuracy = 0.9900, pass 34.84s \n",

207 | "step 8000, training accuracy = 1.0000, pass 39.66s \n",

208 | "step 9000, training accuracy = 1.0000, pass 44.55s \n",

209 | "test accuracy 0.9924\n"

210 | ]

211 | }

212 | ],

213 | "source": [

214 | "# cross_entropy = -tf.reduce_sum(y_*tf.log(y_conv))\n",

215 | "cross_entropy = tf.reduce_mean(tf.nn.sparse_softmax_cross_entropy_with_logits(\n",

216 | " labels=tf.cast(y_, dtype=tf.int32), logits=y_conv))\n",

217 | "\n",

218 | "train_step = tf.train.AdamOptimizer(1e-4).minimize(cross_entropy)\n",

219 | "\n",

220 | "correct_prediction = tf.equal(tf.argmax(y_conv,1), y_)\n",

221 | "accuracy = tf.reduce_mean(tf.cast(correct_prediction, \"float\"))\n",

222 | "sess.run(tf.global_variables_initializer())\n",

223 | "\n",

224 | "tic = time.time()\n",

225 | "for i in range(10000):\n",

226 | " batch = mnist.train.next_batch(100)\n",

227 | " if i%1000 == 0:\n",

228 | " train_accuracy = accuracy.eval(feed_dict={\n",

229 | " X_:batch[0], y_: batch[1], keep_prob: 1.0})\n",

230 | " print(\"step {}, training accuracy = {:.4f}, pass {:.2f}s \".format(i, train_accuracy, time.time() - tic))\n",

231 | " train_step.run(feed_dict={X_: batch[0], y_: batch[1]})\n",

232 | "\n",

233 | "print(\"test accuracy %g\"%accuracy.eval(feed_dict={\n",

234 | " X_: mnist.test.images, y_: mnist.test.labels}))"

235 | ]

236 | }

237 | ],

238 | "metadata": {

239 | "anaconda-cloud": {},

240 | "kernelspec": {

241 | "display_name": "Python 3",

242 | "language": "python",

243 | "name": "python3"

244 | },

245 | "language_info": {

246 | "codemirror_mode": {

247 | "name": "ipython",

248 | "version": 3

249 | },

250 | "file_extension": ".py",

251 | "mimetype": "text/x-python",

252 | "name": "python",

253 | "nbconvert_exporter": "python",

254 | "pygments_lexer": "ipython3",

255 | "version": "3.5.2"

256 | }

257 | },

258 | "nbformat": 4,

259 | "nbformat_minor": 1

260 | }

261 |

--------------------------------------------------------------------------------

/example-notebook/.ipynb_checkpoints/Tutorial_07 How to save the model-checkpoint.ipynb:

--------------------------------------------------------------------------------

1 | {