├── .github
│   ├── test
│   │   └── .gitkeep
│   └── workflows
│       └── publish_action.yml
├── requirements.txt
├── nodes
│   ├── convert
│   │   ├── FloatToInt.py
│   │   ├── FloatsToWeightsStrategy.py
│   │   └── MaskToFloat.py
│   ├── utils
│   │   ├── InvertFloats.py
│   │   ├── RepeatImageToCount.py
│   │   └── FloatsVisualizer.py
│   └── audio
│       ├── AudioPromptSchedule.py
│       ├── AudioAnimateDiffSchedule.py
│       ├── AudioControlNetSchedule.py
│       ├── LoadAudioSeparationModel.py
│       ├── AudioPeaksDetection.py
│       ├── EditAudioWeights.py
│       ├── AudioIPAdapterTransitions.py
│       ├── AudioRemixer.py
│       └── AudioAnalysis.py
├── pyproject.toml
├── .gitignore
├── web
│   └── js
│       ├── appearance.js
│       └── help_popup.js
├── __init__.py
├── node_configs.py
├── README.md
├── yvann_web_async
│   ├── purify.min.js
│   ├── svg-path-properties.min.js
│   └── marked.min.js
└── LICENCE

/.github/test/.gitkeep:

--------------------------------------------------------------------------------

1 |

--------------------------------------------------------------------------------

/requirements.txt:

--------------------------------------------------------------------------------

1 | numpy

2 | torch

3 | openunmix

4 | matplotlib

5 | pillow

6 | torchaudio

7 | termcolor

8 | scipy

--------------------------------------------------------------------------------

/.github/workflows/publish_action.yml:

--------------------------------------------------------------------------------

1 | name: Publish to Comfy registry

2 | on:

3 | workflow_dispatch:

4 | push:

5 | branches:

6 | - main

7 | paths:

8 | - "pyproject.toml"

9 |

10 | jobs:

11 | publish-node:

12 | name: Publish Custom Node to registry

13 | runs-on: ubuntu-latest

14 | steps:

15 | - name: Check out code

16 | uses: actions/checkout@v4

17 | - name: Publish Custom Node

18 | uses: Comfy-Org/publish-node-action@main

19 | with:

20 | personal_access_token: ${{ secrets.REGISTRY_ACCESS_TOKEN }}

21 |

--------------------------------------------------------------------------------

/nodes/convert/FloatToInt.py:

--------------------------------------------------------------------------------

1 | from ... import Yvann

2 | import numpy as np

3 |

4 | class ConvertNodeBase(Yvann):

5 | CATEGORY = "👁️ Yvann Nodes/🔄 Convert"

6 |

7 | class FloatToInt(ConvertNodeBase):

8 | @classmethod

9 | def INPUT_TYPES(cls):

10 | return {

11 | "required": {

12 | "float": ("FLOAT", {"forceInput": True}),

13 | }

14 | }

15 | RETURN_TYPES = ("INT",)

16 | RETURN_NAMES = ("int",)

17 | FUNCTION = "convert_floats_to_ints"

18 |

19 | def convert_floats_to_ints(self, float):

20 |

21 | floats_array = np.array(float)

22 |

23 | ints_array = np.round(floats_array)

24 |

25 | ints_array = ints_array.astype(int)

26 | integers = ints_array.tolist()

27 |

28 | return (integers,)

29 |

--------------------------------------------------------------------------------
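
Note on FloatToInt.py: the node rounds with np.round before casting, and np.round rounds exact halves to the nearest even integer rather than always rounding up. The sketch below is a standalone illustration of that behaviour; the helper name floats_to_ints is hypothetical and not part of the repository.

```python
import numpy as np

def floats_to_ints(values):
    """Round like FloatToInt does: np.round, then cast to int.

    Hypothetical standalone helper; accepts a scalar or a list of floats.
    """
    return np.round(np.array(values)).astype(int).tolist()

# Exact halves go to the nearest even integer (banker's rounding).
print(floats_to_ints([0.5, 1.5, 2.5, 1.4]))
# A scalar input comes back as a plain Python int.
print(floats_to_ints(2.6))
```

If round-half-up is wanted instead, np.floor(x + 0.5) is one common alternative, at the cost of different behaviour for negative halves.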

/pyproject.toml:

--------------------------------------------------------------------------------

1 | [project]

2 | name = "comfyui_yvann-nodes"

3 | description = "Audio Reactive nodes for AI animations 🔊 Analyze audio, extract drums, bass, vocals. Compatible with IPAdapter, ControlNets, AnimateDiff... Generate reactive masks and weights. Create audio-driven visuals. Produce weight graphs and audio masks. Ideal for music videos and reactive animations. Features audio scheduling and waveform analysis"

4 | version = "2.0.2"

5 | license = {file = "LICENCE"}

6 | dependencies = ["openunmix", "numpy", "torch", "matplotlib", "pillow", "scipy"]

7 |

8 | [project.urls]

9 | Repository = "https://github.com/yvann-ba/ComfyUI_Yvann-Nodes"

10 | # Used by Comfy Registry https://comfyregistry.org

11 |

12 | [tool.comfy]

13 | PublisherId = "yvann"

14 | DisplayName = "ComfyUI_Yvann-Nodes"

15 | Icon = ""

16 |

--------------------------------------------------------------------------------

/nodes/convert/FloatsToWeightsStrategy.py:

--------------------------------------------------------------------------------

1 | from ... import Yvann

2 |

3 | class ConvertNodeBase(Yvann):

4 | CATEGORY = "👁️ Yvann Nodes/🔄 Convert"

5 |

6 | class FloatsToWeightsStrategy(ConvertNodeBase):

7 | @classmethod

8 | def INPUT_TYPES(cls):

9 | return {

10 | "required": {

11 | "floats": ("FLOAT", {"forceInput": True}),

12 | }

13 | }

14 | RETURN_TYPES = ("WEIGHTS_STRATEGY",)

15 | RETURN_NAMES = ("WEIGHTS_STRATEGY",)

16 | FUNCTION = "convert"

17 |

18 | def convert(self, floats):

19 | frames = len(floats)

20 | weights_str = ", ".join(map(lambda x: f"{x:.3f}", floats))

21 |

22 | weights_strategy = {

23 | "weights": weights_str,

24 | "timing": "custom",

25 | "frames": frames,

26 | "start_frame": 0,

27 | "end_frame": frames,

28 | "add_starting_frames": 0,

29 | "add_ending_frames": 0,

30 | "method": "full batch",

31 | "frame_count": frames,

32 | }

33 | return (weights_strategy,)

34 |

--------------------------------------------------------------------------------
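
Note on FloatsToWeightsStrategy.py: the "weights" entry is a single comma-separated string with three decimals per frame, not a list. A minimal sketch of just that formatting step, using a hypothetical helper name:

```python
def floats_to_weights_string(floats):
    """Format per-frame weights the way the node's 'weights' field does.

    Hypothetical helper: three decimals per value, joined with ', '.
    """
    return ", ".join(f"{x:.3f}" for x in floats)

weights = [0.0, 0.5, 1.0]
print(floats_to_weights_string(weights))  # one value per frame
print(len(weights))                       # used for 'frames', 'end_frame', 'frame_count'
```

Values are rounded to three decimals at this point, so any finer precision in the incoming floats is not carried into the strategy string.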

/nodes/utils/InvertFloats.py:

--------------------------------------------------------------------------------

1 | from ... import Yvann

2 | import numpy as np

3 |

4 |

5 | class UtilsNodeBase(Yvann):

6 | CATEGORY = "👁️ Yvann Nodes/🛠️ Utils"

7 |

8 | class InvertFloats(UtilsNodeBase):

9 | @classmethod

10 | def INPUT_TYPES(cls):

11 | return {

12 | "required": {

13 | "floats": ("FLOAT", {"forceInput": True}),

14 | }

15 | }

16 | RETURN_TYPES = ("FLOAT",)

17 | RETURN_NAMES = ("inverted_floats",)

18 | FUNCTION = "invert_floats"

19 |

20 | def invert_floats(self, floats):

21 | floats_array = np.array(floats)

22 | min_value = floats_array.min()

23 | max_value = floats_array.max()

24 |

25 | # Invert the values relative to the range midpoint

26 | range_midpoint = (max_value + min_value) / 2.0

27 | floats_invert_array = (2 * range_midpoint) - floats_array

28 | floats_invert_array = np.round(floats_invert_array, decimals=6)

29 |

30 | # Convert back to list

31 | floats_invert = floats_invert_array.tolist()

32 |

33 | return (floats_invert,)

34 |

--------------------------------------------------------------------------------
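
Note on InvertFloats.py: reflecting each value about the midpoint of the range is the same as computing (max + min) - x, so the minimum and maximum swap places and the overall range is preserved. A small standalone sketch, with a hypothetical function name:

```python
import numpy as np

def invert_about_midpoint(values):
    """Mirror values around the midpoint of their own min/max range.

    Hypothetical helper mirroring the arithmetic in invert_floats.
    """
    arr = np.array(values, dtype=float)
    midpoint = (arr.max() + arr.min()) / 2.0
    return np.round(2 * midpoint - arr, decimals=6).tolist()

print(invert_about_midpoint([0.0, 0.25, 1.0]))
print(invert_about_midpoint([2.0, 3.0, 5.0]))
```

Because the midpoint comes from the data itself, a list that never reaches 0 or 1 is inverted within its own range rather than within [0, 1].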

/nodes/utils/RepeatImageToCount.py:

--------------------------------------------------------------------------------

1 | from ... import Yvann

2 | import torch

3 | class UtilsNodeBase(Yvann):

4 | CATEGORY = "👁️ Yvann Nodes/🛠️ Utils"

5 |

6 | class RepeatImageToCount(UtilsNodeBase):

7 | @classmethod

8 | def INPUT_TYPES(cls):

9 | return {

10 | "required": {

11 | "image": ("IMAGE", {"forceInput": True}),

12 | "count": ("INT", {"default": 1, "min": 1}),

13 | }

14 | }

15 | RETURN_TYPES = ("IMAGE",)

16 | RETURN_NAMES = ("images",)

17 | FUNCTION = "repeat_image_to_count"

18 |

19 | def repeat_image_to_count(self, image, count):

20 | num_images = image.size(0) # Number of images in the input batch

21 |

22 | # Create indices to select images from input batch

23 | indices = [i % num_images for i in range(count)] # Cycle through images to reach the desired count

24 |

25 | # Select images using the computed indices

26 | images = image[indices]

27 | return (images,)

--------------------------------------------------------------------------------
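
Note on RepeatImageToCount.py: the index list cycles through the batch with a modulo, so the output is truncated when count is smaller than the batch and wraps around when it is larger. The sketch below reproduces the indexing with a NumPy array standing in for the image tensor; that substitution and the helper name are assumptions made to keep the example dependency-light.

```python
import numpy as np

def repeat_to_count(batch, count):
    """Cycle through the first axis of `batch` until `count` items are selected.

    Hypothetical helper; `batch` is a NumPy array here, a torch tensor in the node.
    """
    num_images = batch.shape[0]
    indices = [i % num_images for i in range(count)]
    return batch[indices]

# Three 1x1 single-channel "images" whose pixel value equals their index.
batch = np.arange(3).reshape(3, 1, 1, 1)
print(repeat_to_count(batch, 7)[:, 0, 0, 0].tolist())
print(repeat_to_count(batch, 2).shape)
```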

/nodes/convert/MaskToFloat.py:

--------------------------------------------------------------------------------

1 | from ... import Yvann

2 |

3 | # To do the opposite (Float To Mask), you can install the node pack "ComfyUI-KJNodes"

4 | class ConvertNodeBase(Yvann):

5 | CATEGORY = "👁️ Yvann Nodes/🔄 Convert"

6 |

7 | class MaskToFloat(ConvertNodeBase):

8 | @classmethod

9 | def INPUT_TYPES(cls):

10 | return {

11 | "required": {

12 | "mask": ("MASK", {"forceInput": True}),

13 | },

14 | }

15 | RETURN_TYPES = ("FLOAT",)

16 | RETURN_NAMES = ("float",)

17 | FUNCTION = "mask_to_float"

18 |

19 | def mask_to_float(self, mask):

20 | import torch

21 |

22 | # Ensure mask is a torch.Tensor

23 | if not isinstance(mask, torch.Tensor):

24 | raise ValueError("Input 'mask' must be a torch.Tensor")

25 |

26 | # Handle case where mask may have shape [H, W] instead of [B, H, W]

27 | if mask.dim() == 2:

28 | mask = mask.unsqueeze(0) # Add batch dimension

29 |

30 | # mask has shape [B, H, W]

31 | batch_size = mask.shape[0]

32 | output_values = []

33 |

34 | for i in range(batch_size):

35 | single_mask = mask[i] # shape [H, W]

36 | mean_value = round(single_mask.mean().item(), 6) # Compute mean pixel value

37 | output_values.append(mean_value)

38 |

39 | return (output_values,)

40 |

--------------------------------------------------------------------------------
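
Note on MaskToFloat.py: each mask in the batch collapses to a single float, the mean of its pixels, so a batch of B masks yields B values. A rough equivalent using NumPy arrays in place of torch tensors (an assumption for illustration; the function name is hypothetical):

```python
import numpy as np

def mask_means(mask):
    """Return one mean value per mask, accepting [H, W] or [B, H, W] input.

    Hypothetical helper; vectorised over the batch instead of looping.
    """
    mask = np.asarray(mask, dtype=np.float32)
    if mask.ndim == 2:
        mask = mask[None]  # add the batch dimension
    means = mask.reshape(mask.shape[0], -1).mean(axis=1)
    return [round(float(m), 6) for m in means]

single = np.zeros((2, 2))
single[0, 0] = 1.0
print(mask_means(single))
print(mask_means(np.stack([np.ones((2, 2)), np.zeros((2, 2))])))
```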

/.gitignore:

--------------------------------------------------------------------------------

1 | # Python cache files

2 | __pycache__/

3 | *.py[cod]

4 | *$py.class

5 | **/*.pyc

6 | **/__pycache__

7 | **/.pytest_cache

8 |

9 |

10 | audio_learn.ipynb

11 | # C extensions

12 | *.so

13 | readme.md

14 | # Distribution / packaging

15 | .Python

16 | build/

17 | develop-eggs/

18 | dist/

19 | downloads/

20 | eggs/

21 | .eggs/

22 | lib/

23 | lib64/

24 | parts/

25 | sdist/

26 | var/

27 | wheels/

28 | *.egg-info/

29 | .installed.cfg

30 | *.egg

31 |

32 | # PyInstaller

33 | *.manifest

34 | *.spec

35 |

36 | # Installer logs

37 | pip-log.txt

38 | pip-delete-this-directory.txt

39 |

40 | # Unit test / coverage reports

41 | htmlcov/

42 | .tox/

43 | .coverage

44 | .coverage.*

45 | .cache

46 | nosetests.xml

47 | coverage.xml

48 | *.cover

49 | .hypothesis/

50 |

51 | # Jupyter Notebook

52 | .ipynb_checkpoints

53 |

54 | # pyenv

55 | .python-version

56 |

57 | # Environment directories

58 | .env

59 | .venv

60 | env/

61 | venv/

62 | ENV/

63 |

64 | # Spyder project settings

65 | .spyderproject

66 | .spyproject

67 |

68 | # Rope project settings

69 | .ropeproject

70 |

71 | # mkdocs documentation

72 | /site

73 |

74 | # mypy

75 | .mypy_cache/

76 |

77 | # IDE settings

78 | .vscode/

79 | .idea/

80 |

81 | # OS generated files

82 | .DS_Store

83 | .DS_Store?

84 | ._*

85 | .Spotlight-V100

86 | .Trashes

87 | ehthumbs.db

88 | Thumbs.db

89 |

90 | # ComfyUI specific (adjust as needed)

91 | # output/

92 | # input/

93 | # models/

--------------------------------------------------------------------------------

/nodes/audio/AudioPromptSchedule.py:

--------------------------------------------------------------------------------

1 | from ... import Yvann

2 |

3 | class AudioNodeBase(Yvann):

4 | CATEGORY = "👁️ Yvann Nodes/🔊 Audio"

5 |

6 | class AudioPromptSchedule(AudioNodeBase):

7 | @classmethod

8 | def INPUT_TYPES(cls):

9 | return {

10 | "required": {

11 | "peaks_index": ("STRING", {"forceInput": True}),

12 | "prompts": ("STRING", {"default": "", "multiline": True}),

13 | }

14 | }

15 |

16 | RETURN_TYPES = ("STRING",)

17 | RETURN_NAMES = ("prompt_schedule",)

18 | FUNCTION = "create_prompt_schedule"

19 |

20 | def create_prompt_schedule(self, peaks_index, prompts=""):

21 | switch_index = peaks_index

22 | if isinstance(switch_index, str):

23 | switch_index = [int(idx.strip()) for idx in peaks_index.split(",")]

24 | else:

25 | switch_index = [int(idx) for idx in switch_index]

26 |

27 | # Parse the prompts, split by newline, and remove empty lines

28 | prompts_list = [p.strip() for p in prompts.split("\n") if p.strip() != ""]

29 |

30 | # Ensure the number of prompts matches the number of indices by looping prompts

31 | num_indices = len(switch_index)

32 | num_prompts = len(prompts_list)

33 |

34 | if num_prompts > 0:

35 | # Loop prompts to match the number of indices

36 | extended_prompts = []

37 | while len(extended_prompts) < num_indices:

38 | for p in prompts_list:

39 | extended_prompts.append(p)

40 | if len(extended_prompts) == num_indices:

41 | break

42 | prompts_list = extended_prompts

43 | else:

44 | # If no prompts provided, fill with empty strings

45 | prompts_list = [""] * num_indices

46 |

47 | # Create the formatted prompt schedule string

48 | out = ""

49 | for idx, frame in enumerate(switch_index):

50 | out += f"\"{frame}\": \"{prompts_list[idx]}\",\n"

51 |

52 | return (out,)

53 |

--------------------------------------------------------------------------------
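
Note on AudioPromptSchedule.py: prompts are repeated in order until there is one per peak index, and each pair is emitted as a quoted "frame": "prompt" line. The sketch below expresses the same looping with itertools; the function name is hypothetical and only string input for peaks_index is handled.

```python
from itertools import cycle, islice

def build_prompt_schedule(peaks_index, prompts):
    """Pair each frame index with a prompt, looping prompts when there are too few.

    Hypothetical helper covering the string form of peaks_index only.
    """
    frames = [int(idx.strip()) for idx in peaks_index.split(",")]
    prompt_lines = [p.strip() for p in prompts.split("\n") if p.strip()]
    if prompt_lines:
        looped = list(islice(cycle(prompt_lines), len(frames)))
    else:
        looped = [""] * len(frames)  # no prompts: emit empty strings
    return "".join(f'"{frame}": "{prompt}",\n' for frame, prompt in zip(frames, looped))

print(build_prompt_schedule("0, 12, 30", "a cat\n\na dog"))
```

With three indices and two prompts, the first prompt is reused for the third index; blank lines in the prompt box are ignored.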

/web/js/appearance.js:

--------------------------------------------------------------------------------

1 | import { app } from "../../../scripts/app.js";

2 |

3 | const COLOR_THEMES = {

4 | blue: { nodeColor: "#153a61", nodeBgColor: "#1A4870" },

5 | };

6 |

7 | const NODE_COLORS = {

8 | "Audio Analysis": "blue",

9 | "Audio IPAdapter Transitions": "blue",

10 | "Audio Prompt Schedule": "blue",

11 | "Audio Peaks Detection": "blue",

12 | "Audio Remixer": "blue",

13 | "Edit Audio Weights": "blue",

14 | "Audio ControlNet Schedule": "blue",

15 | "Load Audio Separation Model": "blue",

16 | "Floats To Weights Strategy": "blue",

17 | "Invert Floats": "blue",

18 | "Floats Visualizer": "blue",

19 | "Mask To Float": "blue",

20 | "Repeat Image To Count": "blue",

21 | "Float to Int": "blue"

22 | };

23 |

24 | function shuffleArray(array) {

25 | for (let i = array.length - 1; i > 0; i--) {

26 | const j = Math.floor(Math.random() * (i + 1));

27 | [array[i], array[j]] = [array[j], array[i]]; // Swap elements

28 | }

29 | }

30 |

31 | let colorKeys = Object.keys(COLOR_THEMES).filter(key => key !== "none");

32 | shuffleArray(colorKeys); // Shuffle the color themes initially

33 |

34 | function setNodeColors(node, theme) {

35 | if (!theme) { return; }

36 | node.shape = "box";

37 | if (theme.nodeColor && theme.nodeBgColor) {

38 | node.color = theme.nodeColor;

39 | node.bgcolor = theme.nodeBgColor;

40 | }

41 | }

42 |

43 | const ext = {

44 | name: "Yvann.appearance",

45 |

46 | nodeCreated(node) {

47 | const nclass = node.comfyClass;

48 | if (NODE_COLORS.hasOwnProperty(nclass)) {

49 | let colorKey = NODE_COLORS[nclass];

50 |

51 | if (colorKey === "random") {

52 | // Check for a valid color key before popping

53 | if (colorKeys.length === 0 || !COLOR_THEMES[colorKeys[colorKeys.length - 1]]) {

54 | colorKeys = Object.keys(COLOR_THEMES).filter(key => key !== "none");

55 | shuffleArray(colorKeys);

56 | }

57 | colorKey = colorKeys.pop();

58 | }

59 |

60 | const theme = COLOR_THEMES[colorKey];

61 | setNodeColors(node, theme);

62 | }

63 | }

64 | };

65 |

66 | app.registerExtension(ext);

67 |

--------------------------------------------------------------------------------

/nodes/audio/AudioAnimateDiffSchedule.py:

--------------------------------------------------------------------------------

1 | from ... import Yvann

2 | import numpy as np

3 |

4 | class AudioNodeBase(Yvann):

5 | CATEGORY = "👁️ Yvann Nodes/🔊 Audio"

6 |

7 | class AudioAnimateDiffSchedule(AudioNodeBase):

8 | @classmethod

9 | def INPUT_TYPES(cls):

10 | return {

11 | "required": {

12 | "any_audio_weights": ("FLOAT", {"forceInput": True}),

13 | "smooth": ("FLOAT", {"default": 0.0, "min": 0.0, "max": 1.0, "step": 0.01}),

14 | "min_range": ("FLOAT", {"default": 0.95, "min": 0.8, "max": 1.49, "step": 0.01}),

15 | "max_range": ("FLOAT", {"default": 1.25, "min": 0.81, "max": 1.5, "step": 0.01}),

16 | }

17 | }

18 |

19 | RETURN_TYPES = ("FLOAT",)

20 | RETURN_NAMES = ("float_val",)

21 | FUNCTION = "process_any_audio_weights"

22 |

23 | CATEGORY = "👁️ Yvann Nodes/🔊 Audio"

24 |

25 | def process_any_audio_weights(self, any_audio_weights, smooth, min_range, max_range):

26 | if not isinstance(any_audio_weights, (list, np.ndarray)):

27 | print("Invalid any_audio_weights input")

28 | return None

29 |

30 | any_audio_weights = np.array(any_audio_weights, dtype=np.float32)

31 |

32 | # Apply smoothing

33 | smoothed_signal = np.zeros_like(any_audio_weights)

34 | for i in range(len(any_audio_weights)):

35 | if i == 0:

36 | smoothed_signal[i] = any_audio_weights[i]

37 | else:

38 | smoothed_signal[i] = smoothed_signal[i-1] * smooth + any_audio_weights[i] * (1 - smooth)

39 |

40 | # Normalize the smoothed signal

41 | min_val = np.min(smoothed_signal)

42 | max_val = np.max(smoothed_signal)

43 | if max_val - min_val != 0:

44 | normalized_signal = (smoothed_signal - min_val) / (max_val - min_val)

45 | else:

46 | normalized_signal = smoothed_signal - min_val # All values are the same

47 |

48 | # Rescale to specified range

49 | rescaled_signal = normalized_signal * (max_range - min_range) + min_range

50 | rescaled_signal = rescaled_signal.tolist()  # keep the converted list so round() sees Python floats

51 | rounded_rescaled_signal = [round(elem, 6) for elem in rescaled_signal]

52 |

53 | return (rounded_rescaled_signal,)

54 |

--------------------------------------------------------------------------------
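
Note on the schedule nodes (AudioAnimateDiffSchedule, AudioControlNetSchedule, EditAudioWeights): all three share the same pipeline. The weights are smoothed with s[0] = w[0] and s[i] = smooth * s[i-1] + (1 - smooth) * w[i], normalized to [0, 1], then mapped linearly onto [min_range, max_range]. The sketch below is a standalone version of that pipeline; the function name is hypothetical, and it uses float64 where the nodes use float32.

```python
import numpy as np

def smooth_and_rescale(weights, smooth, min_range, max_range):
    """Exponential smoothing, min-max normalization, then linear rescale.

    Hypothetical helper; float64 is an assumption (the nodes use float32).
    """
    signal = np.array(weights, dtype=np.float64)
    smoothed = np.zeros_like(signal)
    for i, value in enumerate(signal):
        smoothed[i] = value if i == 0 else smoothed[i - 1] * smooth + value * (1 - smooth)

    span = smoothed.max() - smoothed.min()
    # A constant signal has zero span; map it to all zeros instead of dividing.
    normalized = (smoothed - smoothed.min()) / span if span != 0 else np.zeros_like(smoothed)

    rescaled = normalized * (max_range - min_range) + min_range
    return [round(float(v), 6) for v in rescaled]

print(smooth_and_rescale([0.0, 1.0, 0.0], smooth=0.5, min_range=0.95, max_range=1.25))
```

A smooth value of 0 leaves the signal untouched, while a value of 1 freezes it at the first sample, which then collapses to min_range after normalization.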

/nodes/audio/AudioControlNetSchedule.py:

--------------------------------------------------------------------------------

1 | from ... import Yvann

2 | import numpy as np

3 |

4 | class AudioNodeBase(Yvann):

5 | CATEGORY = "👁️ Yvann Nodes/🔊 Audio"

6 |

7 | class AudioControlNetSchedule(AudioNodeBase):

8 | @classmethod

9 | def INPUT_TYPES(cls):

10 | return {

11 | "required": {

12 | "any_audio_weights": ("FLOAT", {"forceInput": True}),

13 | "smooth": ("FLOAT", {"default": 0.0, "min": 0.0, "max": 1.0, "step": 0.01}),

14 | "min_range": ("FLOAT", {"default": 0.0, "min": 0.0, "max": 0.99, "step": 0.01}),

15 | "max_range": ("FLOAT", {"default": 1.0, "min": 0.01, "max": 1.0, "step": 0.01}),

16 | }

17 | }

18 |

19 | RETURN_TYPES = ("FLOAT",)

20 | RETURN_NAMES = ("processed_weights",)

21 | FUNCTION = "process_any_audio_weights"

22 |

23 | CATEGORY = "👁️ Yvann Nodes/🔊 Audio"

24 |

25 | def process_any_audio_weights(self, any_audio_weights, smooth, min_range, max_range):

26 | if not isinstance(any_audio_weights, (list, np.ndarray)):

27 | print("Invalid any_audio_weights input")

28 | return None

29 |

30 | any_audio_weights = np.array(any_audio_weights, dtype=np.float32)

31 |

32 | # Apply smoothing

33 | smoothed_signal = np.zeros_like(any_audio_weights)

34 | for i in range(len(any_audio_weights)):

35 | if i == 0:

36 | smoothed_signal[i] = any_audio_weights[i]

37 | else:

38 | smoothed_signal[i] = smoothed_signal[i-1] * smooth + any_audio_weights[i] * (1 - smooth)

39 |

40 | # Normalize the smoothed signal

41 | min_val = np.min(smoothed_signal)

42 | max_val = np.max(smoothed_signal)

43 | if max_val - min_val != 0:

44 | normalized_signal = (smoothed_signal - min_val) / (max_val - min_val)

45 | else:

46 | normalized_signal = smoothed_signal - min_val # All values are the same

47 |

48 | # Rescale to specified range

49 | rescaled_signal = normalized_signal * (max_range - min_range) + min_range

50 | rescaled_signal = rescaled_signal.tolist()  # keep the converted list so round() sees Python floats

51 | rounded_rescaled_signal = [round(elem, 6) for elem in rescaled_signal]

52 |

53 | return (rounded_rescaled_signal,)

54 |

55 |

--------------------------------------------------------------------------------

/nodes/audio/LoadAudioSeparationModel.py:

--------------------------------------------------------------------------------

1 | import os

2 | import folder_paths

3 | import torch

4 | from torchaudio.pipelines import HDEMUCS_HIGH_MUSDB_PLUS

5 | from typing import Any

6 | from ... import Yvann

7 | import comfy.model_management as mm

8 |

9 |

10 | class AudioNodeBase(Yvann):

11 | CATEGORY = "👁️ Yvann Nodes/🔊 Audio"

12 |

13 | class LoadAudioSeparationModel(AudioNodeBase):

14 | audio_models = ["Hybrid Demucs", "Open-Unmix"]

15 |

16 | @classmethod

17 | def INPUT_TYPES(cls) -> dict[str, dict[str, tuple]]:

18 | return {

19 | "required": {

20 | "model": (cls.audio_models,),

21 | }

22 | }

23 |

24 | RETURN_TYPES = ("AUDIO_SEPARATION_MODEL",)

25 | RETURN_NAMES = ("audio_sep_model",)

26 | FUNCTION = "main"

27 |

28 | def load_OpenUnmix(self, model):

29 | device = mm.get_torch_device()

30 | download_path = os.path.join(folder_paths.models_dir, "openunmix")

31 | os.makedirs(download_path, exist_ok=True)

32 |

33 | model_file = "umxl.pth"

34 | model_path = os.path.join(download_path, model_file)

35 |

36 | if not os.path.exists(model_path):

37 | print(f"Downloading {model} model...")

38 | try:

39 | separator = torch.hub.load('sigsep/open-unmix-pytorch', 'umxl', device='cpu')

40 | except RuntimeError as e:

41 | print(f"Failed to download model : {e}")

42 | return None

43 | torch.save(separator.state_dict(), model_path)

44 | print(f"Model saved to: {model_path}")

45 | else:

46 | print(f"Loading model from: {model_path}")

47 | separator = torch.hub.load('sigsep/open-unmix-pytorch', 'umxl', device='cpu')

48 | separator.load_state_dict(torch.load(model_path, map_location='cpu'))

49 |

50 | separator = separator.to(device)

51 | separator.eval()

52 |

53 | return (separator,)

54 |

55 | def load_HDemucs(self):

56 |

57 | device = mm.get_torch_device()

58 | bundle: Any = HDEMUCS_HIGH_MUSDB_PLUS

59 | print("Hybrid Demucs model is loaded")

60 | model_info = {

61 | "demucs": True,

62 | "model": bundle.get_model().to(device),

63 | "sample_rate": bundle.sample_rate

64 | }

65 | return (model_info,)

66 |

67 |

68 | def main(self, model):

69 |

70 | if model == "Open-Unmix":

71 | return (self.load_OpenUnmix(model))

72 | else:

73 | return (self.load_HDemucs())

--------------------------------------------------------------------------------

/nodes/utils/FloatsVisualizer.py:

--------------------------------------------------------------------------------

1 | import torch

2 | import matplotlib.pyplot as plt

3 | import tempfile

4 | import numpy as np

5 | from PIL import Image

6 | from ... import Yvann

7 |

8 | class UtilsNodeBase(Yvann):

9 | CATEGORY = "👁️ Yvann Nodes/🛠️ Utils"

10 |

11 | class FloatsVisualizer(UtilsNodeBase):

12 | # Define class variables for line styles and colors

13 | line_styles = ["-", "--", "-."]

14 | line_colors = ["blue", "green", "red"]

15 |

16 | @classmethod

17 | def INPUT_TYPES(cls):

18 | return {

19 | "required": {

20 | "floats": ("FLOAT", {"forceInput": True}),

21 | "title": ("STRING", {"default": "Graph"}),

22 | "x_label": ("STRING", {"default": "X-Axis"}),

23 | "y_label": ("STRING", {"default": "Y-Axis"}),

24 | },

25 | "optional": {

26 | "floats_optional1": ("FLOAT", {"forceInput": True}),

27 | "floats_optional2": ("FLOAT", {"forceInput": True}),

28 | }

29 | }

30 |

31 | RETURN_TYPES = ("IMAGE",)

32 | RETURN_NAMES = ("visual_graph",)

33 | FUNCTION = "floats_to_graph"

34 |

35 | def floats_to_graph(self, floats, title="Graph", x_label="X-Axis", y_label="Y-Axis",

36 | floats_optional1=None, floats_optional2=None):

37 |

38 | try:

39 | # Create a list of tuples containing (label, data)

40 | floats_list = [("floats", floats)]

41 | if floats_optional1 is not None:

42 | floats_list.append(("floats_optional1", floats_optional1))

43 | if floats_optional2 is not None:

44 | floats_list.append(("floats_optional2", floats_optional2))

45 |

46 | # Convert all floats to NumPy arrays and ensure they are the same length

47 | processed_floats_list = []

48 | min_length = None

49 | for label, floats_data in floats_list:

50 | if isinstance(floats_data, list):

51 | floats_array = np.array(floats_data)

52 | elif isinstance(floats_data, torch.Tensor):

53 | floats_array = floats_data.cpu().numpy()

54 | else:

55 | raise ValueError(f"Unsupported type for '{label}' input")

56 | if min_length is None or len(floats_array) < min_length:

57 | min_length = len(floats_array)

58 | processed_floats_list.append((label, floats_array))

59 |

60 | # Truncate all arrays to the minimum length to match x-axis

61 | processed_floats_list = [

62 | (label, floats_array[:min_length]) for label, floats_array in processed_floats_list

63 | ]

64 |

65 | # Create the plot

66 | figsize = 12.0

67 | plt.figure(figsize=(figsize, figsize * 0.6), facecolor='white')

68 |

69 | x_values = range(min_length) # Use the minimum length

70 |

71 | for idx, (label, floats_array) in enumerate(processed_floats_list):

72 | color = self.line_colors[idx % len(self.line_colors)]

73 | style = self.line_styles[idx % len(self.line_styles)]

74 | plt.plot(x_values, floats_array, label=label, color=color, linestyle=style)

75 |

76 | plt.title(title)

77 | plt.xlabel(x_label)

78 | plt.ylabel(y_label)

79 | plt.grid(True)

80 | plt.legend()

81 |

82 | # Save the plot to a temporary file

83 | with tempfile.NamedTemporaryFile(delete=False, suffix=".png") as tmpfile:

84 | plt.savefig(tmpfile.name, format='png', bbox_inches='tight')

85 | tmpfile_path = tmpfile.name

86 | plt.close()

87 |

88 | # Load the image and convert to tensor

89 | visualization = Image.open(tmpfile_path).convert("RGB")

90 | visualization = np.array(visualization).astype(np.float32) / 255.0

91 | visualization = torch.from_numpy(visualization).unsqueeze(0) # Shape: [1, H, W, C]

92 |

93 | except Exception as e:

94 | print(f"Error in creating visualization: {e}")

95 | visualization = None

96 |

97 | return (visualization,)

98 |

--------------------------------------------------------------------------------

/nodes/audio/AudioPeaksDetection.py:

--------------------------------------------------------------------------------

1 | import torch

2 | import matplotlib.pyplot as plt

3 | from matplotlib.ticker import MaxNLocator # Import for integer x-axis labels

4 | import tempfile

5 | import numpy as np

6 | from PIL import Image

7 | from scipy.signal import find_peaks

8 | from ... import Yvann

9 |

10 | class AudioNodeBase(Yvann):

11 | CATEGORY = "👁️ Yvann Nodes/🔊 Audio"

12 |

13 | class AudioPeaksDetection(AudioNodeBase):

14 | @classmethod

15 | def INPUT_TYPES(cls):

16 | return {

17 | "required": {

18 | "audio_weights": ("FLOAT", {"forceInput": True}),

19 | "peaks_threshold": ("FLOAT", {"default": 0.4, "min": 0.0, "max": 1.0, "step": 0.01}),

20 | "min_peaks_distance": ("INT", {"default": 5, "min": 1, "max":100})

21 | }

22 | }

23 |

24 | RETURN_TYPES = ("FLOAT", "FLOAT", "STRING", "INT", "IMAGE")

25 | RETURN_NAMES = ("peaks_weights", "peaks_alternate_weights", "peaks_index", "peaks_count", "graph_peaks")

26 | FUNCTION = "detect_peaks"

27 |

28 | def detect_peaks(self, audio_weights, peaks_threshold, min_peaks_distance):

29 | if not isinstance(audio_weights, (list, np.ndarray)):

30 | print("Invalid audio_weights input")

31 | return (None, None, None, None, None)  # one value per entry in RETURN_TYPES

32 |

33 | audio_weights = np.array(audio_weights, dtype=np.float32)

34 |

35 | peaks, _ = find_peaks(audio_weights, height=peaks_threshold, distance=min_peaks_distance)

36 |

37 | # Generate binary peaks array: 1 for peaks, 0 for non-peaks

38 | peaks_binary = np.zeros_like(audio_weights, dtype=int)

39 | peaks_binary[peaks] = 1

40 |

41 | actual_value = 0

42 | peaks_alternate = np.zeros_like(peaks_binary)

43 | for i in range (len(peaks_binary)):

44 | if peaks_binary[i] == 1:

45 | actual_value = 1 - actual_value

46 | peaks_alternate[i] = actual_value

47 |

48 | audio_peaks_index = np.array(peaks, dtype=int)

49 | audio_peaks_index = np.insert(audio_peaks_index, 0, 0)

50 |

51 | peaks_count = len(audio_peaks_index)

52 | str_peaks_index = ', '.join(map(str, audio_peaks_index))

53 | # Generate visualization

54 | try:

55 | figsize = 12.0

56 | plt.figure(figsize=(figsize, figsize * 0.6), facecolor='white')

57 | plt.plot(range(0, len(audio_weights)), audio_weights, label='Audio Weights', color='blue', alpha=0.5)

58 | plt.scatter(peaks, audio_weights[peaks], color='red', label='Detected Peaks')

59 |

60 | plt.xlabel('Frames')

61 | plt.ylabel('Audio Weights')

62 | plt.title('Audio Weights and Detected Peaks')

63 | plt.legend()

64 | plt.grid(True)

65 |

66 | # Ensure x-axis labels are integers

67 | ax = plt.gca()

68 | ax.xaxis.set_major_locator(MaxNLocator(integer=True))

69 |

70 | with tempfile.NamedTemporaryFile(delete=False, suffix=".png") as tmpfile:

71 | plt.savefig(tmpfile.name, format='png', bbox_inches='tight')

72 | tmpfile_path = tmpfile.name

73 | plt.close()

74 |

75 | visualization = Image.open(tmpfile_path).convert("RGB")

76 | visualization = np.array(visualization).astype(np.float32) / 255.0

77 | visualization = torch.from_numpy(visualization).unsqueeze(0) # Shape: [1, H, W, C]

78 |

79 | except Exception as e:

80 | print(f"Error in creating visualization: {e}")

81 | visualization = None

82 |

83 | return (peaks_binary.tolist(), peaks_alternate.tolist(), str_peaks_index, peaks_count, visualization)

84 |

--------------------------------------------------------------------------------
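
Note on AudioPeaksDetection.py: peaks_alternate_weights flips between 0 and 1 at every detected peak and holds its value in between, which is the running parity of the number of peaks seen so far. A compact sketch of that step alone, assuming the binary peaks array has already been computed (the function name is hypothetical):

```python
import numpy as np

def alternate_from_peaks(peaks_binary):
    """Toggle a 0/1 state at each peak: the parity of the cumulative peak count.

    Hypothetical helper equivalent to the toggle loop in detect_peaks.
    """
    return (np.cumsum(peaks_binary) % 2).astype(int).tolist()

# Peaks at frames 1 and 4: the state turns on at frame 1 and off again at frame 4.
print(alternate_from_peaks([0, 1, 0, 0, 1, 0]))
```

The loop in the node and the cumulative-sum form give the same result; the vectorised form simply avoids a Python-level loop over frames.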

/nodes/audio/EditAudioWeights.py:

--------------------------------------------------------------------------------

1 | from ... import Yvann

2 | import numpy as np

3 | import matplotlib.pyplot as plt

4 | from matplotlib.ticker import MaxNLocator

5 | import tempfile

6 | from PIL import Image

7 | import torch

8 |

9 | class AudioNodeBase(Yvann):

10 | CATEGORY = "👁️ Yvann Nodes/🔊 Audio"

11 |

12 | class EditAudioWeights(AudioNodeBase):

13 | @classmethod

14 | def INPUT_TYPES(cls):

15 | return {

16 | "required": {

17 | "any_audio_weights": ("FLOAT", {"forceInput": True}),

18 | "smooth": ("FLOAT", {"default": 0.0, "min": 0.0, "max": 1.0, "step": 0.01}),

19 | "min_range": ("FLOAT", {"default": 0.0, "min": 0.0, "max": 2.99, "step": 0.01}),

20 | "max_range": ("FLOAT", {"default": 1, "min": 0.01, "max": 3, "step": 0.01}),

21 | }

22 | }

23 |

24 | RETURN_TYPES = ("FLOAT", "IMAGE")

25 | RETURN_NAMES = ("process_weights", "graph_audio")

26 | FUNCTION = "process_any_audio_weights"

27 |

28 | CATEGORY = "👁️ Yvann Nodes/🔊 Audio"

29 |

30 | def process_any_audio_weights(self, any_audio_weights, smooth, min_range, max_range):

31 | if not isinstance(any_audio_weights, (list, np.ndarray)):

32 | print("Invalid any_audio_weights input")

33 | return None

34 |

35 | any_audio_weights = np.array(any_audio_weights, dtype=np.float32)

36 |

37 | # Apply smoothing

38 | smoothed_signal = np.zeros_like(any_audio_weights)

39 | for i in range(len(any_audio_weights)):

40 | if i == 0:

41 | smoothed_signal[i] = any_audio_weights[i]

42 | else:

43 | smoothed_signal[i] = smoothed_signal[i-1] * smooth + any_audio_weights[i] * (1 - smooth)

44 |

45 | # Normalize the smoothed signal

46 | min_val = np.min(smoothed_signal)

47 | max_val = np.max(smoothed_signal)

48 | if max_val - min_val != 0:

49 | normalized_signal = (smoothed_signal - min_val) / (max_val - min_val)

50 | else:

51 | normalized_signal = smoothed_signal - min_val # All values are the same

52 |

53 | # Rescale to specified range

54 | rescaled_signal = normalized_signal * (max_range - min_range) + min_range

55 | rescaled_signal = rescaled_signal.tolist()  # keep the converted list instead of discarding it

56 |

57 | rounded_rescaled_signal = [round(float(elem), 6) for elem in rescaled_signal]

58 |

59 | # Plot the rescaled signal

60 | try:

61 | figsize = 12.0

62 | plt.figure(figsize=(figsize, figsize * 0.6), facecolor='white')

63 | plt.plot(

64 | list(range(len(rounded_rescaled_signal))),

65 | rounded_rescaled_signal,

66 | label='Processed Weights',

67 | color='blue'

68 | )

69 | plt.xlabel('Frames')

70 | plt.ylabel('Weights')

71 | plt.title('Processed Audio Weights')

72 | plt.legend()

73 | plt.grid(True)

74 |

75 | ax = plt.gca()

76 | ax.xaxis.set_major_locator(MaxNLocator(integer=True))

77 |

78 | with tempfile.NamedTemporaryFile(delete=False, suffix=".png") as tmpfile:

79 | plt.savefig(tmpfile.name, format='png', bbox_inches='tight')

80 | tmpfile_path = tmpfile.name

81 | plt.close()

82 |

83 | weights_graph = Image.open(tmpfile_path).convert("RGB")

84 | weights_graph = np.array(weights_graph).astype(np.float32) / 255.0

85 | weights_graph = torch.from_numpy(weights_graph).unsqueeze(0)

86 | except Exception as e:

87 | print(f"Error in creating weights graph: {e}")

88 | weights_graph = torch.zeros((1, 400, 300, 3))

89 |

90 | return (rounded_rescaled_signal, weights_graph)

91 |

--------------------------------------------------------------------------------

/__init__.py:

--------------------------------------------------------------------------------

1 | # Thanks to RyanOnTheInside, KJNodes, MTB, Fill, Akatz, Matheo, their works helped me a lot

2 | from pathlib import Path

3 | from aiohttp import web

4 | from .node_configs import CombinedMeta

5 | from collections import OrderedDict

6 | from server import PromptServer

7 |

8 | class Yvann(metaclass=CombinedMeta):

9 | @classmethod

10 | def get_description(cls):

11 | footer = "\n\n"

12 | footer += "#### 🐙 Docs, Workflows and Code: [Yvann-Nodes GitHub](https://github.com/yvann-ba/ComfyUI_Yvann-Nodes) "

13 | footer += " 👁️ Tutorials: [Yvann Youtube](https://www.youtube.com/@yvann.mp4)\n"

14 |

15 | desc = ""

16 |

17 | if hasattr(cls, 'DESCRIPTION'):

18 | desc += f"{cls.DESCRIPTION}\n\n{footer}"

19 | return desc

20 |

21 | if hasattr(cls, 'TOP_DESCRIPTION'):

22 | desc += f"{cls.TOP_DESCRIPTION}\n\n"

23 |

24 | if hasattr(cls, "BASE_DESCRIPTION"):

25 | desc += cls.BASE_DESCRIPTION + "\n\n"

26 |

27 | additional_info = OrderedDict()

28 | for c in cls.mro()[::-1]:

29 | if hasattr(c, 'ADDITIONAL_INFO'):

30 | info = c.ADDITIONAL_INFO.strip()

31 | additional_info[c.__name__] = info

32 |

33 | if additional_info:

34 | desc += "\n\n".join(additional_info.values()) + "\n\n"

35 |

36 | if hasattr(cls, 'BOTTOM_DESCRIPTION'):

37 | desc += f"{cls.BOTTOM_DESCRIPTION}\n\n"

38 |

39 | desc += footer

40 | return desc

41 |

42 | from .nodes.audio.LoadAudioSeparationModel import LoadAudioSeparationModel

43 | from .nodes.audio.AudioAnalysis import AudioAnalysis

44 | from .nodes.audio.AudioPeaksDetection import AudioPeaksDetection

45 | from .nodes.audio.AudioIPAdapterTransitions import AudioIPAdapterTransitions

46 | from .nodes.audio.AudioPromptSchedule import AudioPromptSchedule

47 | from .nodes.audio.EditAudioWeights import EditAudioWeights

48 | from .nodes.audio.AudioRemixer import AudioRemixer

49 | #from .nodes.audio.AudioControlNetSchedule import AudioControlNetSchedule

50 |

51 | from .nodes.utils.RepeatImageToCount import RepeatImageToCount

52 | from .nodes.utils.InvertFloats import InvertFloats

53 | from .nodes.utils.FloatsVisualizer import FloatsVisualizer

54 |

55 | from .nodes.convert.MaskToFloat import MaskToFloat

56 | from .nodes.convert.FloatsToWeightsStrategy import FloatsToWeightsStrategy

57 | from .nodes.convert.FloatToInt import FloatToInt

58 |

59 | #"Audio ControlNet Schedule": AudioControlNetSchedule,

60 | NODE_CLASS_MAPPINGS = {

61 | "Load Audio Separation Model": LoadAudioSeparationModel,

62 | "Audio Analysis": AudioAnalysis,

63 | "Audio Peaks Detection": AudioPeaksDetection,

64 | "Audio IPAdapter Transitions": AudioIPAdapterTransitions,

65 | "Audio Prompt Schedule": AudioPromptSchedule,

66 | "Edit Audio Weights": EditAudioWeights,

67 | "Audio Remixer": AudioRemixer,

68 |

69 | "Repeat Image To Count": RepeatImageToCount,

70 | "Invert Floats": InvertFloats,

71 | "Floats Visualizer": FloatsVisualizer,

72 |

73 | "Mask To Float": MaskToFloat,

74 | "Floats To Weights Strategy": FloatsToWeightsStrategy,

75 | "Float to Int": FloatToInt

76 | }

77 |

78 | WEB_DIRECTORY = "./web/js"

79 |

80 | #"Audio ControlNet Schedule": "Audio ControlNet Schedule",

81 | NODE_DISPLAY_NAME_MAPPINGS = {

82 | "Load Audio Separation Model": "Load Audio Separation Model",

83 | "Audio Analysis": "Audio Analysis",

84 | "Audio Peaks Detection": "Audio Peaks Detection",

85 | "Audio IPAdapter Transitions": "Audio IPAdapter Transitions",

86 | "Audio Prompt Schedule": "Audio Prompt Schedule",

87 | "Edit Audio Weights": "Edit Audio Weights",

88 | "Audio Remixer": "Audio Remixer",

89 |

90 | "Repeat Image To Count": "Repeat Image To Count",

91 | "Invert Floats": "Invert Floats",

92 | "Floats Visualizer": "Floats Visualizer",

93 |

94 | "Mask To Float": "Mask To Float",

95 | "Floats To Weights Strategy": "Floats To Weights Strategy",

96 | "Float to Int" : "Float to Int",

97 | }

98 |

99 | Yvann_Print = """

100 | 🔊 Yvann Audio Reactive & Utils Node"""

101 |

102 | print("\033[38;5;195m" + Yvann_Print +

103 | "\033[38;5;222m" + " : Loaded\n" + "\033[0m")

104 |

105 |

106 | if hasattr(PromptServer, "instance"):

107 | # NOTE: we add an extra static path to avoid comfy mechanism

108 | # that loads every script in web.

109 | PromptServer.instance.app.add_routes(

110 | [web.static("/yvann_web_async",

111 | (Path(__file__).parent.absolute() / "yvann_web_async").as_posix())]

112 | )

113 |

114 | for node_name, node_class in NODE_CLASS_MAPPINGS.items():

115 | if hasattr(node_class, 'get_description'):

116 | desc = node_class.get_description()

117 | node_class.DESCRIPTION = desc

118 |

119 | __all__ = ["NODE_CLASS_MAPPINGS",

120 | "NODE_DISPLAY_NAME_MAPPINGS", "WEB_DIRECTORY"]

121 |

--------------------------------------------------------------------------------

/nodes/audio/AudioIPAdapterTransitions.py:

--------------------------------------------------------------------------------

1 | import torch

2 | import matplotlib.pyplot as plt

3 | from matplotlib.ticker import MaxNLocator

4 | import tempfile

5 | import numpy as np

6 | import math

7 | from PIL import Image

8 | from ... import Yvann

9 |

10 | class AudioNodeBase(Yvann):

11 | CATEGORY = "👁️ Yvann Nodes/🔊 Audio"

12 |

13 | class AudioIPAdapterTransitions(AudioNodeBase):

14 | @classmethod

15 | def INPUT_TYPES(cls):

16 | return {

17 | "required": {

18 | "images": ("IMAGE", {"forceInput": True}),

19 | "peaks_weights": ("FLOAT", {"forceInput": True}),

20 | "transition_mode": (["linear", "ease_in_out", "ease_in", "ease_out"], {"default": "linear"}),

21 | "transition_length": ("INT", {"default": 5, "min": 1, "max":100, "step": 2}),

22 | "min_IPA_weight": ("FLOAT", {"default": 0.0, "min": 0.0, "max": 1.99, "step": 0.01}),

23 | "max_IPA_weight": ("FLOAT", {"default": 1.0, "min": 0.1, "max": 2.0, "step": 0.01}),

24 | }

25 | }

26 |

27 | RETURN_TYPES = ("IMAGE", "FLOAT", "IMAGE", "FLOAT", "IMAGE")

28 | RETURN_NAMES = ("image_1", "weights", "image_2", "weights_invert", "graph_transition")

29 | FUNCTION = "process_transitions"

30 |

31 | def process_transitions(self, images, peaks_weights, transition_mode, transition_length, min_IPA_weight, max_IPA_weight):

32 |

33 | if not isinstance(peaks_weights, (list, np.ndarray)):

34 | print("Invalid peaks_weights input")

35 | return None, None, None, None, None

36 |

37 | # Convert peaks_weights to an integer numpy array (values are expected to be 0 or 1)

38 | peaks_binary = np.array(peaks_weights, dtype=int)

39 | total_frames = len(peaks_binary)

40 |

41 | # Generate switch indices by incrementing index at each peak

42 | switch_indices = []

43 | index_value = 0

44 | for peak in peaks_binary:

45 | if peak == 1:

46 | index_value += 1

47 | switch_indices.append(index_value)

48 |

49 | # images is a batch of images: tensor of shape [B, H, W, C]

50 | if images.dim() == 3:

51 | images = images.unsqueeze(0) # Add batch dimension if missing

52 |

53 | num_images = images.shape[0]

54 | if num_images < 2:

55 | print("At least two images are required for transitions.")

56 | return None, None, None, None, None

57 |

58 | unique_indices = sorted(set(switch_indices))

59 | num_indices = len(unique_indices)

60 |

61 | # Map indices to image indices (cycling through images if necessary)

62 | image_indices = [i % num_images for i in unique_indices]

63 |

64 | # Create a mapping from switch index to image

65 | image_mapping = {idx: images[image_idx] for idx, image_idx in zip(unique_indices, image_indices)}

66 |

67 | # Initialize blending_weights, images1, images2

68 | blending_weights = np.zeros(total_frames, dtype=np.float32)

69 | images1 = [image_mapping[switch_indices[i]] for i in range(total_frames)]

70 | images2 = images1.copy()

71 |

72 | # Identify frames where index changes

73 | change_frames = [i for i in range(1, total_frames) if switch_indices[i] != switch_indices[i - 1]]

74 |

75 | # For each transition, compute blending weights

76 | for change_frame in change_frames:

77 | start = max(0, change_frame - transition_length // 2)

78 | end = min(total_frames, change_frame + (transition_length + 1) // 2)

79 | n = end - start - 1

80 | idx_prev = switch_indices[change_frame - 1] if change_frame > 0 else switch_indices[change_frame]

81 | idx_next = switch_indices[change_frame]

82 |

83 | for i in range(start, end):

84 | t = (i - start) / n if n > 0 else 1.0

85 |

86 | # Compute blending weight based on transition_mode

87 | if transition_mode == "linear":

88 | blending_weight = t

89 | elif transition_mode == "ease_in_out":

90 | blending_weight = (1 - math.cos(t * math.pi)) / 2

91 | elif transition_mode == "ease_in":

92 | blending_weight = 1 - math.cos(t * math.pi / 2) # slow start, fast finish

93 | elif transition_mode == "ease_out":

94 | blending_weight = math.sin(t * math.pi / 2) # fast start, slow finish

95 | else:

96 | blending_weight = t

97 |

98 | blending_weight = min(max(blending_weight, 0.0), 1.0)

99 |

100 | # Update blending_weights

101 | blending_weights[i] = blending_weight

102 |

103 | # Update images1 and images2

104 | images1[i] = image_mapping[idx_prev]

105 | images2[i] = image_mapping[idx_next]

106 |

107 | # Now, blending_weights correspond to image_2

108 | blending_weights_raw = blending_weights.copy() # Keep the raw weights for internal use

109 |

110 | # Apply custom range to weights

111 | blending_weights = blending_weights * (max_IPA_weight - min_IPA_weight) + min_IPA_weight

112 | blending_weights = [round(float(w), 6) for w in blending_weights]

113 | weights_invert = [(max_IPA_weight + min_IPA_weight) - w for w in blending_weights]

114 | weights_invert = [round(w, 6) for w in weights_invert]

115 |

116 | # Convert lists to tensors

117 | images1 = torch.stack(images1)

118 | images2 = torch.stack(images2)

119 | blending_weights_tensor = torch.tensor(blending_weights_raw, dtype=images1.dtype).view(-1, 1, 1, 1)

120 |

121 | # Ensure blending weights are compatible with image dimensions

122 | blending_weights_tensor = blending_weights_tensor.to(images1.device)

123 |

124 | # Generate visualization of transitions

125 | try:

126 | figsize = 12.0

127 | plt.figure(figsize=(figsize, 8), facecolor='white')

128 |

129 | blending_weights_array = np.array(blending_weights_raw)

130 | plt.plot(range(0, len(blending_weights_array)), blending_weights_array, label='Blending Weights', color='green', alpha=0.7)

131 | plt.scatter(change_frames, blending_weights_array[change_frames], color='red', label='Transition')

132 |

133 | plt.xlabel('Frames', fontsize=12)

134 | plt.title('Images Transitions over Frames', fontsize=14)

135 | plt.legend()

136 | plt.grid(True)

137 |

138 | # Remove Y-axis labels

139 | plt.yticks([])

140 |

141 | # Ensure x-axis labels are integers

142 | ax = plt.gca()

143 | ax.xaxis.set_major_locator(MaxNLocator(integer=True, prune='both'))

144 |

145 | with tempfile.NamedTemporaryFile(delete=False, suffix=".png") as tmpfile:

146 | plt.savefig(tmpfile.name, format='png', bbox_inches='tight')

147 | tmpfile_path = tmpfile.name

148 | plt.close()

149 |

150 | visualization = Image.open(tmpfile_path).convert("RGB")

151 | visualization = np.array(visualization).astype(np.float32) / 255.0

152 | visualization = torch.from_numpy(visualization).unsqueeze(0) # Shape: [1, H, W, C]

153 |

154 | except Exception as e:

155 | print(f"Error in creating visualization: {e}")

156 | visualization = None

157 |

158 | # Return values with adjusted weights and images

159 | return images2, blending_weights, images1, weights_invert, visualization

160 |
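The transition window and easing curves used above can be sketched in isolation. This is a minimal sketch under assumptions: the helper names `easing_weight` and `transition_window` are hypothetical, and the curves use the conventional definitions (`ease_in` starts slowly, `ease_out` starts quickly).

```python
import math

def easing_weight(t, mode="linear"):
    """Map progress t in [0, 1] to a blending weight in [0, 1] (hypothetical helper)."""
    t = min(max(t, 0.0), 1.0)
    if mode == "ease_in_out":
        return (1 - math.cos(t * math.pi)) / 2
    if mode == "ease_in":
        return 1 - math.cos(t * math.pi / 2)  # slow start, fast finish
    if mode == "ease_out":
        return math.sin(t * math.pi / 2)  # fast start, slow finish
    return t  # "linear" and any unknown mode

def transition_window(change_frame, transition_length, total_frames):
    """Return the [start, end) frame range centered on a change frame."""
    start = max(0, change_frame - transition_length // 2)
    end = min(total_frames, change_frame + (transition_length + 1) // 2)
    return start, end

# Example: weights across a 5-frame transition around frame 10.
start, end = transition_window(10, 5, 100)
n = end - start - 1
weights = [easing_weight((i - start) / n, "ease_in_out") for i in range(start, end)]
```

The window is clipped at both ends of the sequence, so transitions near the first or last frame are shorter than `transition_length`.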

--------------------------------------------------------------------------------

/node_configs.py:

--------------------------------------------------------------------------------

1 | # NOTE: this abstraction allows the documentation to be both centrally managed and inherited

2 | from abc import ABCMeta

3 | class NodeConfigMeta(type):

4 | def __new__(cls, name, bases, attrs):

5 | new_class = super().__new__(cls, name, bases, attrs)

6 | if name in NODE_CONFIGS:

7 | for key, value in NODE_CONFIGS[name].items():

8 | setattr(new_class, key, value)

9 | return new_class

10 |

11 | class CombinedMeta(NodeConfigMeta, ABCMeta):

12 | pass

13 |

14 | def add_node_config(node_name, config):

15 | NODE_CONFIGS[node_name] = config

16 |

17 | NODE_CONFIGS = {}

18 |

19 | add_node_config("LoadAudioSeparationModel", {

20 | "BASE_DESCRIPTION": """

21 | Load an audio separation model. If unavailable,

22 | it is downloaded to `ComfyUI/models/audio_separation_model/`

23 |

24 | **Parameters:**

25 |

26 | - **model**: Audio separation model to load

27 | - [HybridDemucs](https://github.com/facebookresearch/demucs): Most accurate, fastest and lightweight

28 | - [OpenUnmix](https://github.com/sigsep/open-unmix-pytorch): Alternative model

29 |

30 | **Outputs:**

31 |

32 | - **audio_sep_model**: Loaded audio separation model

33 | Connect it to "Audio Analysis" or "Audio Remixer"

34 | """

35 | })

36 |

37 | add_node_config("AudioAnalysis", {

38 | "BASE_DESCRIPTION": """

39 | Analyzes audio to generate reactive weights and graph

40 | Can extract specific elements like drums, vocals, bass

41 | Parameters allow manual control over audio weights

42 |

43 | **Inputs:**

44 |

45 | - **audio_sep_model**: Loaded model from "Load Audio Separation Model"

46 | - **audio**: Input audio file

47 | - **batch_size**: Number of frames to associate with audio weights

48 | - **fps**: Frames per second for processing audio weights

49 |

50 | **Parameters:**

51 |

52 | - **analysis_mode**: Select audio component to analyze

53 | - **threshold**: Minimum weight value to pass through

54 | - **multiply**: Amplification factor for weights before normalization

55 |

56 | **Outputs:**

57 |

58 | - **graph_audio**: Graph image of audio weights over frames

59 | - **processed_audio**: Separated or processed audio (e.g., drums, vocals)

60 | - **original_audio**: Original unmodified audio input

61 | - **audio_weights**: List of audio-reactive weights based on processed audio

62 | """

63 | })

64 |

65 | add_node_config("AudioPeaksDetection", {

66 | "BASE_DESCRIPTION": """

67 | Detects peaks in audio weights based on a threshold and minimum distance

68 | Identifies significant audio events to trigger visual changes or actions

69 |

70 | **Inputs:**

71 |

72 | - **audio_weights**: "audio_weights" from "Audio Analysis"

73 |

74 | **Parameters:**

75 |

76 | - **peaks_threshold**: Threshold for peak detection

77 | - **min_peaks_distance**: Minimum frames between consecutive peaks

78 | helps remove unwanted peaks close to bigger peaks

79 |

80 | **Outputs:**

81 |

82 | - **peaks_weights**: Binary list indicating peak presence (1 for peak, 0 otherwise)

83 | - **peaks_alternate_weights**: Alternating binary list based on detected peaks

84 | - **peaks_index**: String of peak indices

85 | - **peaks_count**: Total number of detected peaks

86 | - **graph_peaks**: Visualization image of detected peaks over audio weights

87 | """

88 | })

89 |

90 | add_node_config("AudioIPAdapterTransitions", {

91 | "BASE_DESCRIPTION": """

92 | Uses "peaks_weights" from "Audio Peaks Detection" to control image transitions based on audio peaks

93 | Outputs images and weights for two IPAdapter batches, logic from "IPAdapter Weights", [IPAdapter_Plus](https://github.com/cubiq/ComfyUI_IPAdapter_plus)

94 |

95 | **Inputs:**

96 |

97 | - **images**: Batch of images for transitions; images are looped to match the peak count

98 | - **peaks_weights**: List of audio peaks from "Audio Peaks Detection"

99 |

100 | **Parameters:**

101 |

102 | - **transition_mode**: Transition curve applied to weights (linear, ease_in_out, ease_in, ease_out)

103 | - **transition_length**: Number of frames used to blend between images

104 | - **min_IPA_weight**: Minimum weight applied by IPAdapter per frame

105 | - **max_IPA_weight**: Maximum weight applied by IPAdapter per frame

106 |

107 | **Outputs:**

108 |

109 | - **image_1**: Starting image for transition. Connect to first IPAdapter batch "image"

110 | - **weights**: Blending weights for transitions. Connect to first IPAdapter batch "weight"

111 | - **image_2**: Ending image for transition. Connect to second IPAdapter batch "image"

112 | - **weights_invert**: Inverted weights. Connect to second IPAdapter batch "weight"

113 | - **graph_transition**: Visualization of weight transitions over frames

114 | """

115 | })

116 |

117 | add_node_config("AudioPromptSchedule", {

118 | "BASE_DESCRIPTION": """

119 | Associates "prompts" with "peaks_index" into a scheduled format

120 | Connect output to "batch prompt schedule" of [Fizz Nodes](https://github.com/FizzleDorf/ComfyUI_FizzNodes)

121 | Add an empty line between individual prompts

122 |

123 | **Inputs:**

124 |

125 | - **peaks_index**: Frames where peaks occur, from "Audio Peaks Detection"

126 | - **prompts**: Multiline string of prompts for each index

127 |

128 | **Outputs:**

129 |

130 | - **prompt_schedule**: String mapping each audio index to a prompt

131 | """

132 | })

133 |

134 | add_node_config("EditAudioWeights", {

135 | "BASE_DESCRIPTION": """

136 | Smooths and rescales audio weights. Connect to "Multival [Float List]"

137 | from [AnimateDiff-Evolved](https://github.com/Kosinkadink/ComfyUI-AnimateDiff-Evolved) to schedule motion with audio

138 | or to "Latent Keyframe From List" to schedule ControlNet Apply

139 |

140 | **Inputs:**

141 |

142 | - **any_audio_weights**: audio weights from "Audio Peaks Detection"

143 | or "Audio Analysis", basically any *_weights audio

144 |

145 | **Parameters:**

146 |

147 | - **smooth**: Smoothing factor (0.0 to 1.0). Higher values result in smoother transitions

148 | - **min_range**: Minimum value of the rescaled weights

149 | AnimateDiff multival works better between 0.9 and 1.3 range

150 | - **max_range**: Maximum value of the rescaled weights

151 |

152 | **Outputs:**

153 |

154 | - **float_val**: Smoothed and rescaled audio weights

155 | connect it to AD multival to influence the motion with audio

156 | """

157 | })

158 |

159 | add_node_config("AudioRemixer", {

160 | "BASE_DESCRIPTION": """

161 | Modify input audio by adjusting the intensity of drums, bass, vocals or other elements

162 |

163 | **Inputs:**

164 |

165 | - **audio_sep_model**: Loaded model from "Load Audio Separation Model"

166 | - **audio**: Input audio file

167 |

168 | **Parameters:**

169 |

170 | - **bass_volume**: Adjusts bass volume

171 | - **drums_volume**: Adjusts drums volume

172 | - **others_volume**: Adjusts other elements' volume

173 | - **vocals_volume**: Adjusts vocals volume

174 |

175 | **Outputs:**

176 |

177 | - **merged_audio**: Composition of separated tracks with applied modifications

178 | """

179 | })

180 |

181 | add_node_config("RepeatImageToCount", {

182 | "BASE_DESCRIPTION": """

183 | Repeats images N times; cycles through the inputs if N exceeds the number of images

184 | **Inputs:**

185 |

186 | - **image**: Batch of input images to repeat

187 | - **count**: Number of repetitions

188 |

189 | **Outputs:**

190 |

191 | - **images**: Batch of repeated images matching the specified count

192 | """

193 | })

194 |

195 | add_node_config("InvertFloats", {

196 | "BASE_DESCRIPTION": """

197 | Inverts each value in a list of floats

198 |

199 | **Inputs:**

200 |

201 | - **floats**: List of float values to invert

202 |

203 | **Outputs:**

204 |

205 | - **inverted_floats**: Inverted list of float values

206 | """

207 | })

208 |

209 | add_node_config("FloatsVisualizer", {

210 | "BASE_DESCRIPTION": """

211 | Generates a graph from floats for visual data comparison

212 | Useful to compare audio weights

213 |

214 | **Inputs:**

215 |

216 | - **floats**: Primary list of floats to visualize

217 | - **floats_optional1**: (Optional) Second list of floats

218 | - **floats_optional2**: (Optional) Third list of floats

219 |

220 | **Parameters:**

221 |

222 | - **title**: Graph title

223 | - **x_label**: Label for the x-axis

224 | - **y_label**: Label for the y-axis

225 |

226 | **Outputs:**

227 |

228 | - **visual_graph**: Visual graph of provided floats

229 | """

230 | })

231 |

232 | add_node_config("MaskToFloat", {

233 | "BASE_DESCRIPTION": """

234 | Converts a mask into a float

235 | Works with a batch of masks

236 | **Inputs:**

237 |

238 | - **mask**: Mask input to convert

239 |

240 | **Outputs:**

241 |

242 | - **float**: Float value

243 | """

244 | })

245 |

246 | add_node_config("FloatsToWeightsStrategy", {

247 | "BASE_DESCRIPTION": """

248 | Converts a list of floats into an IPAdapter weights strategy format

249 | Use with "IPAdapter Weights From Strategy" or "Prompt Schedule From Weights Strategy"

250 | to integrate output into [IPAdapter](https://github.com/cubiq/ComfyUI_IPAdapter_plus) pipeline

251 |

252 | **Inputs:**

253 |

254 | - **floats**: List of float values to convert

255 |

256 | **Outputs:**

257 |

258 | - **WEIGHTS_STRATEGY**: Dictionary of the weights strategy

259 | """

260 | })

261 |
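The metaclass pattern above can be sketched in a few lines. This is a minimal sketch under assumptions: `DemoNode`, `Base`, and the description text are hypothetical and exist only to show how a registered config is copied onto a class with a matching name.

```python
from abc import ABCMeta

NODE_CONFIGS = {}

def add_node_config(node_name, config):
    """Register attributes to attach to the class named `node_name`."""
    NODE_CONFIGS[node_name] = config

class NodeConfigMeta(type):
    def __new__(mcls, name, bases, attrs):
        new_class = super().__new__(mcls, name, bases, attrs)
        # Copy every registered key onto the freshly created class.
        for key, value in NODE_CONFIGS.get(name, {}).items():
            setattr(new_class, key, value)
        return new_class

class CombinedMeta(NodeConfigMeta, ABCMeta):
    pass

# The config must be registered before the class body is executed.
add_node_config("DemoNode", {"BASE_DESCRIPTION": "Hypothetical demo node"})

class Base(metaclass=CombinedMeta):
    pass

class DemoNode(Base):  # inherits the metaclass from Base
    pass

print(DemoNode.BASE_DESCRIPTION)
```

Because the lookup happens at class creation time, a config added after the class has been defined has no effect on it.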

--------------------------------------------------------------------------------

/nodes/audio/AudioRemixer.py:

--------------------------------------------------------------------------------

1 | import torch

2 | import torchaudio

3 |

4 | # from IPython.display import Audio

5 | # from mir_eval import separation

6 | from torchaudio.pipelines import HDEMUCS_HIGH_MUSDB_PLUS

7 |

8 | from typing import Dict, Tuple, Any

9 | from torchaudio.transforms import Fade, Resample

10 | from termcolor import colored

11 |

12 | from ... import Yvann

13 |

14 | class AudioNodeBase(Yvann):

15 | CATEGORY = "👁️ Yvann Nodes/🔊 Audio"

16 |

17 | class AudioRemixer(AudioNodeBase):

18 |

19 | @classmethod

20 | def INPUT_TYPES(cls):

21 | return {

22 | "required": {

23 | "audio_sep_model": ("AUDIO_SEPARATION_MODEL", {"forceInput": True}),

24 | "audio": ("AUDIO", {"forceInput": True}),

25 | "drums_volume": ("FLOAT", {"default": 0.0, "min": -10.0, "max": 10, "step": 0.1}),

26 | "vocals_volume": ("FLOAT", {"default": 0.0, "min": -10.0, "max": 10, "step": 0.1}),

27 | "bass_volume": ("FLOAT", {"default": 0.0, "min": -10.0, "max": 10, "step": 0.1}),

28 | "others_volume": ("FLOAT", {"default": 0.0, "min": -10.0, "max": 10, "step": 0.1}),

29 | }

30 | }

31 |

32 | RETURN_TYPES = ("AUDIO",)

33 | RETURN_NAMES = ("merged_audio",)

34 | FUNCTION = "main"

35 |

36 |

37 | def main(self, audio_sep_model, audio: Dict[str, torch.Tensor], drums_volume: float, vocals_volume: float, bass_volume: float, others_volume: float) -> tuple[torch.Tensor]:

38 |

39 | model = audio_sep_model

40 | # 1. Prepare audio and device

41 | device, waveform = self.prepare_audio_and_device(audio)

42 |

43 | # 2. Apply model and extract sources

44 | sources, sources_list = self.apply_model_and_extract_sources(model, waveform, device)

45 |

46 | if sources is None:

47 | return (None,) # Return an empty result if the model is unrecognized

48 |

49 | # 3. Adjust volumes and merge sources

50 | merge_audio = self.process_and_merge_audio(sources, sources_list, drums_volume, vocals_volume, bass_volume, others_volume)

51 |

52 | return (merge_audio,)

53 |

54 |

55 | def prepare_audio_and_device(self, audio: Dict[str, torch.Tensor]) -> Tuple[torch.device, torch.Tensor]:

56 | """Prepares the device (GPU or CPU) and sets up the audio waveform."""

57 | device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")

58 | waveform = audio['waveform'].squeeze(0).to(device)

59 | self.audio_sample_rate = audio['sample_rate']

60 | return device, waveform

61 |

62 |

63 | def apply_model_and_extract_sources(self, model, waveform: torch.Tensor, device: torch.device) -> Tuple[torch.Tensor, list[str]]:

64 | """Applies the model and extracts audio sources, handling both Open-Unmix and Hybrid Demucs cases."""

65 | sources, sources_list = None, []

66 |

67 | if isinstance(model, torch.nn.Module): # Open-Unmix model

68 | print(colored("Applying Open_Unmix model on audio.", 'green'))

69 | self.model_sample_rate = int(model.sample_rate)

70 |

71 | if self.audio_sample_rate != self.model_sample_rate:

72 | resampler = torchaudio.transforms.Resample(orig_freq=self.audio_sample_rate, new_freq=self.model_sample_rate).to(device)

73 | waveform = resampler(waveform)

74 | sources = model(waveform.unsqueeze(0)).squeeze(0)

75 | sources_list = ['bass', 'drums', 'other', 'vocals']

76 |

77 | elif "demucs" in model and model["demucs"]: # Hybrid Demucs model

78 | print(colored("Applying Hybrid Demucs model on audio", 'green'))

79 | self.model_sample_rate = int(model["sample_rate"])

80 | model = model["model"]

81 |

82 | if self.audio_sample_rate != self.model_sample_rate:

83 | resampler = torchaudio.transforms.Resample(orig_freq=self.audio_sample_rate, new_freq=self.model_sample_rate).to(device)

84 | waveform = resampler(waveform)

85 | ref = waveform.mean(0)

86 | waveform = (waveform - ref.mean()) / ref.std()

87 | sources = self.separate_sources(model, waveform[None], segment=10.0, overlap=0.1, device=device)[0]

88 | sources = sources * ref.std() + ref.mean()

89 | sources_list = model.sources

90 |

91 | else:

92 | print(colored("Unrecognized model type", 'red'))

93 | return None, []

94 |

95 | return sources, sources_list

96 |

97 |

98 | def process_and_merge_audio(self, sources: torch.Tensor, sources_list: list[str], drums_volume: float, vocals_volume: float, bass_volume: float, others_volume: float) -> torch.Tensor:

99 | """Adjusts source volumes and merges them into a single audio output"""

100 | required_sources = ['bass', 'drums', 'other', 'vocals']

101 | for source in required_sources:

102 | if source not in sources_list:

103 | print(colored(f"Warning: '{source}' not found in sources_list", 'yellow'))

104 |

105 | # Adjust volume levels

106 | drums_volume = self.adjust_volume_range(drums_volume)

107 | vocals_volume = self.adjust_volume_range(vocals_volume)

108 | bass_volume = self.adjust_volume_range(bass_volume)

109 | others_volume = self.adjust_volume_range(others_volume)

110 |

111 | # Convert to tuple and blend

112 | audios = self.sources_to_tuple(drums_volume, vocals_volume, bass_volume, others_volume, dict(zip(sources_list, sources)))

113 | return self.blend_audios([audios[0]["waveform"], audios[1]["waveform"], audios[2]["waveform"], audios[3]["waveform"]])

114 |

115 | def adjust_volume_range(self, value):

116 | if value <= -10:

117 | return 0

118 | elif value >= 10:

119 | return 10

120 | elif value <= 0:

121 | return (value + 10) / 10

122 | else:

123 | return 1 + (value / 10) * 9

124 |

125 | def blend_audios(self, audio_tensors):

126 | blended_audio = sum(audio_tensors)

127 |

128 | if blended_audio.dim() == 2:

129 | blended_audio = blended_audio.unsqueeze(0)

130 |

131 | return {

132 | "waveform": blended_audio.cpu(),

133 | "sample_rate": self.model_sample_rate,

134 | }

135 |

136 | def sources_to_tuple(self, drums_volume, vocals_volume, bass_volume, others_volume, sources: Dict[str, torch.Tensor]) -> Tuple[Any, Any, Any, Any]:

137 |

138 | threshold = 0.00

139 |

140 | output_order = ["bass", "drums", "other", "vocals"]

141 | outputs = []

142 |

143 | for source in output_order:

144 | if source not in sources:

145 | raise ValueError(f"Missing source {source} in the output")

146 | outputs.append(

147 | {

148 | "waveform": sources[source].cpu().unsqueeze(0),

149 | "sample_rate": self.model_sample_rate,

150 | }

151 | )

152 |

153 | for i, volume in enumerate([bass_volume, drums_volume, others_volume, vocals_volume]):

154 | waveform = outputs[i]["waveform"]

155 | mask = torch.abs(waveform) > threshold

156 | outputs[i]["waveform"] = waveform * volume * mask.float() + waveform * (1 - mask.float())

157 |

158 | return tuple(outputs)

159 |

160 | def separate_sources(self, model, mix, segment=10.0, overlap=0.1, device=None,

161 | ):

162 | """

163 | Apply the model to a given mixture segment by segment, fading overlapping segments and summing them into the final output.

164 |

165 | Args:

166 | segment (float): segment length in seconds

167 | device (torch.device, str, or None): if provided, device on which to

168 | execute the computation, otherwise `mix.device` is assumed.

169 | When `device` is different from `mix.device`, only local computations will

170 | be on `device`, while the entire tracks will be stored on `mix.device`.

171 | """

172 | if device is None:

173 | device = mix.device

174 | else:

175 | device = torch.device(device)

176 |

177 | batch, channels, length = mix.shape

178 | sample_rate = self.model_sample_rate

179 |

180 | chunk_len = int(sample_rate * segment * (1 + overlap))

181 | start = 0

182 | end = chunk_len

183 | overlap_frames = overlap * sample_rate

184 | fade = Fade(fade_in_len=0, fade_out_len=int(overlap_frames), fade_shape="linear")

185 |

186 | final = torch.zeros(batch, len(model.sources), channels, length, device=device)

187 |

188 | while start < length - overlap_frames:

189 | chunk = mix[:, :, start:end]

190 | with torch.no_grad():

191 | out = model.forward(chunk)

192 | out = fade(out)

193 | final[:, :, :, start:end] += out

194 | if start == 0:

195 | fade.fade_in_len = int(overlap_frames)

196 | start += int(chunk_len - overlap_frames)

197 | else:

198 | start += chunk_len

199 | end += chunk_len

200 | if end >= length:

201 | fade.fade_out_len = 0

202 | return final
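
The mapping from the UI volume sliders (-10 to 10) to linear gain factors in `adjust_volume_range` is easiest to see with a few worked values. The sketch below restates that mapping as a free function so it can be run outside ComfyUI; the example inputs are chosen only for illustration.

```python
def adjust_volume_range(value):
    """Map a slider value in [-10, 10] to a gain factor in [0, 10]."""
    if value <= -10:
        return 0.0  # fully muted
    if value >= 10:
        return 10.0  # maximum boost
    if value <= 0:
        return (value + 10) / 10  # -10..0 maps linearly to 0..1
    return 1 + (value / 10) * 9  # 0..10 maps linearly to 1..10

# A slider at 0 leaves the stem untouched, -5 halves it, 5 boosts it 5.5x.
gains = {v: adjust_volume_range(v) for v in (-10, -5, 0, 5, 10)}
```

The two linear segments meet at a gain of 1.0, so the default slider value of 0.0 reproduces the separated stem at its original level.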

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 | # 🔊 ComfyUI_Yvann-Nodes [YouTube](https://www.youtube.com/channel/yvann_ba)

2 |

3 | #### Made with the great help of [Lilien](https://x.com/Lilien_RIG) 😎

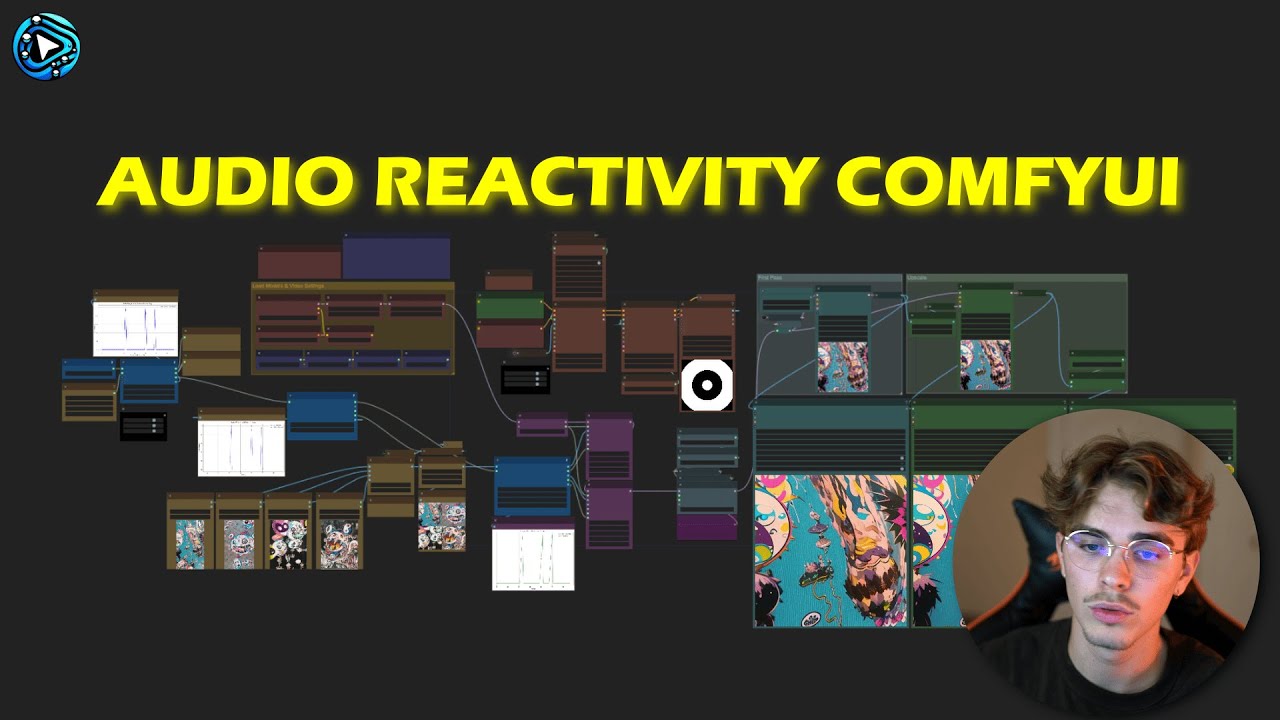

4 | ### **A pack of custom nodes that enable audio reactivity within [ComfyUI](https://github.com/comfyanonymous/ComfyUI), allowing you to generate AI-driven animations that sync with music**

5 |

6 | ---

7 |

8 | ## What Does This Do?

9 |

10 | - **Create** audio-reactive AI videos: control the style, content and composition of AI generations with any audio

11 | - **Simple**: Just drop one of our [Workflows](/example_workflows) into ComfyUI and specify your audio and visual inputs

12 | - **Flexible**: Works with existing ComfyUI AI tech and nodes (e.g., IPAdapter, AnimateDiff, ControlNet)

13 |

14 | ---

15 |

16 | ## Video results (generated by our amazing users 😁)

17 |

18 |

19 | #### Audio Reactive Images to Video ⬇️

20 |

21 |

49 |

50 | #### Audio Reactive Video to Video ⬇️

51 |

52 |

70 |

71 | ## Quick Setup

72 |

73 | - Install [ComfyUI](https://github.com/comfyanonymous/ComfyUI) and [ComfyUI-Manager](https://github.com/ltdrdata/ComfyUI-Manager)

74 |

75 | ### Pick a Workflow (Images → Video or Video → Video)

76 |

77 | 1. **Images → Video**

78 | - Takes a **set of images** plus an **audio** track.

79 | - *Watch Tutorial*:

80 | [Images → Video tutorial on YouTube](https://www.youtube.com/watch?v=O2s6NseXlMc)

81 |

82 | 2. **Video → Video**

83 | - Takes a **source video** plus an **audio** track.

84 | - *Watch Tutorial*:

85 | [Video → Video tutorial on YouTube](https://www.youtube.com/watch?v=BiQHWKP3q0c)

86 |

87 | ---

88 |

89 | ### Load Your Chosen Workflow in ComfyUI

90 |

91 | 1. **Download** the `.json` file for the workflow you picked:

92 | - [AudioReactive_ImagesToVideo_Yvann.json](example_workflows/AudioReactive_ImagesToVideo_Yvann.json)

93 | - [AudioReactive_VideoToVideo_Yvann.json](example_workflows/AudioReactive_VideoToVideo_Yvann.json)

94 |

95 | 2. **Drop** the `.json` file into the **ComfyUI window**.

96 |

97 | 3. **Open the "🧩 Manager"** → **"Install Missing Custom Nodes"**

98 | - Install each pack of nodes that appears.

99 | - **Restart** ComfyUI if prompted.

100 |

101 | 4. **Set Your Inputs & Generate**

102 | - Provide the inputs needed (everything is explained [here](https://www.youtube.com/@yvann_ba)).

103 | - Click the **Queue** button to produce your **audio-reactive** animation!

104 |

105 | **That's it!** Have fun playing with the different settings now!

106 | (If you have any questions or problems, check my [YouTube tutorials](https://www.youtube.com/@yvann_ba).)

107 |

108 | ---

109 |

110 | ## Node Details

111 |

112 |

113 | Click to Expand: Node-by-Node Reference

114 |

115 | ### Audio Analysis 🔍

116 | Analyzes audio to generate reactive weights for each frame.

117 |

118 | Node Parameters

119 |

120 | - **audio_sep_model**: Model from "Load Audio Separation Model"

121 | - **audio**: Input audio file

122 | - **batch_size**: Frames to associate with audio weights

123 | - **fps**: Frame rate for the analysis

124 |

125 | **Parameters**:

126 | - **analysis_mode**: e.g., Drums Only, Vocals, Full Audio

127 | - **threshold**: Minimum weight pass-through

128 | - **multiply**: Amplification factor

129 |

130 | **Outputs**:

131 | - **graph_audio** (image preview)

132 | - **processed_audio**, **original_audio**

133 | - **audio_weights** (list of values)
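As a rough illustration of how **threshold**, **multiply**, **batch_size** and **fps** might interact, here is a minimal sketch. It assumes weights are per-frame floats in the range 0 to 1, that values below the threshold are zeroed, and that amplified values are clamped to 1.0. The function names `shape_weights` and `audio_seconds` and these rules are assumptions made for illustration, not the node's actual implementation.

```python
def shape_weights(weights, threshold=0.0, multiply=1.0):
    """Hypothetical post-processing of per-frame audio weights.

    Assumption: weights below `threshold` are dropped to 0.0, the rest are
    scaled by `multiply` and clamped so they never exceed 1.0.
    """
    shaped = []
    for w in weights:
        w = w if w >= threshold else 0.0
        shaped.append(min(w * multiply, 1.0))
    return shaped


def audio_seconds(batch_size, fps):
    """Seconds of audio covered when `batch_size` frames play at `fps`."""
    return batch_size / fps


if __name__ == "__main__":
    print(shape_weights([0.1, 0.5, 0.9], threshold=0.3, multiply=1.5))
    print(audio_seconds(48, 24))
```

Under these assumptions, raising **threshold** silences quiet passages while raising **multiply** makes the remaining beats hit harder.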

134 |

135 |

136 |

137 | ---

138 |

139 | ### Load Audio Separation Model 🎧

140 | Loads or downloads an audio separation model (e.g., HybridDemucs, OpenUnmix).

141 |

142 | Node Parameters

143 |

144 | - **model**: Choose between HybridDemucs / OpenUnmix.

145 | - **Outputs**: **audio_sep_model** (connect to Audio Analysis or Remixer).

146 |

147 |

148 |

149 | ---

150 |

151 | ### Audio Peaks Detection 📈

152 | Identifies peaks in the audio weights to trigger transitions or events.

153 |

154 | Node Parameters

155 |

156 | - **peaks_threshold**: Sensitivity.

157 | - **min_peaks_distance**: Minimum gap in frames between peaks.

158 | - **Outputs**: Binary peak list, alternate list, peak indices/count, graph.
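To make the two parameters concrete, the sketch below shows one simple way peaks could be picked from a list of weights. It is an assumption-laden illustration: the function name `detect_peaks`, the local-maximum rule, and the "keep the earlier peak" policy are hypothetical and may differ from what the node does.

```python
def detect_peaks(weights, peaks_threshold=0.5, min_peaks_distance=5):
    """Hypothetical peak picker over per-frame weights.

    A frame counts as a peak when it is a local maximum, its weight is at
    least `peaks_threshold`, and it lies at least `min_peaks_distance`
    frames after the previously accepted peak.
    Returns (peak indices, binary list with 1 at each peak frame).
    """
    peaks = []
    for i in range(1, len(weights) - 1):
        is_local_max = weights[i - 1] < weights[i] >= weights[i + 1]
        if not is_local_max or weights[i] < peaks_threshold:
            continue
        if peaks and i - peaks[-1] < min_peaks_distance:
            continue  # too close to the previous peak
        peaks.append(i)
    peak_set = set(peaks)
    binary = [1 if i in peak_set else 0 for i in range(len(weights))]
    return peaks, binary


if __name__ == "__main__":
    demo = [0.0, 0.9, 0.1, 0.8, 0.0, 0.0, 0.7, 0.0]
    print(detect_peaks(demo, peaks_threshold=0.5, min_peaks_distance=3))
```

In this sketch a larger **min_peaks_distance** yields fewer, more widely spaced transitions, and a higher **peaks_threshold** ignores weaker beats.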

159 |

160 |

161 |

162 | ---

163 |

164 | ### Audio IP Adapter Transitions 🔄

165 | Manages transitions between images based on peaks. Great for stable or style transitions.

166 |

167 | Node Parameters

168 |

169 | - **images**: Batch of images.

170 | - **peaks_weights**: From "Audio Peaks Detection".

171 | - **blend_mode**, **transitions_length**, **min_IPA_weight**, etc.

172 |

173 |

174 |

175 | ---

176 |

177 | ### Audio Prompt Schedule 📝

178 | Links text prompts to peak indices.

179 |

180 | Node Parameters

181 |

182 | - **peaks_index**: Indices from peaks detection.

183 | - **prompts**: multiline string.

184 | - **Outputs**: mapped schedule string.
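The mapping from prompts to peaks can be pictured with a small sketch. It assumes that `peaks_index` arrives as a comma-separated string of frame numbers, that the first prompt starts at frame 0, and that the schedule uses a `"frame": "prompt"` line format. The function name `build_prompt_schedule` and all three assumptions are hypothetical; check the node's real output before relying on the format.

```python
def build_prompt_schedule(peaks_index, prompts):
    """Hypothetical mapping of one prompt per line onto peak frames.

    Assumptions: `peaks_index` is a comma-separated string such as "12, 30",
    the first prompt applies from frame 0, and each later prompt starts at
    the next peak. Extra prompts or extra peaks are ignored.
    """
    indices = [int(p) for p in peaks_index.split(",") if p.strip()]
    lines = [ln.strip() for ln in prompts.splitlines() if ln.strip()]
    frames = [0] + indices
    entries = [f'"{frame}": "{prompt}"' for frame, prompt in zip(frames, lines)]
    return ",\n".join(entries)


if __name__ == "__main__":
    print(build_prompt_schedule("12, 30", "a forest\na city\na beach"))
```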

185 |

186 |

187 |

188 | ---

189 |

190 | ### Audio Remixer 🎛️

191 | Adjusts volume levels (drums, vocals, bass, others) in a track.

192 |

193 | Node Parameters

194 |

195 | - **drums_volume**, **vocals_volume**, **bass_volume**, **others_volume**

196 | - **Outputs**: single merged audio track.
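Conceptually, remixing is a weighted sum of the separated stems. The sketch below assumes each stem is a list of samples of equal length and treats each volume as a linear gain; the function name `remix` and the linear-gain interpretation are assumptions, and the node may scale volumes differently.

```python
def remix(stems, volumes):
    """Hypothetical remix: scale each stem by its volume and sum them.

    `stems` maps a stem name (e.g. "drums") to a list of samples; all
    lists are assumed to have the same length. `volumes` maps stem names
    to linear gains; stems without an entry keep gain 1.0.
    """
    length = len(next(iter(stems.values())))
    mixed = [0.0] * length
    for name, samples in stems.items():
        gain = volumes.get(name, 1.0)
        for i, sample in enumerate(samples):
            mixed[i] += gain * sample
    return mixed


if __name__ == "__main__":
    stems = {"drums": [1.0, 0.0], "vocals": [0.5, 0.5]}
    print(remix(stems, {"drums": 0.5, "vocals": 2.0}))
```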

197 |

198 |

199 |

200 | ---

201 |

202 | ### Repeat Image To Count 🔁

203 | Repeats a set of images N times.

204 |

205 | Node Parameters

206 |

207 | - **mask**: Mask input.

208 | - **Outputs**: Repeated images.

209 |

210 |

211 |

212 | ---

213 |

214 | ### Invert Floats 🔄

215 | Flips sign of float values.

216 |

217 | Node Parameters

218 |

219 | - **floats**: list of floats.

220 | - **Outputs**: inverted list.

221 |

222 |

223 |

224 | ---

225 |

226 | ### Floats Visualizer 📈

227 | Plots float values as a graph.

228 |

229 | Node Parameters

230 |

231 | - **floats** (and optional second/third).

232 | - **Outputs**: visual graph image.

233 |

234 |

235 |

236 | ---

237 |

238 | ### Mask To Float 🎭

239 | Converts a mask into a single float value.

240 |

241 | Node Parameters

242 |

243 | - **mask**: input.

244 | - **Outputs**: float.
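One plausible way to reduce a mask to a single number is to average its pixel values. The sketch below assumes the mask is a 2D grid of floats between 0 and 1 and that the mean is the reduction used; both the function name `mask_to_float` and the choice of the mean are assumptions, not confirmed behavior of the node.

```python
def mask_to_float(mask):
    """Hypothetical reduction: mean of all pixel values in a 2D mask."""
    pixels = [value for row in mask for value in row]
    if not pixels:
        raise ValueError("mask must contain at least one pixel")
    return sum(pixels) / len(pixels)


if __name__ == "__main__":
    print(mask_to_float([[0.0, 1.0], [1.0, 1.0]]))
```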

245 |

246 |

247 |

248 | ---

249 |

250 | ### Floats To Weights Strategy 🏋️

251 | Transforms float lists into an IPAdapter "weight strategy."

252 |

253 | Node Parameters

254 |

255 | - **floats**: list of floats.

256 | - **Outputs**: dictionary with strategy info.

257 |

258 |

259 |

260 |

261 |

262 | ---

263 |

264 | Please give us a ⭐ on GitHub: it helps us improve the tool, and it's free! (:


--------------------------------------------------------------------------------

/web/js/help_popup.js:

--------------------------------------------------------------------------------

1 | import { app } from "../../../scripts/app.js";

2 |

3 | // code based on mtb nodes by Mel Massadian https://github.com/melMass/comfy_mtb/

4 | export const loadScript = (

5 | FILE_URL,

6 | async = true,

7 | type = 'text/javascript',

8 | ) => {

9 | return new Promise((resolve, reject) => {

10 | try {