├── .gitignore

├── LICENSE

├── README.md

├── README_CN.md

├── docs

├── _config.yml

└── index.md

├── v1.0

└── main.py

├── v2.0

└── gesture.py

└── v3.0

├── 01_image_processing_and_data_augmentation.ipynb

├── 02_munge_data.py

├── 03_Modeling_and_Inference.ipynb

├── LICENSE

├── README.md

├── modeling_data

└── aug_data

│ └── annotations.csv

├── ord.txt

├── windows_v1.8.1

├── data

│ └── predefined_classes.txt

└── labelImg.exe

└── yolov5

├── .dockerignore

├── .gitattributes

├── .github

├── ISSUE_TEMPLATE

│ ├── --bug-report.md

│ ├── --feature-request.md

│ └── -question.md

└── workflows

│ ├── ci-testing.yml

│ ├── greetings.yml

│ ├── rebase.yml

│ └── stale.yml

├── .gitignore

├── Dockerfile

├── LICENSE

├── README.md

├── config.yaml

├── detect.py

├── hubconf.py

├── models

├── __init__.py

├── common.py

├── experimental.py

├── export.py

├── hub

│ ├── yolov3-spp.yaml

│ ├── yolov5-fpn.yaml

│ └── yolov5-panet.yaml

├── yolo.py

├── yolov5l.yaml

├── yolov5m.yaml

├── yolov5s.yaml

└── yolov5x.yaml

├── requirements.txt

├── sotabench.py

├── test.py

├── train.py

├── tutorial.ipynb

├── utils

├── __init__.py

├── activations.py

├── datasets.py

├── evolve.sh

├── general.py

├── google_app_engine

│ ├── Dockerfile

│ ├── additional_requirements.txt

│ └── app.yaml

├── google_utils.py

└── torch_utils.py

├── weights

└── download_weights.sh

└── yolo_data

└── labels

├── train.cache

└── validation.cache

/.gitignore:

--------------------------------------------------------------------------------

1 | # visual studio code

2 | .vscode/

3 | .idea/

4 |

5 | # python

6 | venv/

7 | virtualenv/

8 | __pycache__

9 |

10 | # misc

11 | .DS_Store

12 |

13 | # results

14 | *.npy

15 | *.npz

16 | *.png

17 | *.PNG

18 | *.jpg

19 | *.JPG

20 | *.jpeg

21 |

22 | # notebook checkpoints

23 | .ipynb_checkpoints

24 |

25 | # path

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 | ## Hi 👋

2 |

3 | Come here, don’t you star this progect? & Forgive my pool English.

4 |

5 | Welcome to star this repo!

6 |

7 | Mid-air brush [Demo]

8 |

9 | README [EN|CN]

10 |

11 | ## Description

12 |

13 | Mid-air gesture recognition and drawing, the default gesture 1 is a brush, gesture 2 is to change the color, and gesture 5 is to clear the drawing board

14 | Display based on OpenCV.

15 |

16 |

17 | ## Change Log

18 |

19 | ### v3.0

20 |

21 | This version of the project is based on GA_Data_Science_Capstone

22 |

23 | Use Yolo_v5 to recognize gestures and index fingers for drawing. Please make your own gesture dataset and label them. Data preprocessing is in files 01 and 02.

24 | The project can be run on Raspberry Pi, use the Raspberry Pi to collect images and push them to the computer for reasoning, there is a delay.

25 |

26 | #### How to run

27 |

28 | ```sh

29 | cd v3.0

30 | pip install -r requirements.txt

31 | jupyter notebook

32 |

33 | # open and run 01_image_processing_and_data_augmentation.ipynb

34 |

35 | # run labelImg to label data 1, 2, 5, forefinger

36 |

37 | python 02_munge_data.py

38 |

39 | # train model

40 | python train.py --img 512 --batch 16 --epochs 100 --data config.yaml --cfg models/yolov5s.yaml --name yolo_example

41 | tensorboard --logdir runs/

42 |

43 | # run use pc cam

44 | python detect.py --weights weights/best.pt --img 512 --conf 0.3 --source 0

45 |

46 | # run use raspi

47 | # run on raspi

48 | sudo raspivid -o - -rot 180 -t 0 -w 640 -h 360 -fps 30|cvlc -vvv stream:///dev/stdin --sout '#standard{access=http,mux=ts,dst=:8080}' :demux=h264

49 | # run on pc

50 | python detect.py --weights runs/exp12_yolo_example/weights/best.pt --img 512 --conf 0.15 --source http://192.168.43.46:8080/

51 | ```

52 |

53 | ### v2.0

54 |

55 | Gesture recognition based on OpenCV and convex hull detection.

56 | Skin color detection + convex hull + number of contour lines (count the number of fingers).

57 |

58 | #### How to run

59 |

60 | ```sh

61 | cd v2.0

62 | python gesture.py

63 | ```

64 |

65 |

66 |

67 | ### v1.0

68 |

69 | Skin color detection + convex hull based on OpenCV.

70 |

71 |

72 | #### How to run

73 | ```sh

74 | cd v1.0

75 | python main.py

76 | ```

77 |

78 |

79 |

80 |

--------------------------------------------------------------------------------

/README_CN.md:

--------------------------------------------------------------------------------

1 | ## Hi 👋

2 |

3 | 来都来了,不点个小星星吗?

4 |

5 | Welcome to star this repo

6 |

7 | 凌空画笔 [Demo]

8 |

9 | README [EN|CN]

10 |

11 | ## Description

12 |

13 | 凌空手势识别和绘制,默认手势1是画笔,手势2是更换颜色,手势5是清空画板

14 | 显示基于OpenCV

15 |

16 |

17 | ## Change Log

18 |

19 | ### v3.0

20 |

21 | 该版本项目基于GA_Data_Science_Capstone

22 |

23 | 用Yolo_v5识别手势和食指进行绘制,请自行手势数据集并进行标注,数据预处理在01和02文件中

24 | 该项目可移植到树莓派上运行,利用树莓派收集图像,推流到电脑进行推理,有延迟

25 |

26 | #### How to run

27 |

28 | ```sh

29 | cd v3.0

30 | pip install -r requirements.txt

31 | jupyter notebook

32 |

33 | # open and run 01_image_processing_and_data_augmentation.ipynb

34 |

35 | # run labelImg to label data 1, 2, 5, forefinger

36 |

37 | python 02_munge_data.py

38 |

39 | # train model

40 | python train.py --img 512 --batch 16 --epochs 100 --data config.yaml --cfg models/yolov5s.yaml --name yolo_example

41 | tensorboard --logdir runs/

42 |

43 | # run use pc cam

44 | python detect.py --weights weights/best.pt --img 512 --conf 0.3 --source 0

45 |

46 | # run use raspi

47 | # run on raspi

48 | sudo raspivid -o - -rot 180 -t 0 -w 640 -h 360 -fps 30|cvlc -vvv stream:///dev/stdin --sout '#standard{access=http,mux=ts,dst=:8080}' :demux=h264

49 | # run on pc

50 | python detect.py --weights runs/exp12_yolo_example/weights/best.pt --img 512 --conf 0.15 --source http://192.168.43.46:8080/

51 | ```

52 |

53 | ### v2.0

54 |

55 | 基于OpenCV和凸包检测的手势识别

56 | 肤色检测+凸包+数轮廓线个数(统计手指数量)

57 |

58 | #### How to run

59 |

60 | ```sh

61 | cd v2.0

62 | python gesture.py

63 | ```

64 |

65 |

66 |

67 | ### v1.0

68 |

69 | 基于OpenCV的肤色检测+凸包

70 |

71 |

72 | #### How to run

73 | ```sh

74 | cd v1.0

75 | python main.py

76 | ```

77 |

78 |

79 |

80 |

--------------------------------------------------------------------------------

/docs/_config.yml:

--------------------------------------------------------------------------------

1 | theme: jekyll-theme-cayman

--------------------------------------------------------------------------------

/docs/index.md:

--------------------------------------------------------------------------------

1 | ## mid-air-draw

2 |

3 | mid-air-draw[Demo]

4 |

5 | Welcome to star this repo

6 |

7 | ### v1.0

8 | 肤色检测+凸包

9 |

10 | ```sh

11 | cd v1.0

12 | python main.py

13 | ```

14 |

15 | ### v2.0

16 | 肤色检测+凸包+数轮廓线个数(统计手指数量)

17 |

18 | #### How to run

19 |

20 | ```sh

21 | cd v2.0

22 | python gesture.py

23 | ```

24 |

25 |

26 | ### v3.0

27 |

28 | ```sh

29 | cd v3.0

30 | pip install -r requirements.txt

31 | jupyter notebook

32 |

33 | # open and run 01_image_processing_and_data_augmentation.ipynb

34 |

35 | # run labelImg to label data 1, 2, 5, forefinger

36 |

37 | python 02_munge_data.py

38 |

39 | # train model

40 | python train.py --img 512 --batch 16 --epochs 100 --data config.yaml --cfg models/yolov5s.yaml --name yolo_example

41 | tensorboard --logdir runs/

42 |

43 | # run use pc cam

44 | python detect.py --weights weights/best.pt --img 512 --conf 0.3 --source 0

45 |

46 | # run use raspi

47 | # run on raspi

48 | sudo raspivid -o - -rot 180 -t 0 -w 640 -h 360 -fps 30|cvlc -vvv stream:///dev/stdin --sout '#standard{access=http,mux=ts,dst=:8080}' :demux=h264

49 | # run on pc

50 | python detect.py --weights runs/exp12_yolo_example/weights/best.pt --img 512 --conf 0.15 --source http://192.168.43.46:8080/

51 | ```

--------------------------------------------------------------------------------

/v1.0/main.py:

--------------------------------------------------------------------------------

1 | import cv2

2 | import numpy as np

3 |

4 |

5 | def main():

6 | cap = cv2.VideoCapture(0)

7 | init = 0

8 | last_point = 0

9 | font = cv2.FONT_HERSHEY_SIMPLEX # 设置字体

10 | size = 0.5 # 设置大小

11 | width, height = 300, 300 # 设置拍摄窗口大小

12 | x0, y0 = 100, 100 # 设置选取位置

13 | while cap.isOpened():

14 | ret, img = cap.read()

15 | img = cv2.flip(img, 2)

16 | roi = binaryMask(img, x0, y0, width, height)

17 | res = skinMask(roi)

18 | contours = getContours(res)

19 | if init == 0:

20 | img2 = roi.copy()

21 | img2[:, :, :] = 255

22 | init = 1

23 |

24 | print(len(contours))

25 | if len(contours) > 0:

26 | first = [x[0] for x in contours[0]]

27 | first = np.array(first[:])

28 | print(first)

29 | y_min = roi.shape[1]

30 | idx = 0

31 | for i, (x, y) in enumerate(first):

32 | if y < y_min:

33 | y_min = y

34 | idx = i

35 | print(first[idx])

36 | point = (first[idx][0], first[idx][1])

37 | cv2.circle(img2, point, 1, (255, 0, 0))

38 | if last_point != 0:

39 | cv2.line(img2, point, last_point, (255, 0, 0), 1)

40 | last_point = point

41 |

42 | # print(img2)

43 | cv2.drawContours(roi, contours, -1, (0, 255, 0), 2)

44 | cv2.imshow('capture', img)

45 | cv2.imshow('roi', roi)

46 | cv2.imshow('draw', img2)

47 | k = cv2.waitKey(10)

48 | if k == 27:

49 | break

50 |

51 |

52 | def getContours(img):

53 | kernel = np.ones((5, 5), np.uint8)

54 | closed = cv2.morphologyEx(img, cv2.MORPH_OPEN, kernel)

55 | closed = cv2.morphologyEx(closed, cv2.MORPH_CLOSE, kernel)

56 | contours, h = cv2.findContours(closed, cv2.RETR_TREE, cv2.CHAIN_APPROX_SIMPLE)

57 | vaildContours = []

58 | for cont in contours:

59 | if cv2.contourArea(cont) > 9000:

60 | # x,y,w,h = cv2.boundingRect(cont)

61 | # if h/w >0.75:

62 | # filter face failed

63 | vaildContours.append(cv2.convexHull(cont))

64 | # print(cv2.convexHull(cont))

65 | # rect = cv2.minAreaRect(cont)

66 | # box = cv2.cv.BoxPoint(rect)

67 | # vaildContours.append(np.int0(box))

68 | return vaildContours

69 |

70 |

71 | def binaryMask(frame, x0, y0, width, height):

72 | cv2.rectangle(frame, (x0, y0), (x0 + width, y0 + height), (0, 255, 0)) # 画出截取的手势框图

73 | roi = frame[y0:y0 + height, x0:x0 + width] # 获取手势框图

74 | return roi

75 |

76 |

77 | def HSVBin(img):

78 | hsv = cv2.cvtColor(img, cv2.COLOR_RGB2HSV)

79 |

80 | lower_skin = np.array([100, 50, 0])

81 | upper_skin = np.array([125, 255, 255])

82 |

83 | mask = cv2.inRange(hsv, lower_skin, upper_skin)

84 | # res = cv2.bitwise_and(img,img,mask=mask)

85 | return mask

86 |

87 |

88 | def skinMask1(roi):

89 | rgb = cv2.cvtColor(roi, cv2.COLOR_BGR2RGB) # 转换到RGB空间

90 | (R, G, B) = cv2.split(rgb) # 获取图像每个像素点的RGB的值,即将一个二维矩阵拆成三个二维矩阵

91 | skin = np.zeros(R.shape, dtype=np.uint8) # 掩膜

92 | (x, y) = R.shape # 获取图像的像素点的坐标范围

93 | for i in range(0, x):

94 | for j in range(0, y):

95 | # 判断条件,不在肤色范围内则将掩膜设为黑色,即255

96 | if (abs(R[i][j] - G[i][j]) > 15) and (R[i][j] > G[i][j]) and (R[i][j] > B[i][j]):

97 | if (R[i][j] > 95) and (G[i][j] > 40) and (B[i][j] > 20) \

98 | and (max(R[i][j], G[i][j], B[i][j]) - min(R[i][j], G[i][j], B[i][j]) > 15):

99 | skin[i][j] = 255

100 | elif (R[i][j] > 220) and (G[i][j] > 210) and (B[i][j] > 170):

101 | skin[i][j] = 255

102 | # res = cv2.bitwise_and(roi, roi, mask=skin) # 图像与运算

103 | return skin

104 |

105 |

106 | def skinMask2(roi):

107 | low = np.array([0, 48, 50]) # 最低阈值

108 | high = np.array([20, 255, 255]) # 最高阈值

109 | hsv = cv2.cvtColor(roi, cv2.COLOR_BGR2HSV) # 转换到HSV空间

110 | mask = cv2.inRange(hsv, low, high) # 掩膜,不在范围内的设为255

111 | # res = cv2.bitwise_and(roi, roi, mask=mask) # 图像与运算

112 | return mask

113 |

114 |

115 | def skinMask3(roi):

116 | skinCrCbHist = np.zeros((256, 256), dtype=np.uint8)

117 | cv2.ellipse(skinCrCbHist, (113, 155), (23, 25), 43, 0, 360, (255, 255, 255), -1) # 绘制椭圆弧线

118 | YCrCb = cv2.cvtColor(roi, cv2.COLOR_BGR2YCR_CB) # 转换至YCrCb空间

119 | (y, Cr, Cb) = cv2.split(YCrCb) # 拆分出Y,Cr,Cb值

120 | skin = np.zeros(Cr.shape, dtype=np.uint8) # 掩膜

121 | (x, y) = Cr.shape

122 | for i in range(0, x):

123 | for j in range(0, y):

124 | if skinCrCbHist[Cr[i][j], Cb[i][j]] > 0: # 若不在椭圆区间中

125 | skin[i][j] = 255

126 | # res = cv2.bitwise_and(roi, roi, mask=skin)

127 | return skin

128 |

129 |

130 | def skinMask4(roi):

131 | YCrCb = cv2.cvtColor(roi, cv2.COLOR_BGR2YCR_CB) # 转换至YCrCb空间

132 | (y, cr, cb) = cv2.split(YCrCb) # 拆分出Y,Cr,Cb值

133 | cr1 = cv2.GaussianBlur(cr, (5, 5), 0)

134 | _, skin = cv2.threshold(cr1, 0, 255, cv2.THRESH_BINARY + cv2.THRESH_OTSU) # Ostu处理

135 | # res = cv2.bitwise_and(roi, roi, mask=skin)

136 | return skin

137 |

138 |

139 | def skinMask5(roi):

140 | YCrCb = cv2.cvtColor(roi, cv2.COLOR_BGR2YCR_CB) # 转换至YCrCb空间

141 | (y, cr, cb) = cv2.split(YCrCb) # 拆分出Y,Cr,Cb值

142 | skin = np.zeros(cr.shape, dtype=np.uint8)

143 | (x, y) = cr.shape

144 | for i in range(0, x):

145 | for j in range(0, y):

146 | # 每个像素点进行判断

147 | if (cr[i][j] > 130) and (cr[i][j] < 175) and (cb[i][j] > 77) and (cb[i][j] < 127):

148 | skin[i][j] = 255

149 | # res = cv2.bitwise_and(roi, roi, mask=skin)

150 | return skin

151 |

152 |

153 | def skinMask(roi):

154 | return skinMask4(roi)

155 |

156 |

157 | if __name__ == '__main__':

158 | main()

159 |

--------------------------------------------------------------------------------

/v2.0/gesture.py:

--------------------------------------------------------------------------------

1 | import cv2

2 | import numpy as np

3 | import copy

4 | import math

5 |

6 | # from appscript import app

7 |

8 | # Environment:

9 | # hardware:Raspberry Pi 4B

10 | # OS : Raspbian GNU/Linux 10 (buster)

11 | # python: 3.7.3

12 | # opencv: 4.2.0

13 |

14 | # parameters

15 | cap_region_x_begin = 0.6 # start point/total width

16 | cap_region_y_end = 0.6 # start point/total width

17 | threshold = 60 # BINARY threshold

18 | blurValue = 41 # GaussianBlur parameter

19 | bgSubThreshold = 50

20 | learningRate = 0

21 |

22 | # variables

23 | isBgCaptured = 0 # bool, whether the background captured

24 | triggerSwitch = False # if true, keyborad simulator works

25 |

26 |

27 | def skinMask1(roi):

28 | rgb = cv2.cvtColor(roi, cv2.COLOR_BGR2RGB) # 转换到RGB空间

29 | (R, G, B) = cv2.split(rgb) # 获取图像每个像素点的RGB的值,即将一个二维矩阵拆成三个二维矩阵

30 | skin = np.zeros(R.shape, dtype=np.uint8) # 掩膜

31 | (x, y) = R.shape # 获取图像的像素点的坐标范围

32 | for i in range(0, x):

33 | for j in range(0, y):

34 | # 判断条件,不在肤色范围内则将掩膜设为黑色,即255

35 | if (abs(R[i][j] - G[i][j]) > 15) and (R[i][j] > G[i][j]) and (R[i][j] > B[i][j]):

36 | if (R[i][j] > 95) and (G[i][j] > 40) and (B[i][j] > 20) \

37 | and (max(R[i][j], G[i][j], B[i][j]) - min(R[i][j], G[i][j], B[i][j]) > 15):

38 | skin[i][j] = 255

39 | elif (R[i][j] > 220) and (G[i][j] > 210) and (B[i][j] > 170):

40 | skin[i][j] = 255

41 | # res = cv2.bitwise_and(roi, roi, mask=skin) # 图像与运算

42 | return skin

43 |

44 |

45 | def skinMask2(roi):

46 | low = np.array([0, 48, 50]) # 最低阈值

47 | high = np.array([20, 255, 255]) # 最高阈值

48 | hsv = cv2.cvtColor(roi, cv2.COLOR_BGR2HSV) # 转换到HSV空间

49 | mask = cv2.inRange(hsv, low, high) # 掩膜,不在范围内的设为255

50 | # res = cv2.bitwise_and(roi, roi, mask=mask) # 图像与运算

51 | return mask

52 |

53 |

54 | def skinMask3(roi):

55 | skinCrCbHist = np.zeros((256, 256), dtype=np.uint8)

56 | cv2.ellipse(skinCrCbHist, (113, 155), (23, 25), 43, 0, 360, (255, 255, 255), -1) # 绘制椭圆弧线

57 | YCrCb = cv2.cvtColor(roi, cv2.COLOR_BGR2YCR_CB) # 转换至YCrCb空间

58 | (y, Cr, Cb) = cv2.split(YCrCb) # 拆分出Y,Cr,Cb值

59 | skin = np.zeros(Cr.shape, dtype=np.uint8) # 掩膜

60 | (x, y) = Cr.shape

61 | for i in range(0, x):

62 | for j in range(0, y):

63 | if skinCrCbHist[Cr[i][j], Cb[i][j]] > 0: # 若不在椭圆区间中

64 | skin[i][j] = 255

65 | # res = cv2.bitwise_and(roi, roi, mask=skin)

66 | return skin

67 |

68 |

69 | def skinMask4(roi):

70 | YCrCb = cv2.cvtColor(roi, cv2.COLOR_BGR2YCR_CB) # 转换至YCrCb空间

71 | (y, cr, cb) = cv2.split(YCrCb) # 拆分出Y,Cr,Cb值

72 | cr1 = cv2.GaussianBlur(cr, (5, 5), 0)

73 | _, skin = cv2.threshold(cr1, 0, 255, cv2.THRESH_BINARY + cv2.THRESH_OTSU) # Ostu处理

74 | # res = cv2.bitwise_and(roi, roi, mask=skin)

75 | return skin

76 |

77 |

78 | def skinMask5(roi):

79 | YCrCb = cv2.cvtColor(roi, cv2.COLOR_BGR2YCR_CB) # 转换至YCrCb空间

80 | (y, cr, cb) = cv2.split(YCrCb) # 拆分出Y,Cr,Cb值

81 | skin = np.zeros(cr.shape, dtype=np.uint8)

82 | (x, y) = cr.shape

83 | for i in range(0, x):

84 | for j in range(0, y):

85 | # 每个像素点进行判断

86 | if (cr[i][j] > 130) and (cr[i][j] < 175) and (cb[i][j] > 77) and (cb[i][j] < 127):

87 | skin[i][j] = 255

88 | # res = cv2.bitwise_and(roi, roi, mask=skin)

89 | return skin

90 |

91 |

92 | def skinMask(roi):

93 | return skinMask4(roi)

94 |

95 |

96 | def dis(p1, p2):

97 | (x1, y1) = p1

98 | (x2, y2) = p2

99 | return np.sqrt((x1 - x2) ** 2 + (y1 - y2) ** 2)

100 |

101 |

102 | def printThreshold(thr):

103 | print("! Changed threshold to " + str(thr))

104 |

105 |

106 | def removeBG(frame):

107 | """

108 | fgmask = bgModel.apply(frame, learningRate=learningRate)

109 | # kernel = cv2.getStructuringElement(cv2.MORPH_ELLIPSE, (3, 3))

110 | # res = cv2.morphologyEx(fgmask, cv2.MORPH_OPEN, kernel)

111 |

112 | kernel = np.ones((3, 3), np.uint8)

113 | fgmask = cv2.erode(fgmask, kernel, iterations=1)

114 | """

115 | res = cv2.bitwise_and(frame, frame, mask=skinMask(frame))

116 | return res

117 |

118 |

119 | def calculateFingers(res, drawing): # -> finished bool, cnt: finger count

120 | # convexity defect

121 | hull = cv2.convexHull(res, returnPoints=False)

122 | if len(hull) > 3:

123 | defects = cv2.convexityDefects(res, hull)

124 | if type(defects) != type(None): # avoid crashing. (BUG not found)

125 |

126 | cnt = 0

127 | for i in range(defects.shape[0]): # calculate the angle

128 | s, e, f, d = defects[i][0]

129 | start = tuple(res[s][0])

130 | end = tuple(res[e][0])

131 | far = tuple(res[f][0])

132 | a = math.sqrt((end[0] - start[0]) ** 2 + (end[1] - start[1]) ** 2)

133 | b = math.sqrt((far[0] - start[0]) ** 2 + (far[1] - start[1]) ** 2)

134 | c = math.sqrt((end[0] - far[0]) ** 2 + (end[1] - far[1]) ** 2)

135 | angle = math.acos((b ** 2 + c ** 2 - a ** 2) / (2 * b * c)) # cosine theorem

136 | if angle <= math.pi / 2: # angle less than 90 degree, treat as fingers

137 | cnt += 1

138 | cv2.line(drawing, far, start, [211, 200, 200], 2)

139 | cv2.line(drawing, far, end, [211, 200, 200], 2)

140 | cv2.circle(drawing, far, 8, [211, 84, 0], -1)

141 | return True, cnt

142 | return False, 0

143 |

144 |

145 | # Camera

146 | camera = cv2.VideoCapture(0)

147 | # rt = camera.get(10)

148 | # print(rt)

149 | camera.set(10, 150)

150 | cv2.namedWindow('trackbar')

151 | cv2.createTrackbar('trh1', 'trackbar', threshold, 100, printThreshold)

152 |

153 | last_point = 0

154 | init = 0

155 |

156 | while camera.isOpened():

157 | ret, frame = camera.read()

158 | threshold = cv2.getTrackbarPos('trh1', 'trackbar')

159 | frame = cv2.bilateralFilter(frame, 5, 50, 100) # smoothing filter

160 | frame = cv2.flip(frame, 1) # flip the frame horizontally

161 | cv2.rectangle(frame, (int(cap_region_x_begin * frame.shape[1]), 0),

162 | (frame.shape[1], int(cap_region_y_end * frame.shape[0])), (255, 0, 0), 2)

163 | cv2.imshow('original', frame)

164 | print(frame.shape)

165 |

166 | # Main operation

167 | if isBgCaptured == 1: # this part wont run until background captured

168 | img = removeBG(frame)

169 | img = img[0:int(cap_region_y_end * frame.shape[0]),

170 | int(cap_region_x_begin * frame.shape[1]):frame.shape[1]] # clip the ROI

171 | cv2.imshow('mask', img)

172 | if init == 0:

173 | img2 = img.copy()

174 | img2[:, :] = 255

175 | init = 1

176 | # convert the image into binary image

177 | gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)

178 | blur = cv2.GaussianBlur(gray, (blurValue, blurValue), 0)

179 | # cv2.imshow('blur', blur)

180 | ret, thresh = cv2.threshold(blur, threshold, 255, cv2.THRESH_BINARY)

181 | # cv2.imshow('ori', thresh)

182 |

183 | # get the coutours

184 | thresh1 = copy.deepcopy(thresh)

185 | contours, hierarchy = cv2.findContours(thresh1, cv2.RETR_TREE, cv2.CHAIN_APPROX_SIMPLE)

186 | length = len(contours)

187 | maxArea = -1

188 | drawing = np.zeros(img.shape, np.uint8)

189 | if length > 0:

190 | for i in range(length): # find the biggest contour (according to area)

191 | temp = contours[i]

192 | area = cv2.contourArea(temp)

193 | if area > maxArea:

194 | maxArea = area

195 | ci = i

196 |

197 | res = contours[ci]

198 |

199 | # print(last_point)

200 | # print(res)

201 | hull = cv2.convexHull(res)

202 | drawing = np.zeros(img.shape, np.uint8)

203 | # cv2.drawContours(drawing, [], 0, (0, 255, 0), 2)

204 | cv2.drawContours(drawing, [res], 0, (0, 255, 0), 2)

205 | cv2.drawContours(drawing, [hull], 0, (0, 0, 255), 3)

206 |

207 | isFinishCal, cnt = calculateFingers(res, drawing)

208 | if cnt > 2:

209 | img2[:, :] = 255

210 | # print(cnt)

211 | if triggerSwitch is True:

212 | # if isFinishCal is True and cnt <= 2:

213 | if isFinishCal is True:

214 | print(cnt)

215 | # app('System Events').keystroke(' ') # simulate pressing blank space

216 | if cnt <= 2:

217 | first = [x[0] for x in contours[ci]]

218 | first = np.array(first[:])

219 | # print(first)

220 | y_min = frame.shape[1]

221 | idx = 0

222 | for i, (x, y) in enumerate(first):

223 | if y < y_min:

224 | y_min = y

225 | idx = i

226 | # print(first[idx])

227 | point = (first[idx][0], first[idx][1])

228 | cv2.circle(img2, point, 3, (255, 0, 0))

229 | if last_point != 0:

230 | # print('????')

231 | if dis(last_point, point) < 30:

232 | cv2.line(img2, point, last_point, (255, 0, 0), 3)

233 | last_point = point

234 | '''

235 | if cnt > 1:

236 | first = [x[0] for x in contours[ci]]

237 | else:

238 | first = [x[0] for x in contours[0]]

239 | first = [x[0] for x in contours[ci]]

240 | first = np.array(first[:])

241 | # print(first)

242 | y_min = frame.shape[1]

243 | idx = 0

244 | for i, (x, y) in enumerate(first):

245 | if y < y_min:

246 | y_min = y

247 | idx = i

248 | # print(first[idx])

249 | point = (first[idx][0], first[idx][1])

250 | cv2.circle(img2, point, 3, (255, 255, 255))

251 | if last_point != 0:

252 | # print('????')

253 | cv2.line(img2, point, last_point, (255, 255, 255), 3)

254 | last_point = point

255 | '''

256 |

257 | cv2.imshow('output', drawing)

258 | cv2.imshow('draw', img2)

259 |

260 | # Keyboard OP

261 | k = cv2.waitKey(10)

262 | if k == 27: # press ESC to exit

263 | camera.release()

264 | cv2.destroyAllWindows()

265 | break

266 | elif k == ord('b'): # press 'b' to capture the background

267 | bgModel = cv2.createBackgroundSubtractorMOG2(0, bgSubThreshold)

268 | isBgCaptured = 1

269 | print('!!!Background Captured!!!')

270 | elif k == ord('r'): # press 'r' to reset the background

271 | bgModel = None

272 | triggerSwitch = False

273 | isBgCaptured = 0

274 | print('!!!Reset BackGround!!!')

275 | elif k == ord('n'):

276 | triggerSwitch = True

277 | print('!!!Trigger On!!!')

278 | elif k == ord('c'):

279 | img2[:, :] = 255

280 | print('!!!img2 Clear!!!')

281 |

--------------------------------------------------------------------------------

/v3.0/02_munge_data.py:

--------------------------------------------------------------------------------

1 | """

2 | The purpose of this python script is to create an unbiased training and validation set.

3 | The split data will be run in the terminal calling a function (process_data) that will join the

4 | annotations.csv file with new .txt files for bounding box class and coordinates for each image.

5 | """

6 | # Credit to Abhishek Thakur, as this is a modified version of this notebook.

7 | # Source to video, where he goes over his code: https://www.youtube.com/watch?v=NU9Xr_NYslo&t=1392s

8 |

9 | # Import libraries

10 | import os

11 | import ast

12 | import pandas as pd

13 | import numpy as np

14 | from sklearn import model_selection

15 | from tqdm import tqdm

16 | import shutil

17 |

18 | # The DATA_PATH will be where your augmented images and annotations.csv files are.

19 | # The OUTPUT_PATH is where the train and validation images and labels will go to.

20 | DATA_PATH = './modeling_data/aug_data/'

21 | OUTPUT_PATH = './yolov5/yolo_data/'

22 |

23 |

24 | # Function for taking each row in the annotations file

25 | def process_data(data, data_type='train'):

26 | for _, row in tqdm(data.iterrows(), total=len(data)):

27 | image_name = row['image_id'][:-4] # removing file extension .jpeg

28 | bounding_boxes = row['bboxes']

29 | yolo_data = []

30 | for bbox in bounding_boxes:

31 | category = bbox[0]

32 | x_center = bbox[1]

33 | y_center = bbox[2]

34 | w = bbox[3]

35 | h = bbox[4]

36 | yolo_data.append([category, x_center, y_center, w, h]) # yolo formated labels

37 | yolo_data = np.array(yolo_data)

38 |

39 | np.savetxt(

40 | # Outputting .txt file to appropriate train/validation folders

41 | os.path.join(OUTPUT_PATH, f"labels/{data_type}/{image_name}.txt"),

42 | yolo_data,

43 | fmt=["%d", "%f", "%f", "%f", "%f"]

44 | )

45 | shutil.copyfile(

46 | # Copying the augmented images to the appropriate train/validation folders

47 | os.path.join(DATA_PATH, f"images/{image_name}.jpg"),

48 | os.path.join(OUTPUT_PATH, f"images/{data_type}/{image_name}.jpg"),

49 | )

50 |

51 |

52 | if __name__ == '__main__':

53 | df = pd.read_csv(os.path.join(DATA_PATH, 'annotations.csv'))

54 | df.bbox = df.bbox.apply(ast.literal_eval) # Convert string to list for bounding boxes

55 | df = df.groupby('image_id')['bbox'].apply(list).reset_index(name='bboxes')

56 |

57 | # splitting data to a 90/10 split

58 | df_train, df_valid = model_selection.train_test_split(

59 | df,

60 | test_size=0.1,

61 | random_state=42,

62 | shuffle=True

63 | )

64 |

65 | df_train = df_train.reset_index(drop=True)

66 | df_valid = df_valid.reset_index(drop=True)

67 |

68 | # Run function to have our data ready for modeling in 03_Modeling_and_Inference.ipynb

69 | process_data(df_train, data_type='train')

70 | process_data(df_valid, data_type='validation')

71 |

--------------------------------------------------------------------------------

/v3.0/LICENSE:

--------------------------------------------------------------------------------

1 | MIT License

2 |

3 | Copyright (c) 2020 David Lee

4 |

5 | Permission is hereby granted, free of charge, to any person obtaining a copy

6 | of this software and associated documentation files (the "Software"), to deal

7 | in the Software without restriction, including without limitation the rights

8 | to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

9 | copies of the Software, and to permit persons to whom the Software is

10 | furnished to do so, subject to the following conditions:

11 |

12 | The above copyright notice and this permission notice shall be included in all

13 | copies or substantial portions of the Software.

14 |

15 | THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

16 | IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

17 | FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

18 | AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

19 | LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

20 | OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

21 | SOFTWARE.

22 |

--------------------------------------------------------------------------------

/v3.0/README.md:

--------------------------------------------------------------------------------

1 | ### GA_Data_Science_Capstone_Project

2 | # **Interactive ABC's with American Sign Language**

3 | ### A step in Increasing Accessability for the Deaf Community with Computer Vision utilizing Yolov5.

4 |

5 |

6 |

7 | # **Executive Summary**

8 | Utilizing Yolov5, a custom computer vision model was created on the American Sign Language alphabet. The project was promoted on social platforms to diversify the dataset. A total of 721 images were collected in the span of two weeks using DropBox request forms. Manual labels were created of the original images which were then resized, and organized for preprocessing. Several carefully selected augmentations were made to the images to compensate for the small dataset count. A total of 18,000 images were then used for modeling. Transfer learning was incorporated with Yolov5m weights and training completed on 300 epochs with an image size of 1024 in 163 hours. A mean average precision score of 0.8527 was achieved. Inference tests were successfully performed with areas identifying the models strengths and weaknesses for future development.

9 |

10 | All operations were performed on my local Linux machine with a CUDA/cudNN setup using Pytorch.

11 |

12 |

13 | # **Table of Contents**

14 |

15 | - [Executive Summary](#executivesummary)

16 | - [Table of Contents](#contents)

17 | - [Data Colelction Method](#data)

18 | - [Preprocessing](#preprocessing)

19 | - [Modeling](#modeling)

20 | - [Inference](#inference)

21 | - [Conclusions](#conclusions)

22 | - [Next Steps](#nextsteps)

23 | - [Citations](#cite)

24 | - [Special Thanks](#thanks)

25 |

26 |

27 |

28 |

29 | - [Back to Contents](#contents)

30 | # **Problem Statement:**

31 | Have you ever considered how easy it is to perform simple communication tasks such as ordering food at a drive thru, discussing financial information with a banker, telling a physician your symptoms at a hospital, or even negotiating your wages from your employer? What if there was a rule where you couldn’t speak and were only able to use your hands for each of these circumstances? The deaf community cannot do what most of the population take for granted and are often placed in degrading situations due to these challenges they face every day. Access to qualified interpretation services isn’t feasible in most cases leaving many in the deaf community with underemployment, social isolation, and public health challenges. To give these members of our community a greater voice, I have attempted to answer this question:

32 |

33 |

34 | **Can computer vision bridge the gap for the deaf and hard of hearing by learning American Sign Language?**

35 |

36 | In order to do this, a Yolov5 model was trained on the ASL alphabet. If successful, it may mark a step in the right direction for both greater accessibility and educational resources.

37 |

38 |

39 | - [Back to Contents](#contents)

40 | # **Data Collection Method:**

41 | The decision was made to create an original dataset for a few reasons. The first was to mirror the intended environment on a mobile device or webcam. These often have resolutions of 720 or 1080p. Several existing datasets have a low resolution and many do not include the letters “j” and “z” as they require movements.

42 |

43 | A letter request form was created with an introduction to my project along with instruction on how to submit voluntary sign language images with dropbox file request forms. This was distributed on social platforms to bring awareness, and to collect data.

44 |

45 |

46 | #### Dropbox request form used: (Deadline Sep. 27th, 2020)

47 | https://docs.google.com/document/d/1ChZPPr1dsHtgNqQ55a0FMngJj8PJbGgArm8xsiNYlRQ/edit?usp=sharing

48 | [link](https://docs.google.com/document/d/1ChZPPr1dsHtgNqQ55a0FMngJj8PJbGgArm8xsiNYlRQ/edit?usp=sharing)

49 |

50 | A total of 720 images were collected:

51 |

52 | Here is the distributions of images: (Letters / Counts)

53 |

54 | A - 29

55 | B - 25

56 | C - 25

57 | D - 28

58 | E - 25

59 | F - 30

60 | G - 30

61 | H - 29

62 | I - 30

63 | J - 38

64 | K - 27

65 | L - 28

66 | M - 28

67 | N - 27

68 | O - 28

69 | P - 25

70 | Q - 26

71 | R - 25

72 | S - 30

73 | T - 25

74 | U - 25

75 | V - 28

76 | W - 27

77 | X - 26

78 | Y - 26

79 | Z - 30

80 |

81 |

82 | - [Back to Contents](#contents)

83 | # **Preproccessing**

84 | ### Labeling the images

85 | Manual bounding box labels were created on the original images using the labelImg software.

86 |

87 | Each of the pictures and bounding box coordinates were then passed through an albumentations pipeline that resized the images to 1024 x 1024 pixel squares and added probabilities of different transformations.

88 |

89 | These transformations included specified degrees of rotations, shifts in the image locations, blurs, horizontal flips, random erase, and a variety of other color transformations.

90 |

91 |

92 |

93 |

94 | 25 augmented images were created for each image resulting in an image set of 18,000 used for modeling.

95 |

96 |

97 | - [Back to Contents](#contents)

98 | # **Modeling: Yolov5**

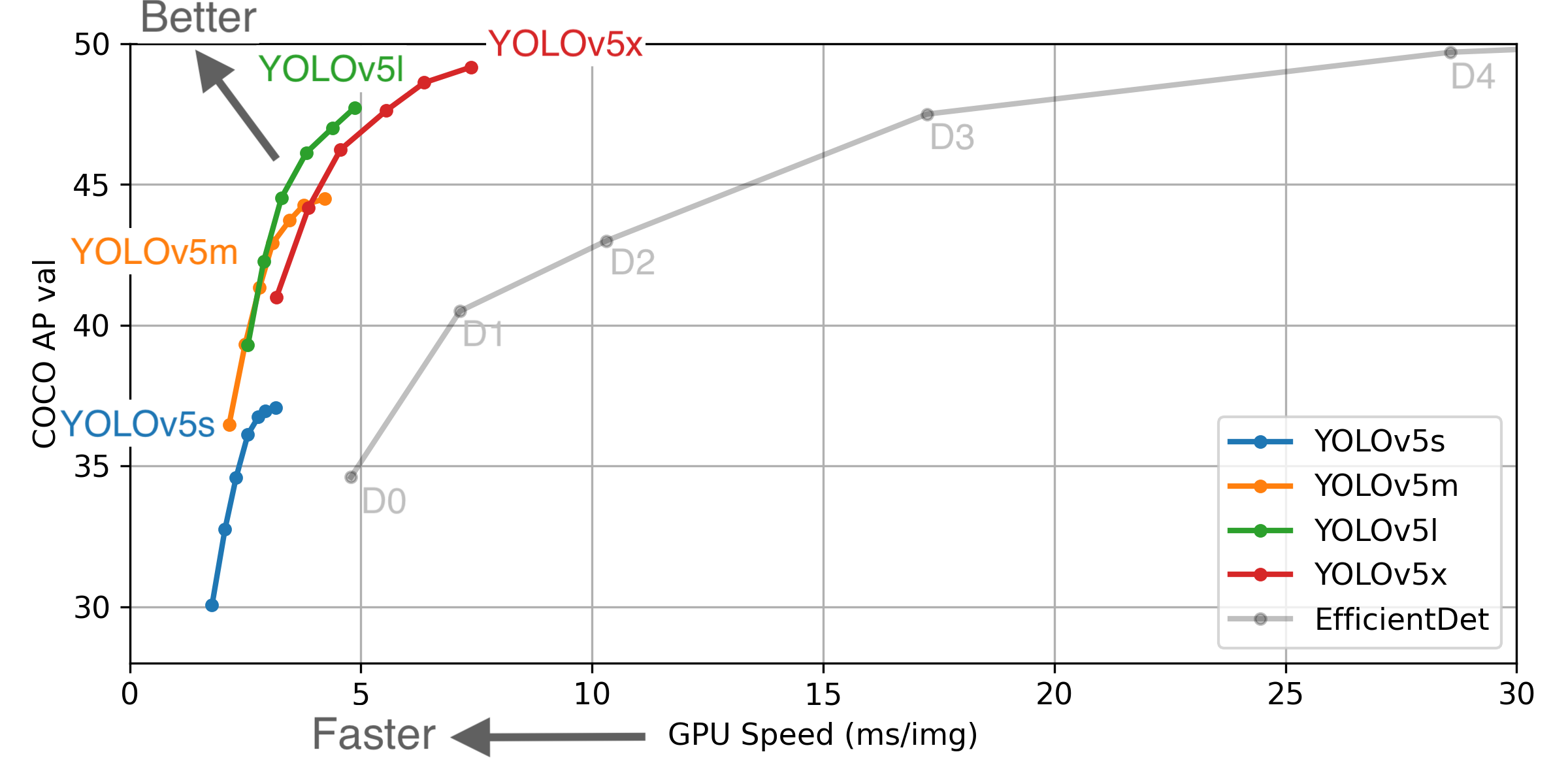

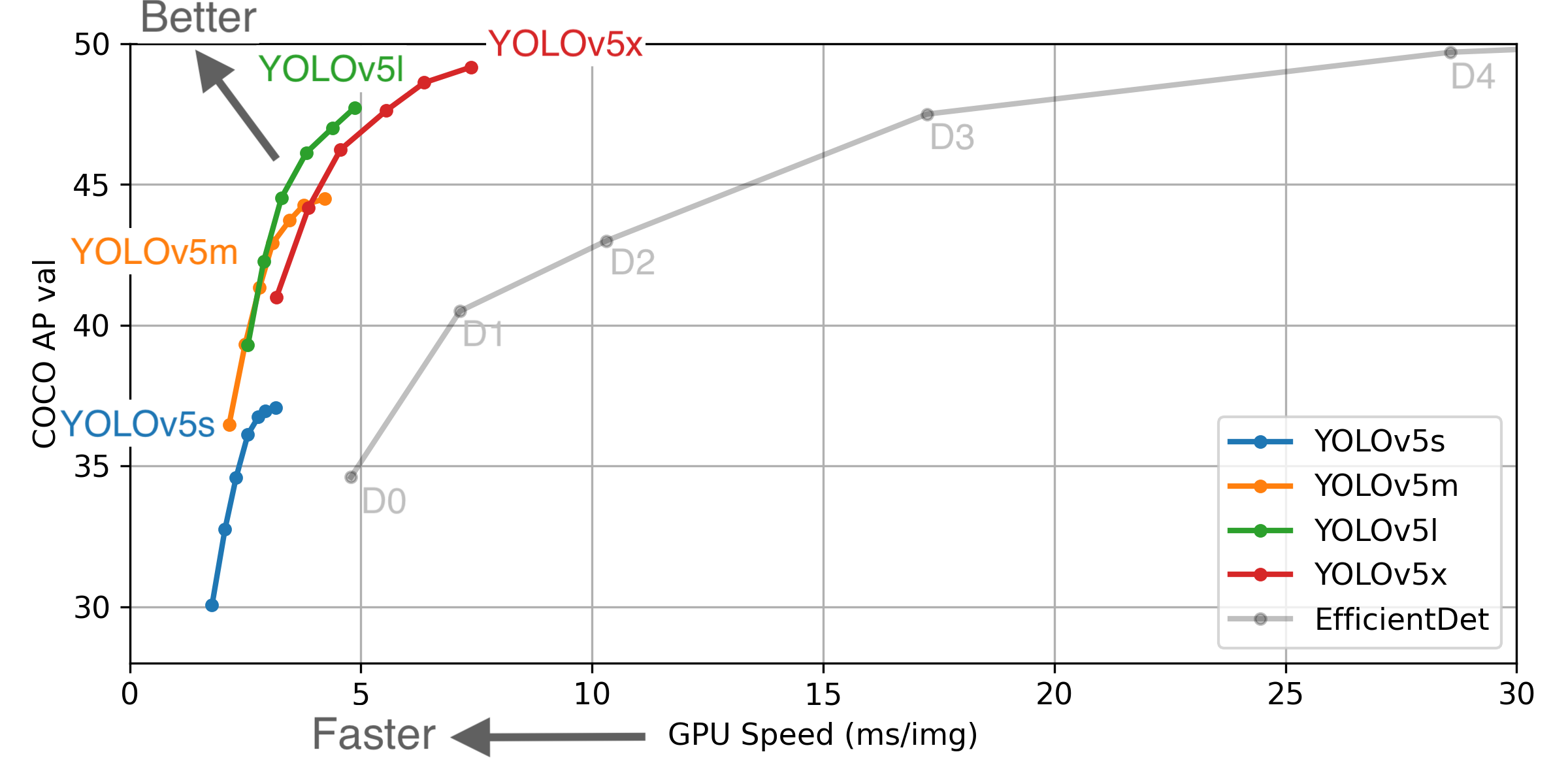

99 | To address acceptable inference speeds and size, Yolov5 was chosen for modeling.

100 |

101 | This was released in June 10th of this year, and is still in active development. Although Yolov5 by Ultralytics is not created by the original Yolo authors, Yolo v5 is said to be faster and more lightweight, with accuracy on par with Yolo v4 which is widely considered as the fastest and most accurate real-time object detection model.

102 |

103 |

104 |

105 | Yolo was designed as a convolutional neural network for real time object detection. Its more complex than basic classification as object detection needs to identify the objects and locate where it is on the image. This single stage object detector, has 3 main components:

106 |

107 | The backbone basically extracts important features of an image, the neck mainly uses feature pyramids which help in generalizing the object scaling for better performance on unseen data. The model head does the actual detection part where anchor boxes are applied on features that generate output vectors.

108 | These vectors include the class probabilities, the objectness scores, and bounding boxes.

109 |

110 |

111 | The model used was yolov5m with transfer learning on pretrained weights.

112 |

113 | #### **Model Training**

114 | Epochs: 300

115 | Batch Size: 8

116 | Image Size: 1024 x 1024

117 | Weights: yolov5m.pt

118 |

119 |

120 |

121 | mAP@.5: 98.17%

122 |

123 | **mAP@.5:.95: 85.27%**

124 |

125 | Training batch example:

126 |

127 |

128 | Test batch predictions example:

129 |

130 |

131 |

132 | - [Back to Contents](#contents)

133 | # **Inference**

134 | ### **Images**

135 | I had reserved a test set of my son’s attempts at each letter that was not included in any of the training and validation sets. In fact no pictures of hands from children were used for training the model. Ideally several more images would help in showcasing how well our model performs, but this a start.

136 |

137 |

138 | Out of 26 letters, 18 were correctly predicted.

139 |

140 | Letters that did not receive a prediction (G, H, J, and Z)

141 |

142 | Letters that were incorrectly predicted were:

143 | “D” predicted as “F”

144 | “E” predicted as “T”

145 | “P“ predicted as “Q”

146 | “R” predicted as “U”

147 |

148 |

149 | ## **Video Findings:**

150 |

151 | ==============================================================

152 | **Left-handed:**

153 | This test shows that our image augmentation pipeline performed well as it was set to flip the images horizontally at a 50% probability.

154 |

155 |

156 | ==============================================================

157 | **Child's hand:**

158 | The test on my son's hand was performed, and the model still performs well here.

159 |

160 |

161 | ==============================================================

162 | **Multiple letters on screen:**

163 | Simultaneous letters were also detected. Although sign language is not used like the video on the right, it shows that multiple people can be on screen and the model will be able to distinguish more than one instance of the language.

164 |

165 |

166 | ==============================================================

167 | ## **Video Limitations:**

168 | ==============================================================

169 | **Distance**

170 | There were limitations I’ve discovered in my model. The biggest one is distance. As many of the original pictures were taken from my phone on my hands, the distance of my hand to the camera was very close, negatively impacting inference at further distances.

171 |

172 |

173 |

174 | ==============================================================

175 | **New environments**

176 | These video clips of volunteers below were not included in any of the model training. Although the model picks up a lot of the letters, the prediction confidence levels are lower, and there are more misclassifications present.

177 |

178 |

179 |

180 | I've verified this with a video of my own.

181 |

182 |

183 | **Even though the original image set was on only 720 pictures, the implications of the results displayed bring us to an exciting conclusion.**

184 |

185 | ==============================================================

186 |

187 |

188 | - [Back to Contents](#contents)

189 | # **Conclusions**

190 | Computer vision can and should be used in marking a step in greater accessibility and educational resources for our deaf and hard of hearing communities!

191 |

192 | - Even though the original image set was on only 720 pictures, the implications of the results displayed here is promising

193 | - Gathering more image data from a variety of sources would help our model inference in different distances and environments better.

194 | - Even letters with movements are able to be recognized through computer vision.

195 |

196 |

197 |

198 | - [Back to Contents](#contents)

199 | # **Next Steps**

200 | I believe this project is aligned with the vision of the National Association of the Deaf in bringing better accessibility and education for this underrepresented community. If I am able to bring awareness to the project, and partner with an organization like the NAD, I will be able to gather better data on the people that speak this language natively to push the project further.

201 |

202 | The technology is still very new, and the model I have trained for this presentation was primarily used to find out if it would work. I’m happy with my initial results and I’ve already trained a smaller model that I’ll be testing for mobile deployment in the future.

203 |

204 | I believe computer vision can help give our deaf and hard of hearing neighbors a voice with the right support and project awareness.

205 |

206 | - [Back to Contents](#contents)

207 |

208 | # **Citations**

209 | Python Version: 3.8

210 | Packages: pandas, numpy, matplotlib, sklearn, opencv, os, ast, albumentations, tqdm, torch, IPython, PIL, shutil

211 |

212 | ### Resources:

213 |

214 | Yolov5 github

215 | https://github.com/ultralytics/yolov5

216 |

217 | Yolov5 requirements

218 | https://github.com/ultralytics/yolov5/blob/master/requirements.txt

219 |

220 | Cudnn install guide:

221 | https://docs.nvidia.com/deeplearning/cudnn/install-guide/index.html

222 |

223 | Install Opencv:

224 | https://www.codegrepper.com/code-examples/python/how+to+install+opencv+in+python+3.8

225 |

226 | Roboflow augmentation process:

227 | https://docs.roboflow.com/image-transformations/image-augmentation

228 |

229 | Heavily utilized research paper on image augmentations:

230 | https://journalofbigdata.springeropen.com/articles/10.1186/s40537-019-0197-0#Sec3

231 |

232 | Pillow library:

233 | https://pillow.readthedocs.io/en/latest/handbook/index.html

234 |

235 | Labeling Software labelImg:

236 | https://github.com/tzutalin/labelImg

237 |

238 | Albumentations library

239 | https://github.com/albumentations-team/albumentations

240 |

241 | # **Special Thanks**

242 | Joseph Nelson, CEO of Roboflow.ai, for delivering a computer vision lesson to our class, and answering my questions directly.

243 |

244 | And to my volunteers:

245 | Nathan & Roxanne Seither

246 | Juhee Sung-Schenck

247 | Josh Mizraji

248 | Lydia Kajeckas

249 | Aidan Curley

250 | Chris Johnson

251 | Eric Lee

252 |

253 | And to the General Assembly DSI-720 instructors:

254 | Adi Bronshtein

255 | Patrick Wales-Dinan

256 | Kelly Slatery

257 | Noah Christiansen

258 | Jacob Ellena

259 | Bradford Smith

260 |

261 | This project would not have been possible without the time all of you invested in me. Thank you!

--------------------------------------------------------------------------------

/v3.0/ord.txt:

--------------------------------------------------------------------------------

1 | python train.py --img 512 --batch 16 --epochs 100 --data config.yaml --cfg models/yolov5s.yaml --name yolo_example

2 | tensorboard --logdir runs/

3 | python detect.py --weights weights/best.pt --img 512 --conf 0.3 --source 0

4 | python detect.py --weights runs/exp12_yolo_example/weights/best.pt --img 512 --conf 0.15 --source 0

5 | python detect.py --weights runs/exp12_yolo_example/weights/best.pt --img 512 --conf 0.15 --source rtsp://192.168.0.106:8554/

6 | python detect.py --weights runs/exp12_yolo_example/weights/best.pt --img 512 --conf 0.15 --source http://192.168.0.106:8080/

7 | python detect.py --weights runs/exp12_yolo_example/weights/best.pt --img 512 --conf 0.15 --source http://192.168.43.46:8080/

8 |

9 | sudo raspivid -o - -rot 180 -t 0 -w 640 -h 480 -fps 30|cvlc -vvv stream:///dev/stdin --sout '#standard{access=http,mux=ts,dst=:8080}' :demux=h264

10 | sudo raspivid -o - -rot 180 -t 0 -w 640 -h 360 -fps 30|cvlc -vvv stream:///dev/stdin --sout '#standard{access=http,mux=ts,dst=:8080}' :demux=h264

11 |

12 | sudo raspivid -o - -rot 180 -t 0 -w 640 -h 360 -fps 25|cvlc -vvv stream:///dev/stdin --sout '#standard access=http,mux=ts,dst=:8090}' :demux=h264

--------------------------------------------------------------------------------

/v3.0/windows_v1.8.1/data/predefined_classes.txt:

--------------------------------------------------------------------------------

1 | 1

2 | 2

3 | 5

4 | forefinger

--------------------------------------------------------------------------------

/v3.0/windows_v1.8.1/labelImg.exe:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/yyyanbj/mid-air-draw/9ce05fe981e9037d8c0151be66c0254f8f2523d5/v3.0/windows_v1.8.1/labelImg.exe

--------------------------------------------------------------------------------

/v3.0/yolov5/.dockerignore:

--------------------------------------------------------------------------------

1 | # Repo-specific DockerIgnore -------------------------------------------------------------------------------------------

2 | #.git

3 | .cache

4 | .idea

5 | runs

6 | output

7 | coco

8 | storage.googleapis.com

9 |

10 | data/samples/*

11 | **/results*.txt

12 | *.jpg

13 |

14 | # Neural Network weights -----------------------------------------------------------------------------------------------

15 | **/*.weights

16 | **/*.pt

17 | **/*.pth

18 | **/*.onnx

19 | **/*.mlmodel

20 | **/*.torchscript

21 |

22 |

23 | # Below Copied From .gitignore -----------------------------------------------------------------------------------------

24 | # Below Copied From .gitignore -----------------------------------------------------------------------------------------

25 |

26 |

27 | # GitHub Python GitIgnore ----------------------------------------------------------------------------------------------

28 | # Byte-compiled / optimized / DLL files

29 | __pycache__/

30 | *.py[cod]

31 | *$py.class

32 |

33 | # C extensions

34 | *.so

35 |

36 | # Distribution / packaging

37 | .Python

38 | env/

39 | build/

40 | develop-eggs/

41 | dist/

42 | downloads/

43 | eggs/

44 | .eggs/

45 | lib/

46 | lib64/

47 | parts/

48 | sdist/

49 | var/

50 | wheels/

51 | *.egg-info/

52 | .installed.cfg

53 | *.egg

54 |

55 | # PyInstaller

56 | # Usually these files are written by a python script from a template

57 | # before PyInstaller builds the exe, so as to inject date/other infos into it.

58 | *.manifest

59 | *.spec

60 |

61 | # Installer logs

62 | pip-log.txt

63 | pip-delete-this-directory.txt

64 |

65 | # Unit test / coverage reports

66 | htmlcov/

67 | .tox/

68 | .coverage

69 | .coverage.*

70 | .cache

71 | nosetests.xml

72 | coverage.xml

73 | *.cover

74 | .hypothesis/

75 |

76 | # Translations

77 | *.mo

78 | *.pot

79 |

80 | # Django stuff:

81 | *.log

82 | local_settings.py

83 |

84 | # Flask stuff:

85 | instance/

86 | .webassets-cache

87 |

88 | # Scrapy stuff:

89 | .scrapy

90 |

91 | # Sphinx documentation

92 | docs/_build/

93 |

94 | # PyBuilder

95 | target/

96 |

97 | # Jupyter Notebook

98 | .ipynb_checkpoints

99 |

100 | # pyenv

101 | .python-version

102 |

103 | # celery beat schedule file

104 | celerybeat-schedule

105 |

106 | # SageMath parsed files

107 | *.sage.py

108 |

109 | # dotenv

110 | .env

111 |

112 | # virtualenv

113 | .venv*

114 | venv*/

115 | ENV*/

116 |

117 | # Spyder project settings

118 | .spyderproject

119 | .spyproject

120 |

121 | # Rope project settings

122 | .ropeproject

123 |

124 | # mkdocs documentation

125 | /site

126 |

127 | # mypy

128 | .mypy_cache/

129 |

130 |

131 | # https://github.com/github/gitignore/blob/master/Global/macOS.gitignore -----------------------------------------------

132 |

133 | # General

134 | .DS_Store

135 | .AppleDouble

136 | .LSOverride

137 |

138 | # Icon must end with two \r

139 | Icon

140 | Icon?

141 |

142 | # Thumbnails

143 | ._*

144 |

145 | # Files that might appear in the root of a volume

146 | .DocumentRevisions-V100

147 | .fseventsd

148 | .Spotlight-V100

149 | .TemporaryItems

150 | .Trashes

151 | .VolumeIcon.icns

152 | .com.apple.timemachine.donotpresent

153 |

154 | # Directories potentially created on remote AFP share

155 | .AppleDB

156 | .AppleDesktop

157 | Network Trash Folder

158 | Temporary Items

159 | .apdisk

160 |

161 |

162 | # https://github.com/github/gitignore/blob/master/Global/JetBrains.gitignore

163 | # Covers JetBrains IDEs: IntelliJ, RubyMine, PhpStorm, AppCode, PyCharm, CLion, Android Studio and WebStorm

164 | # Reference: https://intellij-support.jetbrains.com/hc/en-us/articles/206544839

165 |

166 | # User-specific stuff:

167 | .idea/*

168 | .idea/**/workspace.xml

169 | .idea/**/tasks.xml

170 | .idea/dictionaries

171 | .html # Bokeh Plots

172 | .pg # TensorFlow Frozen Graphs

173 | .avi # videos

174 |

175 | # Sensitive or high-churn files:

176 | .idea/**/dataSources/

177 | .idea/**/dataSources.ids

178 | .idea/**/dataSources.local.xml

179 | .idea/**/sqlDataSources.xml

180 | .idea/**/dynamic.xml

181 | .idea/**/uiDesigner.xml

182 |

183 | # Gradle:

184 | .idea/**/gradle.xml

185 | .idea/**/libraries

186 |

187 | # CMake

188 | cmake-build-debug/

189 | cmake-build-release/

190 |

191 | # Mongo Explorer plugin:

192 | .idea/**/mongoSettings.xml

193 |

194 | ## File-based project format:

195 | *.iws

196 |

197 | ## Plugin-specific files:

198 |

199 | # IntelliJ

200 | out/

201 |

202 | # mpeltonen/sbt-idea plugin

203 | .idea_modules/

204 |

205 | # JIRA plugin

206 | atlassian-ide-plugin.xml

207 |

208 | # Cursive Clojure plugin

209 | .idea/replstate.xml

210 |

211 | # Crashlytics plugin (for Android Studio and IntelliJ)

212 | com_crashlytics_export_strings.xml

213 | crashlytics.properties

214 | crashlytics-build.properties

215 | fabric.properties

216 |

--------------------------------------------------------------------------------

/v3.0/yolov5/.gitattributes:

--------------------------------------------------------------------------------

1 | # this drop notebooks from GitHub language stats

2 | *.ipynb linguist-vendored

3 |

--------------------------------------------------------------------------------

/v3.0/yolov5/.github/ISSUE_TEMPLATE/--bug-report.md:

--------------------------------------------------------------------------------

1 | ---

2 | name: "\U0001F41BBug report"

3 | about: Create a report to help us improve

4 | title: ''

5 | labels: bug

6 | assignees: ''

7 |

8 | ---

9 |

10 | Before submitting a bug report, please be aware that your issue **must be reproducible** with all of the following, otherwise it is non-actionable, and we can not help you:

11 | - **Current repo**: run `git fetch && git status -uno` to check and `git pull` to update repo

12 | - **Common dataset**: coco.yaml or coco128.yaml

13 | - **Common environment**: Colab, Google Cloud, or Docker image. See https://github.com/ultralytics/yolov5#environments

14 |

15 | If this is a custom dataset/training question you **must include** your `train*.jpg`, `test*.jpg` and `results.png` figures, or we can not help you. You can generate these with `utils.plot_results()`.

16 |

17 |

18 | ## 🐛 Bug

19 | A clear and concise description of what the bug is.

20 |

21 |

22 | ## To Reproduce (REQUIRED)

23 |

24 | Input:

25 | ```

26 | import torch

27 |

28 | a = torch.tensor([5])

29 | c = a / 0

30 | ```

31 |

32 | Output:

33 | ```

34 | Traceback (most recent call last):

35 | File "/Users/glennjocher/opt/anaconda3/envs/env1/lib/python3.7/site-packages/IPython/core/interactiveshell.py", line 3331, in run_code

36 | exec(code_obj, self.user_global_ns, self.user_ns)

37 | File "", line 5, in

38 | c = a / 0

39 | RuntimeError: ZeroDivisionError

40 | ```

41 |

42 |

43 | ## Expected behavior

44 | A clear and concise description of what you expected to happen.

45 |

46 |

47 | ## Environment

48 | If applicable, add screenshots to help explain your problem.

49 |

50 | - OS: [e.g. Ubuntu]

51 | - GPU [e.g. 2080 Ti]

52 |

53 |

54 | ## Additional context

55 | Add any other context about the problem here.

56 |

--------------------------------------------------------------------------------

/v3.0/yolov5/.github/ISSUE_TEMPLATE/--feature-request.md:

--------------------------------------------------------------------------------

1 | ---

2 | name: "\U0001F680Feature request"

3 | about: Suggest an idea for this project

4 | title: ''

5 | labels: enhancement

6 | assignees: ''

7 |

8 | ---

9 |

10 | ## 🚀 Feature

11 |

12 |

13 | ## Motivation

14 |

15 |

16 |

17 | ## Pitch

18 |

19 |

20 |

21 | ## Alternatives

22 |

23 |

24 |

25 | ## Additional context

26 |

27 |

28 |

--------------------------------------------------------------------------------

/v3.0/yolov5/.github/ISSUE_TEMPLATE/-question.md:

--------------------------------------------------------------------------------

1 | ---

2 | name: "❓Question"

3 | about: Ask a general question

4 | title: ''

5 | labels: question

6 | assignees: ''

7 |

8 | ---

9 |

10 | ## ❔Question

11 |

12 |

13 | ## Additional context

14 |

--------------------------------------------------------------------------------

/v3.0/yolov5/.github/workflows/ci-testing.yml:

--------------------------------------------------------------------------------

1 | name: CI CPU testing

2 |

3 | on: # https://help.github.com/en/actions/reference/events-that-trigger-workflows

4 | push:

5 | pull_request:

6 | schedule:

7 | - cron: "0 0 * * *"

8 |

9 | jobs:

10 | cpu-tests:

11 |

12 | runs-on: ${{ matrix.os }}

13 | strategy:

14 | fail-fast: false

15 | matrix:

16 | os: [ubuntu-latest, macos-latest, windows-latest]

17 | python-version: [3.8]

18 | model: ['yolov5s'] # models to test

19 |

20 | # Timeout: https://stackoverflow.com/a/59076067/4521646

21 | timeout-minutes: 50

22 | steps:

23 | - uses: actions/checkout@v2

24 | - name: Set up Python ${{ matrix.python-version }}

25 | uses: actions/setup-python@v2

26 | with:

27 | python-version: ${{ matrix.python-version }}

28 |

29 | # Note: This uses an internal pip API and may not always work

30 | # https://github.com/actions/cache/blob/master/examples.md#multiple-oss-in-a-workflow

31 | - name: Get pip cache

32 | id: pip-cache

33 | run: |

34 | python -c "from pip._internal.locations import USER_CACHE_DIR; print('::set-output name=dir::' + USER_CACHE_DIR)"

35 |

36 | - name: Cache pip

37 | uses: actions/cache@v1

38 | with:

39 | path: ${{ steps.pip-cache.outputs.dir }}

40 | key: ${{ runner.os }}-${{ matrix.python-version }}-pip-${{ hashFiles('requirements.txt') }}

41 | restore-keys: |

42 | ${{ runner.os }}-${{ matrix.python-version }}-pip-

43 |

44 | - name: Install dependencies

45 | run: |

46 | python -m pip install --upgrade pip

47 | pip install -qr requirements.txt -f https://download.pytorch.org/whl/cpu/torch_stable.html

48 | pip install -q onnx

49 | python --version

50 | pip --version

51 | pip list

52 | shell: bash

53 |

54 | - name: Download data

55 | run: |

56 | # curl -L -o tmp.zip https://github.com/ultralytics/yolov5/releases/download/v1.0/coco128.zip

57 | # unzip -q tmp.zip -d ../

58 | # rm tmp.zip

59 |

60 | - name: Tests workflow

61 | run: |

62 | # export PYTHONPATH="$PWD" # to run '$ python *.py' files in subdirectories

63 | di=cpu # inference devices # define device

64 |

65 | # train

66 | python train.py --img 256 --batch 8 --weights weights/${{ matrix.model }}.pt --cfg models/${{ matrix.model }}.yaml --epochs 1 --device $di

67 | # detect

68 | python detect.py --weights weights/${{ matrix.model }}.pt --device $di

69 | python detect.py --weights runs/exp0/weights/last.pt --device $di

70 | # test

71 | python test.py --img 256 --batch 8 --weights weights/${{ matrix.model }}.pt --device $di

72 | python test.py --img 256 --batch 8 --weights runs/exp0/weights/last.pt --device $di

73 |

74 | python models/yolo.py --cfg models/${{ matrix.model }}.yaml # inspect

75 | python models/export.py --img 256 --batch 1 --weights weights/${{ matrix.model }}.pt # export

76 | shell: bash

77 |

--------------------------------------------------------------------------------

/v3.0/yolov5/.github/workflows/greetings.yml:

--------------------------------------------------------------------------------

1 | name: Greetings

2 |

3 | on: [pull_request_target, issues]

4 |

5 | jobs:

6 | greeting:

7 | runs-on: ubuntu-latest

8 | steps:

9 | - uses: actions/first-interaction@v1

10 | with:

11 | repo-token: ${{ secrets.GITHUB_TOKEN }}

12 | pr-message: |

13 | Hello @${{ github.actor }}, thank you for submitting a PR! To allow your work to be integrated as seamlessly as possible, we advise you to:

14 | - Verify your PR is **up-to-date with origin/master.** If your PR is behind origin/master update by running the following, replacing 'feature' with the name of your local branch:

15 | ```bash

16 | git remote add upstream https://github.com/ultralytics/yolov5.git

17 | git fetch upstream

18 | git checkout feature # <----- replace 'feature' with local branch name

19 | git rebase upstream/master

20 | git push -u origin -f

21 | ```

22 | - Verify all Continuous Integration (CI) **checks are passing**.

23 | - Reduce changes to the absolute **minimum** required for your bug fix or feature addition. _"It is not daily increase but daily decrease, hack away the unessential. The closer to the source, the less wastage there is."_ -Bruce Lee

24 |

25 | issue-message: |

26 | Hello @${{ github.actor }}, thank you for your interest in our work! Please visit our [Custom Training Tutorial](https://github.com/ultralytics/yolov5/wiki/Train-Custom-Data) to get started, and see our [Jupyter Notebook](https://github.com/ultralytics/yolov5/blob/master/tutorial.ipynb)  , [Docker Image](https://hub.docker.com/r/ultralytics/yolov5), and [Google Cloud Quickstart Guide](https://github.com/ultralytics/yolov5/wiki/GCP-Quickstart) for example environments.

27 |

28 | If this is a bug report, please provide screenshots and **minimum viable code to reproduce your issue**, otherwise we can not help you.

29 |

30 | If this is a custom model or data training question, please note Ultralytics does **not** provide free personal support. As a leader in vision ML and AI, we do offer professional consulting, from simple expert advice up to delivery of fully customized, end-to-end production solutions for our clients, such as:

31 | - **Cloud-based AI** systems operating on **hundreds of HD video streams in realtime.**

32 | - **Edge AI** integrated into custom iOS and Android apps for realtime **30 FPS video inference.**

33 | - **Custom data training**, hyperparameter evolution, and model exportation to any destination.

34 |

35 | For more information please visit https://www.ultralytics.com.

36 |

--------------------------------------------------------------------------------

/v3.0/yolov5/.github/workflows/rebase.yml:

--------------------------------------------------------------------------------

1 | name: Automatic Rebase

2 | # https://github.com/marketplace/actions/automatic-rebase

3 |

4 | on:

5 | issue_comment:

6 | types: [created]

7 |

8 | jobs:

9 | rebase:

10 | name: Rebase

11 | if: github.event.issue.pull_request != '' && contains(github.event.comment.body, '/rebase')

12 | runs-on: ubuntu-latest

13 | steps:

14 | - name: Checkout the latest code

15 | uses: actions/checkout@v2

16 | with:

17 | fetch-depth: 0

18 | - name: Automatic Rebase

19 | uses: cirrus-actions/rebase@1.3.1

20 | env:

21 | GITHUB_TOKEN: ${{ secrets.GITHUB_TOKEN }}

22 |

--------------------------------------------------------------------------------

/v3.0/yolov5/.github/workflows/stale.yml:

--------------------------------------------------------------------------------

1 | name: Close stale issues

2 | on:

3 | schedule:

4 | - cron: "0 0 * * *"

5 |

6 | jobs:

7 | stale:

8 | runs-on: ubuntu-latest

9 | steps:

10 | - uses: actions/stale@v1

11 | with:

12 | repo-token: ${{ secrets.GITHUB_TOKEN }}

13 | stale-issue-message: 'This issue has been automatically marked as stale because it has not had recent activity. It will be closed if no further activity occurs. Thank you for your contributions.'

14 | stale-pr-message: 'This issue has been automatically marked as stale because it has not had recent activity. It will be closed if no further activity occurs. Thank you for your contributions.'

15 | days-before-stale: 30

16 | days-before-close: 5

17 | exempt-issue-labels: 'documentation,tutorial'

18 | operations-per-run: 100 # The maximum number of operations per run, used to control rate limiting.

19 |

--------------------------------------------------------------------------------

/v3.0/yolov5/.gitignore:

--------------------------------------------------------------------------------

1 | # Repo-specific GitIgnore ----------------------------------------------------------------------------------------------

2 | *.jpg

3 | *.jpeg

4 | *.png

5 | *.bmp

6 | *.tif

7 | *.tiff

8 | *.heic

9 | *.JPG

10 | *.JPEG

11 | *.PNG

12 | *.BMP

13 | *.TIF

14 | *.TIFF

15 | *.HEIC

16 | *.mp4

17 | *.mov

18 | *.MOV

19 | *.avi

20 | *.data

21 | *.json

22 |

23 | *.cfg

24 | !cfg/yolov3*.cfg

25 |

26 | storage.googleapis.com

27 | runs/*

28 | data/*

29 | !data/samples/zidane.jpg

30 | !data/samples/bus.jpg

31 | !data/coco.names

32 | !data/coco_paper.names

33 | !data/coco.data

34 | !data/coco_*.data

35 | !data/coco_*.txt

36 | !data/trainvalno5k.shapes

37 | !data/*.sh

38 |

39 | pycocotools/*

40 | results*.txt

41 | gcp_test*.sh

42 |

43 | # MATLAB GitIgnore -----------------------------------------------------------------------------------------------------

44 | *.m~

45 | *.mat

46 | !targets*.mat

47 |

48 | # Neural Network weights -----------------------------------------------------------------------------------------------

49 | *.weights

50 | *.pt

51 | *.onnx

52 | *.mlmodel

53 | *.torchscript

54 | darknet53.conv.74

55 | yolov3-tiny.conv.15

56 |

57 | # GitHub Python GitIgnore ----------------------------------------------------------------------------------------------

58 | # Byte-compiled / optimized / DLL files

59 | __pycache__/

60 | *.py[cod]

61 | *$py.class

62 |

63 | # C extensions

64 | *.so

65 |

66 | # Distribution / packaging

67 | .Python

68 | env/

69 | build/

70 | develop-eggs/

71 | dist/

72 | downloads/

73 | eggs/

74 | .eggs/

75 | lib/

76 | lib64/

77 | parts/

78 | sdist/

79 | var/

80 | wheels/

81 | *.egg-info/

82 | .installed.cfg

83 | *.egg

84 |

85 | # PyInstaller

86 | # Usually these files are written by a python script from a template

87 | # before PyInstaller builds the exe, so as to inject date/other infos into it.

88 | *.manifest

89 | *.spec

90 |

91 | # Installer logs

92 | pip-log.txt

93 | pip-delete-this-directory.txt

94 |

95 | # Unit test / coverage reports

96 | htmlcov/

97 | .tox/

98 | .coverage

99 | .coverage.*

100 | .cache

101 | nosetests.xml

102 | coverage.xml

103 | *.cover

104 | .hypothesis/

105 |

106 | # Translations

107 | *.mo

108 | *.pot

109 |

110 | # Django stuff:

111 | *.log

112 | local_settings.py

113 |

114 | # Flask stuff:

115 | instance/

116 | .webassets-cache

117 |

118 | # Scrapy stuff:

119 | .scrapy

120 |

121 | # Sphinx documentation

122 | docs/_build/

123 |

124 | # PyBuilder

125 | target/

126 |

127 | # Jupyter Notebook

128 | .ipynb_checkpoints

129 |

130 | # pyenv

131 | .python-version

132 |

133 | # celery beat schedule file

134 | celerybeat-schedule

135 |

136 | # SageMath parsed files

137 | *.sage.py

138 |

139 | # dotenv

140 | .env

141 |

142 | # virtualenv

143 | .venv*

144 | venv*/

145 | ENV*/

146 |

147 | # Spyder project settings

148 | .spyderproject

149 | .spyproject

150 |

151 | # Rope project settings

152 | .ropeproject

153 |

154 | # mkdocs documentation

155 | /site

156 |

157 | # mypy

158 | .mypy_cache/

159 |

160 |

161 | # https://github.com/github/gitignore/blob/master/Global/macOS.gitignore -----------------------------------------------

162 |

163 | # General

164 | .DS_Store

165 | .AppleDouble

166 | .LSOverride

167 |

168 | # Icon must end with two \r

169 | Icon

170 | Icon?

171 |

172 | # Thumbnails

173 | ._*

174 |

175 | # Files that might appear in the root of a volume

176 | .DocumentRevisions-V100

177 | .fseventsd

178 | .Spotlight-V100

179 | .TemporaryItems

180 | .Trashes

181 | .VolumeIcon.icns

182 | .com.apple.timemachine.donotpresent

183 |

184 | # Directories potentially created on remote AFP share

185 | .AppleDB

186 | .AppleDesktop

187 | Network Trash Folder

188 | Temporary Items

189 | .apdisk

190 |

191 |

192 | # https://github.com/github/gitignore/blob/master/Global/JetBrains.gitignore

193 | # Covers JetBrains IDEs: IntelliJ, RubyMine, PhpStorm, AppCode, PyCharm, CLion, Android Studio and WebStorm

194 | # Reference: https://intellij-support.jetbrains.com/hc/en-us/articles/206544839

195 |

196 | # User-specific stuff:

197 | .idea/*

198 | .idea/**/workspace.xml

199 | .idea/**/tasks.xml

200 | .idea/dictionaries

201 | .html # Bokeh Plots

202 | .pg # TensorFlow Frozen Graphs

203 | .avi # videos

204 |

205 | # Sensitive or high-churn files:

206 | .idea/**/dataSources/

207 | .idea/**/dataSources.ids

208 | .idea/**/dataSources.local.xml

209 | .idea/**/sqlDataSources.xml

210 | .idea/**/dynamic.xml

211 | .idea/**/uiDesigner.xml

212 |

213 | # Gradle:

214 | .idea/**/gradle.xml

215 | .idea/**/libraries

216 |

217 | # CMake

218 | cmake-build-debug/

219 | cmake-build-release/

220 |

221 | # Mongo Explorer plugin:

222 | .idea/**/mongoSettings.xml

223 |

224 | ## File-based project format:

225 | *.iws

226 |

227 | ## Plugin-specific files:

228 |

229 | # IntelliJ

230 | out/

231 |

232 | # mpeltonen/sbt-idea plugin

233 | .idea_modules/

234 |

235 | # JIRA plugin

236 | atlassian-ide-plugin.xml

237 |

238 | # Cursive Clojure plugin

239 | .idea/replstate.xml

240 |

241 | # Crashlytics plugin (for Android Studio and IntelliJ)

242 | com_crashlytics_export_strings.xml

243 | crashlytics.properties

244 | crashlytics-build.properties

245 | fabric.properties

246 |

--------------------------------------------------------------------------------

/v3.0/yolov5/Dockerfile:

--------------------------------------------------------------------------------

1 | # Start FROM Nvidia PyTorch image https://ngc.nvidia.com/catalog/containers/nvidia:pytorch

2 | FROM nvcr.io/nvidia/pytorch:20.10-py3

3 |

4 | # Install dependencies

5 | RUN pip install --upgrade pip

6 | # COPY requirements.txt .

7 | # RUN pip install -r requirements.txt

8 | RUN pip install gsutil

9 |

10 | # Create working directory

11 | RUN mkdir -p /usr/src/app

12 | WORKDIR /usr/src/app

13 |

14 | # Copy contents

15 | COPY . /usr/src/app

16 |

17 | # Copy weights

18 | #RUN python3 -c "from models import *; \

19 | #attempt_download('weights/yolov5s.pt'); \

20 | #attempt_download('weights/yolov5m.pt'); \

21 | #attempt_download('weights/yolov5l.pt')"

22 |

23 |

24 | # --------------------------------------------------- Extras Below ---------------------------------------------------

25 |

26 | # Build and Push

27 | # t=ultralytics/yolov5:latest && sudo docker build -t $t . && sudo docker push $t

28 | # for v in {300..303}; do t=ultralytics/coco:v$v && sudo docker build -t $t . && sudo docker push $t; done

29 |

30 | # Pull and Run

31 | # t=ultralytics/yolov5:latest && sudo docker pull $t && sudo docker run -it --ipc=host $t

32 |

33 | # Pull and Run with local directory access

34 | # t=ultralytics/yolov5:latest && sudo docker pull $t && sudo docker run -it --ipc=host --gpus all -v "$(pwd)"/coco:/usr/src/coco $t

35 |

36 | # Kill all

37 | # sudo docker kill $(sudo docker ps -q)

38 |

39 | # Kill all image-based

40 | # sudo docker kill $(sudo docker ps -a -q --filter ancestor=ultralytics/yolov5:latest)

41 |

42 | # Bash into running container

43 | # sudo docker container exec -it ba65811811ab bash

44 |

45 | # Bash into stopped container

46 | # sudo docker commit 092b16b25c5b usr/resume && sudo docker run -it --gpus all --ipc=host -v "$(pwd)"/coco:/usr/src/coco --entrypoint=sh usr/resume

47 |

48 | # Send weights to GCP

49 | # python -c "from utils.general import *; strip_optimizer('runs/exp0_*/weights/best.pt', 'tmp.pt')" && gsutil cp tmp.pt gs://*.pt

50 |

51 | # Clean up

52 | # docker system prune -a --volumes

53 |

--------------------------------------------------------------------------------

/v3.0/yolov5/README.md:

--------------------------------------------------------------------------------

1 |

2 |

, [Docker Image](https://hub.docker.com/r/ultralytics/yolov5), and [Google Cloud Quickstart Guide](https://github.com/ultralytics/yolov5/wiki/GCP-Quickstart) for example environments.

27 |

28 | If this is a bug report, please provide screenshots and **minimum viable code to reproduce your issue**, otherwise we can not help you.

29 |

30 | If this is a custom model or data training question, please note Ultralytics does **not** provide free personal support. As a leader in vision ML and AI, we do offer professional consulting, from simple expert advice up to delivery of fully customized, end-to-end production solutions for our clients, such as:

31 | - **Cloud-based AI** systems operating on **hundreds of HD video streams in realtime.**

32 | - **Edge AI** integrated into custom iOS and Android apps for realtime **30 FPS video inference.**

33 | - **Custom data training**, hyperparameter evolution, and model exportation to any destination.

34 |

35 | For more information please visit https://www.ultralytics.com.

36 |

--------------------------------------------------------------------------------

/v3.0/yolov5/.github/workflows/rebase.yml:

--------------------------------------------------------------------------------

1 | name: Automatic Rebase

2 | # https://github.com/marketplace/actions/automatic-rebase

3 |

4 | on:

5 | issue_comment:

6 | types: [created]

7 |

8 | jobs:

9 | rebase:

10 | name: Rebase

11 | if: github.event.issue.pull_request != '' && contains(github.event.comment.body, '/rebase')

12 | runs-on: ubuntu-latest

13 | steps:

14 | - name: Checkout the latest code

15 | uses: actions/checkout@v2

16 | with:

17 | fetch-depth: 0

18 | - name: Automatic Rebase

19 | uses: cirrus-actions/rebase@1.3.1

20 | env:

21 | GITHUB_TOKEN: ${{ secrets.GITHUB_TOKEN }}

22 |

--------------------------------------------------------------------------------

/v3.0/yolov5/.github/workflows/stale.yml:

--------------------------------------------------------------------------------

1 | name: Close stale issues

2 | on:

3 | schedule:

4 | - cron: "0 0 * * *"

5 |

6 | jobs:

7 | stale:

8 | runs-on: ubuntu-latest

9 | steps:

10 | - uses: actions/stale@v1

11 | with:

12 | repo-token: ${{ secrets.GITHUB_TOKEN }}