2 |

3 |

4 |

NLP-Space

5 |

papers read & learning notes & some code

6 |

7 |

12 |

13 | #### Definition: **meaning** (Webster dictionary)

14 | #### Common solution: **WordNet**

15 | #### Problems with resources like WordNet:

16 | * Great as a resource but missing nuance, 细微差别

17 | * missing new meaning of words, 单词含义

18 | * Subjective, 主观的

19 | * Requires human labor to create and adapt, 需要人工

20 | * Can't compute accurate word similarity, 无法计算相似度

21 |

22 | #### Representing words as discrete symbols

23 | traditional NLP,a localist representation

24 | **Means one 1, the rest 0s**

25 | 独热编码(ont-hot)

26 | ```

27 | motel=[0 0 0 0 1 0]

28 | hotel=[0 1 0 0 0 0]

29 | ```

30 | 但是独热编码的结果是,这些词向量都是正交的,并且不能表达语义相似度。orthogonal(正交)、no natural notion of similarity

31 | 解决方案就是`learn to encode similarity in the vectors themselves`

32 |

33 | #### Representing words by their context

34 |

12 |

13 | #### Definition: **meaning** (Webster dictionary)

14 | #### Common solution: **WordNet**

15 | #### Problems with resources like WordNet:

16 | * Great as a resource but missing nuance, 细微差别

17 | * missing new meaning of words, 单词含义

18 | * Subjective, 主观的

19 | * Requires human labor to create and adapt, 需要人工

20 | * Can't compute accurate word similarity, 无法计算相似度

21 |

22 | #### Representing words as discrete symbols

23 | traditional NLP,a localist representation

24 | **Means one 1, the rest 0s**

25 | 独热编码(ont-hot)

26 | ```

27 | motel=[0 0 0 0 1 0]

28 | hotel=[0 1 0 0 0 0]

29 | ```

30 | 但是独热编码的结果是,这些词向量都是正交的,并且不能表达语义相似度。orthogonal(正交)、no natural notion of similarity

31 | 解决方案就是`learn to encode similarity in the vectors themselves`

32 |

33 | #### Representing words by their context

34 |  35 |

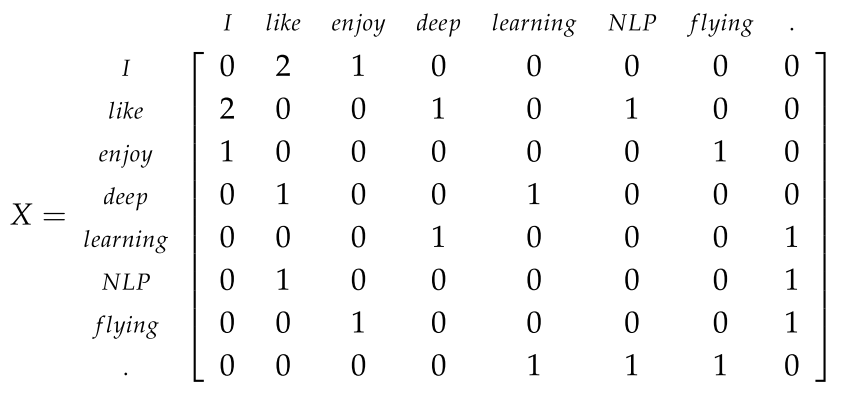

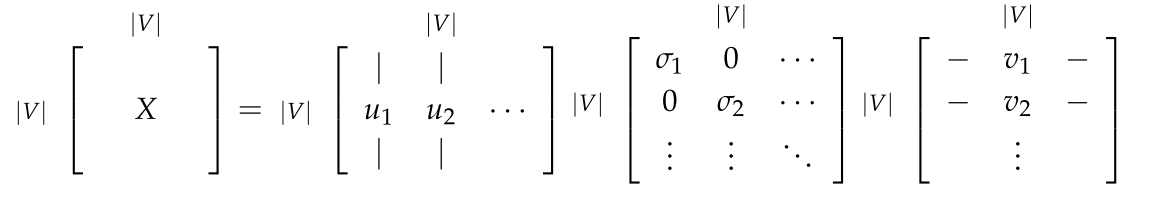

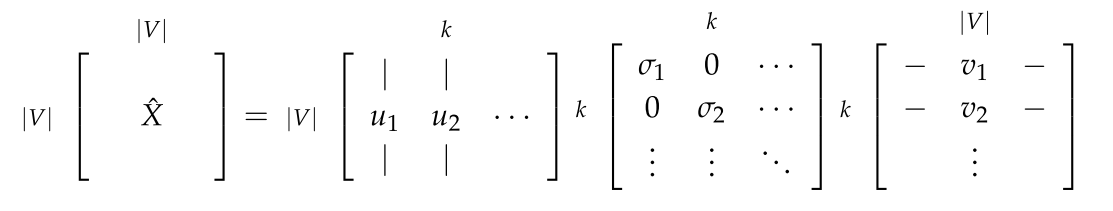

36 | **Distributional semantics**: A word's meaning is given by the words that frequently appear close-by

37 | **Word vectors** (word embeddings): dense vector

38 |

39 | #### Word meaning as as neural word vector - visualization

40 |

35 |

36 | **Distributional semantics**: A word's meaning is given by the words that frequently appear close-by

37 | **Word vectors** (word embeddings): dense vector

38 |

39 | #### Word meaning as as neural word vector - visualization

40 |  41 |

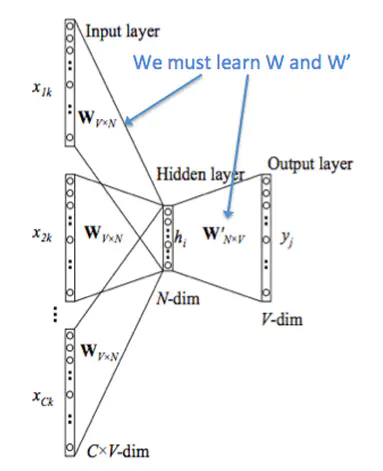

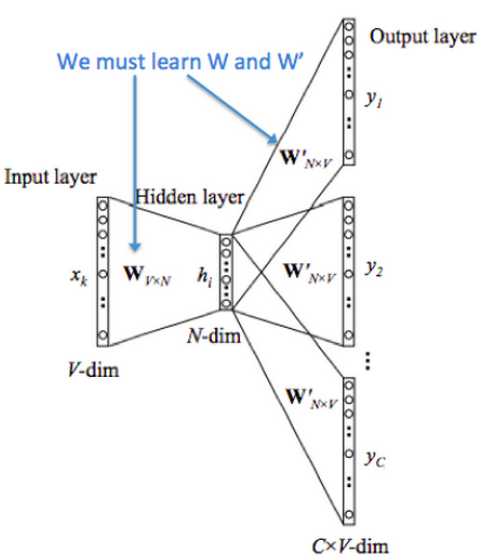

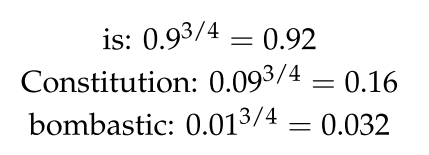

42 | ### 2、Word2vec: Overview

43 | **Word2vec (Mikolov et al. 2013) is a framework for learning word vectors.**

44 | **Idea:**

45 | * a large corpus of text,首先有一个语料库

46 | * 每个词给一个初始化的vector

47 | * 遍历text中的每个位置,包含了center word [c]和context words [o]

48 | * 根据c和o的词向量的相似度来计算,给出c得出o的似然

49 | * 调整优化word vectors来最小化似然

50 |

51 | 图示:

52 |

41 |

42 | ### 2、Word2vec: Overview

43 | **Word2vec (Mikolov et al. 2013) is a framework for learning word vectors.**

44 | **Idea:**

45 | * a large corpus of text,首先有一个语料库

46 | * 每个词给一个初始化的vector

47 | * 遍历text中的每个位置,包含了center word [c]和context words [o]

48 | * 根据c和o的词向量的相似度来计算,给出c得出o的似然

49 | * 调整优化word vectors来最小化似然

50 |

51 | 图示:

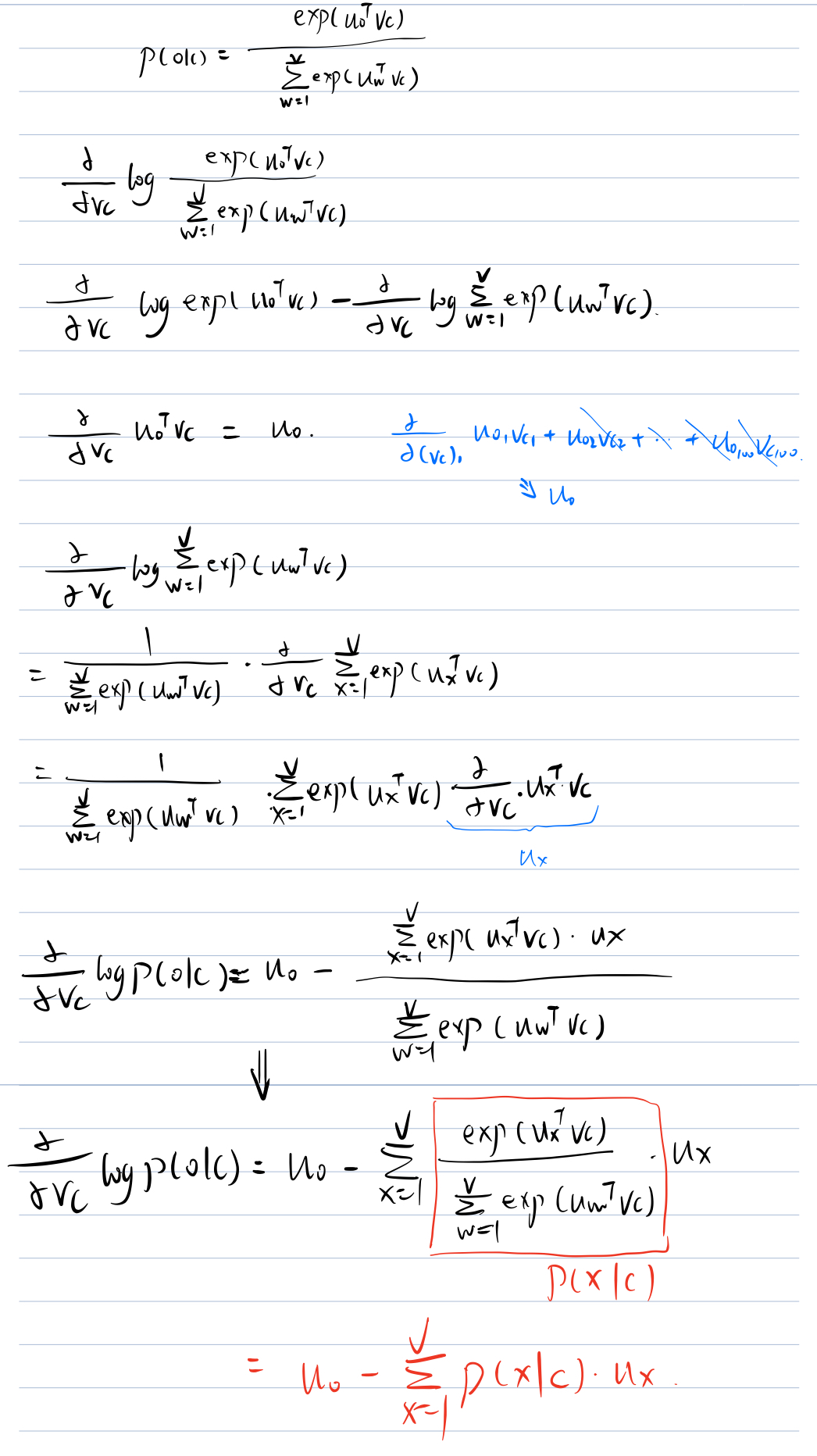

52 |  53 | 计算$P(w_{t+j}|w_t)$

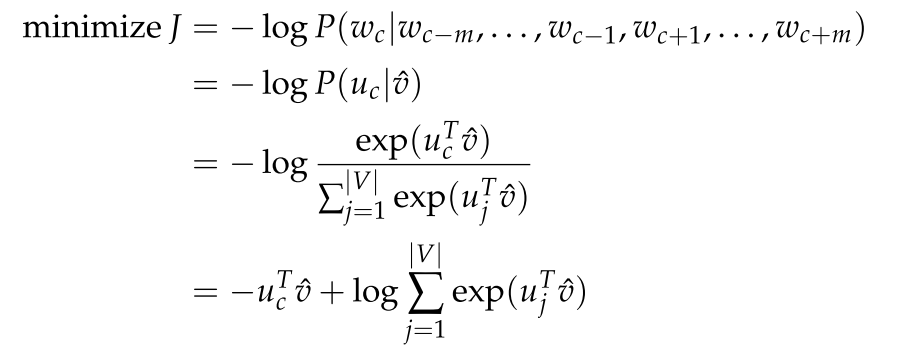

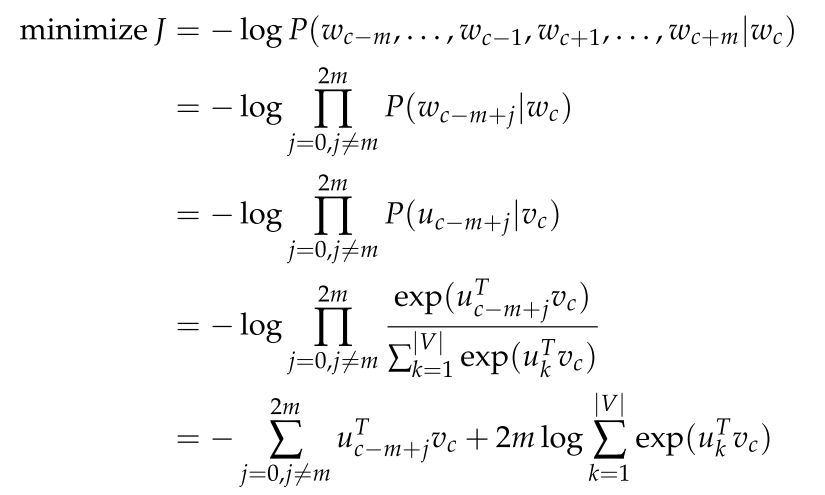

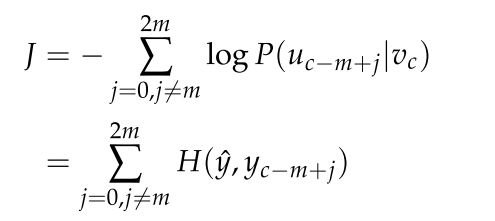

54 | #### Word2vec: objective function

55 | 对于每一个text的位置$t=1,...,T$,给出中心词$w_j$,预测窗口为m内的上下文。

56 | 其似然值为:

57 | $$Likelihood=L(\theta)=\prod_{t=1}^T\prod_{-m\le{j}\le{m} \atop{j\ne0}}P(w_{t+j}|w_t)$$ $\theta$ is all variables to be optimized.

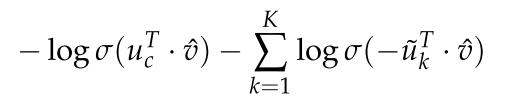

58 | 损失函数$J(\theta)$是(平均)负的对数似然,**negative log likelihood**:

59 | $$J(\theta)=-\frac{1}{T}logJ(\theta)=-\frac{1}{T}\sum_{t=1}^T\sum_{-m\le{j}\le{m} \atop{j\ne0}}P(w_{t+j}|w_t)$$**Minimizing objective function <==> Maxmizing predictive accuracy**

60 |

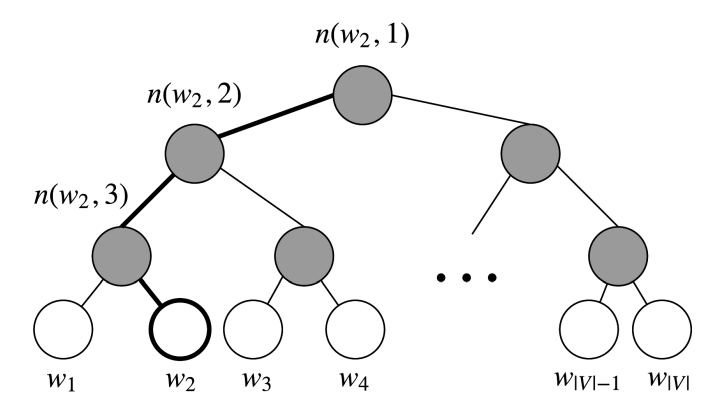

61 | 想要最小化损失函数,首先要考虑怎么计算$P(w_{t+j}|w_t)$

62 | 对于每个词给定两个词向量

63 | * $v_w$,当w为中心词时

64 | * $u_w$,当w为上下文时

65 |

66 | 对于每个中心词c和上下文词o,有:

67 | $$P(o|c)=\frac{exp(u_o^Tv_c)}{\sum_{w\in{V}}exp(u_w^Tv_c)}$$分子上的向量点乘表达的是两个词的相似度,分母是中心词和所有词的相似度(**注意:这里是所有词,后续优化**)

68 |

53 | 计算$P(w_{t+j}|w_t)$

54 | #### Word2vec: objective function

55 | 对于每一个text的位置$t=1,...,T$,给出中心词$w_j$,预测窗口为m内的上下文。

56 | 其似然值为:

57 | $$Likelihood=L(\theta)=\prod_{t=1}^T\prod_{-m\le{j}\le{m} \atop{j\ne0}}P(w_{t+j}|w_t)$$ $\theta$ is all variables to be optimized.

58 | 损失函数$J(\theta)$是(平均)负的对数似然,**negative log likelihood**:

59 | $$J(\theta)=-\frac{1}{T}logJ(\theta)=-\frac{1}{T}\sum_{t=1}^T\sum_{-m\le{j}\le{m} \atop{j\ne0}}P(w_{t+j}|w_t)$$**Minimizing objective function <==> Maxmizing predictive accuracy**

60 |

61 | 想要最小化损失函数,首先要考虑怎么计算$P(w_{t+j}|w_t)$

62 | 对于每个词给定两个词向量

63 | * $v_w$,当w为中心词时

64 | * $u_w$,当w为上下文时

65 |

66 | 对于每个中心词c和上下文词o,有:

67 | $$P(o|c)=\frac{exp(u_o^Tv_c)}{\sum_{w\in{V}}exp(u_w^Tv_c)}$$分子上的向量点乘表达的是两个词的相似度,分母是中心词和所有词的相似度(**注意:这里是所有词,后续优化**)

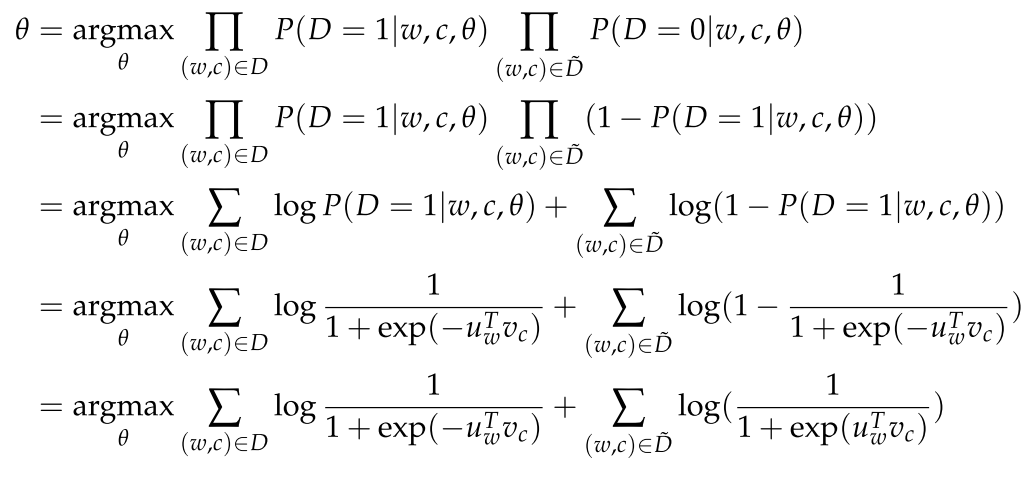

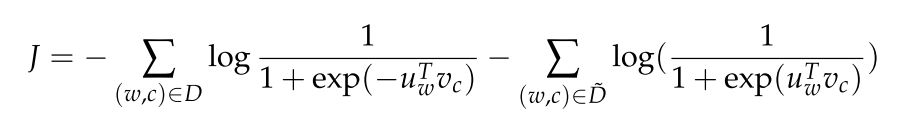

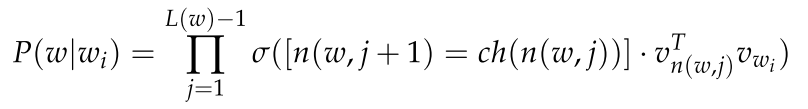

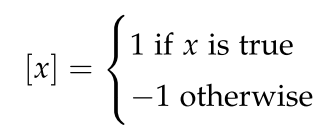

68 |  69 | **softmax** function:为什么成为softmax

70 |

69 | **softmax** function:为什么成为softmax

70 |  71 |

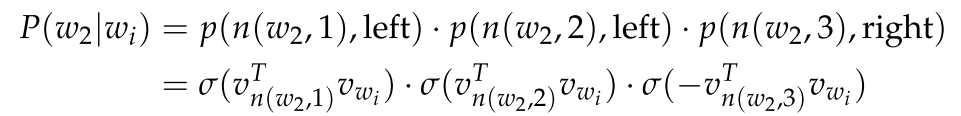

72 | #### To train the model: Compute all vector gradients

73 | $\theta$ represents all model parameters, in one long vector

74 | Remember: every word has two vectors

75 |

71 |

72 | #### To train the model: Compute all vector gradients

73 | $\theta$ represents all model parameters, in one long vector

74 | Remember: every word has two vectors

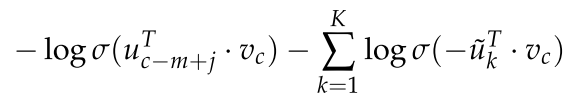

75 |  76 | 上述推导中,$P(x|c)$是给定中心词 $c$,模型所给出的为 $x$ 的概率。

77 |

78 | 这个推导结果很有趣!等号左边是给出中心词 $c$ 其上下文 $o$ 的对数概率的偏导,是我们要找的一个下降对快的一个方向,多维空间上的一个斜坡。等号右边的含义是,我们观察到的上下文的词 $o$ ,从中减去我们的模型认为的上下文的样子,后面一部分是模型的期望。实际的上下文与模型认为的上下文,这两者之间的差异决定了下降的方向。

--------------------------------------------------------------------------------

/notes/CS224N-2019/img/2020-06-12-12-42-12.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/zspo/NLP-Space/1c9891be0f44cf618d5b7fc1be2b03e12ce7fc24/notes/CS224N-2019/img/2020-06-12-12-42-12.png

--------------------------------------------------------------------------------

/notes/CS224N-2019/img/2020-06-12-13-22-31.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/zspo/NLP-Space/1c9891be0f44cf618d5b7fc1be2b03e12ce7fc24/notes/CS224N-2019/img/2020-06-12-13-22-31.png

--------------------------------------------------------------------------------

/notes/CS224N-2019/img/2020-06-12-13-24-36.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/zspo/NLP-Space/1c9891be0f44cf618d5b7fc1be2b03e12ce7fc24/notes/CS224N-2019/img/2020-06-12-13-24-36.png

--------------------------------------------------------------------------------

/notes/CS224N-2019/img/2020-06-12-15-11-11.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/zspo/NLP-Space/1c9891be0f44cf618d5b7fc1be2b03e12ce7fc24/notes/CS224N-2019/img/2020-06-12-15-11-11.png

--------------------------------------------------------------------------------

/notes/CS224N-2019/img/2020-06-12-15-37-09.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/zspo/NLP-Space/1c9891be0f44cf618d5b7fc1be2b03e12ce7fc24/notes/CS224N-2019/img/2020-06-12-15-37-09.png

--------------------------------------------------------------------------------

/notes/CS224N-2019/img/2020-06-12-15-42-22.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/zspo/NLP-Space/1c9891be0f44cf618d5b7fc1be2b03e12ce7fc24/notes/CS224N-2019/img/2020-06-12-15-42-22.png

--------------------------------------------------------------------------------

/notes/CS224N-2019/img/微信图片_20200612171355.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/zspo/NLP-Space/1c9891be0f44cf618d5b7fc1be2b03e12ce7fc24/notes/CS224N-2019/img/微信图片_20200612171355.jpg

--------------------------------------------------------------------------------

/notes/CS224N-2019/img/微信截图_20200612183129.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/zspo/NLP-Space/1c9891be0f44cf618d5b7fc1be2b03e12ce7fc24/notes/CS224N-2019/img/微信截图_20200612183129.png

--------------------------------------------------------------------------------

/notes/Word2Vec学习笔记(CS224N笔记及相关论文学习).md:

--------------------------------------------------------------------------------

1 | ***[参考CS224N笔记](https://web.stanford.edu/class/archive/cs/cs224n/cs224n.1194/readings/cs224n-2019-notes01-wordvecs1.pdf)

2 | [The Skip-Gram Model](http://mccormickml.com/2016/04/19/word2vec-tutorial-the-skip-gram-model/)

3 | [word2vec paper](https://arxiv.org/pdf/1301.3781.pdf)

4 | [negative sampling paper](http://papers.nips.cc/paper/5021-distributed-representations-of-words-and-phrases-and-their-compositionality.pdf)***

5 |

6 | @[toc]

7 | ### NLP

8 | 人类语言是独特的传达含义的系统,不同于计算机视觉及其他的机器学习任务。

9 | NLP领域有着不同难度等级的任务,从语音处理到语义解释等。NLP的目标是设计出算法令计算机“理解”自然语言以解决实际的任务。

10 | - Easy的任务包括:拼写纠正、关键词搜索、同义词查找等;

11 | - Medium的任务包括:信息解析等;

12 | - Hard任务包括:机器翻译、情感分析、指代、问答系统等。

13 |

14 | ### 1、Word Vectors

15 | 英语中估计有13 million单词,他们相互之间并不全是无关的,Feline to cat (猫科动物->猫)、hotel to motel (旅馆->汽车旅馆)等。我们希望用一些向量来编码每个单词,在同一词空间内以点的形式进行表示。直接的方法是构建一个$N(N\le13 million)$维度的空间,这个空间足够将我们的单词进行编码,每个维度可以编码某些我们语言的含义。这些维度可能表示时态、计数、性别等。

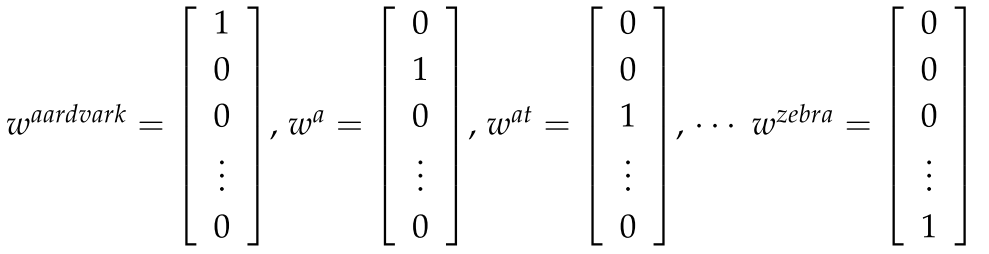

16 | 独热编码是直接的编码方法,将每个词表示为$\mathbb{R}^{|V|\times1}$向量,该词在固定顺序下的索引处为1,其他位置都为0。如下

17 |

76 | 上述推导中,$P(x|c)$是给定中心词 $c$,模型所给出的为 $x$ 的概率。

77 |

78 | 这个推导结果很有趣!等号左边是给出中心词 $c$ 其上下文 $o$ 的对数概率的偏导,是我们要找的一个下降对快的一个方向,多维空间上的一个斜坡。等号右边的含义是,我们观察到的上下文的词 $o$ ,从中减去我们的模型认为的上下文的样子,后面一部分是模型的期望。实际的上下文与模型认为的上下文,这两者之间的差异决定了下降的方向。

--------------------------------------------------------------------------------

/notes/CS224N-2019/img/2020-06-12-12-42-12.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/zspo/NLP-Space/1c9891be0f44cf618d5b7fc1be2b03e12ce7fc24/notes/CS224N-2019/img/2020-06-12-12-42-12.png

--------------------------------------------------------------------------------

/notes/CS224N-2019/img/2020-06-12-13-22-31.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/zspo/NLP-Space/1c9891be0f44cf618d5b7fc1be2b03e12ce7fc24/notes/CS224N-2019/img/2020-06-12-13-22-31.png

--------------------------------------------------------------------------------

/notes/CS224N-2019/img/2020-06-12-13-24-36.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/zspo/NLP-Space/1c9891be0f44cf618d5b7fc1be2b03e12ce7fc24/notes/CS224N-2019/img/2020-06-12-13-24-36.png

--------------------------------------------------------------------------------

/notes/CS224N-2019/img/2020-06-12-15-11-11.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/zspo/NLP-Space/1c9891be0f44cf618d5b7fc1be2b03e12ce7fc24/notes/CS224N-2019/img/2020-06-12-15-11-11.png

--------------------------------------------------------------------------------

/notes/CS224N-2019/img/2020-06-12-15-37-09.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/zspo/NLP-Space/1c9891be0f44cf618d5b7fc1be2b03e12ce7fc24/notes/CS224N-2019/img/2020-06-12-15-37-09.png

--------------------------------------------------------------------------------

/notes/CS224N-2019/img/2020-06-12-15-42-22.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/zspo/NLP-Space/1c9891be0f44cf618d5b7fc1be2b03e12ce7fc24/notes/CS224N-2019/img/2020-06-12-15-42-22.png

--------------------------------------------------------------------------------

/notes/CS224N-2019/img/微信图片_20200612171355.jpg:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/zspo/NLP-Space/1c9891be0f44cf618d5b7fc1be2b03e12ce7fc24/notes/CS224N-2019/img/微信图片_20200612171355.jpg

--------------------------------------------------------------------------------

/notes/CS224N-2019/img/微信截图_20200612183129.png:

--------------------------------------------------------------------------------

https://raw.githubusercontent.com/zspo/NLP-Space/1c9891be0f44cf618d5b7fc1be2b03e12ce7fc24/notes/CS224N-2019/img/微信截图_20200612183129.png

--------------------------------------------------------------------------------

/notes/Word2Vec学习笔记(CS224N笔记及相关论文学习).md:

--------------------------------------------------------------------------------

1 | ***[参考CS224N笔记](https://web.stanford.edu/class/archive/cs/cs224n/cs224n.1194/readings/cs224n-2019-notes01-wordvecs1.pdf)

2 | [The Skip-Gram Model](http://mccormickml.com/2016/04/19/word2vec-tutorial-the-skip-gram-model/)

3 | [word2vec paper](https://arxiv.org/pdf/1301.3781.pdf)

4 | [negative sampling paper](http://papers.nips.cc/paper/5021-distributed-representations-of-words-and-phrases-and-their-compositionality.pdf)***

5 |

6 | @[toc]

7 | ### NLP

8 | 人类语言是独特的传达含义的系统,不同于计算机视觉及其他的机器学习任务。

9 | NLP领域有着不同难度等级的任务,从语音处理到语义解释等。NLP的目标是设计出算法令计算机“理解”自然语言以解决实际的任务。

10 | - Easy的任务包括:拼写纠正、关键词搜索、同义词查找等;

11 | - Medium的任务包括:信息解析等;

12 | - Hard任务包括:机器翻译、情感分析、指代、问答系统等。

13 |

14 | ### 1、Word Vectors

15 | 英语中估计有13 million单词,他们相互之间并不全是无关的,Feline to cat (猫科动物->猫)、hotel to motel (旅馆->汽车旅馆)等。我们希望用一些向量来编码每个单词,在同一词空间内以点的形式进行表示。直接的方法是构建一个$N(N\le13 million)$维度的空间,这个空间足够将我们的单词进行编码,每个维度可以编码某些我们语言的含义。这些维度可能表示时态、计数、性别等。

16 | 独热编码是直接的编码方法,将每个词表示为$\mathbb{R}^{|V|\times1}$向量,该词在固定顺序下的索引处为1,其他位置都为0。如下

17 |