├── LICENSE

├── README.md

├── data

└── README.md

├── dataset

└── referit_loader.py

├── ln_data

├── README.md

└── download_data.sh

├── model

├── darknet.py

├── grounding_model.py

└── yolov3.cfg

├── saved_models

├── README.md

└── yolov3_weights.sh

├── train_yolo.py

└── utils

├── __init__.py

├── losses.py

├── misc_utils.py

├── parsing_metrics.py

├── transforms.py

├── utils.py

└── word_utils.py

/LICENSE:

--------------------------------------------------------------------------------

1 | MIT License

2 |

3 | Copyright (c) 2019 Zhengyuan Yang

4 |

5 | Permission is hereby granted, free of charge, to any person obtaining a copy

6 | of this software and associated documentation files (the "Software"), to deal

7 | in the Software without restriction, including without limitation the rights

8 | to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

9 | copies of the Software, and to permit persons to whom the Software is

10 | furnished to do so, subject to the following conditions:

11 |

12 | The above copyright notice and this permission notice shall be included in all

13 | copies or substantial portions of the Software.

14 |

15 | THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

16 | IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

17 | FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

18 | AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

19 | LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

20 | OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

21 | SOFTWARE.

22 |

--------------------------------------------------------------------------------

/README.md:

--------------------------------------------------------------------------------

1 | # One-Stage Visual Grounding

2 | **\*\*\*\*\* New: Our recent work on One-stage VG is available at [ReSC](https://github.com/zyang-ur/ReSC).\*\*\*\*\***

3 |

4 |

5 | [A Fast and Accurate One-Stage Approach to Visual Grounding](https://arxiv.org/pdf/1908.06354.pdf)

6 |

7 | by [Zhengyuan Yang](http://cs.rochester.edu/u/zyang39/), [Boqing Gong](http://boqinggong.info/), [Liwei Wang](http://www.deepcv.net/), Wenbing Huang, Dong Yu, and [Jiebo Luo](http://cs.rochester.edu/u/jluo)

8 |

9 | IEEE International Conference on Computer Vision (ICCV), 2019, Oral

10 |

11 |

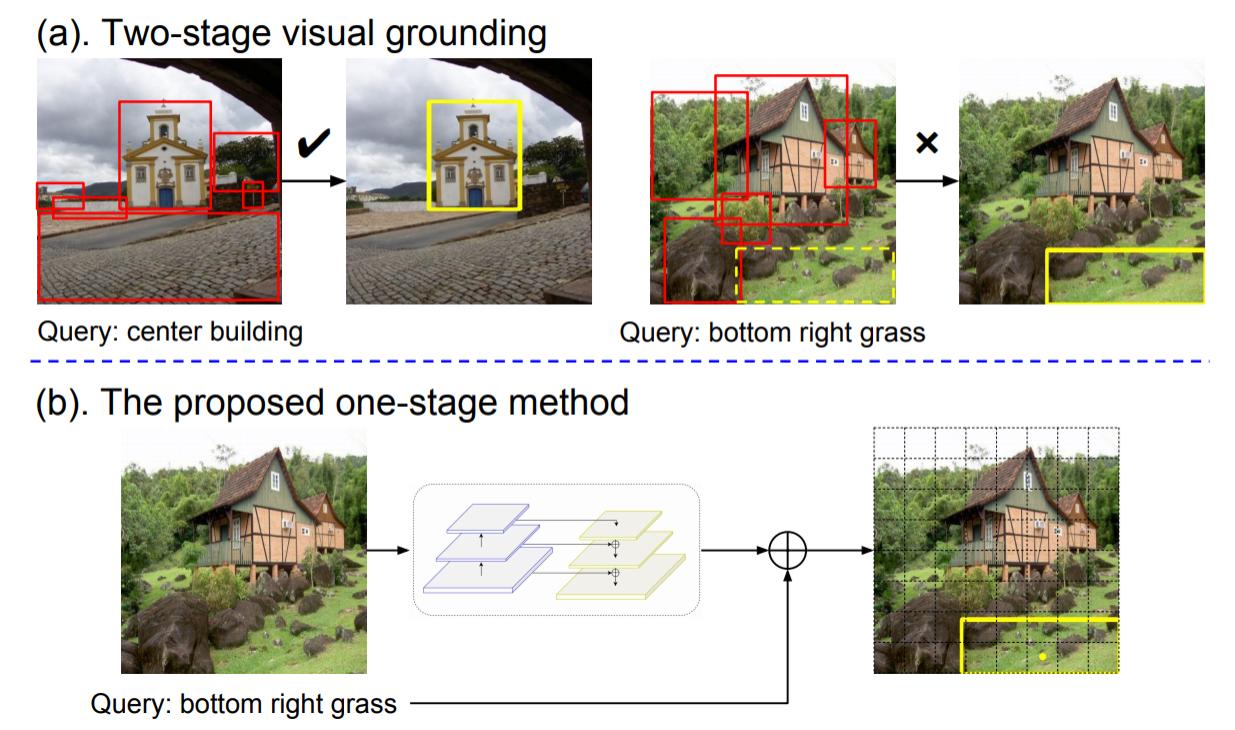

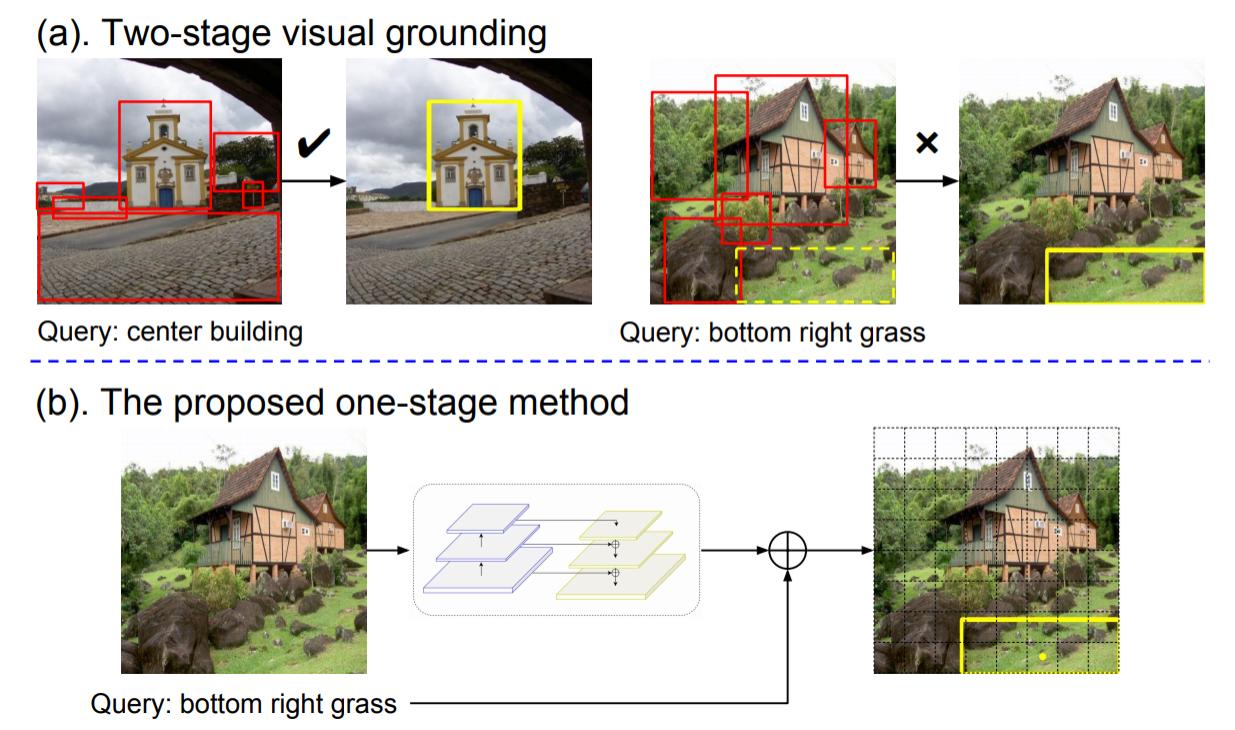

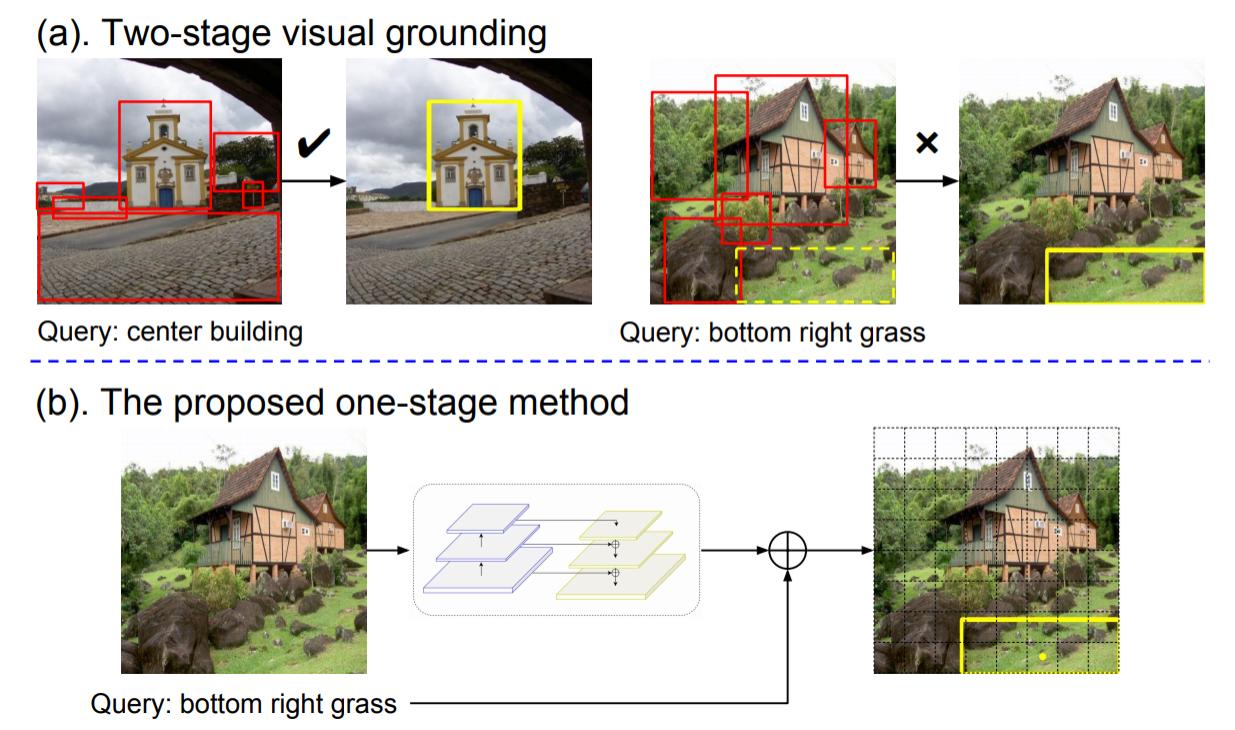

12 | ### Introduction

13 | We propose a simple, fast, and accurate one-stage approach

14 | to visual grounding. For more details, please refer to our

15 | [paper](https://arxiv.org/pdf/1908.06354.pdf).

16 |

17 |

19 |

20 |  21 |

21 |

22 |

23 | ### Citation

24 |

25 | @inproceedings{yang2019fast,

26 | title={A Fast and Accurate One-Stage Approach to Visual Grounding},

27 | author={Yang, Zhengyuan and Gong, Boqing and Wang, Liwei and Huang

28 | , Wenbing and Yu, Dong and Luo, Jiebo},

29 | booktitle={ICCV},

30 | year={2019}

31 | }

32 |

33 | ### Prerequisites

34 |

35 | * Python 3.5 (3.6 tested)

36 | * Pytorch 0.4.1

37 | * Others ([Pytorch-Bert](https://pypi.org/project/pytorch-pretrained-bert/), OpenCV, Matplotlib, scipy, etc.)

38 |

39 | ## Installation

40 |

41 | 1. Clone the repository

42 |

43 | ```

44 | git clone https://github.com/zyang-ur/onestage_grounding.git

45 | ```

46 |

47 | 2. Prepare the submodules and associated data

48 |

49 | * RefCOCO & ReferItGame Dataset: place the data or the soft link of dataset folder under ``./ln_data/``. We follow dataset structure [DMS](https://github.com/BCV-Uniandes/DMS). To accomplish this, the ``download_dataset.sh`` [bash script](https://github.com/BCV-Uniandes/DMS/blob/master/download_data.sh) from DMS can be used.

50 | ```bash

51 | bash ln_data/download_data.sh --path ./ln_data

52 | ```

53 |

54 |

60 | * Flickr30K Entities Dataset: please download the images for the dataset on the website for the [Flickr30K Entities Dataset](http://bryanplummer.com/Flickr30kEntities/) and the original [Flickr30k Dataset](http://shannon.cs.illinois.edu/DenotationGraph/). Images should be placed under ``./ln_data/Flickr30k/flickr30k_images``.

61 |

62 |

63 | * Data index: download the generated index files and place them as the ``./data`` folder. Availble at [[Gdrive]](https://drive.google.com/open?id=1cZI562MABLtAzM6YU4WmKPFFguuVr0lZ), [[One Drive]](https://uofr-my.sharepoint.com/:f:/g/personal/zyang39_ur_rochester_edu/Epw5WQ_mJ-tOlAbK5LxsnrsBElWwvNdU7aus0UIzWtwgKQ?e=XHQm7F).

64 | ```

65 | rm -r data

66 | tar xf data.tar

67 | ```

68 |

69 | * Model weights: download the pretrained model of [Yolov3](https://pjreddie.com/media/files/yolov3.weights) and place the file in ``./saved_models``.

70 | ```

71 | sh saved_models/yolov3_weights.sh

72 | ```

73 | More pretrained models are availble in the performance table [[Gdrive]](https://drive.google.com/open?id=1-DXvhEbWQtVWAUT_-G19zlz-0Ekcj5d7), [[One Drive]](https://uofr-my.sharepoint.com/:f:/g/personal/zyang39_ur_rochester_edu/ErrXDnw1igFGghwbH5daoKwBX4vtE_erXbOo1JGnraCE4Q?e=tQUCk7) and should also be placed in ``./saved_models``.

74 |

75 |

76 | ### Training

77 | 3. Train the model, run the code under main folder.

78 | Using flag ``--lstm`` to access lstm encoder, Bert is used as the default.

79 | Using flag ``--light`` to access the light model.

80 |

81 | ```

82 | python train_yolo.py --data_root ./ln_data/ --dataset referit \

83 | --gpu gpu_id --batch_size 32 --resume saved_models/lstm_referit_model.pth.tar \

84 | --lr 1e-4 --nb_epoch 100 --lstm

85 | ```

86 |

87 | 4. Evaluate the model, run the code under main folder.

88 | Using flag ``--test`` to access test mode.

89 |

90 | ```

91 | python train_yolo.py --data_root ./ln_data/ --dataset referit \

92 | --gpu gpu_id --resume saved_models/lstm_referit_model.pth.tar \

93 | --lstm --test

94 | ```

95 |

96 | 5. Visulizations. Flag ``--save_plot`` will save visulizations.

97 |

98 |

99 | ## Performance and Pre-trained Models

100 | Please check the detailed experiment settings in our [paper](https://arxiv.org/pdf/1908.06354.pdf).

101 |

102 |

103 |

104 | | Dataset |

105 | Ours-LSTM |

106 | Performance (Accu@0.5) |

107 | Ours-Bert |

108 | Performance (Accu@0.5) |

109 |

110 |

111 |

112 |

113 | | ReferItGame |

114 | Gdrive |

115 | 58.76 |

116 | Gdrive |

117 | 59.30 |

118 |

119 |

120 | | Flickr30K Entities |

121 | One Drive |

122 | 67.62 |

123 | One Drive |

124 | 68.69 |

125 |

126 |

127 | | RefCOCO |

128 |

129 | | val: 73.66 |

130 |

131 | | val: 72.05 |

132 |

133 |

134 | | testA: 75.78 |

135 | testA: 74.81 |

136 |

137 |

138 | | testB: 71.32 |

139 | testB: 67.59 |

140 |

141 |

142 |

143 |

144 |

145 | ### Credits

146 | Part of the code or models are from

147 | [DMS](https://github.com/BCV-Uniandes/DMS),

148 | [MAttNet](https://github.com/lichengunc/MAttNet),

149 | [Yolov3](https://pjreddie.com/darknet/yolo/) and

150 | [Pytorch-yolov3](https://github.com/eriklindernoren/PyTorch-YOLOv3).

151 |

--------------------------------------------------------------------------------

/data/README.md:

--------------------------------------------------------------------------------

1 | Please download cache from [Gdrive](https://drive.google.com/open?id=1i9fjhZ3cmn5YOxlacGMpcxWmrNnRNU4B), or [OneDrive]

--------------------------------------------------------------------------------

/dataset/referit_loader.py:

--------------------------------------------------------------------------------

1 | # -*- coding: utf-8 -*-

2 |

3 | """

4 | ReferIt, UNC, UNC+ and GRef referring image segmentation PyTorch dataset.

5 |

6 | Define and group batches of images, segmentations and queries.

7 | Based on:

8 | https://github.com/chenxi116/TF-phrasecut-public/blob/master/build_batches.py

9 | """

10 |

11 | import os

12 | import sys

13 | import cv2

14 | import json

15 | import uuid

16 | import tqdm

17 | import math

18 | import torch

19 | import random

20 | # import h5py

21 | import numpy as np

22 | import os.path as osp

23 | import scipy.io as sio

24 | import torch.utils.data as data

25 | from collections import OrderedDict

26 | sys.path.append('.')

27 | import utils

28 | from utils import Corpus

29 |

30 | import argparse

31 | import collections

32 | import logging

33 | import json

34 | import re

35 |

36 | from pytorch_pretrained_bert.tokenization import BertTokenizer

37 | from pytorch_pretrained_bert.modeling import BertModel

38 | from utils.transforms import letterbox, random_affine

39 |

40 | sys.modules['utils'] = utils

41 |

42 | cv2.setNumThreads(0)

43 |

44 | def read_examples(input_line, unique_id):

45 | """Read a list of `InputExample`s from an input file."""

46 | examples = []

47 | # unique_id = 0

48 | line = input_line #reader.readline()

49 | # if not line:

50 | # break

51 | line = line.strip()

52 | text_a = None

53 | text_b = None

54 | m = re.match(r"^(.*) \|\|\| (.*)$", line)

55 | if m is None:

56 | text_a = line

57 | else:

58 | text_a = m.group(1)

59 | text_b = m.group(2)

60 | examples.append(

61 | InputExample(unique_id=unique_id, text_a=text_a, text_b=text_b))

62 | # unique_id += 1

63 | return examples

64 |

65 | def bbox_randscale(bbox, miniou=0.75):

66 | w,h = bbox[2]-bbox[0], bbox[3]-bbox[1]

67 | scale_shrink = (1-math.sqrt(miniou))/2.

68 | scale_expand = (math.sqrt(1./miniou)-1)/2.

69 | w1,h1 = random.uniform(-scale_expand, scale_shrink)*w, random.uniform(-scale_expand, scale_shrink)*h

70 | w2,h2 = random.uniform(-scale_shrink, scale_expand)*w, random.uniform(-scale_shrink, scale_expand)*h

71 | bbox[0],bbox[2] = bbox[0]+w1,bbox[2]+w2

72 | bbox[1],bbox[3] = bbox[1]+h1,bbox[3]+h2

73 | return bbox

74 |

75 | ## Bert text encoding

76 | class InputExample(object):

77 | def __init__(self, unique_id, text_a, text_b):

78 | self.unique_id = unique_id

79 | self.text_a = text_a

80 | self.text_b = text_b

81 |

82 | class InputFeatures(object):

83 | """A single set of features of data."""

84 | def __init__(self, unique_id, tokens, input_ids, input_mask, input_type_ids):

85 | self.unique_id = unique_id

86 | self.tokens = tokens

87 | self.input_ids = input_ids

88 | self.input_mask = input_mask

89 | self.input_type_ids = input_type_ids

90 |

91 | def convert_examples_to_features(examples, seq_length, tokenizer):

92 | """Loads a data file into a list of `InputBatch`s."""

93 | features = []

94 | for (ex_index, example) in enumerate(examples):

95 | tokens_a = tokenizer.tokenize(example.text_a)

96 |

97 | tokens_b = None

98 | if example.text_b:

99 | tokens_b = tokenizer.tokenize(example.text_b)

100 |

101 | if tokens_b:

102 | # Modifies `tokens_a` and `tokens_b` in place so that the total

103 | # length is less than the specified length.

104 | # Account for [CLS], [SEP], [SEP] with "- 3"

105 | _truncate_seq_pair(tokens_a, tokens_b, seq_length - 3)

106 | else:

107 | # Account for [CLS] and [SEP] with "- 2"

108 | if len(tokens_a) > seq_length - 2:

109 | tokens_a = tokens_a[0:(seq_length - 2)]

110 | tokens = []

111 | input_type_ids = []

112 | tokens.append("[CLS]")

113 | input_type_ids.append(0)

114 | for token in tokens_a:

115 | tokens.append(token)

116 | input_type_ids.append(0)

117 | tokens.append("[SEP]")

118 | input_type_ids.append(0)

119 |

120 | if tokens_b:

121 | for token in tokens_b:

122 | tokens.append(token)

123 | input_type_ids.append(1)

124 | tokens.append("[SEP]")

125 | input_type_ids.append(1)

126 |

127 | input_ids = tokenizer.convert_tokens_to_ids(tokens)

128 |

129 | # The mask has 1 for real tokens and 0 for padding tokens. Only real

130 | # tokens are attended to.

131 | input_mask = [1] * len(input_ids)

132 |

133 | # Zero-pad up to the sequence length.

134 | while len(input_ids) < seq_length:

135 | input_ids.append(0)

136 | input_mask.append(0)

137 | input_type_ids.append(0)

138 |

139 | assert len(input_ids) == seq_length

140 | assert len(input_mask) == seq_length

141 | assert len(input_type_ids) == seq_length

142 | features.append(

143 | InputFeatures(

144 | unique_id=example.unique_id,

145 | tokens=tokens,

146 | input_ids=input_ids,

147 | input_mask=input_mask,

148 | input_type_ids=input_type_ids))

149 | return features

150 |

151 | class DatasetNotFoundError(Exception):

152 | pass

153 |

154 | class ReferDataset(data.Dataset):

155 | SUPPORTED_DATASETS = {

156 | 'referit': {'splits': ('train', 'val', 'trainval', 'test')},

157 | 'unc': {

158 | 'splits': ('train', 'val', 'trainval', 'testA', 'testB'),

159 | 'params': {'dataset': 'refcoco', 'split_by': 'unc'}

160 | },

161 | 'unc+': {

162 | 'splits': ('train', 'val', 'trainval', 'testA', 'testB'),

163 | 'params': {'dataset': 'refcoco+', 'split_by': 'unc'}

164 | },

165 | 'gref': {

166 | 'splits': ('train', 'val'),

167 | 'params': {'dataset': 'refcocog', 'split_by': 'google'}

168 | },

169 | 'flickr': {

170 | 'splits': ('train', 'val', 'test')}

171 | }

172 |

173 | def __init__(self, data_root, split_root='data', dataset='referit', imsize=256,

174 | transform=None, augment=False, return_idx=False, testmode=False,

175 | split='train', max_query_len=128, lstm=False, bert_model='bert-base-uncased'):

176 | self.images = []

177 | self.data_root = data_root

178 | self.split_root = split_root

179 | self.dataset = dataset

180 | self.imsize = imsize

181 | self.query_len = max_query_len

182 | self.lstm = lstm

183 | self.corpus = Corpus()

184 | self.transform = transform

185 | self.testmode = testmode

186 | self.split = split

187 | self.tokenizer = BertTokenizer.from_pretrained(bert_model, do_lower_case=True)

188 | self.augment=augment

189 | self.return_idx=return_idx

190 |

191 | if self.dataset == 'referit':

192 | self.dataset_root = osp.join(self.data_root, 'referit')

193 | self.im_dir = osp.join(self.dataset_root, 'images')

194 | self.split_dir = osp.join(self.dataset_root, 'splits')

195 | elif self.dataset == 'flickr':

196 | self.dataset_root = osp.join(self.data_root, 'Flickr30k')

197 | self.im_dir = osp.join(self.dataset_root, 'flickr30k_images')

198 | else: ## refcoco, etc.

199 | self.dataset_root = osp.join(self.data_root, 'other')

200 | self.im_dir = osp.join(

201 | self.dataset_root, 'images', 'mscoco', 'images', 'train2014')

202 | self.split_dir = osp.join(self.dataset_root, 'splits')

203 |

204 | if not self.exists_dataset():

205 | # self.process_dataset()

206 | print('Please download index cache to data folder: \n \

207 | https://drive.google.com/open?id=1cZI562MABLtAzM6YU4WmKPFFguuVr0lZ')

208 | exit(0)

209 |

210 | dataset_path = osp.join(self.split_root, self.dataset)

211 | corpus_path = osp.join(dataset_path, 'corpus.pth')

212 | valid_splits = self.SUPPORTED_DATASETS[self.dataset]['splits']

213 |

214 | if split not in valid_splits:

215 | raise ValueError(

216 | 'Dataset {0} does not have split {1}'.format(

217 | self.dataset, split))

218 | self.corpus = torch.load(corpus_path)

219 |

220 | splits = [split]

221 | if self.dataset != 'referit':

222 | splits = ['train', 'val'] if split == 'trainval' else [split]

223 | for split in splits:

224 | imgset_file = '{0}_{1}.pth'.format(self.dataset, split)

225 | imgset_path = osp.join(dataset_path, imgset_file)

226 | self.images += torch.load(imgset_path)

227 |

228 | def exists_dataset(self):

229 | return osp.exists(osp.join(self.split_root, self.dataset))

230 |

231 | def pull_item(self, idx):

232 | if self.dataset == 'flickr':

233 | img_file, bbox, phrase = self.images[idx]

234 | else:

235 | img_file, _, bbox, phrase, attri = self.images[idx]

236 | ## box format: to x1y1x2y2

237 | if not (self.dataset == 'referit' or self.dataset == 'flickr'):

238 | bbox = np.array(bbox, dtype=int)

239 | bbox[2], bbox[3] = bbox[0]+bbox[2], bbox[1]+bbox[3]

240 | else:

241 | bbox = np.array(bbox, dtype=int)

242 |

243 | img_path = osp.join(self.im_dir, img_file)

244 | img = cv2.imread(img_path)

245 | ## duplicate channel if gray image

246 | if img.shape[-1] > 1:

247 | img = cv2.cvtColor(img, cv2.COLOR_BGR2RGB)

248 | else:

249 | img = np.stack([img] * 3)

250 | return img, phrase, bbox

251 |

252 | def tokenize_phrase(self, phrase):

253 | return self.corpus.tokenize(phrase, self.query_len)

254 |

255 | def untokenize_word_vector(self, words):

256 | return self.corpus.dictionary[words]

257 |

258 | def __len__(self):

259 | return len(self.images)

260 |

261 | def __getitem__(self, idx):

262 | img, phrase, bbox = self.pull_item(idx)

263 | # phrase = phrase.decode("utf-8").encode().lower()

264 | phrase = phrase.lower()

265 | if self.augment:

266 | augment_flip, augment_hsv, augment_affine = True,True,True

267 |

268 | ## seems a bug in torch transformation resize, so separate in advance

269 | h,w = img.shape[0], img.shape[1]

270 | if self.augment:

271 | ## random horizontal flip

272 | if augment_flip and random.random() > 0.5:

273 | img = cv2.flip(img, 1)

274 | bbox[0], bbox[2] = w-bbox[2]-1, w-bbox[0]-1

275 | phrase = phrase.replace('right','*&^special^&*').replace('left','right').replace('*&^special^&*','left')

276 | ## random intensity, saturation change

277 | if augment_hsv:

278 | fraction = 0.50

279 | img_hsv = cv2.cvtColor(cv2.cvtColor(img, cv2.COLOR_RGB2BGR), cv2.COLOR_BGR2HSV)

280 | S = img_hsv[:, :, 1].astype(np.float32)

281 | V = img_hsv[:, :, 2].astype(np.float32)

282 | a = (random.random() * 2 - 1) * fraction + 1

283 | if a > 1:

284 | np.clip(S, a_min=0, a_max=255, out=S)

285 | a = (random.random() * 2 - 1) * fraction + 1

286 | V *= a

287 | if a > 1:

288 | np.clip(V, a_min=0, a_max=255, out=V)

289 |

290 | img_hsv[:, :, 1] = S.astype(np.uint8)

291 | img_hsv[:, :, 2] = V.astype(np.uint8)

292 | img = cv2.cvtColor(cv2.cvtColor(img_hsv, cv2.COLOR_HSV2BGR), cv2.COLOR_BGR2RGB)

293 | img, _, ratio, dw, dh = letterbox(img, None, self.imsize)

294 | bbox[0], bbox[2] = bbox[0]*ratio+dw, bbox[2]*ratio+dw

295 | bbox[1], bbox[3] = bbox[1]*ratio+dh, bbox[3]*ratio+dh

296 | ## random affine transformation

297 | if augment_affine:

298 | img, _, bbox, M = random_affine(img, None, bbox, \

299 | degrees=(-5, 5), translate=(0.10, 0.10), scale=(0.90, 1.10))

300 | else: ## should be inference, or specified training

301 | img, _, ratio, dw, dh = letterbox(img, None, self.imsize)

302 | bbox[0], bbox[2] = bbox[0]*ratio+dw, bbox[2]*ratio+dw

303 | bbox[1], bbox[3] = bbox[1]*ratio+dh, bbox[3]*ratio+dh

304 |

305 | ## Norm, to tensor

306 | if self.transform is not None:

307 | img = self.transform(img)

308 | if self.lstm:

309 | phrase = self.tokenize_phrase(phrase)

310 | word_id = phrase

311 | word_mask = np.zeros(word_id.shape)

312 | else:

313 | ## encode phrase to bert input

314 | examples = read_examples(phrase, idx)

315 | features = convert_examples_to_features(

316 | examples=examples, seq_length=self.query_len, tokenizer=self.tokenizer)

317 | word_id = features[0].input_ids

318 | word_mask = features[0].input_mask

319 | if self.testmode:

320 | return img, np.array(word_id, dtype=int), np.array(word_mask, dtype=int), \

321 | np.array(bbox, dtype=np.float32), np.array(ratio, dtype=np.float32), \

322 | np.array(dw, dtype=np.float32), np.array(dh, dtype=np.float32), self.images[idx][0]

323 | else:

324 | return img, np.array(word_id, dtype=int), np.array(word_mask, dtype=int), \

325 | np.array(bbox, dtype=np.float32)

326 |

327 | if __name__ == '__main__':

328 | import nltk

329 | import argparse

330 | from torch.utils.data import DataLoader

331 | from torchvision.transforms import Compose, ToTensor, Normalize

332 | # from utils.transforms import ResizeImage, ResizeAnnotation

333 | parser = argparse.ArgumentParser(

334 | description='Dataloader test')

335 | parser.add_argument('--size', default=416, type=int,

336 | help='image size')

337 | parser.add_argument('--data', type=str, default='./ln_data/',

338 | help='path to ReferIt splits data folder')

339 | parser.add_argument('--dataset', default='referit', type=str,

340 | help='referit/flickr/unc/unc+/gref')

341 | parser.add_argument('--split', default='train', type=str,

342 | help='name of the dataset split used to train')

343 | parser.add_argument('--time', default=20, type=int,

344 | help='maximum time steps (lang length) per batch')

345 | args = parser.parse_args()

346 |

347 | torch.manual_seed(13)

348 | np.random.seed(13)

349 | torch.backends.cudnn.deterministic = True

350 | torch.backends.cudnn.benchmark = False

351 |

352 | input_transform = Compose([

353 | ToTensor(),

354 | Normalize(

355 | mean=[0.485, 0.456, 0.406],

356 | std=[0.229, 0.224, 0.225])

357 | ])

358 |

359 | refer_val = ReferDataset(data_root=args.data,

360 | dataset=args.dataset,

361 | split='val',

362 | imsize = args.size,

363 | transform=input_transform,

364 | max_query_len=args.time,

365 | testmode=True)

366 | val_loader = DataLoader(refer_val, batch_size=8, shuffle=False,

367 | pin_memory=False, num_workers=0)

368 |

369 |

370 | bbox_list=[]

371 | for batch_idx, (imgs, masks, word_id, word_mask, bbox) in enumerate(val_loader):

372 | bboxes = (bbox[:,2:]-bbox[:,:2]).numpy().tolist()

373 | for bbox in bboxes:

374 | bbox_list.append(bbox)

375 | if batch_idx%10000==0 and batch_idx!=0:

376 | print(batch_idx)

--------------------------------------------------------------------------------

/ln_data/README.md:

--------------------------------------------------------------------------------

1 | # Data Folder

2 | * RefCOCO & ReferItGame Dataset: place the soft link of dataset folder under the current folder. We follow dataset structure [DMS](https://github.com/BCV-Uniandes/DMS). To accomplish this, the ``download_dataset.sh`` [bash script](https://github.com/BCV-Uniandes/DMS/blob/master/download_data.sh) from DMS can be used.

3 | ```bash

4 | bash download_data --path .

5 | ```

6 |

7 |

11 | * Flickr30K Entities Dataset: please download the images for the dataset on the website for the [Flickr30K Entities Dataset](http://bryanplummer.com/Flickr30kEntities/) and the original [Flickr30k Dataset](http://shannon.cs.illinois.edu/DenotationGraph/). Images should be placed under ``./Flickr30k/flickr30k_images``.

12 |

13 | * Data index: download the generated index files and place them in the ``../data`` folder. Availble at [[Gdrive]](https://drive.google.com/open?id=1cZI562MABLtAzM6YU4WmKPFFguuVr0lZ), [[One Drive]](https://uofr-my.sharepoint.com/:f:/g/personal/zyang39_ur_rochester_edu/Epw5WQ_mJ-tOlAbK5LxsnrsBElWwvNdU7aus0UIzWtwgKQ?e=XHQm7F).

14 | ```

15 | cd ..

16 | rm -r data

17 | tar xf data.tar

18 | ```

--------------------------------------------------------------------------------

/ln_data/download_data.sh:

--------------------------------------------------------------------------------

1 | #!/bin/bash

2 |

3 | version="0.1"

4 |

5 | # This is an optional arguments-only example of Argbash potential

6 | #

7 | # ARG_OPTIONAL_SINGLE([path],[p],[path onto which files are to be downloaded],[data])

8 | # ARG_VERSION([echo test v$version])

9 | # ARG_HELP([The general script's help msg])

10 | # ARGBASH_GO()

11 | # needed because of Argbash --> m4_ignore([

12 | ### START OF CODE GENERATED BY Argbash v2.5.0 one line above ###

13 | # Argbash is a bash code generator used to get arguments parsing right.

14 | # Argbash is FREE SOFTWARE, see https://argbash.io for more info

15 | # Generated online by https://argbash.io/generate

16 |

17 | die()

18 | {

19 | local _ret=$2

20 | test -n "$_ret" || _ret=1

21 | test "$_PRINT_HELP" = yes && print_help >&2

22 | echo "$1" >&2

23 | exit ${_ret}

24 | }

25 |

26 | begins_with_short_option()

27 | {

28 | local first_option all_short_options

29 | all_short_options='pvh'

30 | first_option="${1:0:1}"

31 | test "$all_short_options" = "${all_short_options/$first_option/}" && return 1 || return 0

32 | }

33 |

34 |

35 |

36 | # THE DEFAULTS INITIALIZATION - OPTIONALS

37 | _arg_path="referit_data"

38 |

39 | print_help ()

40 | {

41 | printf "%s\n" "download ReferIt data script"

42 | printf 'Usage: %s [-p|--path ] [-v|--version] [-h|--help]\n' "$0"

43 | printf "\t%s\n" "-p,--path: path onto which files are to be downloaded (default: '"referit_data"')"

44 | printf "\t%s\n" "-v,--version: Prints version"

45 | printf "\t%s\n" "-h,--help: Prints help"

46 | }

47 |

48 | parse_commandline ()

49 | {

50 | while test $# -gt 0

51 | do

52 | _key="$1"

53 | case "$_key" in

54 | -p|--path)

55 | test $# -lt 2 && die "Missing value for the optional argument '$_key'." 1

56 | _arg_path="$2"

57 | shift

58 | ;;

59 | --path=*)

60 | _arg_path="${_key##--path=}"

61 | ;;

62 | -p*)

63 | _arg_path="${_key##-p}"

64 | ;;

65 | -v|--version)

66 | echo test v$version

67 | exit 0

68 | ;;

69 | -v*)

70 | echo test v$version

71 | exit 0

72 | ;;

73 | -h|--help)

74 | print_help

75 | exit 0

76 | ;;

77 | -h*)

78 | print_help

79 | exit 0

80 | ;;

81 | *)

82 | _PRINT_HELP=yes die "FATAL ERROR: Got an unexpected argument '$1'" 1

83 | ;;

84 | esac

85 | shift

86 | done

87 | }

88 |

89 | parse_commandline "$@"

90 |

91 | # OTHER STUFF GENERATED BY Argbash

92 |

93 | ### END OF CODE GENERATED BY Argbash (sortof) ### ])

94 | # [ <-- needed because of Argbash

95 |

96 |

97 | echo "Save data to: $_arg_path"

98 |

99 |

100 | REFERIT_SPLITS_URL="https://s3-sa-east-1.amazonaws.com/query-objseg/referit_splits.tar.bz2"

101 | REFERIT_DATA_URL="http://www.eecs.berkeley.edu/~ronghang/projects/cvpr16_text_obj_retrieval/referitdata.tar.gz"

102 | COCO_DATA_URL="http://images.cocodataset.org/zips/train2014.zip"

103 |

104 | REFCOCO_URL="http://bvisionweb1.cs.unc.edu/licheng/referit/data/refcoco.zip"

105 | REFCOCO_PLUS_URL="http://bvisionweb1.cs.unc.edu/licheng/referit/data/refcoco+.zip"

106 | REFCOCOG_URL="http://bvisionweb1.cs.unc.edu/licheng/referit/data/refcocog.zip"

107 |

108 | REFERIT_FILE=${REFERIT_DATA_URL#*cvpr16_text_obj_retrieval/}

109 | SPLIT_FILE=${REFERIT_SPLITS_URL#*query-objseg/}

110 | COCO_FILE=${COCO_DATA_URL#*zips/}

111 |

112 |

113 | if [ ! -d $_arg_path ]; then

114 | mkdir $_arg_path

115 | fi

116 | cd $_arg_path

117 |

118 | mkdir referit

119 | cd referit

120 |

121 | printf "Downloading ReferIt dataset (This may take a while...)"

122 | aria2c -x 8 $REFERIT_DATA_URL

123 |

124 |

125 | printf "Uncompressing data..."

126 | tar -xzvf $REFERIT_FILE

127 | rm $REFERIT_FILE

128 |

129 | mkdir splits

130 | cd splits

131 |

132 | printf "Downloading ReferIt Splits..."

133 | aria2c -x 8 $REFERIT_SPLITS_URL

134 |

135 | tar -xjvf $SPLIT_FILE

136 | rm $SPLIT_FILE

137 |

138 | cd ../..

139 |

140 | mkdir -p other/images/mscoco/images

141 | cd other/images/mscoco/images

142 |

143 | printf "Downloading MS COCO 2014 train images (This may take a while...)"

144 | aria2c -x 8 $COCO_DATA_URL

145 |

146 | unzip $COCO_FILE

147 | rm $COCO_FILE

148 |

149 | cd ../../..

150 | printf "Downloading refcoco, refcocog and refcoco+ splits..."

151 | aria2c -x 8 $REFCOCO_URL

152 | aria2c -x 8 $REFCOCO_PLUS_URL

153 | aria2c -x 8 $REFCOCOG_URL

154 |

155 | unzip "*.zip"

156 | rm *.zip

--------------------------------------------------------------------------------

/model/darknet.py:

--------------------------------------------------------------------------------

1 | from __future__ import division

2 |

3 | import math

4 | import torch

5 | import torch.nn as nn

6 | import torch.nn.functional as F

7 | from torch.autograd import Variable

8 | import numpy as np

9 | from collections import defaultdict, OrderedDict

10 |

11 | from PIL import Image

12 |

13 | # from utils.parse_config import *

14 | from utils.utils import *

15 | # import matplotlib.pyplot as plt

16 | # import matplotlib.patches as patches

17 |

18 | exist_id = [1, 2, 3, 4, 5, 6, 7, 8, 9, 10, \

19 | 11, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, \

20 | 23, 24, 25, 27, 28, 31, 32, 33, 34, 35, 36, \

21 | 37, 38, 39, 40, 41, 42, 43, 44, 46, 47, 48, \

22 | 49, 50, 51, 52, 53, 54, 55, 56, 57, 58, 59, \

23 | 60, 61, 62, 63, 64, 65, 67, 70, 72, 73, 74, \

24 | 75, 76, 77, 78, 79, 80, 81, 82, 84, 85, 86, \

25 | 87, 88, 89, 90]

26 | catmap_dict = OrderedDict()

27 | for ii in range(len(exist_id)):

28 | catmap_dict[exist_id[ii]] = ii

29 |

30 | def build_object_targets(

31 | pred_boxes, pred_conf, pred_cls, target, anchors, num_anchors, num_classes, grid_size, ignore_thres, img_dim

32 | ):

33 | nB = target.size(0)

34 | nA = num_anchors

35 | nC = num_classes

36 | nG = grid_size

37 | mask = torch.zeros(nB, nA, nG, nG)

38 | conf_mask = torch.ones(nB, nA, nG, nG)

39 | tx = torch.zeros(nB, nA, nG, nG)

40 | ty = torch.zeros(nB, nA, nG, nG)

41 | tw = torch.zeros(nB, nA, nG, nG)

42 | th = torch.zeros(nB, nA, nG, nG)

43 | tconf = torch.ByteTensor(nB, nA, nG, nG).fill_(0)

44 | tcls = torch.ByteTensor(nB, nA, nG, nG, nC).fill_(0)

45 |

46 | nGT = 0

47 | nCorrect = 0

48 | for b in range(nB):

49 | for t in range(target.shape[1]):

50 | if target[b, t].sum() == 0:

51 | continue

52 | nGT += 1

53 | # Convert to position relative to box

54 | gx = target[b, t, 1] * nG

55 | gy = target[b, t, 2] * nG

56 | gw = target[b, t, 3] * nG

57 | gh = target[b, t, 4] * nG

58 | # Get grid box indices

59 | gi = int(gx)

60 | gj = int(gy)

61 | # Get shape of gt box

62 | gt_box = torch.FloatTensor(np.array([0, 0, gw, gh])).unsqueeze(0)

63 | # Get shape of anchor box

64 | anchor_shapes = torch.FloatTensor(np.concatenate((np.zeros((len(anchors), 2)), np.array(anchors)), 1))

65 | # Calculate iou between gt and anchor shapes

66 | anch_ious = bbox_iou(gt_box, anchor_shapes)

67 | # Where the overlap is larger than threshold set mask to zero (ignore)

68 | conf_mask[b, anch_ious > ignore_thres, gj, gi] = 0

69 | # Find the best matching anchor box

70 | best_n = np.argmax(anch_ious)

71 | # Get ground truth box

72 | gt_box = torch.FloatTensor(np.array([gx, gy, gw, gh])).unsqueeze(0)

73 | # Get the best prediction

74 | pred_box = pred_boxes[b, best_n, gj, gi].unsqueeze(0)

75 | # Masks

76 | mask[b, best_n, gj, gi] = 1

77 | conf_mask[b, best_n, gj, gi] = 1

78 | # Coordinates

79 | tx[b, best_n, gj, gi] = gx - gi

80 | ty[b, best_n, gj, gi] = gy - gj

81 | # Width and height

82 | tw[b, best_n, gj, gi] = math.log(gw / anchors[best_n][0] + 1e-16)

83 | th[b, best_n, gj, gi] = math.log(gh / anchors[best_n][1] + 1e-16)

84 | # One-hot encoding of label

85 | target_label = int(target[b, t, 0])

86 | target_label = catmap_dict[target_label]

87 | tcls[b, best_n, gj, gi, target_label] = 1

88 | tconf[b, best_n, gj, gi] = 1

89 |

90 | # Calculate iou between ground truth and best matching prediction

91 | iou = bbox_iou(gt_box, pred_box, x1y1x2y2=False)

92 | pred_label = torch.argmax(pred_cls[b, best_n, gj, gi])

93 | score = pred_conf[b, best_n, gj, gi]

94 | if iou > 0.5 and pred_label == target_label and score > 0.5:

95 | nCorrect += 1

96 |

97 | return nGT, nCorrect, mask, conf_mask, tx, ty, tw, th, tconf, tcls

98 |

99 | def parse_model_config(path):

100 | """Parses the yolo-v3 layer configuration file and returns module definitions"""

101 | file = open(path, 'r')

102 | lines = file.read().split('\n')

103 | lines = [x for x in lines if x and not x.startswith('#')]

104 | lines = [x.rstrip().lstrip() for x in lines] # get rid of fringe whitespaces

105 | module_defs = []

106 | for line in lines:

107 | if line.startswith('['): # This marks the start of a new block

108 | module_defs.append({})

109 | module_defs[-1]['type'] = line[1:-1].rstrip()

110 | if module_defs[-1]['type'] == 'convolutional' or module_defs[-1]['type'] == 'yoloconvolutional':

111 | module_defs[-1]['batch_normalize'] = 0

112 | else:

113 | key, value = line.split("=")

114 | value = value.strip()

115 | module_defs[-1][key.rstrip()] = value.strip()

116 | return module_defs

117 |

118 | class ConvBatchNormReLU(nn.Sequential):

119 | def __init__(

120 | self,

121 | in_channels,

122 | out_channels,

123 | kernel_size,

124 | stride,

125 | padding,

126 | dilation,

127 | leaky=False,

128 | relu=True,

129 | ):

130 | super(ConvBatchNormReLU, self).__init__()

131 | self.add_module(

132 | "conv",

133 | nn.Conv2d(

134 | in_channels=in_channels,

135 | out_channels=out_channels,

136 | kernel_size=kernel_size,

137 | stride=stride,

138 | padding=padding,

139 | dilation=dilation,

140 | bias=False,

141 | ),

142 | )

143 | self.add_module(

144 | "bn",

145 | nn.BatchNorm2d(

146 | num_features=out_channels, eps=1e-5, momentum=0.999, affine=True

147 | ),

148 | )

149 |

150 | if leaky:

151 | self.add_module("relu", nn.LeakyReLU(0.1))

152 | elif relu:

153 | self.add_module("relu", nn.ReLU())

154 |

155 | def forward(self, x):

156 | return super(ConvBatchNormReLU, self).forward(x)

157 |

158 | class MyUpsample2(nn.Module):

159 | def forward(self, x):

160 | return x[:, :, :, None, :, None].expand(-1, -1, -1, 2, -1, 2).reshape(x.size(0), x.size(1), x.size(2)*2, x.size(3)*2)

161 |

162 | def create_modules(module_defs):

163 | """

164 | Constructs module list of layer blocks from module configuration in module_defs

165 | """

166 | hyperparams = module_defs.pop(0)

167 | output_filters = [int(hyperparams["channels"])]

168 | module_list = nn.ModuleList()

169 | for i, module_def in enumerate(module_defs):

170 | modules = nn.Sequential()

171 |

172 | if module_def["type"] == "convolutional" or module_def["type"] == "yoloconvolutional":

173 | bn = int(module_def["batch_normalize"])

174 | filters = int(module_def["filters"])

175 | kernel_size = int(module_def["size"])

176 | pad = (kernel_size - 1) // 2 if int(module_def["pad"]) else 0

177 | modules.add_module(

178 | "conv_%d" % i,

179 | nn.Conv2d(

180 | in_channels=output_filters[-1],

181 | out_channels=filters,

182 | kernel_size=kernel_size,

183 | stride=int(module_def["stride"]),

184 | padding=pad,

185 | bias=not bn,

186 | ),

187 | )

188 | if bn:

189 | modules.add_module("batch_norm_%d" % i, nn.BatchNorm2d(filters))

190 | if module_def["activation"] == "leaky":

191 | modules.add_module("leaky_%d" % i, nn.LeakyReLU(0.1))

192 |

193 | elif module_def["type"] == "maxpool":

194 | kernel_size = int(module_def["size"])

195 | stride = int(module_def["stride"])

196 | if kernel_size == 2 and stride == 1:

197 | padding = nn.ZeroPad2d((0, 1, 0, 1))

198 | modules.add_module("_debug_padding_%d" % i, padding)

199 | maxpool = nn.MaxPool2d(

200 | kernel_size=int(module_def["size"]),

201 | stride=int(module_def["stride"]),

202 | padding=int((kernel_size - 1) // 2),

203 | )

204 | modules.add_module("maxpool_%d" % i, maxpool)

205 |

206 | elif module_def["type"] == "upsample":

207 | # upsample = nn.Upsample(scale_factor=int(module_def["stride"]), mode="nearest")

208 | assert(int(module_def["stride"])==2)

209 | upsample = MyUpsample2()

210 | modules.add_module("upsample_%d" % i, upsample)

211 |

212 | elif module_def["type"] == "route":

213 | layers = [int(x) for x in module_def["layers"].split(",")]

214 | filters = sum([output_filters[layer_i] for layer_i in layers])

215 | modules.add_module("route_%d" % i, EmptyLayer())

216 |

217 | elif module_def["type"] == "shortcut":

218 | filters = output_filters[int(module_def["from"])]

219 | modules.add_module("shortcut_%d" % i, EmptyLayer())

220 |

221 | elif module_def["type"] == "yolo":

222 | anchor_idxs = [int(x) for x in module_def["mask"].split(",")]

223 | # Extract anchors

224 | anchors = [int(x) for x in module_def["anchors"].split(",")]

225 | anchors = [(anchors[i], anchors[i + 1]) for i in range(0, len(anchors), 2)]

226 | anchors = [anchors[i] for i in anchor_idxs]

227 | num_classes = int(module_def["classes"])

228 | img_height = int(hyperparams["height"])

229 | # Define detection layer

230 | # yolo_layer = YOLOLayer(anchors, num_classes, img_height)

231 | yolo_layer = YOLOLayer(anchors, num_classes, 256)

232 | modules.add_module("yolo_%d" % i, yolo_layer)

233 | # Register module list and number of output filters

234 | module_list.append(modules)

235 | output_filters.append(filters)

236 |

237 | return hyperparams, module_list

238 |

239 | class EmptyLayer(nn.Module):

240 | """Placeholder for 'route' and 'shortcut' layers"""

241 |

242 | def __init__(self):

243 | super(EmptyLayer, self).__init__()

244 |

245 | class YOLOLayer(nn.Module):

246 | """Detection layer"""

247 |

248 | def __init__(self, anchors, num_classes, img_dim):

249 | super(YOLOLayer, self).__init__()

250 | self.anchors = anchors

251 | self.num_anchors = len(anchors)

252 | self.num_classes = num_classes

253 | self.bbox_attrs = 5 + num_classes

254 | self.image_dim = img_dim

255 | self.ignore_thres = 0.5

256 | self.lambda_coord = 1

257 |

258 | self.mse_loss = nn.MSELoss(size_average=True) # Coordinate loss

259 | self.bce_loss = nn.BCELoss(size_average=True) # Confidence loss

260 | self.ce_loss = nn.CrossEntropyLoss() # Class loss

261 |

262 | def forward(self, x, targets=None):

263 | nA = self.num_anchors

264 | nB = x.size(0)

265 | nG = x.size(2)

266 | stride = self.image_dim / nG

267 |

268 | # Tensors for cuda support

269 | FloatTensor = torch.cuda.FloatTensor if x.is_cuda else torch.FloatTensor

270 | LongTensor = torch.cuda.LongTensor if x.is_cuda else torch.LongTensor

271 | ByteTensor = torch.cuda.ByteTensor if x.is_cuda else torch.ByteTensor

272 |

273 | prediction = x.view(nB, nA, self.bbox_attrs, nG, nG).permute(0, 1, 3, 4, 2).contiguous()

274 |

275 | # Get outputs

276 | x = torch.sigmoid(prediction[..., 0]) # Center x

277 | y = torch.sigmoid(prediction[..., 1]) # Center y

278 | w = prediction[..., 2] # Width

279 | h = prediction[..., 3] # Height

280 | pred_conf = torch.sigmoid(prediction[..., 4]) # Conf

281 | pred_cls = torch.sigmoid(prediction[..., 5:]) # Cls pred.

282 |

283 | # Calculate offsets for each grid

284 | grid_x = torch.arange(nG).repeat(nG, 1).view([1, 1, nG, nG]).type(FloatTensor)

285 | grid_y = torch.arange(nG).repeat(nG, 1).t().view([1, 1, nG, nG]).type(FloatTensor)

286 | # scaled_anchors = FloatTensor([(a_w / stride, a_h / stride) for a_w, a_h in self.anchors])

287 | scaled_anchors = FloatTensor([(a_w / (416 / nG), a_h / (416 / nG)) for a_w, a_h in self.anchors])

288 | anchor_w = scaled_anchors[:, 0:1].view((1, nA, 1, 1))

289 | anchor_h = scaled_anchors[:, 1:2].view((1, nA, 1, 1))

290 |

291 | # Add offset and scale with anchors

292 | pred_boxes = FloatTensor(prediction[..., :4].shape)

293 | pred_boxes[..., 0] = x.data + grid_x

294 | pred_boxes[..., 1] = y.data + grid_y

295 | pred_boxes[..., 2] = torch.exp(w.data) * anchor_w

296 | pred_boxes[..., 3] = torch.exp(h.data) * anchor_h

297 |

298 | # Training

299 | if targets is not None:

300 | targets = targets.clone()

301 | targets[:,:,1:] = targets[:,:,1:]/self.image_dim

302 | for b_i in range(targets.shape[0]):

303 | targets[b_i,:,1:] = xyxy2xywh(targets[b_i,:,1:])

304 |

305 | if x.is_cuda:

306 | self.mse_loss = self.mse_loss.cuda()

307 | self.bce_loss = self.bce_loss.cuda()

308 | self.ce_loss = self.ce_loss.cuda()

309 |

310 | nGT, nCorrect, mask, conf_mask, tx, ty, tw, th, tconf, tcls = build_object_targets(

311 | pred_boxes=pred_boxes.cpu().data,

312 | pred_conf=pred_conf.cpu().data,

313 | pred_cls=pred_cls.cpu().data,

314 | target=targets.cpu().data,

315 | anchors=scaled_anchors.cpu().data,

316 | num_anchors=nA,

317 | num_classes=self.num_classes,

318 | grid_size=nG,

319 | ignore_thres=self.ignore_thres,

320 | img_dim=self.image_dim,

321 | )

322 |

323 | nProposals = int((pred_conf > 0.5).sum().item())

324 | recall = float(nCorrect / nGT) if nGT else 1

325 | precision = float(nCorrect / nProposals) if nProposals else 0

326 |

327 | # Handle masks

328 | mask = Variable(mask.type(ByteTensor))

329 | conf_mask = Variable(conf_mask.type(ByteTensor))

330 |

331 | # Handle target variables

332 | tx = Variable(tx.type(FloatTensor), requires_grad=False)

333 | ty = Variable(ty.type(FloatTensor), requires_grad=False)

334 | tw = Variable(tw.type(FloatTensor), requires_grad=False)

335 | th = Variable(th.type(FloatTensor), requires_grad=False)

336 | tconf = Variable(tconf.type(FloatTensor), requires_grad=False)

337 | tcls = Variable(tcls.type(LongTensor), requires_grad=False)

338 |

339 | # Get conf mask where gt and where there is no gt

340 | conf_mask_true = mask

341 | conf_mask_false = conf_mask - mask

342 |

343 | # Mask outputs to ignore non-existing objects

344 | loss_x = self.mse_loss(x[mask], tx[mask])

345 | loss_y = self.mse_loss(y[mask], ty[mask])

346 | loss_w = self.mse_loss(w[mask], tw[mask])

347 | loss_h = self.mse_loss(h[mask], th[mask])

348 | loss_conf = self.bce_loss(pred_conf[conf_mask_false], tconf[conf_mask_false]) + self.bce_loss(

349 | pred_conf[conf_mask_true], tconf[conf_mask_true]

350 | )

351 | loss_cls = (1 / nB) * self.ce_loss(pred_cls[mask], torch.argmax(tcls[mask], 1))

352 | loss = loss_x + loss_y + loss_w + loss_h + loss_conf + loss_cls

353 | return (

354 | loss,

355 | loss_x.item(),

356 | loss_y.item(),

357 | loss_w.item(),

358 | loss_h.item(),

359 | loss_conf.item(),

360 | loss_cls.item(),

361 | recall,

362 | precision,

363 | )

364 |

365 | else:

366 | # If not in training phase return predictions

367 | output = torch.cat(

368 | (

369 | pred_boxes.view(nB, -1, 4) * stride,

370 | pred_conf.view(nB, -1, 1),

371 | pred_cls.view(nB, -1, self.num_classes),

372 | ),

373 | -1,

374 | )

375 | return output

376 |

377 | class Darknet(nn.Module):

378 | """YOLOv3 object detection model"""

379 |

380 | def __init__(self, config_path='./model/yolov3.cfg', img_size=416, obj_out=False):

381 | super(Darknet, self).__init__()

382 | self.config_path = config_path

383 | self.obj_out = obj_out

384 | self.module_defs = parse_model_config(config_path)

385 | self.hyperparams, self.module_list = create_modules(self.module_defs)

386 | self.img_size = img_size

387 | self.seen = 0

388 | self.header_info = np.array([0, 0, 0, self.seen, 0])

389 | self.loss_names = ["x", "y", "w", "h", "conf", "cls", "recall", "precision"]

390 |

391 | def forward(self, x, targets=None):

392 | batch = x.shape[0]

393 | is_training = targets is not None

394 | output, output_obj = [], []

395 | self.losses = defaultdict(float)

396 | layer_outputs = []

397 | for i, (module_def, module) in enumerate(zip(self.module_defs, self.module_list)):

398 | if module_def["type"] in ["convolutional", "upsample", "maxpool"]:

399 | x = module(x)

400 | elif module_def["type"] == "route":

401 | layer_i = [int(x) for x in module_def["layers"].split(",")]

402 | x = torch.cat([layer_outputs[i] for i in layer_i], 1)

403 | elif module_def["type"] == "shortcut":

404 | layer_i = int(module_def["from"])

405 | x = layer_outputs[-1] + layer_outputs[layer_i]

406 | elif module_def["type"] == "yoloconvolutional":

407 | output.append(x) ## save final feature block

408 | x = module(x)

409 | elif module_def["type"] == "yolo":

410 | # Train phase: get loss

411 | if is_training:

412 | x, *losses = module[0](x, targets)

413 | for name, loss in zip(self.loss_names, losses):

414 | self.losses[name] += loss

415 | # Test phase: Get detections

416 | else:

417 | x = module(x)

418 | output_obj.append(x)

419 | # x = module(x)

420 | # output.append(x)

421 | layer_outputs.append(x)

422 |

423 | self.losses["recall"] /= 3

424 | self.losses["precision"] /= 3

425 | # return sum(output) if is_training else torch.cat(output, 1)

426 | # return torch.cat(output, 1)

427 | if self.obj_out:

428 | return output, sum(output_obj) if is_training else torch.cat(output_obj, 1), self.losses["precision"], self.losses["recall"]

429 | # return output, sum(output_obj)/(len(output_obj)*batch) if is_training else torch.cat(output_obj, 1)

430 | else:

431 | return output

432 |

433 | def load_weights(self, weights_path):

434 | """Parses and loads the weights stored in 'weights_path'"""

435 |

436 | # Open the weights file

437 | fp = open(weights_path, "rb")

438 | if self.config_path=='./model/yolo9000.cfg':

439 | header = np.fromfile(fp, dtype=np.int32, count=4) # First five are header values

440 | else:

441 | header = np.fromfile(fp, dtype=np.int32, count=5) # First five are header values

442 | # Needed to write header when saving weights

443 | self.header_info = header

444 |

445 | self.seen = header[3]

446 | weights = np.fromfile(fp, dtype=np.float32) # The rest are weights

447 | fp.close()

448 |

449 | ptr = 0

450 | for i, (module_def, module) in enumerate(zip(self.module_defs, self.module_list)):

451 | if module_def["type"] == "convolutional" or module_def["type"] == "yoloconvolutional":

452 | conv_layer = module[0]

453 | if module_def["batch_normalize"]:

454 | # Load BN bias, weights, running mean and running variance

455 | bn_layer = module[1]

456 | num_b = bn_layer.bias.numel() # Number of biases

457 | # Bias

458 | bn_b = torch.from_numpy(weights[ptr : ptr + num_b]).view_as(bn_layer.bias)

459 | bn_layer.bias.data.copy_(bn_b)

460 | ptr += num_b

461 | # Weight

462 | bn_w = torch.from_numpy(weights[ptr : ptr + num_b]).view_as(bn_layer.weight)

463 | bn_layer.weight.data.copy_(bn_w)

464 | ptr += num_b

465 | # Running Mean

466 | bn_rm = torch.from_numpy(weights[ptr : ptr + num_b]).view_as(bn_layer.running_mean)

467 | bn_layer.running_mean.data.copy_(bn_rm)

468 | ptr += num_b

469 | # Running Var

470 | bn_rv = torch.from_numpy(weights[ptr : ptr + num_b]).view_as(bn_layer.running_var)

471 | bn_layer.running_var.data.copy_(bn_rv)

472 | ptr += num_b

473 | else:

474 | # Load conv. bias

475 | num_b = conv_layer.bias.numel()

476 | conv_b = torch.from_numpy(weights[ptr : ptr + num_b]).view_as(conv_layer.bias)

477 | conv_layer.bias.data.copy_(conv_b)

478 | ptr += num_b

479 | # Load conv. weights

480 | num_w = conv_layer.weight.numel()

481 | conv_w = torch.from_numpy(weights[ptr : ptr + num_w]).view_as(conv_layer.weight)

482 | conv_layer.weight.data.copy_(conv_w)

483 | ptr += num_w

484 |

485 | """

486 | @:param path - path of the new weights file

487 | @:param cutoff - save layers between 0 and cutoff (cutoff = -1 -> all are saved)

488 | """

489 |

490 | def save_weights(self, path, cutoff=-1):

491 |

492 | fp = open(path, "wb")

493 | self.header_info[3] = self.seen

494 | self.header_info.tofile(fp)

495 |

496 | # Iterate through layers

497 | for i, (module_def, module) in enumerate(zip(self.module_defs[:cutoff], self.module_list[:cutoff])):

498 | if module_def["type"] == "convolutional":

499 | conv_layer = module[0]

500 | # If batch norm, load bn first

501 | if module_def["batch_normalize"]:

502 | bn_layer = module[1]

503 | bn_layer.bias.data.cpu().numpy().tofile(fp)

504 | bn_layer.weight.data.cpu().numpy().tofile(fp)

505 | bn_layer.running_mean.data.cpu().numpy().tofile(fp)

506 | bn_layer.running_var.data.cpu().numpy().tofile(fp)

507 | # Load conv bias

508 | else:

509 | conv_layer.bias.data.cpu().numpy().tofile(fp)

510 | # Load conv weights

511 | conv_layer.weight.data.cpu().numpy().tofile(fp)

512 |

513 | fp.close

514 |

515 |

516 | if __name__ == "__main__":

517 | import torch

518 | import numpy as np

519 | torch.manual_seed(13)

520 | np.random.seed(13)

521 | torch.backends.cudnn.deterministic = True

522 | torch.backends.cudnn.benchmark = False

523 |

524 | model = Darknet()

525 | model.load_weights('./saved_models/yolov3.weights')

526 | # model.eval()

527 |

528 | image = torch.autograd.Variable(torch.randn(1, 3, 416, 416))

529 | output1, output2, output3 = model(image)

530 | print(output1)

531 | # print(output1.size(), output2.size(), output3.size())

532 | # print(model(image))

533 | # print(len(output), output[0].size(), output[1].size(), output[2].size())

534 |

--------------------------------------------------------------------------------

/model/grounding_model.py:

--------------------------------------------------------------------------------

1 | from collections import OrderedDict

2 |

3 | import torch

4 | import torch.nn as nn

5 | import torch.nn.functional as F

6 | import torch.utils.model_zoo as model_zoo

7 | from torch.utils.data import TensorDataset, DataLoader, SequentialSampler

8 | from torch.utils.data.distributed import DistributedSampler

9 |

10 | from .darknet import *

11 |

12 | import argparse

13 | import collections

14 | import logging

15 | import json

16 | import re

17 | import time

18 | ## can be commented if only use LSTM encoder

19 | from pytorch_pretrained_bert.tokenization import BertTokenizer

20 | from pytorch_pretrained_bert.modeling import BertModel

21 |

22 | def generate_coord(batch, height, width):

23 | # coord = Variable(torch.zeros(batch,8,height,width).cuda())

24 | xv, yv = torch.meshgrid([torch.arange(0,height), torch.arange(0,width)])

25 | xv_min = (xv.float()*2 - width)/width

26 | yv_min = (yv.float()*2 - height)/height

27 | xv_max = ((xv+1).float()*2 - width)/width

28 | yv_max = ((yv+1).float()*2 - height)/height

29 | xv_ctr = (xv_min+xv_max)/2

30 | yv_ctr = (yv_min+yv_max)/2

31 | hmap = torch.ones(height,width)*(1./height)

32 | wmap = torch.ones(height,width)*(1./width)

33 | coord = torch.autograd.Variable(torch.cat([xv_min.unsqueeze(0), yv_min.unsqueeze(0),\

34 | xv_max.unsqueeze(0), yv_max.unsqueeze(0),\

35 | xv_ctr.unsqueeze(0), yv_ctr.unsqueeze(0),\

36 | hmap.unsqueeze(0), wmap.unsqueeze(0)], dim=0).cuda())

37 | coord = coord.unsqueeze(0).repeat(batch,1,1,1)

38 | return coord

39 |

40 | class RNNEncoder(nn.Module):

41 | def __init__(self, vocab_size, word_embedding_size, word_vec_size, hidden_size, bidirectional=False,

42 | input_dropout_p=0, dropout_p=0, n_layers=1, rnn_type='lstm', variable_lengths=True):

43 | super(RNNEncoder, self).__init__()

44 | self.variable_lengths = variable_lengths

45 | self.embedding = nn.Embedding(vocab_size, word_embedding_size)

46 | self.input_dropout = nn.Dropout(input_dropout_p)

47 | self.mlp = nn.Sequential(nn.Linear(word_embedding_size, word_vec_size),

48 | nn.ReLU())

49 | self.rnn_type = rnn_type

50 | self.rnn = getattr(nn, rnn_type.upper())(word_vec_size, hidden_size, n_layers,

51 | batch_first=True,

52 | bidirectional=bidirectional,

53 | dropout=dropout_p)

54 | self.num_dirs = 2 if bidirectional else 1

55 |

56 | def forward(self, input_labels):

57 | """

58 | Inputs:

59 | - input_labels: Variable long (batch, seq_len)

60 | Outputs:

61 | - output : Variable float (batch, max_len, hidden_size * num_dirs)

62 | - hidden : Variable float (batch, num_layers * num_dirs * hidden_size)

63 | - embedded: Variable float (batch, max_len, word_vec_size)

64 | """

65 | if self.variable_lengths:

66 | input_lengths = (input_labels!=0).sum(1) # Variable (batch, )

67 |

68 | # make ixs

69 | input_lengths_list = input_lengths.data.cpu().numpy().tolist()

70 | sorted_input_lengths_list = np.sort(input_lengths_list)[::-1].tolist() # list of sorted input_lengths

71 | sort_ixs = np.argsort(input_lengths_list)[::-1].tolist() # list of int sort_ixs, descending

72 | s2r = {s: r for r, s in enumerate(sort_ixs)} # O(n)

73 | recover_ixs = [s2r[s] for s in range(len(input_lengths_list))] # list of int recover ixs

74 | assert max(input_lengths_list) == input_labels.size(1)

75 |

76 | # move to long tensor

77 | sort_ixs = input_labels.data.new(sort_ixs).long() # Variable long

78 | recover_ixs = input_labels.data.new(recover_ixs).long() # Variable long

79 |

80 | # sort input_labels by descending order

81 | input_labels = input_labels[sort_ixs]

82 |

83 | # embed

84 | embedded = self.embedding(input_labels) # (n, seq_len, word_embedding_size)

85 | embedded = self.input_dropout(embedded) # (n, seq_len, word_embedding_size)

86 | embedded = self.mlp(embedded) # (n, seq_len, word_vec_size)

87 | if self.variable_lengths:

88 | embedded = nn.utils.rnn.pack_padded_sequence(embedded, sorted_input_lengths_list, batch_first=True)

89 | # forward rnn

90 | output, hidden = self.rnn(embedded)

91 | # recover

92 | if self.variable_lengths:

93 | # recover rnn

94 | output, _ = nn.utils.rnn.pad_packed_sequence(output, batch_first=True) # (batch, max_len, hidden)

95 | output = output[recover_ixs]

96 | sent_output = []

97 | for ii in range(output.shape[0]):

98 | sent_output.append(output[ii,int(input_lengths_list[ii]-1),:])

99 | return torch.stack(sent_output, dim=0)

100 |

101 | class grounding_model(nn.Module):

102 | def __init__(self, corpus=None, emb_size=256, jemb_drop_out=0.1, bert_model='bert-base-uncased', \

103 | coordmap=True, leaky=False, dataset=None, light=False):

104 | super(grounding_model, self).__init__()

105 | self.coordmap = coordmap

106 | self.light = light

107 | self.lstm = (corpus is not None)

108 | self.emb_size = emb_size

109 | if bert_model=='bert-base-uncased':

110 | self.textdim=768

111 | else:

112 | self.textdim=1024

113 | ## Visual model

114 | self.visumodel = Darknet(config_path='./model/yolov3.cfg')

115 | self.visumodel.load_weights('./saved_models/yolov3.weights')

116 | ## Text model

117 | if self.lstm:

118 | self.textdim, self.embdim=1024, 512

119 | self.textmodel = RNNEncoder(vocab_size=len(corpus),

120 | word_embedding_size=self.embdim,

121 | word_vec_size=self.textdim//2,

122 | hidden_size=self.textdim//2,

123 | bidirectional=True,

124 | input_dropout_p=0.2,

125 | variable_lengths=True)

126 | else:

127 | self.textmodel = BertModel.from_pretrained(bert_model)

128 |

129 | ## Mapping module

130 | self.mapping_visu = nn.Sequential(OrderedDict([

131 | ('0', ConvBatchNormReLU(1024, emb_size, 1, 1, 0, 1, leaky=leaky)),

132 | ('1', ConvBatchNormReLU(512, emb_size, 1, 1, 0, 1, leaky=leaky)),

133 | ('2', ConvBatchNormReLU(256, emb_size, 1, 1, 0, 1, leaky=leaky))

134 | ]))

135 | self.mapping_lang = torch.nn.Sequential(

136 | nn.Linear(self.textdim, emb_size),

137 | nn.BatchNorm1d(emb_size),

138 | nn.ReLU(),

139 | nn.Dropout(jemb_drop_out),

140 | nn.Linear(emb_size, emb_size),

141 | nn.BatchNorm1d(emb_size),

142 | nn.ReLU(),

143 | )

144 | embin_size = emb_size*2

145 | if self.coordmap:

146 | embin_size+=8

147 | if self.light:

148 | self.fcn_emb = nn.Sequential(OrderedDict([

149 | ('0', torch.nn.Sequential(

150 | ConvBatchNormReLU(embin_size, emb_size, 1, 1, 0, 1, leaky=leaky),)),

151 | ('1', torch.nn.Sequential(

152 | ConvBatchNormReLU(embin_size, emb_size, 1, 1, 0, 1, leaky=leaky),)),

153 | ('2', torch.nn.Sequential(

154 | ConvBatchNormReLU(embin_size, emb_size, 1, 1, 0, 1, leaky=leaky),)),

155 | ]))

156 | self.fcn_out = nn.Sequential(OrderedDict([

157 | ('0', torch.nn.Sequential(

158 | nn.Conv2d(emb_size, 3*5, kernel_size=1),)),

159 | ('1', torch.nn.Sequential(

160 | nn.Conv2d(emb_size, 3*5, kernel_size=1),)),

161 | ('2', torch.nn.Sequential(

162 | nn.Conv2d(emb_size, 3*5, kernel_size=1),)),

163 | ]))

164 | else:

165 | self.fcn_emb = nn.Sequential(OrderedDict([

166 | ('0', torch.nn.Sequential(

167 | ConvBatchNormReLU(embin_size, emb_size, 1, 1, 0, 1, leaky=leaky),

168 | ConvBatchNormReLU(emb_size, emb_size, 3, 1, 1, 1, leaky=leaky),

169 | ConvBatchNormReLU(emb_size, emb_size, 1, 1, 0, 1, leaky=leaky),)),

170 | ('1', torch.nn.Sequential(

171 | ConvBatchNormReLU(embin_size, emb_size, 1, 1, 0, 1, leaky=leaky),

172 | ConvBatchNormReLU(emb_size, emb_size, 3, 1, 1, 1, leaky=leaky),

173 | ConvBatchNormReLU(emb_size, emb_size, 1, 1, 0, 1, leaky=leaky),)),

174 | ('2', torch.nn.Sequential(

175 | ConvBatchNormReLU(embin_size, emb_size, 1, 1, 0, 1, leaky=leaky),

176 | ConvBatchNormReLU(emb_size, emb_size, 3, 1, 1, 1, leaky=leaky),

177 | ConvBatchNormReLU(emb_size, emb_size, 1, 1, 0, 1, leaky=leaky),)),

178 | ]))

179 | self.fcn_out = nn.Sequential(OrderedDict([

180 | ('0', torch.nn.Sequential(

181 | ConvBatchNormReLU(emb_size, emb_size//2, 1, 1, 0, 1, leaky=leaky),

182 | nn.Conv2d(emb_size//2, 3*5, kernel_size=1),)),

183 | ('1', torch.nn.Sequential(

184 | ConvBatchNormReLU(emb_size, emb_size//2, 1, 1, 0, 1, leaky=leaky),

185 | nn.Conv2d(emb_size//2, 3*5, kernel_size=1),)),

186 | ('2', torch.nn.Sequential(

187 | ConvBatchNormReLU(emb_size, emb_size//2, 1, 1, 0, 1, leaky=leaky),

188 | nn.Conv2d(emb_size//2, 3*5, kernel_size=1),)),

189 | ]))

190 |

191 | def forward(self, image, word_id, word_mask):

192 | ## Visual Module

193 | ## [1024, 13, 13], [512, 26, 26], [256, 52, 52]

194 | batch_size = image.size(0)

195 | raw_fvisu = self.visumodel(image)

196 | fvisu = []

197 | for ii in range(len(raw_fvisu)):

198 | fvisu.append(self.mapping_visu._modules[str(ii)](raw_fvisu[ii]))

199 | fvisu[ii] = F.normalize(fvisu[ii], p=2, dim=1)

200 |

201 | ## Language Module

202 | if self.lstm:

203 | # max_len = (word_id != 0).sum(1).max().data[0]

204 | max_len = (word_id != 0).sum(1).max().item()

205 | word_id = word_id[:, :max_len]

206 | raw_flang = self.textmodel(word_id)

207 | else:

208 | all_encoder_layers, _ = self.textmodel(word_id, \

209 | token_type_ids=None, attention_mask=word_mask)

210 | ## Sentence feature at the first position [cls]

211 | raw_flang = (all_encoder_layers[-1][:,0,:] + all_encoder_layers[-2][:,0,:]\

212 | + all_encoder_layers[-3][:,0,:] + all_encoder_layers[-4][:,0,:])/4

213 | ## fix bert during training

214 | raw_flang = raw_flang.detach()

215 | flang = self.mapping_lang(raw_flang)

216 | flang = F.normalize(flang, p=2, dim=1)

217 |

218 | flangvisu = []

219 | for ii in range(len(fvisu)):

220 | flang_tile = flang.view(flang.size(0), flang.size(1), 1, 1).\

221 | repeat(1, 1, fvisu[ii].size(2), fvisu[ii].size(3))

222 | if self.coordmap:

223 | coord = generate_coord(batch_size, fvisu[ii].size(2), fvisu[ii].size(3))

224 | flangvisu.append(torch.cat([fvisu[ii], flang_tile, coord], dim=1))

225 | else:

226 | flangvisu.append(torch.cat([fvisu[ii], flang_tile], dim=1))

227 | ## fcn

228 | intmd_fea, outbox = [], []

229 | for ii in range(len(fvisu)):

230 | intmd_fea.append(self.fcn_emb._modules[str(ii)](flangvisu[ii]))

231 | outbox.append(self.fcn_out._modules[str(ii)](intmd_fea[ii]))

232 | return outbox

233 |

234 | if __name__ == "__main__":

235 | import sys

236 | import argparse

237 | sys.path.append('.')

238 | from dataset.referit_loader import *

239 | from torch.autograd import Variable

240 | from torch.utils.data import DataLoader

241 | from torchvision.transforms import Compose, ToTensor, Normalize

242 | from utils.transforms import ResizeImage, ResizeAnnotation

243 | parser = argparse.ArgumentParser(

244 | description='Dataloader test')

245 | parser.add_argument('--size', default=416, type=int,

246 | help='image size')

247 | parser.add_argument('--data', type=str, default='./ln_data/',

248 | help='path to ReferIt splits data folder')

249 | parser.add_argument('--dataset', default='referit', type=str,

250 | help='referit/flickr/unc/unc+/gref')

251 | parser.add_argument('--split', default='train', type=str,

252 | help='name of the dataset split used to train')

253 | parser.add_argument('--time', default=20, type=int,

254 | help='maximum time steps (lang length) per batch')

255 | parser.add_argument('--emb_size', default=256, type=int,

256 | help='word embedding dimensions')

257 | # parser.add_argument('--lang_layers', default=3, type=int,

258 | # help='number of SRU/LSTM stacked layers')

259 |

260 | args = parser.parse_args()

261 |

262 | torch.manual_seed(13)

263 | np.random.seed(13)

264 | torch.backends.cudnn.deterministic = True

265 | torch.backends.cudnn.benchmark = False

266 | input_transform = Compose([

267 | ToTensor(),

268 | # ResizeImage(args.size),

269 | Normalize(

270 | mean=[0.485, 0.456, 0.406],

271 | std=[0.229, 0.224, 0.225])

272 | ])

273 |

274 | refer = ReferDataset(data_root=args.data,

275 | dataset=args.dataset,

276 | split=args.split,

277 | imsize = args.size,

278 | transform=input_transform,

279 | max_query_len=args.time)

280 |

281 | train_loader = DataLoader(refer, batch_size=2, shuffle=True,

282 | pin_memory=True, num_workers=1)

283 |

284 | model = textcam_yolo_light(emb_size=args.emb_size)

285 |

286 | for batch_idx, (imgs, word_id, word_mask, bbox) in enumerate(train_loader):

287 | image = Variable(imgs)

288 | word_id = Variable(word_id)

289 | word_mask = Variable(word_mask)

290 | bbox = Variable(bbox)

291 | bbox = torch.clamp(bbox,min=0,max=args.size-1)

292 |

293 | pred_anchor_list = model(image, word_id, word_mask)

294 | for pred_anchor in pred_anchor_list:

295 | print(pred_anchor)

296 | print(pred_anchor.shape)

297 |

--------------------------------------------------------------------------------

/model/yolov3.cfg:

--------------------------------------------------------------------------------

1 | [net]

2 | # Testing

3 | #batch=1

4 | #subdivisions=1

5 | # Training

6 | batch=16

7 | subdivisions=1

8 | width=416

9 | height=416

10 | channels=3

11 | momentum=0.9

12 | decay=0.0005

13 | angle=0

14 | saturation = 1.5

15 | exposure = 1.5

16 | hue=.1

17 |

18 | learning_rate=0.001

19 | burn_in=1000

20 | max_batches = 500200

21 | policy=steps

22 | steps=400000,450000

23 | scales=.1,.1

24 |

25 | [convolutional]

26 | batch_normalize=1

27 | filters=32

28 | size=3

29 | stride=1

30 | pad=1

31 | activation=leaky

32 |

33 | # Downsample

34 |

35 | [convolutional]

36 | batch_normalize=1

37 | filters=64

38 | size=3

39 | stride=2

40 | pad=1

41 | activation=leaky

42 |

43 | [convolutional]

44 | batch_normalize=1

45 | filters=32

46 | size=1

47 | stride=1

48 | pad=1

49 | activation=leaky

50 |

51 | [convolutional]

52 | batch_normalize=1

53 | filters=64

54 | size=3

55 | stride=1

56 | pad=1

57 | activation=leaky

58 |

59 | [shortcut]

60 | from=-3

61 | activation=linear

62 |

63 | # Downsample

64 |

65 | [convolutional]

66 | batch_normalize=1

67 | filters=128

68 | size=3

69 | stride=2

70 | pad=1

71 | activation=leaky

72 |

73 | [convolutional]

74 | batch_normalize=1

75 | filters=64

76 | size=1

77 | stride=1

78 | pad=1

79 | activation=leaky

80 |

81 | [convolutional]

82 | batch_normalize=1

83 | filters=128

84 | size=3

85 | stride=1

86 | pad=1

87 | activation=leaky

88 |

89 | [shortcut]

90 | from=-3

91 | activation=linear

92 |

93 | [convolutional]

94 | batch_normalize=1

95 | filters=64

96 | size=1

97 | stride=1

98 | pad=1

99 | activation=leaky

100 |

101 | [convolutional]

102 | batch_normalize=1

103 | filters=128

104 | size=3

105 | stride=1

106 | pad=1

107 | activation=leaky

108 |

109 | [shortcut]

110 | from=-3

111 | activation=linear

112 |

113 | # Downsample

114 |

115 | [convolutional]

116 | batch_normalize=1

117 | filters=256

118 | size=3

119 | stride=2

120 | pad=1

121 | activation=leaky

122 |

123 | [convolutional]

124 | batch_normalize=1

125 | filters=128

126 | size=1

127 | stride=1

128 | pad=1

129 | activation=leaky

130 |

131 | [convolutional]

132 | batch_normalize=1

133 | filters=256

134 | size=3

135 | stride=1

136 | pad=1

137 | activation=leaky

138 |

139 | [shortcut]

140 | from=-3

141 | activation=linear

142 |

143 | [convolutional]

144 | batch_normalize=1

145 | filters=128

146 | size=1

147 | stride=1

148 | pad=1

149 | activation=leaky

150 |

151 | [convolutional]

152 | batch_normalize=1

153 | filters=256

154 | size=3

155 | stride=1

156 | pad=1

157 | activation=leaky

158 |

159 | [shortcut]

160 | from=-3

161 | activation=linear

162 |

163 | [convolutional]

164 | batch_normalize=1

165 | filters=128

166 | size=1

167 | stride=1

168 | pad=1

169 | activation=leaky

170 |

171 | [convolutional]

172 | batch_normalize=1

173 | filters=256

174 | size=3

175 | stride=1

176 | pad=1

177 | activation=leaky

178 |

179 | [shortcut]

180 | from=-3

181 | activation=linear

182 |

183 | [convolutional]

184 | batch_normalize=1

185 | filters=128

186 | size=1

187 | stride=1

188 | pad=1

189 | activation=leaky

190 |

191 | [convolutional]

192 | batch_normalize=1

193 | filters=256

194 | size=3

195 | stride=1

196 | pad=1

197 | activation=leaky

198 |

199 | [shortcut]

200 | from=-3

201 | activation=linear

202 |

203 |

204 | [convolutional]

205 | batch_normalize=1

206 | filters=128

207 | size=1

208 | stride=1

209 | pad=1

210 | activation=leaky

211 |

212 | [convolutional]

213 | batch_normalize=1

214 | filters=256

215 | size=3

216 | stride=1

217 | pad=1

218 | activation=leaky

219 |

220 | [shortcut]

221 | from=-3

222 | activation=linear

223 |

224 | [convolutional]

225 | batch_normalize=1

226 | filters=128

227 | size=1

228 | stride=1

229 | pad=1

230 | activation=leaky

231 |

232 | [convolutional]

233 | batch_normalize=1

234 | filters=256

235 | size=3

236 | stride=1

237 | pad=1

238 | activation=leaky

239 |

240 | [shortcut]

241 | from=-3

242 | activation=linear

243 |

244 | [convolutional]

245 | batch_normalize=1

246 | filters=128

247 | size=1

248 | stride=1

249 | pad=1

250 | activation=leaky

251 |

252 | [convolutional]

253 | batch_normalize=1

254 | filters=256

255 | size=3

256 | stride=1

257 | pad=1

258 | activation=leaky

259 |

260 | [shortcut]

261 | from=-3

262 | activation=linear

263 |

264 | [convolutional]

265 | batch_normalize=1

266 | filters=128

267 | size=1

268 | stride=1

269 | pad=1

270 | activation=leaky

271 |

272 | [convolutional]

273 | batch_normalize=1

274 | filters=256

275 | size=3

276 | stride=1

277 | pad=1

278 | activation=leaky

279 |

280 | [shortcut]

281 | from=-3

282 | activation=linear

283 |

284 | # Downsample

285 |

286 | [convolutional]

287 | batch_normalize=1

288 | filters=512

289 | size=3

290 | stride=2

291 | pad=1

292 | activation=leaky

293 |

294 | [convolutional]

295 | batch_normalize=1

296 | filters=256

297 | size=1

298 | stride=1

299 | pad=1

300 | activation=leaky

301 |

302 | [convolutional]

303 | batch_normalize=1

304 | filters=512

305 | size=3

306 | stride=1

307 | pad=1

308 | activation=leaky

309 |

310 | [shortcut]

311 | from=-3

312 | activation=linear

313 |

314 |

315 | [convolutional]

316 | batch_normalize=1

317 | filters=256

318 | size=1

319 | stride=1

320 | pad=1

321 | activation=leaky

322 |

323 | [convolutional]

324 | batch_normalize=1

325 | filters=512

326 | size=3

327 | stride=1

328 | pad=1

329 | activation=leaky

330 |

331 | [shortcut]

332 | from=-3

333 | activation=linear

334 |

335 |

336 | [convolutional]

337 | batch_normalize=1

338 | filters=256

339 | size=1

340 | stride=1

341 | pad=1

342 | activation=leaky

343 |

344 | [convolutional]

345 | batch_normalize=1

346 | filters=512

347 | size=3

348 | stride=1

349 | pad=1

350 | activation=leaky

351 |

352 | [shortcut]

353 | from=-3

354 | activation=linear

355 |

356 |

357 | [convolutional]

358 | batch_normalize=1

359 | filters=256

360 | size=1

361 | stride=1

362 | pad=1

363 | activation=leaky

364 |

365 | [convolutional]

366 | batch_normalize=1

367 | filters=512

368 | size=3

369 | stride=1

370 | pad=1

371 | activation=leaky

372 |

373 | [shortcut]

374 | from=-3

375 | activation=linear

376 |

377 | [convolutional]

378 | batch_normalize=1

379 | filters=256

380 | size=1

381 | stride=1

382 | pad=1

383 | activation=leaky

384 |

385 | [convolutional]

386 | batch_normalize=1

387 | filters=512

388 | size=3

389 | stride=1

390 | pad=1

391 | activation=leaky

392 |

393 | [shortcut]

394 | from=-3

395 | activation=linear

396 |

397 |

398 | [convolutional]

399 | batch_normalize=1

400 | filters=256

401 | size=1

402 | stride=1

403 | pad=1

404 | activation=leaky

405 |

406 | [convolutional]

407 | batch_normalize=1

408 | filters=512

409 | size=3

410 | stride=1

411 | pad=1

412 | activation=leaky

413 |

414 | [shortcut]

415 | from=-3

416 | activation=linear

417 |

418 |

419 | [convolutional]

420 | batch_normalize=1

421 | filters=256

422 | size=1

423 | stride=1

424 | pad=1

425 | activation=leaky

426 |

427 | [convolutional]

428 | batch_normalize=1

429 | filters=512

430 | size=3

431 | stride=1

432 | pad=1

433 | activation=leaky

434 |

435 | [shortcut]

436 | from=-3

437 | activation=linear

438 |

439 | [convolutional]

440 | batch_normalize=1

441 | filters=256

442 | size=1

443 | stride=1

444 | pad=1

445 | activation=leaky

446 |

447 | [convolutional]

448 | batch_normalize=1

449 | filters=512

450 | size=3

451 | stride=1

452 | pad=1

453 | activation=leaky

454 |

455 | [shortcut]

456 | from=-3

457 | activation=linear

458 |

459 | # Downsample

460 |

461 | [convolutional]

462 | batch_normalize=1

463 | filters=1024

464 | size=3